Summary: This article introduces commonly used TensorFlow functions.

1. Common TensorFlow functions

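TensorFlow translates the graph definition into distributed, executable operations so that it can make full use of the available computing resources (such as CPUs or GPUs). You generally do not need to specify whether to use the CPU or the GPU; TensorFlow detects this automatically. If a GPU is detected, TensorFlow runs as many operations as possible on the first GPU it finds.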

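Parallel computation speeds up expensive algorithms, and TensorFlow's implementation also includes efficient optimizations for complex operations. Most kernel operations have device-specific implementations, for example for GPUs. Some important operations/kernels are listed below: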

TensorFlow's arithmetic operations include the following:

```python
# 'a' is [0.9, 2.5, 2.3, -4.4]
tf.round(a) ==> [1.0, 2.0, 2.0, -4.0]  # ties round half to even, so 2.5 ==> 2.0

# tensor 'x' is [[2, 2], [3, 3]]
# tensor 'y' is [[8, 16], [2, 3]]
tf.pow(x, y) ==> [[256, 65536], [9, 27]]
```
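How tf.round treats an exact tie such as 2.5 depends on its tie-breaking rule. Current TensorFlow documentation describes tf.round as rounding half to even (banker's rounding), which is also what Python's built-in round() does for floats, whereas a half-away-from-zero rule would give 3.0. The pure-Python sketch below contrasts the two rules; the helper names are hypothetical and this is an illustration, not TensorFlow's implementation.

```python
import math
from typing import List


def round_half_to_even(x: float) -> float:
    # Python's built-in round() on floats breaks ties toward the even neighbour
    return float(round(x))


def round_half_away_from_zero(x: float) -> float:
    # Ties move away from zero: 2.5 -> 3.0 and -2.5 -> -3.0
    return math.copysign(math.floor(abs(x) + 0.5), x)


a: List[float] = [0.9, 2.5, 2.3, -4.4]
print([round_half_to_even(v) for v in a])         # [1.0, 2.0, 2.0, -4.0]
print([round_half_away_from_zero(v) for v in a])  # [1.0, 3.0, 2.0, -4.0]
```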

Tensor Transformations

- Casting (data type conversion)

(string_tensor, out_type=None, name=None)

```python
# tensor 'a' is [1.8, 2.2], dtype=tf.float32
tf.cast(a, tf.int32) ==> [1, 2]  # dtype=tf.int32
```

- Shapes and Shaping

```python
# 't' is [[[1, 1, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]]]
tf.shape(t) ==> [2, 2, 3]

# 't' is [[[1, 1, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]]]
tf.size(t) ==> 12
```

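Note: this rank is not the same as the rank of a matrix. The rank of a tensor is the number of indices needed to uniquely select any of its elements, also known as its "order", "degree", or "ndims".

```python
# 't' is [[[1, 1, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]]]
# shape of tensor 't' is [2, 2, 3]
tf.rank(t) ==> 3
```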

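```python
# tensor 't' is [1, 2, 3, 4, 5, 6, 7, 8, 9]
# tensor 't' has shape [9]
tf.reshape(t, [3, 3]) ==> [[1, 2, 3],
                           [4, 5, 6],
                           [7, 8, 9]]

# A -1 in shape means the size of that dimension is inferred from the
# total number of elements; in particular, shape [-1] flattens to 1-D.
# tensor 't' is [[[1, 1, 1], [2, 2, 2]],
#                [[3, 3, 3], [4, 4, 4]],
#                [[5, 5, 5], [6, 6, 6]]]
# tensor 't' has shape [3, 2, 3]
# -1 is inferred to be 9:
tf.reshape(t, [2, -1]) ==> [[1, 1, 1, 2, 2, 2, 3, 3, 3],
                            [4, 4, 4, 5, 5, 5, 6, 6, 6]]
```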
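The -1 rule is plain arithmetic on the element count: the product of the explicit dimensions must divide the total, and the quotient fills the -1 slot. A minimal pure-Python sketch, assuming a hypothetical helper infer_shape that only resolves the target shape (it does not move any data):

```python
from math import prod
from typing import List


def infer_shape(num_elements: int, shape: List[int]) -> List[int]:
    """Resolve at most one -1 entry in `shape` so the element count is preserved."""
    if shape.count(-1) > 1:
        raise ValueError("only one dimension may be -1")
    known = prod(d for d in shape if d != -1)  # product of the explicit dimensions
    if -1 not in shape:
        if known != num_elements:
            raise ValueError(f"cannot reshape {num_elements} elements into {shape}")
        return list(shape)
    if known == 0 or num_elements % known != 0:
        raise ValueError(f"cannot infer -1 for {num_elements} elements and shape {shape}")
    return [num_elements // known if d == -1 else d for d in shape]


print(infer_shape(18, [2, -1]))  # [2, 9]
print(infer_shape(9, [3, 3]))    # [3, 3]
print(infer_shape(18, [-1]))     # [18]
```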

```python
# 't' is a tensor of shape [2]
tf.shape(tf.expand_dims(t, 0)) ==> [1, 2]
tf.shape(tf.expand_dims(t, 1)) ==> [2, 1]
tf.shape(tf.expand_dims(t, -1)) ==> [2, 1]
# The operation requires -1 - input.dims() <= dim <= input.dims()
```

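- Slicing and Joining

For tf.slice, if size[i] is -1, all remaining elements in dimension i are included in the slice, that is, size[i] = input.dim_size(i) - begin[i].

The operation requires 0 <= begin[i] <= begin[i] + size[i] <= Di for i in [0, n).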

```python
# 'input' is
# [[[1, 1, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]], [[5, 5, 5], [6, 6, 6]]]
tf.slice(input, [1, 0, 0], [1, 1, 3]) ==> [[[3, 3, 3]]]
tf.slice(input, [1, 0, 0], [1, 2, 3]) ==> [[[3, 3, 3],
                                            [4, 4, 4]]]
tf.slice(input, [1, 0, 0], [2, 1, 3]) ==> [[[3, 3, 3]],
                                            [[5, 5, 5]]]
```
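The begin/size semantics can be mirrored on nested Python lists. This is a pure-Python sketch with a hypothetical helper slice_nested (not TensorFlow's kernel): each dimension keeps the range [begin[i], begin[i] + size[i]), with size[i] == -1 meaning "to the end", and the same bounds check as above is enforced.

```python
from typing import Any, List


def slice_nested(data: Any, begin: List[int], size: List[int]) -> Any:
    """Pure-Python analogue of tf.slice on nested lists; size[i] == -1 means 'to the end'."""
    if not begin:
        return data  # every dimension consumed: return the element as-is
    start = begin[0]
    stop = len(data) if size[0] == -1 else start + size[0]
    if not 0 <= start <= stop <= len(data):
        raise ValueError("require 0 <= begin[i] <= begin[i] + size[i] <= Di")
    return [slice_nested(row, begin[1:], size[1:]) for row in data[start:stop]]


inp = [[[1, 1, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]], [[5, 5, 5], [6, 6, 6]]]
print(slice_nested(inp, [1, 0, 0], [1, 1, 3]))    # [[[3, 3, 3]]]
print(slice_nested(inp, [1, 0, 0], [2, 1, 3]))    # [[[3, 3, 3]], [[5, 5, 5]]]
print(slice_nested(inp, [1, 0, 0], [-1, 1, -1]))  # [[[3, 3, 3]], [[5, 5, 5]]]
```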

```python
# 'value' is a tensor with shape [5, 30]
# Split 'value' into 3 tensors along dimension 1
split0, split1, split2 = tf.split(1, 3, value)
tf.shape(split0) ==> [5, 10]
# TensorFlow 1.0+ argument order: tf.split(value, 3, axis=1)
```

```python
t1 = [[1, 2, 3], [4, 5, 6]]
t2 = [[7, 8, 9], [10, 11, 12]]
tf.concat(0, [t1, t2]) ==> [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
tf.concat(1, [t1, t2]) ==> [[1, 2, 3, 7, 8, 9], [4, 5, 6, 10, 11, 12]]
# TensorFlow 1.0+ argument order: tf.concat([t1, t2], 0)
```

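To concatenate (pack) tensors along a new axis, you can write:

```python
tf.concat(axis, [tf.expand_dims(t, axis) for t in tensors])
```

This is equivalent to tf.pack(tensors, axis=axis) (renamed tf.stack in TensorFlow 1.0).

```python
# 'x' is [1, 4], 'y' is [2, 5], 'z' is [3, 6]
tf.pack([x, y, z]) ==> [[1, 4], [2, 5], [3, 6]]  # pack along the first dimension (axis 0)
tf.pack([x, y, z], axis=1) ==> [[1, 2, 3], [4, 5, 6]]  # pack along axis 1
```

tf.pack([x, y, z]) is equivalent to np.asarray([x, y, z]).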
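To make the pack/concat equivalence concrete, here is a pure-Python sketch for 1-D inputs only (nested lists stand in for tensors; all helper names are hypothetical): stacking along axis 0 nests the inputs, stacking along axis 1 interleaves them, and the expand-then-concatenate route produces the same result as the direct axis-1 stack.

```python
from typing import List

Vector = List[int]
Matrix = List[List[int]]


def stack_axis0(tensors: List[Vector]) -> Matrix:
    # New leading axis: the inputs simply become the rows
    return [list(t) for t in tensors]


def stack_axis1(tensors: List[Vector]) -> Matrix:
    # New trailing axis: row i gathers element i from every input
    return [list(col) for col in zip(*tensors)]


def concat_of_expanded_axis1(tensors: List[Vector]) -> Matrix:
    # Expand each [n] input to shape [n, 1], then concatenate along axis 1
    expanded = [[[v] for v in t] for t in tensors]
    return [sum((e[i] for e in expanded), []) for i in range(len(tensors[0]))]


x, y, z = [1, 4], [2, 5], [3, 6]
print(stack_axis0([x, y, z]))               # [[1, 4], [2, 5], [3, 6]]
print(stack_axis1([x, y, z]))               # [[1, 2, 3], [4, 5, 6]]
print(concat_of_expanded_axis1([x, y, z]))  # [[1, 2, 3], [4, 5, 6]]
```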

Here dims is a list of bools whose length equals rank(tensor); every dimension marked True is reversed.

```python
# tensor 't' is
# [[[[ 0,  1,  2,  3],
#    [ 4,  5,  6,  7],
#    [ 8,  9, 10, 11]],
#   [[12, 13, 14, 15],
#    [16, 17, 18, 19],
#    [20, 21, 22, 23]]]]
# tensor 't' shape is [1, 2, 3, 4]
# 'dims' is [False, False, False, True]
tf.reverse(t, dims) ==> [[[[ 3,  2,  1,  0],
                           [ 7,  6,  5,  4],
                           [11, 10,  9,  8]],
                          [[15, 14, 13, 12],
                           [19, 18, 17, 16],
                           [23, 22, 21, 20]]]]
# TensorFlow 1.0+ takes a list of axes instead: tf.reverse(t, [3])
```
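A pure-Python sketch of the boolean-mask form of reverse (hypothetical helper reverse_dims; nested lists stand in for tensors): recurse into every dimension and flip the ones whose flag is True.

```python
from typing import Any, List


def reverse_dims(data: Any, dims: List[bool]) -> Any:
    """Reverse a nested list along every dimension i where dims[i] is True."""
    if not dims:
        return data
    rows = [reverse_dims(row, dims[1:]) for row in data]  # handle inner dimensions first
    return rows[::-1] if dims[0] else rows


t = [[[[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11]],
      [[12, 13, 14, 15], [16, 17, 18, 19], [20, 21, 22, 23]]]]
print(reverse_dims(t, [False, False, False, True]))
```

Flagging dimension 1 instead, as in [False, True, False, False], swaps the two 3 x 4 blocks and leaves each row in order.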

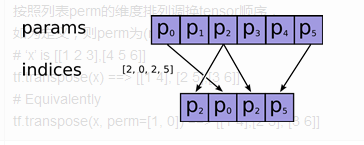

Permutes the dimensions of the tensor according to the list perm. If perm is not specified, it defaults to (n-1, ..., 0).

```python
# 'x' is [[1 2 3], [4 5 6]]
tf.transpose(x) ==> [[1 4], [2 5], [3 6]]

# Equivalently
tf.transpose(x, perm=[1, 0]) ==> [[1 4], [2 5], [3 6]]
```

tf.one_hot(indices, depth, on_value=None, off_value=None, axis=None, dtype=None, name=None)

```python
indices = [0, 2, -1, 1]
depth = 3
on_value = 5.0
off_value = 0.0
axis = -1
# Then output is [4 x 3]:
output = [[5.0, 0.0, 0.0],  # one_hot(0)
          [0.0, 0.0, 5.0],  # one_hot(2)
          [0.0, 0.0, 0.0],  # one_hot(-1)
          [0.0, 5.0, 0.0]]  # one_hot(1)
```
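The rows above follow a simple rule: position j of row i gets on_value when j equals indices[i], and off_value otherwise, so an index outside [0, depth) such as -1 yields an all-off row. A pure-Python sketch of the axis=-1 layout only (hypothetical helper one_hot, not TensorFlow's implementation):

```python
from typing import List


def one_hot(indices: List[int], depth: int, on_value: float = 1.0,
            off_value: float = 0.0) -> List[List[float]]:
    """One row per index (axis=-1 layout); out-of-range indices give an all-off row."""
    return [[on_value if j == i else off_value for j in range(depth)] for i in indices]


print(one_hot([0, 2, -1, 1], depth=3, on_value=5.0, off_value=0.0))
# [[5.0, 0.0, 0.0], [0.0, 0.0, 5.0], [0.0, 0.0, 0.0], [0.0, 5.0, 0.0]]
```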

Matrix operations

```python
# 'diagonal' is [1, 2, 3, 4]
tf.diag(diagonal) ==> [[1, 0, 0, 0],
                       [0, 2, 0, 0],
                       [0, 0, 3, 0],
                       [0, 0, 0, 4]]
```


transpose_b=False, a_is_sparse=False,

b_is_sparse=False, name=None)

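That is, it factors a symmetric positive-definite matrix A into the product of a lower-triangular matrix L and its transpose: A = LL^T.

matrix is a square matrix of shape [M, M], rhs has shape [M, K], and the output has shape [M, K].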
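The factorization A = LL^T can be computed with the Cholesky-Banachiewicz recurrence, filling L one row at a time. A minimal pure-Python sketch (hypothetical helper cholesky; no pivoting, only the lower triangle of the input is read, and a symmetric positive-definite input is assumed), not TensorFlow's kernel:

```python
import math
from typing import List

Matrix = List[List[float]]


def cholesky(a: Matrix) -> Matrix:
    """Return lower-triangular L with a == L @ L^T (Cholesky-Banachiewicz order)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                d = a[i][i] - s
                if d <= 0:
                    raise ValueError("matrix is not positive definite")
                low[i][j] = math.sqrt(d)  # diagonal entry
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]  # below-diagonal entry
    return low


A = [[4.0, 12.0, -16.0], [12.0, 37.0, -43.0], [-16.0, -43.0, 98.0]]
print(cholesky(A))  # [[2.0, 0.0, 0.0], [6.0, 1.0, 0.0], [-8.0, 5.0, 3.0]]
```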

Complex number operations

```python
# tensor 'real' is [2.25, 3.25]
# tensor 'imag' is [4.75, 5.75]
tf.complex(real, imag) ==> [2.25 + 4.75j, 3.25 + 5.75j]

# tensor 'x' is [-2.25 + 4.75j, -3.25 + 5.75j]
tf.complex_abs(x) ==> [5.25594902, 6.60492229]
# tf.complex_abs was removed in TensorFlow 1.0; use tf.abs(x) instead
```

tf.real(input, name=None)

Reduction

keep_dims=False, name=None)

```python
# 'x' is [[1, 1, 1],
#         [1, 1, 1]]
tf.reduce_sum(x) ==> 6
tf.reduce_sum(x, 0) ==> [2, 2, 2]
tf.reduce_sum(x, 1) ==> [3, 3]
tf.reduce_sum(x, 1, keep_dims=True) ==> [[3], [3]]
tf.reduce_sum(x, [0, 1]) ==> 6
```
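To see how the axis and keep_dims arguments interact, here is a pure-Python sketch for the 2-D case only (hypothetical helper reduce_sum_2d; negative axes are not handled). Reducing over an axis removes it, and keep_dims=True keeps it with length 1:

```python
from typing import Iterable, List, Optional, Union

Matrix = List[List[int]]


def reduce_sum_2d(x: Matrix, axis: Optional[Union[int, Iterable[int]]] = None,
                  keep_dims: bool = False):
    """Sum a 2-D nested list over `axis` (None means every axis)."""
    if axis is None:
        axes = {0, 1}
    elif isinstance(axis, int):
        axes = {axis}
    else:
        axes = set(axis)
    if not axes or not axes <= {0, 1}:
        raise ValueError("axis must be 0, 1, or both in this sketch")
    if axes == {0, 1}:
        total = sum(sum(row) for row in x)
        return [[total]] if keep_dims else total
    if axes == {0}:
        col_sums = [sum(col) for col in zip(*x)]  # collapse the rows
        return [col_sums] if keep_dims else col_sums
    row_sums = [sum(row) for row in x]  # axes == {1}: collapse the columns
    return [[s] for s in row_sums] if keep_dims else row_sums


x = [[1, 1, 1], [1, 1, 1]]
print(reduce_sum_2d(x))                     # 6
print(reduce_sum_2d(x, 0))                  # [2, 2, 2]
print(reduce_sum_2d(x, 1))                  # [3, 3]
print(reduce_sum_2d(x, 1, keep_dims=True))  # [[3], [3]]
print(reduce_sum_2d(x, [0, 1]))             # 6
```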

reduction_indices=None,

keep_dims=False, name=None)

reduction_indices=None,

keep_dims=False, name=None)

reduction_indices=None,

keep_dims=False, name=None)

reduction_indices=None,

keep_dims=False, name=None)

reduction_indices=None,

keep_dims=False, name=None)

```python
# 'x' is
# [[True,  True],
#  [False, False]]
tf.reduce_all(x) ==> False
tf.reduce_all(x, 0) ==> [False, False]
tf.reduce_all(x, 1) ==> [True, False]
```

reduction_indices=None,

keep_dims=False, name=None)

tensor_dtype=None, name=None)

```python
# tensor 'a' is [[1, 2], [3, 4]]
# tensor 'b' is [[5, 0], [0, 6]]
tf.accumulate_n([a, b, a]) ==> [[7, 4], [6, 14]]
```

reverse=False, name=None)

```python
tf.cumsum([a, b, c]) ==> [a, a + b, a + b + c]
tf.cumsum([a, b, c], exclusive=True) ==> [0, a, a + b]
tf.cumsum([a, b, c], reverse=True) ==> [a + b + c, b + c, c]
tf.cumsum([a, b, c], exclusive=True, reverse=True) ==> [b + c, c, 0]
```
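The exclusive and reverse flags compose: reverse accumulates from the end, and exclusive shifts the running sum by one position so each output excludes its own element. A 1-D pure-Python sketch (hypothetical helper cumsum; concrete numbers stand in for a, b, c):

```python
from itertools import accumulate
from typing import List


def cumsum(xs: List[float], exclusive: bool = False, reverse: bool = False) -> List[float]:
    """1-D illustration of tf.cumsum's exclusive and reverse flags."""
    seq = xs[::-1] if reverse else list(xs)  # reverse: accumulate from the end
    if exclusive and seq:
        seq = [0] + seq[:-1]  # exclusive: shift right by one and start from 0
    out = list(accumulate(seq))
    return out[::-1] if reverse else out


a, b, c = 1, 10, 100
print(cumsum([a, b, c]))                                # [1, 11, 111]
print(cumsum([a, b, c], exclusive=True))                # [0, 1, 11]
print(cumsum([a, b, c], reverse=True))                  # [111, 110, 100]
print(cumsum([a, b, c], exclusive=True, reverse=True))  # [110, 100, 0]
```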

Segmentation

Here segment_ids is a tensor whose size equals the size of data's first dimension. The ids are integers, and the largest id does not exceed that size.

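```python
c = tf.constant([[1, 2, 3, 4], [-1, -2, -3, -4], [5, 6, 7, 8]])
tf.segment_sum(c, tf.constant([0, 0, 1]))
==> [[0 0 0 0]
     [5 6 7 8]]
```

The example above uses two ids, 0 and 1. Rows of data that share an id are summed, and the sum is placed at that id's position in the result. segment_ids must be sorted in non-decreasing order.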

num_segments, name=None)

The difference is that the ids in segment_ids do not need to be sorted.
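The sorted and unsorted segment sums differ only in whether segment_ids must be non-decreasing. A pure-Python sketch over lists of rows (hypothetical helper names, not TensorFlow's kernels); the sorted variant sizes its output as max(id) + 1 rows, while the unsorted variant takes num_segments explicitly:

```python
from typing import List

Row = List[int]


def unsorted_segment_sum(data: List[Row], segment_ids: List[int], num_segments: int) -> List[Row]:
    """Sum the rows of `data` that share a segment id; ids may appear in any order."""
    width = len(data[0])
    out = [[0] * width for _ in range(num_segments)]
    for row, sid in zip(data, segment_ids):
        out[sid] = [acc + v for acc, v in zip(out[sid], row)]
    return out


def segment_sum(data: List[Row], segment_ids: List[int]) -> List[Row]:
    """Sorted variant: ids must be non-decreasing; the output has max(id) + 1 rows."""
    if any(b < a for a, b in zip(segment_ids, segment_ids[1:])):
        raise ValueError("segment_ids must be sorted (non-decreasing)")
    return unsorted_segment_sum(data, segment_ids, segment_ids[-1] + 1)


c = [[1, 2, 3, 4], [-1, -2, -3, -4], [5, 6, 7, 8]]
print(segment_sum(c, [0, 0, 1]))              # [[0, 0, 0, 0], [5, 6, 7, 8]]
print(unsorted_segment_sum(c, [1, 0, 1], 2))  # [[-1, -2, -3, -4], [6, 8, 10, 12]]
```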

segment_ids, name=None)

```python
c = tf.constant([[1, 2, 3, 4], [-1, -2, -3, -4], [5, 6, 7, 8]])
# Select two rows, one segment.
tf.sparse_segment_sum(c, tf.constant([0, 1]), tf.constant([0, 0]))
==> [[0 0 0 0]]
```

This selects the rows of data at indices [0, 1] and sums them according to the groups given by segment_ids.

Sequence Comparison and Indexing

```python
# 'input' tensor is
# [[True, False]
#  [True, False]]
# 'input' has two True values, so the output has two coordinates.
# 'input' has rank 2, so each coordinate has two indices.
tf.where(input) ==> [[0, 0],
                     [1, 0]]
```

idx holds, for each element of x, the index of that element in y.

```python
# tensor 'x' is [1, 1, 2, 4, 4, 4, 7, 8, 8]
y, idx = tf.unique(x)
y ==> [1, 2, 4, 7, 8]
idx ==> [0, 0, 1, 2, 2, 2, 3, 4, 4]
```

```python
# tensor 'x' is [3, 4, 0, 2, 1]
tf.invert_permutation(x) ==> [2, 4, 3, 0, 1]
```
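The three indexing ops above have short pure-Python equivalents on lists, shown here as a sketch (hypothetical helper names, not TensorFlow's implementations): unique keeps first-occurrence order and records where each input element landed, invert_permutation applies y[x[i]] = i, and where lists the coordinates of the True entries in row-major order.

```python
from typing import Dict, List, Tuple


def unique(x: List[int]) -> Tuple[List[int], List[int]]:
    """Return (y, idx) with y in first-occurrence order and y[idx[i]] == x[i]."""
    position: Dict[int, int] = {}
    y: List[int] = []
    idx: List[int] = []
    for v in x:
        if v not in position:
            position[v] = len(y)
            y.append(v)
        idx.append(position[v])
    return y, idx


def invert_permutation(x: List[int]) -> List[int]:
    """Return y with y[x[i]] = i for every i."""
    y = [0] * len(x)
    for i, v in enumerate(x):
        y[v] = i
    return y


def where(mask: List[List[bool]]) -> List[List[int]]:
    """Coordinates of the True entries of a 2-D mask, in row-major order."""
    return [[i, j] for i, row in enumerate(mask) for j, v in enumerate(row) if v]


print(unique([1, 1, 2, 4, 4, 4, 7, 8, 8]))    # ([1, 2, 4, 7, 8], [0, 0, 1, 2, 2, 2, 3, 4, 4])
print(invert_permutation([3, 4, 0, 2, 1]))    # [2, 4, 3, 0, 1]
print(where([[True, False], [True, False]]))  # [[0, 0], [1, 0]]
```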

Neural Network

- Activation Functions

Exponential Linear Units (ELUs)

noise_shape=None, seed=None, name=None)

noise_shape is the shape of the noise, that is, of the randomly generated keep/drop flags.

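This function is a special case of tf.add in which bias must be 1-D. bias is added to value through broadcasting; its size must match the last dimension of value and, unless value is a quantized type, it must have the same type as value. The result has the same type as value.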

- Convolution

use_cudnn_on_gpu=None, data_format=None, name=None)

For 2-D convolution, the input shape is [batch, height, width, in_channels].

For 3-D convolution, the input shape is [batch, in_depth, in_height, in_width, in_channels].
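The input layouts above do not determine the spatial size of the output; that follows from the filter size, the stride, and the padding mode ('SAME' or 'VALID') that TensorFlow's convolution and pooling ops accept. A minimal sketch of the per-dimension rule in plain Python (hypothetical helper conv_output_size): 'SAME' pads so the output length is ceil(in / stride), while 'VALID' only counts windows that fit entirely inside the input.

```python
import math


def conv_output_size(in_size: int, filter_size: int, stride: int, padding: str) -> int:
    """Output length along one spatial dimension for a TF-style conv or pooling window."""
    if padding == "SAME":
        return math.ceil(in_size / stride)  # input is zero-padded as needed
    if padding == "VALID":
        return math.ceil((in_size - filter_size + 1) / stride)  # no padding
    raise ValueError("padding must be 'SAME' or 'VALID'")


# e.g. a height of 28 with a 5x5 filter and stride 2
print(conv_output_size(28, 5, 2, "SAME"))   # 14
print(conv_output_size(28, 5, 2, "VALID"))  # 12
```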

- Pooling

data_format='NHWC', name=None)

data_format='NHWC', name=None)

padding, Targmax=None, name=None)

padding, name=None)

padding, name=None)

- Normalization

output = x / sqrt(max(sum(x**2), epsilon))

keep_dims=False, name=None)

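Returns a 4-tuple: the number of elements, the sum of the elements, the sum of the squared elements, and the shift.

See the description of the algorithm.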

name=None, keep_dims=False)

- Losses

- Classification

(logits, targets, name=None)

softmax[i, j] = exp(logits[i, j]) / sum_j(exp(logits[i, j]))

(logits, labels, name=None)

logits and labels must have the same shape and data type.

(logits, labels, name=None)

(logits, targets, pos_weight, name=None)

Like sigmoid_cross_entropy_with_logits, but the loss on positive examples is weighted by pos_weight.
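The weighted loss can be written out in scalar form. The sketch below (plain Python, hypothetical helper names) implements the definition target * -log(sigmoid(x)) * pos_weight + (1 - target) * -log(1 - sigmoid(x)) in a numerically stable rearrangement and checks it against a direct transcription; it illustrates the math and is not TensorFlow's kernel. With pos_weight = 1 it reduces to the ordinary sigmoid cross-entropy.

```python
import math


def weighted_sigmoid_xent(logit: float, target: float, pos_weight: float) -> float:
    """Stable form of target * -log(sigmoid(x)) * pos_weight + (1 - target) * -log(1 - sigmoid(x))."""
    x = logit
    log_weight = 1.0 + (pos_weight - 1.0) * target
    # softplus(-x) = log(1 + exp(-x)), rewritten so exp() never overflows
    softplus_neg_x = math.log1p(math.exp(-abs(x))) + max(-x, 0.0)
    return (1.0 - target) * x + log_weight * softplus_neg_x


def weighted_sigmoid_xent_naive(logit: float, target: float, pos_weight: float) -> float:
    # Direct transcription of the definition; can overflow or hit log(0) for large |logit|
    s = 1.0 / (1.0 + math.exp(-logit))
    return target * -math.log(s) * pos_weight + (1.0 - target) * -math.log(1.0 - s)


for logit, target in [(2.0, 1.0), (-1.5, 0.0), (0.3, 0.5)]:
    print(weighted_sigmoid_xent(logit, target, 3.0),
          weighted_sigmoid_xent_naive(logit, target, 3.0))
```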

- Embeddings

(params, ids, partition_strategy='mod',

name=None, validate_indices=True)

If len(params) > 1, the ids are partitioned according to partition_strategy:

1. If partition_strategy is "mod", each id is assigned to partition p = id % len(params). For example, 13 ids split across 5 partitions are assigned as:

[[0, 5, 10], [1, 6, 11], [2, 7, 12], [3, 8], [4, 9]]

2. If partition_strategy is "div", the assignment is:

[[0, 1, 2], [3, 4, 5], [6, 7, 8], [9, 10], [11, 12]]
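Both assignment schemes can be reproduced with a few lines of plain Python. In this sketch (hypothetical helper partition_ids, an illustration rather than TensorFlow's code), "mod" deals ids out round-robin, and "div" hands out contiguous blocks while giving the leftover ids to the first partitions, one each, which matches the 13-ids/5-partitions example.

```python
from typing import List


def partition_ids(num_ids: int, num_shards: int, strategy: str) -> List[List[int]]:
    """List the ids that land in each of `num_shards` partitions under 'mod' or 'div'."""
    shards: List[List[int]] = [[] for _ in range(num_shards)]
    if strategy == "mod":
        for i in range(num_ids):
            shards[i % num_shards].append(i)  # p = id % len(params)
    elif strategy == "div":
        base, extra = divmod(num_ids, num_shards)  # the first `extra` shards get one more id
        start = 0
        for p in range(num_shards):
            size = base + (1 if p < extra else 0)
            shards[p] = list(range(start, start + size))  # contiguous block of ids
            start += size
    else:
        raise ValueError("strategy must be 'mod' or 'div'")
    return shards


print(partition_ids(13, 5, "mod"))  # [[0, 5, 10], [1, 6, 11], [2, 7, 12], [3, 8], [4, 9]]
print(partition_ids(13, 5, "div"))  # [[0, 1, 2], [3, 4, 5], [6, 7, 8], [9, 10], [11, 12]]
```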

sp_ids, sp_weights, partition_strategy='mod',

name=None, combiner='mean')

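1. sp_ids is an N x M sparse tensor of int64 ids, where N is the batch size and M is arbitrary.

2. sp_weights is a sparse tensor of floating-point weights with the same shape as sp_ids; if it is None, all weights are 1.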

- Recurrent Neural Networks

sequence_length=None, scope=None)

initial_state=None, dtype=None, parallel_iterations=None,

swap_memory=False, time_major=False, scope=None)

Unlike the plain rnn function, this function unrolls the network dynamically according to the input.

Returns (outputs, state).

sequence_length=None, scope=None)

initial_state_fw=None, initial_state_bw=None, dtype=None,

sequence_length=None, scope=None)

(outputs, output_state_fw, output_state_bw)

- Evaluation

Checks whether the targets are among the top k entries of predictions. Returns a bool tensor with the same length as predictions.
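A pure-Python sketch of the top-k membership test, assuming the row above describes tf.nn.in_top_k (hypothetical helper in_top_k; predictions is a list of per-class scores for each example, targets a list of class indices). It counts how many classes score strictly higher than the target, so classes tied with the target at the k-th place all count as inside the top k, which is how TensorFlow documents the tie case.

```python
from typing import List


def in_top_k(predictions: List[List[float]], targets: List[int], k: int) -> List[bool]:
    """For each example, is the target class among the k highest-scoring classes?"""
    result = []
    for scores, target in zip(predictions, targets):
        # Count classes that score strictly higher than the target class
        higher = sum(1 for s in scores if s > scores[target])
        result.append(higher < k)
    return result


preds = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2]]
print(in_top_k(preds, [2, 2], k=2))  # [True, False]
```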

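- Candidate Sampling

For multi-class and multi-label models with a very large number of classes, a full softmax consumes a great deal of time and memory. Candidate sampling speeds up training by using only a small subset of the classes and labels as supervision.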

num_classes, num_true=1, sampled_values=None,

remove_accidental_hits=False, partition_strategy='mod',

name='nce_loss')

num_sampled, num_classes, num_true=1, sampled_values=None,

remove_accidental_hits=True, partition_strategy='mod',

name='sampled_softmax_loss')

See Jean et al., 2014, Section 3.

num_sampled, unique, range_max, seed=None, name=None)

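Returns a 3-tuple:

1. sampled_candidates: the set of sampled candidates.

2. The expected count of the true_classes, as floating-point values.

3. The expected count of the sampled_candidates, as floating-point values.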

num_sampled, unique, range_max, seed=None, name=None)

(true_classes, num_true, num_sampled, unique,

range_max, seed=None, name=None)

Returns a 3-tuple.

num_sampled, unique, range_max, vocab_file='',

distortion=1.0, num_reserved_ids=0, num_shards=1,

shard=0, unigrams=(), seed=None, name=None)

Saving and restoring variables

sharded=False, max_to_keep=5,

keep_checkpoint_every_n_hours=10000.0,

name=None, restore_sequentially=False,

saver_def=None, builder=None)

var_list specifies the variables to save and restore.

latest_filename=None, meta_graph_suffix='meta',

write_meta_graph=True)
