1.安装pyinstaller https://www.jb51.net/article/177160.htm 2.安装pywin32 https://www.jb51.net/article/187388.htm 3.安装其他模块 注意点: scrapy用pyinstaller打包不能用 cmdline.execute('scrapy crawl douban -o test.csv --nolog

1.安装pyinstaller https://www.jb51.net/article/177160.htm

2.安装pywin32 https://www.jb51.net/article/187388.htm

3.安装其他模块

注意点:

scrapy用pyinstaller打包不能用

cmdline.execute('scrapy crawl douban -o test.csv --nolog'.split())

我用的是CrawlerProcess方式来输出

举个栗子:

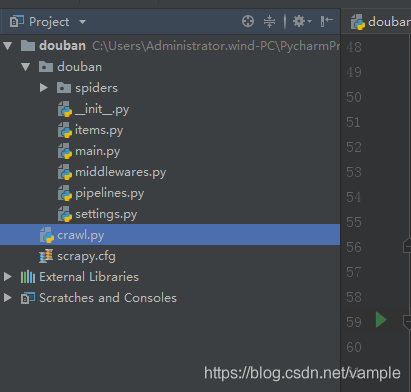

1、在scrapy项目根目录下建一个crawl.py(你可以自己定义)如下图

cralw.py代码如下

# -*- coding: utf-8 -*- from scrapy.crawler import CrawlerProcess from scrapy.utils.project import get_project_settings from douban.spiders.douban_spider import Douban_spider #打包需要的import import urllib.robotparser import scrapy.spiderloader import scrapy.statscollectors import scrapy.logformatter import scrapy.dupefilters import scrapy.squeues import scrapy.extensions.spiderstate import scrapy.extensions.corestats import scrapy.extensions.telnet import scrapy.extensions.logstats import scrapy.extensions.memusage import scrapy.extensions.memdebug import scrapy.extensions.feedexport import scrapy.extensions.closespider import scrapy.extensions.debug import scrapy.extensions.httpcache import scrapy.extensions.statsmailer import scrapy.extensions.throttle import scrapy.core.scheduler import scrapy.core.engine import scrapy.core.scraper import scrapy.core.spidermw import scrapy.core.downloader import scrapy.downloadermiddlewares.stats import scrapy.downloadermiddlewares.httpcache import scrapy.downloadermiddlewares.cookies import scrapy.downloadermiddlewares.useragent import scrapy.downloadermiddlewares.httpproxy import scrapy.downloadermiddlewares.ajaxcrawl import scrapy.downloadermiddlewares.chunked import scrapy.downloadermiddlewares.decompression import scrapy.downloadermiddlewares.defaultheaders import scrapy.downloadermiddlewares.downloadtimeout import scrapy.downloadermiddlewares.httpauth import scrapy.downloadermiddlewares.httpcompression import scrapy.downloadermiddlewares.redirect import scrapy.downloadermiddlewares.retry import scrapy.downloadermiddlewares.robotstxt import scrapy.spidermiddlewares.depth import scrapy.spidermiddlewares.httperror import scrapy.spidermiddlewares.offsite import scrapy.spidermiddlewares.referer import scrapy.spidermiddlewares.urllength import scrapy.pipelines import scrapy.core.downloader.handlers.http import scrapy.core.downloader.contextfactory from douban.pipelines import DoubanPipeline from douban.items import DoubanItem import douban.settings if __name__ == '__main__': setting = get_project_settings() process = CrawlerProcess(settings=setting) process.crawl(Douban_spider) process.start()

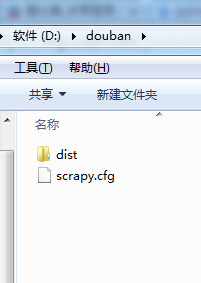

2、在crawl.py目录下pyinstaller crawl.py 生成dist,build(可删)和crawl.spec(可删)。

3、在crawl.exe目录下创建文件夹scrapy,然后到自己安装的scrapy文件夹中把VERSION和mime.types两个文件复制到刚才创建的scrapy文件夹中。

4、发布程序 包括douban/dist 和douban/scrapy.cfg

如果没有scrapy.cfg无法读取settings.py和pipelines.py的配置

5、在另外一台机器上测试成功

6、对于自定义的pipelines和settings,貌似用pyinstaller打包后的 exe无法读取到settings和pipelines,哪位高手看看能解决这个问题???

到此这篇关于Pyinstaller打包Scrapy项目的实现步骤的文章就介绍到这了,更多相关Pyinstaller打包Scrapy内容请搜索易盾网络以前的文章或继续浏览下面的相关文章希望大家以后多多支持易盾网络!