Sleuth + logback: setting the traceid and custom information

Background:

In a distributed system, how do you quickly locate the request logs of a particular user?

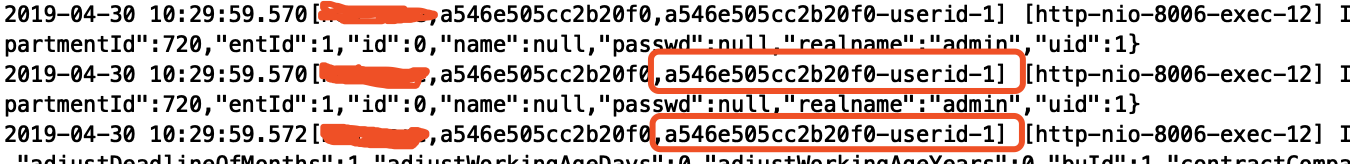

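The traceid generated by Sleuth lets you follow a single request, but you often also need the mapping between a traceid and a particular user, so that this user's logs are easy to find.

Solution: register a filter that runs right after Sleuth's tracing filter and puts the user ID into the MDC.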

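// Imports assume Spring Cloud Sleuth 2.x (Brave) and the javax.servlet API.
import java.io.IOException;

import javax.servlet.FilterChain;
import javax.servlet.ServletException;
import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import javax.servlet.http.HttpServletRequest;

import brave.Span;
import brave.Tracer;
import org.slf4j.MDC;
import org.springframework.cloud.sleuth.instrument.web.TraceWebServletAutoConfiguration;
import org.springframework.core.annotation.Order;
import org.springframework.stereotype.Component;
import org.springframework.web.filter.GenericFilterBean;

// Runs right after Sleuth's tracing filter, so the current span already exists.
// MdcConstant is the project's own constants class; USER_ID must match the
// key used in the logback pattern (userId).
@Component
@Order(TraceWebServletAutoConfiguration.TRACING_FILTER_ORDER + 1)
public class CustomHttpSpanExtractor extends GenericFilterBean {

    private final Tracer tracer;

    CustomHttpSpanExtractor(Tracer tracer) {
        this.tracer = tracer;
    }

    @Override
    public void doFilter(ServletRequest request, ServletResponse response,
            FilterChain chain) throws IOException, ServletException {
        Span currentSpan = this.tracer.currentSpan();
        if (currentSpan == null) {
            // No active trace: nothing to correlate, just continue.
            chain.doFilter(request, response);
            return;
        }
        HttpServletRequest httpRequest = (HttpServletRequest) request;
        try {
            // mdc(httpRequest, currentSpan.context().traceIdString());
            // Hard-coded for the demo; in practice resolve the user from httpRequest.
            MDC.put(MdcConstant.USER_ID, "userid-12345");
        } catch (Exception e) {
            e.printStackTrace();
        }
        try {
            chain.doFilter(request, response);
        } finally {
            // Required: MDC is backed by a ThreadLocal and container threads are pooled.
            // Remove only our own key so that Sleuth's trace entries stay intact.
            MDC.remove(MdcConstant.USER_ID);
        }
    }
}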
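The cleanup in the `finally` block matters because MDC values are stored per thread, and a servlet container reuses its worker threads. The following is a minimal sketch of that effect using only the JDK. `MiniMdc` is a hypothetical stand-in for `org.slf4j.MDC` (a `ThreadLocal` map, not the real class), and the single-thread executor plays the role of the container's thread pool.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical stand-in for org.slf4j.MDC: one map of diagnostic values per thread.
final class MiniMdc {
    private static final ThreadLocal<Map<String, String>> CONTEXT =
            ThreadLocal.withInitial(HashMap::new);

    static void put(String key, String value) { CONTEXT.get().put(key, value); }
    static String get(String key) { return CONTEXT.get().get(key); }
    static void remove(String key) { CONTEXT.get().remove(key); }
}

final class MdcLeakDemo {
    // Runs two "requests" on the same pooled thread and returns what the
    // second request finds under "userId" before it sets anything itself.
    static String userSeenBySecondRequest(boolean cleanUp) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Runnable first = () -> {
                MiniMdc.put("userId", "userid-12345");
                try {
                    // ... handle the first request ...
                } finally {
                    if (cleanUp) {
                        MiniMdc.remove("userId");
                    }
                }
            };
            Callable<String> second = () -> MiniMdc.get("userId");
            pool.submit(first).get();
            return pool.submit(second).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("without cleanup: " + userSeenBySecondRequest(false));
        System.out.println("with cleanup:    " + userSeenBySecondRequest(true));
    }
}
```

Without the cleanup, the second request inherits the first user's ID, which is exactly the kind of wrong attribution the filter is meant to prevent.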

logback configuration

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS}[${applicationName},%X{X-B3-TraceId:-},%X{userId:-}] [%thread] %-5level %logger{50} - %msg%n</pattern>
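To make the `%X{key:-default}` syntax concrete: the value is looked up in the MDC by key, and the text after `:-` (an empty string in the pattern above) is printed when the key is absent. The code below is a simplified model of that rule, not logback's implementation. `MdcPatternSketch` and `render` are hypothetical names, and only `%X{...}` tokens are handled.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class MdcPatternSketch {
    // Matches %X{key} and %X{key:-default}; group 1 = key, group 2 = default (null if omitted).
    private static final Pattern MDC_TOKEN =
            Pattern.compile("%X\\{([^}:]+)(?::-([^}]*))?\\}");

    // Replaces every %X{...} token with the MDC value, or with its default when the key is missing.
    static String render(String pattern, Map<String, String> mdc) {
        Matcher m = MDC_TOKEN.matcher(pattern);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String fallback = m.group(2) == null ? "" : m.group(2);
            String value = mdc.getOrDefault(m.group(1), fallback);
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String pattern = "[%X{X-B3-TraceId:-},%X{userId:-}]";
        // Both keys present in the MDC.
        System.out.println(render(pattern, Map.of("X-B3-TraceId", "abc123", "userId", "userid-12345")));
        // Empty MDC: both tokens fall back to the empty default.
        System.out.println(render(pattern, Map.of()));
    }
}
```

The key inside `%X{...}` has to be the same string that the filter passes to `MDC.put`, otherwise the field stays empty.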

Basic logback usage with a custom key

1. The logback dependency in pom.xml

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>4.11</version>
</dependency>

2. Configuration file: logback.xml

<?xml version="1.0" encoding="UTF-8"?>
<configuration scan="false" scanPeriod="5000" debug="false">
    <springProperty scope="context" name="springAppName" source="spring.application.name" defaultValue="defaultAppName"/>
    <timestamp key="bySecond" datePattern="yyyy-MM-dd HH:mm:ss"/>

    <appender name="STASH-REDIS" class="com.jlb.yts.common.util.aop.RedisAppender">
        <key>${springAppName:-}</key>
        <!-- <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>ALL</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter> -->
        <layout>
            <pattern>
                {
                "time": "%d{yyyy-MM-dd HH:mm:ss}",
                "application":"tech_cloud",
                "subapplication":"${springAppName:-}",
                "level": "%level",
                "trace": "%X{X-B3-TraceId:-}",
                "span": "%X{X-B3-SpanId:-}",
                "parent": "%X{X-B3-ParentSpanId:-}",
                "classname": "%logger{100}",
                "msg": "%message"
                }
            </pattern>
        </layout>
    </appender>

    <appender name="ASYNC" class="ch.qos.logback.classic.AsyncAppender">
        <!-- Do not lose logs. By default, once the queue is 80% full, TRACE, DEBUG and INFO events are discarded -->
        <discardingThreshold>0</discardingThreshold>
        <!-- Change the default queue depth; this value affects performance. The default is 256 -->
        <queueSize>5120</queueSize>
        <appender-ref ref="STASH-REDIS" />
    </appender>

    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder charset="UTF-8">
            <pattern>%d{yyyy-MM-dd HH:mm:ss} [%thread] %-5level %logger{40} - %msg%n</pattern>
        </encoder>
    </appender>

    <!-- com.jlb.yts is declared three times; the declarations are applied in order, so the last level (DEBUG) is the effective one -->
    <logger name="com.jlb.yts" additivity="true" level="ERROR">
        <appender-ref ref="ASYNC" />
    </logger>
    <logger name="com.jlb.yts" additivity="true" level="INFO">
        <appender-ref ref="ASYNC" />
    </logger>

    <!-- Print MongoDB statements -->
    <logger name="org.springframework.data.mongodb.core" level="DEBUG"/>

    <logger name="com.jlb.yts" additivity="true" level="DEBUG">
        <appender-ref ref="ASYNC" />
    </logger>

    <root level="INFO">
        <appender-ref ref="STDOUT" />
    </root>
</configuration>
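The `discardingThreshold` setting in the configuration above can be made concrete. As far as the logback documentation describes it, `AsyncAppender` drops TRACE, DEBUG and INFO events once less than 20% of the queue capacity remains, and a threshold of 0 keeps every event. The sketch below models only that decision. `AsyncDiscardSketch` and its method names are hypothetical, and this is not logback's code.

```java
final class AsyncDiscardSketch {
    // Levels in ascending order of severity.
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR }

    // Threshold used when none is configured: one fifth (20%) of the queue size.
    static int defaultThreshold(int queueSize) {
        return queueSize / 5;
    }

    // An event is dropped only if the queue is nearly full AND its level is INFO or lower.
    static boolean wouldDiscard(Level level, int remainingCapacity, int discardingThreshold) {
        boolean queueNearlyFull = remainingCapacity < discardingThreshold;
        boolean lowPriority = level.compareTo(Level.INFO) <= 0;
        return queueNearlyFull && lowPriority;
    }

    public static void main(String[] args) {
        int queueSize = 5120;
        int byDefault = defaultThreshold(queueSize);
        // Only 100 free slots left in a queue of 5120.
        System.out.println("INFO, default threshold:  " + wouldDiscard(Level.INFO, 100, byDefault));
        System.out.println("ERROR, default threshold: " + wouldDiscard(Level.ERROR, 100, byDefault));
        System.out.println("INFO, threshold 0:        " + wouldDiscard(Level.INFO, 100, 0));
    }
}
```

The trade-off of a threshold of 0 is that nothing is dropped, so when the queue is completely full the logging thread has to wait unless the appender is configured not to block.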

3. Custom appender

package com.jlb.yts.common.util.aop;

import ch.qos.logback.classic.spi.ILoggingEvent;
import ch.qos.logback.core.Layout;
import ch.qos.logback.core.UnsynchronizedAppenderBase;
import ch.qos.logback.core.filter.Filter;
import com.jlb.yts.common.util.redis.RedisHandle;
import com.jlb.yts.common.util.redis.ReidsConnect;
import net.logstash.logback.layout.LoggingEventCompositeJsonLayout;

// Appender that renders each event with the configured layout and pushes it onto a Redis list.
public class RedisAppender extends UnsynchronizedAppenderBase<ILoggingEvent> {

    // Project-specific Redis helper, configured from redis-log.properties.
    private static final RedisHandle redisHandle =
            ReidsConnect.init("redis-log.properties", "redis-log.properties");

    public static String service;

    // Default layout; replaced when logback.xml declares a <layout> element.
    Layout<ILoggingEvent> layout;

    // Name of the Redis list, set from the <key> element.
    String key = null;

    public RedisAppender() {
        layout = new LoggingEventCompositeJsonLayout();
    }

    public void setFilter(Filter<ILoggingEvent> filter) {
        if (filter != null) {
            this.addFilter(filter);
        }
    }

    @Override
    protected void append(ILoggingEvent event) {
        try {
            String json = layout.doLayout(event);
            redisHandle.addList(key, json);
        } catch (Exception e) {
            // Report through logback's status system instead of printing to stderr.
            addError("Failed to push log event to Redis", e);
        }
    }

    public String getKey() {
        return key;
    }

    public void setKey(String key) {
        // Prefix the key with the environment name (APPLICATION_ENV), defaulting to "dev".
        String env = System.getenv("APPLICATION_ENV");
        String prefix = (env == null) ? "dev" : env;
        this.key = prefix + "-" + key;
    }

    public Layout<ILoggingEvent> getLayout() {
        return layout;
    }

    public void setLayout(Layout<ILoggingEvent> layout) {
        this.layout = layout;
    }
}
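Whatever reads the log entries back out of Redis needs to know the list name, which `setKey` derives from the `APPLICATION_ENV` environment variable and the `<key>` value in logback.xml. Here is a minimal sketch of that naming rule, with the environment passed in as a map so it can be tried without setting the variable. `RedisKeySketch`, `buildKey` and the service name `order-service` are hypothetical.

```java
import java.util.Map;

final class RedisKeySketch {
    // Same rule as setKey: "<APPLICATION_ENV or dev>-<configured key>".
    static String buildKey(Map<String, String> env, String configuredKey) {
        String prefix = env.getOrDefault("APPLICATION_ENV", "dev");
        return prefix + "-" + configuredKey;
    }

    public static void main(String[] args) {
        // With the real process environment.
        System.out.println(buildKey(System.getenv(), "order-service"));
        // With an explicit environment, as it would look in production.
        System.out.println(buildKey(Map.of("APPLICATION_ENV", "prod"), "order-service"));
    }
}
```

Because the configured key is `${springAppName:-}`, each service in each environment ends up with its own list.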

4. Code example

// Note: all imported classes come from slf4j.
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class LogbackTest {

    private static final Logger LOGGER = LoggerFactory.getLogger(LogbackTest.class);

    public static void main(String[] args) {
        LOGGER.trace("logback -- trace log -- printed");
        LOGGER.debug("logback -- debug log -- printed");
        LOGGER.info("logback -- info log -- printed");
        LOGGER.warn("logback -- warn log -- printed");
        LOGGER.error("logback -- error log -- printed");
    }
}

5. Adding a custom key to the log

import org.slf4j.MDC;

MDC.put(key, value);

logback.xml configuration

"key":"%X{key:-}"
