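There is a lot of ground to cover, so here is the TL;DR first:

A gRPC call is just an HTTP request whose request and response streams are handled a little differently; compared with WebAPI, the only place it is faster is Protobuf.

Complete experiment code: GrpcWithOutSDK.zip

A small demo is also attached, an online chat room built on Controller and HttpClient: ChatRoomOnController.zip

This post is fairly long and touches on many basics. Some conclusions are stated directly without context, and for reasons of space they are not explained in detail here. If something is unclear, feel free to discuss it politely or search the web.

This post reflects only my own experiments and opinions, which may be biased; please judge for yourself.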

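1. Background

I often come across articles online that pair gRPC with the phrase "high performance". I have also interviewed a number of fellow developers, and when asked how gRPC compares with WebAPI, they all answer that it is faster and performs better. How much faster varies from answer to answer, anywhere from several times to dozens of times, but the general agreement is that "gRPC is much faster". As for where exactly that speed comes from, the answers strike me as less accurate.

So let's explore what actually differs between gRPC and WebAPI, and where gRPC is faster.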

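These are just the usual steps for using gRPC in ASP.NET Core.

Create the server

- Create an ASP.NET Core gRPC project

- Add a test reverse.proto that exercises gRPC's communication modes, and generate the server code from it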

syntax = "proto3";

option csharp_namespace = "GrpcWithOutSDK";

package reverse;

service Reverse {

rpc Simple (Request) returns (Reply);

rpc ClientSide (stream Request) returns (Reply);

rpc ServerSide (Request) returns (stream Reply);

rpc Bidirectional (stream Request) returns (stream Reply);

}

message Request {

string message = 1;

}

message Reply {

string message = 1;

}

- Create ReverseService.cs to implement the method logic

public class ReverseService : Reverse.ReverseBase

{

private readonly ILogger<ReverseService> _logger;

public ReverseService(ILogger<ReverseService> logger)

{

_logger = logger;

}

private static Reply CreateReplay(Request request)

{

return new Reply

{

Message = new string(request.Message.Reverse().ToArray())

};

}

private void DisplayReceivedMessage(Request request, [CallerMemberName] string? methodName = null)

{

_logger.LogInformation($"{methodName} Received: {request.Message}");

}

public override async Task Bidirectional(IAsyncStreamReader<Request> requestStream, IServerStreamWriter<Reply> responseStream, ServerCallContext context)

{

while (await requestStream.MoveNext())

{

DisplayReceivedMessage(requestStream.Current);

await responseStream.WriteAsync(CreateReplay(requestStream.Current));

}

}

public override async Task<Reply> ClientSide(IAsyncStreamReader<Request> requestStream, ServerCallContext context)

{

var total = 0;

while (await requestStream.MoveNext())

{

total++;

DisplayReceivedMessage(requestStream.Current);

}

return new Reply

{

Message = $"{nameof(ServerSide)} Received Over. Total: {total}"

};

}

public override async Task ServerSide(Request request, IServerStreamWriter<Reply> responseStream, ServerCallContext context)

{

DisplayReceivedMessage(request);

for (int i = 0; i < 5; i++)

{

await responseStream.WriteAsync(CreateReplay(request));

}

}

public override Task<Reply> Simple(Request request, ServerCallContext context)

{

return Task.FromResult(CreateReplay(request));

}

}

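Finally, remember to call app.MapGrpcService<ReverseService>();

- Create a console project and reference the Google.Protobuf, Grpc.Net.Client, and Grpc.Tools packages

- Reference the reverse.proto written earlier and generate the client code from it

- Write a few methods that test each communication mode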

private static async Task Bidirectional(Reverse.ReverseClient client)

{

var stream = client.Bidirectional();

var sendTask = Task.Run(async () =>

{

for (int i = 0; i < 10; i++)

{

await stream.RequestStream.WriteAsync(new() { Message = $"{nameof(Bidirectional)}-{i}" });

}

await stream.RequestStream.CompleteAsync();

});

var receiveTask = Task.Run(async () =>

{

while (await stream.ResponseStream.MoveNext(default))

{

DisplayReceivedMessage(stream.ResponseStream.Current);

}

});

await Task.WhenAll(sendTask, receiveTask);

}

private static async Task ClientSide(Reverse.ReverseClient client)

{

var stream = client.ClientSide();

for (int i = 0; i < 5; i++)

{

await stream.RequestStream.WriteAsync(new() { Message = $"{nameof(ClientSide)}-{i}" });

}

await stream.RequestStream.CompleteAsync();

var reply = await stream.ResponseAsync;

DisplayReceivedMessage(reply);

}

private static async Task Sample(Reverse.ReverseClient client)

{

var reply = await client.SimpleAsync(new() { Message = nameof(Sample) });

DisplayReceivedMessage(reply);

}

private static async Task ServerSide(Reverse.ReverseClient client)

{

var stream = client.ServerSide(new() { Message = nameof(ServerSide) });

while (await stream.ResponseStream.MoveNext(default))

{

DisplayReceivedMessage(stream.ResponseStream.Current);

}

}

- Test code

const string Host = "http://localhost:5035";

var channel = GrpcChannel.ForAddress(Host);

var grpcClient = new Reverse.ReverseClient(channel);

await Sample(grpcClient);

await ClientSide(grpcClient);

await ServerSide(grpcClient);

await Bidirectional(grpcClient);

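- Set the Microsoft.AspNetCore log level on the server to Information so that request logs are printed

- Run the server and the client

- If all goes well, the server prints output like the following (split by method for readability, with unimportant details omitted)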

info: Microsoft.AspNetCore.Hosting.Diagnostics[1]

Request starting HTTP/2 POST http://localhost:5035/reverse.Reverse/Simple application/grpc -

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[0]

Executing endpoint 'gRPC - /reverse.Reverse/Simple'

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[1]

Executed endpoint 'gRPC - /reverse.Reverse/Simple'

info: Microsoft.AspNetCore.Hosting.Diagnostics[2]

Request finished HTTP/2 POST http://localhost:5035/reverse.Reverse/Simple application/grpc - - 200 - application/grpc 99.1956ms

info: Microsoft.AspNetCore.Hosting.Diagnostics[1]

Request starting HTTP/2 POST http://localhost:5035/reverse.Reverse/ClientSide application/grpc -

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[0]

Executing endpoint 'gRPC - /reverse.Reverse/ClientSide'

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[1]

Executed endpoint 'gRPC - /reverse.Reverse/ClientSide'

info: Microsoft.AspNetCore.Hosting.Diagnostics[2]

Request finished HTTP/2 POST http://localhost:5035/reverse.Reverse/ClientSide application/grpc - - 200 - application/grpc 21.9445ms

info: Microsoft.AspNetCore.Hosting.Diagnostics[1]

Request starting HTTP/2 POST http://localhost:5035/reverse.Reverse/ServerSide application/grpc -

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[0]

Executing endpoint 'gRPC - /reverse.Reverse/ServerSide'

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[1]

Executed endpoint 'gRPC - /reverse.Reverse/ServerSide'

info: Microsoft.AspNetCore.Hosting.Diagnostics[2]

Request finished HTTP/2 POST http://localhost:5035/reverse.Reverse/ServerSide application/grpc - - 200 - application/grpc 12.7054ms

info: Microsoft.AspNetCore.Hosting.Diagnostics[1]

Request starting HTTP/2 POST http://localhost:5035/reverse.Reverse/Bidirectional application/grpc -

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[0]

Executing endpoint 'gRPC - /reverse.Reverse/Bidirectional'

info: Microsoft.AspNetCore.Routing.EndpointMiddleware[1]

Executed endpoint 'gRPC - /reverse.Reverse/Bidirectional'

info: Microsoft.AspNetCore.Hosting.Diagnostics[2]

Request finished HTTP/2 POST http://localhost:5035/reverse.Reverse/Bidirectional application/grpc - - 200 - application/grpc 41.2414ms

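Analyzing the logs, we can see that:

- Every gRPC communication mode follows the same execution logic: each call is one complete HTTP request cycle;

- The request protocol is `HTTP/2`;

- The method is always `POST`;

- Every gRPC method is mapped to the endpoint `/{package name}.{service name}/{method name}`;

- The `ContentType` of both the request and the response is `application/grpc`;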
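The endpoint mapping in the list above can be written down as a tiny helper. This is only a sketch: `BuildGrpcPath` is a hypothetical name introduced for illustration and is not part of any gRPC SDK.

```csharp
using System;

// Hypothetical helper: composes the route that a gRPC method is mapped to,
// following the /{package}.{service}/{method} pattern seen in the logs.
static string BuildGrpcPath(string package, string service, string method)
    => $"/{package}.{service}/{method}";

// Values taken from reverse.proto: package "reverse", service "Reverse".
Console.WriteLine(BuildGrpcPath("reverse", "Reverse", "Simple"));
Console.WriteLine(BuildGrpcPath("reverse", "Reverse", "Bidirectional"));
```

The first line printed is /reverse.Reverse/Simple, which matches the URL in the server log.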

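If the analysis above is right, the data can only be carried in the request stream and the response stream, so we can try to capture the contents of those streams and look at the details.

With ASP.NET Core middleware, dumping the contents of the request and response streams is fairly easy.

The request stream is read-only and the response stream is write-only, so we need two proxy streams to replace the originals and copy everything that passes through into a MemoryStream for inspection.

The two streams are ReadCacheProxyStream.cs and WriteCacheProxyStream.cs. Here is the code: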

public class ReadCacheProxyStream : Stream

{

private readonly Stream _innerStream;

public MemoryStream CachedStream { get; } = new MemoryStream(1024);

public override bool CanRead => _innerStream.CanRead;

public override bool CanSeek => false;

public override bool CanWrite => false;

public override long Length => _innerStream.Length;

public override long Position { get => _innerStream.Position; set => throw new NotSupportedException(); }

public ReadCacheProxyStream(Stream innerStream)

{

_innerStream = innerStream;

}

public override void Flush() => throw new NotSupportedException();

public override Task FlushAsync(CancellationToken cancellationToken) => _innerStream.FlushAsync(cancellationToken);

public override int Read(byte[] buffer, int offset, int count) => throw new NotSupportedException();

public override async ValueTask<int> ReadAsync(Memory<byte> buffer, CancellationToken cancellationToken = default)

{

var len = await _innerStream.ReadAsync(buffer, cancellationToken);

if (len > 0)

{

CachedStream.Write(buffer.Span.Slice(0, len));

}

return len;

}

public override long Seek(long offset, SeekOrigin origin) => throw new NotSupportedException();

public override void SetLength(long value) => throw new NotSupportedException();

public override void Write(byte[] buffer, int offset, int count) => throw new NotSupportedException();

}

public class WriteCacheProxyStream : Stream

{

private readonly Stream _innerStream;

public MemoryStream CachedStream { get; } = new MemoryStream(1024);

public override bool CanRead => false;

public override bool CanSeek => false;

public override bool CanWrite => _innerStream.CanWrite;

public override long Length => _innerStream.Length;

public override long Position { get => _innerStream.Position; set => throw new NotSupportedException(); }

public WriteCacheProxyStream(Stream innerStream)

{

_innerStream = innerStream;

}

public override void Flush() => throw new NotSupportedException();

public override Task FlushAsync(CancellationToken cancellationToken) => _innerStream.FlushAsync(cancellationToken);

public override int Read(byte[] buffer, int offset, int count) => throw new NotSupportedException();

public override long Seek(long offset, SeekOrigin origin) => throw new NotSupportedException();

public override void SetLength(long value) => throw new NotSupportedException();

public override void Write(byte[] buffer, int offset, int count) => throw new NotSupportedException();

public override async ValueTask WriteAsync(ReadOnlyMemory<byte> buffer, CancellationToken cancellationToken = default)

{

await _innerStream.WriteAsync(buffer, cancellationToken);

CachedStream.Write(buffer.Span);

}

}

- Replace the streams in the request pipeline

Add the following middleware at the very beginning of the request pipeline

app.Use(async (context, next) =>

{

var originRequestBody = context.Request.Body;

var originResponseBody = context.Response.Body;

var requestCacheStream = new ReadCacheProxyStream(originRequestBody);

var responseCacheStream = new WriteCacheProxyStream(originResponseBody);

context.Request.Body = requestCacheStream;

context.Response.Body = responseCacheStream;

try

{

await next();

}

finally

{

await context.Response.CompleteAsync();

// Whether the original streams need to be restored is not discussed here

context.Request.Body = originRequestBody;

context.Response.Body = originResponseBody;

var requestData = requestCacheStream.CachedStream.ToArray();

var responseData = responseCacheStream.CachedStream.ToArray();

}

});

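- Next, set a breakpoint at the end of the finally block, then run the server and the client; requestData and responseData in the middleware now show the data exchanged

In theory we could simply parse the data with Protobuf, but the goal here is to hand-write a very simple codec.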

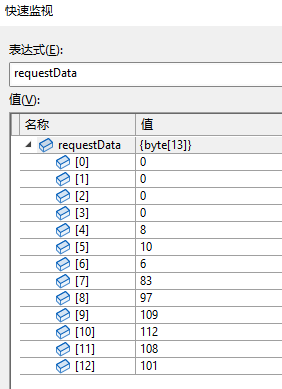

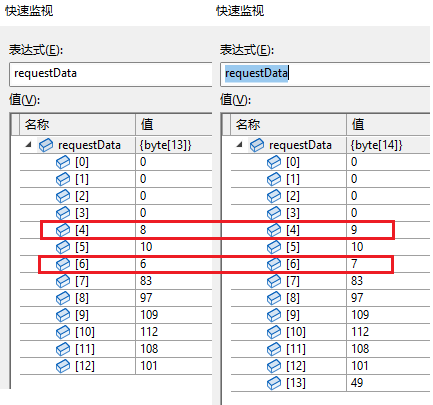

The client runs the Sample method, and we capture requestData and responseData on the server:

requestData

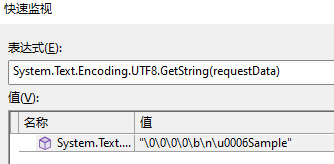

This form is hard to read. Since our Request message has only one string field, the data must contain that string if the earlier guess is right. Let's decode it as text and see:

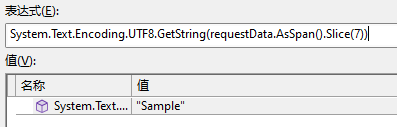

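Sure enough, the data contains Sample. Let's strip the extra bytes and take a look:

So what are the first 7 bytes for? Let's change the request message from Sample to Sample1 and analyze again:

Now the pattern is fairly clear. After a little analysis we can make a first summary: the 5th byte is the total length of the message; the 6th byte looks like some kind of field descriptor and is always 10 for the current message body; the 7th byte is the length of the Request.message field.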

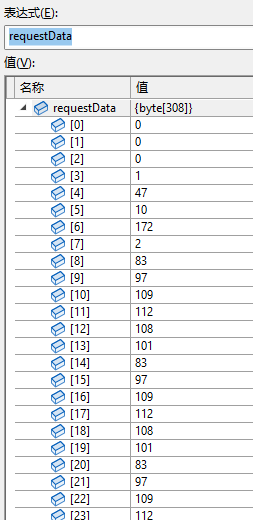

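That is a bit hasty, though: a byte tops out at 255, so let's see what the structure looks like when the content is longer than 255 bytes. Change Sample to 50 repetitions of Sample and analyze again: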

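Things get complicated quickly... Still, the 6th byte is again 10, so the first 5 bytes should describe the total message length. What is the relationship between [0,0,0,1,47] and the length 303 (note: 308-5)? After some experimenting: assume for now that the 1st byte is always 0; bytes 2-5 are a big-endian uint32 that declares the total message length (1 × 256 + 47 = 303).

How the 7th and 8th bytes turn into 300 is harder to work out... Never mind, let's not handle oversized content for now (the exact encoding rules are described in protocol-buffers-encoding).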
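To make the layout concrete, here is a minimal sketch that rebuilds the 308-byte frame for 50 repetitions of Sample by hand and reads it back. It relies on what the Protobuf encoding documentation describes: the byte 10 is the field tag (field number 1 shifted left by 3, combined with wire type 2 for length-delimited data), and the string length that follows is a base-128 varint, which is why 300 takes two bytes (0xAC 0x02). `ReadVarint32` is a helper name introduced here for illustration only.

```csharp
using System;
using System.Buffers.Binary;
using System.Linq;
using System.Text;

// Decodes a Protobuf base-128 varint starting at data[offset] and advances offset past it.
static int ReadVarint32(ReadOnlySpan<byte> data, ref int offset)
{
    int result = 0;
    for (int shift = 0; shift < 35; shift += 7)
    {
        byte b = data[offset++];
        result |= (b & 0x7F) << shift;   // the low 7 bits carry the value
        if ((b & 0x80) == 0)             // high bit clear: this was the last byte
        {
            return result;
        }
    }
    throw new FormatException("Varint is longer than 5 bytes.");
}

// Rebuild the frame by hand: 5-byte prefix + tag + 2-byte varint length + 300 bytes of text.
string text = string.Concat(Enumerable.Repeat("Sample", 50));
byte[] body = Encoding.UTF8.GetBytes(text);
byte[] frame = new byte[5 + 1 + 2 + body.Length];               // 308 bytes in total
BinaryPrimitives.WriteInt32BigEndian(frame.AsSpan(1, 4), 303);  // bytes 2-5 become 0, 0, 1, 47
frame[5] = 10;    // tag: (field number 1 << 3) | wire type 2
frame[6] = 0xAC;  // 300 as a varint, low 7 bits first with the continuation bit set
frame[7] = 0x02;  // remaining bits of 300
body.CopyTo(frame, 8);

// Read it back.
int messageLength = BinaryPrimitives.ReadInt32BigEndian(frame.AsSpan(1, 4));
int position = 5;
int tag = ReadVarint32(frame, ref position);
int fieldNumber = tag >> 3;
int wireType = tag & 7;
int stringLength = ReadVarint32(frame, ref position);
string content = Encoding.UTF8.GetString(frame, position, stringLength);

Console.WriteLine($"{messageLength} {fieldNumber} {wireType} {stringLength}"); // prints "303 1 2 300"
```

For strings shorter than 128 bytes the varint is a single byte equal to the length, which is why the one-byte reading used in the rest of this post works for the short test messages.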

responseData

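On inspection, the structure is the same as requestData (because the Request and Reply messages are declared with the same structure), so there is not much more to say here; you can debug it yourself to check.

requestData and responseData

Analysis shows that in a streaming call each message has the same structure as a single message, and the messages are written one after another into the request or response stream. Again, you can debug it yourself to check. Here is the decoder based on the summary so far: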

public static IEnumerable<string> ReadMessages(byte[] originData)

{

var slice = originData.AsMemory();

while (!slice.IsEmpty)

{

var messageLen = BinaryPrimitives.ReadInt32BigEndian(slice.Slice(1, 4).Span);

var messageData = slice.Slice(5, messageLen);

slice = slice.Slice(5 + messageLen);

int len = messageData.Span[1];

var content = Encoding.UTF8.GetString(messageData.Slice(2, len).Span);

yield return content;

}

}

Then display the contents in the middleware

TempMessageCodecUtil.DisplayMessages(requestData);

TempMessageCodecUtil.DisplayMessages(responseData);

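Run the program again, and the console shows the request and response message contents correctly.

Implementing a server with Controller that can talk to the gRPC Client SDK

Based on the analysis so far, in theory we only need to satisfy the following:

- The request protocol is `HTTP/2`;

- The method is always `POST`;

- Every gRPC method is mapped to the endpoint `/{package name}.{service name}/{method name}`;

- The `ContentType` of both the request and the response is `application/grpc`;

Then, as long as we parse the data structure from the request stream correctly and write a correct data structure to the response stream, we can answer requests from the gRPC client.

- We now need an encoder that encodes a string into the Reply message format, and a decoder that reads Request messages from the request stream. Here is the code. Encoder: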

public static byte[] BuildMessage(string message)

{

var contentData = Encoding.UTF8.GetBytes(message);

if (contentData.Length > 127)

{

throw new ArgumentException();

}

var messageData = new byte[contentData.Length + 7];

Array.Copy(contentData, 0, messageData, 7, contentData.Length);

messageData[5] = 10;

messageData[6] = (byte)contentData.Length;

BinaryPrimitives.WriteInt32BigEndian(messageData.AsSpan().Slice(1), contentData.Length + 2);

return messageData;

}
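The encoder above rejects content longer than 127 bytes because it writes the string length as a single byte. As a hedged sketch of how that limit could be lifted, the variant below writes the length as a Protobuf varint instead. `WriteVarint32` and `BuildMessageAnyLength` are names made up for this example; the rest of the post keeps using the simpler `BuildMessage`.

```csharp
using System;
using System.Buffers.Binary;
using System.Collections.Generic;
using System.Text;

// Appends value as a base-128 varint: 7 value bits per byte, least significant group first.
static void WriteVarint32(List<byte> output, uint value)
{
    while (value >= 0x80)
    {
        output.Add((byte)((value & 0x7F) | 0x80)); // continuation bit set: more bytes follow
        value >>= 7;
    }
    output.Add((byte)value);
}

// Same frame layout as BuildMessage, but without the 127-byte limit.
static byte[] BuildMessageAnyLength(string message)
{
    byte[] content = Encoding.UTF8.GetBytes(message);
    var body = new List<byte>(content.Length + 6) { 10 };  // tag for field 1, length-delimited
    WriteVarint32(body, (uint)content.Length);
    body.AddRange(content);

    byte[] frame = new byte[5 + body.Count];               // frame[0] stays 0: not compressed
    BinaryPrimitives.WriteInt32BigEndian(frame.AsSpan(1, 4), body.Count);
    body.CopyTo(frame, 5);
    return frame;
}

byte[] small = BuildMessageAnyLength("Sample");
byte[] large = BuildMessageAnyLength(new string('a', 300));
Console.WriteLine(string.Join(",", small));      // prints "0,0,0,0,8,10,6,83,97,109,112,108,101"
Console.WriteLine(string.Join(",", large[..8])); // prints "0,0,0,1,47,10,172,2"
```

For short strings the output is byte-for-byte what `BuildMessage` produces; only the length field grows once the content reaches 128 bytes.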

Decoder:

private async IAsyncEnumerable<string> ReadMessageAsync([EnumeratorCancellation] CancellationToken cancellationToken)

{

var pipeReader = Request.BodyReader;

while (!cancellationToken.IsCancellationRequested)

{

var readResult = await pipeReader.ReadAsync(cancellationToken);

var buffer = readResult.Buffer;

if (readResult.IsCompleted

&& buffer.IsEmpty)

{

yield break;

}

if (buffer.Length < 5)

{

pipeReader.AdvanceTo(buffer.Start, buffer.End);

continue;

}

var messageBuffer = buffer.IsSingleSegment

? buffer.First

: buffer.ToArray();

var messageLen = BinaryPrimitives.ReadInt32BigEndian(messageBuffer.Slice(1, 4).Span);

if (buffer.Length < messageLen + 5)

{

pipeReader.AdvanceTo(buffer.Start, buffer.End);

continue;

}

messageBuffer = messageBuffer.Slice(5);

int len = messageBuffer.Span[1];

var content = Encoding.UTF8.GetString(messageBuffer.Slice(2, len).Span);

yield return content;

pipeReader.AdvanceTo(readResult.Buffer.GetPosition(7 + len));

}

}

- Implement a ReverseController.cs that maps the methods in reverse.proto and implements the same logic as ReverseService.cs. The code is as follows:

[Route("reverse.Reverse")]

[ApiController]

public class ReverseController : ControllerBase

{

[HttpPost]

[Route(nameof(Bidirectional))]

public async Task Bidirectional()

{

await foreach (var item in ReadMessageAsync(HttpContext.RequestAborted))

{

DisplayReceivedMessage(item);

await ReplayReverseAsync(item);

}

}

[HttpPost]

[Route(nameof(ClientSide))]

public async Task ClientSide()

{

var total = 0;

await foreach (var item in ReadMessageAsync(HttpContext.RequestAborted))

{

total++;

DisplayReceivedMessage(item);

}

await ReplayAsync($"{nameof(ServerSide)} Received Over. Total: {total}");

}

[HttpPost]

[Route(nameof(ServerSide))]

public async Task ServerSide()

{

string message = null!;

await foreach (var item in ReadMessageAsync(HttpContext.RequestAborted))

{

message = item;

}

DisplayReceivedMessage(message);

for (int i = 0; i < 5; i++)

{

await ReplayReverseAsync(message);

}

}

[HttpPost]

[Route(nameof(Simple))]

public async Task Simple()

{

string message = null!;

await foreach (var item in ReadMessageAsync(HttpContext.RequestAborted))

{

message = item;

}

DisplayReceivedMessage(message);

await ReplayReverseAsync(message);

}

private async Task ReplayAsync(string message)

{

if (!Response.HasStarted)

{

Response.Headers.ContentType = "application/grpc";

Response.AppendTrailer("grpc-status", "0");

await Response.StartAsync();

}

await Response.Body.WriteAsync(TempMessageCodecUtil.BuildMessage(message));

}

private Task ReplayReverseAsync(string rawMessage) => ReplayAsync(new string(rawMessage.Reverse().ToArray()));

// Other members omitted

}

Finally, remember to call services.AddControllers() and app.MapControllers(), and remove the gRPC service mapping;

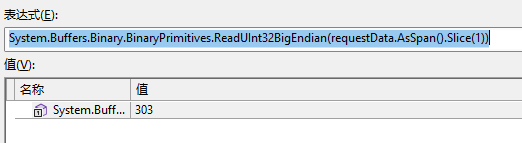

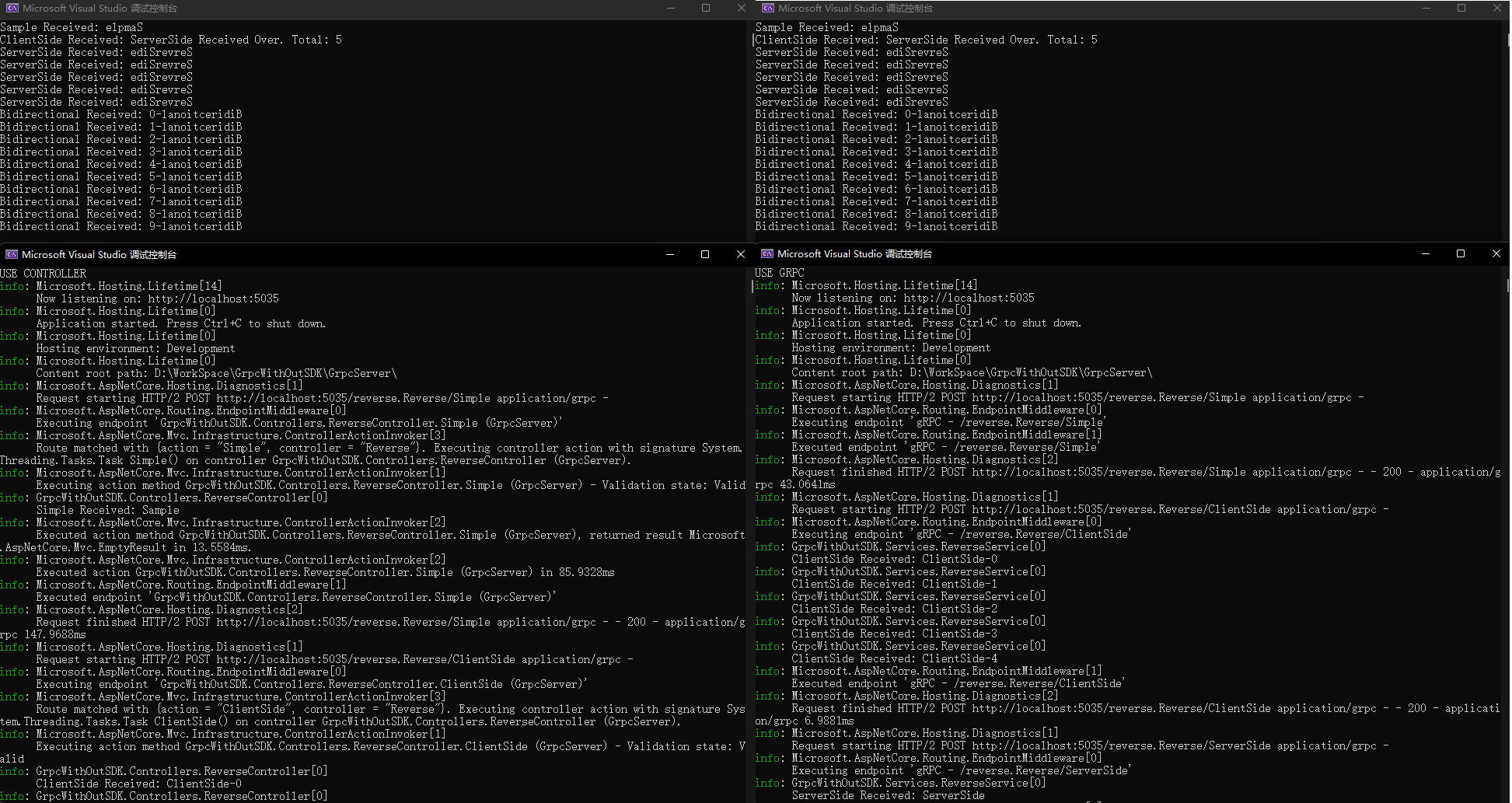

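Now run the server with the Controller and with the GrpcService in turn and check the client logs; the results are identical, as shown in the figures:

Implementing a client with HttpClient that can talk to the gRPC server

Above, we used a plain Controller to implement a server that the client works with normally. Now let's implement a client that can talk to the server without using the gRPC SDK.

- Getting the request and response streams is easy on the server side, but HttpClient currently offers no direct way to get the request stream; we have to obtain the real request stream inside the SerializeToStreamAsync method of HttpContent. The details are not covered here. Here is the code: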

class LongAliveHttpContent : HttpContent

{

private readonly TaskCompletionSource<Stream> _streamGetCompletionSource = new(TaskCreationOptions.RunContinuationsAsynchronously);

private readonly TaskCompletionSource _taskCompletionSource = new(TaskCreationOptions.RunContinuationsAsynchronously);

public LongAliveHttpContent()

{

Headers.ContentType = new MediaTypeHeaderValue("application/grpc");

}

protected override Task SerializeToStreamAsync(Stream stream, TransportContext? context)

{

_streamGetCompletionSource.SetResult(stream);

return _taskCompletionSource.Task;

}

protected override bool TryComputeLength(out long length)

{

length = -1;

return false;

}

public void Complete()

{

_taskCompletionSource.TrySetResult();

}

public Task<Stream> GetStreamAsync()

{

return _streamGetCompletionSource.Task;

}

}

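- The client has to meet the same request requirements:

- The request protocol is `HTTP/2`;

- The method is always `POST`;

- Every gRPC method is mapped to the endpoint `/{package name}.{service name}/{method name}`;

- The `ContentType` of both the request and the response is `application/grpc`;

Here is the code that sends the request with HttpClient and obtains the request stream and the response stream: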

private static (Task<Stream> RequestStreamGetTask, Task<Stream> ResponseStreamGetTask, LongAliveHttpContent HttpContent) CreateStreamGetTasksAsync(HttpClient client, string path)

{

var content = new LongAliveHttpContent();

var httpRequestMessage = new HttpRequestMessage()

{

Method = HttpMethod.Post,

RequestUri = new Uri(path, UriKind.Relative),

Content = content,

Version = HttpVersion.Version20,

VersionPolicy = HttpVersionPolicy.RequestVersionExact,

};

var responseStreamGetTask = client.SendAsync(httpRequestMessage, HttpCompletionOption.ResponseHeadersRead)

.ContinueWith(m => m.Result.Content.ReadAsStreamAsync())

.Unwrap();

return (content.GetStreamAsync(), responseStreamGetTask, content);

}

- Implement the same logic as the gRPC client. The code is as follows:

private static async Task BidirectionalWithOutSDK(HttpClient client)

{

var (requestStreamGetTask, responseStreamGetTask, httpContent) = CreateStreamGetTasksAsync(client, "reverse.Reverse/Bidirectional");

var requestStream = await requestStreamGetTask;

var sendTask = Task.Run(async () =>

{

for (int i = 0; i < 10; i++)

{

await requestStream.WriteAsync(TempMessageCodecUtil.BuildMessage($"{nameof(Bidirectional)}-{i}"));

}

httpContent.Complete();

});

var receiveTask = DisplayReceivedMessageAsync(responseStreamGetTask);

await Task.WhenAll(sendTask, receiveTask);

}

private static async Task ClientSideWithOutSDK(HttpClient client)

{

var (requestStreamGetTask, responseStreamGetTask, httpContent) = CreateStreamGetTasksAsync(client, "reverse.Reverse/ClientSide");

var requestStream = await requestStreamGetTask;

for (int i = 0; i < 5; i++)

{

await requestStream.WriteAsync(TempMessageCodecUtil.BuildMessage($"{nameof(ClientSide)}-{i}"));

await requestStream.FlushAsync();

}

httpContent.Complete();

await DisplayReceivedMessageAsync(responseStreamGetTask);

}

private static async Task SampleWithOutSDK(HttpClient client)

{

var (requestStreamGetTask, responseStreamGetTask, httpContent) = CreateStreamGetTasksAsync(client, "reverse.Reverse/Simple");

var requestStream = await requestStreamGetTask;

await requestStream.WriteAsync(TempMessageCodecUtil.BuildMessage(nameof(Sample)));

httpContent.Complete();

await DisplayReceivedMessageAsync(responseStreamGetTask);

}

private static async Task ServerSideWithOutSDK(HttpClient client)

{

var (requestStreamGetTask, responseStreamGetTask, httpContent) = CreateStreamGetTasksAsync(client, "reverse.Reverse/ServerSide");

var requestStream = await requestStreamGetTask;

await requestStream.WriteAsync(TempMessageCodecUtil.BuildMessage(nameof(ServerSide)));

httpContent.Complete();

await DisplayReceivedMessageAsync(responseStreamGetTask);

}

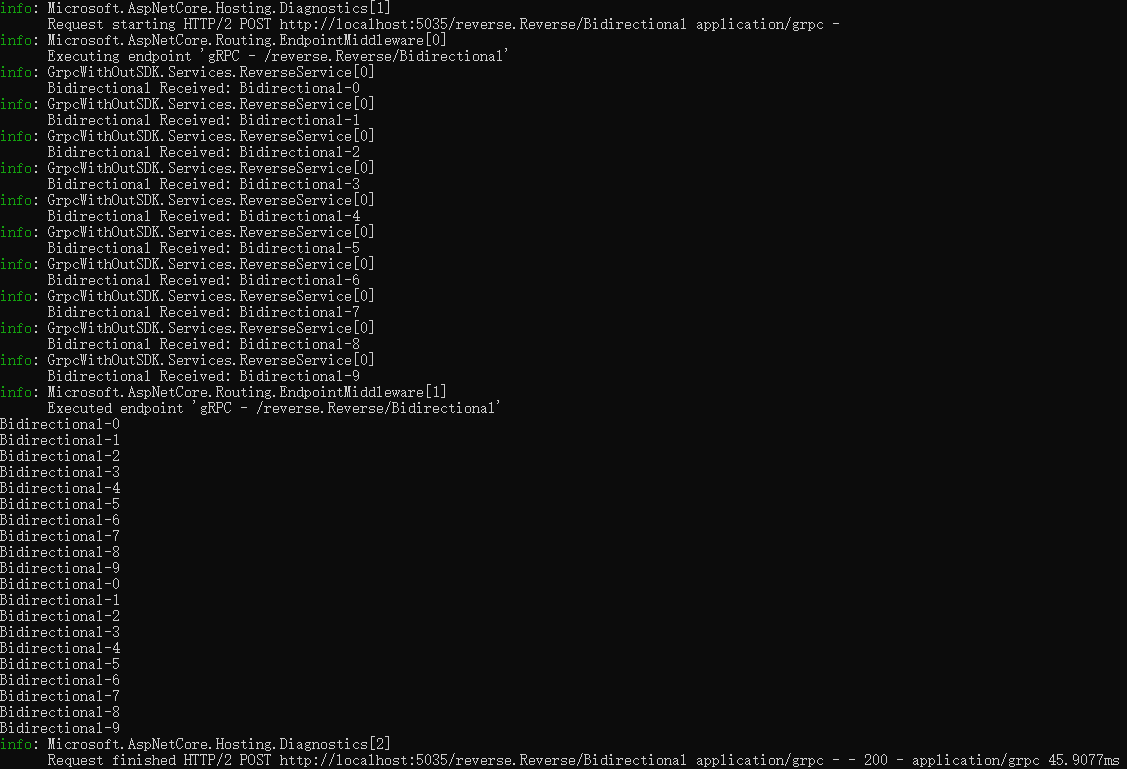

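Now run the following tests:

- Run the server with GrpcService, and send requests with both the SDK client and the HttpClient client;

- Run the server with Controller, and send requests with both the SDK client and the HttpClient client;

The client output is the same in every case, as follows: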

Sample Received: elpmaS

ClientSide Received: ServerSide Received Over. Total: 5

ServerSide Received: ediSrevreS

ServerSide Received: ediSrevreS

ServerSide Received: ediSrevreS

ServerSide Received: ediSrevreS

ServerSide Received: ediSrevreS

Bidirectional Received: 0-lanoitceridiB

Bidirectional Received: 1-lanoitceridiB

Bidirectional Received: 2-lanoitceridiB

Bidirectional Received: 3-lanoitceridiB

Bidirectional Received: 4-lanoitceridiB

Bidirectional Received: 5-lanoitceridiB

Bidirectional Received: 6-lanoitceridiB

Bidirectional Received: 7-lanoitceridiB

Bidirectional Received: 8-lanoitceridiB

Bidirectional Received: 9-lanoitceridiB

----------------- WithOutSDK -----------------

SampleWithOutSDK Received: elpmaS

ClientSideWithOutSDK Received: ServerSide Received Over. Total: 5

ServerSideWithOutSDK Received: ediSrevreS

ServerSideWithOutSDK Received: ediSrevreS

ServerSideWithOutSDK Received: ediSrevreS

ServerSideWithOutSDK Received: ediSrevreS

ServerSideWithOutSDK Received: ediSrevreS

BidirectionalWithOutSDK Received: 0-lanoitceridiB

BidirectionalWithOutSDK Received: 1-lanoitceridiB

BidirectionalWithOutSDK Received: 2-lanoitceridiB

BidirectionalWithOutSDK Received: 3-lanoitceridiB

BidirectionalWithOutSDK Received: 4-lanoitceridiB

BidirectionalWithOutSDK Received: 5-lanoitceridiB

BidirectionalWithOutSDK Received: 6-lanoitceridiB

BidirectionalWithOutSDK Received: 7-lanoitceridiB

BidirectionalWithOutSDK Received: 8-lanoitceridiB

BidirectionalWithOutSDK Received: 9-lanoitceridiB

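At this point, with a little analysis and summarizing, we can conclude:

Every kind of gRPC method call is an ordinary HTTP request; the request and response bodies simply contain Protobuf-encoded data;

Extending this a little, we can draw further conclusions: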

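- Multiplexing, header compression, and the like are optimizations that come from HTTP/2 and are not tied to gRPC; an ordinary WebAPI accessed over HTTP/2 gets the same benefits;

- gRPC's Unary mode works the same way as WebAPI;

- The Server streaming and Client streaming modes can both be implemented over HTTP/1.1 (but without multiplexing, so each request occupies a connection of its own);

- Bidirectional streaming relies on binary framing, so two-way streaming can only be implemented on HTTP/2 or later;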
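As an illustration of the first point, an ordinary JSON request can ask for HTTP/2 in the same way the hand-written gRPC client in this post does. This is a sketch only: the URL is a made-up placeholder, and the request is built but not sent.

```csharp
using System;
using System.Net;
using System.Net.Http;

// Hypothetical WebAPI endpoint; the address is an assumption for illustration only.
var request = new HttpRequestMessage(HttpMethod.Get, "https://localhost:5001/api/values")
{
    // Ask for HTTP/2, but allow a downgrade if the server only speaks HTTP/1.1.
    Version = HttpVersion.Version20,
    VersionPolicy = HttpVersionPolicy.RequestVersionOrLower,
};

Console.WriteLine($"{request.Method} {request.RequestUri} requested version: {request.Version}");
```

Passing such a request to HttpClient.SendAsync against a server that supports HTTP/2 lets a plain WebAPI call share a multiplexed connection and compressed headers, with no gRPC involved.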

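Based on these conclusions, let's summarize where gRPC has an advantage over WebAPI:

- Faster execution (in some cases): Protobuf is a binary encoding, and with larger amounts of data it is more efficient than a text-based encoding such as JSON, at the cost of direct readability. (I did not run a performance test; this is the theory. If it could not beat JSON it would have no reason to exist. In theory, the larger the data, the larger the gap.)

- Less data on the wire: JSON is self-describing, so every field carries its name. When a List is serialized this waste adds up, and the more repeated objects there are, the more is wasted (though that is also where the readability comes from). Protobuf has no such waste and includes other optimizations as well; see protocol-buffers-encoding;

- Faster development: the SDK generates the server and the client directly from the proto file, which makes it quicker to get started, and clients for other languages can be generated quickly too (this one is debatable, since WebAPI has similar tools);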
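The size argument can be checked with a rough sketch. It compares only payload size, not speed, and it assumes a hypothetical wrapper message `ReplyList { repeated Reply items = 1; }` that does not exist in reverse.proto. The Protobuf bytes are assembled by hand using the tag and length layout observed earlier, so no generated code is needed; `EncodeLengthDelimited` is a helper name invented for this example.

```csharp
using System;
using System.Linq;
using System.Text;
using System.Text.Json;

// Encodes one length-delimited field: tag byte, one-byte length, payload.
// Valid only for field numbers below 16 and payloads shorter than 128 bytes.
static byte[] EncodeLengthDelimited(int fieldNumber, byte[] payload)
{
    var result = new byte[2 + payload.Length];
    result[0] = (byte)((fieldNumber << 3) | 2);
    result[1] = (byte)payload.Length;
    payload.CopyTo(result, 2);
    return result;
}

// JSON: 100 objects, each repeating the field name "message".
var items = Enumerable.Range(0, 100).Select(_ => new { message = "item" }).ToArray();
byte[] json = JsonSerializer.SerializeToUtf8Bytes(items);

// Protobuf: each list element is a nested Reply { message = "item" } (assumed ReplyList layout).
byte[] oneReply = EncodeLengthDelimited(1, Encoding.UTF8.GetBytes("item"));
byte[] oneElement = EncodeLengthDelimited(1, oneReply);
byte[] protobuf = Enumerable.Repeat(oneElement, 100).SelectMany(bytes => bytes).ToArray();

Console.WriteLine($"JSON: {json.Length} bytes, Protobuf: {protobuf.Length} bytes");
```

Each Protobuf element takes 8 bytes (two tag/length pairs plus the four characters), 800 bytes in total, while every JSON element has to spell out the field name again.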

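And what are the disadvantages of gRPC compared with traditional WebAPI?

- Readability: without tools, gRPC message contents cannot be read directly;

- Tight coupling to HTTP/2: WebAPI can run on older protocol versions, which is sometimes more convenient;

- Dependence on the gRPC SDK: the gRPC SDK covers many mainstream languages, but if a requirement happens to call for a language without an SDK, things get awkward; a text-based WebAPI is more universal by comparison;

- The types do not fully cover the basic types of some languages, which means extra coding (methods cannot directly accept or return primitive types, Nullable, and so on);

- Protobuf requires a strict format, including when fields are added or removed;

- Extra learning cost;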

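Finally, based on these conclusions, here are some ways of using gRPC that I consider problematic:

- Using gRPC as a packing/unpacking tool: putting something like JSON inside the message body and deserializing it again after receiving the message... Why bother? Writing framing logic directly on plain HTTP, with a message header that specifies the message length, takes little work and does not pull in anything gRPC-related. This usage also runs counter to gRPC's high performance, since it adds another layer of serialization and deserialization; (I am not talking about nacos here)

- Using separate authentication logic: a gRPC call is an HTTP request, so headers work exactly as they do in WebAPI. gRPC requests can therefore reuse existing HTTP authentication and header-handling code, or even the existing request pipeline. Isn't defining extra custom messages for this redundant? (I am not talking about nacos here either)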
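To give a sense of how little code the length-prefixed framing from the first point needs, here is a minimal sketch over a plain Stream. The 4-byte big-endian header and the names `WriteFrame`, `FillBuffer`, and `TryReadFrame` are choices made for this example, not an existing protocol or API, and the code is synchronous for brevity.

```csharp
using System;
using System.Buffers.Binary;
using System.IO;
using System.Text;

// Writes one frame: a 4-byte big-endian length followed by the payload.
static void WriteFrame(Stream stream, ReadOnlySpan<byte> payload)
{
    Span<byte> header = stackalloc byte[4];
    BinaryPrimitives.WriteInt32BigEndian(header, payload.Length);
    stream.Write(header);
    stream.Write(payload);
}

// Reads until the buffer is full; returns false if the stream ends first.
static bool FillBuffer(Stream stream, Span<byte> buffer)
{
    int total = 0;
    while (total < buffer.Length)
    {
        int read = stream.Read(buffer.Slice(total));
        if (read == 0)
        {
            return false;
        }
        total += read;
    }
    return true;
}

// Reads one frame; returns false when no complete frame is left.
static bool TryReadFrame(Stream stream, out byte[] payload)
{
    payload = Array.Empty<byte>();
    Span<byte> header = stackalloc byte[4];
    if (!FillBuffer(stream, header))
    {
        return false;
    }
    payload = new byte[BinaryPrimitives.ReadInt32BigEndian(header)];
    return FillBuffer(stream, payload);
}

// Round trip through a MemoryStream standing in for a request or response body.
var memory = new MemoryStream();
WriteFrame(memory, Encoding.UTF8.GetBytes("{\"message\":\"hello\"}"));
WriteFrame(memory, Encoding.UTF8.GetBytes("{\"message\":\"world\"}"));
memory.Position = 0;
while (TryReadFrame(memory, out var received))
{
    Console.WriteLine(Encoding.UTF8.GetString(received));
}
```

In a real service the same functions would operate on the HTTP request and response bodies, preferably in an async form, and a production version would also cap the declared length before allocating.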

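In short, my view is that you should not assume gRPC crushes traditional WebAPI and adopt it just because others call it high performance. Understand how it works, think it through, and confirm that it will actually give you the result you want. Sometimes hand-writing a variant of HTTP request handling may meet the need faster and better.

Extras

If you have time to spare, in theory you could even build the following toys:

- A gRPC compatibility layer for WebAPI, so that a Controller can work as gRPC while still handling ordinary requests;

- Generating proto message definitions for DTOs, and the proto definition of the whole service, in reverse from Controller definitions;

- A WebAPI compatibility layer for gRPC, so that a gRPC service behaves like a Controller and exchanges JSON with the outside world;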