Overview of Kubernetes Components and Their Functions

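I. api-server

The Kubernetes API is the single entry point to the cluster and the coordinator of all components. The api-server provides HTTP REST interfaces for creating, reading, updating, deleting and watching every Kubernetes resource object (pods, services, replicationcontrollers and so on). It serves REST operations and is the front end to the cluster's shared state, through which all other components interact. After processing a request, it persists the data to the etcd database.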

RESTful API:

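A RESTful API is a REST-style network interface; REST describes a style of interaction between a client and a server over a network.

The default (secure) port is 6443; change it with the "--secure-port" startup flag.

By default the api-server listens on a non-localhost network interface; set the address with the "--bind-address" startup flag.

This port receives external HTTPS requests from clients, the dashboard and so on.

It supports authentication with a token file, client certificates, or HTTP Basic auth (Basic auth was removed in Kubernetes 1.19).

It also enforces policy-based authorization.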

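1. View the resource details (the dashboard-admin token secret):

kube-system dashboard-admin-token-nlm6l kubernetes.io/service-account-token 3 69d

2. Describe the secret, specifying its namespace:

kubectl describe secrets dashboard-admin-token-nlm6l -n kube-system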

Name: dashboard-admin-token-nlm6l

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: f96e5cab-2161-4686-83cb-a32963999072

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbmxtNmwiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZjk2ZTVjYWItMjE2MS00Njg2LTgzY2ItYTMyOTYzOTk5MDcyIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.4nH0wO8VVvosAUGhs1s7-BBqUVkcBBaYpkFivCUc3ecn2GsXqNU98xxafEED9tW9bsducPTjvq4GtSGQPleWOMdc9UAbwGa8hl40Q7RgDAvuMZcOFA1X1tWQMOXPhNPpPUR1ugVJWtxLbbmiY9MFTsWbYJZzFUPPCJHRFzTlNiTgVx_vDWnBGE2L4ozqPIrVHS2PB0k9hZj5t9XD2LQcMMw1HWjG42rAsjxTqYF30gegee9MHcXnRk0sLPZFAlciOXUaSIU2sfj704yvw8Vr8VoRrCXyOnP1NrnF81lCrXrvxHCvEqz47NrNdO-gJtyrRlao-7C4AFS4Gt6N3NVnjQ

ca.crt: 1025 bytes
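The token is a JWT (header.payload.signature), and its middle segment is base64url-encoded JSON that names the service account it belongs to. A minimal sketch for inspecting it; the helper name jwt_payload is hypothetical, it assumes GNU coreutils base64, and it does not verify the signature:

```bash
# Decode the payload (2nd segment) of a JWT such as a service-account token.
# This only base64url-decodes it; the signature is NOT verified.
jwt_payload() {
  local seg
  # take the middle segment and convert base64url to standard base64
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url strips the '=' padding; restore it to a multiple of 4
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}

# Usage (TOKEN holds the token printed above):
#   jwt_payload "$TOKEN"; echo
```

For the token above, the decoded JSON should contain "sub":"system:serviceaccount:kube-system:dashboard-admin".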

3. Use the token to access the api-server (the root path lists the available API paths):

{

"paths": [

"/api",

"/api/v1",

"/apis",

"/apis/",

"/apis/admissionregistration.k8s.io",

"/apis/admissionregistration.k8s.io/v1beta1",

"/apis/apiextensions.k8s.io",

"/apis/apiextensions.k8s.io/v1beta1",

"/apis/apiregistration.k8s.io",

"/apis/apiregistration.k8s.io/v1",

"/apis/apiregistration.k8s.io/v1beta1",

"/apis/apps",

"/apis/apps/v1",

"/apis/apps/v1beta1",

"/apis/apps/v1beta2",

"/apis/authentication.k8s.io",

"/apis/authentication.k8s.io/v1",

"/apis/authentication.k8s.io/v1beta1",

"/apis/authorization.k8s.io",

"/apis/authorization.k8s.io/v1",

"/apis/authorization.k8s.io/v1beta1",

"/apis/autoscaling",

"/apis/autoscaling/v1",

"/apis/autoscaling/v2beta1",

"/apis/autoscaling/v2beta2",

"/apis/batch",

"/apis/batch/v1",

"/apis/batch/v1beta1",

"/apis/certificates.k8s.io",

"/apis/certificates.k8s.io/v1beta1",

"/apis/coordination.k8s.io",

"/apis/coordination.k8s.io/v1",

"/apis/coordination.k8s.io/v1beta1",

"/apis/events.k8s.io",

"/apis/events.k8s.io/v1beta1",

"/apis/extensions",

"/apis/extensions/v1beta1",

"/apis/metrics.k8s.io",

"/apis/metrics.k8s.io/v1beta1",

"/apis/monitoring.coreos.com",

"/apis/monitoring.coreos.com/v1",

"/apis/networking.k8s.io",

"/apis/networking.k8s.io/v1",

"/apis/networking.k8s.io/v1beta1",

"/apis/node.k8s.io",

"/apis/node.k8s.io/v1beta1",

"/apis/policy",

"/apis/policy/v1beta1",

"/apis/rbac.authorization.k8s.io",

"/apis/rbac.authorization.k8s.io/v1",

"/apis/rbac.authorization.k8s.io/v1beta1",

"/apis/scheduling.k8s.io",

"/apis/scheduling.k8s.io/v1",

"/apis/scheduling.k8s.io/v1beta1",

"/apis/storage.k8s.io",

"/apis/storage.k8s.io/v1",

"/apis/storage.k8s.io/v1beta1",

"/healthz",

"/healthz/autoregister-completion",

"/healthz/etcd",

"/healthz/log",

"/healthz/ping",

"/healthz/poststarthook/apiservice-openapi-controller",

"/healthz/poststarthook/apiservice-registration-controller",

"/healthz/poststarthook/apiservice-status-available-controller",

"/healthz/poststarthook/bootstrap-controller",

"/healthz/poststarthook/ca-registration",

"/healthz/poststarthook/crd-informer-synced",

"/healthz/poststarthook/generic-apiserver-start-informers",

"/healthz/poststarthook/kube-apiserver-autoregistration",

"/healthz/poststarthook/rbac/bootstrap-roles",

"/healthz/poststarthook/scheduling/bootstrap-system-priority-classes",

"/healthz/poststarthook/start-apiextensions-controllers",

"/healthz/poststarthook/start-apiextensions-informers",

"/healthz/poststarthook/start-kube-aggregator-informers",

"/healthz/poststarthook/start-kube-apiserver-admission-initializer",

"/logs",

"/metrics",

"/openapi/v2",

"/version"

]

}

4. View the api-server version information:

{

"major": "1",

"minor": "15",

"gitVersion": "v1.15.0",

"gitCommit": "e8462b5b5dc2584fdcd18e6bcfe9f1e4d970a529",

"gitTreeState": "clean",

"buildDate": "2019-06-19T16:32:14Z",

"goVersion": "go1.12.5",

"compiler": "gc",

"platform": "linux/amd64"

}

5. Check the health status:

ok

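More endpoints (TOKEN holds the token from step 2; -k skips verification of the self-signed API server certificate):

# curl -k -H "Authorization: Bearer ${TOKEN}" https://10.2.24.164:6443/apis # API groups

# curl -k -H "Authorization: Bearer ${TOKEN}" https://10.2.24.164:6443/api/v1 # core API at a specific version

# curl -k -H "Authorization: Bearer ${TOKEN}" https://10.2.24.164:6443/version # API server version

# curl -k -H "Authorization: Bearer ${TOKEN}" https://10.2.24.164:6443/healthz/etcd # etcd health check

# curl -k -H "Authorization: Bearer ${TOKEN}" https://10.2.24.164:6443/apis/autoscaling/v1 # resources of one API group version

# curl -k -H "Authorization: Bearer ${TOKEN}" https://10.2.24.164:6443/metrics # metrics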
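To script these calls, a sketch like the following reads the token out of the secret and verifies the server against the cluster CA instead of using -k. The secret name comes from this article; the CA path /etc/kubernetes/ssl/ca.pem is the kubeasz location used in the etcd health check later on and may differ on your cluster; the function and variable names are hypothetical.

```bash
# Hypothetical helpers: fetch a service-account token and query the API server with it.
APISERVER="${APISERVER:-https://10.2.24.164:6443}"
CACERT="${CACERT:-/etc/kubernetes/ssl/ca.pem}"   # kubeasz CA path; adjust for your cluster

# Print the decoded token stored in a service-account-token secret.
sa_token() {
  local ns=$1 secret=$2
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}

# GET an API path with the bearer token, e.g. k8s_api /version or k8s_api /healthz/etcd
k8s_api() {
  curl -s --cacert "$CACERT" -H "Authorization: Bearer ${TOKEN}" "${APISERVER}$1"
}

# Usage:
#   TOKEN=$(sa_token kube-system dashboard-admin-token-nlm6l)
#   k8s_api /version
```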

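II. kube-scheduler

kube-scheduler is the default Kubernetes scheduler. Scheduling in Kubernetes means finding the best worker node in the cluster for every pod-creation request the API Server receives. When matching nodes it considers hardware, software and policy constraints, affinity and anti-affinity specifications, data locality and similar factors.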

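Workflow:

1. Using its scheduling algorithm, the scheduler selects the most suitable node from the list of available nodes for each pod waiting to be scheduled, and writes the binding to etcd through the API Server.

2. The kubelet on that node, watching through the API Server, sees the pod binding produced by the scheduler, fetches the pod manifest, pulls the image and starts the containers.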

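Scheduling has two main phases:

Phase 1 (filtering): exclude the nodes that do not meet the requirements.

Phase 2 (scoring): among the remaining nodes, pick the one that fits best.

Scoring (priority) policies:

Prefer the candidate node with the lowest resource consumption (CPU + memory).

Prefer nodes that carry a specified label.

Prefer the candidate node whose resource usage is the most balanced.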
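The two phases can be illustrated with a toy sketch: filter out nodes that cannot fit the pod's requests, then score the rest and pick the highest. The input format (name, CPU capacity and requested millicores, memory capacity and requested MiB) and the scoring formula, loosely modelled on the "least resource consumption" policy, are hypothetical; this is not kube-scheduler code.

```bash
# Toy two-phase scheduler.
# stdin lines: name cpu_capacity cpu_requested mem_capacity mem_requested
# Usage: pick_node REQ_CPU_MILLICORES REQ_MEM_MIB < nodes.txt
pick_node() {
  local req_cpu=$1 req_mem=$2
  local name cpu_cap cpu_used mem_cap mem_used score best="" best_score=-1
  while read -r name cpu_cap cpu_used mem_cap mem_used; do
    [ -z "$name" ] && continue
    # Phase 1 (filter): skip nodes where the request does not fit
    if [ $((cpu_used + req_cpu)) -gt "$cpu_cap" ] || [ $((mem_used + req_mem)) -gt "$mem_cap" ]; then
      continue
    fi
    # Phase 2 (score): average % of CPU and memory left free after placing the pod
    score=$(( ((cpu_cap - cpu_used - req_cpu) * 100 / cpu_cap + (mem_cap - mem_used - req_mem) * 100 / mem_cap) / 2 ))
    if [ "$score" -gt "$best_score" ]; then
      best=$name
      best_score=$score
    fi
  done
  # no output (and a non-zero status) when every node was filtered out
  [ -n "$best" ] && echo "$best"
}
```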

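III. kube-controller-manager

kube-controller-manager bundles a number of sub-controllers (the replication controller, node controller, namespace controller, service-account controller and others). As the cluster's internal management center, it manages nodes, pod replicas, endpoints, namespaces, service accounts and resource quotas. When a node goes down unexpectedly, the controller manager detects it promptly and runs the automated repair process, keeping the pod replicas in the cluster in their desired state.

The controller-manager checks node status every 5 seconds. If it receives no heartbeat from a node, it marks the node as unreachable, but it waits 40 seconds before doing so. If the node has still not recovered 5 minutes after being marked unreachable, the controller-manager evicts (deletes) all pods on that node, and their controllers recreate them on other available nodes.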
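The timing above can be written as simple arithmetic. The values are the defaults quoted in the text; the function is a hypothetical illustration of the worst case, where detection lags by one check period. In recent versions the 5-minute delay is actually applied through the default not-ready/unreachable tolerations (300 s) added to pods, but the arithmetic is the same.

```bash
# Worst-case timeline (seconds after the node's last heartbeat) using the defaults above.
MONITOR_PERIOD=5     # node status checked every 5 s
GRACE_PERIOD=40      # node marked unreachable after 40 s without heartbeats
EVICTION_DELAY=300   # pods evicted 5 min after the node is marked unreachable

# Print when the node is marked unreachable and when its pods are evicted.
node_failure_timeline() {
  local marked=$(( GRACE_PERIOD + MONITOR_PERIOD ))   # detection can lag by one check period
  local evicted=$(( marked + EVICTION_DELAY ))
  echo "unreachable_after=${marked}s evicted_after=${evicted}s"
}
```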

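IV. kube-proxy

The Kubernetes network proxy runs on each node. It reflects the Services defined in the Kubernetes API on that node and can do simple TCP, UDP and SCTP stream forwarding, or round-robin TCP, UDP and SCTP forwarding, across a set of backends. Users configure the proxy by creating a Service through the apiserver API. In practice, kube-proxy implements access to Kubernetes Services by maintaining network rules on the host and forwarding connections.

kube-proxy runs on every node, watches the API Server for changes to Service objects, and implements forwarding by managing iptables or IPVS rules. IPVS is generally more efficient than iptables. To use IPVS, the ipvsadm and ipset packages should be installed and the ip_vs kernel modules loaded on every node that runs kube-proxy. When kube-proxy starts in IPVS proxy mode, it verifies whether the IPVS modules are available on the node; if they are not, it falls back to iptables proxy mode.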
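Before switching kube-proxy to IPVS mode, one can check that the kernel modules are loaded. This sketch reads lsmod-style output on stdin; the module list (ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh, nf_conntrack) is the commonly cited set and is an assumption to check against your kube-proxy and kernel versions. The function name is hypothetical.

```bash
# Report IPVS-related kernel modules missing from `lsmod` output read on stdin.
# Usage: lsmod | missing_ipvs_modules
REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

missing_ipvs_modules() {
  local loaded m missing=""
  # the first column of lsmod is the module name; skip the header line
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in $REQUIRED_MODULES; do
    if ! printf '%s\n' "$loaded" | grep -qx "$m"; then
      missing="$missing $m"
    fi
  done
  echo "${missing# }"
}

# To load the missing ones (as root):
#   for m in $(lsmod | missing_ipvs_modules); do modprobe "$m"; done
```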

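V. kubelet

kubelet is the primary "node agent" running on every node. It watches the pods assigned to its node, and it can register the node with the api server using the hostname, a flag that overrides the hostname, or cloud-provider-specific logic.

Its main functions:

Report the node's status to the master.

Receive instructions and create the pod's Docker containers.

Prepare the volumes a pod needs.

Report the pods' running status.

Run container health checks on the node.

kubelet works from PodSpecs. A PodSpec is a YAML or JSON object that describes a pod.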
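For reference, here is a minimal Pod object of the kind kubelet works from. The pod name net-test-demo is hypothetical (the alpine/sleep combination mirrors the test pods used later in this article); the sketch just writes the manifest to a file that could then be passed to kubectl apply -f.

```bash
# Write a minimal Pod manifest (hypothetical name) to the given file.
write_pod_manifest() {
  cat > "$1" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: net-test-demo
spec:
  containers:
  - name: alpine
    image: alpine
    command: ["sleep", "30000"]
EOF
}

# Usage:
#   write_pod_manifest /tmp/pod.yaml && kubectl apply -f /tmp/pod.yaml
```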

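VI. etcd

etcd, originally developed by CoreOS, is the key-value store Kubernetes uses by default. It is a strongly consistent, distributed key-value store that holds all Kubernetes cluster data and can run as a distributed cluster. In production, etcd data must be backed up on a regular schedule, at least once a day.

To list all keys stored in etcd (on a TLS-secured cluster, add the same --endpoints/--cacert/--cert/--key flags used in the etcd health check further below):

etcdctl get / --keys-only --prefix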
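Since the text recommends at least daily backups, here is a hedged sketch of a backup function built on etcdctl snapshot save, using the certificate paths from the kubeasz health check later in this article. The backup directory, retention count and function name are hypothetical; it could be scheduled from cron.

```bash
# Save a dated etcd snapshot and keep only the newest $KEEP files.
ETCD_ENDPOINT="${ETCD_ENDPOINT:-https://10.2.24.164:2379}"
BACKUP_DIR="${BACKUP_DIR:-/data/etcd-backup}"   # hypothetical location
KEEP="${KEEP:-7}"                               # hypothetical retention

etcd_backup() {
  local file
  mkdir -p "$BACKUP_DIR" || return 1
  file="$BACKUP_DIR/etcd-$(date +%Y%m%d-%H%M%S).db"
  ETCDCTL_API=3 etcdctl --endpoints="$ETCD_ENDPOINT" \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/kubernetes/ssl/etcd.pem \
    --key=/etc/kubernetes/ssl/etcd-key.pem \
    snapshot save "$file" || return 1
  # delete all but the newest $KEEP snapshots
  ls -1t "$BACKUP_DIR"/etcd-*.db | tail -n +$((KEEP + 1)) | xargs -r rm -f
  echo "$file"
}

# Example crontab entry (daily at 02:00), assuming the function is saved in /root/etcd-backup.sh:
#   0 2 * * * bash -c '. /root/etcd-backup.sh && etcd_backup'
```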

dns:

DNS provides name resolution for the whole cluster, so that services can reach one another by name.

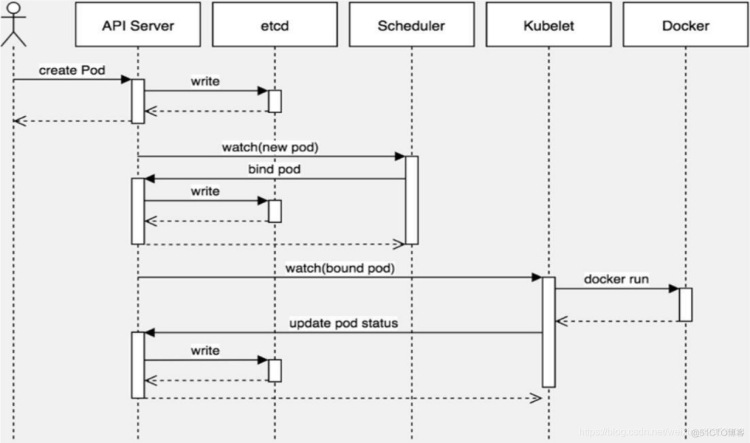

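Pod creation and scheduling flow in k8s

Kubernetes components cooperate through the watch mechanism, which keeps them decoupled from one another.

1. The user creates a pod with create/apply on a YAML file; the request goes to the apiserver, which writes the object described in the YAML (its metadata and spec) to etcd.

2. Through the watch mechanism, the scheduler learns about the new, not-yet-scheduled pod, selects a node with its scheduling algorithm and sends the binding to the apiserver, which writes the bound node into etcd.

3. Again through watch, the kubelet on the selected node sees the pod and has the container runtime create its containers (the equivalent of docker run).

4. Once the containers are created, the kubelet reports the pod status to the apiserver, which writes it to etcd.

5. kubectl get pods queries the apiserver, which returns the pod information stored in etcd.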

Deploying a k8s cluster with kubeasz

1. Machine environment:

OS: CentOS 7.6

Roles: 3 masters, 2 nodes, 1 Harbor registry

Server addresses:

10.2.24.164 k8s-master1

10.2.24.165 k8s-master2

10.2.24.166 k8s-master3

10.2.24.167 k8s-node1

10.2.24.168 k8s-node2

10.2.24.199 harbor.taoyake.com

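2. Upgrade the kernel:

The machines run CentOS 7.6, whose default kernel is 3.10; I upgraded it to the 5.4 long-term kernel (kernel-lt from ELRepo, 5.4.156 at the time of writing).

Before the upgrade:

Commands to upgrade the kernel (run on every machine):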

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

yum --disablerepo=\* --enablerepo=elrepo-kernel repolist

yum --disablerepo=\* --enablerepo=elrepo-kernel list kernel*

yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt.x86_64

yum remove kernel-tools-libs.x86_64 kernel-tools.x86_64 -y

yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt-tools.x86_64

grub2-set-default 0

reboot

After the upgrade:

[root@k8s-master1 ~]# uname -a

Linux k8s-master1 5.4.156-1.el7.elrepo.x86_64 #1 SMP Tue Oct 26 11:19:09 EDT 2021 x86_64 x86_64 x86_64 GNU/Linux
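To confirm that every machine actually booted into the new kernel, a small check like the following could be run on each host. The 5.4 threshold matches the upgrade above; the function name is hypothetical, and it relies on sort -V (GNU coreutils) for the version comparison.

```bash
# Succeed if the running (or given) kernel version is >= the required version.
# Usage: kernel_at_least 5.4 [version]   (version defaults to `uname -r`)
kernel_at_least() {
  local required=$1 current=${2:-$(uname -r)}
  current=${current%%-*}    # drop the release suffix: 5.4.156-1.el7... becomes 5.4.156
  # sort -V prints the smaller version first; if that is the requirement, we pass
  [ "$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n1)" = "$required" ]
}
```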

3. Deploy the Harbor server:

Update the system packages to the latest versions, then install Python:

[root@k8s-master1 ~]# yum update -y

[root@harbor ~]# yum install -y python

4. Install Docker

[root@harbor ~]# cd /data/apps/

# The docker-19.03.15-binary-install.tar.gz archive must be uploaded manually

[root@harbor apps]# mkdir docker

[root@harbor apps]# tar -zxvf docker-19.03.15-binary-install.tar.gz -C docker

[root@harbor apps]# cd docker && sh docker-install.sh

[root@harbor docker]# cd ..

[root@harbor apps]# wget https://github.com/goharbor/harbor/releases/download/v2.3.2/harbor-offline-installer-v2.3.2.tgz

[root@harbor apps]# tar -zxvf harbor-offline-installer-v2.3.2.tgz

harbor/harbor.v2.3.2.tar.gz

harbor/prepare

harbor/LICENSE

harbor/install.sh

harbor/common.sh

harbor/harbor.yml.tmpl

Generate a private key for Harbor, then issue a certificate:

[root@harbor apps]# openssl genrsa -out harbor-ca.key

Generating RSA private key, 2048 bit long modulus

.......................................................+++

.................................+++

e is 65537 (0x10001)

# Issue a self-signed certificate

[root@harbor apps]# openssl req -x509 -new -nodes -key harbor-ca.key -subj "/CN=harbor.taoyake.com" -days 7120 -out harbor-ca.crt

[root@harbor apps]# mkdir certs

[root@harbor apps]# mv harbor-ca.key harbor-ca.crt certs/

[root@harbor apps]# mkdir /data/harbor
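The key and certificate steps can be wrapped and verified in one go. In this sketch the function name is hypothetical, and the final check compares the RSA modulus of the key and of the certificate to confirm they belong together. Clients built with Go 1.15 or later may reject certificates that carry the hostname only in the CN; adding a subjectAltName avoids that.

```bash
# Create a private key and a self-signed certificate for CN=$2 in directory $1,
# then verify that the key and the certificate match.
make_harbor_cert() {
  local dir=$1 cn=$2 days=${3:-7120}
  openssl genrsa -out "$dir/harbor-ca.key" 2048 2>/dev/null || return 1
  openssl req -x509 -new -nodes -key "$dir/harbor-ca.key" \
    -subj "/CN=$cn" -days "$days" -out "$dir/harbor-ca.crt" || return 1
  # the key and the certificate must share the same RSA modulus
  [ "$(openssl rsa -noout -modulus -in "$dir/harbor-ca.key")" = \
    "$(openssl x509 -noout -modulus -in "$dir/harbor-ca.crt")" ]
}

# Usage:
#   make_harbor_cert /data/apps/certs harbor.taoyake.com
#   openssl x509 -noout -subject -enddate -in /data/apps/certs/harbor-ca.crt
```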

Edit the Harbor configuration file:

[root@harbor harbor]# cp harbor.yml.tmpl harbor.yml

Edit harbor.yml and change four things:

# Set the hostname

hostname: harbor.taoyake.com

# Enable HTTPS access and point to the certificate files

https:

  certificate: /data/apps/certs/harbor-ca.crt

  private_key: /data/apps/certs/harbor-ca.key

# Change Harbor's default admin password

harbor_admin_password: 123456

# Change Harbor's default data storage location

data_volume: /data/harbor

Save and exit when done.
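The same four edits can be made non-interactively with sed. This sketch assumes the field names of Harbor 2.3's harbor.yml.tmpl (hostname, certificate, private_key, harbor_admin_password, data_volume); check them against your copy, and keep the https block uncommented. The function name is hypothetical.

```bash
# Apply the hostname / https / password / data_volume edits to a harbor.yml file.
configure_harbor_yml() {
  local f=$1
  sed -i \
    -e 's#^hostname: .*#hostname: harbor.taoyake.com#' \
    -e 's#^\( *\)certificate: .*#\1certificate: /data/apps/certs/harbor-ca.crt#' \
    -e 's#^\( *\)private_key: .*#\1private_key: /data/apps/certs/harbor-ca.key#' \
    -e 's#^harbor_admin_password: .*#harbor_admin_password: 123456#' \
    -e 's#^data_volume: .*#data_volume: /data/harbor#' \
    "$f"
}

# Usage (in the harbor directory):
#   cp harbor.yml.tmpl harbor.yml && configure_harbor_yml harbor.yml
```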

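Install Harbor by running ./install.sh in the harbor directory. Output like the following means Harbor was installed successfully: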

[Step 5]: starting Harbor ...

Creating network "harbor_harbor" with the default driver

Creating harbor-log ... done

Creating redis ... done

Creating registryctl ... done

Creating harbor-db ... done

Creating harbor-portal ... done

Creating registry ... done

Creating trivy-adapter ... done

Creating harbor-core ... done

Creating nginx ... done

Creating harbor-jobservice ... done

✔ ----Harbor has been installed and started successfully.----

The domain harbor.taoyake.com must be mapped in the local hosts file (10.2.24.199 harbor.taoyake.com).

Open the domain in Google Chrome, log in to Harbor and create an image repository (project), as shown in the figure below:

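I use 10.2.24.164 as the Ansible control node to deploy k8s.

1. Set the hostname on each machine:

hostnamectl set-hostname k8s-master1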

hostnamectl set-hostname k8s-master2

hostnamectl set-hostname k8s-master3

hostnamectl set-hostname k8s-node1

hostnamectl set-hostname k8s-node2

2. Edit /etc/hosts and add the following entries:

10.2.24.164 k8s-master1

10.2.24.165 k8s-master2

10.2.24.166 k8s-master3

10.2.24.167 k8s-node1

10.2.24.168 k8s-node2
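To avoid appending duplicate lines when this step is repeated, a hedged sketch that adds an entry only if the hostname is not already present (function name hypothetical; run it as root against /etc/hosts):

```bash
# Append "IP NAME" to a hosts file unless NAME already appears as a hostname in it.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # fields 2..NF of a hosts line are hostnames; comment lines are ignored
  if ! awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "$ip $name" >> "$file"
  fi
}

# Usage:
#   add_host_entry 10.2.24.164 k8s-master1
#   add_host_entry 10.2.24.165 k8s-master2
```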

3. On k8s-master1:

[root@k8s-master1 ~]# yum install -y git python3-pip

[root@k8s-master1 ~]# pip3 install --upgrade pip

[root@k8s-master1 ~]# pip3 install ansible

4. Generate an SSH key pair on k8s-master1 (ssh-keygen, accepting the defaults):

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:LCWjRkAY85nGv/JZnMdlNOTjay6JE4T43kBuietwITU root@k8s-master1

The key's randomart image is:

+---[RSA 2048]----+

|o+o . |

|.+ + o |

| E...o . = |

| o.+o..= o o |

|. .=+o. S + |

| ..o*o.+ o . |

|. o+.o+oo.o |

| o.o.o+.oo |

| .. o . .. |

+----[SHA256]-----+

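5. Run a script that copies the public key to every host for passwordless login. The script:

[root@k8s-master1 ~]# cat ssh-copy.sh

# Target hosts

IP="

10.2.24.164

10.2.24.165

10.2.24.166

10.2.24.167

10.2.24.168

"

# sshpass must be installed; it supplies the SSH password (playcrab) non-interactively

for node in ${IP}; do

  sshpass -p playcrab ssh-copy-id -o StrictHostKeyChecking=no "${node}"

  if [ $? -eq 0 ]; then

    echo "${node}: key copied"

  else

    echo "${node}: key copy failed"

  fi

done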

[root@k8s-master1 ~]# bash ssh-copy.sh

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

10.2.24.164: key copied

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

10.2.24.165: key copied

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

10.2.24.166: key copied

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

10.2.24.167: key copied

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

10.2.24.168: key copied
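To confirm that key-based login really works on every host, ssh's BatchMode option can be used: it makes ssh fail instead of prompting for a password. A sketch (the function name is hypothetical; the IPs are the ones from the script above):

```bash
# Print each host whose key-based SSH login fails; return non-zero if any failed.
check_ssh_keys() {
  local node failed=0
  for node in "$@"; do
    # BatchMode=yes: never prompt for a password, just fail
    if ! ssh -o BatchMode=yes -o ConnectTimeout=5 "$node" true >/dev/null 2>&1; then
      echo "$node"
      failed=1
    fi
  done
  return "$failed"
}

# Usage:
#   check_ssh_keys 10.2.24.164 10.2.24.165 10.2.24.166 10.2.24.167 10.2.24.168 && echo "all OK"
```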

6. Orchestrate the k8s installation from the deploy node

Download the project source, binaries and offline images:

[root@k8s-master1 ~]# export release=3.1.0

[root@k8s-master1 ~]# echo $release

3.1.0

[root@k8s-master1 ~]# yum install -y wget

[root@k8s-master1 ~]# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

[root@k8s-master1 ~]# chmod +x ezdown

Modify the Docker version (the DOCKER_VER variable in ezdown):

[root@k8s-master1 ~]# cat ezdown |grep "DOCKER_VER="

DOCKER_VER=19.03.15

DOCKER_VER="$OPTARG"

[root@k8s-master1 ~]# bash ./ezdown -D

[root@k8s-master1 ~]# cd /etc/kubeasz

[root@k8s-master1 kubeasz]# ./ezctl new k8s-01

2021-10-31 00:02:48 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01

2021-10-31 00:02:48 DEBUG set version of common plugins

2021-10-31 00:02:48 DEBUG cluster k8s-01: files successfully created.

2021-10-31 00:02:48 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'

2021-10-31 00:02:48 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'

Edit the hosts inventory file that Ansible uses when running the playbooks (/etc/kubeasz/clusters/k8s-01/hosts):

[etcd]

10.2.24.164

10.2.24.165

10.2.24.166

# master node(s)

[kube_master]

10.2.24.164

10.2.24.165

#10.2.24.166 # added separately later, as a demo

# work node(s)

[kube_node]

10.2.24.167

#10.2.24.168 # added separately later, as a demo

CLUSTER_NETWORK="calico"

SERVICE_CIDR="10.100.0.0/16"

CLUSTER_CIDR="10.200.0.0/16"

NODE_PORT_RANGE="30000-65000"

bin_dir="/usr/local/bin"
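Two addresses in SERVICE_CIDR are worth knowing: the first usable one (10.100.0.1 here) normally goes to the kubernetes API Service, and kubeasz gives the cluster DNS Service the second one (10.100.0.2, which appears in the CoreDNS section). A small sketch that computes the Nth address of an IPv4 CIDR; the function name is hypothetical.

```bash
# Print the Nth address of an IPv4 CIDR (N=1 is the first address after the network address).
nth_ip() {
  local cidr=$1 n=$2 ip prefix a b c d rest num mask
  ip=${cidr%/*}
  prefix=${cidr#*/}
  # split the dotted quad into its four octets
  a=${ip%%.*}; rest=${ip#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  num=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # clear the host bits to get the network address, then add the offset
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  num=$(( (num & mask) + n ))
  echo "$(( (num >> 24) & 255 )).$(( (num >> 16) & 255 )).$(( (num >> 8) & 255 )).$(( num & 255 ))"
}

# Usage:
#   nth_ip 10.100.0.0/16 2
```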

# Install etcd

[root@k8s-master1 kubeasz]# ./ezctl setup k8s-01 02

Verify the etcd cluster status:

# Set the shell variable $NODE_IPS according to the hosts file

[root@k8s-master1 kubeasz]# export NODE_IPS="10.2.24.164 10.2.24.165 10.2.24.166"

[root@k8s-master1 kubeasz]# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://10.2.24.164:2379 is healthy: successfully committed proposal: took = 22.220593ms

https://10.2.24.165:2379 is healthy: successfully committed proposal: took = 16.196003ms

https://10.2.24.166:2379 is healthy: successfully committed proposal: took = 18.281107ms
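When the loop runs against more members, it helps to reduce its output to pass/fail. This sketch counts the " is healthy: " lines (the message format printed above) and compares them with the expected member count; the function name is hypothetical.

```bash
# Read `etcdctl endpoint health` output on stdin; succeed only if all N endpoints are healthy.
# Usage: for ip in ${NODE_IPS}; do ... endpoint health; done 2>&1 | etcd_all_healthy 3
etcd_all_healthy() {
  local expected=$1 healthy
  healthy=$(grep -c ' is healthy: ')
  echo "healthy ${healthy}/${expected}"
  [ "$healthy" -eq "$expected" ]
}
```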

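Step 3: deploy Docker and log in to Harbor from k8s-master1.

1. Create the directory for Harbor's CA certificate:

[root@k8s-master1 ~]# mkdir -p /etc/docker/certs.d/harbor.taoyake.com

2. Add a local hosts entry:

[root@k8s-master1 ~]# echo "10.2.24.199 harbor.taoyake.com" >> /etc/hosts

On the Harbor server, copy the certificate to k8s-master1, then try logging in to Harbor from k8s-master1.

[root@harbor certs]# rsync -avzP /data/apps/certs/harbor-ca.crt 10.2.24.164:/etc/docker/certs.d/harbor.taoyake.com/

root@10.2.24.164's password: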

sending incremental file list

harbor-ca.crt

1,119 100% 0.00kB/s 0:00:00 (xfr#1, to-chk=0/1)

sent 912 bytes received 35 bytes 270.57 bytes/sec

total size is 1,119 speedup is 1.18

[root@k8s-master1 ~]# docker login harbor.taoyake.com

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

After a successful login, an authentication file is created under /root/.docker/:

[root@k8s-master1 ~]# cat /root/.docker/config.json

{

"auths": {

"harbor.taoyake.com": {

"auth": "YWRtaW46MTIzNDU2"

}

},

"HttpHeaders": {

"User-Agent": "Docker-Client/19.03.15 (linux)"

}

}
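The warning above is worth taking seriously: the auth value is only base64 of "username:password", not encryption, so anyone who can read config.json can recover the password. A quick check with the value shown above:

```bash
# The "auth" value from config.json above is base64("user:password"), not a hash.
auth="YWRtaW46MTIzNDU2"
printf '%s' "$auth" | base64 -d; echo    # prints admin:123456
```

At a minimum, restrict the file's permissions (chmod 600 /root/.docker/config.json), or configure a credential helper as the warning suggests.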

Install the container runtime:

[root@k8s-master1 kubeasz]# ./ezctl setup k8s-01 03

Install the k8s master components (kube-master):

[root@k8s-master1 kubeasz]# ./ezctl setup k8s-01 04

Error:

TASK [kube-node : import the dnscache offline image (can be ignored if it fails)] ******************************************************************

fatal: [10.2.24.164]: FAILED! => {"changed": true, "cmd": "/usr/local/bin/docker load -i /opt/kube/images/k8s-dns-node-cache_1.17.0.tar", "delta": "0:00:00.055947", "end": "2021-10-29 23:35:49.767077", "msg": "non-zero return code", "rc": 127, "start": "2021-10-29 23:35:49.711130", "stderr": "/bin/sh: /usr/local/bin/docker: No such file or directory", "stderr_lines": ["/bin/sh: /usr/local/bin/docker: No such file or directory"], "stdout": "", "stdout_lines": []}

docker cannot be found under /usr/local/bin/, so create a symlink for it manually:

[root@k8s-master1 kubeasz]# whereis docker

docker: /usr/bin/docker /etc/docker /opt/kube/bin/docker

[root@k8s-master1 kubeasz]# ln -s /usr/bin/docker /usr/local/bin/
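If this fix has to be repeated on several hosts, an idempotent version avoids "File exists" errors on reruns. A sketch (the function name is hypothetical): it creates the link only when the target path does not already exist.

```bash
# Ensure $2 (default /usr/local/bin/docker) exists, symlinking it to the docker binary
# given as $1 (default: the one found in PATH).
ensure_docker_link() {
  local src=${1:-$(command -v docker)} dst=${2:-/usr/local/bin/docker}
  [ -n "$src" ] || { echo "docker binary not found" >&2; return 1; }
  # already present (file or symlink): nothing to do
  if [ -e "$dst" ] || [ -L "$dst" ]; then
    return 0
  fi
  ln -s "$src" "$dst"
}
```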

After creating the symlink, rerun step 04:

[root@k8s-master1 kubeasz]# ./ezctl setup k8s-01 04

After the masters are installed, check them:

[root@k8s-master1 kubeasz]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.2.24.164 Ready,SchedulingDisabled master 15m v1.21.0

10.2.24.165 Ready,SchedulingDisabled master 15m v1.21.0

10.2.24.166 Ready,SchedulingDisabled master 15m v1.21.0

Change the IPVS scheduling (load-balancing) algorithm: edit the kube-proxy config template and add the following lines:

[root@k8s-master1 kubeasz]# vim roles/kube-node/templates/kube-proxy-config.yaml.j2

ipvs:

  scheduler: wrr
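wrr is IPVS's weighted round-robin: each real server receives new connections in proportion to its weight. The toy simulation below follows the classic LVS wrr selection loop (the current weight threshold is stepped down by the gcd of the weights on each pass); it is an illustration, not kube-proxy or kernel code, and the weights are hypothetical. kube-proxy normally assigns every endpoint weight 1, in which case wrr behaves like plain rr.

```bash
# Toy LVS-style weighted round-robin: print the server index chosen for each of PICKS picks.
# Usage: wrr_sequence PICKS WEIGHT... (weights must be positive integers)
wrr_sequence() {
  local picks=$1
  shift
  printf '%s\n' "$*" | awk -v picks="$picks" '
    function gcd(a, b,  t) { while (b) { t = a % b; a = b; b = t } return a }
    {
      n = NF; g = 0; max = 0
      for (k = 1; k <= n; k++) { w[k - 1] = $k + 0; g = gcd(g, $k + 0); if ($k + 0 > max) max = $k + 0 }
      if (max <= 0) exit 1
      i = -1; cw = 0; out = ""
      while (picks > 0) {
        i = (i + 1) % n
        # after a full pass, lower the current weight threshold (wrap around to max)
        if (i == 0) { cw -= g; if (cw <= 0) cw = max }
        # a server is picked when its weight reaches the current threshold
        if (w[i] >= cw) { out = out (out == "" ? "" : " ") i; picks-- }
      }
      print out
    }'
}

# Usage: weights 3 and 1 give server 0 three picks for every pick of server 1
#   wrr_sequence 8 3 1
```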

Run ./ezctl setup k8s-01 05 to deploy the worker nodes:

[root@k8s-master1 kubeasz]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.2.24.164 Ready,SchedulingDisabled master 3d v1.21.0

10.2.24.165 Ready,SchedulingDisabled master 3d v1.21.0

10.2.24.166 Ready,SchedulingDisabled master 3d v1.21.0

10.2.24.167 Ready node 3d v1.21.0

10.2.24.168 Ready node 3d v1.21.0

The last step is to deploy the network plugin; after that, services can run on k8s:

[root@k8s-master1 kubeasz]# ./ezctl setup k8s-01 06

Check the Calico network on node1 (calicoctl node status):

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+------------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+-------------------+-------+------------+-------------+

| 10.2.24.165 | node-to-node mesh | up | 2021-11-01 | Established |

| 10.2.24.166 | node-to-node mesh | up | 2021-11-01 | Established |

| 10.2.24.168 | node-to-node mesh | up | 2021-11-01 | Established |

| 10.2.24.164 | node-to-node mesh | up | 2021-11-01 | Established |

+--------------+-------------------+-------+------------+-------------+

IPv6 BGP status

No IPv6 peers found.
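With Calico's node-to-node mesh, every node should have an Established BGP session with each of the other nodes (four peers per node for the five nodes here). A sketch that checks this from calicoctl node status output, parsing the table format shown above; the function name is hypothetical.

```bash
# Read `calicoctl node status` output on stdin; succeed if exactly N peers are Established.
# Usage: calicoctl node status | bgp_peers_established 4
bgp_peers_established() {
  local expected=$1 count
  # table rows look like: | 10.2.24.165 | node-to-node mesh | up | 2021-11-01 | Established |
  count=$(awk -F'|' 'NF > 6 && $6 ~ /Established/ { c++ } END { print c + 0 }')
  echo "established ${count}/${expected}"
  [ "$count" -eq "$expected" ]
}
```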

NAMESPACE NAME READY STATUS RESTARTS AGE

default net-test1 1/1 Running 8 3d

default net-test2 1/1 Running 8 3d

default net-test3 1/1 Running 8 3d

kube-system calico-kube-controllers-647f956d86-jm9bl 1/1 Running 0 3d

kube-system calico-node-6mml8 1/1 Running 0 3d

kube-system calico-node-74lst 1/1 Running 0 3d

kube-system calico-node-8fjg8 1/1 Running 0 3d

kube-system calico-node-9gtw4 1/1 Running 0 3d

kube-system calico-node-kmjgh 1/1 Running 0 3d

Check the routing table on master1 (route -n):

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.2.24.1 0.0.0.0 UG 100 0 0 eth0

10.2.24.0 0.0.0.0 255.255.255.0 U 100 0 0 eth0

10.200.36.64 10.2.24.167 255.255.255.192 UG 0 0 0 tunl0

10.200.135.192 10.2.24.166 255.255.255.192 UG 0 0 0 tunl0

10.200.159.128 0.0.0.0 255.255.255.192 U 0 0 0 *

10.200.169.128 10.2.24.168 255.255.255.192 UG 0 0 0 tunl0

10.200.224.0 10.2.24.165 255.255.255.192 UG 0 0 0 tunl0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

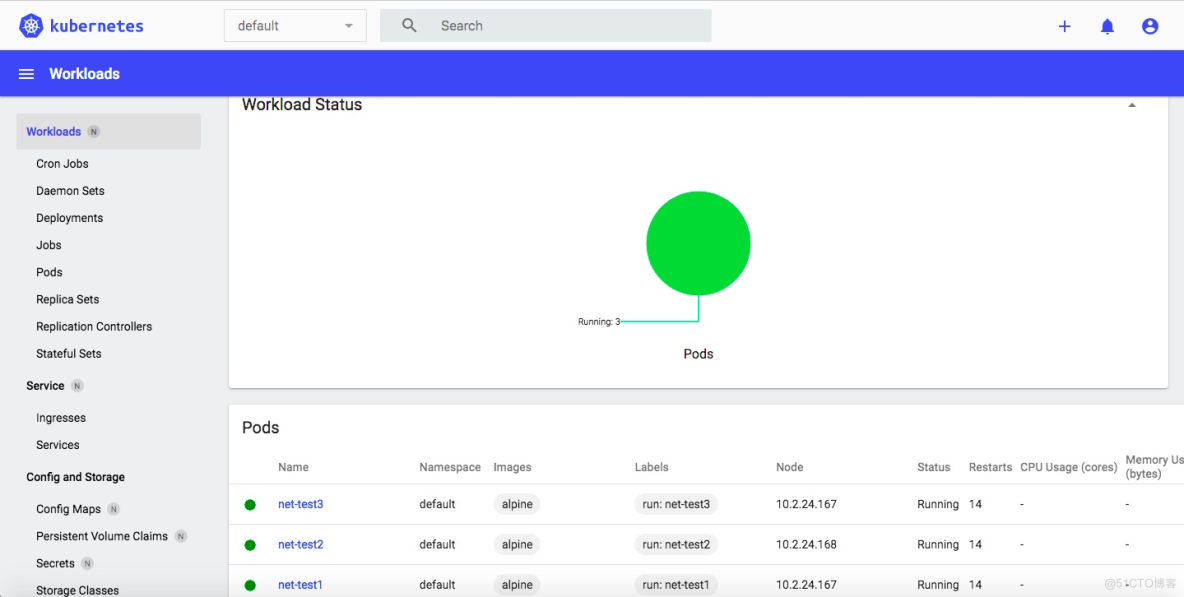

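On node1, create pods from the alpine image to verify that the network works:

[root@k8s-node1 ~]# kubectl run net-test1 --image=alpine sleep 30000

pod/net-test1 created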

[root@k8s-node1 ~]# kubectl run net-test2 --image=alpine sleep 30000

pod/net-test2 created

[root@k8s-node1 ~]# kubectl run net-test3 --image=alpine sleep 30000

pod/net-test3 created

[root@k8s-node1 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 86s

net-test2 1/1 Running 0 69s

net-test3 1/1 Running 0 64s

Check which node each pod runs on:

[root@k8s-node1 ~]# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default net-test1 1/1 Running 0 7m7s 10.200.36.76 10.2.24.167 <none> <none>

default net-test2 1/1 Running 0 6m50s 10.200.36.77 10.2.24.167 <none> <none>

default net-test3 1/1 Running 0 6m45s 10.200.36.78 10.2.24.167 <none> <none>

kube-system calico-kube-controllers-647f956d86-xn48c 1/1 Running 0 92m 10.2.24.167 10.2.24.167 <none> <none>

kube-system calico-node-hmdk8 1/1 Running 0 92m 10.2.24.165 10.2.24.165 <none> <none>

kube-system calico-node-p8xgs 1/1 Running 0 92m 10.2.24.167 10.2.24.167 <none> <none>

kube-system calico-node-tlrvz 1/1 Running 0 92m 10.2.24.164 10.2.24.164 <none> <none>

Exec into net-test2 and ping net-test3's IP address to check connectivity:

[root@k8s-node1 ~]# kubectl exec -it net-test2 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ifconfig

eth0 Link encap:Ethernet HWaddr CA:4C:54:5B:91:0B

inet addr:10.200.36.77 Bcast:0.0.0.0 Mask:255.255.255.255

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:5 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:446 (446.0 B) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ # ping 10.200.36.78

PING 10.200.36.78 (10.200.36.78): 56 data bytes

64 bytes from 10.200.36.78: seq=0 ttl=63 time=0.413 ms

64 bytes from 10.200.36.78: seq=1 ttl=63 time=0.104 ms

64 bytes from 10.200.36.78: seq=2 ttl=63 time=0.152 ms

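To remove the cluster, run ./ezctl destroy k8s-01; it deletes the deployed k8s cluster.

Testing the network from inside a container: IP addresses are reachable, but domain names cannot be resolved: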

net-test3 1/1 Running 13 4d17h

[root@k8s-master1 ~]# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping 8.8.8.8

PING 8.8.8.8 (8.8.8.8): 56 data bytes

64 bytes from 8.8.8.8: seq=0 ttl=113 time=41.702 ms

64 bytes from 8.8.8.8: seq=1 ttl=113 time=42.153 ms

64 bytes from 8.8.8.8: seq=2 ttl=113 time=41.951 ms

^C

--- 8.8.8.8 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 41.702/41.935/42.153 ms

/ # ping baidu.com

Resolving domain names from inside pods requires CoreDNS to be deployed.

Deploying CoreDNS

1. Download the four tarballs, extract them, and move the resulting kubernetes directory to /usr/local/src/:

[root@k8s-master1 ~]# wget https://storage.googleapis.com/kubernetes-release/release/v1.21.4/kubernetes.tar.gz

[root@k8s-master1 ~]# wget https://storage.googleapis.com/kubernetes-release/release/v1.21.4/kubernetes-client-linux-amd64.tar.gz

[root@k8s-master1 ~]# wget https://storage.googleapis.com/kubernetes-release/release/v1.21.4/kubernetes-node-linux-amd64.tar.gz

[root@k8s-master1 ~]# wget https://storage.googleapis.com/kubernetes-release/release/v1.21.4/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master1 ~]# tar -zxvf kubernetes-node-linux-amd64.tar.gz

[root@k8s-master1 ~]# tar -zxvf kubernetes-client-linux-amd64.tar.gz

[root@k8s-master1 ~]# tar -zxvf kubernetes-server-linux-amd64.tar.gz

[root@k8s-master1 ~]# tar -zxvf kubernetes.tar.gz

[root@k8s-master1 ~]# mv kubernetes /usr/local/src/

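2. Go to the CoreDNS add-on directory and copy coredns.yaml.base, renaming it:

[root@k8s-master1 ~]# cd /usr/local/src/kubernetes/cluster/addons/dns/coredns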

[root@k8s-master1 coredns]# ls

coredns.yaml.base coredns.yaml.in coredns.yaml.sed Makefile transforms2salt.sed transforms2sed.sed

[root@k8s-master1 coredns]# cp coredns.yaml.base /root/

[root@k8s-master1 coredns]# cd

[root@k8s-master1 ~]# mv coredns.yaml.base coredns-n56.yaml

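3. Modify some parameters in coredns-n56.yaml

Main configuration directives:

errors: # write error logs to stdout

health: # report CoreDNS health at http://localhost:8080/health

cache: # enable CoreDNS caching

reload: # reload the configuration automatically; after the ConfigMap is changed, the new configuration takes effect within about two minutes

loadbalance: # when a name has multiple records, answer them in round-robin order

cache 30 # cache TTL, in seconds

kubernetes: # CoreDNS resolves names in the specified service domain from Kubernetes Services

forward: # queries for names outside the Kubernetes cluster domain are forwarded to the specified servers (/etc/resolv.conf by default)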

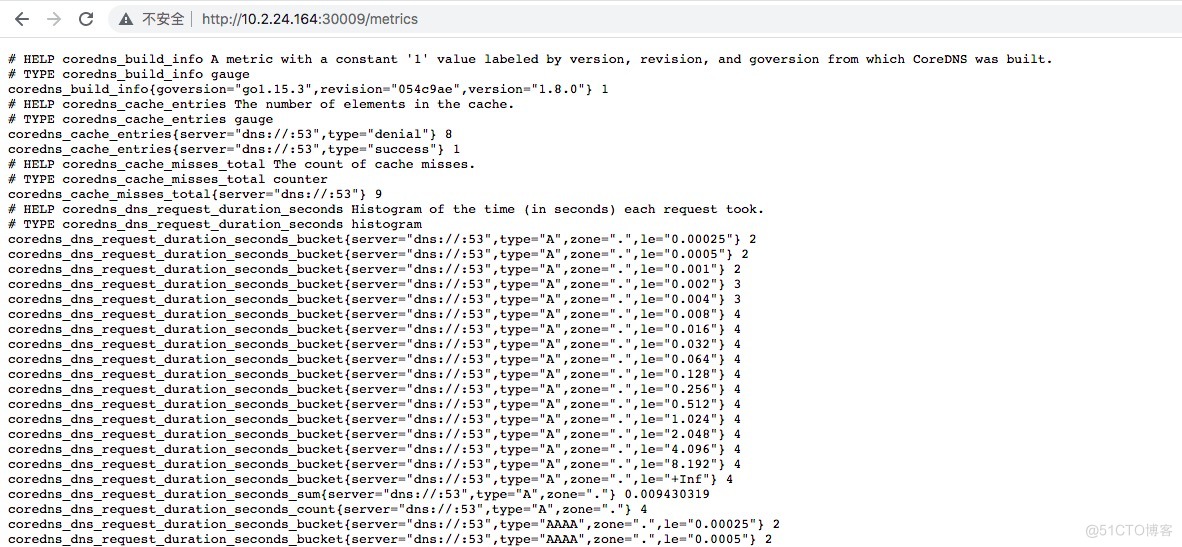

prometheus: # CoreDNS metrics can be scraped by Prometheus at http://<coredns svc>:9153/metrics

ready: # once CoreDNS has finished starting, the /ready endpoint (port 8181) returns HTTP 200; otherwise it returns an error

The cluster domain in the kubernetes plugin line must match the domain set in /etc/kubeasz/clusters/k8s-01/hosts:

[root@k8s-master1 ~]# cat /etc/kubeasz/clusters/k8s-01/hosts |grep CLUSTER_DNS_DOMAIN

CLUSTER_DNS_DOMAIN="cluster.local"

kubernetes cluster.local in-addr.arpa ip6.arpa

[root@k8s-master1 ~]# cat coredns-n56.yaml |grep cluster.local

kubernetes cluster.local in-addr.arpa ip6.arpa {

Change the forward resolver on line 76 to 223.6.6.6:

76: forward . 223.6.6.6 {

Change the memory limit on line 139 to 256Mi:

memory: 256Mi

clusterIP: 10.100.0.2 # usually the second address of the service CIDR set when deploying k8s

# If unsure, exec into a container and check /etc/resolv.conf:

[root@k8s-master1 ~]# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # cat /etc/resolv.conf

nameserver 10.100.0.2

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5
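The edits in this step can also be scripted. The sketch assumes the placeholder names used in the coredns.yaml.base shipped with Kubernetes 1.21 (__DNS__DOMAIN__, __DNS__SERVER__, __DNS__MEMORY__LIMIT__) and the default "forward . /etc/resolv.conf" line; check them in your copy first. The values are the ones chosen in this article; the function name is hypothetical.

```bash
# Fill the placeholders of coredns.yaml.base with this cluster's values (hypothetical helper).
render_coredns() {
  local in=$1 out=$2
  sed -e 's/__DNS__DOMAIN__/cluster.local/g' \
      -e 's/__DNS__SERVER__/10.100.0.2/g' \
      -e 's/__DNS__MEMORY__LIMIT__/256Mi/g' \
      -e 's#forward \. /etc/resolv.conf#forward . 223.6.6.6#' \
      "$in" > "$out"
}

# Usage:
#   render_coredns coredns.yaml.base coredns-n56.yaml
#   grep -nE 'cluster.local|forward|memory|clusterIP' coredns-n56.yaml
```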

Expose the Prometheus metrics port on the Service by changing the last section of the file:

type: NodePort

selector:

  k8s-app: kube-dns

clusterIP: 10.100.0.2

ports:

- name: dns

  port: 53

  protocol: UDP

- name: dns-tcp

  port: 53

  protocol: TCP

- name: metrics

  port: 9153

  protocol: TCP

  targetPort: 9153

  nodePort: 30009

Save and exit, then apply the file (kubectl apply -f coredns-n56.yaml) and check that CoreDNS started successfully:

serviceaccount/coredns unchanged

clusterrole.rbac.authorization.k8s.io/system:coredns unchanged

clusterrolebinding.rbac.authorization.k8s.io/system:coredns unchanged

configmap/coredns configured

deployment.apps/coredns configured

service/kube-dns unchanged

[root@k8s-master1 ~]# kubectl get pod -A|grep coredns

kube-system coredns-5d4dd74c8b-lf2mt 1/1 Running 0 17m

Now exec into a container and test whether it can resolve external domain names:

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping baidu.com

PING baidu.com (220.181.38.148): 56 data bytes

64 bytes from 220.181.38.148: seq=0 ttl=49 time=5.290 ms

64 bytes from 220.181.38.148: seq=1 ttl=49 time=5.033 ms

^C

--- baidu.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 5.033/5.161/5.290 ms

Through NodePort 30009 you can view the CoreDNS pod's metrics that Prometheus collects, as shown below:

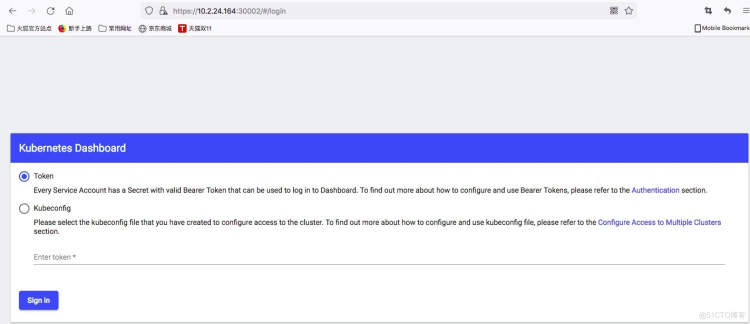

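Deploying the Dashboard

The Dashboard is a web UI that provides some functions for managing k8s.

1. Download the official YAML file and rename it:

[root@k8s-master1 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

[root@k8s-master1 ~]# mv recommended.yaml dashboard-v2.3.1.yaml

2. Expose a NodePort by editing the kubernetes-dashboard Service in the file as follows, then create the resources: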

kind: Service

apiVersion: v1

metadata:

  labels:

    k8s-app: kubernetes-dashboard

  name: kubernetes-dashboard

  namespace: kubernetes-dashboard

spec:

  type: NodePort

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30002

  selector:

    k8s-app: kubernetes-dashboard

[root@k8s-master1 ~]# kubectl apply -f dashboard-v2.3.1.yaml

3. Open https://10.2.24.164:30002 in Firefox; the login page asks for a token, as shown below:

A supplementary manifest (admin-user.yaml) needs to be uploaded.

4. Upload the file and apply it:

# Creates an admin-user account in the kubernetes-dashboard namespace and binds it to the cluster-admin ClusterRole, granting it admin privileges

[root@k8s-master1 ~]# cat admin-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kubernetes-dashboard

[root@k8s-master1 ~]# kubectl apply -f admin-user.yaml

Get the admin-user token and use it to log in to the Dashboard:

kubernetes-dashboard admin-user-token-r6mwm kubernetes.io/service-account-token 3 3d23h

[root@k8s-master1 ~]# kubectl describe secrets admin-user-token-r6mwm -n kubernetes-dashboard

Name: admin-user-token-r6mwm

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: a2af01c6-86e8-4130-9b50-6028437eb4bc

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1350 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjFtOE5SZzRpSWJjTVJaN0tDbGNZTFBBR3ZmOVlkaFM4ZVRXa01DUnliekkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXI2bXdtIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJhMmFmMDFjNi04NmU4LTQxMzAtOWI1MC02MDI4NDM3ZWI0YmMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.MyTMn18jtzVCogx4mD9E-4GwafoyCUR-oGdDB1lOBI7KjM1UQJZYsbBmyvSzlN7mlpmk-_peeuXDP--IvM8uTwi8CUdEOf21mrpJj_vOzoSfqUk9H9MNGh16DznOocHRKLdwrpybL6FgQFisCY84KKJj6w-14SAkGPcHN1n6B-L4o4aVTS8PLKB24bD2nVjyhKFa6-Z_2b4WQIFoE-Q-WEtIedGxLSFpE0tjhgQ8cmHDAjo7JUwBFJLo3aiUP755bm_rrxnsOyWlW98NOM-EQjlMYp-zgCquERTV7zJurrocBzse4kysuQcts6CkeZapK5rYZaKXbIxjwsJEkLwtmg