Introduction:

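By this point the cluster is basically up and running, and the next step is to deploy the programs that the business systems run on. This part covers deploying zookeeper, etcd and kafka, explains why a service should be run as a Deployment or as a StatefulSet, and shows how each of the two is used.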

zookeeper:

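To build a zookeeper image you need to understand how a zk cluster is configured at runtime and how it works; one place in the configuration file needs special handling. Download the zookeeper package and extract it into a directory.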

# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/home/zookeeper/data
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
# Cluster member entries. Names such as zookeeper-0 are the names of the Services used when running in k8s; each one maps to a different pod.
server.0=zookeeper-0:2888:3888
server.1=zookeeper-1:2888:3888
server.2=zookeeper-2:2888:3888

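The other piece is the myid file: the number it contains tells zookeeper which node this is, and it must match one of server.0 to server.2 in the configuration file.

I decided to handle this in the startup script. Open bin/zkServer.sh and add the following line:

hostname | grep -oE '[0-9]+$' > /home/zookeeper/data/myid

This works because, when the service is deployed as a StatefulSet, each container's hostname has the form <StatefulSet name>-<ordinal>, for example zookeeper-0.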
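The ordinal extraction can be tried outside the container first. The following is only a minimal sketch: the function name ordinal_from_hostname is made up for illustration, and it assumes the hostname ends in -<number>, as StatefulSet pod names do.

```shell
#!/bin/sh
# Hypothetical helper: print the trailing ordinal of a StatefulSet pod hostname.
ordinal_from_hostname() {
    # Keep only the digits at the very end of the name (zookeeper-12 -> 12).
    printf '%s\n' "$1" | grep -oE '[0-9]+$'
}

ordinal_from_hostname "zookeeper-0"
ordinal_from_hostname "zookeeper-12"
```

Anchoring the pattern to the end of the name keeps a multi-digit ordinal on a single line, which matters once the StatefulSet grows past ten replicas.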

Dockerfile:

FROM openjdk:11-jre-slim
RUN mkdir /home/zookeeper/
COPY zookeeper/ /home/zookeeper/
CMD /home/zookeeper/bin/zkServer.sh start-foreground

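Run docker build -t docker.xxxx.net/zookeeper:v3.6.3 . to build the image.

Run docker push docker.xxxx.net/zookeeper:v3.6.3 to push the image to the registry. Remember to log in to the registry before pushing, and replace docker.xxxx.net with your own domain.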
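The login, build and push steps can be wrapped into one small function. This is a sketch under assumptions: the function name build_and_push and the DOCKER override variable are hypothetical, and the registry host is taken to be everything before the first slash of the image name.

```shell
#!/bin/sh
# Assumption: DOCKER can be overridden (for example DOCKER=echo) for a dry run.
DOCKER=${DOCKER:-docker}

# Hypothetical helper: log in, build from the current directory, then push.
build_and_push() {
    image="$1"
    registry="${image%%/*}"   # host part before the first slash
    "$DOCKER" login "$registry" &&
    "$DOCKER" build -t "$image" . &&
    "$DOCKER" push "$image"
}
```

Calling build_and_push docker.xxxx.net/zookeeper:v3.6.3 from the directory that holds the Dockerfile runs the three steps in order and stops at the first failure.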

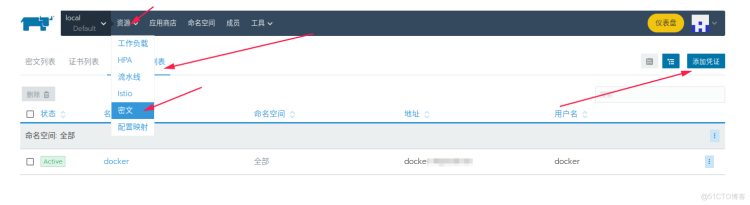

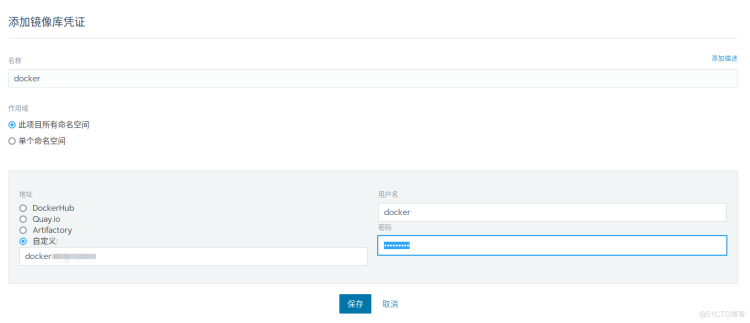

Adding the image registry credentials in Rancher

Deploying zookeeper:

Change the image address in the file to your own.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zookeeper
spec:
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: zookeeper
  serviceName: zookeeper-svc
  template:
    metadata:
      labels:
        app: zookeeper
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - zookeeper
            topologyKey: kubernetes.io/hostname
      containers:
      - env:
        - name: JAVA_HOME
          value: /usr/local/openjdk-11
        - name: LANG
          value: C.UTF-8
        image: docker.xxxx.net/zookeeper:v3.6.3
        imagePullPolicy: IfNotPresent
        name: zookeeper
        resources:
          requests:
            cpu: 500m
            memory: 512Mi
        volumeMounts:
        - mountPath: /home/zookeeper/data
          name: zookeeper
        - mountPath: /etc/localtime
          name: volume-localtime
      imagePullSecrets:
      - name: docker
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: volume-localtime
  volumeClaimTemplates:
  - apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: zookeeper
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi
      volumeMode: Filesystem
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper-0
spec:
  ports:
  - name: cli
    port: 2181
    protocol: TCP
    targetPort: 2181
  - name: pri
    port: 2888
    protocol: TCP
    targetPort: 2888
  - name: clu
    port: 3888
    protocol: TCP
    targetPort: 3888
  selector:
    app: zookeeper
    statefulset.kubernetes.io/pod-name: zookeeper-0
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper-1
spec:
  ports:
  - name: cli
    port: 2181
    protocol: TCP
    targetPort: 2181
  - name: pri
    port: 2888
    protocol: TCP
    targetPort: 2888
  - name: clu
    port: 3888
    protocol: TCP
    targetPort: 3888
  selector:
    app: zookeeper
    statefulset.kubernetes.io/pod-name: zookeeper-1
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper-2
spec:
  ports:
  - name: cli
    port: 2181
    protocol: TCP
    targetPort: 2181
  - name: pri
    port: 2888
    protocol: TCP
    targetPort: 2888
  - name: clu
    port: 3888
    protocol: TCP
    targetPort: 3888
  selector:
    app: zookeeper
    statefulset.kubernetes.io/pod-name: zookeeper-2
  type: ClusterIP

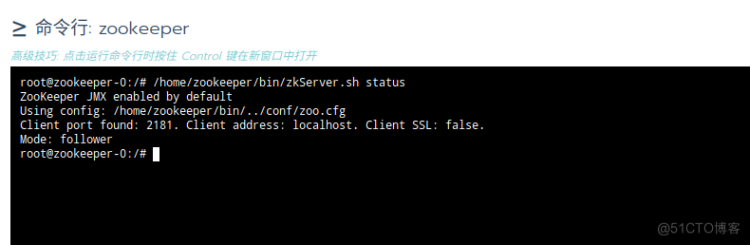

After waiting a while, enter each container and run a command to check its status.
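One way to run that check from outside the pods is with kubectl exec and the status subcommand of zkServer.sh. This is a sketch only: the function name zk_status_all and the KUBECTL override variable are hypothetical, and it assumes kubectl already points at the namespace that holds the three pods.

```shell
#!/bin/sh
# Assumption: KUBECTL can be overridden (for example KUBECTL=echo) for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# Hypothetical helper: ask each ZooKeeper pod for its current status.
zk_status_all() {
    for i in 0 1 2; do
        "$KUBECTL" exec "zookeeper-$i" -- /home/zookeeper/bin/zkServer.sh status
    done
}
```

Running zk_status_all should report a mode for every node; in a healthy three-node ensemble one node is the leader and the other two are followers.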

etcd:

Download the etcd package: https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz

tar -xf etcd-v3.5.0-linux-amd64.tar.gz
mv etcd-v3.5.0-linux-amd64 etcd

Create the etcd startup script:

cat etcd/start.sh

#!/bin/sh
# Look up this pod's IP address from its own entry in /etc/hosts.
localIP=$(grep "$HOSTNAME" /etc/hosts | grep -oE '[0-9]{1,3}(\.[0-9]{1,3}){3}' | head -n 1)

/opt/etcd/etcd --name "$HOSTNAME" \
  --initial-advertise-peer-urls "http://$localIP:2380" \
  --listen-peer-urls "http://$localIP:2380" \
  --listen-client-urls "http://$localIP:2379" \
  --advertise-client-urls "http://$localIP:2379" \
  --initial-cluster-token etcd-im-cluster \
  --initial-cluster "etcd-0=http://etcd-0:2380,etcd-1=http://etcd-1:2380,etcd-2=http://etcd-2:2380" \
  --initial-cluster-state new \
  --data-dir=/opt/etcd/data \
  --logger=zap

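This uses plain http. To build an https image you need to create certificates and change the configuration accordingly; that part is left to you.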
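The --initial-cluster value follows a regular pattern, so it can be generated instead of typed by hand. The sketch below is an illustration only: the function name initial_cluster is hypothetical, and it assumes members are named <name>-0 to <name>-(N-1) and use peer port 2380 over http, as in the script above.

```shell
#!/bin/sh
# Hypothetical helper: build the --initial-cluster value for N members
# named <name>-0 ... <name>-(N-1), each reachable on peer port 2380.
initial_cluster() {
    name="$1"; count="$2"; out=""; i=0
    while [ "$i" -lt "$count" ]; do
        # Prepend a comma only when the list already has an entry.
        out="${out:+$out,}$name-$i=http://$name-$i:2380"
        i=$((i + 1))
    done
    printf '%s\n' "$out"
}

initial_cluster etcd 3
```

For etcd and 3 this prints the same member list that the startup script passes to --initial-cluster.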

chmod 755 etcd/start.sh

Dockerfile:

FROM alpine:latest
VOLUME ["/var/cache"]
ENV LANG=zh_CN.utf8 LANGUAGE=zh_CN:zh LC_ALL=zh_CN.utf8
RUN sed -i "s/dl-cdn.alpinelinux.org/mirrors.tuna.tsinghua.edu.cn/g" /etc/apk/repositories && \
    apk add --no-cache busybox-extras vim curl tzdata && \
    cp -rf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
    echo "export LANG=zh_CN.utf8" >> /etc/profile && . /etc/profile && \
    echo "export LANG=$LANG" > /etc/profile.d/locale.sh
COPY etcd /opt/etcd
CMD /opt/etcd/start.sh

Build and push the image with docker build and docker push, the same way as for zookeeper.

Deploying etcd:

Change the image address in the file to your own.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: etcd
spec:
  replicas: 3
  selector:
    matchLabels:
      app: etcd
  serviceName: etcd-svc
  template:
    metadata:
      labels:
        app: etcd
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - etcd
            topologyKey: kubernetes.io/hostname
      containers:
      - env:
        - name: LANG
          value: zh_CN.utf8
        - name: LANGUAGE
          value: zh_CN:zh
        - name: LC_ALL
          value: zh_CN.utf8
        - name: aliyun_logs_wos-app
          value: stdout
        image: docker.xxxx.net/etcd:v3.4.16
        imagePullPolicy: Always
        name: etcd
        resources:
          limits:
            memory: 1Gi
          requests:
            cpu: 250m
            memory: 512Mi
        volumeMounts:
        - mountPath: /etc/localtime
          name: volume-localtime
        - mountPath: /opt/etcd/data
          name: etcd
      dnsPolicy: ClusterFirst
      imagePullSecrets:
      - name: docker
      restartPolicy: Always
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: volume-localtime
  volumeClaimTemplates:
  - apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: etcd
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi
      volumeMode: Filesystem
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-0
spec:
  clusterIP: None
  ports:
  - name: cli
    port: 2379
    protocol: TCP
    targetPort: 2379
  - name: pri
    port: 2380
    protocol: TCP
    targetPort: 2380
  selector:
    app: etcd
    statefulset.kubernetes.io/pod-name: etcd-0
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-1
spec:
  clusterIP: None
  ports:
  - name: cli
    port: 2379
    protocol: TCP
    targetPort: 2379
  - name: pri
    port: 2380
    protocol: TCP
    targetPort: 2380
  selector:
    app: etcd
    statefulset.kubernetes.io/pod-name: etcd-1
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-2
spec:
  clusterIP: None
  ports:
  - name: cli
    port: 2379
    protocol: TCP
    targetPort: 2379
  - name: pri
    port: 2380
    protocol: TCP
    targetPort: 2380
  selector:
    app: etcd
    statefulset.kubernetes.io/pod-name: etcd-2
  sessionAffinity: None
  type: ClusterIP

Once the containers are healthy, open a shell in one of them and run /opt/etcd/etcdctl --endpoints=etcd-0:2379,etcd-1:2379,etcd-2:2379 endpoint health to check that the cluster is running.
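Beyond the health endpoint, a quick write and read confirms that the cluster actually accepts data. The following is a sketch under assumptions: the function name etcd_smoke_test, the ETCDCTL override variable and the key smoke-test-key are all made up for illustration.

```shell
#!/bin/sh
# Assumption: ETCDCTL can be overridden (for example ETCDCTL=echo) for a dry run.
ETCDCTL=${ETCDCTL:-/opt/etcd/etcdctl}
ENDPOINTS=etcd-0:2379,etcd-1:2379,etcd-2:2379

# Hypothetical helper: write a throwaway key, read it back, then delete it.
etcd_smoke_test() {
    "$ETCDCTL" --endpoints="$ENDPOINTS" put smoke-test-key ok &&
    "$ETCDCTL" --endpoints="$ENDPOINTS" get smoke-test-key &&
    "$ETCDCTL" --endpoints="$ENDPOINTS" del smoke-test-key
}
```

Run etcd_smoke_test inside one of the etcd containers; the get step should echo the key and the value that the put step stored.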

kafka deployment:

Download the kafka package yourself and edit the configuration file:

cat config/server.properties

broker.id=
listeners=PLAINTEXT://
port=19092
auto.create.topics.enable=true
num.network.threads=4
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/opt/kafka/kafkalogs/
num.partitions=1
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=24
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=zookeeper-0:2181,zookeeper-1:2181,zookeeper-2:2181
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
message.max.bytes=5242880
default.replication.factor=2
replica.fetch.max.bytes=5242880
log.cleaner.enable=true
delete.topic.enable=true
log.cleanup.policy = delete

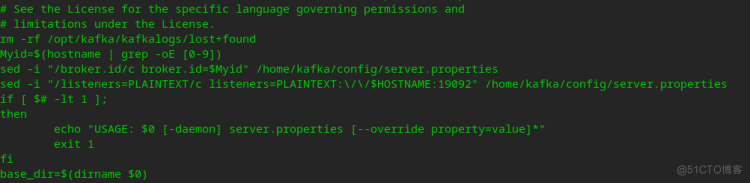

Edit the startup script bin/kafka-server-start.sh:

rm -rf /opt/kafka/kafkalogs/lost+found
Myid=$(hostname | grep -oE '[0-9]+$')
sed -i "/broker.id/c broker.id=$Myid" /home/kafka/config/server.properties
sed -i "/listeners=PLAINTEXT/c listeners=PLAINTEXT://$HOSTNAME:19092" /home/kafka/config/server.properties

Place these lines at this position in the file; they have to run before the script launches the broker.
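The effect of these edits can be checked on a throwaway file before baking them into the image. This is a minimal sketch, not the startup script itself: the function name render_broker_config is hypothetical, it uses a sed s substitution rather than the c command, and it assumes a sed that supports -i (GNU or busybox).

```shell
#!/bin/sh
# Hypothetical helper: fill in broker.id and listeners for a given hostname.
render_broker_config() {
    file="$1"; host="$2"
    # The broker id is the ordinal at the end of the pod hostname.
    id=$(printf '%s\n' "$host" | grep -oE '[0-9]+$')
    sed -i "s|^broker\.id=.*|broker.id=$id|" "$file"
    sed -i "s|^listeners=PLAINTEXT.*|listeners=PLAINTEXT://$host:19092|" "$file"
}

# Try it on a temporary copy instead of the real server.properties.
tmp=$(mktemp)
printf 'broker.id=\nlisteners=PLAINTEXT://\nport=19092\n' > "$tmp"
render_broker_config "$tmp" kafka-1
cat "$tmp"
rm -f "$tmp"
```

Each broker ends up with a unique broker.id and advertises its own pod name, which is what lets the other brokers and the clients reach it through the per-pod Service.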

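Dockerfile:

FROM openjdk:11-jre-slim
RUN mkdir /home/kafka/
COPY kafka/ /home/kafka/
# Run in the foreground: with -daemon the script returns at once and the container exits.
CMD /home/kafka/bin/kafka-server-start.sh /home/kafka/config/server.properties

Then build and upload the image with docker build and docker push.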

Deploying kafka:

Change the image address in the file to your own.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: kafka
  name: kafka
spec:
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: kafka
  serviceName: kafka-svc
  template:
    metadata:
      labels:
        app: kafka
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - kafka
            topologyKey: kubernetes.io/hostname
      containers:
      - env:
        - name: JAVA_HOME
          value: /usr/local/openjdk-11
        - name: LANG
          value: C.UTF-8
        - name: JMX_PORT
          value: "9988"
        image: docker.xxxx.net/kafka:v2.11-2.4.1
        imagePullPolicy: Always
        name: kafka
        resources:
          requests:
            cpu: "1"
            memory: 1224Mi
        volumeMounts:
        - mountPath: /opt/kafka/kafkalogs/
          name: kafka
        - mountPath: /etc/localtime
          name: volume-localtime
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      imagePullSecrets:
      - name: docker
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: volume-localtime
  volumeClaimTemplates:
  - apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: kafka
      namespace: wos-uat
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi
      volumeMode: Filesystem
---
apiVersion: v1
kind: Service
metadata:
  name: kafka-0
spec:
  ports:
  - name: client
    port: 19092
    protocol: TCP
    targetPort: 19092
  selector:
    app: kafka
    statefulset.kubernetes.io/pod-name: kafka-0
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: kafka-1
spec:
  ports:
  - name: client
    port: 19092
    protocol: TCP
    targetPort: 19092
  selector:
    app: kafka
    statefulset.kubernetes.io/pod-name: kafka-1
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: kafka-2
spec:
  ports:
  - name: client
    port: 19092
    protocol: TCP
    targetPort: 19092
  selector:
    app: kafka
    statefulset.kubernetes.io/pod-name: kafka-2
  type: ClusterIP

Once everything is running, perform a health check.
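One possible health check is to create a small test topic and describe it, which shows whether the brokers have registered and can host replicas. This is a sketch under assumptions: the function name kafka_smoke_test, the KAFKA_TOPICS override variable and the topic name smoke-test are hypothetical, and the tool path assumes the image layout used above.

```shell
#!/bin/sh
# Assumption: KAFKA_TOPICS can be overridden (for example KAFKA_TOPICS=echo) for a dry run.
KAFKA_TOPICS=${KAFKA_TOPICS:-/home/kafka/bin/kafka-topics.sh}

# Hypothetical helper: create a test topic on the cluster, then describe it.
kafka_smoke_test() {
    "$KAFKA_TOPICS" --bootstrap-server kafka-0:19092 --create \
        --topic smoke-test --partitions 3 --replication-factor 2 &&
    "$KAFKA_TOPICS" --bootstrap-server kafka-0:19092 --describe --topic smoke-test
}
```

Run kafka_smoke_test inside one of the kafka containers; the describe output lists the leader and replicas of every partition, so a missing broker shows up right away.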

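To make sure disk space gets reclaimed, in addition to these three options from the configuration file above

log.cleaner.enable=true
delete.topic.enable=true
log.cleanup.policy = delete

the cleanup policy of the __consumer_offsets topic has to be set by hand. First view the current policy, which defaults to compact:

bin/kafka-configs.sh --zookeeper zookeeper-0:2181 --entity-type topics --entity-name __consumer_offsets --describe

Then change it to delete:

bin/kafka-configs.sh --zookeeper zookeeper-0:2181 --entity-type topics --entity-name __consumer_offsets --alter --add-config 'cleanup.policy=delete'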

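Deployment and StatefulSet

It is often unclear what the difference between a Deployment and a StatefulSet is. All three services created above are best run as StatefulSets. They keep their data on shared storage volumes, and each instance uses its volume exclusively, with lock files inside it. With a Deployment, when the program runs into trouble the old and new pods can contend for the same files, and the new pod fails to start. In addition, this setup creates one Service per pod; Deployment pod names change, so a Service could no longer locate its pod and the cluster would break.

A Deployment suits applications that hold no on-disk file locks and whose state can be interrupted, such as our Java microservices. A StatefulSet stops the old container first, always stops and starts pods in order, and gives pods ordered names that never change. A Deployment by default starts the new container before stopping the old one, and its pod names are unpredictable.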
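The predictable naming is what the per-pod Services above rely on. As a small illustration (the function name statefulset_pod_names is hypothetical), the pod names of a StatefulSet can be listed from nothing more than its name and replica count:

```shell
#!/bin/sh
# Hypothetical helper: list the pod names a StatefulSet creates,
# which are always <name>-0 ... <name>-(replicas-1).
statefulset_pod_names() {
    name="$1"; replicas="$2"; i=0
    while [ "$i" -lt "$replicas" ]; do
        printf '%s-%s\n' "$name" "$i"
        i=$((i + 1))
    done
}

statefulset_pod_names kafka 3
```

No such function can be written for a Deployment, because its pod names carry generated suffixes that change whenever a pod is recreated.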

That is all for this introduction; build any other base images you need yourself. I hope this gives you some ideas.