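The ELK log-analysis platform consists of three open-source tools: Elasticsearch, Logstash, and Kibana.

Official site: https://www.elastic.co/products

Elasticsearch is an open-source distributed search engine. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replication, a RESTful API, multiple data sources, and automatic search load balancing.

Logstash is a fully open-source tool that collects and filters your logs and stores them for later use (for example, searching).

Kibana is also a free, open-source tool. It provides a friendly web interface for analyzing the logs held by Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.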

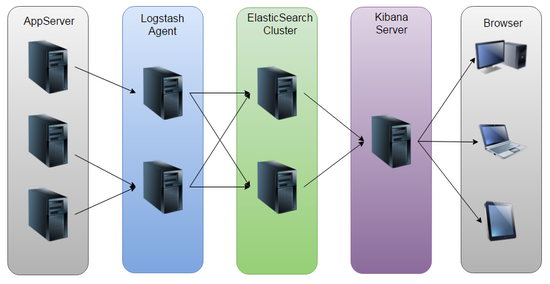

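ELK architecture diagram:

As the diagram shows, Logstash collects the logs produced by the AppServer and stores them in the Elasticsearch cluster; Kibana queries the ES cluster, generates charts from the data, and returns them to the browser.

Setting up the ELK platform

System environment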

System: CentOS release 6.7 (Final)

ElasticSearch: elasticsearch-5.3.1.tar.gz

Logstash: logstash-5.3.1.tar.gz

Kibana: kibana-5.3.1-linux-x86_64.tar.gz

Java: openjdk version "1.8.0_131"

ELK downloads: https://www.elastic.co/downloads/

Java environment setup

Download the latest version, 1.8.0_131

cd /tmp/

wget http://download.oracle.com/otn-pub/java/jdk/8u131-b11/d54c1d3a095b4ff2b6607d096fa80163/jdk-8u131-linux-x64.tar.gz

tar zxf jdk-8u131-linux-x64.tar.gz -C /usr/local/

vim /etc/profile

Add the following:

JAVA_HOME=/usr/local/jdk1.8.0_131

PATH=$JAVA_HOME/bin:$PATH

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JAVA_HOME PATH CLASSPATH

source /etc/profile

Edit limits.conf to raise the open-file and process limits:

vi /etc/security/limits.conf

Add the following:

    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 2048
    * hard nproc 4096

Edit sysctl.conf:

vi /etc/sysctl.conf

Add the following setting:

    vm.max_map_count=655360

Then apply it:

sysctl -p
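The limits above can be checked before starting Elasticsearch. The following is a minimal sketch, not part of the original setup: `check_min` is a hypothetical helper name, and the thresholds (65536, 2048, 262144) are the minimums quoted in the bootstrap-check error messages listed in the troubleshooting section of this post. Run it as the user that will start Elasticsearch, in a fresh login shell so the new limits.conf values apply.

```shell
#!/usr/bin/env bash
# check_min NAME ACTUAL REQUIRED
# Prints whether a numeric limit meets the required minimum.
# (check_min is an illustrative helper, not an Elasticsearch tool.)
check_min() {
  local name="$1" actual="$2" required="$3"
  case "$actual" in
    unlimited)
      echo "$name: OK (unlimited)" ;;
    ''|*[!0-9]*)
      # Empty or non-numeric value: the limit could not be read.
      echo "$name: UNKNOWN ($actual)" ;;
    *)
      if [ "$actual" -ge "$required" ]; then
        echo "$name: OK ($actual >= $required)"
      else
        echo "$name: LOW ($actual < $required)"
      fi ;;
  esac
}

# Current values for the shell's user, compared with the minimums
# that the Elasticsearch bootstrap checks report.
check_min "nofile" "$(ulimit -n)" 65536
check_min "nproc" "$(ulimit -u)" 2048
check_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count 2>/dev/null)" 262144
```

Any line reported as LOW points back to the corresponding entry in limits.conf or sysctl.conf.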

Elasticsearch configuration

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.3.1.tar.gz

useradd elktest

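tar -zxvf elasticsearch-5.3.1.tar.gz

cd elasticsearch-5.3.1

vi config/elasticsearch.yml

Change the following settings:

(The paths must be created beforehand and must be readable and writable by the elktest user.)

    cluster.name: elk_cluster
    node.name: node0
    path.data: /tmp/elasticsearch/data
    path.logs: /tmp/elasticsearch/logs
    network.host: 192.168.1.5
    http.port: 9200

Leave the other options at their defaults, then start ES:

su elktest # ES refuses to start as root; it must run as a regular user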

./bin/elasticsearch &
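Once the process is running, one way to confirm that the node is up is to query the `_cluster/health` endpoint and read its `status` field. This is a small sketch under assumptions: `health_status` is a hypothetical helper name, it relies only on `grep`, `head`, and `cut` rather than a JSON parser, and the address in the usage comment is the `network.host` value configured above.

```shell
# health_status
# Reads a _cluster/health JSON document on stdin and prints the value
# of its "status" field (green, yellow, or red).
# (health_status is an illustrative helper, not part of Elasticsearch.)
health_status() {
  grep -o '"status" *: *"[a-z]*"' | head -n 1 | cut -d'"' -f4
}

# Usage against the live node (not executed here):
#   curl -s 'http://192.168.1.5:9200/_cluster/health' | health_status
```

If the curl call prints nothing, the node is probably not listening yet; the Elasticsearch log under path.logs usually explains why.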

Logstash configuration

wget https://artifacts.elastic.co/downloads/logstash/logstash-5.3.1.tar.gz

tar -zxvf logstash-5.3.1.tar.gz

cd logstash-5.3.1

vi config/logstash.conf

input {

file {

path => ["/var/log/messages"]

type => "syslog"

}

}

filter {

grok {

match => [ "message", "%{SYSLOGBASE} %{GREEDYDATA:content}" ]

}

}

output {

elasticsearch {

hosts => ["192.168.1.5:9200"]

index => "syslog-%{+YYYY.MM.dd}"

}

stdout { codec => rubydebug }

}

./bin/logstash -f config/logstash.conf & # start the service
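The `index` option in the output section appends a date to the index name, so each day's events go to their own index. The sketch below shows which name a given timestamp maps to. It assumes GNU `date` (for `-d @EPOCH`) and that Logstash formats the date from the event's `@timestamp` in UTC; `index_name` is a hypothetical helper, not a Logstash command.

```shell
# index_name PREFIX EPOCH_SECONDS
# Prints PREFIX-YYYY.MM.dd for the UTC date of the given Unix timestamp.
# (index_name is an illustrative helper; requires GNU date.)
index_name() {
  printf '%s-%s\n' "$1" "$(date -u -d "@$2" +%Y.%m.%d)"
}

# Name of the syslog index that events arriving right now would go to.
index_name syslog "$(date +%s)"
```

Because the date is taken in UTC, events written shortly after local midnight on a UTC+8 machine would still land in the previous day's index.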

Kibana configuration:

wget https://artifacts.elastic.co/downloads/kibana/kibana-5.3.1-linux-x86_64.tar.gz

tar -zxvf kibana-5.3.1-linux-x86_64.tar.gz

cd kibana-5.3.1-linux-x86_64

vi config/kibana.yml

Set the following options:

    server.port: 5601
    server.host: "192.168.1.5"
    elasticsearch.url: "http://192.168.1.5:9200"
    kibana.index: ".kibana"

./bin/kibana &

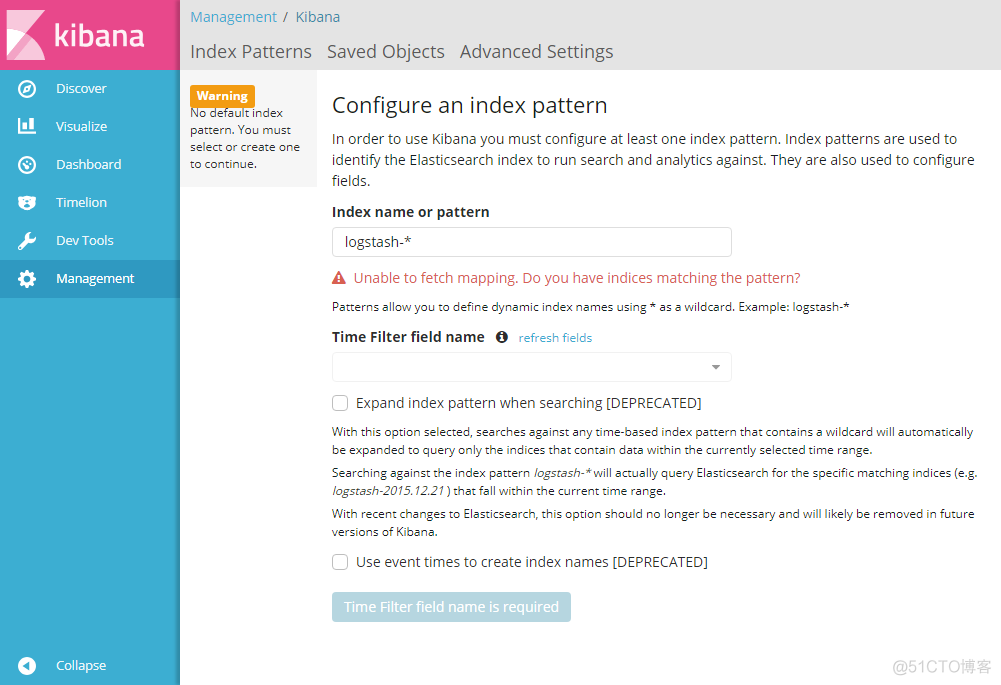

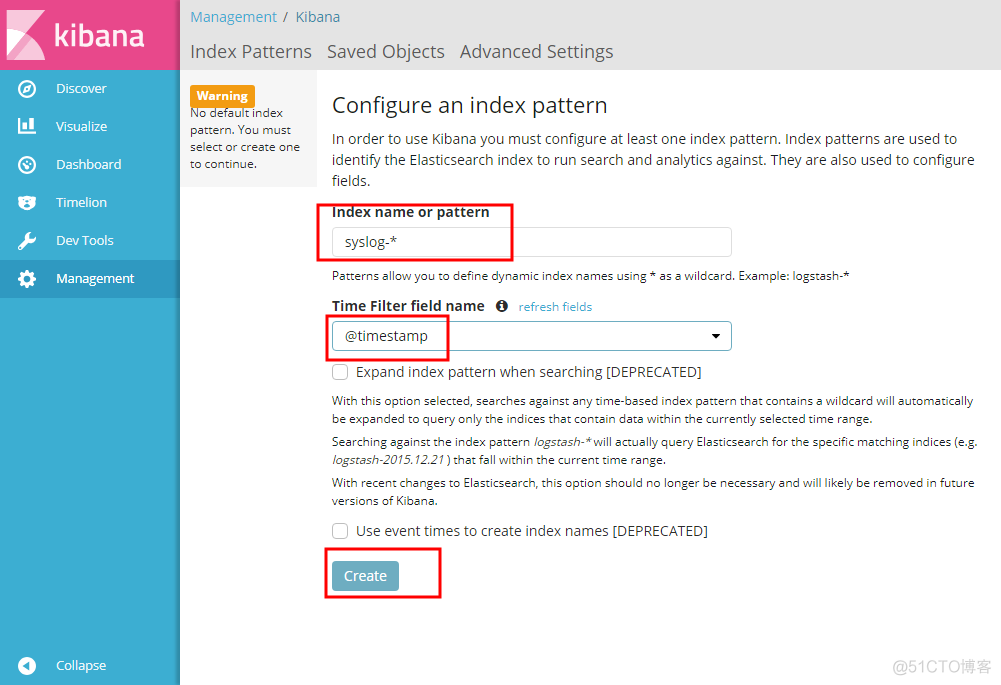

Open the Kibana UI at http://192.168.1.5:5601

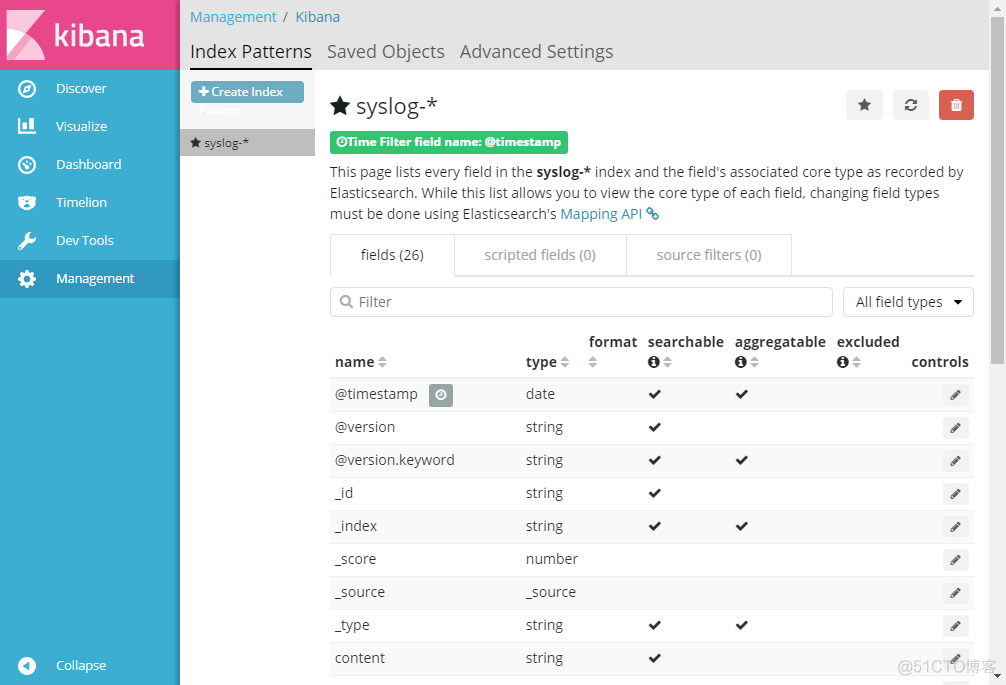

Create an index pattern that matches the index name set by the index option in logstash.conf, for example syslog-*.

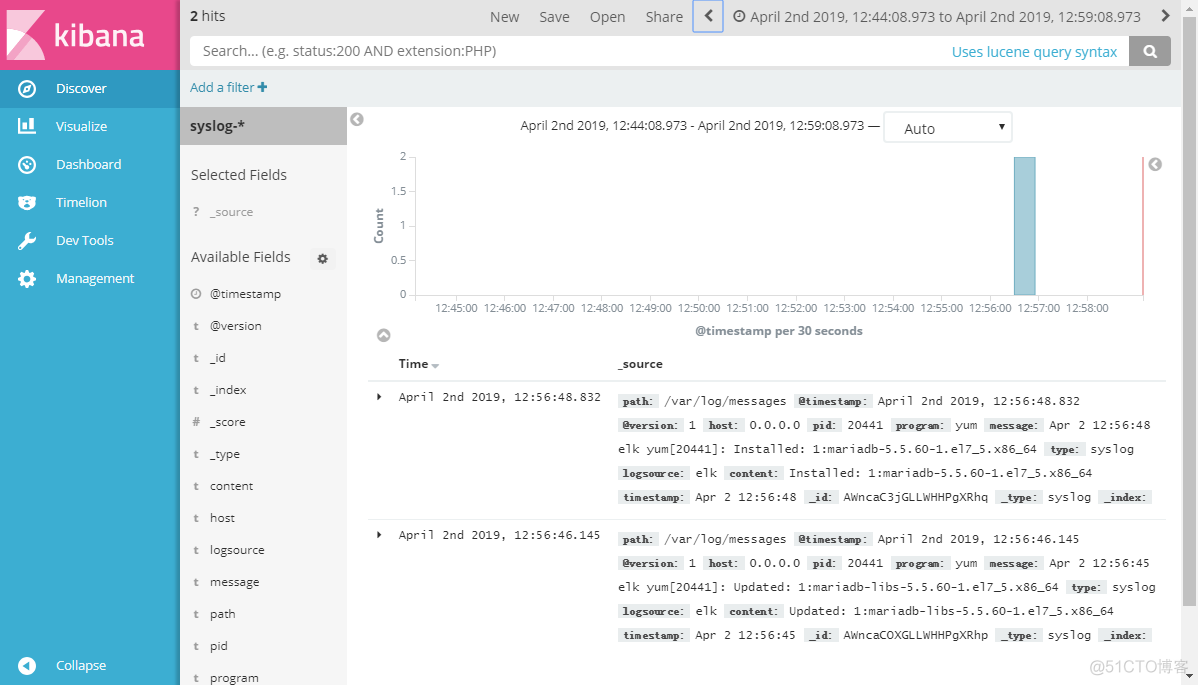

Go back to the Discover page and run an install on the machine, yum install httpd -y (to generate system log entries). New log entries then show up in Kibana.

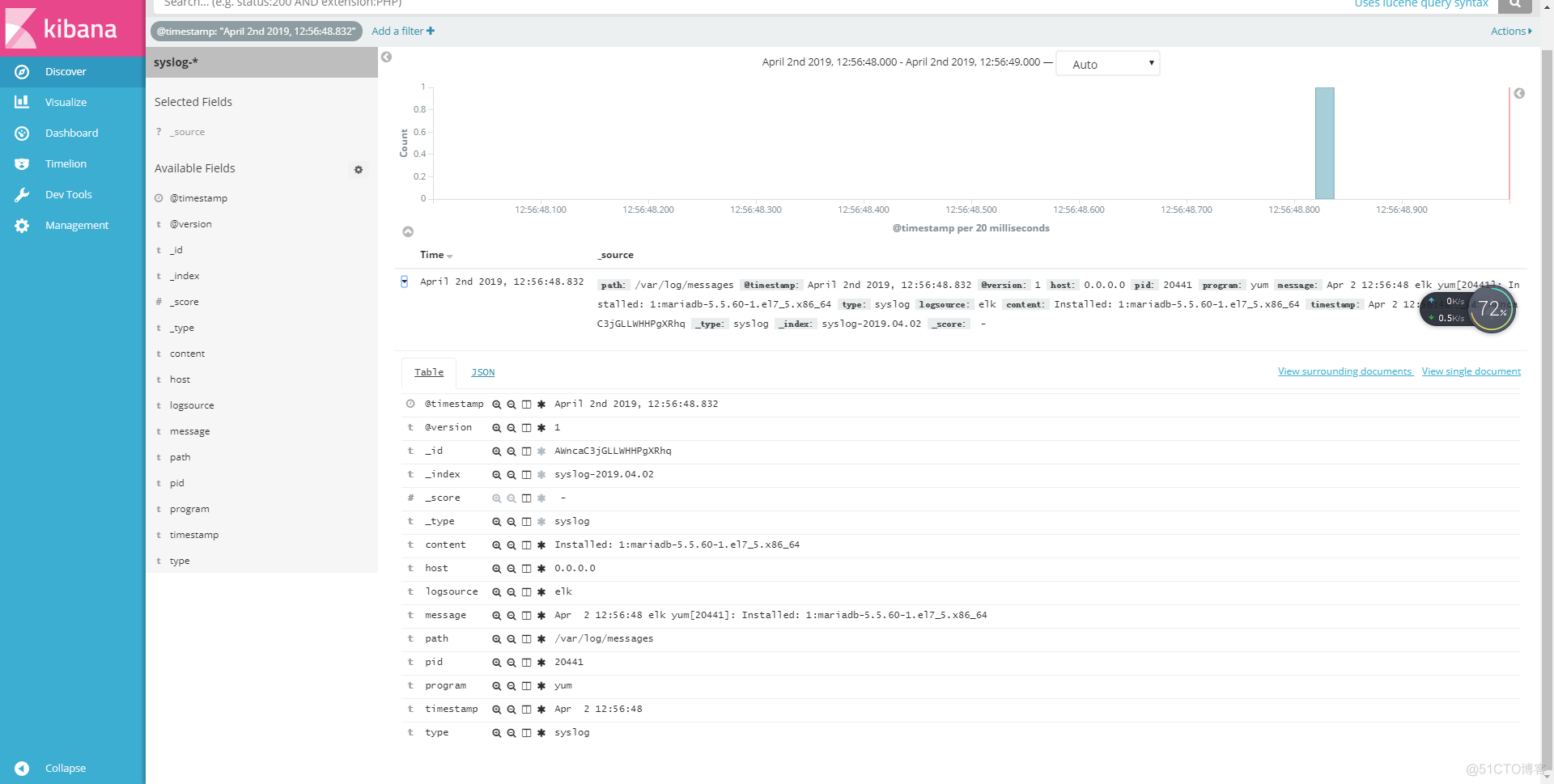

Expand the most recent log entry

-------------------------------------------------------------------------------

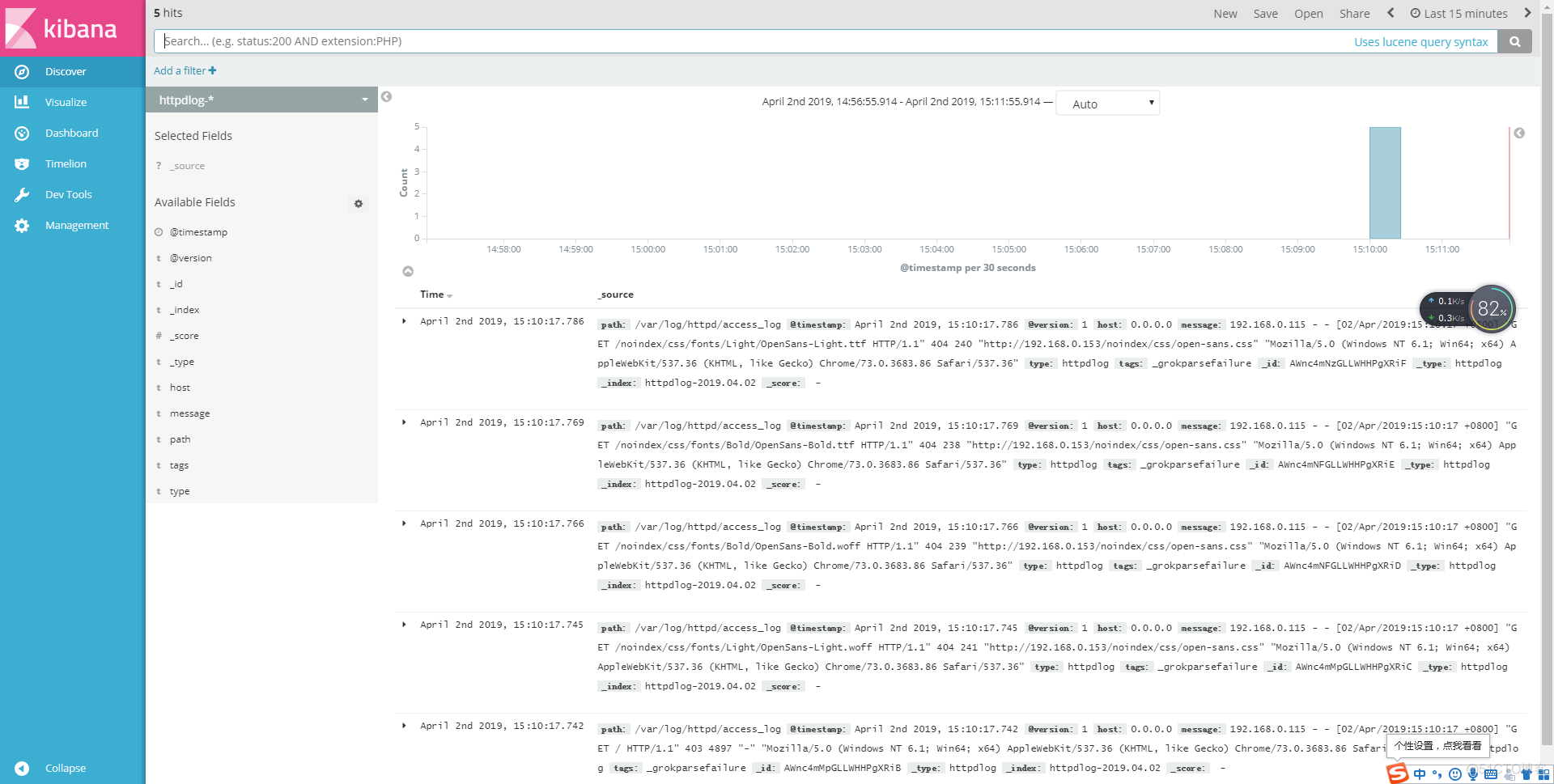

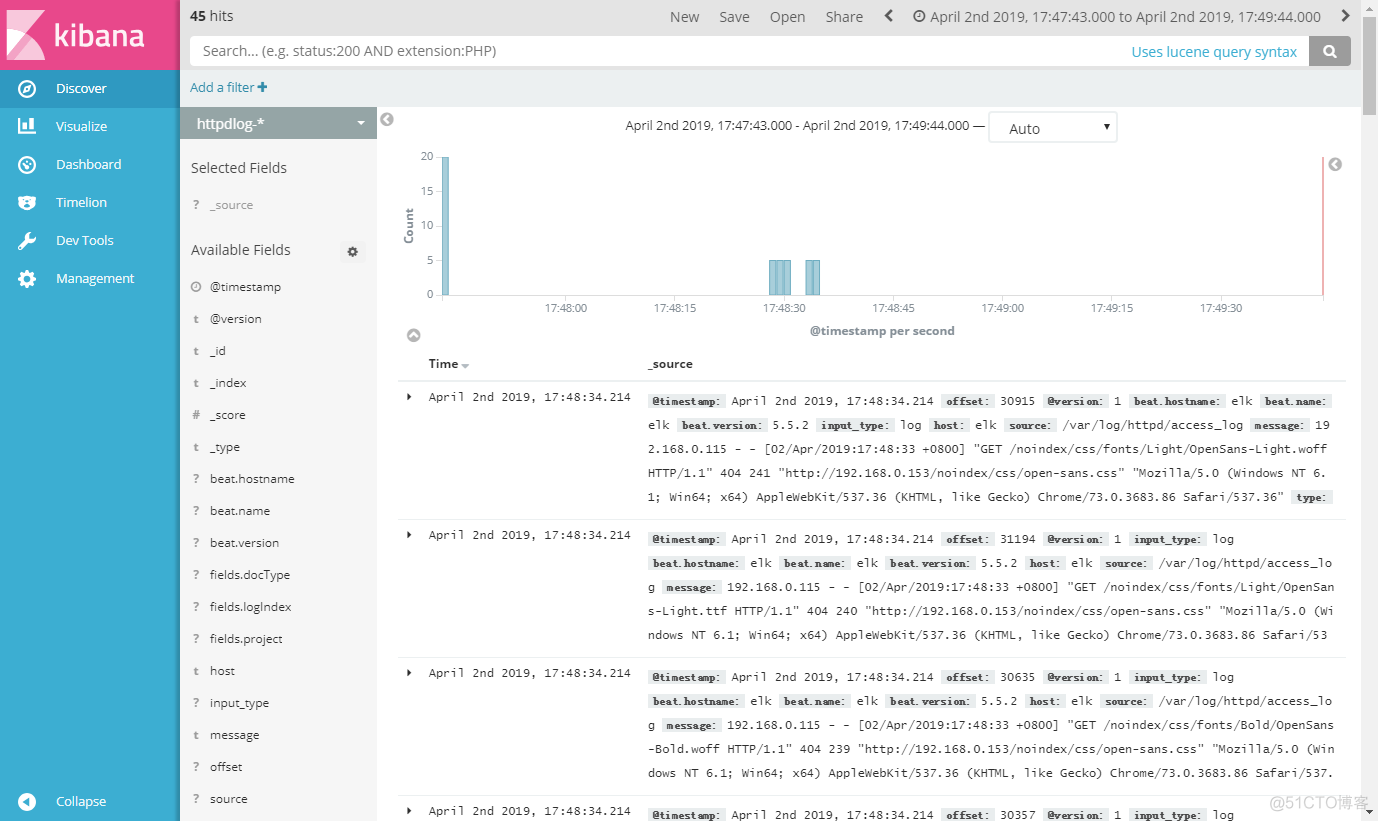

Logstash configuration with multiple file inputs

    [root@elk config]# cat logstash.conf
    input {
      file {
        path => ["/var/log/messages"]
        type => "syslog"
      }
      file {
        path => ["/var/log/httpd/access_log"]
        type => "httpdlog"
      }
    }
    filter {
      grok {
        match => [ "message", "%{SYSLOGBASE} %{GREEDYDATA:content}" ]
      }
    }
    output {
      if [type] == "syslog" {
        elasticsearch {
          hosts => ["192.168.0.153:9200"]
          index => "syslog-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "httpdlog" {
        elasticsearch {
          hosts => ["192.168.0.153:9200"]
          index => "httpdlog-%{+YYYY.MM.dd}"
        }
      }
      stdout { codec => rubydebug }
    }

Then restart Logstash, go back to Kibana, and create the httpdlog index pattern. You can now view the log output of each index separately.

---------------------------------------------------------------------------------------

Adding Filebeat

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.5.2-linux-x86_64.tar.gz

tar zxf filebeat-5.5.2-linux-x86_64.tar.gz

mv filebeat-5.5.2-linux-x86_64 filebeat

cd filebeat/

cp filebeat.yml filebeat.yml.default

vi filebeat.yml

    [root@elk filebeat]# cat filebeat.yml |grep -v "^$"|grep -v "^#"|grep -v "#"
    filebeat.prospectors:
    - input_type: log
      paths:
        - /var/log/httpd/access_log
      fields:
        logIndex: httpdlog
        docType: httpd-access
        project: httpd
    output.logstash:
      hosts: ["192.168.0.153:5044"]

Then start Filebeat:

./filebeat &

Modify logstash.conf so that it receives events from Beats:

    [root@elk config]# cat logstash.conf
    input {
      beats {
        port => "5044"
      }
    }
    filter {
      grok {
        match => [ "message", "%{SYSLOGBASE} %{GREEDYDATA:content}" ]
      }
    }
    output {
      elasticsearch {
        hosts => ["192.168.0.153:9200"]
        index => "httpdlog-%{+YYYY.MM.dd}"
      }
      stdout { codec => rubydebug }
    }

Restart Logstash:

./bin/logstash -f config/logstash.conf &

The output shows up in Kibana as expected.

------------------------------------------------------------

Adding MySQL slow-log monitoring

Install MariaDB

yum install mariadb* -y

vim /etc/my.cnf

Add the settings that enable the slow query log:

slow_query_log = ON

slow_query_log_file = /var/log/mariadb/slow.log

long_query_time = 1

Start the database: systemctl start mariadb

Run a slow query:

    [root@elk config]# mysql
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MariaDB connection id is 2
    Server version: 5.5.60-MariaDB MariaDB Server

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MariaDB [(none)]> select sleep(2);
    +----------+
    | sleep(2) |
    +----------+
    |        0 |
    +----------+
    1 row in set (2.00 sec)

Check the slow log:

    [root@elk logstash]# tailf /var/log/mariadb/slow.log
    /usr/libexec/mysqld, Version: 5.5.60-MariaDB (MariaDB Server). started with:
    Tcp port: 0  Unix socket: /var/lib/mysql/mysql.sock
    Time                 Id Command    Argument
    # Time: 190404 17:11:50
    # User@Host: root[root] @ localhost []
    # Thread_id: 2  Schema:   QC_hit: No
    # Query_time: 2.002019  Lock_time: 0.000000  Rows_sent: 1  Rows_examined: 0
    SET timestamp=1554369110;
    select sleep(2);

Adding the MySQL log to Logstash
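Before wiring the slow log into Logstash, it can help to confirm from the shell that entries are being written in the expected format. The following is a rough sketch that assumes the `# Query_time:` line layout shown in the slow log output in this post; `slow_query_times` and `count_slower_than` are hypothetical helper names.

```shell
# slow_query_times FILE
# Prints the Query_time value (in seconds) of every slow-log entry.
slow_query_times() {
  awk '/^# Query_time:/ { print $3 }' "$1"
}

# count_slower_than FILE SECONDS
# Counts the entries whose Query_time is greater than SECONDS.
count_slower_than() {
  awk -v limit="$2" \
    '/^# Query_time:/ && ($3 + 0) > (limit + 0) { n++ } END { print n + 0 }' "$1"
}

# Usage on the server (not executed here):
#   slow_query_times /var/log/mariadb/slow.log
#   count_slower_than /var/log/mariadb/slow.log 1
```

If the first command prints nothing after a `select sleep(2);`, the slow query log is probably not enabled or is written to a different file.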

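Create a configuration directory named test under config and move all the .conf files into it.

The files in the test directory must all be of the same kind; they cannot be mixed with Beats-style Logstash configurations.

At startup, point Logstash at the directory to load multiple config files, for example: ./bin/logstash -f config/test

Create a new config file, mysqllog.conf. Note that the grok pattern spans several lines of a slow-log entry and expects a Rows_affected line, which the sample slow log shown earlier does not contain, so adjust it to the format your server actually writes.

    input {
      file {
        path => ["/var/log/mariadb/slow.log"]
        type => "mysqllog"
      }
    }
    filter {
      grok {
        match => [ "message", "# User@Host:\s+%{WORD:user1}\[%{WORD:user2}\]\s+@\s+\[(?:%{IP:clientip})?\]\s+#\s+Thread_id:\s+%{NUMBER:thread_id:int}\s+Schema:\s+%{WORD:schema}\s+QC_hit:\s+%{WORD:qc_hit}\s+#\s+Query_time:\s+%{NUMBER:query_time:float}\s+Lock_time:\s+%{NUMBER:lock_time:float}\s+Rows_sent:\s+%{NUMBER:rows_sent:int}\s+Rows_examined:\s+%{NUMBER:rows_examined:int}\s+#\s+Rows_affected:\s+%{NUMBER:rows_affected:int}\s+SET\s+timestamp=%{NUMBER:timestamp};\s+(?<query>(?<action>\w+)\s+.*);"]
      }
    }
    output {
      elasticsearch {
        hosts => ["192.168.0.153:9200"]
        index => "mysqllog-%{+YYYY.MM.dd}"
      }
      stdout { codec => rubydebug }
    }

Start Logstash with ./bin/logstash -f config/test (with multiple config files, specify the directory at startup).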
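When `-f` points at a directory, Logstash concatenates the files it finds there in lexicographical order and parses the result as one configuration. The sketch below approximates that merge with plain shell so the combined file can be inspected before starting Logstash. `preview_merged_config` is a hypothetical helper, and it only imitates the ordering; it does not validate the configuration.

```shell
# preview_merged_config DIR
# Prints every regular file in DIR in byte-wise lexicographical order,
# each preceded by a comment line naming the file.
# Runs in a subshell so the LC_ALL change does not leak to the caller.
# (Illustrative helper; it only approximates how Logstash merges files.)
preview_merged_config() (
  LC_ALL=C
  for f in "$1"/*; do
    [ -f "$f" ] || continue
    printf '# file: %s\n' "${f##*/}"
    cat "$f"
  done
)

# Usage from the Logstash directory (not executed here):
#   preview_merged_config config/test
```

Because the files end up in a single pipeline, every event passes through every filter and output unless the sections are guarded with conditionals such as `if [type] == "mysqllog"`.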

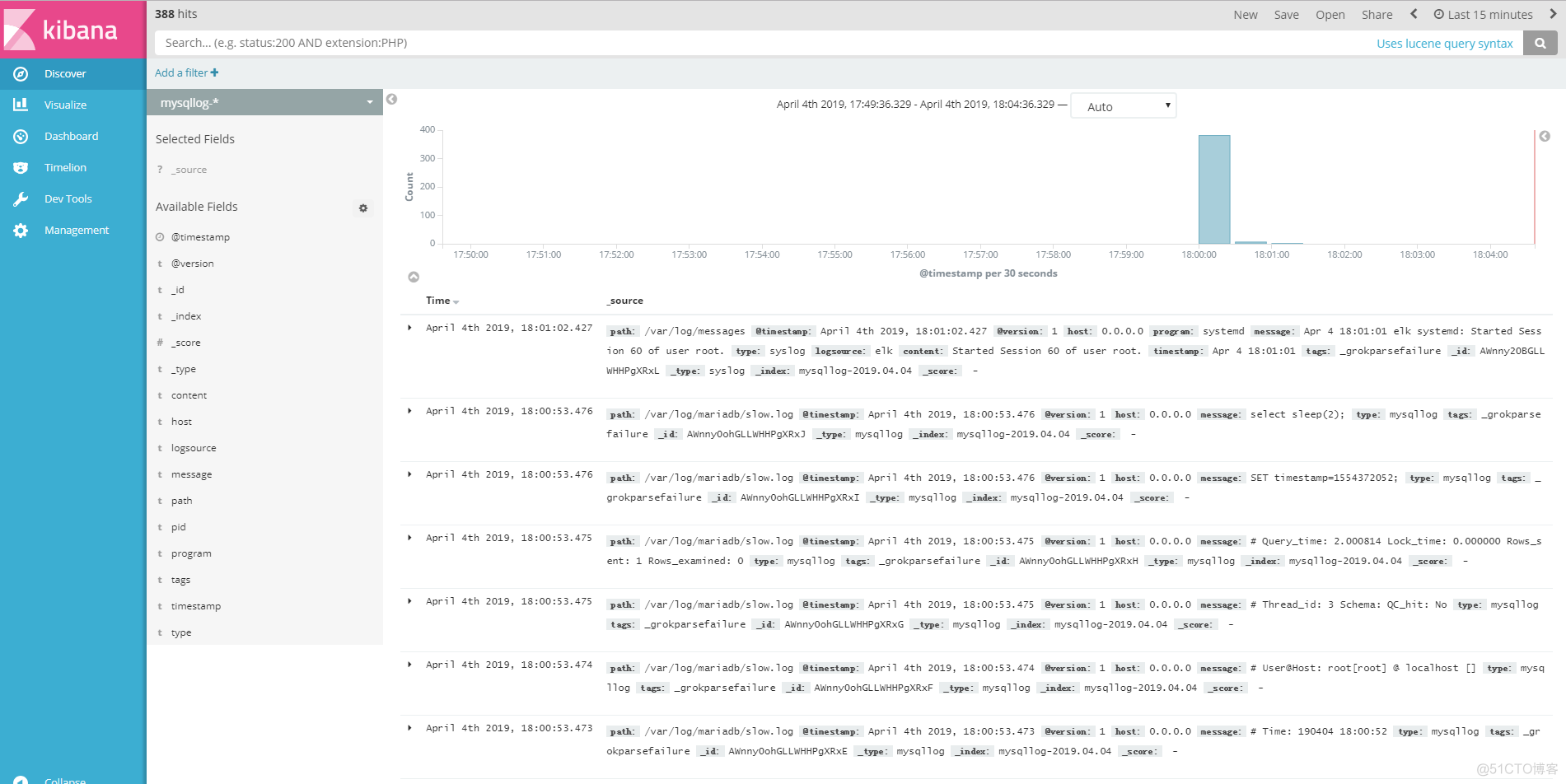

After startup, go back to the Kibana page, create the mysqllog index pattern, and view the slow-query logs.

Common deployment errors and fixes

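1. Elasticsearch fails to start with the error: can not run elasticsearch as root

Fix: create a dedicated ES account and change the owner and group of the Elasticsearch directories and files to that account.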

2. Startup error:

    ERROR: bootstrap checks failed
    system call filters failed to install; check the logs and fix your configuration or disable system call filters at your own risk

Fix: set bootstrap.system_call_filter to false in elasticsearch.yml. Place it below the Memory section:

    bootstrap.memory_lock: false
    bootstrap.system_call_filter: false

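3. If Elasticsearch is reachable only from the local machine after startup, try changing network.host in elasticsearch.yml (watch the YAML formatting: keep the indentation of uncommented lines consistent and put a space after the colon):

    network.host: 0.0.0.0

The default port is 9200. Note: either disable the firewall or open port 9200.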

4. Startup error:

    ERROR: bootstrap checks failed
    max file descriptors [4096] for elasticsearch process likely too low, increase to at least [65536]
    max number of threads [1024] for user [lishang] likely too low, increase to at least [2048]

Fix: switch to the root user and edit limits.conf:

vi /etc/security/limits.conf

Add the following:

    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 2048
    * hard nproc 4096

5. max number of threads [1024] for user [lish] likely too low, increase to at least [2048]

Fix: switch to the root user and edit the configuration file in the limits.d directory:

vi /etc/security/limits.d/90-nproc.conf

Change the line

    * soft nproc 1024

to

    * soft nproc 2048

6. max virtual memory areas vm.max_map_count [65530] likely too low, increase to at least [262144]

Fix: switch to the root user and edit sysctl.conf:

vi /etc/sysctl.conf

Add the following setting:

    vm.max_map_count=655360

Then run:

sysctl -p

After that, restart Elasticsearch; it should now start successfully.

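7. Loading multiple configuration files

When starting Logstash, adding -f /you_path_to_config_file loads a configuration file. To load several configuration files, use -f /you_path_to_config_directory instead. In short, pass a directory after -f.

Note: do not append * to the directory, otherwise only one file is read. When reading log files, however, * matches everything: sys.log* matches every log file whose name starts with sys.log, such as sys.log1 and sys.log2.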

Example:

    # Suppose /home/husen/config/ contains these five files:
    #   in1.conf, in2.conf, filter1.conf, filter2.conf, out.conf
    # Start Logstash with:
    /logstash-5.5.2/bin/logstash -f /home/husen/config
    # Logstash automatically loads all five files and merges them into a single configuration.

8. Example of reading multiple files in one configuration

    input {
      stdin { }
      file {
        path => "D:\ELK\logstash_6.2.3\bin\conf\data_for_test\accounts.json"
        start_position => "beginning"
        type => "accounts"
      }
      file {
        path => "D:\ELK\logstash_6.2.3\bin\conf\data_for_test\logs.jsonl"
        start_position => "beginning"
        type => "logs"
      }
      file {
        path => "D:\ELK\logstash_6.2.3\bin\conf\data_for_test\shakespeare.json"
        start_position => "beginning"
        type => "shakespeare"
      }
    }
    filter {
      json {
        source => "message"            # parse the message field as JSON
        target => "doc"                # store the parsed result under doc
        remove_field => ["message"]    # drop the original message field
      }
    }
    output {
      if [type] == "accounts" {
        elasticsearch {
          hosts => "localhost:9200"
          index => "blog_001"
          document_type => "accounts"
        }
      }
      if [type] == "logs" {
        elasticsearch {
          hosts => "localhost:9200"
          index => "blog_002"
          document_type => "logs"
        }
      }
      if [type] == "shakespeare" {
        elasticsearch {
          hosts => "localhost:9200"
          index => "blog_003"
          document_type => "shakespeare"
        }
      }
      stdout { codec => rubydebug }
    }