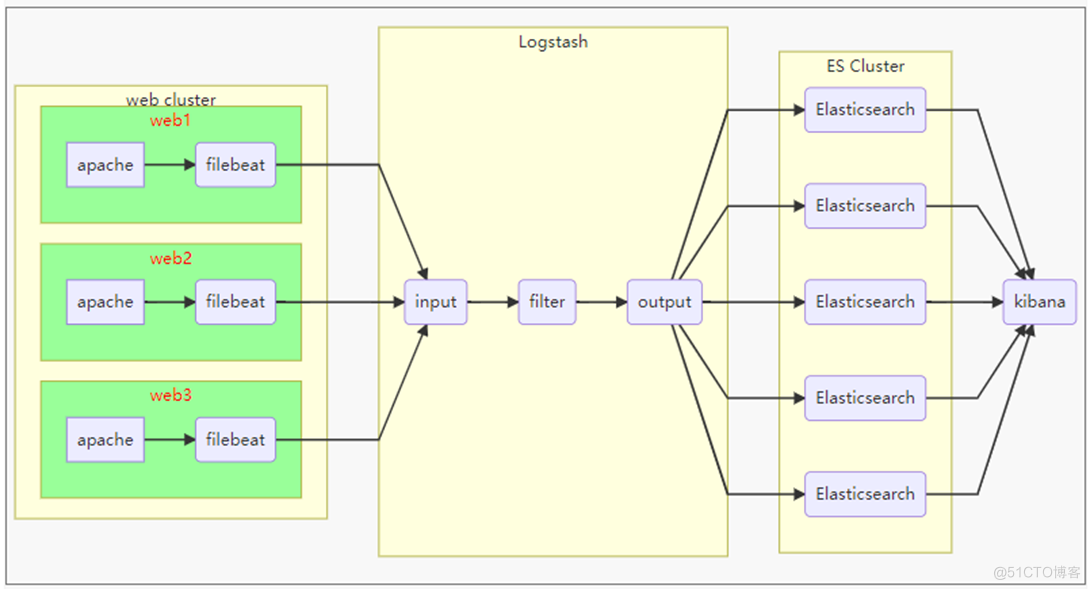

Architecture diagram

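I. Environment preparation

1. Host list

| Hostname | IP address | Specs |
| --- | --- | --- |
| es-0001 | 192.168.1.41 | 1 CPU, 1 GB RAM, 10 GB disk |
| es-0002 | 192.168.1.42 | 1 CPU, 1 GB RAM, 10 GB disk |
| es-0003 | 192.168.1.43 | 1 CPU, 1 GB RAM, 10 GB disk |
| es-0004 | 192.168.1.44 | 1 CPU, 1 GB RAM, 10 GB disk |
| es-0005 | 192.168.1.45 | 1 CPU, 1 GB RAM, 10 GB disk |
| kibana | 192.168.1.46 | 1 CPU, 1 GB RAM, 10 GB disk |
| logstash | 192.168.1.47 | 2 CPUs, 2 GB RAM, 10 GB disk |
| apache | 192.168.1.48 | 1 CPU, 1 GB RAM, 10 GB disk |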

2. Software list

- elasticsearch-2.3.4.rpm

- logstash-2.3.4-1.noarch.rpm

- kibana-4.5.2-1.x86_64.rpm

- filebeat-1.2.3-x86_64.rpm

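3. Plugin list:

- [x] Elasticsearch plugins:

| Package | Description |
| --- | --- |
| bigdesk-master.zip | Monitoring tool for the ES cluster |
| elasticsearch-kopf-master.zip | Management tool for Elasticsearch that provides an API for operating on the ES cluster |
| elasticsearch-head-master.zip | Displays the topology of the ES cluster and supports index-level and node-level operations |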

4. Huawei Cloud jump host

Configure the yum repository:

[root@ecs-proxy ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.myhuaweicloud.com/repo/CentOS-Base-7.repo

[root@ecs-proxy ~]# yum clean all

[root@ecs-proxy ~]# yum makecache

[root@ecs-proxy ~]# yum install -y net-tools lftp rsync psmisc vim-enhanced tree vsftpd bash-completion createrepo lrzsz iproute

[root@ecs-proxy ~]# mkdir /var/ftp/localrepo

[root@ecs-proxy ~]# cd /var/ftp/localrepo

[root@ecs-proxy ~]# createrepo .

[root@ecs-proxy ~]# createrepo --update .    # refresh the repository metadata

[root@ecs-proxy ~]# systemctl enable --now vsftpd

[root@ecs-proxy ~]# cp -a elk /var/ftp/localrepo/elk

[root@ecs-proxy elk]# cd /var/ftp/localrepo/

[root@ecs-proxy localrepo]# createrepo --update .

Tune system services

[root@ecs-proxy ~]# yum remove -y postfix firewalld-*

[root@ecs-proxy ~]# yum install chrony

[root@ecs-proxy ~]# vim /etc/chrony.conf

# Comment out the lines that start with "server", then add the following line

server ntp.myhuaweicloud.com minpoll 4 maxpoll 10 iburst
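The same edit can be made without opening an editor. The following is a minimal sketch, assuming GNU sed; the function name `configure_chrony` and the scratch file contents are illustrative and not part of the original procedure. It runs against a temporary file, so trying it changes nothing on the system.

```shell
# Sketch: comment out existing "server" lines and add the Huawei Cloud NTP server.
configure_chrony() {
    local conf="$1"
    # Comment out every active "server ..." line except the one we want to keep.
    sed -i '/^server ntp\.myhuaweicloud\.com/!s/^server /# server /' "$conf"
    # Append the NTP server only if it is not already configured.
    grep -q '^server ntp\.myhuaweicloud\.com' "$conf" ||
        echo 'server ntp.myhuaweicloud.com minpoll 4 maxpoll 10 iburst' >> "$conf"
}

# Demo on a scratch file (the sample lines below are illustrative, not a real chrony.conf).
demo_conf="$(mktemp)"
printf '%s\n' \
    'server 0.centos.pool.ntp.org iburst' \
    'server 1.centos.pool.ntp.org iburst' \
    'driftfile /var/lib/chrony/drift' > "$demo_conf"
configure_chrony "$demo_conf"
cat "$demo_conf"
```

On a real host the function would be called as `configure_chrony /etc/chrony.conf`; running it a second time leaves the file unchanged.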

[root@ecs-proxy ~]# systemctl enable --now chronyd

[root@ecs-proxy ~]# chronyc sources -v    # verify the result: a source marked ^* means synchronization succeeded

[root@ecs-proxy ~]# vim /etc/cloud/cloud.cfg

# manage_etc_hosts: localhost    (comment out this line)

[root@ecs-proxy ~]# reboot

Install and configure the Ansible management host

[root@ecs-proxy ~]# cd /var/ftp/localrepo

[root@ecs-proxy ~]# createrepo --update .

[root@ecs-proxy ~]# vim /etc/yum.repos.d/local.repo

[local_repo]

name=CentOS-$releasever - Localrepo

baseurl=ftp://192.168.1.252/localrepo

enabled=1

gpgcheck=0
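The repo file can also be written from a script, which is convenient when the same definition is needed on several hosts. This is a sketch under assumptions: the helper name `write_local_repo` is hypothetical, and the demo writes to a temporary file rather than `/etc/yum.repos.d/local.repo`.

```shell
# Sketch: write the local repo definition with a here-document.
write_local_repo() {
    local repo_file="$1" repo_host="$2"
    # The unquoted EOF lets the shell fill in ${repo_host} now, while the
    # escaped \$releasever is written literally and expanded later by yum.
    cat > "$repo_file" <<EOF
[local_repo]
name=CentOS-\$releasever - Localrepo
baseurl=ftp://${repo_host}/localrepo
enabled=1
gpgcheck=0
EOF
}

# Demo against a scratch file; 192.168.1.252 is the repository host used in the text.
demo_repo="$(mktemp)"
write_local_repo "$demo_repo" 192.168.1.252
cat "$demo_repo"
```

Escaping `$releasever` matters here: left unescaped, the shell would replace it with an empty string before yum ever sees it.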

[root@ecs-proxy ~]# yum makecache

[root@ecs-proxy ~]# yum install -y ansible

Download the key pair from the Huawei Cloud web console and upload it to the jump host:

[root@ecs-proxy ~]# mv luck.pem /root/.ssh/id_rsa

[root@ecs-proxy ~]# chmod 0400 /root/.ssh/id_rsa

5. Huawei Cloud template host (image) configuration

[root@ecs-host ~]# rm -rf /etc/yum.repos.d/*.repo

[root@ecs-host ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.myhuaweicloud.com/repo/CentOS-Base-7.repo

[root@ecs-host ~]# vim /etc/yum.repos.d/local.repo

[local_repo]

name=CentOS-$releasever - Localrepo

baseurl=ftp://192.168.1.252/localrepo

enabled=1

gpgcheck=0

[root@ecs-host ~]# yum clean all

[root@ecs-host ~]# yum makecache

[root@ecs-host ~]# yum repolist

[root@ecs-host ~]# yum install -y net-tools lftp rsync psmisc vim-enhanced tree lrzsz bash-completion iproute

Tune system services

[root@ecs-host ~]# yum remove -y postfix at audit tuned kexec-tools firewalld-*

[root@ecs-host ~]# yum install chrony

[root@ecs-host ~]# vim /etc/chrony.conf

# Comment out the lines that start with "server", then add the following line

server ntp.myhuaweicloud.com minpoll 4 maxpoll 10 iburst

[root@ecs-host ~]# systemctl enable --now chronyd

[root@ecs-host ~]# chronyc sources -v

# Verify the result: a source marked ^* means synchronization succeeded

[root@ecs-host ~]# vim /etc/cloud/cloud.cfg

# manage_etc_hosts: localhost    (comment out this line)

[root@ecs-host ~]# yum clean all

[root@ecs-host ~]# poweroff

6. Hostname resolution (every host must be able to ping the others by name); /etc/hosts entries:

192.168.1.41 es-0001

192.168.1.42 es-0002

192.168.1.43 es-0003

192.168.1.44 es-0004

192.168.1.45 es-0005

192.168.1.46 kibana

192.168.1.47 logstash

192.168.1.48 apache
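Since the entries above follow a regular pattern, they can be generated and appended by a script. The sketch below is only an illustration: the function names `gen_hosts` and `add_missing_hosts` are hypothetical, and the demo works on a temporary file instead of `/etc/hosts`.

```shell
# Sketch: print the host entries listed above.
gen_hosts() {
    local i
    for i in 1 2 3 4 5; do
        printf '192.168.1.4%d es-000%d\n' "$i" "$i"
    done
    printf '%s\n' '192.168.1.46 kibana' '192.168.1.47 logstash' '192.168.1.48 apache'
}

# Append only the entries that are not yet present in the given hosts file.
add_missing_hosts() {
    local hosts_file="$1" line
    gen_hosts | while read -r line; do
        grep -qxF "$line" "$hosts_file" || echo "$line" >> "$hosts_file"
    done
}

# Demo on a scratch file; the second call adds nothing because all entries exist.
demo_hosts="$(mktemp)"
echo '127.0.0.1 localhost' > "$demo_hosts"
add_missing_hosts "$demo_hosts"
add_missing_hosts "$demo_hosts"
cat "$demo_hosts"
```

On a real host the call would be `add_missing_hosts /etc/hosts`, after which something like `ping -c 1 es-0003` can confirm that names resolve.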

II. Deploy Elasticsearch (on all ES nodes)

A. Deploy the Elasticsearch service

Install the packages

# yum install -y java-1.8.0-openjdk elasticsearch    # package version: elasticsearch-2.3.4.rpm

# java -version

openjdk version "1.8.0_161"

OpenJDK Runtime Environment (build 1.8.0_161-b14)

OpenJDK 64-Bit Server VM (build 25.161-b14, mixed mode)

# sestatus    # check the SELinux status

Edit the configuration

# vim /etc/elasticsearch/elasticsearch.yml

17| cluster.name: my-ES    # cluster name

23| node.name: es-0001    # name of the current host

55| network.host: 0.0.0.0    # listen on all addresses (or use this host's IP)

68| discovery.zen.ping.unicast.hosts: ["es-0001", "es-0002", "es-0003"]    # cluster members used for discovery (need not list every node)

Alternatively, with sed:

# sed -i '/cluster.name/s/# cluster.name/cluster.name/' /etc/elasticsearch/elasticsearch.yml    # uncomment the line

# sed -i '/cluster.name/s/my-application/my-ES/' /etc/elasticsearch/elasticsearch.yml

# sed -i '/node.name/s/# node.name: node-1/node.name: es-0002/' /etc/elasticsearch/elasticsearch.yml

# sed -i '/network.host/s/# network.host: 192.168.0.1/network.host: 0.0.0.0/' /etc/elasticsearch/elasticsearch.yml

# sed -i '/discovery.zen.ping/a discovery.zen.ping.unicast.hosts: ["es-0001", "es-0002"]' /etc/elasticsearch/elasticsearch.yml    # append the setting below the matching line
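Because every node needs the same edits with a different node name, the sed commands can be folded into one function. This is a sketch, assuming GNU sed and a stock configuration in which the four settings are still commented out; the function name `configure_es` and the four-line sample file are illustrative. Unlike the commands above, it rewrites the commented discovery line in place rather than appending a new one.

```shell
# Sketch: apply the elasticsearch.yml settings for one node.
configure_es() {
    local conf="$1" node="$2"
    sed -i \
        -e 's/^# *cluster\.name:.*/cluster.name: my-ES/' \
        -e "s/^# *node\.name:.*/node.name: ${node}/" \
        -e 's/^# *network\.host:.*/network.host: 0.0.0.0/' \
        -e 's/^# *discovery\.zen\.ping\.unicast\.hosts:.*/discovery.zen.ping.unicast.hosts: ["es-0001", "es-0002"]/' \
        "$conf"
}

# Demo on a scratch file holding only the four relevant lines (illustrative excerpt).
demo_yml="$(mktemp)"
printf '%s\n' \
    '# cluster.name: my-application' \
    '# node.name: node-1' \
    '# network.host: 192.168.0.1' \
    '# discovery.zen.ping.unicast.hosts: ["host1", "host2"]' > "$demo_yml"
configure_es "$demo_yml" es-0002
cat "$demo_yml"
```

On each node the call would look like `configure_es /etc/elasticsearch/elasticsearch.yml "$(hostname)"`, provided the hostname matches the names in the host list.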

Start the service

# systemctl start elasticsearch.service

# systemctl enable elasticsearch.service

Verify the service

# ss -ntulp | grep 9200

# ss -ntulp | grep 9300

# curl http://es-0001:9200/

# curl 'http://192.168.1.41:9200/_cluster/health?pretty'

{

"cluster_name" : "my-ES", // cluster name

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 3, // number of nodes in the cluster

"number_of_data_nodes" : 3, // number of data nodes in the cluster

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

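The _cluster/health endpoint reports the health of the cluster; a status of green means that every primary and replica shard is allocated.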
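When scripting the deployment, it helps to wait until the cluster actually reports green before continuing. The following is a rough sketch; the function names `health_status` and `wait_for_green`, the retry count, and the sleep interval are all assumptions chosen for illustration.

```shell
# Extract the "status" value from a _cluster/health response read on stdin.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Poll the health endpoint until it reports green, or give up after N attempts.
wait_for_green() {
    local url="$1" tries="${2:-30}" status
    while [ "$tries" -gt 0 ]; do
        status="$(curl -s "${url}/_cluster/health" | health_status)"
        [ "$status" = "green" ] && return 0
        tries=$((tries - 1))
        sleep 2
    done
    return 1
}

# Offline check of the parser against a shortened sample response.
echo '{ "cluster_name" : "my-ES", "status" : "green", "timed_out" : false }' | health_status
```

A call such as `wait_for_green http://es-0001:9200` would then block until the cluster is healthy. The health API also accepts a `wait_for_status` query parameter, which may be a simpler choice when a single blocking request is enough.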

B. Plugin deployment and usage

A plugin can only be used on the node where it is installed.

# /usr/share/elasticsearch/bin/plugin install file:///root/file/bigdesk-master.zip

# /usr/share/elasticsearch/bin/plugin install file:///root/file/elasticsearch-kopf-master.zip

# /usr/share/elasticsearch/bin/plugin install file:///root/file/elasticsearch-head-master.zip

# /usr/share/elasticsearch/bin/plugin list    # list the installed plugins

Installed plugins in /usr/share/elasticsearch/plugins:

- kopf

- bigdesk

- head

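Access the plugins

1. In Huawei Cloud, bind an elastic public IP to the es-0005 node.

2. Open http://elastic-public-IP:9200/_plugin/plugin-name, where the plugin name is one of bigdesk, head, or kopf:

http://elastic-public-IP:9200/_plugin/kopf

http://elastic-public-IP:9200/_plugin/head

http://elastic-public-IP:9200/_plugin/bigdesk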

http://elastic-public-IP/info.php (request method)

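Basic Elasticsearch operations

Querying with the _cat API

[root@es-0001 ~]# curl -XGET http://es-0001:9200/_cat/master    # query a specific item

[root@es-0001 ~]# curl -XGET 'http://es-0001:9200/_cat/master?v'    # ?v shows verbose output with column headers

[root@es-0001 ~]# curl -XGET 'http://es-0001:9200/_cat/master?help'    # ?help shows help information

Create an index

Specify the index name, the number of shards, and the number of replicas.

Indexes are created with the PUT method; once created, verify the result in the head plugin.

'{

"settings":{ // settings for the new index

"index":{ // index-level settings

"number_of_shards": 5, // number of shards

"number_of_replicas": 1 // number of replicas

}

}

}'

This is roughly equivalent to creating a database.

# curl -XGET 'http://es-0001:9200/_cat/indices?v'    # show index details

In a browser, open http://122.9.96.62:9200/_plugin/head/ and observe the change: the darker blocks are primary shards and the lighter ones are replicas.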
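The settings body above is shown without the request that sends it. A sketch of how the PUT request might look is given below; the function names `create_index` and `list_indices` and the `ES_URL` variable are hypothetical. The inline annotations are dropped from the body because JSON has no comment syntax.

```shell
# Base URL of any node in the cluster (assumed; override via the environment).
ES_URL="${ES_URL:-http://es-0001:9200}"

# Settings body from the text, without the annotations.
INDEX_SETTINGS='{
  "settings": {
    "index": {
      "number_of_shards": 5,
      "number_of_replicas": 1
    }
  }
}'

# Create an index with the settings above; the index name is the first argument.
create_index() {
    curl -s -XPUT "${ES_URL}/$1" -d "$INDEX_SETTINGS"
}

# List all indices with column headers.
list_indices() {
    curl -s -XGET "${ES_URL}/_cat/indices?v"
}
```

With the cluster running, `create_index tedu` followed by `list_indices` should show the new index; `tedu` is the index name that the delete example later in this section uses.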

Add data

'{

"职业": "诗人",

"名字": "李白",

"称号": "诗仙",

"年代": "唐"

}'

Query data

Update data

'{

"doc": {

"年代": "公元701"

}

}'
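The request lines for adding, querying, and updating a document are not shown above, so the following sketch fills them in under explicit assumptions: the document path `tedu/teacher/1` (index, type, and ID) is hypothetical, as are the function names. The endpoints follow the document API of Elasticsearch 2.x, where a partial update is sent to `_update` with a `doc` object.

```shell
# Base URL of any node in the cluster (assumed; override via the environment).
ES_URL="${ES_URL:-http://es-0001:9200}"
# Hypothetical index/type/id path used only for illustration.
DOC_PATH="tedu/teacher/1"

# Bodies taken from the text.
NEW_DOC='{"职业": "诗人", "名字": "李白", "称号": "诗仙", "年代": "唐"}'
PATCH='{"doc": {"年代": "公元701"}}'

# Add (or overwrite) the document.
put_doc()    { curl -s -XPUT  "${ES_URL}/${DOC_PATH}" -d "$1"; }
# Fetch the document, pretty-printed.
get_doc()    { curl -s -XGET  "${ES_URL}/${DOC_PATH}?pretty"; }
# Partially update the document with the fields under "doc".
update_doc() { curl -s -XPOST "${ES_URL}/${DOC_PATH}/_update" -d "$1"; }
```

A typical sequence would be `put_doc "$NEW_DOC"`, `get_doc`, `update_doc "$PATCH"`, and `get_doc` again to see the changed field.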

Delete data

[root@es-0001 ~]# curl -XDELETE http://es-0001:9200/tedu    # delete the index

III. Deploy Kibana

Upload kibana-4.5.2-1.x86_64.rpm and install it

# vim /opt/kibana/config/kibana.yml    # edit the configuration file

2| server.port: 5601    # port

5| server.host: "0.0.0.0"

15| elasticsearch.url: "http://es-0001:9200"    # Elasticsearch cluster address

23| kibana.index: ".kibana"

26| kibana.defaultAppId: "discover"    # Kibana default landing page

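Start and verify the service

# systemctl enable --now kibana

# ss -ntulp | grep 5601

Access test

http://elastic-public-IP:5601/status

Import log data

Copy public/elk/logs.jsonl.gz from the cloud drive to the jump host, then decompress it

# gunzip logs.jsonl.gz

# curl -XPOST http://192.168.1.41:9200/_bulk --data-binary @logs.jsonl    # may take a few minutes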
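The bulk API returns HTTP 200 even when individual items fail, so it is worth checking the `errors` field of the response. The sketch below shows one way to do that; the function names `bulk_ok` and `import_logs` are hypothetical, and the sample response is a shortened illustration rather than real output.

```shell
# Succeeds only if a _bulk response read on stdin reports no item-level errors.
bulk_ok() {
    grep -q '"errors"[[:space:]]*:[[:space:]]*false'
}

# Decompress the archive (keeping the original) and import it, checking the response.
import_logs() {
    local es_url="$1" archive="$2" data
    data="${archive%.gz}"
    gunzip -c "$archive" > "$data"
    curl -s -XPOST "${es_url}/_bulk" --data-binary "@${data}" | bulk_ok
}

# Offline check of the response test against a shortened sample.
echo '{"took":30,"errors":false,"items":[]}' | bulk_ok && echo "import ok"
```

On the jump host the call would be `import_logs http://192.168.1.41:9200 logs.jsonl.gz`, whose exit status indicates whether every item was indexed.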

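Once the import succeeds:

Configure an index pattern in Kibana using a wildcard.

Adjust the time range to match the timestamps in the logs.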

IV. Logstash

Install Logstash

# yum install -y java-1.8.0-openjdk logstash

# vim /etc/logstash/logstash.conf    # create the configuration file manually

input {

stdin {}

}

filter{ }

output{

stdout{}

}

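stdin: standard input (file descriptor 0)

stdout: standard output (file descriptor 1)

stderr: standard error (file descriptor 2)

# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf    # start Logstash with the configuration file

Plugins and debug output format

Test with a JSON-formatted string: {"a":"1", "b":"2","c":"3"}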

input {

stdin { codec => "json" }

}

filter{ }

output{

stdout{ codec => "rubydebug" }

}

# /opt/logstash/bin/logstash -f /etc/logstash/logstash.conf