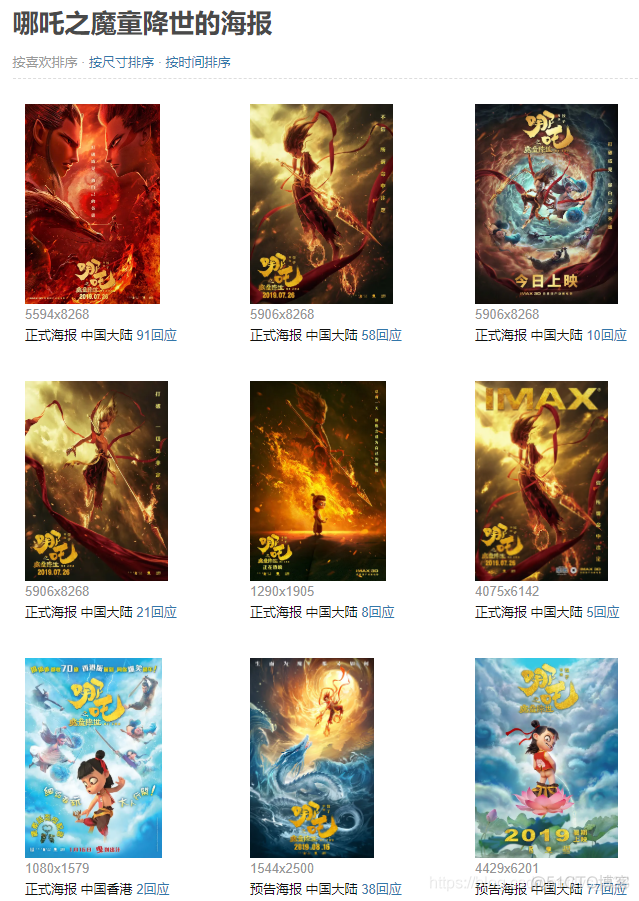

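This article uses the posters of the film Ne Zha (哪吒之魔童降世) as an example of image scraping.

Reference page: official posters of Ne Zha (哪吒之魔童降世官方海报)

Crawler logic: [collect paginated page URLs] - [collect data] - [save images]

The previous two articles showed that the two crawler logics each have strengths and weaknesses. Logic (1) yields fairly detailed information, but it has to obtain the sub-page URLs from the main URL before scraping the data, which is time-consuming. Logic (2) scrapes only what is available on the first URL, which is fast but yields less information. This time the image information does not require visiting sub-page URLs; it can be scraped directly from the listing pages. The difference is that the images also have to be stored, so on top of logic (2) a third function is needed, as follows.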

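Function 1: get_url(n) → [collect paginated page URLs]

n: number of pages

Result: a list of paginated page URLs

Function 2: get_pic(ui,d_h,d_c) → [collect data]

ui: URL of the page that holds the data

d_h: user-agent information

d_c: cookies information

Result: a list of picture dicts, each containing the picture id and the picture src

Function 3: save(srci) → [save images]

srci: picture information containing the picture src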

明确采集内容

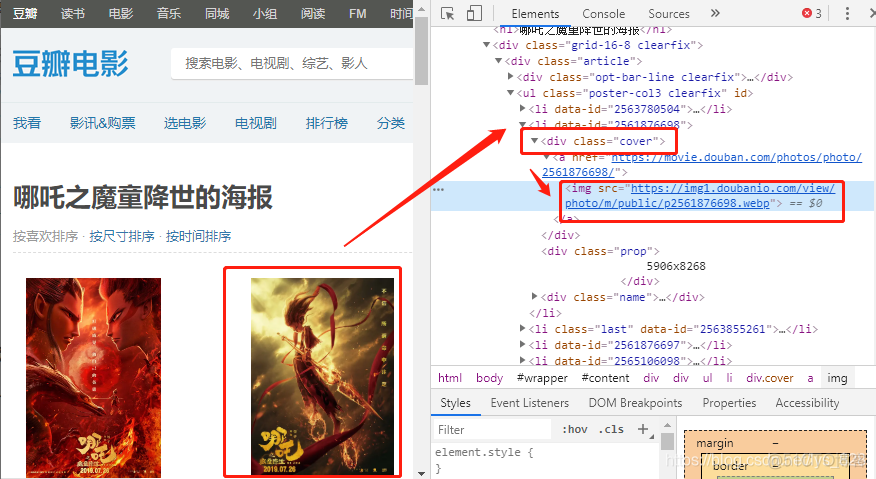

1)img标签中的src属性信息

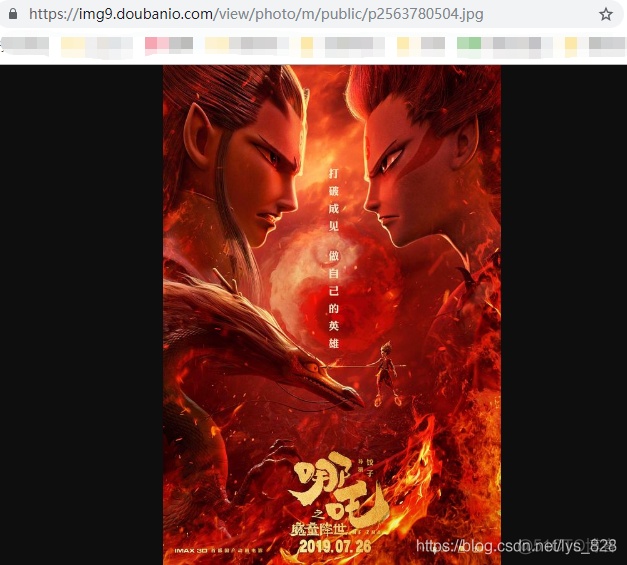

对应的网址打开后,内容如下

2)要求采集150条数据(也是全部的海报数据)

5页【分页网页url采集】- 每页30条数据

3)结果保存一个文件夹,注意批量命名图片

Finding the URL pattern

The URLs of pages 2 to 4 of the Ne Zha posters are:

u2 = https://movie.douban.com/subject/26794435/photos?type=R&start=30&sortby=like&size=a&subtype=a

u3 = https://movie.douban.com/subject/26794435/photos?type=R&start=60&sortby=like&size=a&subtype=a

u4 = https://movie.douban.com/subject/26794435/photos?type=R&start=90&sortby=like&size=a&subtype=a

......

From the above, only the value after "start=" changes between pages, and each page holds 30 posters, so the URLs of all 5 pages can be constructed. By convention the first page starts from 0.

    urllst = []
    for i in range(5):
        ui = "https://movie.douban.com/subject/26794435/photos?type=R&start={}&sortby=like&size=a&subtype=a".format(i*30)
        urllst.append(ui)

Importing the libraries and wrapping the first function

    import requests
    from bs4 import BeautifulSoup

    def get_url(n):
        '''
        [Paginated page URL collection] function
        n: number of pages
        Result: a list of paginated page URLs
        '''
        lst = []
        for i in range(n):
            ui = "https://movie.douban.com/subject/26794435/photos?type=R&start={}&sortby=like&size=a&subtype=a".format(i*30)
            #print(ui)
            lst.append(ui)
        return lst

    print(get_url(5))

The output is:

    ['https://movie.douban.com/subject/26794435/photos?type=R&start=0&sortby=like&size=a&subtype=a',
     'https://movie.douban.com/subject/26794435/photos?type=R&start=30&sortby=like&size=a&subtype=a',
     'https://movie.douban.com/subject/26794435/photos?type=R&start=60&sortby=like&size=a&subtype=a',
     'https://movie.douban.com/subject/26794435/photos?type=R&start=90&sortby=like&size=a&subtype=a',
     'https://movie.douban.com/subject/26794435/photos?type=R&start=120&sortby=like&size=a&subtype=a']
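As a possible generalization of get_url, the sketch below derives the number of pages from the total number of posters instead of passing the page count by hand. The names build_page_urls and BASE_URL and the per_page default are illustrative assumptions, not part of the original code; the query parameters are the ones visible in the URLs above.

```python
import math
from urllib.parse import urlencode

# Photo page of the film, taken from the URLs listed in the article.
BASE_URL = "https://movie.douban.com/subject/26794435/photos"


def build_page_urls(total, per_page=30):
    """Return one URL per page needed to cover `total` posters (hypothetical helper)."""
    pages = math.ceil(total / per_page)
    urls = []
    for i in range(pages):
        # Only the `start` offset changes from page to page.
        query = urlencode({
            "type": "R",
            "start": i * per_page,
            "sortby": "like",
            "size": "a",
            "subtype": "a",
        })
        urls.append(BASE_URL + "?" + query)
    return urls


for url in build_page_urls(150):
    print(url)
```

With 150 posters and 30 per page this yields the same five URLs as get_url(5).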

Setting headers and cookies

The headers and cookies were already set up in the earlier articles and can be reused directly, as follows

    dic_heders = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'
    }

    dic_cookies = {}
    cookies = 'll="108296"; bid=b9z-Z1JF8wQ; _vwo_uuid_v2=DDF408830197B90007427EFEAB67DF985|b500ed9e7e3b5f6efec01c709b7000c3; douban-fav-remind=1; __yadk_uid=2D7qqvQghjfgVOD0jdPUlybUNa2MBZbz; gr_user_id=5943e535-83de-4105-b840-65b7a0cc92e1; dbcl2="150296873:VHh1cXhumGU"; push_noty_num=0; push_doumail_num=0; __utmv=30149280.15029; __gads=ID=dcc053bfe97d2b3c:T=1579101910:S=ALNI_Ma5JEn6w7PLu-iTttZOFRZbG4sHCw; ct=y; Hm_lvt_cfafef0aa0076ffb1a7838fd772f844d=1579102240; __utmz=81379588.1579138975.5.5.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; __utmz=30149280.1579162528.9.8.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; ck=csBn; _pk_ref.100001.3ac3=%5B%22%22%2C%22%22%2C1581081161%2C%22https%3A%2F%2Fwww.baidu.com%2Flink%3Furl%3DNq2xYeTOYsYNs1a4LeFRmxqwD_0zDOBN253fDrX-5wRdwrQqUpYGFSmifESD4TLN%26wd%3D%26eqid%3De7868ab7001090b7000000035e1fbf95%22%5D; _pk_ses.100001.3ac3=*; __utma=30149280.195590675.1570957615.1581050101.1581081161.16; __utmc=30149280; __utma=81379588.834351582.1571800818.1581050101.1581081161.12; __utmc=81379588; ap_v=0,6.0; gr_session_id_22c937bbd8ebd703f2d8e9445f7dfd03=b6a046c7-15eb-4e77-bc26-3a7af29e68b1; gr_cs1_b6a046c7-15eb-4e77-bc26-3a7af29e68b1=user_id%3A1; gr_session_id_22c937bbd8ebd703f2d8e9445f7dfd03_b6a046c7-15eb-4e77-bc26-3a7af29e68b1=true; _pk_id.100001.3ac3=6ec264aefc5132a2.1571800818.12.1581082472.1581050101.; __utmb=30149280.7.10.1581081161; __utmb=81379588.7.10.1581081161'

    cookies_lst = cookies.split("; ")
    for i in cookies_lst:
        # split only on the first "=": cookie values may themselves contain "="
        key, value = i.split("=", 1)
        dic_cookies[key] = value
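Cookie values can themselves contain "=" (the __gads entry in the string above is one such case), so splitting a pair on every "=" and keeping only the second piece would cut such values short. The following is a minimal sketch of a parser that splits on the first "=" only; the function name parse_cookie_string and the sample string are made up for illustration.

```python
def parse_cookie_string(raw):
    """Turn a browser 'Cookie' header string into a dict (hypothetical helper)."""
    cookies = {}
    for pair in raw.split("; "):
        if "=" not in pair:
            continue  # skip malformed fragments
        # maxsplit=1 keeps any "=" that belongs to the value itself
        name, value = pair.split("=", 1)
        cookies[name] = value
    return cookies


# Made-up sample; the second value contains "=" characters.
sample = "bid=abc123; token=ID=42:T=1579101910; ct=y"
print(parse_cookie_string(sample))
```

The resulting dict can be passed to requests.get through the cookies argument, in the same way as dic_cookies.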

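Requesting and parsing the page

First request the page to check whether the URLs output above can be accessed; take one of them as a trial

    urllst_1 = get_url(5)
    u1 = urllst_1[0]
    r1 = requests.get(u1, headers=dic_heders, cookies=dic_cookies)
    print(r1)

The output is:

    <Response [200]>

This shows that the URL can be accessed normally. Next, parse the page and locate the tags that hold the posters: right-click anywhere on the page in the browser and choose Inspect. All poster information sits inside a [ul] tag, and each individual poster is stored in the [div, class='cover'] under an [li] tag

So pick the tag of one poster as a trial and check that the poster id and src can be obtained correctly (the id makes it easy to name the file when saving; the src is the address of the image)

    u1 = urllst_1[0]  # take the first page
    r1 = requests.get(u1, headers=dic_heders, cookies=dic_cookies)  # send the request
    soup_1 = BeautifulSoup(r1.text, 'lxml')  # parse the page
    ul = soup_1.find("ul", class_="poster-col3 clearfix")  # the ul tag that holds all posters
    lis = ul.find_all('li')  # the li tag of each poster
    li_1 = lis[0]  # pick the first poster
    dic_pic = {}  # dict for the picture id and src
    dic_pic['pic_id'] = li_1['data-id']
    dic_pic['pic_src'] = li_1.find('img')['src']
    print(dic_pic)

The output is:

    {'pic_id': '2563780504', 'pic_src': 'https://img9.doubanio.com/view/photo/m/public/p2563780504.jpg'}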

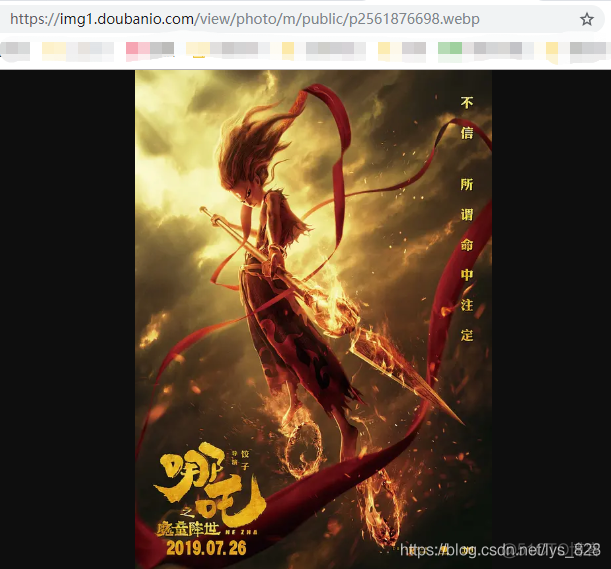

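Check that the image URL is correct: opening the src in a browser shows the same image as the first poster on the page, so the trial succeeded. The next step is to wrap this logic into function 2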
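To try the extraction logic without sending a request, the same idea can be run on a small hand-written HTML fragment. The sketch below uses the standard-library html.parser instead of BeautifulSoup, and the fragment is a made-up stand-in modeled on the structure described above (an li with a data-id attribute and an img inside it); the markup of the real page may differ.

```python
from html.parser import HTMLParser

# Hypothetical fragment; ids and image addresses are placeholders.
SAMPLE_HTML = """
<ul class="poster-col3 clearfix">
  <li data-id="1001"><div class="cover"><a href="#"><img src="https://example.com/p1001.jpg"></a></div></li>
  <li data-id="1002"><div class="cover"><a href="#"><img src="https://example.com/p1002.jpg"></a></div></li>
</ul>
"""


class PosterParser(HTMLParser):
    """Collect {'pic_id', 'pic_src'} dicts from li[data-id] elements."""

    def __init__(self):
        super().__init__()
        self.posters = []
        self._current_id = None  # data-id of the li currently being read

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li" and "data-id" in attrs:
            self._current_id = attrs["data-id"]
        elif tag == "img" and self._current_id is not None:
            # Keep only the first img found inside each li.
            self.posters.append({"pic_id": self._current_id, "pic_src": attrs.get("src")})
            self._current_id = None


def extract_posters(html):
    parser = PosterParser()
    parser.feed(html)
    return parser.posters


print(extract_posters(SAMPLE_HTML))
```

Each dict has the same two keys as dic_pic, so the result can be compared directly with what the BeautifulSoup version returns.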

Wrapping the second function

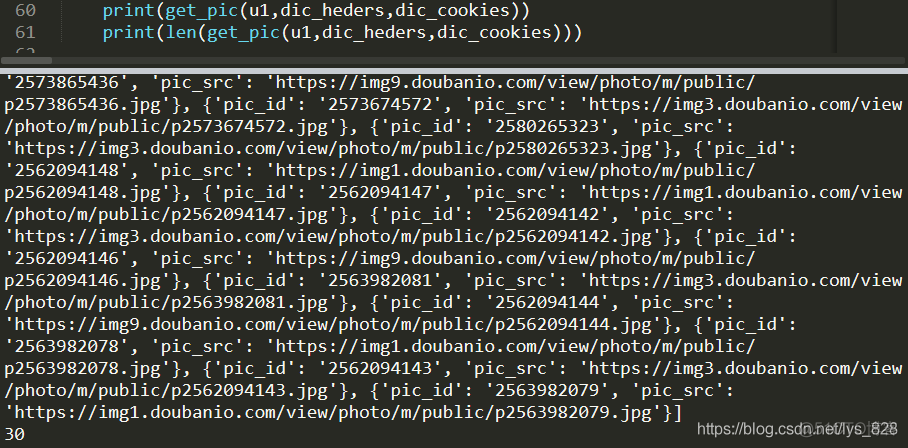

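Iterate over all [li] tags, take the id and src of each poster, and store the information for each poster in a list. The function can be verified on the first page, as follows

    def get_pic(ui, d_h, d_c):
        '''
        [Data collection]
        ui: URL of the page that holds the data
        d_h: user-agent information
        d_c: cookies information
        Result: a list of picture dicts, each containing the picture id and the picture src
        '''
        ri = requests.get(ui, headers=d_h, cookies=d_c)
        soup_i = BeautifulSoup(ri.text, 'lxml')
        ul = soup_i.find("ul", class_="poster-col3 clearfix")
        lis = ul.find_all('li')
        pic_lst = []
        for li in lis:
            dic_pic = {}
            dic_pic['pic_id'] = li['data-id']
            dic_pic['pic_src'] = li.find('img')['src']
            pic_lst.append(dic_pic)
        return pic_lst

    urllst_1 = get_url(5)
    u1 = urllst_1[0]
    pic_lst_1 = get_pic(u1, dic_heders, dic_cookies)  # request the page once and reuse the result
    print(pic_lst_1)
    print(len(pic_lst_1))

The output is: (only part of the poster information is shown; there are 30 records in total, so every poster on the first page was scraped)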

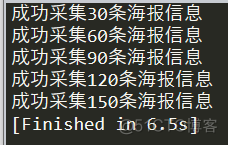

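Next, to get the poster information for all five pages, add a for loop and create two lists: one for the records that were scraped successfully and one for the pages that failed. As follows

    successed_lst = []
    error_lst = []
    for u in urllst_1:
        try:
            successed_lst.extend(get_pic(u, dic_heders, dic_cookies))
            print("Collected {} poster records so far".format(len(successed_lst)))
        except Exception:
            error_lst.append(u)
            print('Failed to collect poster information, URL:', u)

Output: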
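The loop in this step records failed pages in error_lst but does not revisit them. One possible extension, sketched here under assumptions, is to retry each page a few times before giving up. The helper collect_with_retry, its retries and pause parameters, and the flaky_fetch stand-in are all hypothetical; the fetch function is passed in so the sketch runs without network access.

```python
import time


def collect_with_retry(urls, fetch, retries=2, pause=0.0):
    """Call fetch(url) for each URL, retrying a failed URL up to `retries` more times."""
    collected, failed = [], []
    for url in urls:
        for attempt in range(retries + 1):
            try:
                collected.extend(fetch(url))
                break  # this page succeeded, move on to the next one
            except Exception:
                if attempt == retries:
                    failed.append(url)  # out of attempts
                else:
                    time.sleep(pause)   # wait before trying again
    return collected, failed


# Stand-in for a real page request: "page-2" fails once, "page-3" always fails.
calls = {}


def flaky_fetch(url):
    calls[url] = calls.get(url, 0) + 1
    if url == "page-2" and calls[url] == 1:
        raise RuntimeError("temporary failure")
    if url == "page-3":
        raise RuntimeError("always fails")
    return [{"pic_id": url}]


print(collect_with_retry(["page-1", "page-2", "page-3"], flaky_fetch))
```

With the functions from this article, the call could look like collect_with_retry(urllst_1, lambda u: get_pic(u, dic_heders, dic_cookies), pause=1).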

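Wrapping the third function

This part saves the poster information obtained above to the local disk. As before, start with the first poster as a trial

    pic = successed_lst[0]
    img = requests.get(pic['pic_src'])
    print(img)

The output is <Response [200]> (the poster can be accessed, so the next step is to save it locally)

    with open(pic['pic_id'] + '.jpg', 'wb') as f:
        f.write(img.content)  # the with block closes the file automatically

The output is: (if no path is specified, the image is written to the current working directory, which is usually the folder that contains the code file)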

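This completes the trial of saving an image locally. Next, wrap the third function to save all the images

    def save(srci):
        '''srci: picture information containing the picture id and src'''
        img = requests.get(srci['pic_src'])
        # use the argument, not the global loop variable pic, to name the file
        with open(srci['pic_id'] + '.jpg', 'wb') as f:
            f.write(img.content)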
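The save step names every file with a fixed '.jpg' suffix. If an image address ever ended in a different extension, the suffix could instead be read from the src itself. The following is only a sketch of that idea; file_name_for is a hypothetical helper, and the second sample address is invented.

```python
import os
from urllib.parse import urlparse


def file_name_for(pic, default_ext=".jpg"):
    """Build '<pic_id><ext>' using the extension found in pic['pic_src'] (hypothetical helper)."""
    path = urlparse(pic["pic_src"]).path      # drop scheme, host and query string
    ext = os.path.splitext(path)[1] or default_ext
    return pic["pic_id"] + ext


# First sample is the record printed earlier in the article; the second is made up.
print(file_name_for({"pic_id": "2563780504",
                     "pic_src": "https://img9.doubanio.com/view/photo/m/public/p2563780504.jpg"}))
print(file_name_for({"pic_id": "1", "pic_src": "https://example.com/img/p1.webp?x=1"}))
```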

Finally, call function 3 to save the poster files

    import os

    path = os.getcwd()
    if not os.path.exists('哪吒海报'):
        os.mkdir('哪吒海报')
    os.chdir(os.path.join(path, '哪吒海报'))

    n = 1
    for pic in successed_lst:
        try:
            save(pic)
            print('Saved {} posters'.format(n))
            n += 1
        except Exception:
            print('Failed to save image, src:', pic['pic_src'])
            continue
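If the script is interrupted and run again, the saving loop downloads every poster a second time. A possible variant, sketched below under assumptions, writes into an explicit folder instead of changing the working directory and skips files that already exist. The helper save_all and the fake_download stand-in are hypothetical; the download function is passed in so the sketch runs offline.

```python
import tempfile
from pathlib import Path


def save_all(pics, download, folder):
    """Save each picture dict into `folder`; return (saved, skipped, failed_srcs)."""
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    saved, skipped, failed = 0, 0, []
    for pic in pics:
        target = folder / (pic["pic_id"] + ".jpg")
        if target.exists():
            skipped += 1  # already downloaded in an earlier run
            continue
        try:
            target.write_bytes(download(pic["pic_src"]))
            saved += 1
        except Exception:
            failed.append(pic["pic_src"])
    return saved, skipped, failed


def fake_download(src):
    """Stand-in for a real request: returns bytes, or fails for the src 'bad'."""
    if src == "bad":
        raise IOError("download failed")
    return b"bytes for " + src.encode()


with tempfile.TemporaryDirectory() as tmp:
    pics = [{"pic_id": "1", "pic_src": "ok-1"}, {"pic_id": "2", "pic_src": "bad"}]
    print(save_all(pics, fake_download, tmp))  # first run saves one file
    print(save_all(pics, fake_download, tmp))  # second run skips the saved file
```

For real downloads, the download argument could be something like lambda src: requests.get(src).content.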

This completes the project of scraping the posters of the film Ne Zha (哪吒之魔童降世). The full code and output follow

Full code and output

    import requests
    from bs4 import BeautifulSoup

    def get_url(n):
        '''
        [Paginated page URL collection] function
        n: number of pages
        Result: a list of paginated page URLs
        '''
        lst = []
        for i in range(n):
            ui = "https://movie.douban.com/subject/26794435/photos?type=R&start={}&sortby=like&size=a&subtype=a".format(i*30)
            #print(ui)
            lst.append(ui)
        return lst

    def get_pic(ui, d_h, d_c):
        '''
        [Data collection]
        ui: URL of the page that holds the data
        d_h: user-agent information
        d_c: cookies information
        Result: a list of picture dicts, each containing the picture id and the picture src
        '''
        ri = requests.get(ui, headers=d_h, cookies=d_c)
        soup_i = BeautifulSoup(ri.text, 'lxml')
        ul = soup_i.find("ul", class_="poster-col3 clearfix")
        lis = ul.find_all('li')
        pic_lst = []
        for li in lis:
            dic_pic = {}
            dic_pic['pic_id'] = li['data-id']
            dic_pic['pic_src'] = li.find('img')['src']
            pic_lst.append(dic_pic)
        return pic_lst

    def save(srci):
        '''srci: picture information containing the picture id and src'''
        img = requests.get(srci['pic_src'])
        # use the argument, not the global loop variable pic, to name the file
        with open(srci['pic_id'] + '.jpg', 'wb') as f:
            f.write(img.content)

    if __name__ == "__main__":
        dic_heders = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'
        }

        dic_cookies = {}
        cookies = 'll="108296"; bid=b9z-Z1JF8wQ; _vwo_uuid_v2=DDF408830197B90007427EFEAB67DF985|b500ed9e7e3b5f6efec01c709b7000c3; douban-fav-remind=1; __yadk_uid=2D7qqvQghjfgVOD0jdPUlybUNa2MBZbz; gr_user_id=5943e535-83de-4105-b840-65b7a0cc92e1; dbcl2="150296873:VHh1cXhumGU"; push_noty_num=0; push_doumail_num=0; __utmv=30149280.15029; __gads=ID=dcc053bfe97d2b3c:T=1579101910:S=ALNI_Ma5JEn6w7PLu-iTttZOFRZbG4sHCw; ct=y; Hm_lvt_cfafef0aa0076ffb1a7838fd772f844d=1579102240; __utmz=81379588.1579138975.5.5.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; __utmz=30149280.1579162528.9.8.utmcsr=baidu|utmccn=(organic)|utmcmd=organic; ck=csBn; _pk_ref.100001.3ac3=%5B%22%22%2C%22%22%2C1581081161%2C%22https%3A%2F%2Fwww.baidu.com%2Flink%3Furl%3DNq2xYeTOYsYNs1a4LeFRmxqwD_0zDOBN253fDrX-5wRdwrQqUpYGFSmifESD4TLN%26wd%3D%26eqid%3De7868ab7001090b7000000035e1fbf95%22%5D; _pk_ses.100001.3ac3=*; __utma=30149280.195590675.1570957615.1581050101.1581081161.16; __utmc=30149280; __utma=81379588.834351582.1571800818.1581050101.1581081161.12; __utmc=81379588; ap_v=0,6.0; gr_session_id_22c937bbd8ebd703f2d8e9445f7dfd03=b6a046c7-15eb-4e77-bc26-3a7af29e68b1; gr_cs1_b6a046c7-15eb-4e77-bc26-3a7af29e68b1=user_id%3A1; gr_session_id_22c937bbd8ebd703f2d8e9445f7dfd03_b6a046c7-15eb-4e77-bc26-3a7af29e68b1=true; _pk_id.100001.3ac3=6ec264aefc5132a2.1571800818.12.1581082472.1581050101.; __utmb=30149280.7.10.1581081161; __utmb=81379588.7.10.1581081161'

        cookies_lst = cookies.split("; ")
        for i in cookies_lst:
            # split only on the first "=": cookie values may themselves contain "="
            key, value = i.split("=", 1)
            dic_cookies[key] = value

        urllst_1 = get_url(5)
        u1 = urllst_1[0]
        # print(get_pic(u1,dic_heders,dic_cookies))
        # print(len(get_pic(u1,dic_heders,dic_cookies)))

        successed_lst = []
        error_lst = []
        for u in urllst_1:
            try:
                successed_lst.extend(get_pic(u, dic_heders, dic_cookies))
                print("Collected {} poster records so far".format(len(successed_lst)))
            except Exception:
                error_lst.append(u)
                print('Failed to collect poster information, URL:', u)

        import os

        path = os.getcwd()
        if not os.path.exists('哪吒海报'):
            os.mkdir('哪吒海报')
        os.chdir(os.path.join(path, '哪吒海报'))

        n = 1
        for pic in successed_lst:
            try:
                save(pic)
                print('Saved {} posters'.format(n))
                n += 1
            except Exception:
                print('Failed to save image, src:', pic['pic_src'])
                continue

The output is as follows: