上一期分享了模拟生成车牌的方法,今天分享一下搭建要给简单的车牌识别模型,模拟生成车牌的方法参看:车牌识别(1)-车牌数据集生成 生成的车牌如下图 准备数据集,图

上一期分享了模拟生成车牌的方法,今天分享一下搭建要给简单的车牌识别模型,模拟生成车牌的方法参看:车牌识别(1)-车牌数据集生成

生成的车牌如下图

准备数据集,图片放在path下面,同时把图片名称和图片的车牌号对应关系写入到txt文件,读取对应的数据到变量

# 读取数据集 path = './plate2/' # 车牌号数据集路径(车牌图片宽240,高80) data = {} with open('plate2.txt', encoding='utf-8') as f: lines = f.readlines() for line in lines: img = line.split(',')[0].strip('\n') # 图片名 lp = line.split(',')[1].strip('\n') # 车牌号码 data[img] = lp print(data) X_train, y_train = [], [] for key, value in data.items(): print("正在读取 %s 图片" % key) # print(path + key) img = cv2.imdecode(np.fromfile(path + key, dtype=np.uint8), -1) # cv2.imshow无法读取中文路径图片,改用此方式 label = [char_dict[name] for name in value] # 图片名前7位为车牌标签 # print(label) X_train.append(img) y_train.append(label) X_train = np.array(X_train) y_train = [np.array(y_train)[:, i] for i in range(7)] # y_train是长度为7的列表,其中每个都是shape为(n, # )的ndarray,分别对应n张图片的第一个字符,第二个字符....第七个字符因为车牌是固定长度,所以有个想法,就是既然我们知道识别七次,那就可以用七个模型按照顺序识别。这个思路没有问题,但实际上根据之前卷积神经网络的原理,实际上卷积神经网络在扫描整张图片的过程中,已经对整个图像的内容以及相对位置关系有所了解,所以,七个模型的卷积层实际上是可以共享的。实际上可以用一个 一组卷积层+7个全链接层 的架构,来对应输入的车牌图片:

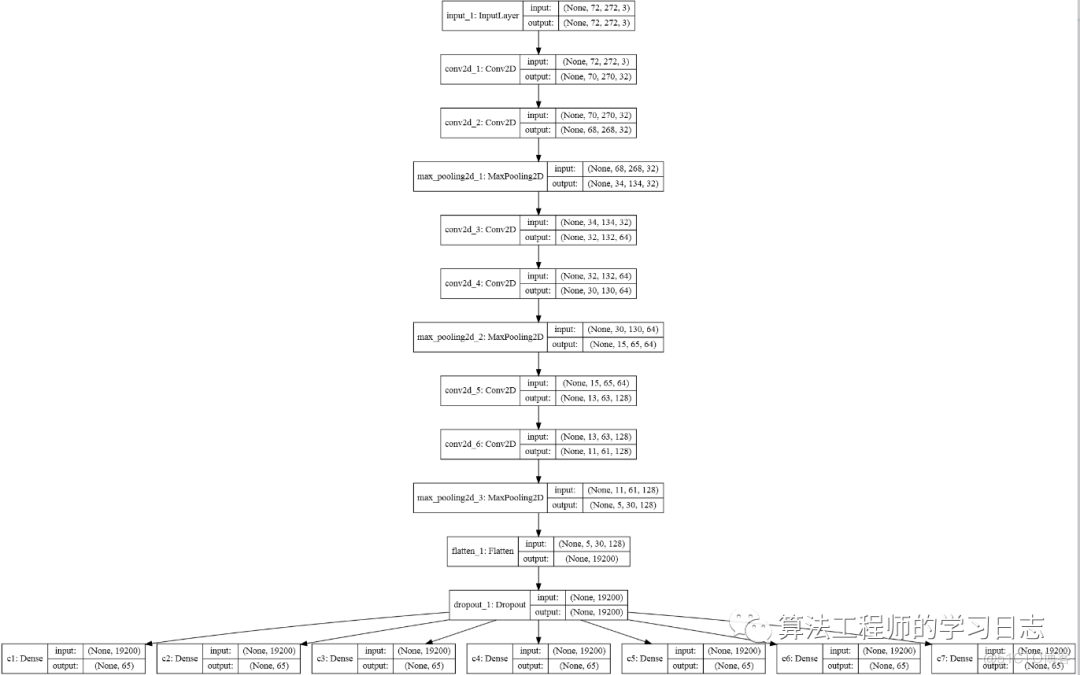

# cnn模型 Input = layers.Input((80, 240, 3)) # 车牌图片shape(80,240,3) x = Input x = layers.Conv2D(filters=16, kernel_size=(3, 3), strides=1, padding='same', activatinotallow='relu')(x) x = layers.MaxPool2D(pool_size=(2, 2), padding='same', strides=2)(x) for i in range(3): x = layers.Conv2D(filters=32 * 2 ** i, kernel_size=(3, 3), padding='valid', activatinotallow='relu')(x) x = layers.Conv2D(filters=32 * 2 ** i, kernel_size=(3, 3), padding='valid', activatinotallow='relu')(x) x = layers.MaxPool2D(pool_size=(2, 2), padding='same', strides=2)(x) x = layers.Dropout(0.3)(x) x = layers.Flatten()(x) x = layers.Dropout(0.3)(x) Output = [layers.Dense(65, activatinotallow='softmax', name='c%d' % (i + 1))(x) for i in range(7)] # 7个输出分别对应车牌7个字符,每个输出都为65个类别类概率 model = models.Model(inputs=Input, outputs=Output) model.summary() model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', # y_train未进行one-hot编码,所以loss选择sparse_categorical_crossentropy metrics=['accuracy'])

模型训练和结果保存

model.fit(X_train, y_train, epochs=5, batch_size=64, callbacks=[tensorboard_callback]) # 总loss为7个loss的和model.save('cnn.h5')print('cnn.h5保存成功!!!')Epoch 1/52000/2000 [==============================] - 547s - loss: 11.1077 - c1_loss: 1.3878 - c2_loss: 0.7512 - c3_loss: 1.1270 - c4_loss: 1.3997 - c5_loss: 1.7955 - c6_loss: 2.3060 - c7_loss: 2.3405 - c1_acc: 0.6157 - c2_acc: 0.7905 - c3_acc: 0.6831 - c4_acc: 0.6041 - c5_acc: 0.5025 - c6_acc: 0.3790 - c7_acc: 0.3678 - val_loss: 3.1323 - val_c1_loss: 0.1970 - val_c2_loss: 0.0246 - val_c3_loss: 0.0747 - val_c4_loss: 0.2076 - val_c5_loss: 0.5099 - val_c6_loss: 1.0774 - val_c7_loss: 1.0411 - val_c1_acc: 0.9436 - val_c2_acc: 0.9951 - val_c3_acc: 0.9807 - val_c4_acc: 0.9395 - val_c5_acc: 0.8535 - val_c6_acc: 0.7065 - val_c7_acc: 0.7190Epoch 2/52000/2000 [==============================] - 546s - loss: 2.7473 - c1_loss: 0.2008 - c2_loss: 0.0301 - c3_loss: 0.0751 - c4_loss: 0.1799 - c5_loss: 0.4407 - c6_loss: 0.9450 - c7_loss: 0.8757 - c1_acc: 0.9416 - c2_acc: 0.9927 - c3_acc: 0.9790 - c4_acc: 0.9467 - c5_acc: 0.8740 - c6_acc: 0.7435 - c7_acc: 0.7577 - val_loss: 1.4777 - val_c1_loss: 0.1039 - val_c2_loss: 0.0118 - val_c3_loss: 0.0300 - val_c4_loss: 0.0665 - val_c5_loss: 0.2145 - val_c6_loss: 0.5421 - val_c7_loss: 0.5090 - val_c1_acc: 0.9725 - val_c2_acc: 0.9978 - val_c3_acc: 0.9937 - val_c4_acc: 0.9824 - val_c5_acc: 0.9393 - val_c6_acc: 0.8524 - val_c7_acc: 0.8609Epoch 3/52000/2000 [==============================] - 544s - loss: 1.7686 - c1_loss: 0.1310 - c2_loss: 0.0156 - c3_loss: 0.0390 - c4_loss: 0.0971 - c5_loss: 0.2689 - c6_loss: 0.6416 - c7_loss: 0.5754 - c1_acc: 0.9598 - c2_acc: 0.9961 - c3_acc: 0.9891 - c4_acc: 0.9715 - c5_acc: 0.9213 - c6_acc: 0.8223 - c7_acc: 0.8411 - val_loss: 1.0954 - val_c1_loss: 0.0577 - val_c2_loss: 0.0088 - val_c3_loss: 0.0229 - val_c4_loss: 0.0530 - val_c5_loss: 0.1557 - val_c6_loss: 0.4247 - val_c7_loss: 0.3726 - val_c1_acc: 0.9849 - val_c2_acc: 0.9987 - val_c3_acc: 0.9948 - val_c4_acc: 0.9861 - val_c5_acc: 0.9569 - val_c6_acc: 0.8829 - val_c7_acc: 0.8994Epoch 4/52000/2000 [==============================] - 544s - loss: 1.4012 - c1_loss: 0.1063 - c2_loss: 0.0120 - c3_loss: 0.0301 - c4_loss: 0.0754 - c5_loss: 0.2031 - c6_loss: 0.5146 - c7_loss: 0.4597 - c1_acc: 0.9677 - c2_acc: 0.9968 - c3_acc: 0.9915 - c4_acc: 0.9773 - c5_acc: 0.9406 - c6_acc: 0.8568 - c7_acc: 0.8731 - val_loss: 0.8221 - val_c1_loss: 0.0466 - val_c2_loss: 0.0061 - val_c3_loss: 0.0122 - val_c4_loss: 0.0317 - val_c5_loss: 0.1085 - val_c6_loss: 0.3181 - val_c7_loss: 0.2989 - val_c1_acc: 0.9870 - val_c2_acc: 0.9986 - val_c3_acc: 0.9969 - val_c4_acc: 0.9910 - val_c5_acc: 0.9696 - val_c6_acc: 0.9117 - val_c7_acc: 0.9182Epoch 5/52000/2000 [==============================] - 553s - loss: 1.1712 - c1_loss: 0.0903 - c2_loss: 0.0116 - c3_loss: 0.0275 - c4_loss: 0.0592 - c5_loss: 0.1726 - c6_loss: 0.4305 - c7_loss: 0.3796 - c1_acc: 0.9726 - c2_acc: 0.9971 - c3_acc: 0.9925 - c4_acc: 0.9825 - c5_acc: 0.9503 - c6_acc: 0.8821 - c7_acc: 0.8962 - val_loss: 0.7210 - val_c1_loss: 0.0498 - val_c2_loss: 0.0079 - val_c3_loss: 0.0132 - val_c4_loss: 0.0303 - val_c5_loss: 0.0930 - val_c6_loss: 0.2810 - val_c7_loss: 0.2458 - val_c1_acc: 0.9862 - val_c2_acc: 0.9987 - val_c3_acc: 0.9971 - val_c4_acc: 0.9915 - val_c5_acc: 0.9723 - val_c6_acc: 0.9212 - val_c7_acc: 0.9336可见五轮训练后,即便是位置靠后的几位车牌,也实现了 93% 的识别准确率。

展示下模型预测结果:

def cnn_predict_special(cnn, Lic_img): characters = ["京", "沪", "津", "渝", "冀", "晋", "蒙", "辽", "吉", "黑", "苏", "浙", "皖", "闽", "赣", "鲁", "豫", "鄂", "湘", "粤", "桂", "琼", "川", "贵", "云", "藏", "陕", "甘", "青", "宁", "新", "0", "1", "2", "3", "4", "5", "6", "7", "8", "9", "A", "B", "C", "D", "E", "F", "G", "H", "J", "K", "L", "M", "N", "P", "Q", "R", "S", "T", "U", "V", "W", "X", "Y", "Z"] Lic_pred = [] for lic in Lic_img: lic_pred = cnn.predict(lic.reshape(1, 80, 240, 3)) # 预测形状应为(1,80,240,3) lic_pred = np.array(lic_pred).reshape(7, 65) # 列表转为ndarray,形状为(7,65) # print(lic_pred) if len(lic_pred[lic_pred >= 0.8]) >= 4: # 统计其中预测概率值大于80%以上的个数,大于等于4个以上认为识别率高,识别成功 chars = '' for arg in np.argmax(lic_pred, axis=1): # 取每行中概率值最大的arg,将其转为字符 chars += characters[arg] chars = chars[0:2] + '·' + chars[2:] Lic_pred.append(chars) # 将车牌和识别结果一并存入Lic_pred return Lic_pred

完整文件后台回复:车牌识别cnn