Contents

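- Deploying a highly available cluster with kubeadm
- High availability in Kubernetes
- Two HA deployment topologies
- Deployment environment
- Deployment steps
- Disable the firewall and swap, set kernel parameters, etc.
- Install kubeadm and docker
- Install and configure the load balancer
- Install haproxy
- Modify the haproxy configuration
- Enable haproxy at boot and start the service
- Check the service port
- Deploy Kubernetes
- Generate the init configuration file
- Adjust kubeadm-config.yaml: modify or add settings
- Initialize the node
- Output after a successful installation
- Start the flannel network
- View the cluster from the master node
- Join the other two master nodes
- Join the worker node
- View the cluster
- Afterword
- View haproxy logs
- Layer-4 load balancing with nginx
- High availability in Kubernetes
- References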

High availability in Kubernetes

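In Kubernetes, high availability mainly means a highly available control plane. There are multiple sets of master node components and etcd members, and the worker nodes reach the masters through a load balancer.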

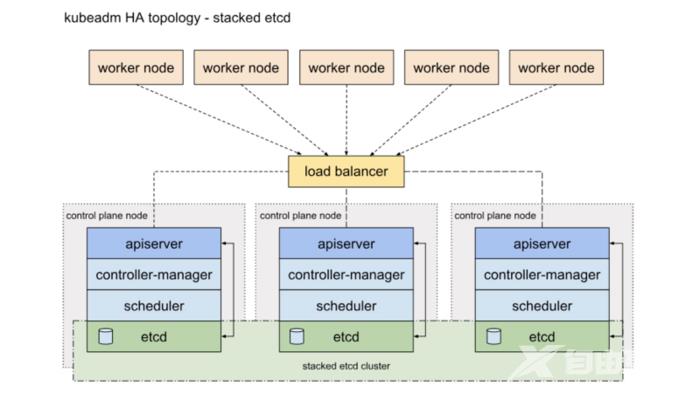

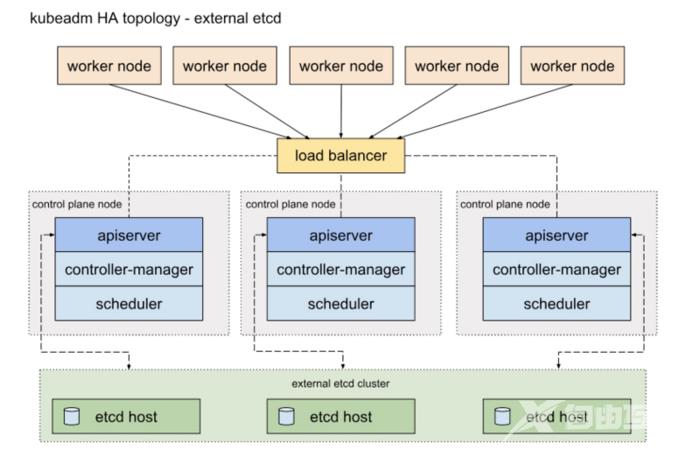

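Two HA deployment topologies

The first runs etcd on the same machines as the master node components (stacked etcd).

The second uses a standalone etcd cluster that does not share machines with the masters (external etcd).

Both provide control-plane redundancy, which keeps the cluster highly available. They differ as follows: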

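- Stacked etcd: this needs fewer machines and is simpler to set up and manage. However, each master carries both an etcd member and the control-plane components, so losing one master loses both at once.

- External etcd: etcd members and control-plane instances fail independently, so losing one host has less impact. The cost is more machines (at least three etcd hosts in addition to the masters) and more management effort.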

Deployment environment

Servers

| Host | IP | Notes |
|---|---|---|
| master1 | 192.168.0.101 | master node 1 |
| master2 | 192.168.0.102 | master node 2 |
| master3 | 192.168.0.103 | master node 3 |
| haproxy | 192.168.0.100 | haproxy node, load balancer for the three master nodes |
| node | 192.168.0.104 | worker node |

Environment

| Component | Version |
|---|---|
| Host OS | CentOS Linux release 7.7.1908 (Core) |
| Kernel | 3.10.0-1062.el7.x86_64 |
| docker | 19.03.7 |
| kubeadm | 1.17.3 |

Deployment steps

Disable the firewall and swap, set kernel parameters, etc.

Run on all nodes.

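- Disable SELinux and firewalld

- Disable swap. This has been required since Kubernetes 1.8, presumably so that swap does not interfere with the memory limits available to pods.

- Set the hostname

- Configure hostname resolution (without it, `kubeadm init` may time out)

- Set the required kernel parameters; otherwise requests routed through iptables may not be handled correctly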
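
The original lists these steps without their commands. The following is a minimal sketch for CentOS 7, built on a few assumptions. The function names are hypothetical. The hostnames and IPs come from the server table. The three sysctl keys are the ones the upstream kubeadm installation guide asks for. File paths are parameters, so you can try the edits on copies first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the node-preparation steps (CentOS 7).

# Set SELinux to disabled in its config file (takes effect after a reboot).
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Comment out active swap entries in fstab so swap stays off after a reboot.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -ri '/^[^#].*\sswap\s/s/^/#/' "$fstab"
}

# Kernel settings kubeadm expects: bridged traffic visible to iptables, IP forwarding on.
write_k8s_sysctl() {
  local out="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$out" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}

# /etc/hosts entries for the lab machines (IPs from the server table).
print_hosts_entries() {
  cat <<'EOF'
192.168.0.100 haproxy
192.168.0.101 master1
192.168.0.102 master2
192.168.0.103 master3
192.168.0.104 node
EOF
}

# Live changes: run as root on each node, passing that node's hostname.
prepare_node() {
  local name="$1"
  setenforce 0 || true          # fails harmlessly if SELinux is already disabled
  systemctl stop firewalld
  systemctl disable firewalld
  swapoff -a
  disable_selinux_config
  comment_out_swap
  hostnamectl set-hostname "$name"
  print_hosts_entries >> /etc/hosts
  modprobe br_netfilter         # provides the net.bridge.* keys
  write_k8s_sysctl
  sysctl --system               # reload every sysctl config file
}
```

Usage, assuming the functions are saved in a hypothetically named `prep.sh`: as root on each node, run `source prep.sh && prepare_node master1`, substituting that node's own hostname. `setenforce 0` only switches SELinux to permissive for the current boot. The config-file change takes over after a reboot.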

Install kubeadm and docker

Run on all nodes except haproxy.

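- Point the Kubernetes package repository at the Aliyun mirror, which makes installation easier from networks in mainland China

- Install docker-ce

- Install kubelet, kubeadm, and kubectl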
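
These commands are also missing from the original. Below is a sketch that pins the versions from the environment table. It assumes the Aliyun mirror layout used around the v1.17 era; those repository URLs may have moved since. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the "install kubeadm and docker" step (CentOS 7).
# Mirror URLs reflect the Aliyun layout of the v1.17 era and may have changed.

# Write the Kubernetes yum repo file pointing at the Aliyun mirror.
write_k8s_repo() {
  local out="${1:-/etc/yum.repos.d/kubernetes.repo}"
  cat > "$out" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# Install the pinned versions and enable the services (run as root).
install_k8s_packages() {
  yum install -y yum-utils
  yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
  yum install -y docker-ce-19.03.7 docker-ce-cli-19.03.7 containerd.io
  systemctl enable docker
  systemctl start docker
  yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3
  systemctl enable kubelet   # kubeadm init/join configures and starts it later
}
```

For example, as root on each non-haproxy node, run `write_k8s_repo && install_k8s_packages`. Until `kubeadm init` or `kubeadm join` runs, the kubelet restarts in a loop. This is expected.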

Install and configure the load balancer

Run on the haproxy node.

Install haproxy

```bash
yum install haproxy -y
```

Modify the haproxy configuration

```bash
cat > /etc/haproxy/haproxy.cfg <<'EOF'
global
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon

defaults
    mode                tcp
    log                 global
    retries             3
    timeout connect     10s
    timeout client      1m
    timeout server      1m

frontend kube-apiserver
    bind *:6443 # frontend port
    mode tcp
    default_backend master

backend master # backend masters and ports, balanced round-robin
    balance roundrobin
    server master1 192.168.0.101:6443 check maxconn 2000
    server master2 192.168.0.102:6443 check maxconn 2000
    server master3 192.168.0.103:6443 check maxconn 2000
EOF
```

Enable haproxy at boot and start the service
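
You can check the configuration before enabling the service. `haproxy -c -f` validates the file without starting anything. The second helper is a hypothetical sketch: it reads the `server` lines and tries a plain TCP connection to each one through bash's `/dev/tcp` pseudo-device. Until `kubeadm init` has run on the masters, every backend should report `down`.

```shell
#!/usr/bin/env bash
# Syntax-check an haproxy config without starting the service.
validate_haproxy_cfg() {
  haproxy -c -f "${1:-/etc/haproxy/haproxy.cfg}"
}

# Hypothetical helper: try a TCP connect to every "server" line in the config.
probe_backends() {
  local cfg="${1:-/etc/haproxy/haproxy.cfg}"
  # Print "<name> <host:port>" for each server line, e.g. "master1 192.168.0.101:6443".
  awk '$1 == "server" { print $2, $3 }' "$cfg" |
  while read -r name addr; do
    host="${addr%:*}"
    port="${addr##*:}"
    # Opening /dev/tcp/HOST/PORT in bash makes a TCP connection; give up after 2 s.
    if timeout 2 bash -c ": </dev/tcp/$host/$port" </dev/null 2>/dev/null; then
      echo "$name $addr up"
    else
      echo "$name $addr down"
    fi
  done
}
```

On the haproxy node, `validate_haproxy_cfg && probe_backends` prints one line per master, for example `master1 192.168.0.101:6443 down` before the cluster exists.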

```bash
systemctl enable haproxy
systemctl start haproxy
```

Check the service port

```bash
ss -tnlp | grep 6443
LISTEN     0      128        *:6443        *:*        users:(("haproxy",pid=1107,fd=4))
```

Deploy Kubernetes

Run on master1.

Generate the init configuration file

```bash
kubeadm config print init-defaults > kubeadm-config.yaml
```

Adjust kubeadm-config.yaml: modify or add settings

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.0.101 ## IP address of this host
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master1 ## this node's name in the Kubernetes cluster
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.0.100:6443" ## address and port of the haproxy load balancer in front
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers ## Aliyun image mirror; otherwise the images cannot be pulled
kind: ClusterConfiguration
kubernetesVersion: v1.17.0
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16" ## default network of the flannel plugin installed later
  serviceSubnet: 10.96.0.0/12
scheduler: {}
```

Initialize the node

```bash
# Pre-pull the images from the Aliyun mirror
kubeadm config images pull --config kubeadm-config.yaml
kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log
```

Output after a successful installation

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

# control-plane (master) nodes join the cluster with:
  kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8 \
    --control-plane --certificate-key 8d3f96830a1218b704cb2c24520186828ac6fe1d738dfb11199dcdb9a10579f8

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

# worker nodes join the cluster with:
kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8
```

In older kubeadm versions, `kubeadm join` could only add worker nodes. Newer versions add the `--control-plane` flag, so control-plane (master) nodes can also join the cluster with `kubeadm join`.
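
The bootstrap token in this config expires after 24 hours (`ttl: 24h0m0s`), and the uploaded certificates are deleted after two hours. Joining later therefore needs fresh values. The sketch below uses hypothetical helper names:

- `extract_join_cmds` pulls the join commands out of `kubeadm-init.log`, which was saved with `tee` during init.
- `ca_cert_hash` recomputes the `--discovery-token-ca-cert-hash` value using the openssl pipeline from the kubeadm documentation.
- `fresh_control_plane_join` builds a new control-plane join command. It assumes the certificate key is the last line printed by `upload-certs`.

```shell
#!/usr/bin/env bash
# Print each "kubeadm join" command in an init log on a single line,
# merging the backslash-continued lines that follow it.
extract_join_cmds() {
  awk '
    /kubeadm join/ { cmd = ""; grab = 1 }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)            # drop indentation
      cont = sub(/[ \t]*\\$/, "", line)   # 1 if the line ended in a backslash
      cmd = cmd (cmd == "" ? "" : " ") line
      if (!cont) { print cmd; grab = 0 }
    }' "${1:-kubeadm-init.log}"
}

# SHA-256 of the cluster CA public key, the value behind --discovery-token-ca-cert-hash
# (pipeline from the kubeadm docs; assumes an RSA CA key, which is kubeadm's default).
ca_cert_hash() {
  openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex | sed 's/^.* //'
}

# Run on master1: re-upload the certs and create a new token,
# then print a control-plane join command built from both.
fresh_control_plane_join() {
  local key join
  key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
  join=$(kubeadm token create --print-join-command)
  echo "$join --control-plane --certificate-key $key"
}
```

For example, `extract_join_cmds kubeadm-init.log` prints the control-plane and worker join commands, one per line. Once the token or certificate key has expired, run `fresh_control_plane_join` on master1 instead.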

Start the flannel network

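```bash
# kubectl needs credentials; as root, point it at the admin kubeconfig
export KUBECONFIG=/etc/kubernetes/admin.conf
# flannel's default Network (10.244.0.0/16) matches podSubnet in kubeadm-config.yaml
kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
```

View the cluster from the master node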

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
kubectl get no
```

```
NAME      STATUS   ROLES    AGE     VERSION
master1   Ready    master   4h12m   v1.17.3
```

Join the other two master nodes

```bash
# Run on master2 and master3:
kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8 \
    --control-plane --certificate-key 8d3f96830a1218b704cb2c24520186828ac6fe1d738dfb11199dcdb9a10579f8
```

Join the worker node

```bash
# Run on node:
kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:37041e2b8e0de7b17fdbf73f1c79714f2bddde2d6e96af2953c8b026d15000d8
```

View the cluster

```bash
kubectl get no
```

```
NAME      STATUS   ROLES    AGE     VERSION
node      Ready    <none>   3h37m   v1.17.3
master1   Ready    master   4h12m   v1.17.3
master2   Ready    master   4h3m    v1.17.3
master3   Ready    master   3h54m   v1.17.3
```

Afterword

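View haproxy logs

The haproxy logs make it easier to troubleshoot if the Kubernetes cluster has problems starting.

Install the rsyslog service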

```bash
yum install rsyslog
```

Configure rsyslog to collect the logs

```bash
vim /etc/rsyslog.conf
```

```
# Modify these settings (enable UDP reception)
$ModLoad imudp
$UDPServerRun 514

# Add this line
local2.*                       /var/log/haproxy.log
```

Restart rsyslog

```bash
systemctl restart rsyslog
systemctl enable rsyslog
```

Layer-4 load balancing with nginx

Install nginx

```bash
yum install nginx
systemctl start nginx
systemctl enable nginx
```

Configure nginx

```bash
vim /etc/nginx/nginx.conf
```

```nginx
# Add this outside the http {} block
stream {
    server {
        listen 6443;
        proxy_pass kube_apiserver;
    }

    upstream kube_apiserver {
        server 192.168.0.101:6443 max_fails=3 fail_timeout=5s;
        server 192.168.0.102:6443 max_fails=3 fail_timeout=5s;
        server 192.168.0.103:6443 max_fails=3 fail_timeout=5s;
    }

    log_format proxy '$remote_addr [$time_local] '
                     '$protocol $status $bytes_sent $bytes_received '
                     '$session_time "$upstream_addr" '
                     '"$upstream_bytes_sent" "$upstream_bytes_received" "$upstream_connect_time"';

    access_log /var/log/nginx/proxy-access.log proxy;
}
```

Restart nginx

```bash
systemctl restart nginx
```
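
Whichever load balancer you use, you can check the setup end to end by requesting the API server's `/healthz` endpoint through the load-balancer address. This sketch assumes the default RBAC rules, which let unauthenticated clients read `/healthz`. The `-k` flag skips certificate verification because the checking host may not trust the cluster CA. The helper names are hypothetical. If the nginx restart fails, `nginx -t` reports configuration errors. Depending on the nginx package, the `stream` module may also need to be installed separately; EPEL, for example, ships it as `nginx-mod-stream`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: GET /healthz on an API server endpoint (default: the load balancer).
# -k skips TLS verification; a healthy apiserver answers with the body "ok".
apiserver_healthz() {
  curl -sk --max-time 5 "https://${1:-192.168.0.100:6443}/healthz"
}

# Check each master directly, bypassing the load balancer.
check_all_masters() {
  local ip
  for ip in 192.168.0.101 192.168.0.102 192.168.0.103; do
    printf '%s: ' "$ip"
    apiserver_healthz "$ip:6443" || printf 'unreachable'
    echo
  done
}
```

Run `apiserver_healthz` with no argument to go through 192.168.0.100:6443. If it fails while `check_all_masters` succeeds, the problem is in the load balancer rather than in the control plane.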