Case: Using Filebeat to collect Nginx JSON-format access logs and error logs into separate Elasticsearch indices

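By default, Nginx writes each request to the access log as a single line of plain text. Elasticsearch cannot extract individual fields from such a line directly, which makes later analysis of specific fields difficult. Switching the Nginx access log to JSON format solves this.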
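To make the benefit concrete, here is a minimal sketch that parses one JSON-format log line with Python's standard `json` module. The sample line is abbreviated from the kind of entry the JSON log format in this case produces, and the variable names are purely illustrative.

```python
import json

# One abbreviated line in the JSON access-log format used in this case.
line = (
    '{"@timestamp":"2023-02-27T04:26:10+00:00","clientip":"192.168.11.5",'
    '"size":197,"responsetime":0.000,"uri":"/404","status":"404"}'
)

# Every key becomes a separately addressable field; no regular expressions needed.
event = json.loads(line)

print(event["clientip"])          # client address as a string
print(event["size"] + 1)          # unquoted values stay numeric
print(event["status"] == "404")   # status is quoted in the log format, so it is a string
```

A plain-text access-log line would need a hand-written pattern to recover the same fields, which is the work the JSON format avoids.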

- Install nginx and configure the access log to use JSON format

#Install Nginx

[root@web01 ~]#apt update && apt -y install nginx

#Change the nginx access log to JSON format

[root@web01 ~]#vim /etc/nginx/nginx.conf

...

##

# Logging Settings

##

log_format access_json '{"@timestamp":"$time_iso8601",'

'"host":"$server_addr",'

'"clientip":"$remote_addr",'

'"size":$body_bytes_sent,'

'"responsetime":$request_time,'

'"upstreamtime":"$upstream_response_time",'

'"upstreamhost":"$upstream_addr",'

'"http_host":"$host",'

'"uri":"$uri",'

'"domain":"$host",'

'"xff":"$http_x_forwarded_for",'

'"referer":"$http_referer",'

'"tcp_xff":"$proxy_protocol_addr",'

'"http_user_agent":"$http_user_agent",'

'"status":"$status"}';

#access_log /var/log/nginx/access.log;

access_log /var/log/nginx/access_json.log access_json ;

error_log /var/log/nginx/error.log;

...

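#The nginx error log is enabled by default, but on Ubuntu the following line must also be commented out before failed requests (such as 404s) are written to it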

[root@web01 ~]#vim /etc/nginx/sites-available/default

location / {

# First attempt to serve request as file, then

# as directory, then fall back to displaying a 404.

#try_files $uri $uri/ =404; #comment out this line

#Check the syntax

[root@web01 ~]#nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

#Reload the configuration

[root@web01 ~]#nginx -s reload

#Confirm that the log files exist

[root@web01 ~]#ll /var/log/nginx/

total 12

drwxr-xr-x 2 root adm 4096 Feb 27 04:05 ./

drwxrwxr-x 10 root syslog 4096 Feb 27 04:05 ../

-rw-r----- 1 www-data adm 0 Feb 27 04:05 access_json.log

-rw-r----- 1 www-data adm 139 Feb 27 04:15 error.log

#Test: open the site in a browser a few times, including requests that fail (such as a 404), and check that they are logged

[root@web01 ~]#cat /var/log/nginx/access_json.log

{"@timestamp":"2023-02-27T04:26:05+00:00","host":"192.168.11.206","clientip":"192.168.11.5","size":0,"responsetime":0.000,"upstreamtime":"-","upstreamhost":"-","http_host":"192.168.11.206","uri":"/index.nginx-debian.html","domain":"192.168.11.206","xff":"-","referer":"-","tcp_xff":"-","http_user_agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36","status":"304"}

-------------------

#Pretty-printed

{

"@timestamp": "2023-02-27T04:26:05+00:00",

"host": "192.168.11.206",

"clientip": "192.168.11.5",

"size": 0,

"responsetime": 0.000,

"upstreamtime": "-",

"upstreamhost": "-",

"http_host": "192.168.11.206",

"uri": "/index.nginx-debian.html",

"domain": "192.168.11.206",

"xff": "-",

"referer": "-",

"tcp_xff": "-",

"http_user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",

"status": "304"

}

------------------

{"@timestamp":"2023-02-27T04:26:10+00:00","host":"192.168.11.206","clientip":"192.168.11.5","size":197,"responsetime":0.000,"upstreamtime":"-","upstreamhost":"-","http_host":"192.168.11.206","uri":"/404","domain":"192.168.11.206","xff":"-","referer":"-","tcp_xff":"-","http_user_agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36","status":"404"}

-------------------

#Pretty-printed

{

"@timestamp": "2023-02-27T04:26:10+00:00",

"host": "192.168.11.206",

"clientip": "192.168.11.5",

"size": 197,

"responsetime": 0.000,

"upstreamtime": "-",

"upstreamhost": "-",

"http_host": "192.168.11.206",

"uri": "/404",

"domain": "192.168.11.206",

"xff": "-",

"referer": "-",

"tcp_xff": "-",

"http_user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36",

"status": "404"

}

-------------------

[root@web01 ~]#cat /var/log/nginx/error.log

2023/02/27 04:25:38 [notice] 1664#1664: signal process started

2023/02/27 04:26:10 [error] 1665#1665: *5 open() "/var/www/html/404" failed (2: No such file or directory), client: 192.168.11.5, server: _, request: "GET /404 HTTP/1.1", host: "192.168.11.206"
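Unlike the access log, the error log stays plain text, so each line reaches Elasticsearch as one unparsed message. As a rough illustration of what extracting fields from such a line involves, the sketch below uses a regular expression based on the two sample lines above. The helper `parse_error_line` is hypothetical and is not part of Nginx or Filebeat.

```python
import re

# Layout seen in the sample lines: "YYYY/MM/DD HH:MM:SS [level] pid#tid: message".
ERROR_LINE = re.compile(
    r"^(?P<time>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) "
    r"\[(?P<level>\w+)\] "
    r"(?P<pid>\d+)#(?P<tid>\d+): "
    r"(?P<message>.*)$"
)

def parse_error_line(line):
    """Return the fields of one error-log line as a dict, or None if it does not match."""
    match = ERROR_LINE.match(line)
    return match.groupdict() if match else None

sample = (
    '2023/02/27 04:26:10 [error] 1665#1665: *5 open() "/var/www/html/404" failed '
    '(2: No such file or directory), client: 192.168.11.5, server: _, '
    'request: "GET /404 HTTP/1.1", host: "192.168.11.206"'
)
fields = parse_error_line(sample)
print(fields["level"], fields["pid"])
```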

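- Modify the Filebeat configuration file

#To collect logs from several nginx servers into the same ES indices, use this same Filebeat configuration on every server

[root@web01 ~]#vim /etc/filebeat/filebeat.yml

[root@web01 ~]#cat /etc/filebeat/filebeat.yml

filebeat.inputs:

- type: log

  enabled: true #enable this input

  paths:

    - /var/log/nginx/access_json.log #log file to collect

  json.keys_under_root: true #default false, which places the decoded JSON fields under a "json" key; true places them at the top level of the event

  json.overwrite_keys: true #when true, values from the decoded JSON overwrite conflicting fields that Filebeat adds itself

  tags: ["nginx-access"] #tag used to classify the events

- type: log

  enabled: true #enable this input

  paths:

    - /var/log/nginx/error.log #log file to collect

  tags: ["nginx-error"] #tag used to classify the events

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

  hosts: ["192.168.11.200:9200","192.168.11.201:9200","192.168.11.202:9200"] #address and port of any node in the ES cluster; listing several provides failover

  indices:

    - index: "nginx-access-%{[agent.version]}-%{+yyyy.MM.dd}"

      when.contains:

        tags: "nginx-access" #events tagged nginx-access are written to the nginx-access index

    - index: "nginx-error-%{[agent.version]}-%{+yyyy.MM.dd}"

      when.contains:

        tags: "nginx-error" #events tagged nginx-error are written to the nginx-error index

setup.ilm.enabled: false #disable index lifecycle management (ILM); while it is enabled (the default), index names can only be filebeat-*

setup.template.name: "nginx" #template name; must be set when using custom index names, otherwise Filebeat fails to start

setup.template.pattern: "nginx-*" #index pattern the template applies to; must be set when using custom index names, otherwise Filebeat fails to start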

[root@web01 ~]#systemctl restart filebeat.service
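The routing under `output.elasticsearch.indices` in the configuration above can be pictured as "first matching rule wins". The sketch below is a simplified model of that idea, not Filebeat's implementation: `resolve_index` and the `filebeat` fallback prefix are assumptions for illustration, and tag matching is reduced to list membership.

```python
from datetime import date

# Mirrors the two rules under output.elasticsearch.indices in the configuration above.
# Each rule is (required tag, index prefix); the first matching rule is used.
RULES = [
    ("nginx-access", "nginx-access"),
    ("nginx-error", "nginx-error"),
]

def resolve_index(tags, agent_version, day, default_prefix="filebeat"):
    """Pick an index name for an event; the fallback prefix is an assumption."""
    for required_tag, prefix in RULES:
        if required_tag in tags:
            return f"{prefix}-{agent_version}-{day:%Y.%m.%d}"
    return f"{default_prefix}-{agent_version}-{day:%Y.%m.%d}"

print(resolve_index(["nginx-access"], "8.6.1", date(2023, 2, 27)))
print(resolve_index(["nginx-error"], "8.6.1", date(2023, 2, 27)))
```

Because the date is part of the name, each tag produces one new index per day, which is why the template pattern `nginx-*` has to cover both prefixes.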

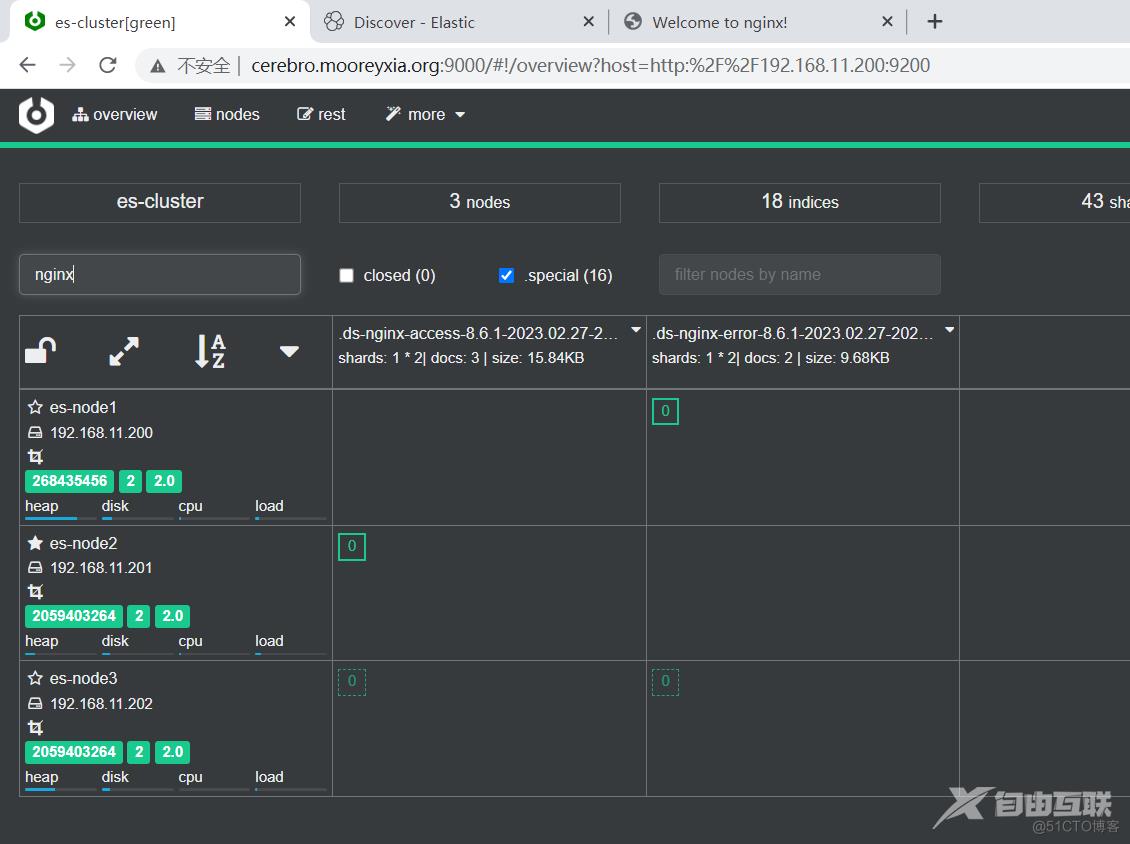

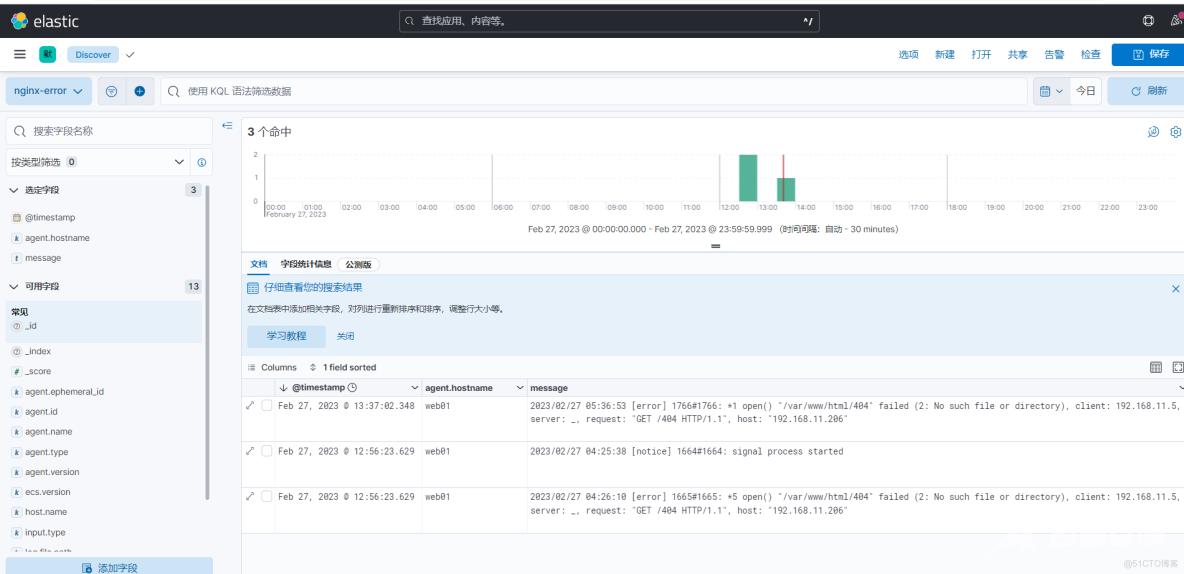

- View the generated indices

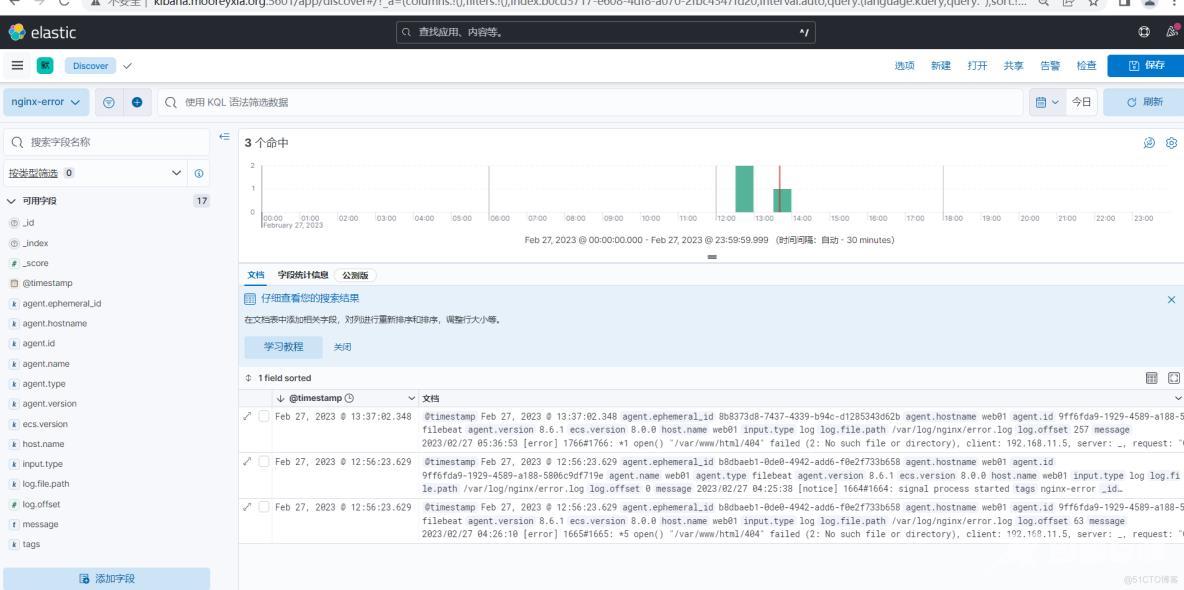
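Besides a graphical tool, the indices can also be listed through Elasticsearch's `_cat/indices` REST endpoint. The following is a small sketch using only the Python standard library. It assumes the cluster is reachable over plain HTTP without authentication, as the Filebeat output in this case suggests; the helper names are hypothetical.

```python
import json
import urllib.request

def cat_indices_url(host, pattern):
    """Build the _cat/indices URL for one Elasticsearch node and an index pattern."""
    return f"http://{host}/_cat/indices/{pattern}?format=json&h=index,docs.count"

def list_indices(host, pattern, timeout=5):
    """Return [(index name, document count), ...] as reported by the node."""
    with urllib.request.urlopen(cat_indices_url(host, pattern), timeout=timeout) as resp:
        rows = json.load(resp)
    return [(row["index"], row["docs.count"]) for row in rows]

print(cat_indices_url("192.168.11.200:9200", "nginx-*"))
```

Calling `list_indices("192.168.11.200:9200", "nginx-*")` against a running cluster should return one entry per daily nginx index.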

- Verify the log data in Kibana

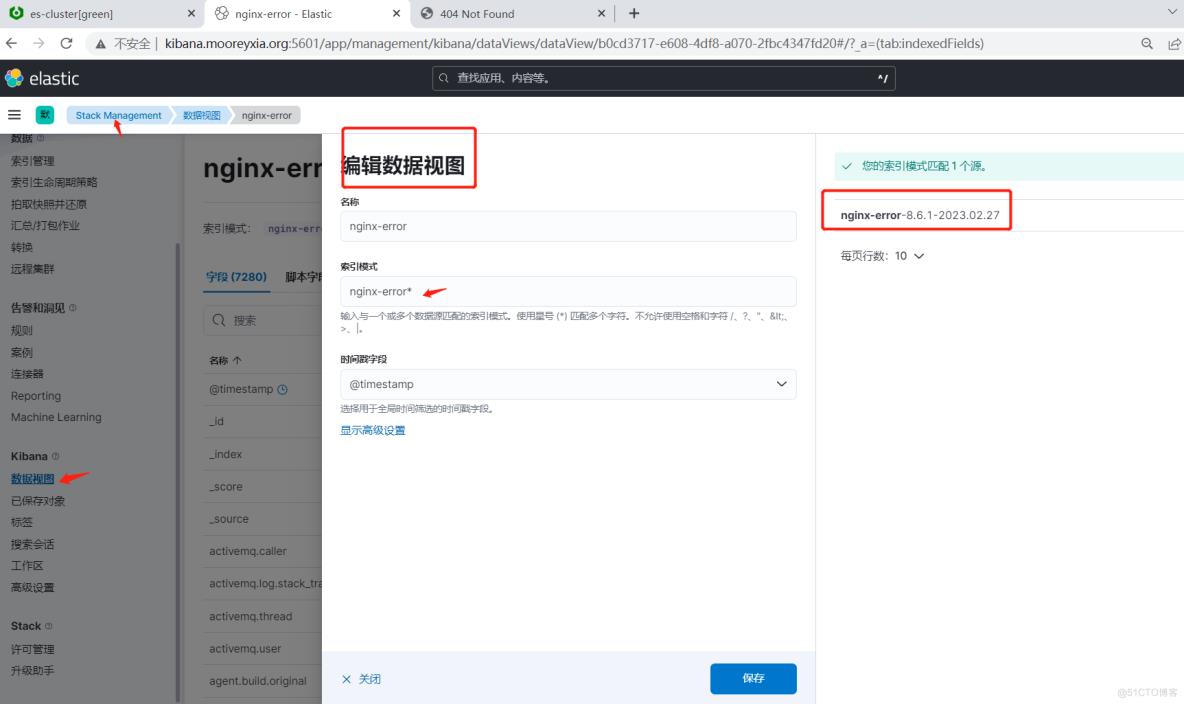

- Create an index pattern

Case: Using Filebeat to collect Tomcat JSON-format access logs and error logs into Elasticsearch

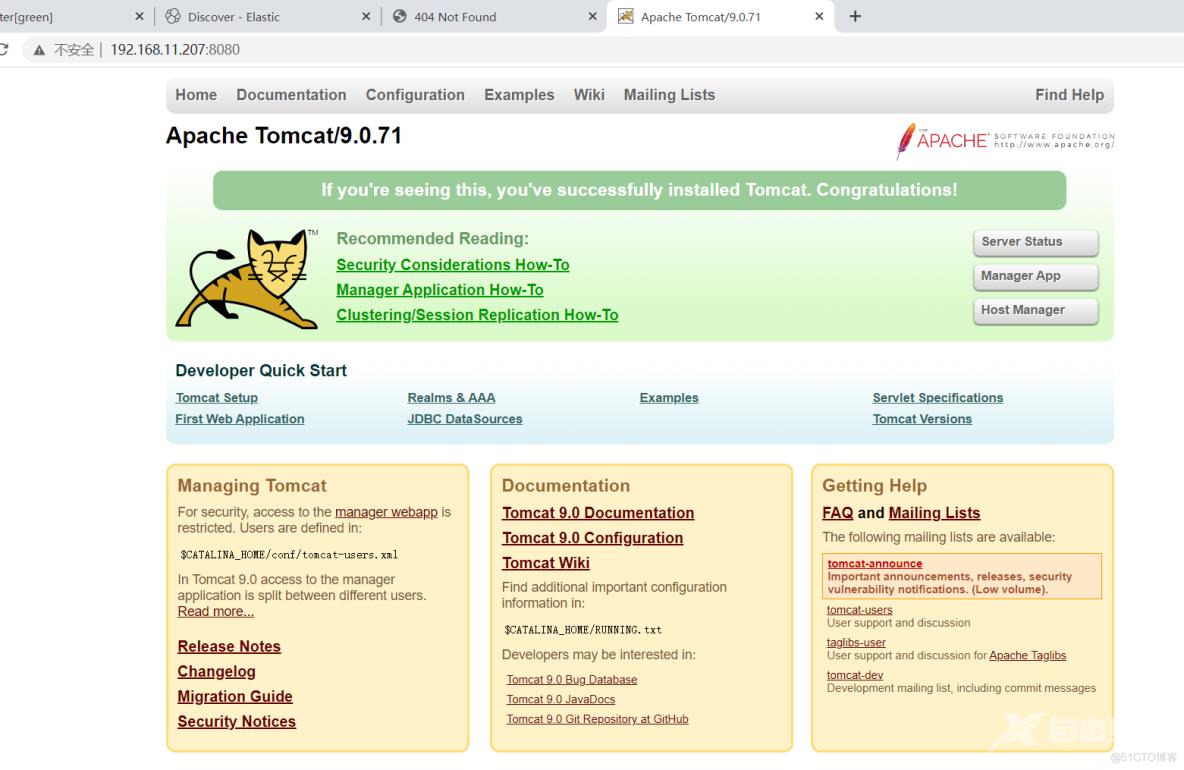

- Install Tomcat and configure a JSON-format access log

The Tomcat installation itself is omitted here.

#Change the Tomcat access log to JSON format

[root@web02 ~]#cat /usr/local/tomcat/conf/server.xml

...

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log" suffix=".txt"

----------------

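#Change the log format to JSON; inside the XML attribute, inner double quotes must be written as &quot;

----------------

pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>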

</Host>

</Engine>

</Service>

</Server>
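Inside a double-quoted XML attribute, literal double quotes have to be written as `&quot;`, which makes a JSON pattern tedious to type by hand. The sketch below generates such an attribute from a field mapping and checks that an XML parser reads it back unchanged. The three-field mapping is a shortened, illustrative subset of the pattern used in this case.

```python
import json
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

# Field name -> AccessLogValve format code (subset of the pattern in this case).
fields = {
    "clientip": "%h",
    "status": "%s",
    "SendBytes": "%b",
}

# Compact JSON template: {"clientip":"%h","status":"%s","SendBytes":"%b"}
template = json.dumps(fields, separators=(",", ":"))

# Escape &, <, > and, additionally, double quotes for use inside pattern="...".
attr = 'pattern="' + escape(template, {'"': "&quot;"}) + '"'
print(attr)

# Round trip: an XML parser turns &quot; back into the original JSON template.
parsed = ET.fromstring(f"<Valve {attr}/>").get("pattern")
print(parsed == template)
```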

[root@web02 ~]#systemctl restart tomcat

[root@web02 ~]#ll /usr/local/tomcat/logs/localhost_access_log.2023-02-27.txt

-rw-r----- 1 tomcat tomcat 747 Feb 27 06:44 /usr/local/tomcat/logs/localhost_access_log.2023-02-27.txt

#Request a few Tomcat pages; JSON-format log entries like the following appear

[root@web02 ~]#cat /usr/local/tomcat/logs/localhost_access_log.2023-02-27.txt

{"clientip":"192.168.11.5","ClientUser":"-","authenticated":"-","AccessTime":"[27/Feb/2023:06:52:51 +0000]","method":"GET /manager/html HTTP/1.1","status":"403","SendBytes":"3446","Query?string":"","partner":"http://192.168.11.207:8080/","AgentVersion":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"}

{"clientip":"192.168.11.5","ClientUser":"-","authenticated":"-","AccessTime":"[27/Feb/2023:06:52:55 +0000]","method":"GET / HTTP/1.1","status":"200","SendBytes":"11230","Query?string":"","partner":"-","AgentVersion":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"}

{"clientip":"192.168.11.5","ClientUser":"-","authenticated":"-","AccessTime":"[27/Feb/2023:06:53:03 +0000]","method":"GET /404 HTTP/1.1","status":"404","SendBytes":"719","Query?string":"","partner":"-","AgentVersion":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"}

{"clientip":"192.168.11.5","ClientUser":"-","authenticated":"-","AccessTime":"[27/Feb/2023:06:53:07 +0000]","method":"GET /manager/status HTTP/1.1","status":"403","SendBytes":"3446","Query?string":"","partner":"http://192.168.11.207:8080/","AgentVersion":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"}

{"clientip":"192.168.11.5","ClientUser":"-","authenticated":"-","AccessTime":"[27/Feb/2023:06:53:13 +0000]","method":"GET /host-manager/html HTTP/1.1","status":"403","SendBytes":"3022","Query?string":"","partner":"http://192.168.11.207:8080/","AgentVersion":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"}

-----------------------

#Pretty-print one entry to verify that it is valid JSON

{

"clientip": "192.168.11.5",

"ClientUser": "-",

"authenticated": "-",

"AccessTime": "[27/Feb/2023:06:52:55 +0000]",

"method": "GET / HTTP/1.1",

"status": "200",

"SendBytes": "11230",

"Query?string": "",

"partner": "-",

"AgentVersion": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/109.0.0.0 Safari/537.36"

}

-----------------------
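Note that every value in this pattern is quoted, so numbers and the timestamp arrive as strings. As a minimal sketch of what downstream processing has to do, the snippet below converts `AccessTime` and `SendBytes` from an abbreviated copy of one sample entry. It assumes the default C/English locale for the month abbreviation.

```python
import json
from datetime import datetime

# Abbreviated copy of one sample entry from the access log above.
line = (
    '{"clientip":"192.168.11.5","AccessTime":"[27/Feb/2023:06:52:55 +0000]",'
    '"method":"GET / HTTP/1.1","status":"200","SendBytes":"11230"}'
)
event = json.loads(line)

# %t is written in Common Log Format, wrapped in square brackets.
when = datetime.strptime(event["AccessTime"], "[%d/%b/%Y:%H:%M:%S %z]")

# Quoted numbers are strings; int() would fail on "-", which %b writes when no bytes were sent.
sent = int(event["SendBytes"])

print(when.isoformat(), sent)
```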

- Modify the Filebeat configuration file

[root@web02 ~]#cp /etc/filebeat/filebeat.yml /etc/filebeat/filebeat.yml.bk

[root@web02 ~]#vim /etc/filebeat/filebeat.yml

[root@web02 ~]#cat /etc/filebeat/filebeat.yml

filebeat.inputs:

- type: log

  enabled: true #enable this input

  paths:

    - /usr/local/tomcat/logs/localhost_access_log.* #log files to collect

  json.keys_under_root: true #default false, which places the decoded JSON fields under a "json" key; true places them at the top level of the event

  json.overwrite_keys: true #when true, values from the decoded JSON overwrite conflicting fields that Filebeat adds itself

  tags: ["tomcat-access"] #tag used to classify the events

- type: log

  enabled: true #enable this input

  paths:

    - /usr/local/tomcat/logs/catalina.* #log files to collect

  tags: ["tomcat-error"] #tag used to classify the events

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

  hosts: ["192.168.11.200:9200","192.168.11.201:9200","192.168.11.202:9200"] #address and port of any node in the ES cluster; listing several provides failover

  indices:

    - index: "tomcat-access-%{[agent.version]}-%{+yyyy.MM.dd}"

      when.contains:

        tags: "tomcat-access" #events tagged tomcat-access are written to the tomcat-access index

    - index: "tomcat-error-%{[agent.version]}-%{+yyyy.MM.dd}"

      when.contains:

        tags: "tomcat-error" #events tagged tomcat-error are written to the tomcat-error index

setup.ilm.enabled: false #disable index lifecycle management (ILM); while it is enabled (the default), index names can only be filebeat-*

setup.template.name: "tomcat" #template name; must be set when using custom index names, otherwise Filebeat fails to start

setup.template.pattern: "tomcat-*" #index pattern the template applies to; must be set when using custom index names, otherwise Filebeat fails to start

[root@web02 ~]#filebeat test config -c filebeat.yml

Config OK

[root@web02 ~]#systemctl restart filebeat

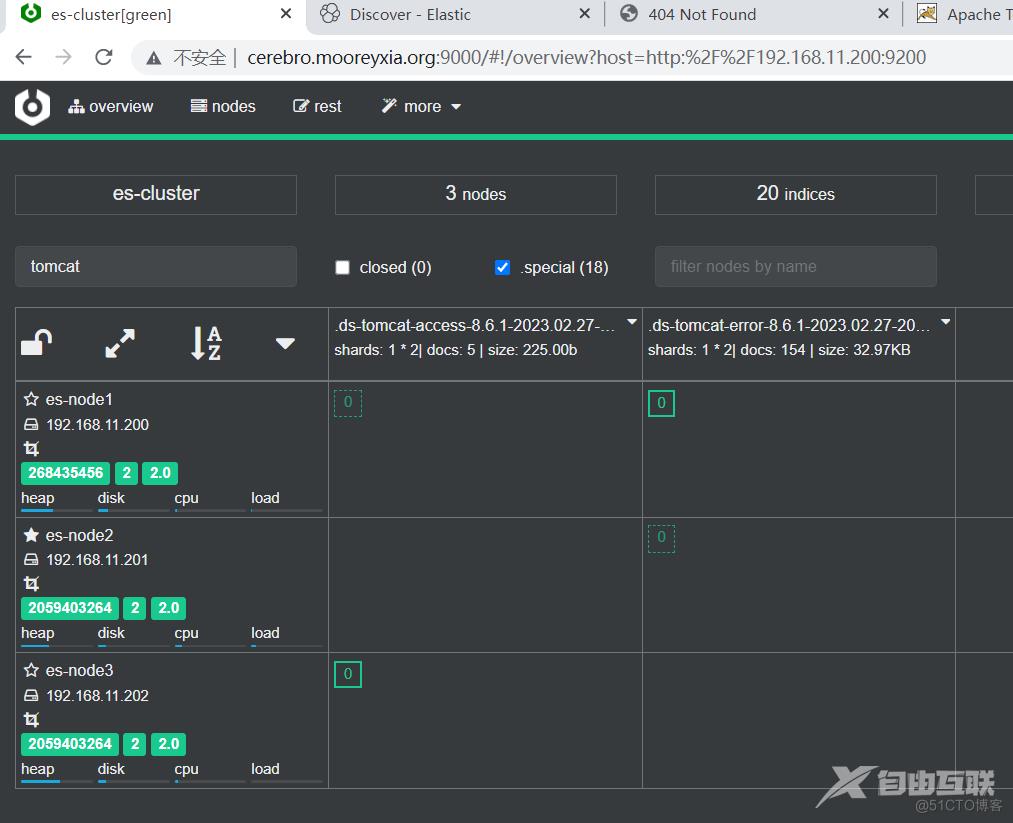

- 插件查看索引

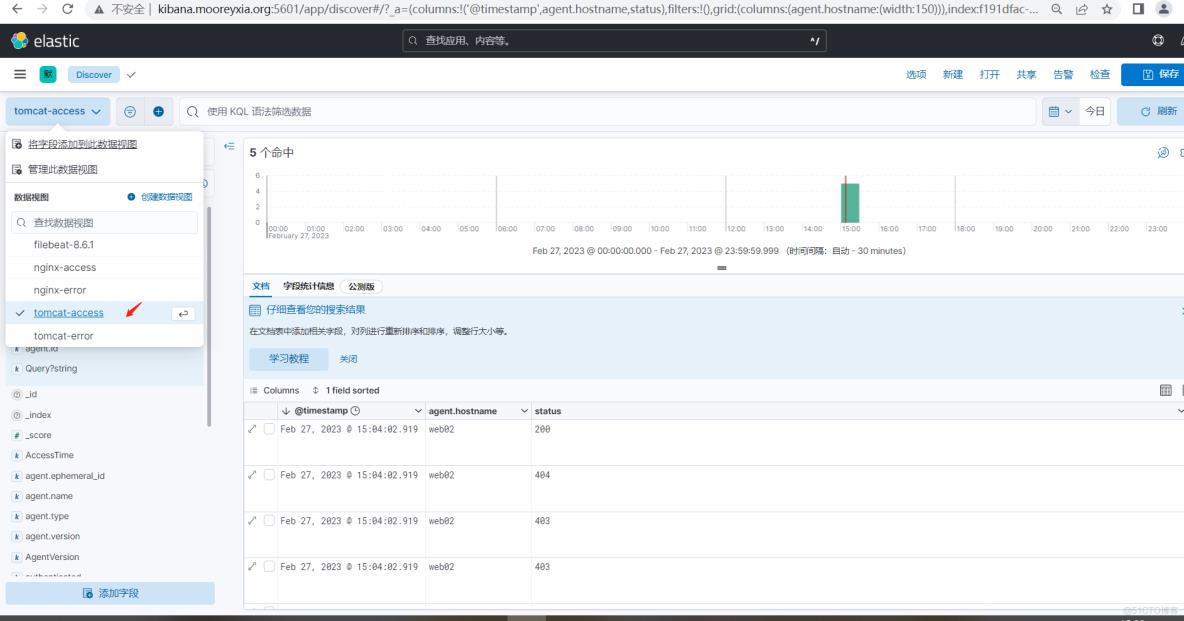

- 在 Kibana 验证日志数据

案例: 利用 Filebeat 收集 Tomat 的多行错误日志到Elasticsearch

#Tomcat 是 Java 应用,当只出现一个错误时,会显示很多行的错误日志

#更改配置文件生成多行错误

[root@web02 ~]#vim /usr/local/tomcat/conf/server.xml

[root@web02 ~]#systemctl restart tomcat;tail /usr/local/tomcat/logs/catalina.out

at org.apache.catalina.startup.Catalina.parseServerXml(Catalina.java:617)

at org.apache.catalina.startup.Catalina.load(Catalina.java:709)

at org.apache.catalina.startup.Catalina.load(Catalina.java:746)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.catalina.startup.Bootstrap.load(Bootstrap.java:307)

at org.apache.catalina.startup.Bootstrap.main(Bootstrap.java:477)

27-Feb-2023 07:16:42.959 SEVERE [main] org.apache.catalina.startup.Catalina.start Cannot start server, server instance is not configured

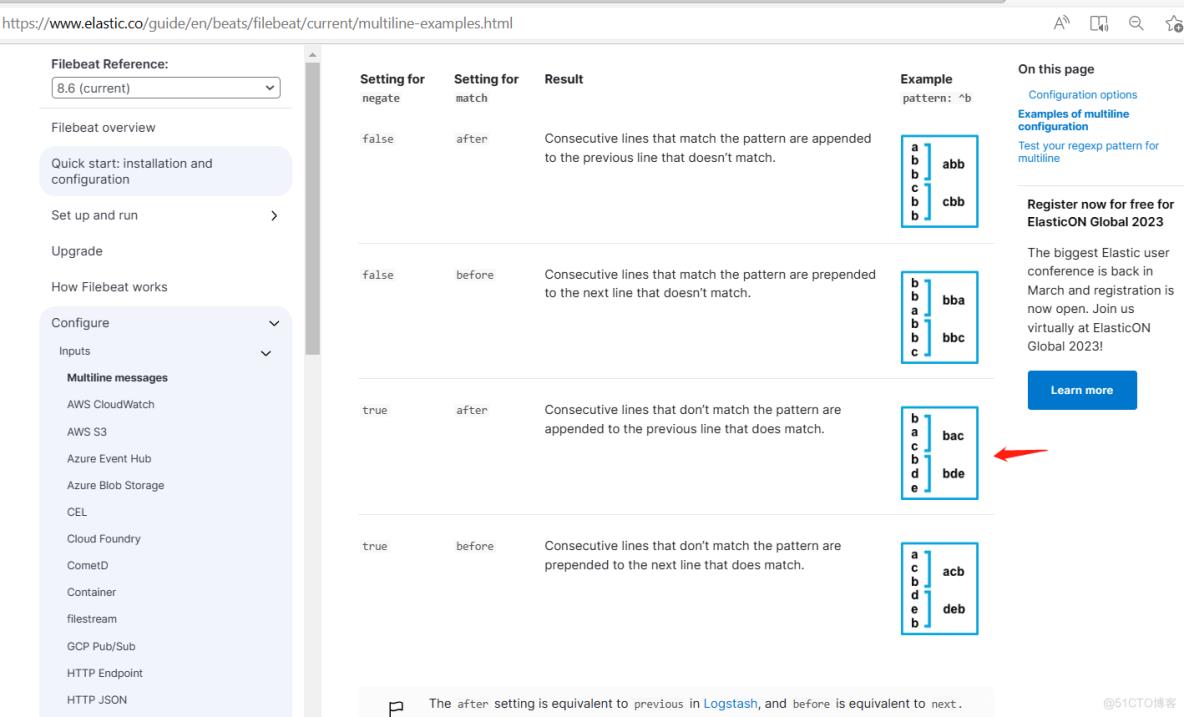

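- These lines all belong to the log of a single event, but by default every line is shipped as a separate log entry, so one error with many lines of output produces many separate ES documents. Merging the lines that belong to one error into a single ES document solves this.

Official documentation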

https://www.elastic.co/guide/en/beats/filebeat/current/multiline-examples.html
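The merging idea can be sketched in a few lines of Python: a line that starts with a two-digit day and a hyphen opens a new event, and every following line that does not match is appended to it. This is only a simplified model of the `negate: true` plus `match: after` behaviour described in the documentation, not Filebeat's own code; `merge_multiline` is a hypothetical helper and the sample lines are abbreviated.

```python
import re

def merge_multiline(lines, pattern=r"^[0-3][0-9]-", max_lines=5000):
    """Group lines into events: a matching line starts a new event,
    non-matching lines are appended to the current one."""
    start = re.compile(pattern)
    events, current = [], []
    for line in lines:
        if start.search(line) and current:
            events.append("\n".join(current))
            current = []
        # Lines beyond max_lines are dropped from the event instead of starting a new one.
        if len(current) < max_lines:
            current.append(line)
    if current:
        events.append("\n".join(current))
    return events

# Abbreviated sample in the style of catalina.out.
lines = [
    "27-Feb-2023 07:38:17.208 WARNING [main] Unable to load server configuration",
    "org.xml.sax.SAXParseException; lineNumber: 25; columnNumber: 7",
    "at org.apache.catalina.startup.Catalina.load(Catalina.java:709)",
    "27-Feb-2023 07:38:17.209 SEVERE [main] Cannot start server",
]
events = merge_multiline(lines)
print(len(events))
```

With this input the warning and its two continuation lines end up in one event, and the SEVERE line forms a second one.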

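- Building on the previous experiment, modify the Filebeat configuration file

[root@web02 ~]#vim /etc/filebeat/filebeat.yml

[root@web02 ~]#cat /etc/filebeat/filebeat.yml

filebeat.inputs:

- type: log

  enabled: true #enable this input

  paths:

    - /usr/local/tomcat/logs/localhost_access_log.* #log files to collect

  json.keys_under_root: true #default false, which places the decoded JSON fields under a "json" key; true places them at the top level of the event

  json.overwrite_keys: false #when true, values from the decoded JSON overwrite conflicting fields that Filebeat adds itself; false keeps Filebeat's own fields

  tags: ["tomcat-access"] #tag used to classify the events

- type: log

  enabled: true #enable this input

  paths:

    - /usr/local/tomcat/logs/catalina.* #log files to collect

  tags: ["tomcat-error"] #tag used to classify the events

  multiline.type: pattern #default value, can be omitted

  multiline.pattern: '^[0-3][0-9]-' #regular expression matching lines that start with a two-digit day and a hyphen; '^\d{2}' also works

  multiline.negate: true #true: lines that do NOT match the pattern are treated as continuation lines

  multiline.match: after #continuation lines are appended after the preceding line that matched

  multiline.max_lines: 5000 #the default merges at most 500 lines; raise the limit to 5000

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

  hosts: ["192.168.11.200:9200","192.168.11.201:9200","192.168.11.202:9200"] #address and port of any node in the ES cluster; listing several provides failover

  indices:

    - index: "tomcat-access-%{[agent.version]}-%{+yyyy.MM.dd}"

      when.contains:

        tags: "tomcat-access" #events tagged tomcat-access are written to the tomcat-access index

    - index: "tomcat-error-%{[agent.version]}-%{+yyyy.MM.dd}"

      when.contains:

        tags: "tomcat-error" #events tagged tomcat-error are written to the tomcat-error index

setup.ilm.enabled: false #disable index lifecycle management (ILM); while it is enabled (the default), index names can only be filebeat-*

setup.template.name: "tomcat" #template name; must be set when using custom index names, otherwise Filebeat fails to start

setup.template.pattern: "tomcat-*" #index pattern the template applies to; must be set when using custom index names, otherwise Filebeat fails to start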

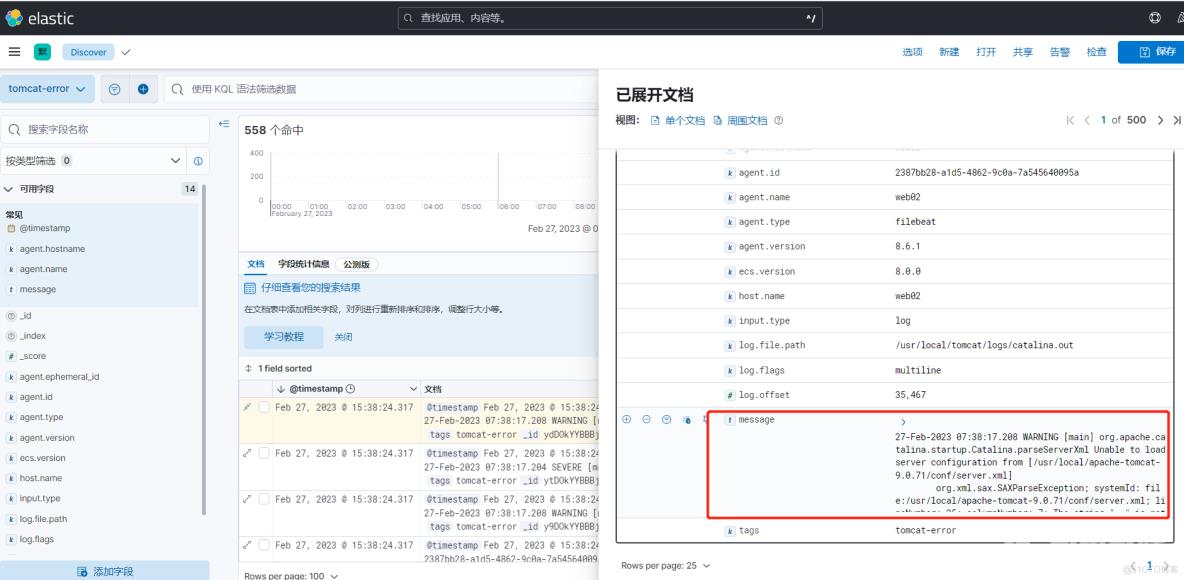

- View the newly collected error logs in Kibana

#Test: trigger the Tomcat error again

[root@web02 ~]#tail -f /usr/local/tomcat/logs/catalina.out

...

27-Feb-2023 07:38:17.208 WARNING [main] org.apache.catalina.startup.Catalina.parseServerXml Unable to load server configuration from [/usr/local/apache-tomcat-9.0.71/conf/server.xml]

org.xml.sax.SAXParseException; systemId: file:/usr/local/apache-tomcat-9.0.71/conf/server.xml; lineNumber: 25; columnNumber: 7; The string "--" is not permitted within comments.

at com.sun.org.apache.xerces.internal.parsers.AbstractSAXParser.parse(AbstractSAXParser.java:1243)

at com.sun.org.apache.xerces.internal.jaxp.SAXParserImpl$JAXPSAXParser.parse(SAXParserImpl.java:644)

at org.apache.tomcat.util.digester.Digester.parse(Digester.java:1535)

at org.apache.catalina.startup.Catalina.parseServerXml(Catalina.java:617)

at org.apache.catalina.startup.Catalina.load(Catalina.java:709)

at org.apache.catalina.startup.Catalina.load(Catalina.java:746)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.catalina.startup.Bootstrap.load(Bootstrap.java:307)

at org.apache.catalina.startup.Bootstrap.main(Bootstrap.java:477)

27-Feb-2023 07:38:17.209 SEVERE [main] org.apache.catalina.startup.Catalina.start Cannot start server, server instance is not configured

...

Installing Logstash on Ubuntu

- Install Logstash from the package

Note: the Logstash version should match the Elasticsearch version, otherwise errors may occur

#Prepare the Ubuntu environment

[root@logstash ~]#apt -y install openjdk-17-jdk

[root@logstash ~]#java -version

openjdk version "17.0.5" 2022-10-18

OpenJDK Runtime Environment (build 17.0.5+8-Ubuntu-2ubuntu122.04)

OpenJDK 64-Bit Server VM (build 17.0.5+8-Ubuntu-2ubuntu122.04, mixed mode, sharing)

#Install Logstash

[root@logstash ~]#wget https://mirrors.tuna.tsinghua.edu.cn/elasticstack/8.x/apt/pool/main/l/logstash/logstash-8.6.1-amd64.deb && dpkg -i logstash-8.6.1-amd64.deb

--2023-02-27 09:04:33-- https://mirrors.tuna.tsinghua.edu.cn/elasticstack/8.x/apt/pool/main/l/logstash/logstash-8.6.1-amd64.deb

Resolving mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 2402:f000:1:400::2, 101.6.15.130

Connecting to mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|2402:f000:1:400::2|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 341638094 (326M) [application/octet-stream]

Saving to: ‘logstash-8.6.1-amd64.deb’

logstash-8.6.1-amd64.deb 100%[=================================================================================================>] 325.81M 66.9MB/s in 5.1s

2023-02-27 09:04:39 (63.6 MB/s) - ‘logstash-8.6.1-amd64.deb’ saved [341638094/341638094]

Selecting previously unselected package logstash.

(Reading database ... 87112 files and directories currently installed.)

Preparing to unpack logstash-8.6.1-amd64.deb ...

Unpacking logstash (1:8.6.1-1) ...

Setting up logstash (1:8.6.1-1) ...

#Enable at boot and start the service now

[root@logstash ~]#systemctl enable --now logstash.service

Created symlink /etc/systemd/system/multi-user.target.wants/logstash.service → /lib/systemd/system/logstash.service.

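#The package creates a dedicated logstash user and starts the service as that user, which can cause permission problems, for example when collecting data from the local machine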

[root@logstash ~]#id logstash

uid=999(logstash) gid=999(logstash) groups=999(logstash)

[root@logstash ~]#ps aux|grep logstash

logstash 4131 178 19.4 3642804 392732 ? SNsl 09:07 0:25 /usr/share/logstash/jdk/bin/java -Xms1g -Xmx1g -Djava.awt.headless=true -Dfile.encoding=UTF-8 ... org.logstash.Logstash --path.settings /etc/logstash

root 4182 0.0 0.0 4020 1904 pts/0 S+ 09:07 0:00 grep --color=auto logstash

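- Modify the Logstash configuration

[root@logstash ~]#vim /etc/logstash/logstash.yml

[root@logstash ~]#grep -Ev '#|^$' /etc/logstash/logstash.yml

node.name: logstash-node01

pipeline.workers: 2

pipeline.batch.size: 1000 #number of events a worker reads from the inputs per batch; can be tuned to match ES performance

pipeline.batch.delay: 5 #maximum time, in milliseconds, to wait for further events before dispatching an undersized batch; can be tuned to match ES performance

path.data: /var/lib/logstash #default value

path.logs: /var/log/logstash #default value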
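When choosing these values it helps to keep in mind that the number of events held in memory at once grows with both settings. The arithmetic below is only a rough sizing aid under that assumption, using the values shown above.

```python
# Values from the logstash.yml shown above.
pipeline_workers = 2
pipeline_batch_size = 1000

# Each worker collects up to one batch before running filters and outputs,
# so the product is roughly the upper bound on in-flight events.
max_inflight_events = pipeline_workers * pipeline_batch_size
print(max_inflight_events)  # 2000
```

Larger batches are usually more efficient per event but need more JVM heap.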

#Memory tuning (JVM heap size)

[root@logstash ~]#vim /etc/logstash/jvm.options

-Xms1g

-Xmx1g

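#Logstash runs as the logstash user by default; if it needs to collect logs from the local machine there may be permission problems, in which case the service can be changed to run as root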

[root@logstash ~]#vim /lib/systemd/system/logstash.service

[Service]

User=root

Group=root

[root@logstash ~]#systemctl daemon-reload;systemctl restart logstash

#Confirm which user the process runs as

[root@logstash ~]#ps aux|grep logstash

root 6068 178 17.3 3634616 351244 ? SNsl 09:17 0:21 /usr/share/logstash/jdk/bin/java -Xms1g -...

root 6119 0.0 0.0 4020 1992 pts/0 S+ 09:17 0:00 grep --color=auto logstash

#Add the Logstash binaries to PATH

[root@logstash ~]#vim /etc/profile.d/logstash.sh

[root@logstash ~]#cat /etc/profile.d/logstash.sh

#!/bin/bash

PATH=/usr/share/logstash/bin/:$PATH

[root@logstash ~]#. /etc/profile.d/logstash.sh

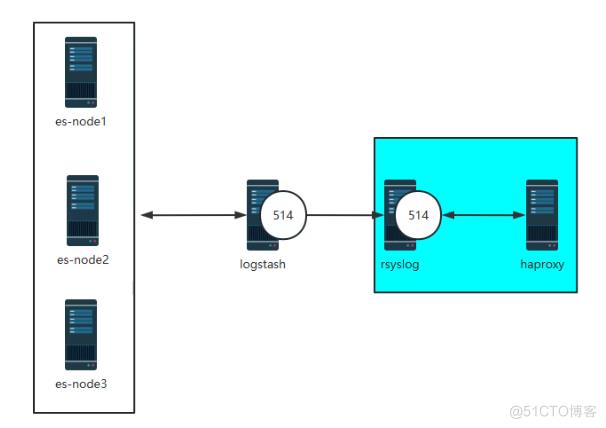

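Case: Collecting Haproxy logs with Rsyslog, forwarding them to Logstash, and writing them to the Elasticsearch cluster

- Install rsyslog (steps omitted)

- Install Haproxy and configure its logging

[root@web02 ~]#apt -y install haproxy

[root@web02 ~]#vim /etc/haproxy/haproxy.cfg

global

#log /dev/log local0 #by default logs are written to /var/log/haproxy.log; comment out this line

#log /dev/log local1 notice #comment out this line

log 127.0.0.1 local6 #add this line: send logs to UDP port 514 on the local host using the local6 facility

#Append the following section to the end of the file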

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth admin:123456

[root@web02 ~]#systemctl restart haproxy.service

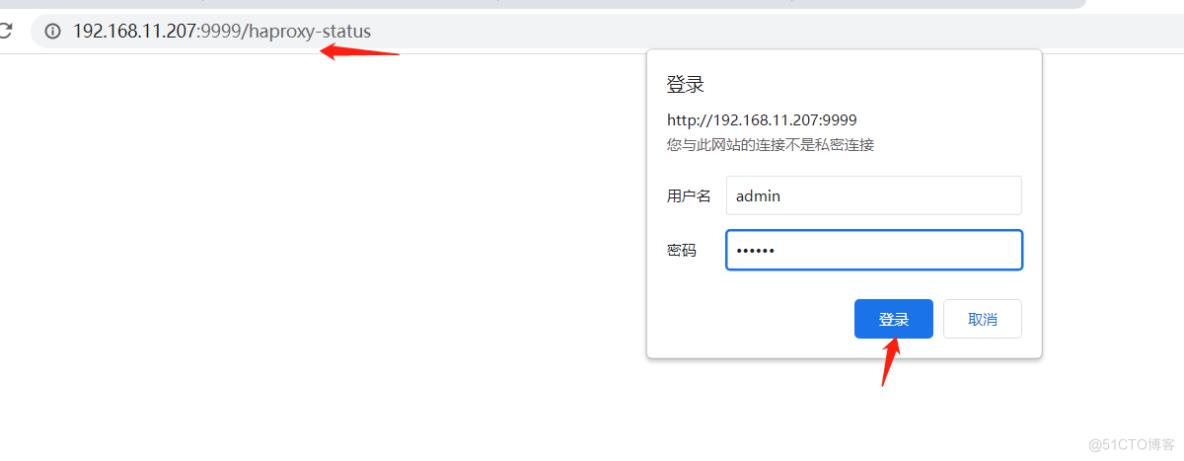

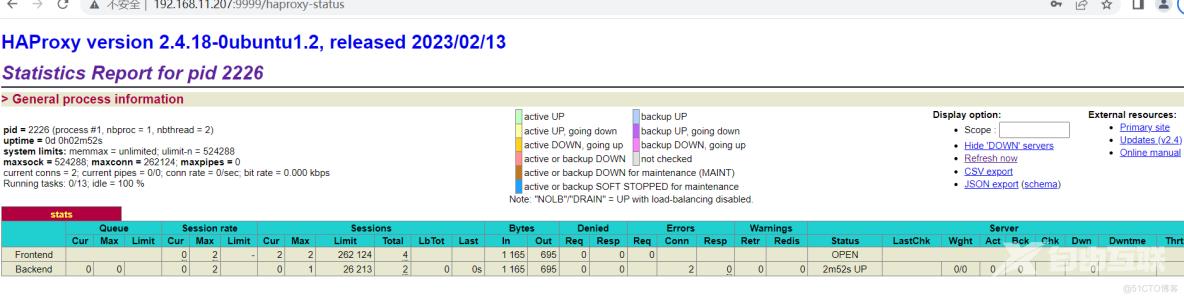

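- Open the haproxy status page in a browser to generate access logs

- Edit the Rsyslog configuration file

#Configure the rsyslog service on the haproxy host

[root@web02 ~]#vim /etc/rsyslog.conf

#Uncomment the following two lines so that rsyslog receives the haproxy logs over UDP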

module(load="imudp")

input(type="imudp" port="514")

......

#Back up the existing haproxy snippet for rsyslog

[root@web02 ~]#mv /etc/rsyslog.d/49-haproxy.conf /etc/rsyslog.d/49-haproxy.conf.bk

#Write a rule that forwards the haproxy logs on to the Logstash server over UDP (@) or TCP (@@)

[root@web02 ~]#vim /etc/rsyslog.d/haproxy.conf

[root@web02 ~]#cat /etc/rsyslog.d/haproxy.conf

local6.* @192.168.11.204:514

#Restart haproxy and rsyslog

[root@web02 ~]#systemctl restart haproxy.service rsyslog
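To test the forwarding rule without waiting for real haproxy traffic, a hand-made syslog datagram can be sent to the local rsyslog. The sketch below builds a minimal BSD-syslog style message for the local6 facility (code 22) at informational severity (code 6). The tag `haproxy-test` and both helper names are hypothetical, and whether a listener accepts such a bare message is an assumption.

```python
import socket

LOCAL6 = 22   # syslog facility code for local6
INFO = 6      # syslog severity code for informational

def build_syslog_message(text, facility=LOCAL6, severity=INFO, tag="haproxy-test"):
    """Build a minimal datagram of the form '<PRI>tag: text'."""
    pri = facility * 8 + severity
    return f"<{pri}>{tag}: {text}".encode("utf-8")

def send_test_message(host, port=514, text="hello from web02"):
    """Send one UDP datagram to a syslog listener."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(build_syslog_message(text), (host, port))

print(build_syslog_message("hello"))
```

Calling `send_test_message("127.0.0.1")` on the haproxy host exercises the same path as haproxy itself: local rsyslog on UDP 514, then the `local6.*` forwarding rule.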

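- Configure Logstash

- Configure Logstash to listen on a local port as its input, receiving the haproxy logs forwarded by the rsyslog service

- Note: the IP and port that Logstash listens on must match the IP and port that rsyslog sends to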

[root@logstash ~]#vim /etc/logstash/conf.d/haproxy-syslog-to-es.conf

[root@logstash ~]#cat /etc/logstash/conf.d/haproxy-syslog-to-es.conf

input {

syslog {

host => "0.0.0.0"

port => "514" #UDP/TCP port to listen on; note that unprivileged users cannot bind to this port

type => "haproxy"

}

}

output {

if [type] == 'haproxy' {

stdout {

codec => "rubydebug" #default value, can be omitted

}

elasticsearch {

hosts => ["192.168.11.200:9200","192.168.11.201:9200","192.168.11.202:9200"]

index => "syslog-haproxy-%{+YYYY.MM.dd}"

}

}

}

#Run Logstash as root so that it can bind to port 514

[root@logstash ~]#vim /lib/systemd/system/logstash.service

User=root

Group=root

- Syntax check

#If logstash is not on PATH, run /usr/share/logstash/bin/logstash instead

[root@logstash ~]#logstash -f /etc/logstash/conf.d/haproxy-syslog-to-es.conf -t

Using bundled JDK: /usr/share/logstash/jdk

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2023-02-27 12:09:18.410 [main] runner - NOTICE: Running Logstash as superuser is not recommended and won't be allowed in the future. Set 'allow_superuser' to 'false' to avoid startup errors in future releases.

[INFO ] 2023-02-27 12:09:18.419 [main] runner - Starting Logstash {"logstash.version"=>"8.6.1", "jruby.version"=>"jruby 9.3.8.0 (2.6.8) 2022-09-13 98d69c9461 OpenJDK 64-Bit Server VM 17.0.5+8 on 17.0.5+8 +indy +jit [x86_64-linux]"}

[INFO ] 2023-02-27 12:09:18.421 [main] runner - JVM bootstrap flags: [-Xms1g, -Xmx1g, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djruby.compile.invokedynamic=true, -XX:+HeapDumpOnOutOfMemoryError, -Djava.security.egd=file:/dev/urandom, -Dlog4j2.isThreadContextMapInheritable=true, -Djruby.regexp.interruptible=true, -Djdk.io.File.enableADS=true, --add-exports=jdk.compiler/com.sun.tools.javac.api=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.file=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.parser=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.tree=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.util=ALL-UNNAMED, --add-opens=java.base/java.security=ALL-UNNAMED, --add-opens=java.base/java.io=ALL-UNNAMED, --add-opens=java.base/java.nio.channels=ALL-UNNAMED, --add-opens=java.base/sun.nio.ch=ALL-UNNAMED, --add-opens=java.management/sun.management=ALL-UNNAMED]

[WARN ] 2023-02-27 12:09:18.607 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[INFO ] 2023-02-27 12:09:19.164 [LogStash::Runner] Reflections - Reflections took 151 ms to scan 1 urls, producing 127 keys and 444 values

[INFO ] 2023-02-27 12:09:19.714 [LogStash::Runner] javapipeline - Pipeline `main` is configured with `pipeline.ecs_compatibility: v8` setting. All plugins in this pipeline will default to `ecs_compatibility => v8` unless explicitly configured otherwise.

Configuration OK

[INFO ] 2023-02-27 12:09:19.715 [LogStash::Runner] runner - Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

- Start Logstash and verify the port

#Start Logstash

[root@logstash ~]#logstash -f /etc/logstash/conf.d/haproxy-syslog-to-es.conf

Using bundled JDK: /usr/share/logstash/jdk

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[WARN ] 2023-02-27 11:50:06.404 [main] runner - NOTICE: Running Logstash as superuser is not recommended and won't be allowed in the future. Set 'allow_superuser' to 'false' to avoid startup errors in future releases.

[INFO ] 2023-02-27 11:50:06.413 [main] runner - Starting Logstash {"logstash.version"=>"8.6.1", "jruby.version"=>"jruby 9.3.8.0 (2.6.8) 2022-09-13 98d69c9461 OpenJDK 64-Bit Server VM 17.0.5+8 on 17.0.5+8 +indy +jit [x86_64-linux]"}

[INFO ] 2023-02-27 11:50:06.415 [main] runner - JVM bootstrap flags: [-Xms1g, -Xmx1g, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djruby.compile.invokedynamic=true, -XX:+HeapDumpOnOutOfMemoryError, -Djava.security.egd=file:/dev/urandom, -Dlog4j2.isThreadContextMapInheritable=true, -Djruby.regexp.interruptible=true, -Djdk.io.File.enableADS=true, --add-exports=jdk.compiler/com.sun.tools.javac.api=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.file=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.parser=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.tree=ALL-UNNAMED, --add-exports=jdk.compiler/com.sun.tools.javac.util=ALL-UNNAMED, --add-opens=java.base/java.security=ALL-UNNAMED, --add-opens=java.base/java.io=ALL-UNNAMED, --add-opens=java.base/java.nio.channels=ALL-UNNAMED, --add-opens=java.base/sun.nio.ch=ALL-UNNAMED, --add-opens=java.management/sun.management=ALL-UNNAMED]

[WARN ] 2023-02-27 11:50:06.598 [LogStash::Runner] multilocal - Ignoring the 'pipelines.yml' file because modules or command line options are specified

[FATAL] 2023-02-27 11:50:06.609 [LogStash::Runner] runner - Logstash could not be started because there is already another instance using the configured data directory. If you wish to run multiple instances, you must change the "path.data" setting.

[FATAL] 2023-02-27 11:50:06.612 [LogStash::Runner] Logstash - Logstash stopped processing because of an error: (SystemExit) exit

org.jruby.exceptions.SystemExit: (SystemExit) exit

at org.jruby.RubyKernel.exit(org/jruby/RubyKernel.java:790) ~[jruby.jar:?]

at org.jruby.RubyKernel.exit(org/jruby/RubyKernel.java:753) ~[jruby.jar:?]

at usr.share.logstash.lib.bootstrap.environment.<main>(/usr/share/logstash/lib/bootstrap/environment.rb:91) ~[?:?]

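#The FATAL message above indicates that another Logstash instance (most likely the logstash service started earlier) is already using the data directory; to run in the foreground, stop the service first or pass a different --path.data

#Verify the port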

[root@logstash ~]#ss -ntlup|grep 514

udp UNCONN 0 0 0.0.0.0:514 0.0.0.0:* users:(("java",pid=35972,fd=88))

tcp LISTEN 0 50 *:514 *:* users:(("java",pid=35972,fd=85))
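The same check can be made from the haproxy host to confirm that the port is reachable across the network, not just open locally. This is a minimal sketch with a hypothetical helper; it only covers TCP, because a UDP listener cannot be probed by simply connecting.

```python
import socket

def tcp_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `tcp_port_open("192.168.11.204", 514)` run on web02 should return `True` once Logstash is listening and no firewall blocks the port.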

- Access haproxy from the web and verify the data

- Refresh the page to generate log entries

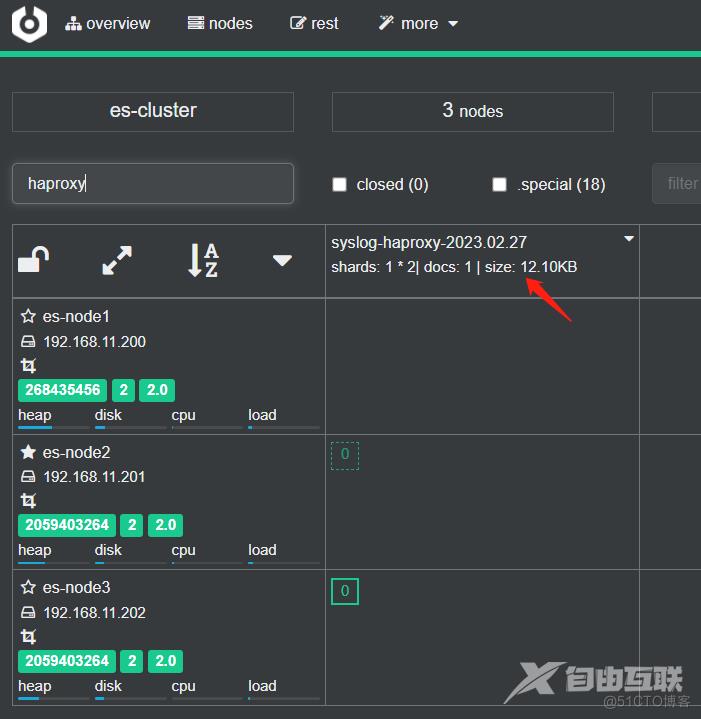

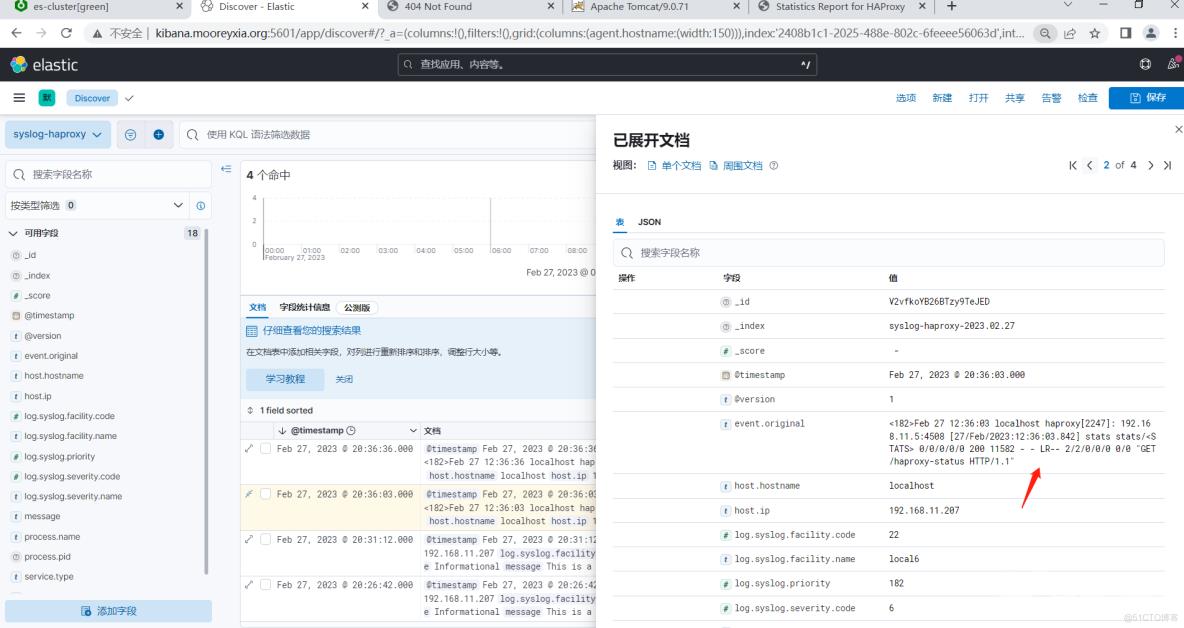

- View the generated index

I'm moore. Let's all keep at it!