
Official site: spring-hadoop

https://spring.io/projects/spring-hadoop

Add the dependency

    <dependencies>
        <dependency>
            <groupId>org.springframework.data</groupId>
            <artifactId>spring-data-hadoop</artifactId>
            <version>2.5.0.RELEASE</version>
        </dependency>
    </dependencies>

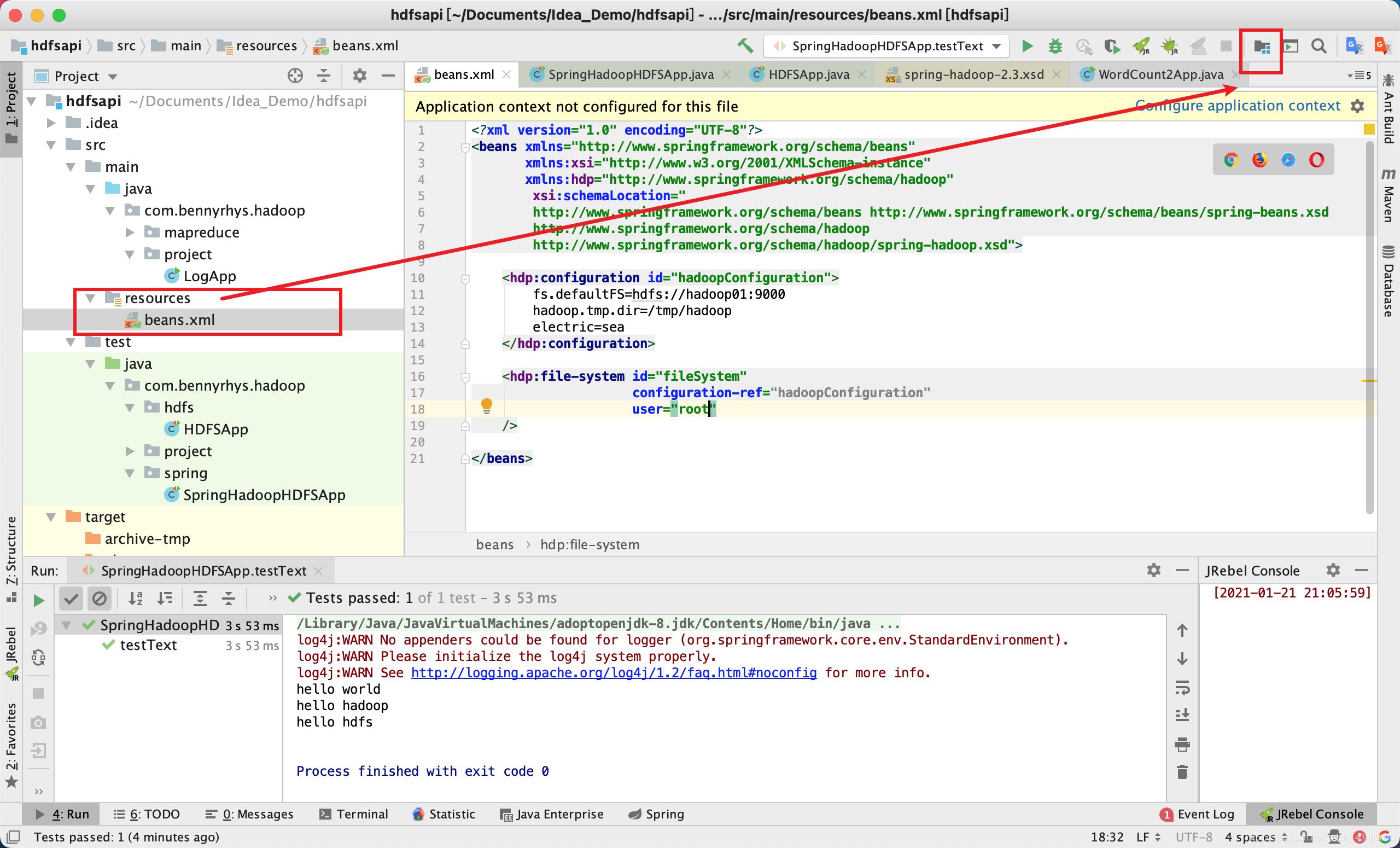

Configuring Spring Hadoop and viewing HDFS files

Create the resource file beans.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <beans xmlns="http://www.springframework.org/schema/beans"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xmlns:hdp="http://www.springframework.org/schema/hadoop"
           xsi:schemaLocation="
               http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
               http://www.springframework.org/schema/hadoop http://www.springframework.org/schema/hadoop/spring-hadoop.xsd">

        <hdp:configuration id="hadoopConfiguration">
            fs.defaultFS=hdfs://hadoop01:9000
            hadoop.tmp.dir=/tmp/hadoop
            electric=sea
        </hdp:configuration>

        <hdp:file-system id="fileSystem"
                         configuration-ref="hadoopConfiguration"
                         user="root"/>

    </beans>
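The body of the hdp:configuration element is a list of key=value lines. As a rough illustration only, and assuming the body is read in java.util.Properties format (which is how the Spring Hadoop reference describes it, as far as I can tell), the sketch below shows which keys and values those three lines produce. The class name ConfigurationBodySketch and its parse method are hypothetical and are not part of Spring Hadoop.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper for illustration; not part of Spring Hadoop.
class ConfigurationBodySketch {

    // Reads a key=value body the same way java.util.Properties does.
    static Properties parse(String body) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(body));
        } catch (IOException e) {
            // A StringReader should not fail, but load() declares IOException.
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        String body = String.join("\n",
                "fs.defaultFS=hdfs://hadoop01:9000",
                "hadoop.tmp.dir=/tmp/hadoop",
                "electric=sea");
        Properties props = parse(body);
        // Print every entry that would end up in the configuration.
        for (String key : props.stringPropertyNames()) {
            System.out.println(key + " -> " + props.getProperty(key));
        }
    }
}
```

Only fs.defaultFS and hadoop.tmp.dir are Hadoop settings here; electric=sea is an arbitrary custom entry and can be dropped.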

Test class

    package com.bennyrhys.hadoop.spring;

    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;
    import org.springframework.context.ApplicationContext;
    import org.springframework.context.support.ClassPathXmlApplicationContext;

    /**
     * @Author bennyrhys
     * @Date 1/21/21 2:35 PM
     */
    public class SpringHadoopHDFSApp {

        private ApplicationContext ctx;

        // Apache Hadoop's FileSystem, created by the hdp:file-system bean
        private FileSystem fileSystem;

        /**
         * Create a directory on HDFS.
         */
        @Test
        public void testMkdirs() throws Exception {
            fileSystem.mkdirs(new Path("/springhdfs"));
        }

        /**
         * Print the contents of an HDFS file.
         */
        @Test
        public void testText() throws Exception {
            // try-with-resources closes the stream even if the copy fails
            try (FSDataInputStream in = fileSystem.open(new Path("/springhdfs/hello.txt"))) {
                IOUtils.copyBytes(in, System.out, 1024);
            }
        }

        @Before
        public void setUp() {
            ctx = new ClassPathXmlApplicationContext("beans.xml");
            fileSystem = ctx.getBean("fileSystem", FileSystem.class);
        }

        @After
        public void tearDown() throws Exception {
            // Release the HDFS connection first, then drop the context reference
            fileSystem.close();
            ctx = null;
        }
    }
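The test above prints the file with IOUtils.copyBytes(in, System.out, 1024), where 1024 is the buffer size in bytes. To make that call less opaque, here is a minimal sketch of a buffered stream copy that uses only the JDK. It is not Hadoop's implementation: the class CopyBytesSketch and its copy method are hypothetical, and unlike the Hadoop call this version returns the number of bytes copied.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in that mimics a buffered copy; not Hadoop's IOUtils.
class CopyBytesSketch {

    // Copies everything from in to out using a buffer of bufferSize bytes.
    // Neither stream is closed here; the caller remains responsible for that.
    static long copy(InputStream in, OutputStream out, int bufferSize) {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int read;
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read);
                total += read;
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) {
        byte[] data = "hello from a fake HDFS file\n".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long copied = copy(new ByteArrayInputStream(data), sink, 1024);
        System.out.print(new String(sink.toByteArray(), StandardCharsets.UTF_8));
        System.out.println("bytes copied: " + copied);
    }
}
```

In the real test the input would be the FSDataInputStream returned by fileSystem.open and the output would be System.out.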

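Spring Hadoop configuration file in detail

Extracting variables

Replace the header of beans.xml with one that also declares the context namespace, so that context elements can be used in the file.

Reference variables with ${}

Create the configuration file application.properties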

spring.hadoop.fsUri=hdfs://hadoop01:9000

Load the properties file through the context namespace and reference the variable

beans.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <beans xmlns="http://www.springframework.org/schema/beans"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xmlns:hdp="http://www.springframework.org/schema/hadoop"
           xmlns:context="http://www.springframework.org/schema/context"
           xsi:schemaLocation="
               http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
               http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd
               http://www.springframework.org/schema/hadoop http://www.springframework.org/schema/hadoop/spring-hadoop.xsd">

        <hdp:configuration id="hadoopConfiguration">
            fs.defaultFS=${spring.hadoop.fsUri}
            hadoop.tmp.dir=/tmp/hadoop
            electric=sea
        </hdp:configuration>

        <context:property-placeholder location="application.properties"/>

        <hdp:file-system id="fileSystem"
                         configuration-ref="hadoopConfiguration"
                         user="root"/>

    </beans>
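To show what the ${spring.hadoop.fsUri} reference amounts to, the sketch below substitutes ${key} tokens from a java.util.Properties object. It is a simplified illustration of the idea, not Spring's placeholder resolver: the class PlaceholderSketch and its resolve method are hypothetical, and features such as default values or nested placeholders are left out.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, simplified placeholder substitution; not Spring's implementation.
class PlaceholderSketch {

    // Matches ${some.key} and captures the key between the braces.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    // Replaces every ${key} in text with the matching property value.
    // An unknown key is treated as an error in this sketch.
    static String resolve(String text, Properties props) {
        Matcher matcher = PLACEHOLDER.matcher(text);
        StringBuffer result = new StringBuffer();
        while (matcher.find()) {
            String key = matcher.group(1);
            String value = props.getProperty(key);
            if (value == null) {
                throw new IllegalArgumentException("Could not resolve placeholder: " + key);
            }
            // quoteReplacement keeps '$' and '\' in the value from being interpreted.
            matcher.appendReplacement(result, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(result);
        return result.toString();
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("spring.hadoop.fsUri", "hdfs://hadoop01:9000");
        System.out.println(resolve("fs.defaultFS=${spring.hadoop.fsUri}", props));
    }
}
```

Keeping the NameNode address in application.properties means that switching clusters only requires editing that one file, while beans.xml stays unchanged.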

Accessing HDFS from Spring Boot

pom.xml

    <!-- Spring Boot dependency for working with Hadoop -->
    <dependency>
        <groupId>org.springframework.data</groupId>
        <artifactId>spring-data-hadoop-boot</artifactId>
        <version>2.5.0.RELEASE</version>
    </dependency>

SpringBootHDFSApp

    package com.bennyrhys.hadoop.spring;

    import org.apache.hadoop.fs.FileStatus;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.CommandLineRunner;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.data.hadoop.fs.FsShell;

    /**
     * @Author bennyrhys
     * @Date 1/21/21 11:33 PM
     */
    @SpringBootApplication
    public class SpringBootHDFSApp implements CommandLineRunner {

        // Spring's FsShell (org.springframework.data.hadoop.fs), not Hadoop's own shell class
        @Autowired
        FsShell fsShell;

        @Override
        public void run(String... strings) throws Exception {
            // Recursively list everything under /springhdfs
            for (FileStatus fileStatus : fsShell.lsr("/springhdfs")) {
                System.out.println("> " + fileStatus.getPath());
            }
        }

        /**
         * Output of the author's run:
         * > hdfs://hadoop01:9000/springhdfs
         * > hdfs://hadoop01:9000/springhdfs/hello.txt
         *
         * @param args command-line arguments
         */
        public static void main(String[] args) {
            SpringApplication.run(SpringBootHDFSApp.class, args);
        }
    }
