1. 单机模式为了方便学习测试,在没有服务器部署的情况,容器方式可以帮助我们快速启动一个学习测试环境。可以根据需要启一个单机模式,或者分片集群模式。下面分别介绍两种启动方式:

- 单机模式

- 副本模式

- 分片模式

其实可以通过docker-compose 快速起一个clickhouse集群环境,但是为了学习部署过程还是分步操作

本次试验clickhouse版本为 22.3.2.1

单机模式很简单 https://hub.docker.com/r/clickhouse/clickhouse-server

1.1 服务端容器启动方式之前镜像是yandex/clickhouse-server,现在clickhouse/clickhouse-server

可以根据需要选择合适启动命令

docker run -d --name single-clickhouse-server --ulimit nofile=262144:262144 clickhouse/clickhouse-server

docker run -d --name single-clickhouse-server --ulimit nofile=262144:262144 -p 8123:8123 -p 9000:9000 -p 9009:9009 clickhouse/clickhouse-server

## 数据目录 /var/lib/clickhouse/

$ mkdir -p /Users/momo/studySpace/docker/clickhouse/single-volume/data

$ docker run -d --name single-clickhouse-server \

--ulimit nofile=262144:262144 \

-p 8123:8123 -p 9000:9000 -p 9009:9009 \

--volume=/Users/momo/studySpace/docker/clickhouse/single-volume/data:/var/lib/clickhouse \

--platform linux/amd64 \

clickhouse/clickhouse-server

方式四:挂载配置如果报该错,并且在mac m1环境,可尝试在docker run命令中添加选项**--platform linux/amd64 **

官方解释:https://docs.docker.com/desktop/mac/apple-silicon/

WARNING: The requested image's platform (linux/amd64) does not match the detected host platform (linux/arm64/v8) and no specific platform was requested

为了在本机修改配置方便,可将配置挂载到本机

- 容器中配置目录: /etc/clickhouse-server

- 需要提前准备好配置放到挂载目录,否则会有启动报错: Configuration file '/etc/clickhouse-server/config.xml' isn't readable by user with id '101'

- 查看clickhouse版本:select version() 查看版本 22.3.2.1

# 创建配置目录在本机挂载目录

$ mkdir -p /Users/momo/studySpace/docker/clickhouse/single-volume/{config,logs}

# 可先能过以上方式启动容器,然后将容器配置拷贝出来, docker cp 容器id:容器目录 本机目录

$ docker cp b723e2da1368:/etc/clickhouse-server /Users/momo/studySpace/docker/clickhouse/single-volume/config

$ docker run -d --name single-clickhouse-server \

--ulimit nofile=262144:262144 \

-p 8123:8123 -p 9000:9000 -p 9009:9009 \

--volume=/Users/momo/studySpace/docker/clickhouse/single-volume/data:/var/lib/clickhouse \

--volume=/Users/momo/studySpace/docker/clickhouse/single-volume/config/clickhouse-server:/etc/clickhouse-server \

--volume=/Users/momo/studySpace/docker/clickhouse/single-volume/logs:/var/log/clickhouse-server \

--platform linux/amd64 \

clickhouse/clickhouse-server

## 删除容器

docker stop single-clickhouse-server && docker rm single-clickhouse-server

docker run -it --rm --link single-clickhouse-server:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

副本的目的主要是保障数据的高可用性,即使一台 ClickHouse 节点宕机,那么也可以从 其他服务器获得相同的数据。https://clickhouse.com/docs/en/engines/table-engines/mergetree-family/replication/

2.1 zookeeperzookeeper

host1

host2

standalone方式启动,详细使用说明:https://hub.docker.com/_/zookeeper

# 创建专有网络,zk,clickhouse容器都加入该网络

docker network create clickhouse

# 方式一:快速启动

$ docker run --name zk-standalone --network clickhouse --restart always -d zookeeper

# 方式二:挂载目录,防止重启数据丢失

## 数据目录 /data,日志目录 /datalog

$ mkdir -p /Users/momo/studySpace/docker/zookeeper/data

$ mkdir -p /Users/momo/studySpace/docker/zookeeper/datalog

$ docker run --name zk-standalone \

--restart always \

-v /Users/momo/studySpace/docker/zookeeper/data:/data \

-v /Users/momo/studySpace/docker/zookeeper/datalog:/datalog \

-d zookeeper

创建本地挂载目录

mkdir -p /Users/momo/studySpace/docker/clickhouse/replica-volume1/config

mkdir -p /Users/momo/studySpace/docker/clickhouse/replica-volume2/config

# 可先通过单机模式启动容器,然后将容器配置拷贝出来, docker cp 容器id:容器目录 本机目录

$ docker cp b723e2da1368:/etc/clickhouse-server /Users/momo/studySpace/docker/clickhouse/replica-volume1/config

$ docker cp b723e2da1368:/etc/clickhouse-server /Users/momo/studySpace/docker/clickhouse/replica-volume2/config

$ tree replica-volume1

replica-volume1

└── config

└── clickhouse-server

├── config.d

│ └── docker_related_config.xml

├── config.xml

├── users.d

└── users.xml

4 directories, 3 files

clickhouse-server/config.xml 添加内容如下:

<zookeeper>

<node>

<host>zookeeper</host>

<port>2181</port>

</node>

</zookeeper>

修改docker_related_config.xml

<clickhouse>

<!-- Listen wildcard address to allow accepting connections from other containers and host network. -->

<listen_host>::</listen_host> <!--删除这一条,ipv6时保留该配置-->

<listen_host>0.0.0.0</listen_host> <!--当前使用ipv4, 保留该配置-->

<listen_try>1</listen_try>

<!--

<logger>

<console>1</console>

</logger>

-->

</clickhouse>

同步replica-volume1和replica-volume2下的配置

2.2.2 启动clickhouse server# 创建相应的挂载目录

mkdir -p /Users/momo/studySpace/docker/clickhouse/replica-volume1/data

mkdir -p /Users/momo/studySpace/docker/clickhouse/replica-volume2/data

mkdir -p /Users/momo/studySpace/docker/clickhouse/replica-volume1/logs

mkdir -p /Users/momo/studySpace/docker/clickhouse/replica-volume2/logs

$ docker run -d --name replica-host1 \

-h replica-host1 \

--ulimit nofile=262144:262144 \

--volume=/Users/momo/studySpace/docker/clickhouse/replica-volume1/data:/var/lib/clickhouse \

--volume=/Users/momo/studySpace/docker/clickhouse/replica-volume1/logs:/var/log/clickhouse-server \

--volume=/Users/momo/studySpace/docker/clickhouse/replica-volume1/config/clickhouse-server:/etc/clickhouse-server \

--link zk-standalone:zookeeper \

--network clickhouse \

--platform linux/amd64 \

clickhouse/clickhouse-server

$ docker run -d --name replica-host2 \

-h replica-host2 \

--ulimit nofile=262144:262144 \

--volume=/Users/momo/studySpace/docker/clickhouse/replica-volume2/data:/var/lib/clickhouse \

--volume=/Users/momo/studySpace/docker/clickhouse/replica-volume2/logs:/var/log/clickhouse-server \

--volume=/Users/momo/studySpace/docker/clickhouse/replica-volume2/config/clickhouse-server:/etc/clickhouse-server \

--link zk-standalone:zookeeper \

--network clickhouse \

--platform linux/amd64 \

clickhouse/clickhouse-server

客户端连接

副本只能同步数据,不能同步表结构,所以我们需要在每台机器上自己手动建表

### 1. 连接replica-host1

$ docker run -it --rm --network clickhouse --link replica-host1:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

### 1. replica-host1建表

replica-host1 :) create table t_order_rep2 (

id UInt32,

sku_id String,

total_amount Decimal(16,2),

create_time Datetime

) engine =ReplicatedMergeTree('/clickhouse/tables/01/t_order_rep','rep_001')

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id,sku_id);

### 2. 连接replica-host1

$ docker run -it --rm --network clickhouse --link replica-host2:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

### 2. 这时候查看replica-host2,是没有表的

replica-host2 :) show tables

SHOW TABLES

Query id: e688ae0a-d457-4446-a014-c2f4fdd0a9e4

Ok.

0 rows in set. Elapsed: 0.017 sec.

### 2. replica-host2建表

replica-host2 :) create table t_order_rep2 (

id UInt32,

sku_id String,

total_amount Decimal(16,2),

create_time Datetime

) engine =ReplicatedMergeTree('/clickhouse/tables/01/t_order_rep','rep_002')

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id,sku_id);

### 3. 这个时候可以检查zookeeper上是否已有元数据, 如果没有,检查zookeeper相关配置是否正常

$ docker exec -it zk-standalone /bin/bash

$ ./bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 12] ls /clickhouse

tables task_queue

[zk: localhost:2181(CONNECTED) 12] ls /clickhouse/tables

[01]

[zk: localhost:2181(CONNECTED) 13] ls /clickhouse/tables/01

[t_order_rep]

[zk: localhost:2181(CONNECTED) 14] ls /clickhouse/tables/01/t_order_rep

[alter_partition_version, block_numbers, blocks, columns, leader_election, log, metadata, mutations, nonincrement_block_numbers, part_moves_shard, pinned_part_uuids, quorum, replicas, table_shared_id, temp, zero_copy_hdfs, zero_copy_s3]

### 3. 也可以在clickhouse client执行sql查询

replica-host1 :) select * from system.zookeeper where path='/'

SELECT *

FROM system.zookeeper

WHERE path = '/'

Query id: 30f82647-2d59-42b7-b48b-5c46eba16d72

┌─name───────┬─value─┬─czxid─┬─mzxid─┬───────────────ctime─┬───────────────mtime─┬─version─┬─cversion─┬─aversion─┬─ephemeralOwner─┬─dataLength─┬─numChildren─┬─pzxid─┬─path─┐

│ zookeeper │ │ 0 │ 0 │ 1970-01-01 00:00:00 │ 1970-01-01 00:00:00 │ 0 │ -2 │ 0 │ 0 │ 0 │ 2 │ 0 │ / │

│ clickhouse │ │ 2 │ 2 │ 2022-03-31 03:36:02 │ 2022-03-31 03:36:02 │ 0 │ 2 │ 0 │ 0 │ 0 │ 2 │ 11 │ / │

└────────────┴───────┴───────┴───────┴─────────────────────┴─────────────────────┴─────────┴──────────┴──────────┴────────────────┴────────────┴─────────────┴───────┴──────┘

2 rows in set. Elapsed: 0.028 sec.

https://clickhouse.com/docs/en/engines/table-engines/mergetree-family/replication/

参数说明:

ReplicatedMergeTree中:

- 第一个参数是分片的zk_path一般按照:/clickhouse/table/{shard}/{table_name} 的格式写,如果只有一个分片就写 01 即可。

- 第二个参数是副本名称,相同的分片副本名称不能相同。

实例插入数据,验证数据是否同步

# 1. 在replica_host1插入数据

insert into t_order_rep2 values

(101,'sku_001',1000.00,'2022-03-01 12:00:00'),

(102,'sku_002',2000.00,'2022-03-01 12:00:00'),

(103,'sku_004',2500.00,'2022-03-01 12:00:00'),

(104,'sku_002',2000.00,'2022-03-01 12:00:00'),

(105,'sku_003',600.00,'2022-03-02 12:00:00');

# 2. 在replica_host1查询数据,已经插入成功

replica-host1 :) select * from t_order_rep2

SELECT *

FROM t_order_rep2

Query id: b9dc6dc0-891b-4b68-8d6b-44cd835da82a

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 105 │ sku_003 │ 600 │ 2022-03-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000 │ 2022-03-01 12:00:00 │

│ 102 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

│ 103 │ sku_004 │ 2500 │ 2022-03-01 12:00:00 │

│ 104 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

5 rows in set. Elapsed: 0.059 sec.

# 3. 在replica_host2查询数据,发现数据自动同步过来了

replica-host2 :) select * from t_order_rep2

SELECT *

FROM t_order_rep2

Query id: a2f6a208-5986-48d8-b50b-15779d4e3da4

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000 │ 2022-03-01 12:00:00 │

│ 102 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

│ 103 │ sku_004 │ 2500 │ 2022-03-01 12:00:00 │

│ 104 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 105 │ sku_003 │ 600 │ 2022-03-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

5 rows in set. Elapsed: 0.095 sec.

# 停止

docker stop replica-host1

docker stop replica-host2

# 重启

docker restart replica-host1

docker restart replica-host2

# 停止并删除

docker stop replica-host1 && docker rm replica-host1

docker stop replica-host2 && docker rm replica-host2

2022.03.31 03:40:52.215028 [ 121 ] {} <Error> InterserverIOHTTPHandler: Code: 221. DB::Exception: No interserver IO endpoint named DataPartsExchange:/clickhouse/tables/01/t_order_rep/replicas/rep_001. (

NO_SUCH_INTERSERVER_IO_ENDPOINT), Stack trace (when copying this message, always include the lines below):

0. DB::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int, bool) @ 0xa4dde1a in /usr/bin/clickhouse

1. DB::InterserverIOHandler::getEndpoint(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&) @ 0x151e36db in /usr/bin/clickhouse

2. DB::InterserverIOHTTPHandler::processQuery(DB::HTTPServerRequest&, DB::HTTPServerResponse&, DB::InterserverIOHTTPHandler::Output&) @ 0x151e2f29 in /usr/bin/clickhouse

3. DB::InterserverIOHTTPHandler::handleRequest(DB::HTTPServerRequest&, DB::HTTPServerResponse&) @ 0x151e3a51 in /usr/bin/clickhouse

4. DB::HTTPServerConnection::run() @ 0x1545979b in /usr/bin/clickhouse

5. Poco::Net::TCPServerConnection::start() @ 0x164b264f in /usr/bin/clickhouse

6. Poco::Net::TCPServerDispatcher::run() @ 0x164b4aa1 in /usr/bin/clickhouse

7. Poco::PooledThread::run() @ 0x16671e49 in /usr/bin/clickhouse

8. Poco::ThreadImpl::runnableEntry(void*) @ 0x1666f1a0 in /usr/bin/clickhouse

9. ? @ 0x400086b609 in ?

10. clone @ 0x40009a5163 in ?

CertificateReloader: Cannot obtain modification time for certificate file解决办法:

配置config.xml里interserver_http_host配置不合理,删除就好了

<interserver_http_host>0.0.0.0</interserver_http_host>

2022.03.31 03:36:01.021444 [ 1 ] {} <Error> CertificateReloader: Cannot obtain modification time for certificate file /etc/clickhouse-server/server.crt, skipping update. errno: 2, strerror: No such file

or directory

2022.03.31 03:36:01.022164 [ 1 ] {} <Error> CertificateReloader: Cannot obtain modification time for key file /etc/clickhouse-server/server.key, skipping update. errno: 2, strerror: No such file or dire

ctory

2022.03.31 03:36:01.041271 [ 1 ] {} <Error> CertificateReloader: Poco::Exception. Code: 1000, e.code() = 0, SSL context exception: Error loading private key from file /etc/clickhouse-server/server.key:

error:02000002:system library:OPENSSL_internal:No such file or directory (version 22.3.2.1)

Application: Listen [::1]:8123 failed解决办法:

添加配置 /etc/clickhouse-server/config.d/ssl.xml,内容如下:

2022.03.31 06:41:25.734263 [ 702 ] {} <Information> Application: It looks like the process has no CAP_SYS_NICE capability, the setting 'os_thread_priority' will have no effect. It could happen due to incorrect ClickHouse package installation. You could resolve the problem manually with 'sudo setcap cap_sys_nice=+ep /usr/bin/clickhouse'. Note that it will not work on 'nosuid' mounted filesystems.

2022.03.31 06:41:25.739990 [ 702 ] {} <Warning> Application: Listen [::1]:8123 failed: Poco::Exception. Code: 1000, e.code() = 99, Net Exception: Cannot assign requested address: [::1]:8123 (version 22.3.2.1). If it is an IPv6 or IPv4 address and your host has disabled IPv6 or IPv4, then consider to specify not disabled IPv4 or IPv6 address to listen in <listen_host> element of configuration file. Example for disabled IPv6: <listen_host>0.0.0.0</listen_host> . Example for disabled IPv4: <listen_host>::</listen_host>

2022.03.31 06:41:25.740849 [ 702 ] {} <Warning> Application: Listen [::1]:9000 failed: Poco::Exception. Code: 1000, e.code() = 99, Net Exception: Cannot assign requested address: [::1]:9000 (version 22.3.2.1). If it is an IPv6 or IPv4 address and your host has disabled IPv6 or IPv4, then consider to specify not disabled IPv4 or IPv6 address to listen in <listen_host> element of configuration file. Example for disabled IPv6: <listen_host>0.0.0.0</listen_host> . Example for disabled IPv4: <listen_host>::</listen_host>

2022.03.31 06:41:25.741669 [ 702 ] {} <Warning> Application: Listen [::1]:9004 failed: Poco::Exception. Code: 1000, e.code() = 99, Net Exception: Cannot assign requested address: [::1]:9004 (version 22.3.2.1). If it is an IPv6 or IPv4 address and your host has disabled IPv6 or IPv4, then consider to specify not disabled IPv4 or IPv6 address to listen in <listen_host> element of configuration file. Example for disabled IPv6: <listen_host>0.0.0.0</listen_host> . Example for disabled IPv4: <listen_host>::</listen_host>

插入数据后,副本节点没有同步数据解决办法:

修改配置docker_related_config.xml

默认:

<listen_host>::</listen_host>

改为:

<listen_host>0.0.0.0</listen_host>

<listen_try>1</listen_try>0.0.0.0 因为我当前使用的ipv4,所以只保留这个,另一个是ipv61

比如有2个副本节点replica-host1 replica-host2,在两个节点分别建表后,在replica-host1插入数据后,在replica-host1能查到数据,在replica-host2没有查到数据

查看replica-host2的日志发现:

2022.03.31 07:00:42.687816 [ 156 ] {} <Error> DNSResolver: Cannot resolve host (replica-host1), error 0: replica-host1.

2022.03.31 07:00:42.695884 [ 156 ] {} <Error> default.t_order_rep2 (908dbbab-99fe-4e7e-9901-c74dcbdc94ac): auto DB::StorageReplicatedMergeTree::processQueueEntry(ReplicatedMergeTreeQueue::SelectedEntryPtr)::(anonymous class)::operator()(DB::StorageReplicatedMergeTree::LogEntryPtr &) const: Code: 198. DB::Exception: Not found address of host: replica-host1. (DNS_ERROR), Stack trace (when copying this message, always include the lines below):

3. 分片模式解决办法:

容器环境,容器间通过主机名没有找到容器,dns问题

但是我添加dns后发现仍然不行

最终通过docker network create clickhouse创建network,将zk,clickhouse所有实例都放到该网络就可以通过容器主机名通信了

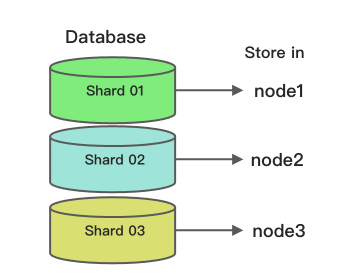

- 副本虽然能够提高数据的可用性,降低丢失风险,但是每台服务器实际上必须容纳全量 数据,对数据的横向扩容没有解决。

- 要解决数据水平切分的问题,需要引入分片的概念。通过分片把一份完整的数据进行切分,不同的分片分布到不同的节点上,再通过 Distributed 表引擎把数据拼接起来一同使用。

- Distributed 表引擎本身不存储数据,有点类似于 MyCat 之于 MySql,成为一种中间件,通过分布式逻辑表来写入、分发、路由来操作多台节点不同分片的分布式数据。

注意:ClickHouse 的集群是表级别的,实际企业中,大部分做了高可用,但是没有用分片,避免降低查询性能以及操作集群的复杂性。

物理机步骤:

- 所有节点安装ClickHouse server

- 在所有节点修改集群配置

- 在每个节点创建本地表 (副本只能同步数据,不能同步表结构,所以我们需要在每台机器上自己手动建表)

- 创建一个分布式表(Distributed 表引擎本身不存储数据,用于拼接分片数据)

容器方式步骤:

- 三个clickhouse server容器实例

- 为每个容器实例挂载相应的配置

- 通过clickhouse client连接每个实例,创建本地表

- 创建分布式表

原理就是zookeeper + ReplicatedMergeTree(复本表) + Distributed(分布式表)

官网分布式表参考:https://clickhouse.com/docs/en/engines/table-engines/special/distributed/

3.1 zookeeperstandalone方式启动,详细使用说明:https://hub.docker.com/_/zookeeper

# 创建专有网络,zk,clickhouse容器都加入该网络

docker network create clickhouse

# 方式一:快速启动

$ docker run --name zk-standalone --network clickhouse --restart always -d zookeeper

# 方式二:挂载目录,防止重启数据丢失

## 数据目录 /data,日志目录 /datalog

$ mkdir -p /Users/momo/studySpace/docker/zookeeper/data

$ mkdir -p /Users/momo/studySpace/docker/zookeeper/datalog

$ docker run --name zk-standalone \

--restart always \

-v /Users/momo/studySpace/docker/zookeeper/data:/data \

-v /Users/momo/studySpace/docker/zookeeper/datalog:/datalog \

-d zookeeper

创建本地挂载目录

# 创建相应的挂载目录

for ((i=1;i<=3;i++));do echo ch-host$i;mkdir -p /Users/momo/studySpace/docker/clickhouse/ch-volume$i/{data,logs,config};done

# 可先通过单机模式启动容器,然后将容器配置拷贝出来, docker cp 容器id:容器目录 本机目录

$ docker cp b723e2da1368:/etc/clickhouse-server /Users/momo/studySpace/docker/clickhouse/ch-volume1/config

$ tree ch-volume1

ch-volume1

├── config

│ └── clickhouse-server

│ ├── config.d

│ │ ├── docker_related_config.xml

│ │ ├── metrika-shard.xml 内容如下

│ │ └── ssl.xml,参考2.2.5第2个报错解决办法

│ ├── config.xml

│ ├── users.d

│ └── users.xml

├── data

└── logs

6 directories, 5 files

/etc/clickhouse-server/config.d/metrika-shard.xml(也可以直接配置在config.xml中)

<?xml version="1.0"?>

<clickhouse>

<remote_servers>

<jiazz_cluster>

<!-- 集群名称-->

<shard>

<!--集群的第一个分片-->

<internal_replication>true</internal_replication>

<replica>

<!--该分片的第一个副本-->

<host>ch-host1</host>

<port>9000</port>

</replica>

<replica>

<!--该分片的第二个副本-->

<host>ch-host2</host>

<port>9000</port>

</replica>

</shard>

<shard>

<!--集群的第二个分片-->

<internal_replication>true</internal_replication>

<replica>

<!--该分片的第一个副本-->

<host>ch-host3</host>

<port>9000</port>

</replica>

</shard>

</jiazz_cluster>

</remote_servers>

<zookeeper-servers>

<node index="1">

<host>zookeeper</host>

<port>2181</port>

</node>

</zookeeper-servers>

<macros>

<shard>01</shard>

<!--不同机器放的分片数不一样-->

<replica>rep_1_1</replica>

<!--不同机器放的副本数不一样-->

</macros>

</clickhouse>

/etc/clickhouse-server/config.xml 添加如下配置:

<zookeeper incl="zookeeper-servers" optional="true" />

<include_from>/etc/clickhouse-server/config.d/metrika-shard.xml</include_from>

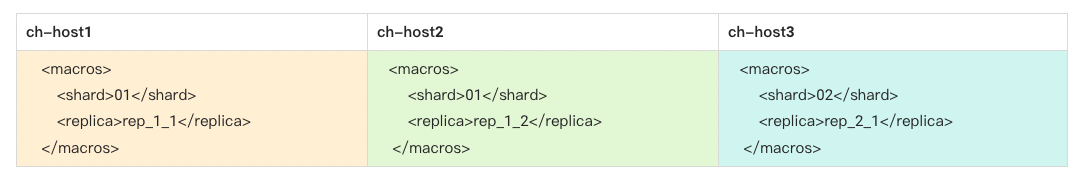

同步配置config.xml 到每个节点,并按节点修改配置metrika-shard.xml的macros

3.2.2 启动clickhouse server# 启动

for ((i=1;i<=3;i++));do \

echo "start ch-host$i";\

docker run -d --name ch-host$i \

-h ch-host$i \

--ulimit nofile=262144:262144 \

--volume=/Users/momo/studySpace/docker/clickhouse/ch-volume$i/data:/var/lib/clickhouse \

--volume=/Users/momo/studySpace/docker/clickhouse/ch-volume$i/logs:/var/log/clickhouse-server \

--volume=/Users/momo/studySpace/docker/clickhouse/ch-volume$i/config/clickhouse-server:/etc/clickhouse-server \

--link zk-standalone:zookeeper \

--network clickhouse \

--platform linux/amd64 \

clickhouse/clickhouse-server; \

done

# 停止

for ((i=1;i<=3;i++));do echo "stop ch-host$i"; docker stop ch-host$i;done

# 重启

for ((i=1;i<=3;i++));do echo "restart ch-host$i"; docker restart ch-host$i;done

# 删除

for ((i=1;i<=3;i++));do echo "rm ch-host$i"; docker stop ch-host$i && docker rm ch-host$i;done

# 清空挂载目录

for ((i=1;i<=3;i++));do echo "clear ch-host$i"; rm -rf /Users/momo/studySpace/docker/clickhouse/ch-volume$i/{data,logs}/*;done

# 连接ch-host1

docker run -it --rm --network clickhouse --link ch-host1:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

# 连接ch-host2

docker run -it --rm --network clickhouse --link ch-host2:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

# 连接ch-host3

docker run -it --rm --network clickhouse --link ch-host3:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

- 会自动同步到ch-host2和ch-host2上

- 集群名字要和配置文件中的一致

- 分片和副本名称从配置文件的宏定义中获取

# 在一个节点建表

ch-host1 :) create table st_order_mt on cluster jiazz_cluster (

id UInt32,

sku_id String,

total_amount Decimal(16,2),

create_time Datetime

) engine=ReplicatedMergeTree('/clickhouse/tables/{shard}/st_order_mt','{replica}')

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id,sku_id);

CREATE TABLE st_order_mt ON CLUSTER jiazz_cluster

(

`id` UInt32,

`sku_id` String,

`total_amount` Decimal(16, 2),

`create_time` Datetime

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/{shard}/st_order_mt', '{replica}')

PARTITION BY toYYYYMMDD(create_time)

PRIMARY KEY id

ORDER BY (id, sku_id)

Query id: 366f51ae-f5eb-4773-ba5e-beb4df56cf1a

┌─host─────┬─port─┬─status─┬─error─┬─num_hosts_remaining─┬─num_hosts_active─┐

│ ch-host1 │ 9000 │ 0 │ │ 2 │ 0 │

│ ch-host2 │ 9000 │ 0 │ │ 1 │ 0 │

│ ch-host3 │ 9000 │ 0 │ │ 0 │ 0 │

└──────────┴──────┴────────┴───────┴─────────────────────┴──────────────────┘

3 rows in set. Elapsed: 0.292 sec.

# ch-host2 ch-host3 表都有了

ch-host2 :) show tables

SHOW TABLES

Query id: e4c9d46c-476c-423a-ba0f-ab2c3984b6b4

┌─name────────┐

│ st_order_mt │

└─────────────┘

1 rows in set. Elapsed: 0.037 sec.

参数含义:

Distributed(集群名称,库名,本地表名,分片键)

分片键必须是整型数字,所以用 hiveHash 函数转换,也可以 rand()

ch-host1 :) create table st_order_mt_all on cluster jiazz_cluster(

id UInt32,

sku_id String,

total_amount Decimal(16,2),

create_time Datetime

)engine = Distributed(jiazz_cluster,default,st_order_mt,hiveHash(sku_id));

CREATE TABLE st_order_mt_all ON CLUSTER jiazz_cluster

(

`id` UInt32,

`sku_id` String,

`total_amount` Decimal(16, 2),

`create_time` Datetime

)

ENGINE = Distributed(jiazz_cluster, default, st_order_mt, hiveHash(sku_id))

Query id: 655b7f5d-ea2c-42c0-bfde-358ef50192bd

┌─host─────┬─port─┬─status─┬─error─┬─num_hosts_remaining─┬─num_hosts_active─┐

│ ch-host1 │ 9000 │ 0 │ │ 2 │ 0 │

│ ch-host2 │ 9000 │ 0 │ │ 1 │ 0 │

│ ch-host3 │ 9000 │ 0 │ │ 0 │ 0 │

└──────────┴──────┴────────┴───────┴─────────────────────┴──────────────────┘

3 rows in set. Elapsed: 0.155 sec.

# 在其它节点确认建表成功

ch-host2 :) show tables

SHOW TABLES

Query id: 0c5d7ee9-80a0-46e6-aee7-7b06afb17729

┌─name────────────┐

│ st_order_mt │

│ st_order_mt_all │

└─────────────────┘

2 rows in set. Elapsed: 0.044 sec.

ch-host1 :) insert into st_order_mt_all values

(201,'sku_001',1000.00,'2022-03-01 12:00:00') ,

(202,'sku_002',2000.00,'2022-03-01 12:00:00'),

(203,'sku_004',2500.00,'2022-03-01 12:00:00'),

(204,'sku_002',2000.00,'2022-03-01 12:00:00'),

(205,'sku_003',600.00,'2022-03-02 12:00:00');

INSERT INTO st_order_mt_all FORMAT Values

Query id: 0c886dc1-066c-402a-804e-0cbb9d004b5d

Ok.

5 rows in set. Elapsed: 0.156 sec.

####### 查询分布式表,所有节点查询结果都一样

ch-host2 :) SELECT * FROM st_order_mt_all;

SELECT *

FROM st_order_mt_all

Query id: 72a08fec-5477-4c9c-941b-6bc471400467

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 202 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

│ 203 │ sku_004 │ 2500 │ 2022-03-01 12:00:00 │

│ 204 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 201 │ sku_001 │ 1000 │ 2022-03-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 205 │ sku_003 │ 600 │ 2022-03-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

5 rows in set. Elapsed: 0.099 sec.

####### 查询本地表,ch-host1和ch-host2结果一样,因为是第一分片互为复本,ch-host3结果是第二个分片内容

ch-host1 :) select * from st_order_mt;

SELECT *

FROM st_order_mt

Query id: f728b8b0-46ec-4b02-8eca-825173c82dab

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 202 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

│ 203 │ sku_004 │ 2500 │ 2022-03-01 12:00:00 │

│ 204 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

3 rows in set. Elapsed: 0.041 sec.

ch-host2 :) select * from st_order_mt;

SELECT *

FROM st_order_mt

Query id: e67512ea-1b43-498d-97b0-5bc57e5172be

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 202 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

│ 203 │ sku_004 │ 2500 │ 2022-03-01 12:00:00 │

│ 204 │ sku_002 │ 2000 │ 2022-03-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

3 rows in set. Elapsed: 0.041 sec.

ch-host3 :) select * from st_order_mt;

SELECT *

FROM st_order_mt

Query id: f097753a-4d28-46d8-8d52-82a46908d9af

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 205 │ sku_003 │ 600 │ 2022-03-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 201 │ sku_001 │ 1000 │ 2022-03-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

2 rows in set. Elapsed: 0.021 sec.

3分片2副本部署参考:https://zhuanlan.zhihu.com/p/461792873

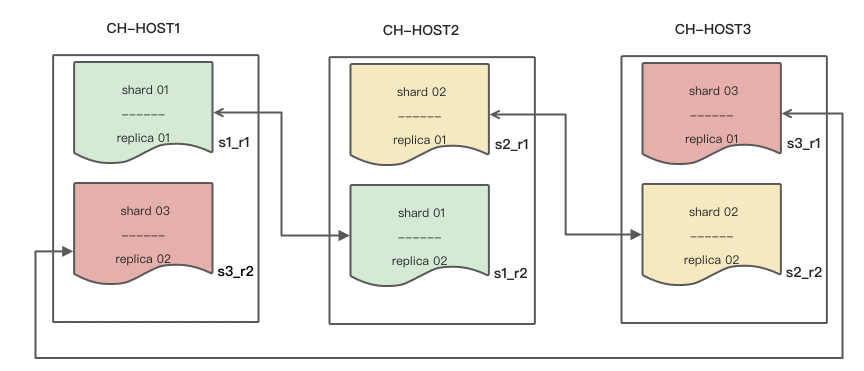

此设置仅用于理论验证,不建议在生产中进行此设置。

Clickhouse支持分片和复本:

- Data distribution是指将非常大的数据集拆分为多个存储在不同服务器上的碎片(数据集的较小部分)。ClickHouse根据分片键将数据集分为多个分片。每个分片保存并处理一部分数据,然后将来自多个分片的查询结果组合在一起,给出最终结果。

-

Data replication是指在其他服务器节点上保留一份数据副本,以确保在服务器节点出现故障时可用。这还可以通过允许多台服务器并行处理数据查询来提高性能。

-

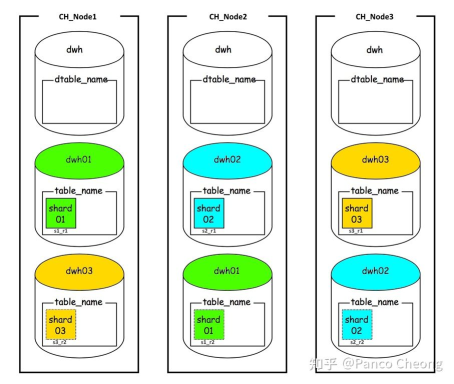

Circular Replication这里介绍的循环复本是将数据分布在3个分片,并复制2次

-

这需要在每个节点上放置2个不同的分片。由于每个分片都有相同的表名,因此当碎片/副本位于同一服务器上时,ClickHouse无法区分它们。

-

解决这个问题的办法:

- 将每个分片放入单独的数据库

- 为每个分片设置默认数据库

- 在分布式表ddl中设置空数据库参数,在查询时系统将使用默认数据库将特定表的查询路由到正确的数据库

- 设置每个分片的internal_replication=true

- 生产中的这种拓扑通常需要6个服务器节点,因为每个服务器只存储一个分片的数据,不需要单独的数据库解决方案。

- 在config.d和users.d目录下的.xml文件都会被自动加载到clickhouse中,所以我们可以将不同配置放到不同文件中,再起一个代表性的名字,比如zookeeper.xml表示zk相关配置。

- 尽管默认配置是config.xml 和user.xml,但是不建议直接编辑修改该文件,因为

- 这些文件可能会在下次升级时被覆盖

- 一个文件中配置非常多的时候,查找某个配置比较困难

- 只需复制XML文件即可轻松复制用户配置文件

- 便于在节点间复制配置文件

# 创建相应的挂载目录

for ((i=1;i<=3;i++));do echo ch2-host$i;mkdir -p /Users/momo/studySpace/docker/clickhouse/ch2-volume$i/{data,logs,config};done

# 可先通过单机模式启动容器,然后将容器配置拷贝出来, docker cp 容器id:容器目录 本机目录

$ docker cp b723e2da1368:/etc/clickhouse-server /Users/momo/studySpace/docker/clickhouse/ch2-volume1/config

$ tree ch2-volume1

ch-volume1

├── config

│ └── clickhouse-server

│ ├── config.d

│ │ ├── docker_related_config.xml

│ │ ├── metrika-shard.xml 内容如下

│ │ └── ssl.xml,参考2.2.5第2个报错解决办法

│ ├── config.xml

│ ├── users.d

│ └── users.xml

├── data

└── logs

6 directories, 5 files

/etc/clickhouse-server/config.d/cluster.xml

<?xml version="1.0"?>

<clickhouse>

<remote_servers>

<jiazz_cluster_3s_2r>

<!-- 集群名称-->

<shard>

<!--集群的第一个分片-->

<internal_replication>true</internal_replication>

<replica>

<!--该分片的第一个副本-->

<default_database>dwh01</default_database>

<host>ch-host1</host>

<port>9000</port>

</replica>

<replica>

<!--该分片的第二个副本-->

<default_database>dwh01</default_database>

<host>ch-host2</host>

<port>9000</port>

</replica>

</shard>

<shard>

<!--集群的第二个分片-->

<internal_replication>true</internal_replication>

<replica>

<!--该分片的第一个副本-->

<default_database>dwh02</default_database>

<host>ch-host2</host>

<port>9000</port>

</replica>

<replica>

<!--该分片的第二个副本-->

<default_database>dwh02</default_database>

<host>ch-host3</host>

<port>9000</port>

</replica>

</shard>

<shard>

<!--集群的第三个分片-->

<internal_replication>true</internal_replication>

<replica>

<!--该分片的第一个副本-->

<default_database>dwh03</default_database>

<host>ch-host3</host>

<port>9000</port>

</replica>

<replica>

<!--该分片的第二个副本-->

<default_database>dwh03</default_database>

<host>ch-host1</host>

<port>9000</port>

</replica>

</shard>

</jiazz_cluster_3s_2r>

</remote_servers>

</clickhouse>

/etc/clickhouse-server/config.d/zookeeper.xml

<?xml version="1.0"?>

<clickhouse>

<zookeeper>

<node index="1">

<host>zookeeper</host>

<port>2181</port>

</node>

</zookeeper>

</clickhouse>

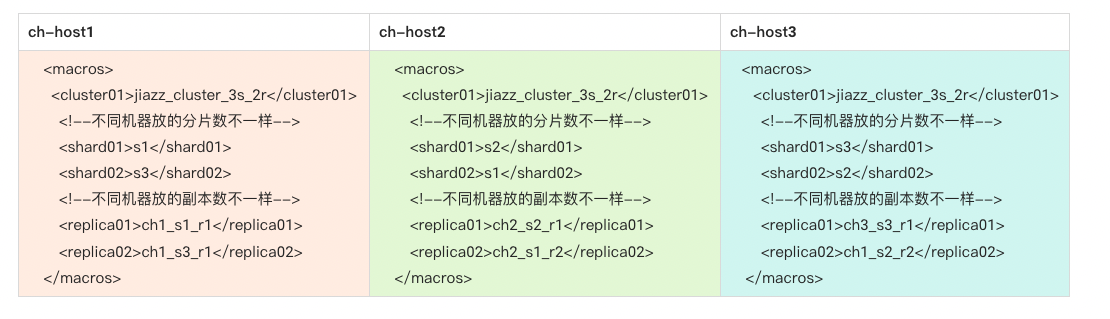

/etc/clickhouse-server/config.d/macro.xml

<?xml version="1.0"?>

<clickhouse>

<macros>

<cluster01>jiazz_cluster_3s_2r</cluster01>

<!--不同机器放的分片数不一样-->

<shard01>s1</shard01>

<shard02>s3</shard02>

<!--不同机器放的副本数不一样-->

<replica01>ch1_s1_r1</replica01>

<replica02>ch1_s3_r1</replica02>

</macros>

</clickhouse>

# 启动

for ((i=1;i<=3;i++));do \

echo "start ch-host$i";\

docker run -d --name ch-host$i \

-h ch-host$i \

--ulimit nofile=262144:262144 \

--volume=/Users/momo/studySpace/docker/clickhouse/ch2-volume$i/data:/var/lib/clickhouse \

--volume=/Users/momo/studySpace/docker/clickhouse/ch2-volume$i/logs:/var/log/clickhouse-server \

--volume=/Users/momo/studySpace/docker/clickhouse/ch2-volume$i/config/clickhouse-server:/etc/clickhouse-server \

--link zk-standalone:zookeeper \

--network clickhouse \

--platform linux/amd64 \

clickhouse/clickhouse-server; \

done

# 停止

for ((i=1;i<=3;i++));do echo "stop ch-host$i"; docker stop ch-host$i;done

# 重启

for ((i=1;i<=3;i++));do echo "restart ch-host$i"; docker restart ch-host$i;done

# 删除

for ((i=1;i<=3;i++));do echo "rm ch-host$i"; docker stop ch-host$i && docker rm ch-host$i;done

# 清空挂载目录

for ((i=1;i<=3;i++));do echo "clear ch-host$i"; rm -rf /Users/momo/studySpace/docker/clickhouse/ch2-volume$i/{data,logs}/*;done

# 连接ch-host1

docker run -it --rm --network clickhouse --link ch-host1:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

# 连接ch-host2

docker run -it --rm --network clickhouse --link ch-host2:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

# 连接ch-host3

docker run -it --rm --network clickhouse --link ch-host3:clickhouse-server clickhouse/clickhouse-client --host clickhouse-server

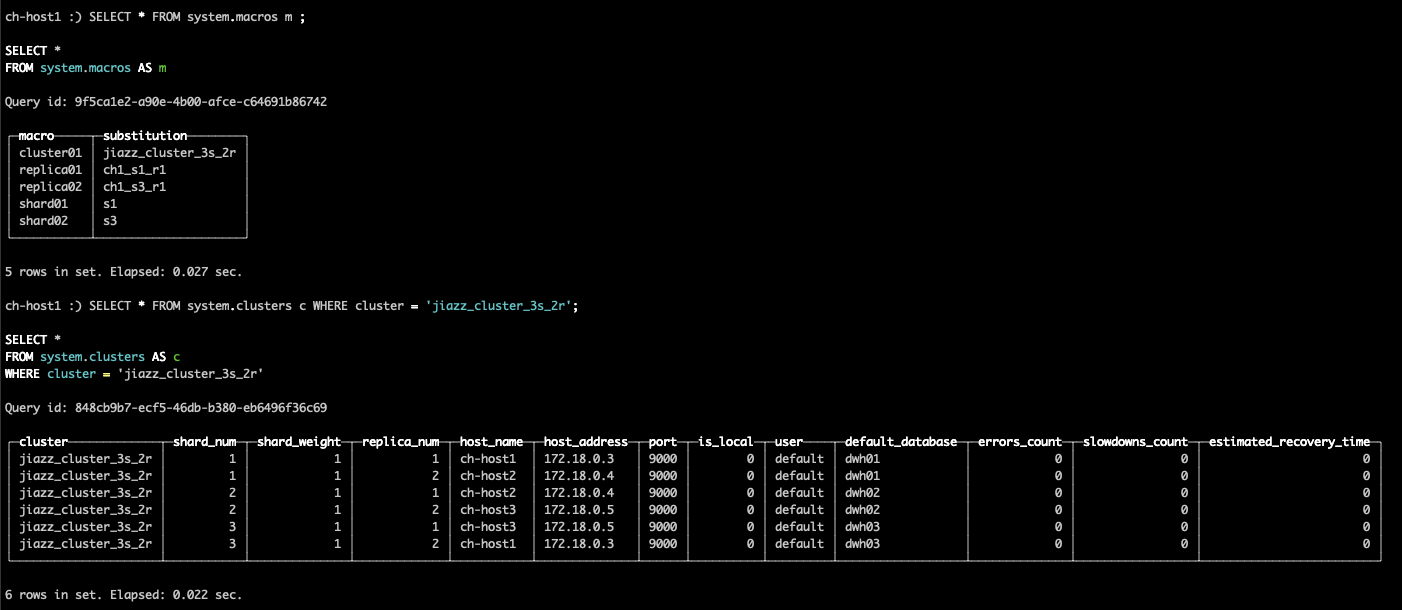

3.3.3.2 创建database检查配置

SELECT * FROM system.macros m ;

SELECT * FROM system.clusters c WHERE cluster = 'jiazz_3s_2r';

每个分片有各自的database,如shard01属于dwh01

--ch-host1

CREATE DATABASE IF NOT EXISTS dwh

CREATE DATABASE IF NOT EXISTS dwh01

CREATE DATABASE IF NOT EXISTS dwh03

--ch-host2

CREATE DATABASE IF NOT EXISTS dwh

CREATE DATABASE IF NOT EXISTS dwh02

CREATE DATABASE IF NOT EXISTS dwh01

--ch-host3

CREATE DATABASE IF NOT EXISTS dwh

CREATE DATABASE IF NOT EXISTS dwh03

CREATE DATABASE IF NOT EXISTS dwh02

show databases

创建表模板,根据每个节点,修改database名称即可,一个节点创建两个表

CREATE TABLE dwh03.hits_shard

(

`WatchID` UInt64,

`JavaEnable` UInt8,

`Title` String,

`GoodEvent` Int16,

`EventTime` DateTime,

`EventDate` Date,

`CounterID` UInt32,

`ClientIP` UInt32,

`ClientIP6` FixedString(16),

`RegionID` UInt32,

`UserID` UInt64,

`CounterClass` Int8,

`OS` UInt8,

`UserAgent` UInt8,

`URL` String,

`Referer` String,

`URLDomain` String,

`RefererDomain` String,

`Refresh` UInt8,

`IsRobot` UInt8,

`RefererCategories` Array(UInt16),

`URLCategories` Array(UInt16),

`URLRegions` Array(UInt32),

`RefererRegions` Array(UInt32),

`ResolutionWidth` UInt16,

`ResolutionHeight` UInt16,

`ResolutionDepth` UInt8,

`FlashMajor` UInt8,

`FlashMinor` UInt8,

`FlashMinor2` String,

`NetMajor` UInt8,

`NetMinor` UInt8,

`UserAgentMajor` UInt16,

`UserAgentMinor` FixedString(2),

`CookieEnable` UInt8,

`JavascriptEnable` UInt8,

`IsMobile` UInt8,

`MobilePhone` UInt8,

`MobilePhoneModel` String,

`Params` String,

`IPNetworkID` UInt32,

`TraficSourceID` Int8,

`SearchEngineID` UInt16,

`SearchPhrase` String,

`AdvEngineID` UInt8,

`IsArtifical` UInt8,

`WindowClientWidth` UInt16,

`WindowClientHeight` UInt16,

`ClientTimeZone` Int16,

`ClientEventTime` DateTime,

`SilverlightVersion1` UInt8,

`SilverlightVersion2` UInt8,

`SilverlightVersion3` UInt32,

`SilverlightVersion4` UInt16,

`PageCharset` String,

`CodeVersion` UInt32,

`IsLink` UInt8,

`IsDownload` UInt8,

`IsNotBounce` UInt8,

`FUniqID` UInt64,

`HID` UInt32,

`IsOldCounter` UInt8,

`IsEvent` UInt8,

`IsParameter` UInt8,

`DontCountHits` UInt8,

`WithHash` UInt8,

`HitColor` FixedString(1),

`UTCEventTime` DateTime,

`Age` UInt8,

`Sex` UInt8,

`Income` UInt8,

`Interests` UInt16,

`Robotness` UInt8,

`GeneralInterests` Array(UInt16),

`RemoteIP` UInt32,

`RemoteIP6` FixedString(16),

`WindowName` Int32,

`OpenerName` Int32,

`HistoryLength` Int16,

`BrowserLanguage` FixedString(2),

`BrowserCountry` FixedString(2),

`SocialNetwork` String,

`SocialAction` String,

`HTTPError` UInt16,

`SendTiming` Int32,

`DNSTiming` Int32,

`ConnectTiming` Int32,

`ResponseStartTiming` Int32,

`ResponseEndTiming` Int32,

`FetchTiming` Int32,

`RedirectTiming` Int32,

`DOMInteractiveTiming` Int32,

`DOMContentLoadedTiming` Int32,

`DOMCompleteTiming` Int32,

`LoadEventStartTiming` Int32,

`LoadEventEndTiming` Int32,

`NSToDOMContentLoadedTiming` Int32,

`FirstPaintTiming` Int32,

`RedirectCount` Int8,

`SocialSourceNetworkID` UInt8,

`SocialSourcePage` String,

`ParamPrice` Int64,

`ParamOrderID` String,

`ParamCurrency` FixedString(3),

`ParamCurrencyID` UInt16,

`GoalsReached` Array(UInt32),

`OpenstatServiceName` String,

`OpenstatCampaignID` String,

`OpenstatAdID` String,

`OpenstatSourceID` String,

`UTMSource` String,

`UTMMedium` String,

`UTMCampaign` String,

`UTMContent` String,

`UTMTerm` String,

`FromTag` String,

`HasGCLID` UInt8,

`RefererHash` UInt64,

`URLHash` UInt64,

`CLID` UInt32,

`YCLID` UInt64,

`ShareService` String,

`ShareURL` String,

`ShareTitle` String,

`ParsedParams` Nested(

Key1 String,

Key2 String,

Key3 String,

Key4 String,

Key5 String,

ValueDouble Float64),

`IslandID` FixedString(16),

`RequestNum` UInt32,

`RequestTry` UInt8

)

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard01}/tables/hits', '{replica01}')

PARTITION BY toYYYYMM(EventDate)

ORDER BY (CounterID, EventDate, intHash32(UserID))

SAMPLE BY intHash32(UserID);

CREATE TABLE dwh02.hits_shard

(

`WatchID` UInt64,

`JavaEnable` UInt8,

`Title` String,

`GoodEvent` Int16,

`EventTime` DateTime,

`EventDate` Date,

`CounterID` UInt32,

`ClientIP` UInt32,

`ClientIP6` FixedString(16),

`RegionID` UInt32,

`UserID` UInt64,

`CounterClass` Int8,

`OS` UInt8,

`UserAgent` UInt8,

`URL` String,

`Referer` String,

`URLDomain` String,

`RefererDomain` String,

`Refresh` UInt8,

`IsRobot` UInt8,

`RefererCategories` Array(UInt16),

`URLCategories` Array(UInt16),

`URLRegions` Array(UInt32),

`RefererRegions` Array(UInt32),

`ResolutionWidth` UInt16,

`ResolutionHeight` UInt16,

`ResolutionDepth` UInt8,

`FlashMajor` UInt8,

`FlashMinor` UInt8,

`FlashMinor2` String,

`NetMajor` UInt8,

`NetMinor` UInt8,

`UserAgentMajor` UInt16,

`UserAgentMinor` FixedString(2),

`CookieEnable` UInt8,

`JavascriptEnable` UInt8,

`IsMobile` UInt8,

`MobilePhone` UInt8,

`MobilePhoneModel` String,

`Params` String,

`IPNetworkID` UInt32,

`TraficSourceID` Int8,

`SearchEngineID` UInt16,

`SearchPhrase` String,

`AdvEngineID` UInt8,

`IsArtifical` UInt8,

`WindowClientWidth` UInt16,

`WindowClientHeight` UInt16,

`ClientTimeZone` Int16,

`ClientEventTime` DateTime,

`SilverlightVersion1` UInt8,

`SilverlightVersion2` UInt8,

`SilverlightVersion3` UInt32,

`SilverlightVersion4` UInt16,

`PageCharset` String,

`CodeVersion` UInt32,

`IsLink` UInt8,

`IsDownload` UInt8,

`IsNotBounce` UInt8,

`FUniqID` UInt64,

`HID` UInt32,

`IsOldCounter` UInt8,

`IsEvent` UInt8,

`IsParameter` UInt8,

`DontCountHits` UInt8,

`WithHash` UInt8,

`HitColor` FixedString(1),

`UTCEventTime` DateTime,

`Age` UInt8,

`Sex` UInt8,

`Income` UInt8,

`Interests` UInt16,

`Robotness` UInt8,

`GeneralInterests` Array(UInt16),

`RemoteIP` UInt32,

`RemoteIP6` FixedString(16),

`WindowName` Int32,

`OpenerName` Int32,

`HistoryLength` Int16,

`BrowserLanguage` FixedString(2),

`BrowserCountry` FixedString(2),

`SocialNetwork` String,

`SocialAction` String,

`HTTPError` UInt16,

`SendTiming` Int32,

`DNSTiming` Int32,

`ConnectTiming` Int32,

`ResponseStartTiming` Int32,

`ResponseEndTiming` Int32,

`FetchTiming` Int32,

`RedirectTiming` Int32,

`DOMInteractiveTiming` Int32,

`DOMContentLoadedTiming` Int32,

`DOMCompleteTiming` Int32,

`LoadEventStartTiming` Int32,

`LoadEventEndTiming` Int32,

`NSToDOMContentLoadedTiming` Int32,

`FirstPaintTiming` Int32,

`RedirectCount` Int8,

`SocialSourceNetworkID` UInt8,

`SocialSourcePage` String,

`ParamPrice` Int64,

`ParamOrderID` String,

`ParamCurrency` FixedString(3),

`ParamCurrencyID` UInt16,

`GoalsReached` Array(UInt32),

`OpenstatServiceName` String,

`OpenstatCampaignID` String,

`OpenstatAdID` String,

`OpenstatSourceID` String,

`UTMSource` String,

`UTMMedium` String,

`UTMCampaign` String,

`UTMContent` String,

`UTMTerm` String,

`FromTag` String,

`HasGCLID` UInt8,

`RefererHash` UInt64,

`URLHash` UInt64,

`CLID` UInt32,

`YCLID` UInt64,

`ShareService` String,

`ShareURL` String,

`ShareTitle` String,

`ParsedParams` Nested(

Key1 String,

Key2 String,

Key3 String,

Key4 String,

Key5 String,

ValueDouble Float64),

`IslandID` FixedString(16),

`RequestNum` UInt32,

`RequestTry` UInt8

)

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard02}/tables/hits', '{replica02}')

PARTITION BY toYYYYMM(EventDate)

ORDER BY (CounterID, EventDate, intHash32(UserID))

SAMPLE BY intHash32(UserID);

CREATE TABLE dwh01.hits_shard

<!----- skipped, copy detail from previous ----->

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard01}/tables/hits', '{replica01}')

<!----- skipped, copy detail from previous ----->

CREATE TABLE dwh03.hits_shard

<!----- skipped, copy detail from previous ----->

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard02}/tables/hits', '{replica02}')

<!----- skipped, copy detail from previous ----->

CREATE TABLE dwh02.hits_shard

<!----- skipped, copy detail from previous ----->

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard01}/tables/hits', '{replica01}')

<!----- skipped, copy detail from previous ----->

CREATE TABLE dwh01.hits_shard

<!----- skipped, copy detail from previous ----->

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard02}/tables/hits', '{replica02}')

<!----- skipped, copy detail from previous ----->

CREATE TABLE dwh03.hits_shard

<!----- skipped, copy detail from previous ----->

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard01}/tables/hits', '{replica01}')

<!----- skipped, copy detail from previous ----->

CREATE TABLE dwh02.hits_shard

<!----- skipped, copy detail from previous ----->

ENGINE=ReplicatedMergeTree('/clickhouse/{cluster01}/{shard02}/tables/hits', '{replica02}')

<!----- skipped, copy detail from previous ----->

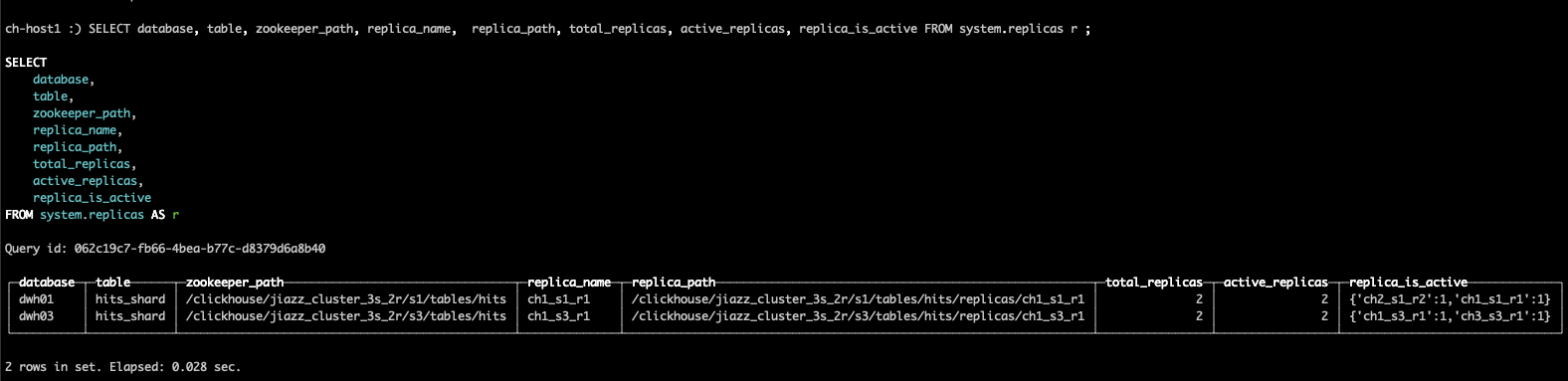

ch-host1 :) SELECT * FROM system.replicas r ;

SELECT *

FROM system.replicas AS r

Query id: b1549b41-67c9-44fe-8bfd-ea05e8205992

┌─database─┬─table──────┬─engine──────────────┬─is_leader─┬─can_become_leader─┬─is_readonly─┬─is_session_expired─┬─future_parts─┬─parts_to_check─┬─zookeeper_path─────────────────────────────────┬─replica_name─┬─replica_path──────────────────────────────────────────────────────┬─columns_version─┬─queue_size─┬─inserts_in_queue─┬─merges_in_queue─┬─part_mutations_in_queue─┬───queue_oldest_time─┬─inserts_oldest_time─┬──merges_oldest_time─┬─part_mutations_oldest_time─┬─oldest_part_to_get─┬─oldest_part_to_merge_to─┬─oldest_part_to_mutate_to─┬─log_max_index─┬─log_pointer─┬───last_queue_update─┬─absolute_delay─┬─total_replicas─┬─active_replicas─┬─last_queue_update_exception─┬─zookeeper_exception─┬─replica_is_active─────────────┐

│ dwh01 │ hits_shard │ ReplicatedMergeTree │ 1 │ 1 │ 0 │ 0 │ 0 │ 0 │ /clickhouse/jiazz_cluster_3s_2r/s1/tables/hits │ ch1_s1_r1 │ /clickhouse/jiazz_cluster_3s_2r/s1/tables/hits/replicas/ch1_s1_r1 │ -1 │ 0 │ 0 │ 0 │ 0 │ 1970-01-01 00:00:00 │ 1970-01-01 00:00:00 │ 1970-01-01 00:00:00 │ 1970-01-01 00:00:00 │ │ │ │ 0 │ 0 │ 1970-01-01 00:00:00 │ 0 │ 2 │ 2 │ │ │ {'ch2_s1_r2':1,'ch1_s1_r1':1} │

│ dwh03 │ hits_shard │ ReplicatedMergeTree │ 1 │ 1 │ 0 │ 0 │ 0 │ 0 │ /clickhouse/jiazz_cluster_3s_2r/s3/tables/hits │ ch1_s3_r1 │ /clickhouse/jiazz_cluster_3s_2r/s3/tables/hits/replicas/ch1_s3_r1 │ -1 │ 0 │ 0 │ 0 │ 0 │ 1970-01-01 00:00:00 │ 1970-01-01 00:00:00 │ 1970-01-01 00:00:00 │ 1970-01-01 00:00:00 │ │ │ │ 0 │ 0 │ 1970-01-01 00:00:00 │ 0 │ 2 │ 2 │ │ │ {'ch1_s3_r1':1,'ch3_s3_r1':1} │

└──────────┴────────────┴─────────────────────┴───────────┴───────────────────┴─────────────┴────────────────────┴──────────────┴────────────────┴────────────────────────────────────────────────┴──────────────┴───────────────────────────────────────────────────────────────────┴─────────────────┴────────────┴──────────────────┴─────────────────┴─────────────────────────┴─────────────────────┴─────────────────────┴─────────────────────┴────────────────────────────┴────────────────────┴─────────────────────────┴──────────────────────────┴───────────────┴─────────────┴─────────────────────┴────────────────┴────────────────┴─────────────────┴─────────────────────────────┴─────────────────────┴───────────────────────────────┘

2 rows in set. Elapsed: 0.036 sec.

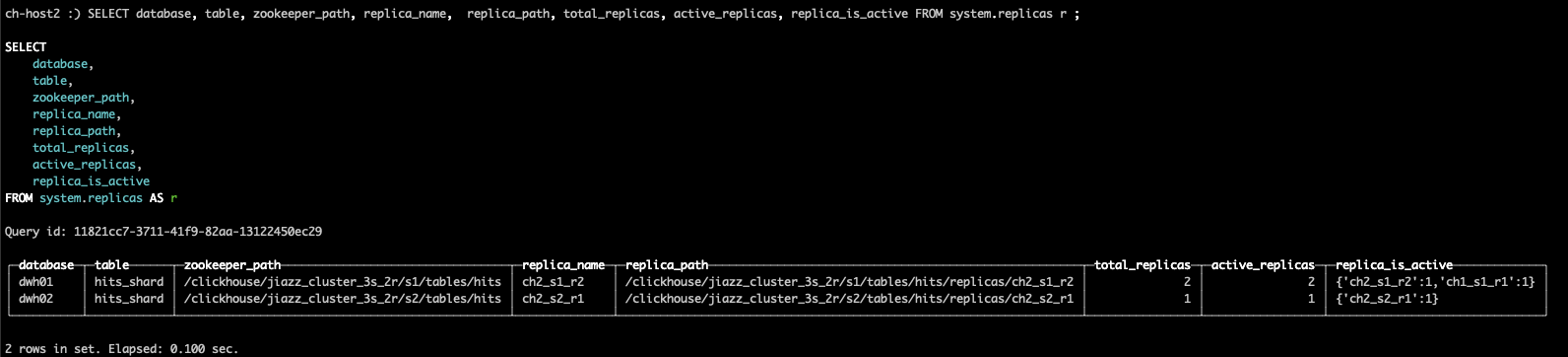

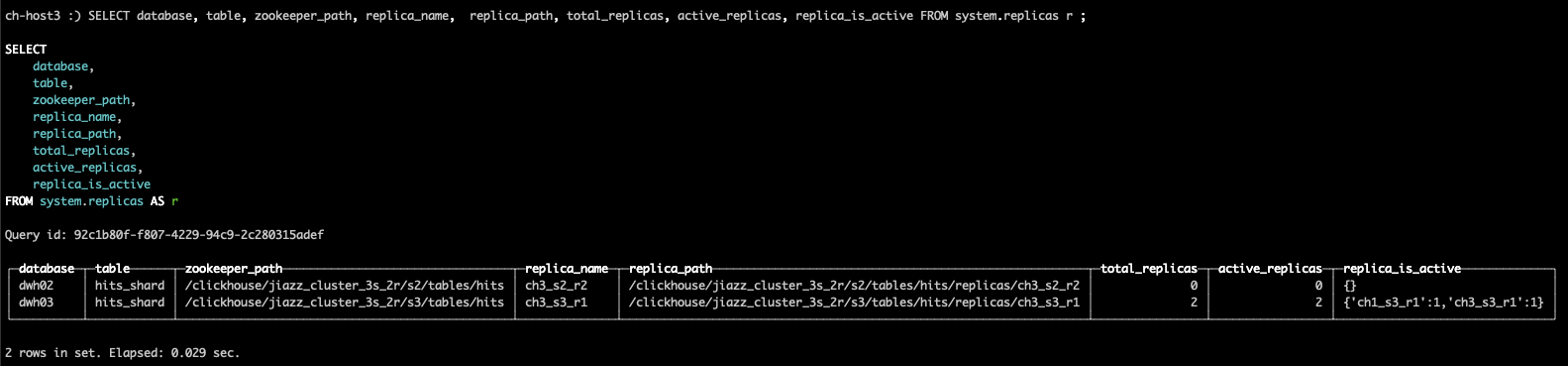

# 只查重点信息,观查对应每个节点是否正常

SELECT database, table, zookeeper_path, replica_name, replica_path, total_replicas, active_replicas, replica_is_active FROM system.replicas r ;

ch-host1

ch-host2

ch-host3

注意:数据库参数是空字符串“”,它反过来使用每个分片的默认数据库来查找正确的数据库(例如dwh01、dwh02、dwh03)。

在正常配置(而不是这种循环复制)中,分片本地数据表和分布式表位于同一数据库上。

-- execute the follow DDL on ALL 3 nodes (ch-host1, ch-host2, ch-host3)

CREATE TABLE dwh.hits_distributed

(

`WatchID` UInt64,

`JavaEnable` UInt8,

`Title` String,

`GoodEvent` Int16,

`EventTime` DateTime,

`EventDate` Date,

`CounterID` UInt32,

`ClientIP` UInt32,

`ClientIP6` FixedString(16),

`RegionID` UInt32,

`UserID` UInt64,

`CounterClass` Int8,

`OS` UInt8,

`UserAgent` UInt8,

`URL` String,

`Referer` String,

`URLDomain` String,

`RefererDomain` String,

`Refresh` UInt8,

`IsRobot` UInt8,

`RefererCategories` Array(UInt16),

`URLCategories` Array(UInt16),

`URLRegions` Array(UInt32),

`RefererRegions` Array(UInt32),

`ResolutionWidth` UInt16,

`ResolutionHeight` UInt16,

`ResolutionDepth` UInt8,

`FlashMajor` UInt8,

`FlashMinor` UInt8,

`FlashMinor2` String,

`NetMajor` UInt8,

`NetMinor` UInt8,

`UserAgentMajor` UInt16,

`UserAgentMinor` FixedString(2),

`CookieEnable` UInt8,

`JavascriptEnable` UInt8,

`IsMobile` UInt8,

`MobilePhone` UInt8,

`MobilePhoneModel` String,

`Params` String,

`IPNetworkID` UInt32,

`TraficSourceID` Int8,

`SearchEngineID` UInt16,

`SearchPhrase` String,

`AdvEngineID` UInt8,

`IsArtifical` UInt8,

`WindowClientWidth` UInt16,

`WindowClientHeight` UInt16,

`ClientTimeZone` Int16,

`ClientEventTime` DateTime,

`SilverlightVersion1` UInt8,

`SilverlightVersion2` UInt8,

`SilverlightVersion3` UInt32,

`SilverlightVersion4` UInt16,

`PageCharset` String,

`CodeVersion` UInt32,

`IsLink` UInt8,

`IsDownload` UInt8,

`IsNotBounce` UInt8,

`FUniqID` UInt64,

`HID` UInt32,

`IsOldCounter` UInt8,

`IsEvent` UInt8,

`IsParameter` UInt8,

`DontCountHits` UInt8,

`WithHash` UInt8,

`HitColor` FixedString(1),

`UTCEventTime` DateTime,

`Age` UInt8,

`Sex` UInt8,

`Income` UInt8,

`Interests` UInt16,

`Robotness` UInt8,

`GeneralInterests` Array(UInt16),

`RemoteIP` UInt32,

`RemoteIP6` FixedString(16),

`WindowName` Int32,

`OpenerName` Int32,

`HistoryLength` Int16,

`BrowserLanguage` FixedString(2),

`BrowserCountry` FixedString(2),

`SocialNetwork` String,

`SocialAction` String,

`HTTPError` UInt16,

`SendTiming` Int32,

`DNSTiming` Int32,

`ConnectTiming` Int32,

`ResponseStartTiming` Int32,

`ResponseEndTiming` Int32,

`FetchTiming` Int32,

`RedirectTiming` Int32,

`DOMInteractiveTiming` Int32,

`DOMContentLoadedTiming` Int32,

`DOMCompleteTiming` Int32,

`LoadEventStartTiming` Int32,

`LoadEventEndTiming` Int32,

`NSToDOMContentLoadedTiming` Int32,

`FirstPaintTiming` Int32,

`RedirectCount` Int8,

`SocialSourceNetworkID` UInt8,

`SocialSourcePage` String,

`ParamPrice` Int64,

`ParamOrderID` String,

`ParamCurrency` FixedString(3),

`ParamCurrencyID` UInt16,

`GoalsReached` Array(UInt32),

`OpenstatServiceName` String,

`OpenstatCampaignID` String,

`OpenstatAdID` String,

`OpenstatSourceID` String,

`UTMSource` String,

`UTMMedium` String,

`UTMCampaign` String,

`UTMContent` String,

`UTMTerm` String,

`FromTag` String,

`HasGCLID` UInt8,

`RefererHash` UInt64,

`URLHash` UInt64,

`CLID` UInt32,

`YCLID` UInt64,

`ShareService` String,

`ShareURL` String,

`ShareTitle` String,

`ParsedParams.Key1` Array(String),

`ParsedParams.Key2` Array(String),

`ParsedParams.Key3` Array(String),

`ParsedParams.Key4` Array(String),

`ParsedParams.Key5` Array(String),

`ParsedParams.ValueDouble` Array(Float64),

`IslandID` FixedString(16),

`RequestNum` UInt32,

`RequestTry` UInt8

)

ENGINE = Distributed('jiazz_cluster_3s_2r', '', 'hits_shard', rand());

数据集 https://clickhouse.com/docs/en/getting-started/tutorial/

- rand()为sharding key,数据将会随机分配到每个分片

- 由于internal_replication=true,一旦数据写入每个分片的第一个副本,ClickHouse系统将自动将数据复制到第二个副本中

# 在里选择ch-host1的挂载目录logs

cd ~/studySpace/docker/clickhouse/ch2-volume1/logs

curl https://datasets.clickhouse.com/hits/tsv/hits_v1.tsv.xz | unxz --threads=`nproc` > hits_v1.tsv

docker exec -it ch-host1 /bin/bash

root@ch-host1:/#cd /var/log/clickhouse-server

# 数据文件比较大,导入时间要长

root@ch-host1:/var/log/clickhouse-server# clickhouse-client --query "INSERT INTO dwh.hits_distributed FORMAT TSV" --max_insert_block_size=100000 < hits_v1.tsv

查数据

ch-host1 :) select * from dwh.hits_distributed limit 1;

SELECT *

FROM dwh.hits_distributed

LIMIT 1

Query id: 126b3803-659e-48fb-a12f-9e0a261de1ca

┌─────────────WatchID─┬─JavaEnable─┬─Title───────────────────────────────────────────────────────────┬─GoodEvent─┬───────────EventTime─┬──EventDate─┬─CounterID─┬───ClientIP─┬─ClientIP6─┬─RegionID─┬─────────────UserID─┬─CounterClass─┬─OS─┬─UserAgent─┬─URL────────────────────────────────────────────────────────────┬─Referer────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┬─URLDomain─────────┬─RefererDomain───────┬─Refresh─┬─IsRobot─┬─RefererCategories─┬─URLCategories────┬─URLRegions─┬─RefererRegions─┬─ResolutionWidth─┬─ResolutionHeight─┬─ResolutionDepth─┬─FlashMajor─┬─FlashMinor─┬─FlashMinor2─┬─NetMajor─┬─NetMinor─┬─UserAgentMajor─┬─UserAgentMinor─┬─CookieEnable─┬─JavascriptEnable─┬─IsMobile─┬─MobilePhone─┬─MobilePhoneModel─┬─Params─┬─IPNetworkID─┬─TraficSourceID─┬─SearchEngineID─┬─SearchPhrase─┬─AdvEngineID─┬─IsArtifical─┬─WindowClientWidth─┬─WindowClientHeight─┬─ClientTimeZone─┬─────ClientEventTime─┬─SilverlightVersion1─┬─SilverlightVersion2─┬─SilverlightVersion3─┬─SilverlightVersion4─┬─PageCharset─┬─CodeVersion─┬─IsLink─┬─IsDownload─┬─IsNotBounce─┬─────────────FUniqID─┬───────HID─┬─IsOldCounter─┬─IsEvent─┬─IsParameter─┬─DontCountHits─┬─WithHash─┬─HitColor─┬────────UTCEventTime─┬─Age─┬─Sex─┬─Income─┬─Interests─┬─Robotness─┬─GeneralInterests───────────────────────┬───RemoteIP─┬─RemoteIP6─┬─WindowName─┬─OpenerName─┬─HistoryLength─┬─BrowserLanguage─┬─BrowserCountry─┬─SocialNetwork─┬─SocialAction─┬─HTTPError─┬─SendTiming─┬─DNSTiming─┬─ConnectTiming─┬─ResponseStartTiming─┬─ResponseEndTiming─┬─FetchTiming─┬─RedirectTiming─┬─DOMInteractiveTiming─┬─DOMContentLoadedTiming─┬─DOMCompleteTiming─┬─LoadEventStartTiming─┬─LoadEventEndTiming─┬─NSToDOMContentLoadedTiming─┬─FirstPaintTiming─┬─RedirectCount─┬─SocialSourceNetworkID─┬─SocialSourcePage─┬─ParamPrice─┬─ParamOrderID─┬─ParamCurrency─┬─ParamCurrencyID─┬─GoalsReached─┬─OpenstatServiceName─┬─OpenstatCampaignID─┬─OpenstatAdID─┬─OpenstatSourceID─┬─UTMSource─┬─UTMMedium─┬─UTMCampaign─┬─UTMContent─┬─UTMTerm─┬─FromTag─┬─HasGCLID─┬─────────RefererHash─┬────────────URLHash─┬─CLID─┬─YCLID─┬─ShareService─┬─ShareURL─┬─ShareTitle─┬─ParsedParams.Key1─┬─ParsedParams.Key2─┬─ParsedParams.Key3─┬─ParsedParams.Key4─┬─ParsedParams.Key5─┬─ParsedParams.ValueDouble─┬─IslandID─┬─RequestNum─┬─RequestTry─┐

│ 4944118417295196513 │ 1 │ вышивка в Москве - Образовать фото мочия - Почта Mail.Ru: Силва │ 1 │ 2014-03-17 13:19:26 │ 2014-03-17 │ 57 │ 1581336367 │ ��:�[�Uc��m��� │ 54 │ 610708775678702928 │ 0 │ 1 │ 3 │ http://hurpasspx?EntityType=images.jpg,http:%2F%2Fweb%2Fimages │ http://iconnId=140Z1BmWE9JVEdoQ2Zud01aa0f8b72a2cb141ad2fbb6bc0488a293f1c0b7bbfe6e0921396325_7860c5b30e0216&mb_url=http://svit/realesta.com/?do=news.ru/yandex.php?thread%2FEl0TTQQZIClEGYFcJG1F4XldSeWtvVkFkf38xIAEsQVgWCVtUe15_d34cemhbU0dIfji-RM │ hurpass.uaprod.hu │ incityadspix.com.vn │ 0 │ 0 │ [6,98,456,8586] │ [5,92,469,13425] │ [348,1010] │ [267,694] │ 1846 │ 952 │ 29 │ 8 │ 0 │ 0. │ 0 │ 0 │ 24 │ s� │ 1 │ 1 │ 0 │ 0 │ │ │ 3223479 │ -1 │ 0 │ │ 0 │ 1 │ 1246 │ 906 │ 157 │ 2014-03-17 16:32:09 │ 5 │ 1 │ 19832 │ 0 │ utf-8 │ 291 │ 0 │ 0 │ 0 │ 5970711023083247428 │ 117479153 │ 0 │ 0 │ 0 │ 1 │ 0 │ E │ 2014-03-17 13:03:08 │ 55 │ 1 │ 2 │ 16014 │ 8 │ [5,2,14,925,4,3993,72,6,9,27,1,3,1019] │ 2024189568 │ ���_Y����5Ӵ. │ 8687 │ -1 │ 1 │ nD │ Tp │ │ │ 0 │ -1 │ -1 │ -1 │ -1 │ -1 │ -1 │ -1 │ -1 │ -1 │ -1 │ -1 │ -1 │ 7557 │ -1 │ -1 │ 0 │ │ 0 │ │ � │ 0 │ [834016] │ │ │ │ │ │ │ │ │ │ │ 0 │ 7613536979754271517 │ 966296541083783832 │ 0 │ 0 │ │ │ │ [] │ [] │ [] │ [] │ [] │ [] │ ���+������bKQ9 │ 1324 │ 1 │

└─────────────────────┴────────────┴─────────────────────────────────────────────────────────────────┴───────────┴─────────────────────┴────────────┴───────────┴────────────┴───────────┴──────────┴────────────────────┴──────────────┴────┴───────────┴────────────────────────────────────────────────────────────────┴────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┴───────────────────┴─────────────────────┴─────────┴─────────┴───────────────────┴──────────────────┴────────────┴────────────────┴─────────────────┴──────────────────┴─────────────────┴────────────┴────────────┴─────────────┴──────────┴──────────┴────────────────┴────────────────┴──────────────┴──────────────────┴──────────┴─────────────┴──────────────────┴────────┴─────────────┴────────────────┴────────────────┴──────────────┴─────────────┴─────────────┴───────────────────┴────────────────────┴────────────────┴─────────────────────┴─────────────────────┴─────────────────────┴─────────────────────┴─────────────────────┴─────────────┴─────────────┴────────┴────────────┴─────────────┴─────────────────────┴───────────┴──────────────┴─────────┴─────────────┴───────────────┴──────────┴──────────┴─────────────────────┴─────┴─────┴────────┴───────────┴───────────┴────────────────────────────────────────┴────────────┴───────────┴────────────┴────────────┴───────────────┴─────────────────┴────────────────┴───────────────┴──────────────┴───────────┴────────────┴───────────┴───────────────┴─────────────────────┴───────────────────┴─────────────┴────────────────┴──────────────────────┴────────────────────────┴───────────────────┴──────────────────────┴────────────────────┴────────────────────────────┴──────────────────┴───────────────┴───────────────────────┴──────────────────┴────────────┴──────────────┴───────────────┴─────────────────┴──────────────┴─────────────────────┴────────────────────┴──────────────┴──────────────────┴───────────┴───────────┴─────────────┴────────────┴─────────┴─────────┴──────────┴─────────────────────┴────────────────────┴──────┴───────┴──────────────┴──────────┴────────────┴───────────────────┴───────────────────┴───────────────────┴───────────────────┴───────────────────┴──────────────────────────┴──────────┴────────────┴────────────┘

1 rows in set. Elapsed: 28.719 sec.

如果以上有错误就删除数据库并重新开始

DROP DATABASE IF EXISTS dwh

DROP DATABASE IF EXISTS dwh01

DROP DATABASE IF EXISTS dwh02

DROP DATABASE IF EXISTS dwh03