1. Overview

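Keepalived implements high availability on top of the VRRP protocol. Anyone familiar with networking will know the technique well: VRRP was long the standard protocol for dual-device, dual-uplink redundancy on core switches. Newer active-active technologies for core equipment have gradually displaced VRRP/HSRP there, but VRRP is still widely used on Linux hosts. Keepalived was originally designed to make IPVS highly available.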

Official site: http://keepalived.org/

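Features:

- Moves the virtual IP (VIP) between nodes based on the VRRP protocol;

- Generates IPVS rules (predefined in the configuration file) on the node that holds the VIP;

- Performs health checks on each real server (RS) in the IPVS cluster (Keepalived pairs well with LVS, HAProxy and others; Keepalived + HAProxy in particular is used in many production environments);

- Calls user-defined scripts through a script interface to influence cluster behavior, which is how it supports services such as nginx and HAProxy.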

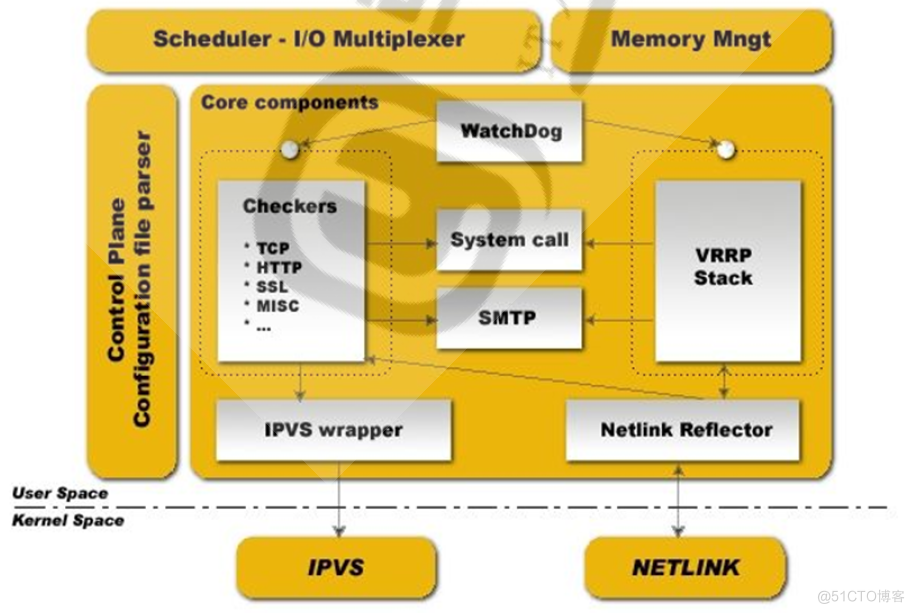

2. How It Works and Architecture

Official documentation:

https://keepalived.org/doc/

http://keepalived.org/documentation.html

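- Core user-space components:

vrrp stack: VIP advertisements;

checkers: real server monitoring;

system call: invokes scripts on VRRP state transitions;

SMTP: mail component;

IPVS wrapper: generates IPVS rules;

Netlink Reflector: network interface handling;

WatchDog: process monitoring;

- Control component: the keepalived.conf parser, which loads the Keepalived configuration.

- I/O multiplexer: Keepalived's own thread abstraction, optimized for networking.

- Memory management component: provides access to generic memory management functions (allocation, reallocation, release, and so on).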

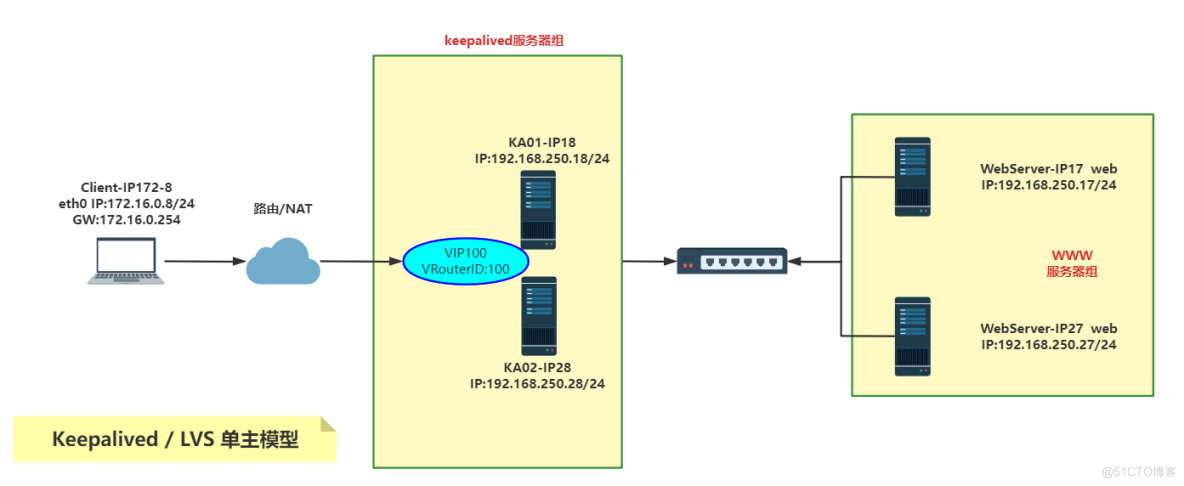

3. Topology and Host Preparation

1) Two web servers:

Hostname: WebServer-IP17

CentOS 7.9

IP: 192.168.250.17

Hostname: WebServer-IP27

CentOS 7.9

IP: 192.168.250.27

2) Two Keepalived servers:

Hostname: KA-IP18

CentOS 8.4

IP: 192.168.250.18/24

Keepalived v2.1.5 (07/13,2020)

Hostname: KA-IP28

CentOS 8.4

IP: 192.168.250.28/24

Keepalived v2.1.5 (07/13,2020)

3) One client host:

Hostname: Client-IP172-8

CentOS 8.4

IP: 172.16.0.8/24, NATed to 192.168.250.254 to reach the 192.168.250.X network

4. Preparing the Back-End Web Servers

4.1 Install Apache httpd and complete the basic web configuration

# The base environment (CentOS tuning, firewall disabled, time synchronization, and so on) must be in place first. Following the planned topology, group the four servers and rename them.

# Set the host name

[root@centos79 ~]# hostnamectl set-hostname WebServer-IP17

[root@centos79 ~]# exit

# Point NTP at Alibaba Cloud's NTP server and enable time synchronization

[root@webserver-ip17 ~]# timedatectl set-timezone Asia/Shanghai

[root@webserver-ip17 ~]# sed -i '/^server/cserver ntp.aliyun.com iburst' /etc/chrony.conf

[root@webserver-ip17 ~]# systemctl enable --now chronyd.service

# Install Apache

[root@webserver-ip17 ~]# yum -y install httpd

# Create the home page

[root@webserver-ip17 ~]# hostname > /var/www/html/indexTmp.html

[root@webserver-ip17 ~]# hostname -I >> /var/www/html/indexTmp.html

# Merge the two lines of /var/www/html/indexTmp.html into one line, which is easier to read in the tests later

[root@webserver-ip17 ~]# cat /var/www/html/indexTmp.html | xargs > /var/www/html/index.html

[root@webserver-ip17 ~]# ll /var/www/html/

total 8

-rw-r--r-- 1 root root 30 Mar 31 23:07 index.html

-rw-r--r-- 1 root root 31 Mar 31 23:07 indexTmp.html

[root@webserver-ip17 ~]# rm -rf /var/www/html/indexTmp.html

[root@webserver-ip17 ~]# ll /var/www/html/

total 4

-rw-r--r-- 1 root root 30 Mar 31 23:07 index.html

# Start Apache and enable it at boot

[root@webserver-ip17 ~]# systemctl enable --now httpd

# Verify

[root@webserver-ip17 ~]# curl 192.168.250.17

webserver-ip17 192.168.250.17

[root@webserver-ip17 ~]#

#####################################################################################

#### Configure and test Apache on the other host, webserver-ip27 (192.168.250.27), the same way

# The host name, time synchronization, and other base settings must be done as usual

[root@centos79 ~]# hostnamectl set-hostname WebServer-IP27

[root@centos79 ~]# exit

# Point NTP at Alibaba Cloud's NTP server and enable time synchronization

[root@webserver-ip27 ~]# timedatectl set-timezone Asia/Shanghai

[root@webserver-ip27 ~]# sed -i '/^server/cserver ntp.aliyun.com iburst' /etc/chrony.conf

[root@webserver-ip27 ~]# systemctl enable --now chronyd.service

# Install Apache, create the home page, and start the service in one go

[root@webserver-ip27 ~]# yum -y install httpd;hostname > /var/www/html/indexTmp.html;hostname -I >> /var/www/html/indexTmp.html;cat /var/www/html/indexTmp.html | xargs > /var/www/html/index.html;rm -rf /var/www/html/indexTmp.html;systemctl enable --now httpd

# Verify

[root@webserver-ip27 ~]# curl 192.168.250.27

webserver-ip27 192.168.250.27

[root@webserver-ip27 ~]#
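The home page built above is just the host name and its addresses joined on one line. As a minimal sketch, the temporary file can be avoided; `build_index_line` is a hypothetical helper name introduced here for illustration, and the sample values are taken from the output above.

```shell
#!/usr/bin/env bash
# build_index_line is a hypothetical helper, not part of the original setup.
# It joins a host name and an address list into one whitespace-normalized line.
build_index_line() {
    local name="$1" addrs="$2"
    # xargs with no command echoes its input, collapsing runs of whitespace
    # and dropping the trailing blank that `hostname -I` prints.
    printf '%s %s\n' "$name" "$addrs" | xargs
}

# Demonstration with fixed sample values. On a real server one might run:
#   build_index_line "$(hostname)" "$(hostname -I)" > /var/www/html/index.html
build_index_line "webserver-ip17" "192.168.250.17 "
```

The result is the same single line that the `cat ... | xargs` step produces, without leaving indexTmp.html behind.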

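4.2 LVS-related configuration

Brief overview: because Keepalived will drive LVS in DR mode to load-balance the web service, both back-end servers need their ARP behavior and VIP binding configured first. Once LVS + Keepalived is set up, the back ends can then be reached in LVS-DR mode right away. This procedure was covered in detail in an earlier post on my blog, so here the two servers are configured with a script.

#### Contents of lvs_dr_rs.sh; edit it in VS Code, upload it to both web real servers, and run it there

vip=192.168.250.100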

mask='255.255.255.255'

dev=lo:1

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig $dev $vip netmask $mask

echo "The RS Server is Ready!"

;;

stop)

ifconfig $dev down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "The RS Server is Canceled!"

;;

*)

echo "Usage: $(basename $0) start|stop"

exit 1

;;

esac
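After `start` has run, it can be worth confirming that the kernel settings actually hold the values the script wrote. The following is a minimal sketch under that assumption; `check_arp_settings` is a hypothetical helper, and its optional directory argument exists only so the check can be tried against a scratch directory instead of /proc.

```shell
#!/usr/bin/env bash
# check_arp_settings is a hypothetical helper for illustration.
# $1: directory holding the per-interface settings
#     (defaults to /proc/sys/net/ipv4/conf, the path lvs_dr_rs.sh writes to).
check_arp_settings() {
    local base="${1:-/proc/sys/net/ipv4/conf}" iface ignore announce
    for iface in all lo; do
        ignore=$(cat "$base/$iface/arp_ignore" 2>/dev/null)
        announce=$(cat "$base/$iface/arp_announce" 2>/dev/null)
        # The start branch sets arp_ignore=1 and arp_announce=2.
        if [ "$ignore" != "1" ] || [ "$announce" != "2" ]; then
            echo "$iface: arp_ignore=${ignore:-?} arp_announce=${announce:-?} (not ready)"
            return 1
        fi
    done
    echo "ARP settings match the values set by 'start'"
}

# On a real server, after `bash lvs_dr_rs.sh start`, one might run:
#   check_arp_settings
```

A non-zero return value means at least one of the four files does not hold the expected value, for example after a reboot, since the script's `echo` writes are not persistent.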

## Configuration on 192.168.250.17

[root@webserver-ip17 ~]# rz

rz waiting to receive.

Starting zmodem transfer. Press Ctrl+C to cancel.

Transferring lvs_dr_rs.sh...

100% 728 bytes 728 bytes/sec 00:00:01 0 Errors

[root@webserver-ip17 ~]# bash

[root@webserver-ip17 ~]# bash lvs_dr_rs.sh

Usage: lvs_dr_rs.sh start|stop

[root@webserver-ip17 ~]# bash lvs_dr_rs.sh start

The RS Server is Ready!

[root@webserver-ip17 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.250.100/32 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:a8:67 brd ff:ff:ff:ff:ff:ff

inet 192.168.250.17/24 brd 192.168.250.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea3:a867/64 scope link

valid_lft forever preferred_lft forever

[root@webserver-ip17 ~]#

## Configuration on 192.168.250.27

[root@webserver-ip27 ~]# rz

rz waiting to receive.

Starting zmodem transfer. Press Ctrl+C to cancel.

Transferring lvs_dr_rs.sh...

100% 728 bytes 728 bytes/sec 00:00:01 0 Errors

[root@webserver-ip27 ~]# bash lvs_dr_rs.sh start

The RS Server is Ready!

[root@webserver-ip27 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.250.100/32 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:fb:92 brd ff:ff:ff:ff:ff:ff

inet 192.168.250.27/24 brd 192.168.250.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea3:fb92/64 scope link

valid_lft forever preferred_lft forever

[root@webserver-ip27 ~]#

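5. Configuring the Keepalived Servers

Step 1: configure a single-master (master/slave) Keepalived setup and test it. Step 2: add the IPVS-related configuration for LVS so that traffic reaches the back-end web real servers.

5.1 Configure a master/slave single-master Keepalived setup

#### Keepalived configuration on Keepalived-IP18 (192.168.250.18)

[root@CentOS84-IP18 ]#hostnamectl set-hostname Keepalived-IP18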

[root@CentOS84-IP18 ]#exit

[root@Keepalived-IP18 ]#timedatectl set-timezone Asia/Shanghai

[root@Keepalived-IP18 ]#sed -i '/^server/cserver ntp.aliyun.com iburst' /etc/chrony.conf

[root@Keepalived-IP18 ]#systemctl enable --now chronyd.service

[root@Keepalived-IP18 ]#systemctl restart chronyd.service

[root@Keepalived-IP18 ]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:e8:6b brd ff:ff:ff:ff:ff:ff

inet 192.168.250.18/24 brd 192.168.250.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea3:e86b/64 scope link

valid_lft forever preferred_lft forever

[root@Keepalived-IP18 ]#

[root@Keepalived-IP18 ]#dnf info keepalived

Last metadata expiration check: 19:41:03 ago on Wed 30 Mar 2022 10:03:06 PM CST.

Installed Packages

Name : keepalived

Version : 2.1.5

....................

[root@Keepalived-IP18 ]#dnf install keepalived -y

[root@Keepalived-IP18 ]#keepalived -v

Keepalived v2.1.5 (07/13,2020)

Copyright(C) 2001-2020 Alexandre Cassen, <acassen@gmail.com>

Built with kernel headers for Linux 4.18.0

Running on Linux 4.18.0-305.3.1.el8.x86_64 #1 SMP Tue Jun 1 16:14:33 UTC 2021

......................

# Back up the default keepalived.conf and edit it to match the planned topology. See the appendix at the end of the article for the meaning of each line.

[root@Keepalived-IP18 ]#cp /etc/keepalived/keepalived.conf{,.bak}

[root@Keepalived-IP18 ]#vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

# Global settings: mail settings; failure notification also relies on the system's /etc/mail.rc and a notification script.

global_defs {

notification_email {

root@shone.cn

}

notification_email_from admin@shone.cn

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id KA-IP18

vrrp_skip_check_adv_addr

#vrrp_strict # strict mode; disabling it is recommended

vrrp_garp_interval 0

vrrp_gna_interval 0

#vrrp_mcast_group4 234.0.0.66 # a custom multicast address can carry the VRRP advertisements; the unicast setup below is recommended instead

}

vrrp_instance VI_IP100 {

state MASTER

interface eth0

virtual_router_id 100

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass shone888

}

virtual_ipaddress {

192.168.250.100 dev eth0 label eth0:1

}

# Unicast configuration

unicast_src_ip 192.168.250.18

unicast_peer {

192.168.250.28

}

}

# With everything configured, start the service and enable it at boot

[root@Keepalived-IP18 ]#systemctl enable --now keepalived

#### Keepalived configuration on Keepalived-IP28 (192.168.250.28)

[root@CentOS84-IP28 ]#hostnamectl set-hostname Keepalived-IP28

[root@CentOS84-IP28 ]#exit

[root@Keepalived-IP28 ]#timedatectl set-timezone Asia/Shanghai

[root@Keepalived-IP28 ]#sed -i '/^server/cserver ntp.aliyun.com iburst' /etc/chrony.conf

[root@Keepalived-IP28 ]#systemctl enable --now chronyd.service

[root@Keepalived-IP28 ]#systemctl restart chronyd.service

[root@Keepalived-IP28 ]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:e2:bf brd ff:ff:ff:ff:ff:ff

inet 192.168.250.28/24 brd 192.168.250.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea3:e2bf/64 scope link

valid_lft forever preferred_lft forever

[root@Keepalived-IP28 ]#

[root@Keepalived-IP28 ]#dnf info keepalived

Last metadata expiration check: 19:41:03 ago on Wed 30 Mar 2022 10:03:06 PM CST.

Installed Packages

Name : keepalived

Version : 2.1.5

....................

[root@Keepalived-IP28 ]#dnf install keepalived -y

[root@Keepalived-IP28 ]#keepalived -v

Keepalived v2.1.5 (07/13,2020)

......................

# Back up the default keepalived.conf and edit it to match the planned topology. See the appendix at the end of the article for the meaning of each line.

[root@Keepalived-IP28 ]#cp /etc/keepalived/keepalived.conf{,.bak}

[root@Keepalived-IP28 ]#vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@shone.cn

}

notification_email_from admin@shone.cn

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id KA-IP28

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

#vrrp_mcast_group4 234.0.0.66

}

vrrp_instance VI_IP100 {

state BACKUP

interface eth0

virtual_router_id 100

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass shone888

}

virtual_ipaddress {

192.168.250.100 dev eth0 label eth0:1

}

unicast_src_ip 192.168.250.28

unicast_peer {

192.168.250.18

}

}

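# With everything configured, start the service and enable it at boot

[root@Keepalived-IP28 ]#systemctl enable --now keepalived

#### With the configuration above in place, run a quick test of the single-master Keepalived setup

# In the normal state the VIP should sit on KA-IP18, because it has the higher priority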

[root@Keepalived-IP18 ]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:e8:6b brd ff:ff:ff:ff:ff:ff

inet 192.168.250.18/24 brd 192.168.250.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.250.100/32 scope global eth0:1 ## the VIP is indeed bound on IP18

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea3:e86b/64 scope link

valid_lft forever preferred_lft forever

[root@Keepalived-IP18 ]#

#####################################################################################

# No VIP is bound on IP28

[root@Keepalived-IP28 ]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:e2:bf brd ff:ff:ff:ff:ff:ff

inet 192.168.250.28/24 brd 192.168.250.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea3:e2bf/64 scope link

valid_lft forever preferred_lft forever

[root@Keepalived-IP28 ]#
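Reading through `ip a` output by eye works for two nodes, but the same check can be scripted. The sketch below is an illustration only: `holds_vip` is a hypothetical helper that reads address listings on standard input (in the format shown above) and reports whether the given VIP is bound.

```shell
#!/usr/bin/env bash
# holds_vip is a hypothetical helper for illustration.
# It reads `ip a` style output on stdin and succeeds if the VIP ($1) is bound.
holds_vip() {
    local vip="$1"
    # Escape the dots and require "/<prefix> " after the address, so that
    # 192.168.250.10 does not match a line containing 192.168.250.100.
    grep -Eq "inet ${vip//./\\.}/[0-9]+ "
}

# Demonstration with sample lines copied from the IP18 output above.
# On a real node one might run: ip a show dev eth0 | holds_vip 192.168.250.100
sample='inet 192.168.250.18/24 brd 192.168.250.255 scope global noprefixroute eth0
inet 192.168.250.100/32 scope global eth0:1'
if printf '%s\n' "$sample" | holds_vip 192.168.250.100; then
    echo "VIP is bound on this node"
fi
```

Run on both Keepalived hosts, exactly one of them should report the VIP as bound in the single-master setup.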

#####################################################################################

# The packet capture shows VRRP advertisements flowing from IP18 to IP28

[root@Keepalived-IP28 ]#tcpdump -i eth0 -nn src host 192.168.250.18 and dst 192.168.250.28

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

19:14:56.311959 IP 192.168.250.18 > 192.168.250.28: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20

19:14:57.312137 IP 192.168.250.18 > 192.168.250.28: VRRPv2, Advertisement, vrid 100, prio 100, authtype simple, intvl 1s, length 20

19:14:58.312325 IP 192.168.250.18 > 192.168.250.28: VRRPv2, Advertisement, vrid 100, .....................

[root@Keepalived-IP28 ]#

[root@Keepalived-IP28 ]#tcpdump -i eth0 -nn src host 192.168.250.28 and dst 192.168.250.18

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

19:13:48.714620 ARP, Reply 192.168.250.28 is-at 00:50:56:a3:e2:bf, length 28

19:14:37.354588 ARP, Reply 192.168.250.28 is-at 00:50:56:a3:e2:bf, length 28

19:15:11.658614 ARP, Reply 192.168.250.28 is-at 00:50:56:a3:e2:bf, length 28

...............

#####################################################################################

#####################################################################################

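#### Simulate a failure of IP18 (the higher-priority node) by rebooting it: the VIP moves to IP28 automatically, as the packet capture below also shows. When IP18 comes back it takes the VIP again, because the instances run in preemptive mode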

[root@Keepalived-IP28 ]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a3:e2:bf brd ff:ff:ff:ff:ff:ff

inet 192.168.250.28/24 brd 192.168.250.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.250.100/32 scope global eth0:1

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea3:e2bf/64 scope link

valid_lft forever preferred_lft forever

[root@Keepalived-IP28 ]#tcpdump -i eth0 -nn src host 192.168.250.28 and dst 192.168.250.18

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

19:23:34.350734 ARP, Request who-has 192.168.250.18 tell 192.168.250.28, length 28

19:23:35.374733 ARP, Request who-has 192.168.250.18 tell 192.168.250.28, length 28

19:23:37.311983 ARP, Request who-has 192.168.250.18 tell 192.168.250.28, length 28

19:23:38.318712 ARP, Request who-has 192.168.250.18 tell 192.168.250.28, length 28

19:23:39.342751 ARP, Request who-has 192.168.250.18 tell 192.168.250.28, length 28

19:23:40.049623 IP 192.168.250.28 > 192.168.250.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

19:23:40.049675 IP 192.168.250.28 > 192.168.250.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

19:23:40.049680 IP 192.168.250.28 > 192.168.250.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

19:23:40.312537 IP 192.168.250.28 > 192.168.250.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

19:23:41.312750 IP 192.168.250.28 > 192.168.250.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

19:23:42.312928 IP 192.168.250.28 > 192.168.250.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

19:23:43.313117 IP 192.168.250.28 > 192.168.250.18: VRRPv2, Advertisement, vrid 100, prio 80, authtype simple, intvl 1s, length 20

19:23:45.294732 ARP, Request who-has 192.168.250.18 tell 192.168.250.28, length 28
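The failover seen in the capture follows a simple rule: among the nodes that are still reachable, the one with the highest priority becomes master. The sketch below only illustrates that rule and is not Keepalived code; `elect_master` and its input format are assumptions made for this example, and it ignores the tie-breaking that real VRRP performs when priorities are equal.

```shell
#!/usr/bin/env bash
# elect_master is a hypothetical illustration, not keepalived code.
# Input (stdin): one "name priority state" triple per line, state is up or down.
# Output: the name of the reachable node with the highest priority.
elect_master() {
    awk '$3 == "up" && (best == "" || $2 + 0 > max) { best = $1; max = $2 + 0 }
         END { if (best != "") print best }'
}

# Normal state: both nodes up, priorities 100 and 80 as configured above.
printf '%s\n' "KA-IP18 100 up" "KA-IP28 80 up" | elect_master     # prints KA-IP18
# IP18 rebooting: only IP28 is left, so it takes over the VIP.
printf '%s\n' "KA-IP18 100 down" "KA-IP28 80 up" | elect_master   # prints KA-IP28
```

When KA-IP18 returns as "up", the first case applies again, which matches the preemption observed in the test.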

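5.2 LVS-related configuration

Brief note: with the LVS-related settings on the back-end RSs done by script, the LVS-related part of Keepalived can now be configured. It must be configured on both Keepalived hosts.

#### Configuration on Keepalived 192.168.250.18

[root@Keepalived-IP18 ]#cat /etc/keepalived/keepalived.conf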

! Configuration File for keepalived

global_defs {

notification_email {

root@shone.cn

}

notification_email_from admin@shone.cn

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id KA-IP18

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

#vrrp_mcast_group4 224.0.0.18

}

vrrp_instance VI_IP100 {

state MASTER

interface eth0

virtual_router_id 100

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass shone888

}

virtual_ipaddress {

192.168.250.100 dev eth0 label eth0:1

}

unicast_src_ip 192.168.250.18

unicast_peer {

192.168.250.28

}

}

# Everything above was tested earlier; the virtual server configuration below is what needs to be added

virtual_server 192.168.250.100 80 {

delay_loop 3

lb_algo rr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.250.17 80 {

weight 1

HTTP_GET { # health check method: application-layer check

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.250.27 80 {

weight 1

TCP_CHECK { # health check method: TCP check

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

[root@Keepalived-IP18 ]#

# Restart the service after changing the configuration

[root@Keepalived-IP18 ]#systemctl restart keepalived

###################################################################################

#### Configuration on Keepalived 192.168.250.28

[root@Keepalived-IP28 ]#cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@shone.cn

}

notification_email_from admin@shone.cn

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id KA-IP28

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

#vrrp_mcast_group4 224.0.0.18

}

vrrp_instance VI_IP100 {

state BACKUP

interface eth0

virtual_router_id 100

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass shone888

}

virtual_ipaddress {

192.168.250.100 dev eth0 label eth0:1

}

unicast_src_ip 192.168.250.28

unicast_peer {

192.168.250.18

}

}

virtual_server 192.168.250.100 80 {

delay_loop 3

lb_algo rr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.250.17 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.250.27 80 {

weight 1

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

[root@Keepalived-IP28 ]#

# Restart the service after changing the configuration

[root@Keepalived-IP28 ]#systemctl restart keepalived

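6. Testing and Verification

Brief note: with everything above in place, the two back-end web servers form an LVS-DR load-balanced cluster. Keepalived removes LVS's own single point of failure and supplies the back-end health checking that LVS lacks; this setup is well suited to many small and medium-sized businesses.

#### Inspect the IPVS table the same way as in the earlier LVS posts

[root@Keepalived-IP18 ]#yum -y install ipvsadm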

[root@Keepalived-IP18 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.250.100:80 rr

-> 192.168.250.17:80 Route 1 0 0

-> 192.168.250.27:80 Route 1 0 0

[root@Keepalived-IP18 ]#

[root@Keepalived-IP28 ]#yum -y install ipvsadm

[root@Keepalived-IP28 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.250.100:80 rr

-> 192.168.250.17:80 Route 1 0 0

-> 192.168.250.27:80 Route 1 0 0

[root@Keepalived-IP28 ]#

6.1 Both back ends (17 and 27) healthy: observe access

#### With everything healthy, test access to the VIP from the client

[root@CentOS84-IP172-08 ]#curl 192.168.250.100

webserver-ip27 192.168.250.27

[root@CentOS84-IP172-08 ]#curl 192.168.250.100

webserver-ip17 192.168.250.17

[root@CentOS84-IP172-08 ]#curl 192.168.250.100

webserver-ip27 192.168.250.27

[root@CentOS84-IP172-08 ]#curl 192.168.250.100

webserver-ip17 192.168.250.17

[root@CentOS84-IP172-08 ]#curl 192.168.250.100

webserver-ip27 192.168.250.27

[root@CentOS84-IP172-08 ]#curl 192.168.250.100

webserver-ip17 192.168.250.17

[root@CentOS84-IP172-08 ]#

# Continuous access

[root@CentOS84-IP172-08 ]#while :;do curl 192.168.250.100;sleep 1;done

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27
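The strict alternation in the output is what `lb_algo rr` with equal weights is expected to produce. As a rough sketch of the idea only (the real scheduling happens inside the kernel's IPVS module, not in shell), `rr_pick` below is a hypothetical function that maps the n-th request to a back end by simple modulo.

```shell
#!/usr/bin/env bash
# rr_pick is a hypothetical illustration of round-robin selection.
# $1: zero-based request number; remaining arguments: the back-end list.
rr_pick() {
    local n="$1"; shift
    local backends=("$@")
    echo "${backends[$(( n % ${#backends[@]} ))]}"
}

# Four consecutive requests against the two real servers alternate:
# 192.168.250.27, 192.168.250.17, 192.168.250.27, 192.168.250.17
for i in 0 1 2 3; do
    rr_pick "$i" 192.168.250.27 192.168.250.17
done
```

With a single remaining back end the modulo always yields index 0, which corresponds to every request landing on the same server once the other is removed from the table.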

6.2 Simulate a failure of 192.168.250.17

#### Simulate a failure of 192.168.250.17. Its health check is at the application layer (HTTP_GET on / expecting status 200), so removing all permissions from index.html makes httpd answer 403 and the check fails

[root@webserver-ip17 ~]# chmod 0 /var/www/html/index.html

[root@webserver-ip17 ~]#

[root@CentOS84-IP172-08 ]#while :;do curl 192.168.250.100;sleep 1;done

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

^C

[root@CentOS84-IP172-08 ]#

[root@Keepalived-IP18 ]#yum -y install ipvsadm

[root@Keepalived-IP18 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.250.100:80 rr

-> 192.168.250.27:80 Route 1 0 0

[root@Keepalived-IP18 ]#

[root@Keepalived-IP28 ]#yum -y install ipvsadm

[root@Keepalived-IP28 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.250.100:80 rr

-> 192.168.250.27:80 Route 1 0 0

[root@Keepalived-IP28 ]#

6.3 Simulate failure of both back ends

#### The web service on IP17 was taken down above; now stop it on IP27 as well, so that both back-end servers are down

[root@webserver-ip27 ~]# systemctl stop httpd

[root@webserver-ip27 ~]#

[root@CentOS84-IP172-08 ]#while :;do curl 192.168.250.100;sleep 1;done

curl: (7) Failed to connect to 192.168.250.100 port 80: Connection refused

curl: (7) Failed to connect to 192.168.250.100 port 80: Connection refused

curl: (7) Failed to connect to 192.168.250.100 port 80: Connection refused

^C

[root@CentOS84-IP172-08 ]#

[root@Keepalived-IP18 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.250.100:80 rr

-> 127.0.0.1:80 Route 1 0 0

[root@Keepalived-IP18 ]#

[root@Keepalived-IP28 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.250.100:80 rr

-> 127.0.0.1:80 Route 1 0 0

[root@Keepalived-IP28 ]#

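# Summary: the IPVS table now points at 127.0.0.1, that is, at a page on the Keepalived host itself. This suggests defining a sorry page locally on both Keepalived hosts. In production, running a web service on the Keepalived hosts is not recommended, to keep their load low; a separate sorry server is the better choice. For a clear demonstration we define the sorry page directly on the Keepalived hosts, which also makes a failure of Keepalived itself easy to observe.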
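The behavior seen in the IPVS table can be summarized as: forward to the healthy real servers, and fall back to the sorry server only when none is left. The sketch below expresses just that rule; `active_targets` and its `addr:port=state` argument format are assumptions made for this illustration and have nothing to do with Keepalived's internals.

```shell
#!/usr/bin/env bash
# active_targets is a hypothetical illustration of the sorry_server fallback.
# $1: sorry server; remaining arguments: "addr:port=state" pairs (state: up|down).
active_targets() {
    local sorry="$1"; shift
    local healthy=() entry
    for entry in "$@"; do
        # ${entry##*=} is the state, ${entry%=*} is the addr:port part.
        if [ "${entry##*=}" = "up" ]; then
            healthy+=("${entry%=*}")
        fi
    done
    if [ "${#healthy[@]}" -eq 0 ]; then
        echo "$sorry"
    else
        printf '%s\n' "${healthy[@]}"
    fi
}

# Section 6.2: only IP17 is down, so traffic goes to IP27.
active_targets 127.0.0.1:80 192.168.250.17:80=down 192.168.250.27:80=up
# Section 6.3: both are down, so only the sorry server remains.
active_targets 127.0.0.1:80 192.168.250.17:80=down 192.168.250.27:80=down
```

The first call prints 192.168.250.27:80 and the second prints 127.0.0.1:80, mirroring the two `ipvsadm -Ln` listings above.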

###################################################################################

#### Build the sorry page on both Keepalived servers

# Configure and verify on 192.168.250.18

[root@Keepalived-IP18 ]#yum -y install nginx;systemctl enable --now nginx

[root@Keepalived-IP18 ]#echo "Sorry, the server is unavailable! Keepalived-IP18" > /usr/share/nginx/html/index.html

[root@CentOS84-IP172-08 ]#curl 192.168.250.18

Sorry, the server is unavailable! Keepalived-IP18

# Configure and verify on 192.168.250.28

[root@Keepalived-IP28 ]#yum -y install nginx;systemctl enable --now nginx;echo "Sorry, the server is unavailable! Keepalived-IP28" > /usr/share/nginx/html/index.html

[root@CentOS84-IP172-08 ]#curl 192.168.250.28

Sorry, the server is unavailable! Keepalived-IP28

[root@CentOS84-IP172-08 ]#

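#### With the sorry pages in place, and sorry_server 127.0.0.1 80 already defined in the Keepalived configuration, the local page on each Keepalived host serves as the temporary sorry page.

## Both back-end web servers are still down from the earlier steps; now observe what the client sees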

[root@CentOS84-IP172-08 ]#while :;do curl 192.168.250.100;sleep 1;done

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

..................

6.4 Simulate failure of both back ends and the Keepalived master

[root@Keepalived-IP18 ]#systemctl stop keepalived

[root@Keepalived-IP18 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@Keepalived-IP18 ]#

[root@Keepalived-IP28 ]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.250.100:80 rr

-> 127.0.0.1:80 Route 1 0 4

[root@Keepalived-IP28 ]#

[root@CentOS84-IP172-08 ]#while :;do curl 192.168.250.100;sleep 1;done

Sorry, the server is unavailable! Keepalived-IP28

Sorry, the server is unavailable! Keepalived-IP28

Sorry, the server is unavailable! Keepalived-IP28

Sorry, the server is unavailable! Keepalived-IP28

^C

[root@CentOS84-IP172-08 ]#

# Restore the simulated failures in the order below and watch the responses change; they follow the configured logic exactly

[root@Keepalived-IP18 ]#systemctl restart keepalived

[root@webserver-ip27 ~]# systemctl start httpd

[root@webserver-ip17 ~]# chmod 644 /var/www/html/index.html

[root@CentOS84-IP172-08 ]#while :;do curl 192.168.250.100;sleep 1;done

# Responses after Keepalived-IP18 is restored

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

Sorry, the server is unavailable! Keepalived-IP18

# Responses after Keepalived-IP18 and 192.168.250.27 are restored

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

webserver-ip27 192.168.250.27

# Responses after Keepalived-IP18, 192.168.250.27, and webserver-ip17 (192.168.250.17) are restored

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

webserver-ip17 192.168.250.17

webserver-ip27 192.168.250.27

^C

[root@CentOS84-IP172-08 ]#

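Summary: the walkthrough above shows that Keepalived + LVS is a solid combination for load balancing. It can also be combined with scripts for email notification, which is valuable in production.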

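Appendix: Keepalived Configuration Reference

1. Structure of the configuration file

Configuration file:

/etc/keepalived/keepalived.conf

Sections of the configuration file:

- GLOBAL CONFIGURATION

Global definitions: mail settings, router_id, VRRP settings, multicast address, and so on

- VRRP CONFIGURATION

VRRP instance(s): defines each VRRP virtual router

- LVS CONFIGURATION

Virtual server group(s)

Virtual server(s): the VS and RSs of the LVS cluster

Configuration syntax reference:

man keepalived.conf

2. Global configuration in detail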

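#/etc/keepalived/keepalived.conf

global_defs {

notification_email {

root@localhost #destination mailboxes for failover notifications; several can be listed, one per line

root@wangxiaochun.com

29308620@qq.com

}

notification_email_from keepalived@localhost #sender address

smtp_server 127.0.0.1 #mail server address

smtp_connect_timeout 30 #mail server connection timeout

router_id ka1.example.com #unique identifier of each Keepalived host; the host name is recommended, although duplicate names across nodes do not break anything

vrrp_skip_check_adv_addr #checking every advertisement costs performance; with this option, an advertisement from the same router as the previous one skips the check. Default: check all

vrrp_strict #enforce strict VRRP compliance. With it enabled the service will not start when: 1. there is no VIP; 2. unicast peers are configured; 3. IPv6 addresses are used with VRRP version 2. If enabled without vrrp_iptables, iptables rules are added automatically, which by default makes the VIP unreachable; leaving this option out is recommended

vrrp_garp_interval 0 #delay between gratuitous ARP messages; 0 means no delay

vrrp_gna_interval 0 #delay between unsolicited NA (neighbor advertisement) messages

vrrp_mcast_group4 224.0.0.18 #multicast group address; valid range 224.0.0.0 to 239.255.255.255, default 224.0.0.18

vrrp_iptables #when enabled together with vrrp_strict, no firewall rules are added; not needed if vrrp_strict is not set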

}

3. Configuring a Virtual Router

vrrp_instance <STRING> { #<STRING> is the VRRP instance name, usually named after the service

......

}

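#Parameters:

state MASTER|BACKUP #initial state of this node in the virtual router, MASTER or BACKUP

interface IFACE_NAME #physical interface this virtual router is bound to, e.g. eth0, bond0, br0; it may differ from the interface that carries the VIP

virtual_router_id VRID #unique ID of the virtual router, range 1-255; it must be unique per virtual router or the service will not start, identical on all Keepalived nodes of the same virtual router, and unique within the same network

priority 100 #priority of this physical node within the virtual router, range 1-254, higher value wins; each Keepalived node uses a different value

advert_int 1 #interval between VRRP advertisements, default 1s

authentication { #authentication

auth_type AH|PASS #AH is IPsec authentication (not recommended); PASS is a simple password (recommended)

auth_pass <PASSWORD> #pre-shared key; only the first 8 characters are used; must be identical on all Keepalived nodes of the same virtual router

}

virtual_ipaddress { #virtual IPs; production environments may list hundreds of addresses

<IPADDR>/<MASK> brd <IPADDR> dev <STRING> scope <SCOPE> label <LABEL>

192.168.200.100 #VIP without a device: it is placed on the interface given by the interface directive (eth0 in this article). Note: without a /prefix the mask defaults to /32

192.168.200.101/24 dev eth1 #VIP on an explicit NIC; a NIC different from the one named by the interface directive is recommended

192.168.200.102/24 dev eth2 label eth2:1 #VIP with an explicit NIC label

}

track_interface { #network interfaces to monitor; if one fails, the instance enters the FAULT state and the address moves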

eth0

eth1

…

}
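The note on auth_pass says that only the first 8 characters count. A small sketch makes the consequence visible: two passwords that differ only after the eighth character are effectively the same key. `effective_auth_pass` is a hypothetical helper that mirrors the note; it is not how Keepalived itself is implemented.

```shell
#!/usr/bin/env bash
# effective_auth_pass is a hypothetical helper illustrating the 8-character rule.
effective_auth_pass() {
    local pass="$1"
    # Bash substring expansion: keep at most the first 8 characters.
    printf '%s\n' "${pass:0:8}"
}

effective_auth_pass "shone888"         # exactly 8 characters, unchanged
effective_auth_pass "shone888-extra"   # anything past the 8th character is dropped
```

The password used in this article, shone888, is exactly 8 characters long, so nothing is lost there.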

4. IPVS High-Availability Configuration

4.1 Virtual server configuration structure

# Layout of the configuration file

virtual_server IP port {

...

real_server {

...

}

real_server {

...

}

…

}

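# Forms of the virtual server definition

virtual_server IP port #virtual server defined by IP address and port

virtual_server fwmark int #IPVS firewall mark, for load-balancing clusters based on firewall marks

virtual_server group string #use a virtual server group

4.2 Virtual server configuration

virtual_server IP port { #VIP and port

delay_loop <INT> #interval between health checks of the back-end servers

lb_algo rr|wrr|lc|wlc|lblc|sh|dh #scheduling algorithm

lb_kind NAT|DR|TUN #cluster type; must be upper case

persistence_timeout <INT> #persistent connection duration

protocol TCP|UDP|SCTP #service protocol, usually TCP

sorry_server <IPADDR> <PORT> #fallback server used when all RSs are down

real_server <IPADDR> <PORT> { #IP and port of the RS

weight <INT> #RS weight

notify_up <STRING>|<QUOTED-STRING> #script run when the RS comes up

notify_down <STRING>|<QUOTED-STRING> #script run when the RS goes down

HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK { ... } #health check method for this RS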

}

}

4.3 Virtual server group

#Reference: /usr/share/doc/keepalived/keepalived.conf.virtual_server_group

virtual_server_group <STRING> {

# Virtual IP Address and Port

<IPADDR> <PORT>

<IPADDR> <PORT>

...

# <IPADDR RANGE> has the form

# XXX.YYY.ZZZ.WWW-VVV eg 192.168.200.1-10

# range includes both .1 and .10 address

<IPADDR RANGE> <PORT># VIP range VPORT

<IPADDR RANGE> <PORT>

...

# Firewall Mark (fwmark)

fwmark <INTEGER>

fwmark <INTEGER>

...

}