I. Server planning and basic configuration

*RM = ResourceManager, HM = HMaster, SN = SecondaryNameNode, NM = NodeManager, HR = HRegionServer

| IP | Hostname | Role |
| --- | --- | --- |
| 10.0.0.1 | Node1 | RM, HM, NameNode, SN |
| 10.0.0.2 | Node2 | DataNode, NM, HR |
| 10.0.0.3 | Node3 | DataNode, NM, HR |

1. Passwordless SSH authentication

yum -y install openssh-clients

ssh-keygen

ssh-copy-id <node-ip> #run once for each node in the cluster

2. Hostname resolution in the hosts file

vim /etc/hosts

10.0.0.1 Node1

10.0.0.2 Node2

10.0.0.3 Node3
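Because the same three entries have to be present on every node, it can help to add them with a small idempotent helper rather than by hand. The following is a minimal sketch, not part of the original procedure: `add_host_entry` and the `HOSTS_FILE` variable are hypothetical names, and the demo writes to a scratch file unless `HOSTS_FILE` is set.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless an identical line is already present.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    # -x: match the whole line, -F: literal string, -q: no output
    if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo against a scratch file; set HOSTS_FILE=/etc/hosts (as root) to apply for real.
HOSTS_FILE=${HOSTS_FILE:-$(mktemp)}
add_host_entry "$HOSTS_FILE" 10.0.0.1 Node1
add_host_entry "$HOSTS_FILE" 10.0.0.2 Node2
add_host_entry "$HOSTS_FILE" 10.0.0.3 Node3
cat "$HOSTS_FILE"
```

Running the helper twice leaves the file unchanged, so it is safe to repeat on nodes that already have some of the entries.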

3. Disable the firewall and SELinux

setenforce 0
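`setenforce 0` only switches SELinux to permissive mode until the next reboot. To keep the setting across reboots, the `SELINUX=` line in `/etc/selinux/config` has to be changed as well. The sketch below shows one way to do that; the function name `set_selinux_mode` is hypothetical and the demo works on a scratch file with made-up contents. The firewall command is not given in the original notes and is not covered here.

```shell
#!/bin/sh
# set_selinux_mode FILE MODE
# Rewrites the "SELINUX=" line of FILE (normally /etc/selinux/config) to MODE.
# "SELINUXTYPE=" is left alone because the pattern requires "=" right after SELINUX.
set_selinux_mode() {
    cfg=$1
    mode=$2
    tmp=$(mktemp) || return 1
    # Go through a temp file instead of "sed -i", whose syntax differs between GNU and BSD sed.
    sed "s/^SELINUX=.*/SELINUX=$mode/" "$cfg" > "$tmp" && cat "$tmp" > "$cfg"
    rm -f "$tmp"
}

# Demo on a scratch file (hypothetical contents); run on /etc/selinux/config as root to apply.
demo=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
set_selinux_mode "$demo" disabled
cat "$demo"
rm -f "$demo"
```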

4. Install Java 8

java 1.8.0_144

vim /etc/profile

export JAVA_HOME=/usr/local/jdk

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

5. Software versions

HBase 1.2.11 + Hadoop 3.1.2 + ZooKeeper 3.4.13

II. ZooKeeper cluster setup

1. Configuration file changes

1.mv /root/soft/zookeeper-3.4.13 /usr/local/zookeeper

2.mv conf/zoo_sample.cfg conf/zoo.cfg

3.mkdir /usr/local/zookeeper/{data,logs}

4.vim conf/zoo.cfg

- Add the following settings

dataDir=/usr/local/zookeeper/data

dataLogDir=/usr/local/zookeeper/logs

server.1=10.0.0.1:2888:3888

server.2=10.0.0.2:2888:3888

server.3=10.0.0.3:2888:3888

maxSessionTimeout=200000

5.vim /usr/local/zookeeper/data/myid

1 (use 2 and 3 on the other two machines)
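The number in `myid` must match the `N` of the `server.N` line that names the same machine in `zoo.cfg`. One way to avoid mismatches is to derive the value from the config file instead of typing it. This is a sketch under that assumption; `myid_for_ip` is a hypothetical helper and the demo uses a scratch copy of the three server lines shown above.

```shell
#!/bin/sh
# myid_for_ip CFG IP
# Prints N for the "server.N=IP:port:port" line in CFG whose host part equals IP.
myid_for_ip() {
    awk -v ip="$2" '
        /^server\.[0-9]+=/ {
            split($0, kv, "=")        # kv[1] = "server.N", kv[2] = "host:2888:3888"
            split(kv[2], addr, ":")   # addr[1] = host
            if (addr[1] == ip) {
                sub(/^server\./, "", kv[1])
                print kv[1]
                exit
            }
        }' "$1"
}

# Demo with a scratch copy of the server lines from zoo.cfg.
cfg=$(mktemp)
printf 'server.1=10.0.0.1:2888:3888\nserver.2=10.0.0.2:2888:3888\nserver.3=10.0.0.3:2888:3888\n' > "$cfg"
myid_for_ip "$cfg" 10.0.0.2
rm -f "$cfg"
```

On a real node the output could be redirected into the data directory, for example `myid_for_ip /usr/local/zookeeper/conf/zoo.cfg 10.0.0.2 > /usr/local/zookeeper/data/myid`.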

2. Service startup test (start all three nodes, then check the logs for errors)

1. cd /usr/local/zookeeper

2. ./bin/zkServer.sh start #start the service

3. ./bin/zkServer.sh status #check the service status

III. Hadoop cluster setup

1. Configuration file changes

1.mv /root/soft/hadoop-3.1.2 /usr/local/hadoop

2.vim /etc/profile (required on all three machines)

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

source /etc/profile #apply the changes

3.cd /usr/local/hadoop/etc/hadoop

vim core-site.xml

<configuration><property>

<name>hadoop.tmp.dir</name>

<value>file:/home/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://10.0.0.1:9000</value>

</property>

</configuration>

4.mkdir -p /home/hadoop/hdfs/name

mkdir -p /home/hadoop/hdfs/data

vim hdfs-site.xml

<configuration><property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hadoop/hdfs/data</value>

</property>

</configuration>

dfs.replication sets the number of replicas kept for each data block; it should generally not exceed the number of DataNodes.
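The replication rule can be checked mechanically by comparing the configured value with the number of hosts listed in the workers file. The sketch below assumes the one-tag-per-line layout used in these notes; `xml_prop` and `check_replication` are hypothetical helpers, and the line-based extraction is not a general XML parser.

```shell
#!/bin/sh
# xml_prop FILE NAME
# Prints the <value> that follows <name>NAME</name> (line-based, not a real XML parser).
xml_prop() {
    awk -v key="$2" '
        index($0, "<name>" key "</name>") { found = 1 }
        found && /<value>/ {
            sub(/.*<value>/, "")
            sub(/<\/value>.*/, "")
            print
            exit
        }' "$1"
}

# check_replication HDFS_SITE WORKERS
# Succeeds when dfs.replication does not exceed the number of non-blank lines in WORKERS.
check_replication() {
    rep=$(xml_prop "$1" dfs.replication)
    nodes=$(grep -cv '^[[:space:]]*$' "$2")
    if [ "$rep" -le "$nodes" ]; then
        echo "ok: dfs.replication=$rep, DataNodes=$nodes"
    else
        echo "warning: dfs.replication=$rep exceeds DataNodes=$nodes"
        return 1
    fi
}

# Demo with scratch copies of the settings used in this guide.
site=$(mktemp)
workers=$(mktemp)
cat > "$site" <<'EOF'
<configuration><property>
<name>dfs.replication</name>
<value>2</value>
</property>
</configuration>
EOF
printf '10.0.0.2\n10.0.0.3\n' > "$workers"
check_replication "$site" "$workers"
rm -f "$site" "$workers"
```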

5.vim mapred-site.xml

<configuration><property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>10.0.0.1:9001</value>

</property>

</configuration>

6.vim yarn-site.xml

<configuration><!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>10.0.0.1</value>

</property>

</configuration>

7. Configure the worker nodes

vim workers

10.0.0.2

10.0.0.3

2. Add environment variables

1.vim hadoop-env.sh

Add the JDK path

export JAVA_HOME=/usr/local/jdk

2.cd /usr/local/hadoop

①vim sbin/start-dfs.sh (and stop-dfs.sh)

Allow the HDFS daemons to be started as root

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

②vim sbin/start-yarn.sh (and stop-yarn.sh)

Allow the YARN daemons to be started as root

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

3. Copy the Hadoop environment to the worker nodes

scp -r /usr/local/hadoop 10.0.0.2:/usr/local/

scp -r /usr/local/hadoop 10.0.0.3:/usr/local/
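With more than a couple of workers, the copy commands can be generated from the workers file instead of being typed one by one. This is a sketch only: `sync_commands` is a hypothetical helper that prints the `scp` commands rather than running them, so the list can be reviewed first.

```shell
#!/bin/sh
# sync_commands WORKERS_FILE SRC_DIR DEST_PARENT
# Prints one "scp -r" command per host listed in WORKERS_FILE.
# Blank lines and lines starting with "#" are skipped.
sync_commands() {
    while IFS= read -r host || [ -n "$host" ]; do
        case $host in
            ''|\#*) continue ;;
        esac
        printf 'scp -r %s %s:%s\n' "$2" "$host" "$3"
    done < "$1"
}

# Demo with a scratch workers file holding the two worker IPs from this guide.
workers=$(mktemp)
printf '10.0.0.2\n10.0.0.3\n' > "$workers"
sync_commands "$workers" /usr/local/hadoop /usr/local/
rm -f "$workers"
```

Piping the output to `sh` would execute the copies; that step relies on the passwordless SSH configured earlier.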

4. Service startup test

① Format the NameNode. Run this only once, before the first start; it is not needed afterwards.

cd /usr/local/hadoop

./bin/hdfs namenode -format

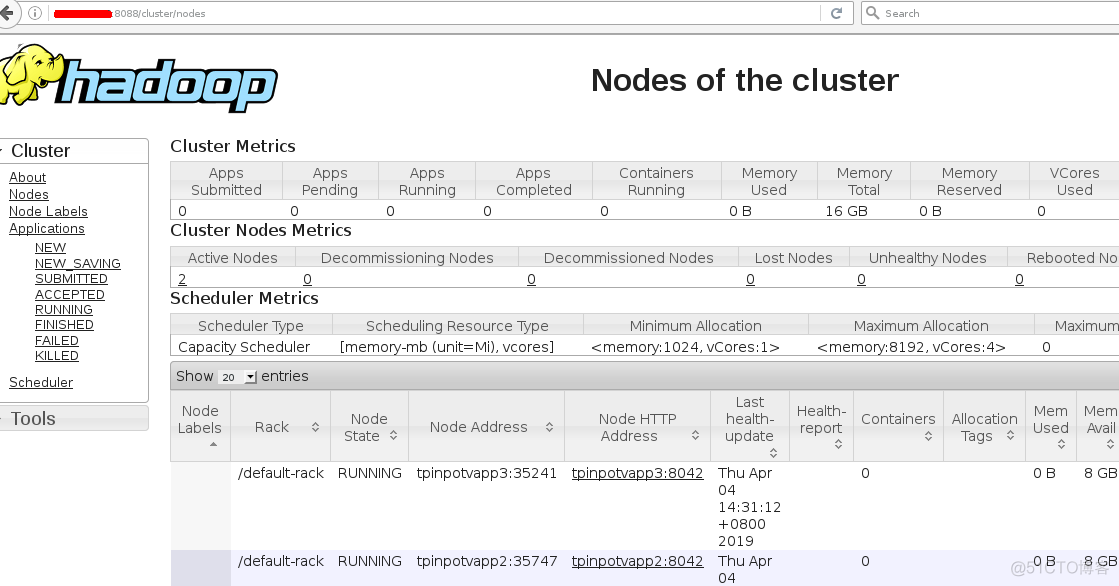

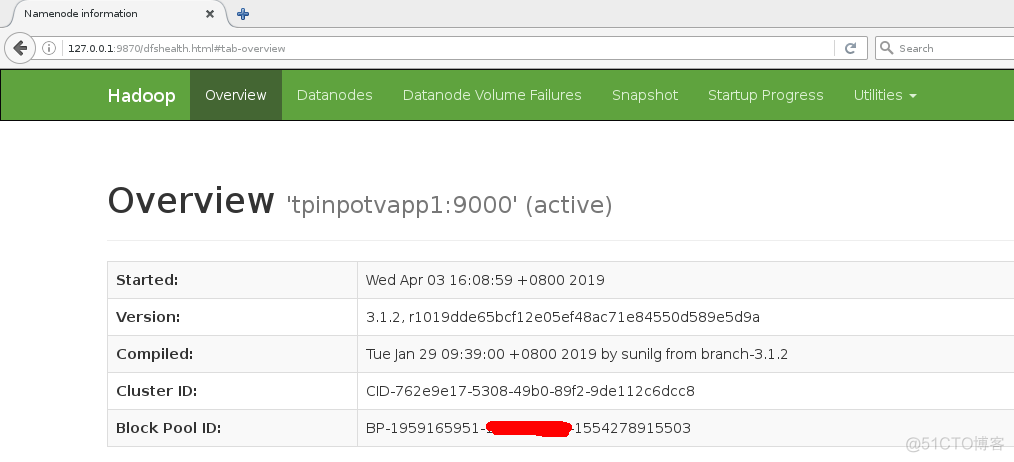

② Run the following command on the master node

./sbin/start-all.sh

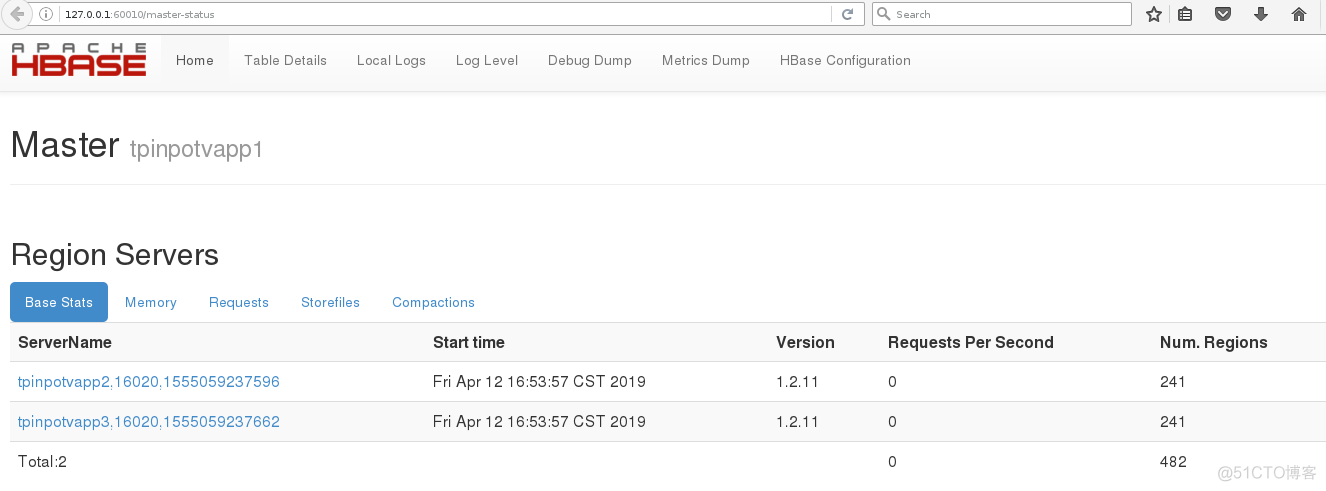

IV. HBase cluster setup

1. Configuration file changes

mv /root/soft/hbase-1.2.11 /usr/local/hbase

1.cd /usr/local/hbase

vim conf/hbase-site.xml

<configuration><property>

<name>hbase.rootdir</name>

<value>hdfs://10.0.0.1:9000/hbase</value>

</property>

<property>

<name>hbase.master</name>

<value>10.0.0.1</value>

</property>

<property>

<name>hbase.master.info.port</name>

<value>60010</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>10.0.0.1,10.0.0.2,10.0.0.3</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>200000</value>

</property>

<property>

<name>dfs.support.append</name>

<value>true</value>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

</configuration>
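`hbase.rootdir` has to point at the same NameNode address and port as `fs.defaultFS` in `core-site.xml`, otherwise HBase cannot reach HDFS. The check below is a sketch that compares the two values; `xml_prop` and `rootdir_matches_fs` are hypothetical helpers, and the line-based extraction assumes the one-tag-per-line layout used in these notes.

```shell
#!/bin/sh
# xml_prop FILE NAME
# Prints the <value> that follows <name>NAME</name> (line-based, not a real XML parser).
xml_prop() {
    awk -v key="$2" '
        index($0, "<name>" key "</name>") { found = 1 }
        found && /<value>/ {
            sub(/.*<value>/, "")
            sub(/<\/value>.*/, "")
            print
            exit
        }' "$1"
}

# rootdir_matches_fs CORE_SITE HBASE_SITE
# Succeeds when hbase.rootdir starts with the fs.defaultFS URI followed by "/".
rootdir_matches_fs() {
    fs=$(xml_prop "$1" fs.defaultFS)
    root=$(xml_prop "$2" hbase.rootdir)
    case $root in
        "$fs"/*) echo "ok: $root is on $fs" ;;
        *) echo "mismatch: hbase.rootdir=$root, fs.defaultFS=$fs"; return 1 ;;
    esac
}

# Demo with scratch copies of the two properties from this guide.
core=$(mktemp)
hbase=$(mktemp)
printf '<property>\n<name>fs.defaultFS</name>\n<value>hdfs://10.0.0.1:9000</value>\n</property>\n' > "$core"
printf '<property>\n<name>hbase.rootdir</name>\n<value>hdfs://10.0.0.1:9000/hbase</value>\n</property>\n' > "$hbase"
rootdir_matches_fs "$core" "$hbase"
rm -f "$core" "$hbase"
```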

2. Configure the RegionServer nodes

vim conf/regionservers

10.0.0.2

10.0.0.3

2. Add environment variables

vim conf/hbase-env.sh

export JAVA_HOME=/usr/local/jdk

export HBASE_CLASSPATH=/usr/local/hadoop/etc/hadoop #lets HBase find the Hadoop configuration

export HBASE_MANAGES_ZK=false #use the external ZooKeeper

3. Copy the HBase environment to the worker nodes

scp -r /usr/local/hbase 10.0.0.2:/usr/local/

scp -r /usr/local/hbase 10.0.0.3:/usr/local/

4. Service startup test

cd /usr/local/hbase

./bin/start-hbase.sh

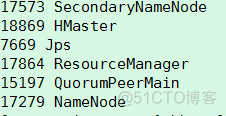

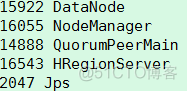

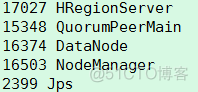

5. Runtime check

Run jps on each node and confirm that the daemons for its role are listed:

10.0.0.1

jps

10.0.0.2

jps

10.0.0.3

jps
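Reading `jps` output by eye is error-prone because some names contain others (`NameNode` is a substring of `SecondaryNameNode`). The sketch below compares the output against the daemons expected from the role table, matching the class-name column exactly. `missing_daemons` is a hypothetical helper, and the sample output, including its PIDs, is made up for the demo. `QuorumPeerMain` is the process name ZooKeeper shows in `jps`.

```shell
#!/bin/sh
# missing_daemons "JPS_OUTPUT" NAME...
# Prints every NAME that does not appear as the second column of the jps output.
missing_daemons() {
    out=$1
    shift
    for d in "$@"; do
        # jps prints "<pid> <main class>"; compare the second field exactly.
        printf '%s\n' "$out" | awk -v d="$d" '$2 == d { ok = 1 } END { exit !ok }' || echo "$d"
    done
}

# Hypothetical jps output for Node1; the PIDs are invented.
sample='2101 NameNode
2345 SecondaryNameNode
2590 ResourceManager
3012 QuorumPeerMain
3377 HMaster
3500 Jps'
missing_daemons "$sample" NameNode SecondaryNameNode ResourceManager HMaster QuorumPeerMain
```

On a live node the first argument would be `"$(jps)"`; for Node2 and Node3 the expected names would be `DataNode`, `NodeManager`, `HRegionServer` and `QuorumPeerMain`. An empty result means nothing is missing.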

V. Troubleshooting

HBase fails to start with java.lang.ClassNotFoundException: org.apache.htrace.SamplerBuilder

Upload htrace-core-3.1.0-incubating.jar to the HBase lib directory

Attempting to operate on hdfs namenode as root

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

java.lang.IllegalStateException: The procedure WAL relies on the ability to hsync for proper operation during component failures, but the underlying filesystem does not support doing so. Please check the config value of 'hbase.procedure.store.wal.use.hsync' to set the desired level of robustness and ensure the config value of 'hbase.wal.dir' points to a FileSystem mount that can provide it.

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

Operation category READ is not supported in state standby

Get the NameNode state

./bin/hdfs haadmin -getServiceState Node1

Manually switch the NameNode to active

./bin/hdfs haadmin -transitionToActive --forcemanual Node1

NameNode is not formatted

./bin/hdfs namenode -format

Unable to check if JNs are ready for formatting

Fix: first start ZooKeeper on every node with ./zkServer.sh start, then start the JournalNode process on each node with ./hadoop-daemon.sh start journalnode, and then run the format again.

Incompatible namespaceID for journal Storage Directory /root/hadoop/hdfs/journal/hadoop-test: NameNode has nsId 229502726 but storage has nsId 1994970436

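This is caused by formatting the NameNode more than once, which leaves mismatched namespace IDs. Delete the NameNode directory, format again, and restart; that resolves the problem.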

java.io.IOException: Incompatible clusterIDs in /data/dfs/data: namenode clusterID = CID-d1448b9e-da0f-499e-b1d4-78cb18ecdebb; datanode clusterID = CID-ff0faa40-2940-4838-b321-98272eb0dee3

Stop the cluster first, then edit the clusterID in /dfs/data/current/VERSION under the DataNode directory so that it matches the NameNode's.
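The edit amounts to copying the `clusterID=` line from the NameNode's `VERSION` file into the DataNode's. A sketch of that step follows; `cluster_id` and `sync_cluster_id` are hypothetical helpers, and the demo uses scratch files containing the two IDs from the error message above rather than real storage directories.

```shell
#!/bin/sh
# cluster_id FILE
# Prints the clusterID recorded in a VERSION file.
cluster_id() {
    sed -n 's/^clusterID=//p' "$1"
}

# sync_cluster_id NN_VERSION DN_VERSION
# Rewrites the clusterID line of the DataNode file to the NameNode's value.
sync_cluster_id() {
    id=$(cluster_id "$1")
    [ -n "$id" ] || { echo "no clusterID in $1" >&2; return 1; }
    tmp=$(mktemp) || return 1
    sed "s/^clusterID=.*/clusterID=$id/" "$2" > "$tmp" && cat "$tmp" > "$2"
    rm -f "$tmp"
}

# Demo with scratch files holding the two IDs from the error message.
nn=$(mktemp)
dn=$(mktemp)
printf 'clusterID=CID-d1448b9e-da0f-499e-b1d4-78cb18ecdebb\n' > "$nn"
printf 'clusterID=CID-ff0faa40-2940-4838-b321-98272eb0dee3\n' > "$dn"
sync_cluster_id "$nn" "$dn"
cluster_id "$dn"
rm -f "$nn" "$dn"
```

Only the `clusterID` line is changed; other lines in the DataNode's `VERSION` file are left as they are.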

got premature end-of-file at txid 0; expected file to go up to 2

hdfs namenode -bootstrapStandby #caution: this overwrites the local NameNode metadata on the node where it is run