-------------------------------------------

I. Preface

II. Environment

III. Configuration

1. Target configuration

2. node1 configuration

3. node2 configuration

IV. Testing

V. Appendix

1. iscsiadm usage

2. o2cb_ctl usage

-------------------------------------------

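I. Preface

1. Introduction

For an introduction to iSCSI, see the separate blog post "Some Notes on iSCSI" (《关于iSCSI的一些介绍》).

OCFS2 is the next generation of the Oracle Cluster File System, designed as a general-purpose file system. OCFS2 lets every node in a cluster access shared storage concurrently through the standard file system interface.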

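2. Download

An OCFS2 release consists of two packages: the kernel module and the tools.

Kernel module download:

http://oss.oracle.com/projects/ocfs2/files/

Tools download:

http://oss.oracle.com/projects/ocfs2-tools/files/

First download the matching packages. For the kernel module, the right package depends on the release number, the platform, the kernel version, and the kernel flavor (for example SMP, HUGEMEM, PSMP). For the tools, matching the release and the platform is enough. The tools come in two parts: ocfs2-tools (command-line tools) and ocfs2console (a graphical tool). ocfs2console is optional, but it does make administration simpler.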

3. Installation (on every node)

Using Red Hat as an example:

OS: Red Hat Enterprise Linux Server release 5.4

Kernel version: 2.6.18-164.el5

OCFS2 kernel module package:

ocfs2-2.6.18-164.el5-1.4.7-1.el5.i686.rpm

OCFS2 tools packages:

ocfs2-tools-1.4.4-1.el5.i386.rpm

ocfs2console-1.4.4-1.el5.i386.rpm
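Because the kernel-module package must match the running kernel exactly, it can help to compose the expected file name from `uname -r`. This is a minimal sketch; the naming pattern `ocfs2-<kernel release>-<module version>.<arch>.rpm` is an assumption inferred from the package name above, so check it against the download page.

```bash
#!/usr/bin/env bash
# Sketch: compose the OCFS2 kernel-module RPM name that matches a given kernel.
# Assumption: the pattern ocfs2-<kernel release>-<module version>.<arch>.rpm is
# inferred from ocfs2-2.6.18-164.el5-1.4.7-1.el5.i686.rpm; verify on the download page.
ocfs2_module_rpm() {
    local kernel="$1"   # kernel release, e.g. the output of `uname -r`
    local version="$2"  # OCFS2 module version, e.g. 1.4.7-1.el5
    local arch="$3"     # package architecture, e.g. i686
    printf 'ocfs2-%s-%s.%s.rpm\n' "$kernel" "$version" "$arch"
}
```

On a node, `ocfs2_module_rpm "$(uname -r)" 1.4.7-1.el5 "$(uname -m)"` prints the name to look for; since `uname -r` includes any kernel-flavor suffix, the flavor ends up in the name as well.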

二、环境

1.系统:RedHat5.4 32位

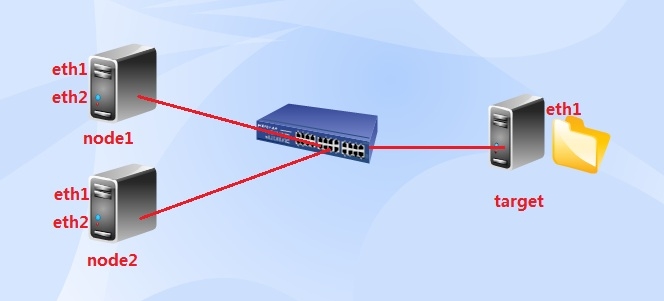

2.拓扑图

3.地址规划

target

eth1 192.168.3.100/24

node1

eth1 192.168.2.10/24

eth2 192.168.3.10/24

node2

eth1 192.168.2.20/24

eth2 192.168.3.20/24

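III. Configuration

1. Target configuration

1-1. Add a hard disk to serve as the shared disk for node1 and node2 (in this example it is partitioned as /dev/sdc1)

1-2. Install scsi-target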
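The three `tgtadm` calls in this step (create the target, export the LUN, restrict access) can be kept in a small script with the parameters pulled into variables. This is a sketch; the variable names and the `DRY_RUN` switch are illustrative additions, and the commands themselves are exactly the ones used below.

```bash
#!/usr/bin/env bash
# Sketch: the tgtadm calls from this step with their parameters pulled into variables.
# DRY_RUN=1 (the default here) only prints each command; DRY_RUN=0 runs it on the target host.
TID=1
TARGET_IQN="iqn.2014-03.com.a.target:disk"
BACKING_STORE="/dev/sdc1"
ALLOWED_NET="192.168.3.0/24"

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

setup_target() {
    # 1. create the target, 2. export the partition as LUN 1, 3. allow only the storage subnet
    run tgtadm --lld iscsi --op new --mode target --tid "$TID" --targetname "$TARGET_IQN"
    run tgtadm --lld iscsi --op new --mode logicalunit --tid "$TID" --lun 1 --backing-store "$BACKING_STORE"
    run tgtadm --lld iscsi --op bind --mode target --tid "$TID" --initiator-address "$ALLOWED_NET"
}
```

Run `setup_target` first to review the commands, then `DRY_RUN=0 setup_target` on the target after `service tgtd start`.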

# mkdir /media/rhel/                   //create a mount point for the install DVD
# mount /dev/cdrom /media/rhel/
# cd /media/rhel/ClusterStorage/
# rpm -ivh perl-Config-General-2.40-1.el5.noarch.rpm              //dependency
# rpm -ivh scsi-target-utils-0.0-5.20080917snap.el5.i386.rpm      //install scsi-target (this host is the target, not the initiator)
# service tgtd start
Starting SCSI target daemon:                               [  OK  ]

Create a new target:

# tgtadm --lld iscsi --op new --mode target --tid 1 --targetname iqn.2014-03.com.a.target:disk

Export the partition /dev/sdc1 as LUN 1:

# tgtadm --lld iscsi --op new --mode logicalunit --tid 1 --lun 1 --backing-store /dev/sdc1

Allow access from every host on the 192.168.3.0/24 network:

# tgtadm --lld iscsi --op bind --mode target --tid 1 --initiator-address 192.168.3.0/24

Show all configured targets:

# tgtadm --lld iscsi --op show --mode target
Target 1: iqn.2014-03.com.a.target:disk
    System information:
        Driver: iscsi
        State: ready
    I_T nexus information:
    LUN information:
        LUN: 0
            Type: controller
            SCSI ID: deadbeaf1:0
            SCSI SN: beaf10
            Size: 0 MB
            Online: Yes
            Removable media: No
            Backing store: No backing store
        LUN: 1
            Type: disk
            SCSI ID: deadbeaf1:1
            SCSI SN: beaf11
            Size: 2147 MB
            Online: Yes
            Removable media: No
            Backing store: /dev/sdc1
    Account information:
    ACL information:
        192.168.3.0/24

Show the details of one target:

# tgtadm --lld iscsi --op show --mode target --tid 1
# vim /etc/tgt/targets.conf            //view the configuration file
<target iqn.2014-03.com.a.target:disk>
    # List of files to export as LUNs
    backing-store /dev/sdc1

    # Authentication :
    # if no "incominguser" is specified, it is not used
    #incominguser backup secretpass12

    # Access control :
    # defaults to ALL if no "initiator-address" is specified
    initiator-address 192.168.3.0/24
</target>

2. node1 configuration

2-1. Change the hostname
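Both nodes need the same two `/etc/hosts` entries shown below. A hypothetical helper like the following appends an entry only when the FQDN is not already listed, so running it twice on a node does no harm. It is a sketch, not part of the original procedure.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): append "IP FQDN shortname" to a hosts file unless
# the FQDN already appears on a non-comment line, so re-running is harmless.
add_host_entry() {
    local file="$1" ip="$2" fqdn="$3" short="$4"
    if grep -v '^[[:space:]]*#' "$file" 2>/dev/null | grep -qwF -- "$fqdn"; then
        return 0                      # already present, nothing to do
    fi
    printf '%s    %s    %s\n' "$ip" "$fqdn" "$short" >> "$file"
}
```

On each node: `add_host_entry /etc/hosts 192.168.2.10 node1.a.com node1` and `add_host_entry /etc/hosts 192.168.2.20 node2.a.com node2`.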

# vim /etc/sysconfig/network
HOSTNAME=node1.a.com
# hostname node1.a.com
# vim /etc/hosts                       //so the nodes can resolve each other
192.168.2.10    node1.a.com    node1
192.168.2.20    node2.a.com    node2

2-2. Install iscsi-initiator
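In this step each node gets its own `InitiatorName`. Initiator names must be unique per node. The `iqn.2014-03.com.a.<node>` scheme is simply this article's convention. The hypothetical helper below derives the line from the short hostname.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): build the InitiatorName line from a prefix and the
# short hostname, following this article's iqn.2014-03.com.a.<node> naming.
make_initiator_name() {
    local prefix="$1" host="$2"
    printf 'InitiatorName=%s.%s\n' "$prefix" "${host%%.*}"   # node1.a.com -> node1
}
```

`make_initiator_name iqn.2014-03.com.a "$(hostname)" > /etc/iscsi/initiatorname.iscsi` overwrites the file with that single line; restart the iscsi service afterwards so the new name is used.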

# cd /media/rhel/Server/               //install DVD, mounted at /media/rhel/ as on the target
# rpm -ivh iscsi-initiator-utils-6.2.0.871-0.10.el5.i386.rpm
# vim /etc/iscsi/initiatorname.iscsi
InitiatorName=iqn.2014-03.com.a.node1
# service iscsi start
iscsid is stopped
Turning off network shutdown. Starting iSCSI daemon:       [  OK  ]
                                                           [  OK  ]
Setting up iSCSI targets: iscsiadm: No records found!      //expected: no target has been discovered yet
                                                           [  OK  ]

2-3. Connect to the target server
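The discovery command below prints one `ip:port,tag target-name` line per target, where `,1` is the target portal group tag. The following sketch turns each such line into the matching `--login` command. The function name and the `DRY_RUN` switch are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: read `iscsiadm --mode discovery` output ("ip:port,tpgt target-name" per line)
# and issue the matching --login for each target found.
# DRY_RUN=1 (the default here) only prints the commands.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

login_discovered() {
    local portal_tpgt iqn
    while read -r portal_tpgt iqn; do
        [ -n "$iqn" ] || continue                 # skip blank or malformed lines
        # "${portal_tpgt%,*}" drops the ",1" portal group tag, leaving ip:port
        run iscsiadm --mode node --targetname "$iqn" --portal "${portal_tpgt%,*}" --login
    done
}
```

Usage: `iscsiadm --mode discovery --type sendtargets --portal 192.168.3.100 | DRY_RUN=0 login_discovered`.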

# iscsiadm --mode discovery --type sendtargets --portal 192.168.3.100
192.168.3.100:3260,1 iqn.2014-03.com.a.target:disk
# man iscsiadm                         //command reference
# iscsiadm --mode node --targetname iqn.2014-03.com.a.target:disk --portal 192.168.3.100:3260 --login
                                       //log in; if the login fails, run --logout first and then log in again
# fdisk -l
   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *           1          38      305203+  83  Linux
/dev/sda2              39         914     7036470   83  Linux
/dev/sda3             915        1044     1044225   82  Linux swap / Solaris

Disk /dev/sdb: 2146 MB, 2146765824 bytes
67 heads, 62 sectors/track, 1009 cylinders
Units = cylinders of 4154 * 512 = 2126848 bytes

Disk /dev/sdb doesn't contain a valid partition table

The iSCSI LUN now shows up on node1 as /dev/sdb.

2-4. Install the OCFS2 packages
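In this step the `o2cb_ctl` calls write `/etc/ocfs2/cluster.conf`, and that file must be the same on every node. To compare the nodes, a hypothetical generator can print the file those calls should produce. The layout assumed here follows the OCFS2 cluster.conf format: stanza headers at column 0 and tab-indented `key = value` lines. The key order is illustrative.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print a cluster.conf equivalent to the o2cb_ctl calls
# in this step. Assumed layout: "node:"/"cluster:" headers at column 0 and
# tab-indented "key = value" lines; the key order is illustrative.
gen_cluster_conf() {
    local cluster="$1"; shift
    local n=0 entry name ip
    for entry in "$@"; do                       # each entry: name:ip
        name="${entry%%:*}"
        ip="${entry#*:}"
        printf 'node:\n\tip_port = 7777\n\tip_address = %s\n\tnumber = %d\n\tname = %s\n\tcluster = %s\n\n' \
            "$ip" "$n" "$name" "$cluster"
        n=$((n + 1))
    done
    printf 'cluster:\n\tnode_count = %d\n\tname = %s\n' "$n" "$cluster"
}
```

`gen_cluster_conf ocfs2 node1.a.com:192.168.2.10 node2.a.com:192.168.2.20` can then be compared with each node's `/etc/ocfs2/cluster.conf` (a differing key order is harmless).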

# ll
-rw-r--r-- 1 root root  324174 Mar 15 20:49 ocfs2-2.6.18-164.el5-1.4.7-1.el5.i686.rpm
-rw-r--r-- 1 root root  338927 Mar 15 20:49 ocfs2console-1.4.4-1.el5.i386.rpm
-rw-r--r-- 1 root root 1559206 Mar 15 20:56 ocfs2-tools-1.4.4-1.el5.i386.rpm
# rpm -ivh *.rpm
# scp *.rpm node2:/root/               //copy the OCFS2 packages to node2
# service o2cb enable
Writing O2CB configuration: OK
Loading filesystem "configfs": OK
Mounting configfs filesystem at /sys/kernel/config: OK
Loading filesystem "ocfs2_dlmfs": OK
Creating directory '/dlm': OK
Mounting ocfs2_dlmfs filesystem at /dlm: OK
Checking O2CB cluster configuration : Failed
                                       //expected: there is no cluster configuration yet, so create it next
# o2cb_ctl -C -n ocfs2 -t cluster -i   //create a cluster named ocfs2 in /etc/ocfs2/cluster.conf
Cluster ocfs2 created
# cd /etc/ocfs2/
# ll
-rw-r--r-- 1 root root 40 Mar 15 20:59 cluster.conf
# vim cluster.conf                     //view only, do not change anything

Add node1 to the configuration file:

# o2cb_ctl -C -n node1.a.com -t node -a number=0 -a ip_address=192.168.2.10 -a ip_port=7777 -a cluster=ocfs2

Add node2 to the configuration file:

# o2cb_ctl -C -n node2.a.com -t node -a number=1 -a ip_address=192.168.2.20 -a ip_port=7777 -a cluster=ocfs2

Each node name must match that node's hostname (the FQDNs set in 2-1), and ip_address is the node's address on the 192.168.2.0/24 network. Without -i these two commands only update /etc/ocfs2/cluster.conf; the restart below loads the new configuration.

# vim cluster.conf                     //view it again
# service o2cb restart
Stopping O2CB cluster ocfs2: OK
Unmounting ocfs2_dlmfs filesystem: OK
Unloading module "ocfs2_dlmfs": OK
Unmounting configfs filesystem: OK
Unloading module "configfs": OK
Loading filesystem "configfs": OK
Mounting configfs filesystem at /sys/kernel/config: OK
Loading filesystem "ocfs2_dlmfs": OK
Mounting ocfs2_dlmfs filesystem at /dlm: OK
Starting O2CB cluster ocfs2: OK

2-5. Mount the target's storage
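The fdisk session, `mkfs` and `mount` below can be replayed non-interactively. This sketch assumes this fdisk version asks the same questions as in the session shown. It also adds a check on the `mount` listing that the mount point has type ocfs2. A real run destroys data on the disk, so the default only prints the plan.

```bash
#!/usr/bin/env bash
# Sketch: replay node1's storage steps non-interactively.
# Assumption: fdisk asks the same questions as in the session below, so the answers
# n, p, 1, <Enter>, <Enter>, w create one primary partition spanning the disk.
# DRY_RUN=1 (the default here) only prints the plan.
prepare_shared_disk() {
    local dev="$1" mnt="$2"
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ fdisk $dev  (answers: n p 1 <Enter> <Enter> w)"
        echo "+ mkfs -t ocfs2 -N 2 ${dev}1"
        echo "+ mkdir -p $mnt"
        echo "+ mount ${dev}1 $mnt"
        return 0
    fi
    printf 'n\np\n1\n\n\nw\n' | fdisk "$dev" || return 1
    mkfs -t ocfs2 -N 2 "${dev}1" || return 1   # -N 2: two node slots, one per node
    mkdir -p "$mnt" || return 1
    mount "${dev}1" "$mnt"
}

# Succeeds if the `mount` listing on stdin shows the given mount point with type ocfs2.
is_ocfs2_mounted() {
    local mnt="$1"
    awk -v m="$mnt" '$3 == m && $4 == "type" && $5 == "ocfs2" { found = 1 }
                     END { exit (found ? 0 : 1) }'
}
```

Usage on node1: `DRY_RUN=0 prepare_shared_disk /dev/sdb /mnt/1`, then `mount | is_ocfs2_mounted /mnt/1 && echo mounted`.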

# fdisk /dev/sdb
Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
Building a new DOS disklabel. Changes will remain in memory only,
until you decide to write them. After that, of course, the previous
content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4): 1
First cylinder (1-1009, default 1):
Using default value 1
Last cylinder or +size or +sizeM or +sizeK (1-1009, default 1009):
Using default value 1009

Command (m for help): w
The partition table has been altered!

Calling ioctl() to re-read partition table.
Syncing disks.
# fdisk -l
   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *           1          38      305203+  83  Linux
/dev/sda2              39         914     7036470   83  Linux
/dev/sda3             915        1044     1044225   82  Linux swap / Solaris

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1        1009     2095662   83  Linux
# mkfs -t ocfs2 -N 2 /dev/sdb1          //format as OCFS2 with 2 node slots
# mkdir /mnt/1                          //create the mount point
# mount /dev/sdb1 /mnt/1                //mount
# mount
/dev/sdb1 on /mnt/1 type ocfs2 (rw,_netdev,heartbeat=local)

3. node2 configuration
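node2 repeats node1's steps except for partitioning and formatting. The PS at the end of this section suggests logging out and back in when the mount fails. One plausible cause is that node2 logged in before node1 wrote the partition table, so node2 has not yet seen /dev/sdb1. The sketch below wraps that workaround. The 2-second pause before the retry is an assumption, not from the article.

```bash
#!/usr/bin/env bash
# Sketch of this section's PS workaround: if mounting fails, log the iSCSI session out
# and back in so node2 picks up the LUN again, then retry the mount once.
# DRY_RUN=1 (the default here) only prints the commands.
TARGET_IQN="iqn.2014-03.com.a.target:disk"
PORTAL="192.168.3.100:3260"

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

relogin() {
    run iscsiadm --mode node --targetname "$TARGET_IQN" --portal "$PORTAL" --logout
    run iscsiadm --mode node --targetname "$TARGET_IQN" --portal "$PORTAL" --login
}

mount_with_relogin() {
    local dev="$1" mnt="$2"
    run mount "$dev" "$mnt" && return 0   # first attempt
    relogin                               # refresh the iSCSI session
    [ "${DRY_RUN:-1}" = "1" ] || sleep 2  # assumed pause for the device to reappear
    run mount "$dev" "$mnt"               # one retry
}
```

Usage on node2: `DRY_RUN=0 mount_with_relogin /dev/sdb1 /mnt/1`.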

# vim /etc/sysconfig/network
HOSTNAME=node2.a.com
# hostname node2.a.com
# vim /etc/hosts
192.168.2.10    node1.a.com    node1
192.168.2.20    node2.a.com    node2
# ll                                   //the packages copied over from node1
-rw-r--r-- 1 root root  324174 Mar 15 20:57 ocfs2-2.6.18-164.el5-1.4.7-1.el5.i686.rpm
-rw-r--r-- 1 root root  338927 Mar 15 20:57 ocfs2console-1.4.4-1.el5.i386.rpm
-rw-r--r-- 1 root root 1559206 Mar 15 20:57 ocfs2-tools-1.4.4-1.el5.i386.rpm
# rpm -ivh *.rpm
# service o2cb enable
# o2cb_ctl -C -n ocfs2 -t cluster -i
Cluster ocfs2 created
# o2cb_ctl -C -n node1.a.com -t node -a number=0 -a ip_address=192.168.2.10 -a ip_port=7777 -a cluster=ocfs2
# o2cb_ctl -C -n node2.a.com -t node -a number=1 -a ip_address=192.168.2.20 -a ip_port=7777 -a cluster=ocfs2
# cd /etc/ocfs2/
# ll
-rw-r--r-- 1 root root 238 Mar 15 21:06 cluster.conf
# vim cluster.conf                     //must describe the same cluster and nodes as on node1
# service o2cb restart
# service o2cb status
# cd /media/rhel/Server/
# rpm -ivh iscsi-initiator-utils-6.2.0.871-0.10.el5.i386.rpm
# vim /etc/iscsi/initiatorname.iscsi
InitiatorName=iqn.2014-03.com.a.node2
# service iscsi start
# iscsiadm --mode discovery --type sendtargets --portal 192.168.3.100
192.168.3.100:3260,1 iqn.2014-03.com.a.target:disk
# iscsiadm --mode node --targetname iqn.2014-03.com.a.target:disk --portal 192.168.3.100:3260 --login
# fdisk -l
   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1               1        1009     2095662   83  Linux
# mkdir /mnt/1                         //already formatted on node1, so just mount it
# mount /dev/sdb1 /mnt/1
# mount
/dev/sdb1 on /mnt/1 type ocfs2 (rw,_netdev,heartbeat=local)

PS: If the mount fails, log out of the target, log back in, and mount again:

# iscsiadm --mode node --targetname iqn.2014-03.com.a.target:disk --portal 192.168.3.100:3260 --logout
# iscsiadm --mode node --targetname iqn.2014-03.com.a.target:disk --portal 192.168.3.100:3260 --login

IV. Testing
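Besides the manual test below, a quick scripted check is to write a marker line into the shared mount on one node and read it on the other. The helpers are hypothetical and not from the original article.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): append "<node name> <unix time>" to a marker file on
# the shared mount, and show all markers written so far.
write_marker() {
    local mnt="$1"
    printf '%s %s\n' "$(uname -n)" "$(date +%s)" >> "$mnt/marker.txt"
}

show_markers() {
    local mnt="$1"
    cat "$mnt/marker.txt"
}
```

Run `write_marker /mnt/1` on node2, then `show_markers /mnt/1` on node1; node2's line should be listed.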

4-1. Test on node2

# cd /mnt/1
# touch f1                             //create an empty file f1
# ll
-rw-r--r-- 1 root root    0 Mar 15 21:28 f1
drwxr-xr-x 2 root root 3896 Mar 15 21:11 lost+found
# vim f1                               //open f1 in vim and leave it open

4-2. Test on node1

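# cd /mnt/1
# ll                                   //f1 is already visible on node1
-rw-r--r-- 1 root root    0 Mar 15 21:28 f1
drwxr-xr-x 2 root root 3896 Mar 15 21:11 lost+found
# vim f1                               //vim will not edit f1 cleanly, because node2 still has it open

The two nodes now share the same iSCSI device and can write to the mounted partition at the same time. Because they mount the same file system, a change made on one node is visible on the other right away. The warning vim shows here comes from vim's own swap file (.f1.swp), which node2's vim created in /mnt/1 and which node1 can see on the shared file system; it is not a file lock taken by OCFS2.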
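The "locked" behavior in this test comes from vim's swap file. The hypothetical helper below checks for it. It relies on vim's default 'directory' setting, which places the swap file next to the edited file. A leftover swap file from a crashed session also counts as "in use".

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): report whether FILE appears to be open in vim on some
# node, by checking for vim's swap file ".<name>.swp" in the same directory.
# Relies on vim's default 'directory' setting; a stale swap file also matches.
vim_swap_in_use() {
    local file="$1" dir base
    dir="$(dirname -- "$file")"
    base="$(basename -- "$file")"
    [ -e "$dir/.$base.swp" ]
}
```

On node1: `vim_swap_in_use /mnt/1/f1 && echo "f1 is open in vim somewhere"`.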

V. Appendix

1. iscsiadm usage:

iscsiadm -m discovery [ -d debug_level ] [ -P printlevel ] [ -t type -p ip:port [ -l ] ]

-d, --debug=debug_level   print debug information; the level ranges from 0 to 8

-l, --login   log in

-t, --type=type   discovery type: sendtargets, fw, or iSNS

-p, --portal=ip[:port]   IP address and port of the target portal

-m, --mode op   available modes: discovery, node, fw, host, iface, and session

-T, --targetname=targetname   name of the target to access

-u, --logout   log out
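For reference, the discovery, login and logout from section III can be written with the short options listed above. This is a sketch; the wrapper function names and `DRY_RUN` are illustrative. Per the usage line, `-l` can also be appended to a discovery to log in to what it finds.

```bash
#!/usr/bin/env bash
# Sketch: section III's iscsiadm calls using the short options listed above.
# DRY_RUN=1 (the default here) only prints the commands.
PORTAL="192.168.3.100"
TARGET_IQN="iqn.2014-03.com.a.target:disk"

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

iscsi_discover()           { run iscsiadm -m discovery -t sendtargets -p "$PORTAL"; }
iscsi_discover_and_login() { run iscsiadm -m discovery -t sendtargets -p "$PORTAL" -l; }
iscsi_login()              { run iscsiadm -m node -T "$TARGET_IQN" -p "$PORTAL:3260" -l; }
iscsi_logout()             { run iscsiadm -m node -T "$TARGET_IQN" -p "$PORTAL:3260" -u; }
iscsi_sessions()           { run iscsiadm -m session; }   # list active sessions
```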

2. o2cb_ctl usage:

o2cb_ctl -C -n object -t type [-i] [-a attribute]

OPTIONS:

-C Create an object in the OCFS2 Cluster Configuration.

-D Delete an object from the existing OCFS2 Cluster Configuration.

-I Print information about the OCFS2 Cluster Configuration.

-H Change an object or objects in the existing OCFS2 Cluster Configuration.

-h Displays help and exit.

-V Print version and exit.

OTHER OPTIONS:

-a <attribute>

With -C, <attribute> is in format "parameter=value", where the parameter is a valid parameter that can be set in the file /etc/ocfs2/cluster.conf. With -I, <attribute> may be "parameter", indicating an attribute to be listed in the output, or it may be "parameter==value", indicating that only objects matching "parameter=value" are to be displayed.

-i

Valid only with -C. When creating something (node or cluster), it will also install it in the live cluster. If the parameter is not specified, then only update the /etc/ocfs2/cluster.conf.

-n object

object is usually the node name or cluster name. In the /etc/ocfs2/cluster.conf file, it would be the value of the name parameter for any of the sections (cluster or node).

-o

Valid only with -I. Using this parameter, if one asks o2cb_ctl to list all nodes, it will output it in a format suitable for shell parsing.

-t type

type can be cluster, node or heartbeat.

-u

Valid only with -D. When deleting something (node or cluster), it will also remove it from the live cluster. If the parameter is not specified, then only update the /etc/ocfs2/cluster.conf.

-z

Valid only with -I. This is the default. If one asks o2cb_ctl to list all nodes, it will give a verbose listing.

EXAMPLES

Add node5 to an offline cluster:

$ o2cb_ctl -C -n node5 -t node -a number=5 -a ip_address=192.168.0.5 -a ip_port=7777 -a cluster=mycluster

Add node10 to an online cluster:

$ o2cb_ctl -C -i -n node10 -t node -a number=10 -a ip_address=192.168.1.10 -a ip_port=7777 -a cluster=mycluster

Note the -i argument.

Query the IP address of node5:

$ o2cb_ctl -I -n node5 -a ip_address

Change the IP address of node5:

$ o2cb_ctl -H -n node5 -a ip_address=192.168.1.5
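Following the examples above, several nodes can be added in one loop, numbered from 0 as in section III. This is a sketch under stated assumptions. `ONLINE=1` adds `-i` for a running cluster, as in the "online cluster" example. The function name, the `name:ip` argument format and `DRY_RUN` are illustrative.

```bash
#!/usr/bin/env bash
# Sketch: add several nodes with o2cb_ctl, numbering them from 0.
# ONLINE=1 adds -i (also install into the live cluster); DRY_RUN=1 (default) only prints.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

add_nodes() {
    local cluster="$1"; shift
    local n=0 entry
    local live=()
    if [ "${ONLINE:-0}" = "1" ]; then live=(-i); fi
    for entry in "$@"; do                       # each entry: name:ip
        run o2cb_ctl -C "${live[@]}" -n "${entry%%:*}" -t node \
            -a number="$n" -a ip_address="${entry#*:}" -a ip_port=7777 -a cluster="$cluster"
        n=$((n + 1))
    done
}
```

Usage: `DRY_RUN=0 add_nodes ocfs2 node1.a.com:192.168.2.10 node2.a.com:192.168.2.20`.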