```python
# -*- coding:utf-8 -*-
import urllib2
from lxml import etree


class CrawlJs():
    # Fetch the raw HTML of one page
    def getArticle(self, url):
        print '█████████████◣ Start crawling'
        my_headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.104 Safari/537.36',
        }
        request = urllib2.Request(url, headers=my_headers)
        content = urllib2.urlopen(request).read()
        return content

    # Extract article titles and links, then append them to a text file
    def save(self, content):
        xml = etree.HTML(content)
        title = xml.xpath('//div[@class="content"]/a[@class="title"]/text()')
        link = xml.xpath('//div[@class="content"]/a[@class="title"]/@href')
        print link
        for data, href in zip(title, link):
            print data
            with open('JsIndex.txt', 'a') as f:
                # One Markdown link per line: [title](absolute URL)
                f.write('[' + data.encode('utf-8') + '](http://www.jianshu.com' + href + ')\n')
        print '█████████████◣ Crawl finished'


# Program entry point
if __name__ == '__main__':
    page = int(raw_input('Enter the total number of pages to crawl: '))
    for num in range(page):
        # Put your own profile path here, e.g. u/c475403112ce
        url = 'http://www.jianshu.com/u/c475403112ce?order_by=shared_at&page=%s' % (num + 1)
        # Call the methods defined above
        js = CrawlJs()
        # Fetch the page content
        content = js.getArticle(url)
        # Save the extracted entries to the text file
        js.save(content)
```

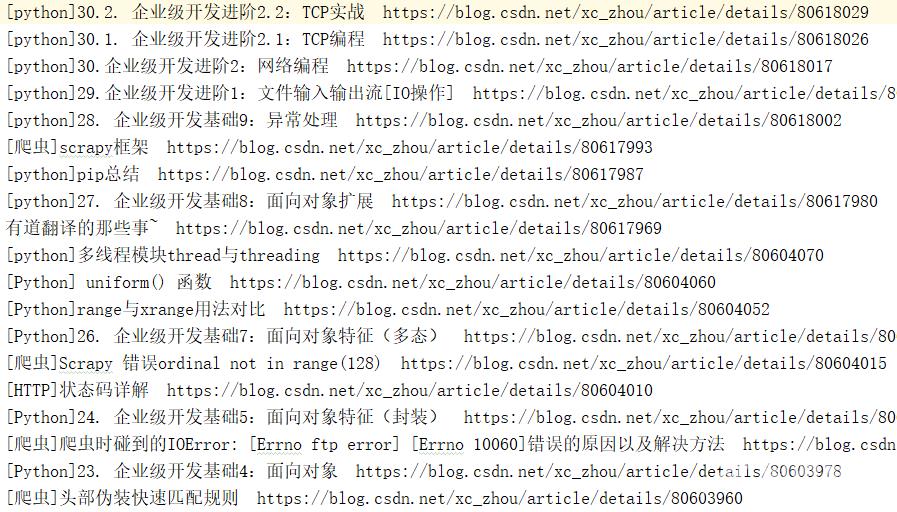

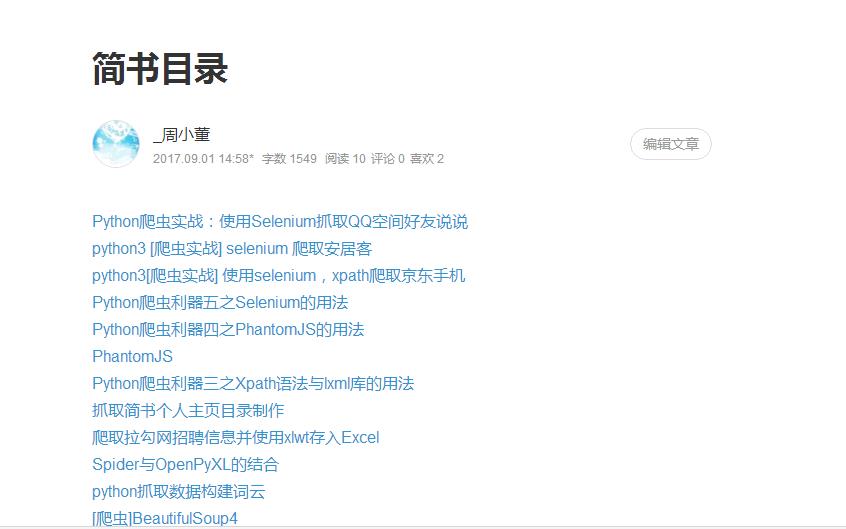

Run results
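The script above builds each table-of-contents entry by concatenating the site root with the relative `href` scraped from the page. A minimal Python 3 sketch of that single step, assuming the scraped hrefs are either site-relative (such as `/p/...`) or already absolute: `urllib.parse.urljoin` handles both cases, so an absolute link is never prefixed with the site root a second time. The helper name `toc_line` and the sample slugs are hypothetical.

```python
from urllib.parse import urljoin


def toc_line(title, href, base="http://www.jianshu.com"):
    """Format one Markdown entry: [title](absolute URL).

    Hypothetical helper; `base` is the site root that the original
    script prepends to every scraped href.
    """
    url = urljoin(base, href.strip())
    return "[%s](%s)" % (title.strip(), url)


if __name__ == "__main__":
    print(toc_line("My first post", "/p/0123abcd"))
    # prints [My first post](http://www.jianshu.com/p/0123abcd)
    print(toc_line("Elsewhere", "https://example.com/x"))
    # prints [Elsewhere](https://example.com/x)
```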

Python 3 code

```python
# -*- coding:utf-8 -*-
import urllib.request
from lxml import etree


class CrawlJs():
    # Fetch the raw HTML of one page
    def getArticle(self, url):
        print('█████████████◣ Start crawling')
        my_headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.104 Safari/537.36',
        }
        request = urllib.request.Request(url, headers=my_headers)
        content = urllib.request.urlopen(request).read()
        return content

    # Extract article titles and links, then append them to a text file
    def save(self, content):
        xml = etree.HTML(content)
        title = xml.xpath('//div[@class="content"]/a[@class="title"]/text()')
        link = xml.xpath('//div[@class="content"]/a[@class="title"]/@href')
        print(link)
        # An explicit encoding keeps Chinese titles working on Windows too
        with open('JsIndex.txt', 'a', encoding='utf-8') as f:
            for data, href in zip(title, link):
                print(data)
                # One Markdown link per line: [title](absolute URL)
                f.write('[' + data + '](http://www.jianshu.com' + href + ')\n')
        print('█████████████◣ Crawl finished')


# Program entry point
if __name__ == '__main__':
    page = int(input('Enter the total number of pages to crawl: '))
    for num in range(page):
        # Put your own profile path here, e.g. u/c475403112ce
        url = 'http://www.jianshu.com/u/c475403112ce?order_by=shared_at&page=%s' % (num + 1)
        js = CrawlJs()
        content = js.getArticle(url)
        js.save(content)
```
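Each script walks pages 1 to N by substituting `num + 1` into a URL template with the `%` operator, because `range` starts at 0. A minimal sketch that factors this loop into a generator, assuming the template contains exactly one `%s` placeholder for the page number; `page_urls` is a hypothetical helper name.

```python
def page_urls(template, total):
    """Yield the URL of every page from 1 to `total` (inclusive).

    `template` must contain exactly one %s placeholder for the page number.
    """
    for num in range(total):
        yield template % (num + 1)  # range() is 0-based, page numbers are 1-based


if __name__ == "__main__":
    for url in page_urls("https://blog.csdn.net/xc_zhou/article/list/%s", 3):
        print(url)
```

Keeping the page arithmetic in one place means the crawl loop only has to iterate over finished URLs.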

Crawling a cnblogs (博客园) personal homepage to build a table of contents

Python 2 code

```python
# -*- coding:utf-8 -*-
import urllib2
from lxml import etree


class CrawlJs():
    # Fetch the raw HTML of one page
    def getArticle(self, url):
        print '█████████████◣ Start crawling'
        my_headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.104 Safari/537.36',
        }
        request = urllib2.Request(url, headers=my_headers)
        content = urllib2.urlopen(request).read()
        return content

    # Extract post titles and links, then append them to a text file
    def save(self, content):
        xml = etree.HTML(content)
        title = xml.xpath('//*[@class="postTitle"]/a/text()')
        link = xml.xpath('//*[@class="postTitle"]/a/@href')
        print (title, link)
        # print(zip(title, link))
        # print(map(lambda x, y: [x, y], title, link))
        for t, li in zip(title, link):
            print(t + li)
            with open('bokeyuan.txt', 'a') as f:
                f.write(t.encode('utf-8') + li + '\n')
        print '█████████████◣ Crawl finished'


# Program entry point
if __name__ == '__main__':
    page = int(raw_input('Enter the total number of pages to crawl: '))
    for num in range(page):
        # Put your own homepage URL here
        url = 'http://www.cnblogs.com/zhouxinfei/default.html?page=%s' % (num + 1)
        js = CrawlJs()
        content = js.getArticle(url)
        js.save(content)
```

Python 3 code

```python
# -*- coding:utf-8 -*-
import urllib.request
from lxml import etree


class CrawlJs():
    # Fetch the raw HTML of one page
    def getArticle(self, url):
        print('█████████████◣ Start crawling')
        my_headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.104 Safari/537.36',
        }
        request = urllib.request.Request(url, headers=my_headers)
        content = urllib.request.urlopen(request).read()
        return content

    # Extract post titles and links, then append them to a text file
    def save(self, content):
        xml = etree.HTML(content)
        title = xml.xpath('//*[@class="postTitle"]/a/text()')
        link = xml.xpath('//*[@class="postTitle"]/a/@href')
        print(title, link)
        # print(zip(title, link))
        # print(map(lambda x, y: [x, y], title, link))
        # An explicit encoding keeps Chinese titles working on Windows too
        with open('bokeyuan.txt', 'a', encoding='utf-8') as f:
            for t, li in zip(title, link):
                print(t + li)
                f.write(t + li + '\n')
        print('█████████████◣ Crawl finished')


# Program entry point
if __name__ == '__main__':
    page = int(input('Enter the total number of pages to crawl: '))
    for num in range(page):
        # Put your own homepage URL here
        url = 'http://www.cnblogs.com/zhouxinfei/default.html?page=%s' % (num + 1)
        js = CrawlJs()
        content = js.getArticle(url)
        js.save(content)
```
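The cnblogs version selects every `<a>` under an element whose class is exactly `postTitle` and pairs titles with links using `zip`. If lxml is not installed, a rough equivalent can be written with the standard library's `html.parser`. This is a swapped-in technique, not what the post uses: it assumes the class attribute is exactly `postTitle` (as the XPath `@class="postTitle"` also does), and unlike the XPath it also picks up `<a>` tags nested deeper than a direct child. The names `PostTitleParser` and `extract_posts`, and the sample HTML, are hypothetical.

```python
from html.parser import HTMLParser


class PostTitleParser(HTMLParser):
    """Collect (title, href) pairs from <a> tags inside class="postTitle"."""

    def __init__(self):
        super().__init__()
        self.container = None  # tag name of the current postTitle element
        self.nesting = 0       # open tags with that name inside the container
        self.href = None       # href of the <a> being read, None otherwise
        self.chunks = []       # text pieces of the current <a>
        self.posts = []        # collected (title, href) pairs

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if self.container is None:
            if attrs.get("class") == "postTitle":
                self.container, self.nesting = tag, 1
            return
        if tag == self.container:
            self.nesting += 1
        elif tag == "a":
            self.href = attrs.get("href") or ""
            self.chunks = []

    def handle_endtag(self, tag):
        if self.container is None:
            return
        if tag == "a" and self.href is not None:
            self.posts.append(("".join(self.chunks).strip(), self.href))
            self.href = None
        elif tag == self.container:
            self.nesting -= 1
            if self.nesting == 0:  # left the postTitle element
                self.container = None

    def handle_data(self, data):
        if self.href is not None:
            self.chunks.append(data)


def extract_posts(html):
    parser = PostTitleParser()
    parser.feed(html)
    parser.close()
    return parser.posts


if __name__ == "__main__":
    sample = """
    <div class="day">
      <div class="postTitle"><a href="https://www.cnblogs.com/zhouxinfei/p/1.html">First post</a></div>
      <div class="postTitle"><a href="https://www.cnblogs.com/zhouxinfei/p/2.html">Second post</a></div>
    </div>
    """
    for title, link in extract_posts(sample):
        print(title + link)
```

Because each pair is collected from one `<a>` element, a title can never be matched with the wrong link, which two independent `zip`ped lists cannot guarantee.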

Building a table of contents for a CSDN blog

```python
# -*- coding:utf-8 -*-
import urllib.request
from lxml import etree


class CrawlJs():
    # Fetch the raw HTML of one page
    def getArticle(self, url):
        print('█████████████◣ Start crawling')
        my_headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.104 Safari/537.36',
        }
        request = urllib.request.Request(url, headers=my_headers)
        content = urllib.request.urlopen(request).read()
        return content

    # Extract article titles and links, then append them to a text file
    def save(self, content):
        xml = etree.HTML(content)
        title = xml.xpath('//div[@class="article-list"]/div/h4/a/text()[2]')
        link = xml.xpath('//div[@class="article-list"]/div/h4/a/@href')
        # xpath() returns a list (never None), so stop on an empty result
        if not title:
            return
        # print(map(lambda x, y: [x, y], title, link))
        # An explicit encoding keeps Chinese titles working on Windows too
        with open('csdn.txt', 'a', encoding='utf-8') as f:
            for t, li in zip(title, link):
                print(t + li)
                f.write(t.strip() + li + '\n')
        print('█████████████◣ Crawl finished')


# Program entry point
if __name__ == '__main__':
    page = int(input('Enter the total number of pages to crawl: '))
    for num in range(page):
        # Put your own homepage URL here
        url = 'https://blog.csdn.net/xc_zhou/article/list/%s' % (num + 1)
        js = CrawlJs()
        content = js.getArticle(url)
        js.save(content)
```
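The CSDN XPath takes the second text node of each link (`text()[2]`) and strips it before writing. Presumably this is because each `<a>` starts with whitespace and a label element such as a `<span>` (for example the 原创 "original" tag) before the actual title; that markup is an assumption, not confirmed by this post. A minimal stdlib illustration using `xml.etree.ElementTree` on a well-formed sample: ElementTree has no `text()` step, but it stores the text before the first child in `.text` and the text after each child in that child's `.tail`, which together give the same list of direct text nodes. The names `text_nodes`, `extract`, and the sample markup and URL are hypothetical.

```python
import xml.etree.ElementTree as ET


def text_nodes(element):
    """Return the direct child text nodes of `element`, like XPath text()."""
    nodes = [element.text] if element.text else []
    nodes.extend(child.tail for child in element if child.tail)
    return nodes


# Assumed shape of one CSDN list entry: a label <span> precedes the title.
SAMPLE = """<div class="article-list">
  <div class="article-item-box">
    <h4>
      <a href="https://blog.csdn.net/xc_zhou/article/details/1">
        <span class="article-type">原创</span>
        Building a blog index
      </a>
    </h4>
  </div>
</div>"""


def extract(xml_text):
    root = ET.fromstring(xml_text)
    pairs = []
    # ElementTree's limited XPath subset supports this relative path
    for a in root.findall("./div/h4/a"):
        nodes = text_nodes(a)
        if len(nodes) >= 2:  # mirror text()[2]: skip links without a second text node
            pairs.append((nodes[1].strip(), a.get("href")))
    return pairs


if __name__ == "__main__":
    for title, link in extract(SAMPLE):
        print(title + link)
```

Here the first text node is only the indentation before `<span>`, and the second one holds the padded title, which is why the `.strip()` call is needed.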