Software environment:

| Software | Version |
| --- | --- |
| Operating system | CentOS 7.8 x86_64 (minimal) |
| Docker | 20.10-ce |
| Kubernetes | 1.20 |

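Overall server plan:

| Role | IP | Additional components |
| --- | --- | --- |
| k8s-master1 | 192.168.40.180 | docker, etcd, keepalived |
| k8s-master2 | 192.168.40.181 | docker, etcd, keepalived |
| k8s-master3 | 192.168.40.182 | docker, etcd, keepalived |
| Load balancer external IP | 192.168.40.188 (VIP) | |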

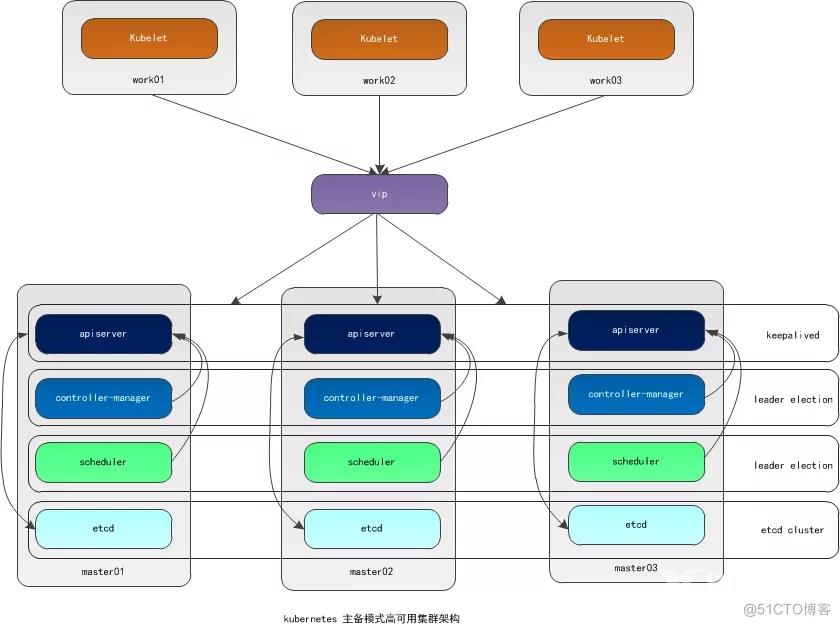

Architecture diagram:

Environment preparation:

Disable the firewall:

```bash
systemctl stop firewalld
systemctl disable firewalld
```

Disable SELinux:

```bash
sed -i 's/enforcing/disabled/' /etc/selinux/config   # permanent (takes effect after reboot)
setenforce 0                                        # temporary (current boot only)
```

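Disable swap:

```bash
swapoff -a                            # temporary
sed -ri 's/.*swap.*/#&/' /etc/fstab   # permanent: comment out the swap entry
```

Set each node's hostname according to the plan:

```bash
hostnamectl set-hostname <hostname>
bash   # start a new shell so the prompt shows the new hostname
```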

Add hosts entries on the masters:

```bash
cat >> /etc/hosts << EOF
192.168.40.180 k8s-master1
192.168.40.181 k8s-master2
192.168.40.182 k8s-master3
EOF
```

Pass bridged IPv4 traffic to the iptables chains:

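```bash
modprobe br_netfilter   # the net.bridge.* keys below exist only once this module is loaded
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system   # apply
```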
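To confirm the two settings are active after `sysctl --system`, a check along these lines can be run on each node. This is a minimal sketch: `check_bridge_sysctls` is a hypothetical helper, and its optional root-directory argument exists only so it can be exercised against a fake `/proc` tree.

```bash
#!/usr/bin/env bash
# Sketch: verify the bridge netfilter sysctls written to /etc/sysctl.d/k8s.conf are active.
# $1 (optional) = alternative filesystem root, only useful for testing; defaults to "".
check_bridge_sysctls() {
    local root="${1:-}" key file value status=0
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        file="${root}/proc/sys/net/bridge/${key}"
        if [[ ! -r "$file" ]]; then
            # The net.bridge.* keys only exist once br_netfilter is loaded.
            echo "missing: net.bridge.${key} (is br_netfilter loaded?)"
            status=1
            continue
        fi
        value=$(<"$file")
        if [[ "$value" != "1" ]]; then
            echo "wrong: net.bridge.${key} = ${value}"
            status=1
        fi
    done
    return "$status"
}
```

Running `check_bridge_sysctls` with no argument on a node prints nothing and returns 0 when both values are 1.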

Time synchronization:

```bash
yum install ntpdate -y
ntpdate time.windows.com
```

Add the Aliyun Kubernetes YUM repository:

```bash
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

Install some required system tools:

```bash
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
```

Add the Docker YUM repository:

```bash
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

Install the base packages:

```bash
yum install -y yum-utils device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz \
    gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp \
    libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release \
    openssh-server socat ipvsadm conntrack ntpdate telnet iptables-services
```

Disable the iptables service and flush the firewall rules:

```bash
systemctl stop iptables && systemctl disable iptables
iptables -F
```

Configure passwordless SSH login:

```bash
ssh-keygen
```
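Only the `ssh-keygen` command survived from this step. Distributing the public key is normally done with `ssh-copy-id`; the sketch below assumes root logins and the hostnames added to /etc/hosts earlier. `copy_ssh_keys` and its `DRY_RUN` switch are hypothetical names; with `DRY_RUN=1` the function only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: push root's public key to every control-plane node so the later scp
# steps (certificate distribution) run without password prompts.
# Assumes the key pair already exists (ssh-keygen) and the names resolve via /etc/hosts.
MASTERS=(k8s-master1 k8s-master2 k8s-master3)

copy_ssh_keys() {
    local run="" host
    if [[ "${DRY_RUN:-0}" == "1" ]]; then
        run="echo"   # hypothetical dry-run switch: print instead of execute
    fi
    for host in "${MASTERS[@]}"; do
        $run ssh-copy-id "root@${host}"
    done
}
```

Run `DRY_RUN=1 copy_ssh_keys` to review the commands, then `copy_ssh_keys` to execute them; each host asks for its root password once.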

Enable IPVS:

```bash
cat > /etc/sysconfig/modules/ipvs.modules <<\EOF
#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
for kernel_module in ${ipvs_modules}; do
    # load the module only if it exists for the running kernel
    /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
    if [ $? -eq 0 ]; then
        /sbin/modprobe ${kernel_module}
    fi
done
EOF
```

Copy ipvs.modules to the /etc/sysconfig/modules/ directory on k8s-master1, k8s-master2 and k8s-master3.

- Run the script:

```bash
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
```
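`lsmod | grep ip_vs` shows what is loaded; to list which of the modules you need are *missing*, a small filter over the `lsmod` output is enough. A sketch, where `missing_modules` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Sketch: print each requested module that does NOT appear in lsmod output.
# Reads lsmod-style text on stdin (first line is a header, first column is the module name).
missing_modules() {
    local loaded m status=0
    loaded=$(awk 'NR > 1 { print $1 }')   # module names, header skipped
    for m in "$@"; do
        if ! grep -qxF -- "$m" <<<"$loaded"; then
            echo "$m"
            status=1
        fi
    done
    return "$status"
}
```

For example, `lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack` prints nothing and returns 0 when all of those modules are loaded.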

Install Docker:

```bash
yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y
systemctl start docker && systemctl enable docker && systemctl status docker
```

Configure the Docker registry mirrors and cgroup driver:

```bash
cat > /etc/docker/daemon.json <<\EOF
{
  "registry-mirrors": [
    "https://rsbud4vc.mirror.aliyuncs.com",
    "https://registry.docker-cn.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://dockerhub.azk8s.cn",
    "http://hub-mirror.c.163.com",
    "http://qtid6917.mirror.aliyuncs.com",
    "https://rncxm540.mirror.aliyuncs.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
systemctl daemon-reload && systemctl restart docker
systemctl status docker
docker info
```

The `exec-opts` entry switches Docker's cgroup driver from its default, cgroupfs, to systemd. The kubelet and the container runtime must use the same cgroup driver, and systemd is the driver recommended in the kubeadm documentation.
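After the restart, `docker info` should report `Cgroup Driver: systemd`. The sketch below pulls that field out of the text output; `cgroup_driver` is a hypothetical helper name, and `docker info --format '{{.CgroupDriver}}'` returns the same value directly.

```bash
#!/usr/bin/env bash
# Sketch: print the value of the "Cgroup Driver:" line from `docker info` text on stdin.
cgroup_driver() {
    awk -F': ' '/^ *Cgroup Driver:/ { print $2; exit }'
}
```

Usage on a node: `[ "$(docker info 2>/dev/null | cgroup_driver)" = systemd ] && echo ok`.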

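Install the packages needed to initialize Kubernetes:

```bash
yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6
systemctl enable kubelet && systemctl start kubelet
systemctl status kubelet
```

The kubelet is not in the running state at this point. That is expected and can be ignored; it becomes healthy once the Kubernetes components are up.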

Install keepalived:

```bash
yum install keepalived -y
```

- k8s-master1 (`/etc/keepalived/keepalived.conf`; on every node, set `interface` to the NIC that carries the node's 192.168.40.x address):

```bash
cat > /etc/keepalived/keepalived.conf <<\EOF
! Configuration File for keepalived
global_defs {
    smtp_server localhost
}

vrrp_script chk_kubeapiserver {
    script "/etc/keepalived/keepalived_checkkubeapiserver.sh"
    interval 3
    timeout 1
    rise 3
    fall 3
}

vrrp_instance kubernetes-internal {
    state BACKUP
    interface ens32
    virtual_router_id 171
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.40.188
    }
    track_script {
        chk_kubeapiserver
    }
}
EOF
```

- k8s-master2:

```bash
cat > /etc/keepalived/keepalived.conf <<\EOF
! Configuration File for keepalived
global_defs {
    smtp_server localhost
}

vrrp_script chk_kubeapiserver {
    script "/etc/keepalived/keepalived_checkkubeapiserver.sh"
    interval 3
    timeout 1
    rise 3
    fall 3
}

vrrp_instance kubernetes-internal {
    state BACKUP
    interface ens33
    virtual_router_id 171
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.40.188
    }
    track_script {
        chk_kubeapiserver
    }
}
EOF
```

- k8s-master3:

```bash
cat > /etc/keepalived/keepalived.conf <<\EOF
! Configuration File for keepalived
global_defs {
    smtp_server localhost
}

vrrp_script chk_kubeapiserver {
    script "/etc/keepalived/keepalived_checkkubeapiserver.sh"
    interval 3
    timeout 1
    rise 3
    fall 3
}

vrrp_instance kubernetes-internal {
    state BACKUP
    interface ens32
    virtual_router_id 171
    priority 98
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.40.188
    }
    track_script {
        chk_kubeapiserver
    }
}
EOF
```

- API server health-check script:

```bash
cat > /etc/keepalived/keepalived_checkkubeapiserver.sh <<EOF
#!/bin/bash
sudo ss -ltn | grep ":6443 " > /dev/null
EOF
```

Set the permissions and start keepalived:

```bash
chmod 644 /etc/keepalived/keepalived.conf && chmod 700 /etc/keepalived/keepalived_checkkubeapiserver.sh
systemctl daemon-reload && systemctl start keepalived && systemctl enable keepalived
```

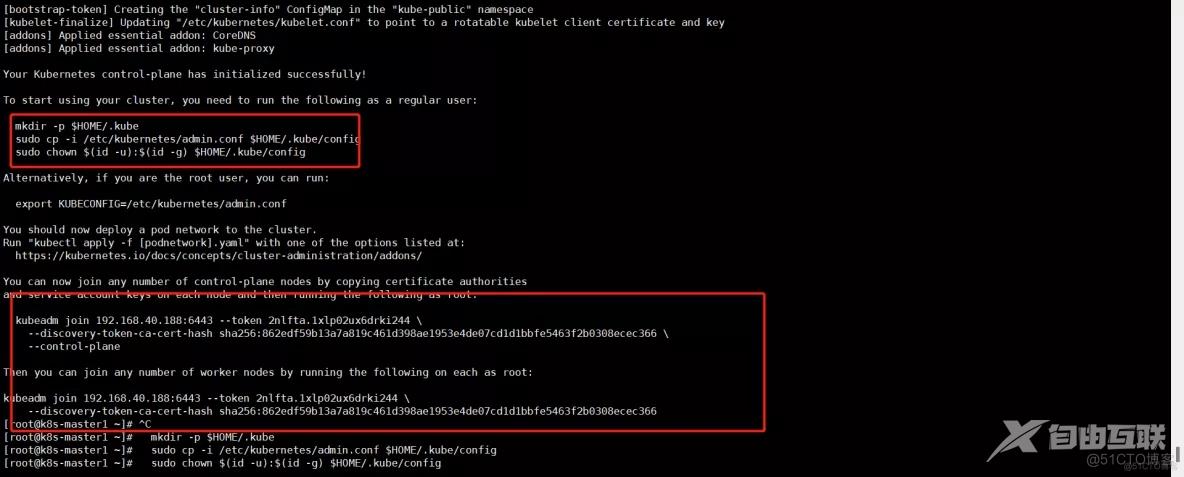
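The three keepalived.conf files above differ only in `interface` and `priority`. One way to keep them from drifting apart is to render the file from those two parameters; a sketch, with `render_keepalived_conf` as a hypothetical function name and everything else copied from the configs above.

```bash
#!/usr/bin/env bash
# Sketch: render keepalived.conf from the two per-node values.
# $1 = NIC carrying the node's 192.168.40.x address (ens32 / ens33 above)
# $2 = VRRP priority (100 on k8s-master1, 99 on k8s-master2, 98 on k8s-master3)
render_keepalived_conf() {
    local iface="$1" priority="$2"
    # Unquoted heredoc on purpose: only ${iface} and ${priority} are expanded.
    cat <<EOF
! Configuration File for keepalived
global_defs {
    smtp_server localhost
}

vrrp_script chk_kubeapiserver {
    script "/etc/keepalived/keepalived_checkkubeapiserver.sh"
    interval 3
    timeout 1
    rise 3
    fall 3
}

vrrp_instance kubernetes-internal {
    state BACKUP
    interface ${iface}
    virtual_router_id 171
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.40.188
    }
    track_script {
        chk_kubeapiserver
    }
}
EOF
}
```

On k8s-master2, for example: `render_keepalived_conf ens33 99 > /etc/keepalived/keepalived.conf`. The config contains no other `$`, so nothing besides the two parameters is substituted.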

Initialize the Kubernetes cluster with kubeadm

On k8s-master1, create the kubeadm-config.yaml file:

```bash
[root@k8s-master1 ~]# cd /root/
[root@k8s-master1 ~]# cat > kubeadm-config.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.6
controlPlaneEndpoint: 192.168.40.188:6443
imageRepository: registry.aliyuncs.com/google_containers
apiServer:
  certSANs:
  - 192.168.40.180
  - 192.168.40.181
  - 192.168.40.182
  - 192.168.40.188
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.10.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
```
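`podSubnet` and `serviceSubnet` must not overlap each other or the node network. A quick arithmetic check in plain bash; the helper names are hypothetical, and treating 192.168.40.0/24 as the node network is an assumption based on the server plan.

```bash
#!/usr/bin/env bash
# Sketch: report any overlapping pair among the given IPv4 CIDR blocks.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local -a o
    IFS=. read -r -a o <<<"$1"
    echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Return 0 if two CIDR blocks overlap: they do iff they agree on the shorter prefix.
cidrs_overlap() {
    local a_ip="${1%/*}" a_len="${1#*/}" b_ip="${2%/*}" b_len="${2#*/}"
    local len=$(( a_len < b_len ? a_len : b_len ))
    local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$a_ip") & mask) == ($(ip_to_int "$b_ip") & mask) ))
}

# Check every pair; print overlaps and return 1 if any are found.
check_subnets() {
    local -a nets=("$@")
    local i j status=0
    for (( i = 0; i < ${#nets[@]}; i++ )); do
        for (( j = i + 1; j < ${#nets[@]}; j++ )); do
            if cidrs_overlap "${nets[i]}" "${nets[j]}"; then
                echo "overlap: ${nets[i]} and ${nets[j]}"
                status=1
            fi
        done
    done
    return "$status"
}
```

`check_subnets 10.244.0.0/16 10.10.0.0/16 192.168.40.0/24` prints nothing and returns 0 for the values in kubeadm-config.yaml.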

Initialize the cluster:

```bash
kubeadm init --config kubeadm-config.yaml
```

Then configure kubectl for the current user:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Adding the other masters

On k8s-master1, run the following script to copy the certificates to k8s-master2 and k8s-master3:

```bash
cat > cert-main-master.sh <<\EOF
#!/bin/bash
USER=root
CONTROL_PLANE_IPS="192.168.40.181 192.168.40.182"
for host in ${CONTROL_PLANE_IPS}; do
    scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:
    scp /etc/kubernetes/pki/ca.key "${USER}"@$host:
    scp /etc/kubernetes/pki/sa.key "${USER}"@$host:
    scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:
    scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:
    scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:
    # Skip the next two lines if you are using external etcd
    scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:etcd-ca.crt
    scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:etcd-ca.key
done
EOF
sh ./cert-main-master.sh
```

On k8s-master2 and k8s-master3, run cert-other-master.sh to move the certificates into place:

```bash
cat > cert-other-master.sh <<\EOF
#!/bin/bash
USER=root # customizable; the files were copied to this user's home (/root)
mkdir -p /etc/kubernetes/pki/etcd
mv /${USER}/ca.crt /etc/kubernetes/pki/
mv /${USER}/ca.key /etc/kubernetes/pki/
mv /${USER}/sa.pub /etc/kubernetes/pki/
mv /${USER}/sa.key /etc/kubernetes/pki/
mv /${USER}/front-proxy-ca.crt /etc/kubernetes/pki/
mv /${USER}/front-proxy-ca.key /etc/kubernetes/pki/
# Skip the next two lines if you are using external etcd
mv /${USER}/etcd-ca.crt /etc/kubernetes/pki/etcd/ca.crt
mv /${USER}/etcd-ca.key /etc/kubernetes/pki/etcd/ca.key
EOF
sh cert-other-master.sh
```
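Before running the control-plane join on k8s-master2 and k8s-master3, it is worth confirming that all eight shared files actually arrived. A sketch; `check_shared_pki` is a hypothetical helper and its file list mirrors the two scripts above.

```bash
#!/usr/bin/env bash
# Sketch: list any shared CA/key file that is missing or empty in the PKI directory.
# $1 = PKI directory (defaults to /etc/kubernetes/pki).
check_shared_pki() {
    local pki="${1:-/etc/kubernetes/pki}" f status=0
    # Drop the two etcd/ entries when using external etcd.
    local files=(ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key
                 etcd/ca.crt etcd/ca.key)
    for f in "${files[@]}"; do
        if [[ ! -s "${pki}/${f}" ]]; then
            echo "missing: ${pki}/${f}"
            status=1
        fi
    done
    return "$status"
}
```

Running `check_shared_pki` on each joining master should print nothing.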

On k8s-master1, print the join command:

```bash
kubeadm token create --print-join-command
```

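Append `--control-plane`.

A join command with `--control-plane` adds another control-plane (master) node to build a multi-master cluster; without it, the machine joins as a worker node.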

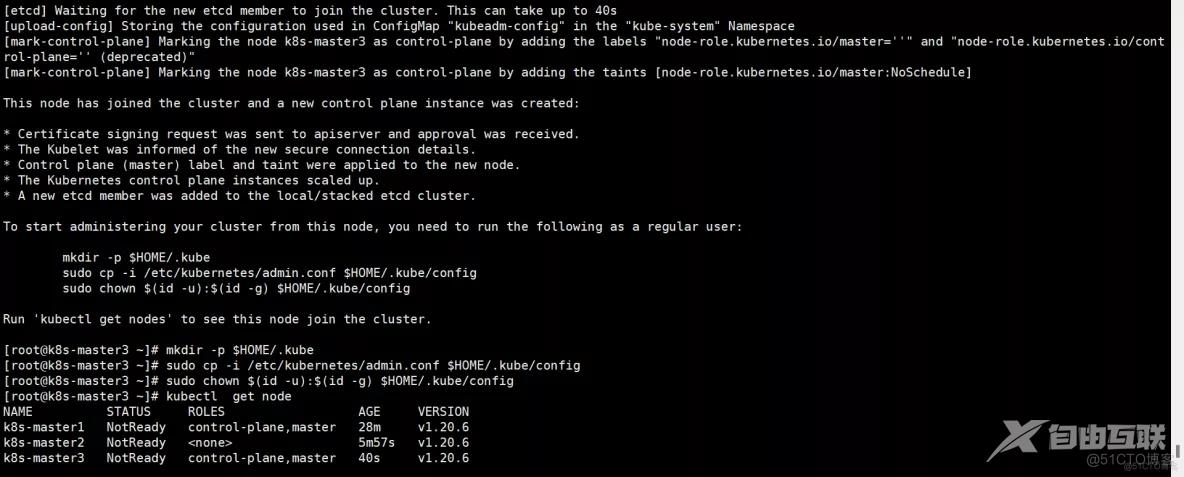
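The command printed by `kubeadm token create --print-join-command` is the worker form. A small sketch that turns it into the control-plane form by appending `--control-plane` exactly once; `to_control_plane_join` is a hypothetical helper, and it also flattens a multi-line command with `\` continuations into one line.

```bash
#!/usr/bin/env bash
# Sketch: convert a worker "kubeadm join ..." command into the control-plane variant.
to_control_plane_join() {
    local cmd
    # Drop "\" continuations and squeeze all whitespace (including newlines) to single spaces.
    cmd=$(tr -d '\\' <<<"$1" | tr -s '[:space:]' ' ')
    cmd=${cmd# }   # trim the leading space, if any
    cmd=${cmd% }   # trim the trailing space left by the newline
    if [[ " $cmd " == *" --control-plane "* ]]; then
        echo "$cmd"
    else
        echo "$cmd --control-plane"
    fi
}
```

On k8s-master1: `to_control_plane_join "$(kubeadm token create --print-join-command)"`, then run the printed command on k8s-master2 and k8s-master3.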

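After the certificates have been copied, run the following on k8s-master2 and k8s-master3, using the command printed by your own cluster. This joins k8s-master2 and k8s-master3 to the cluster as control-plane nodes:

```bash
kubeadm join 192.168.40.188:6443 --token 2nlfta.1xlp02ux6drki244 --discovery-token-ca-cert-hash sha256:862edf59b13a7a819c461d398ae1953e4de07cd1d1bbfe5463f2b0308ecec366 --control-plane
```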

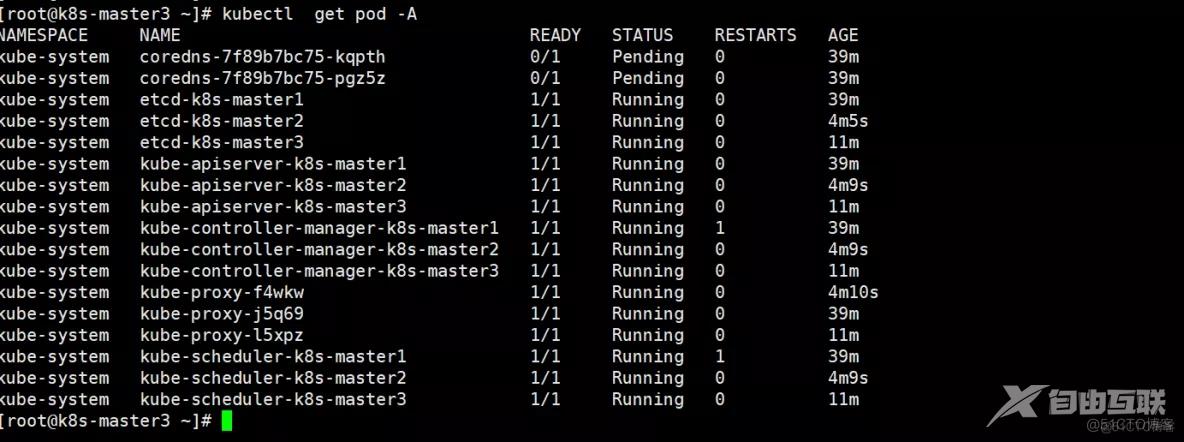

Check the state of the cluster:

Note: the nodes above are all NotReady, which means no network plugin has been installed yet.

Install the Kubernetes network add-on: Calico

```bash
curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -o calico.yaml
```

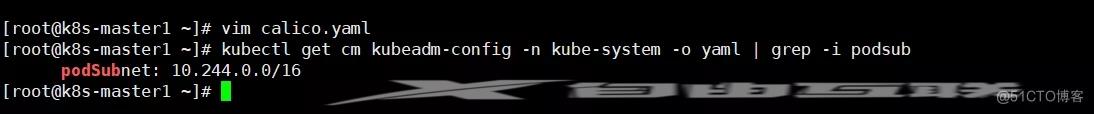

- Check the current Pod CIDR:

```bash
kubectl get cm kubeadm-config -n kube-system -o yaml | grep -i podsub
```

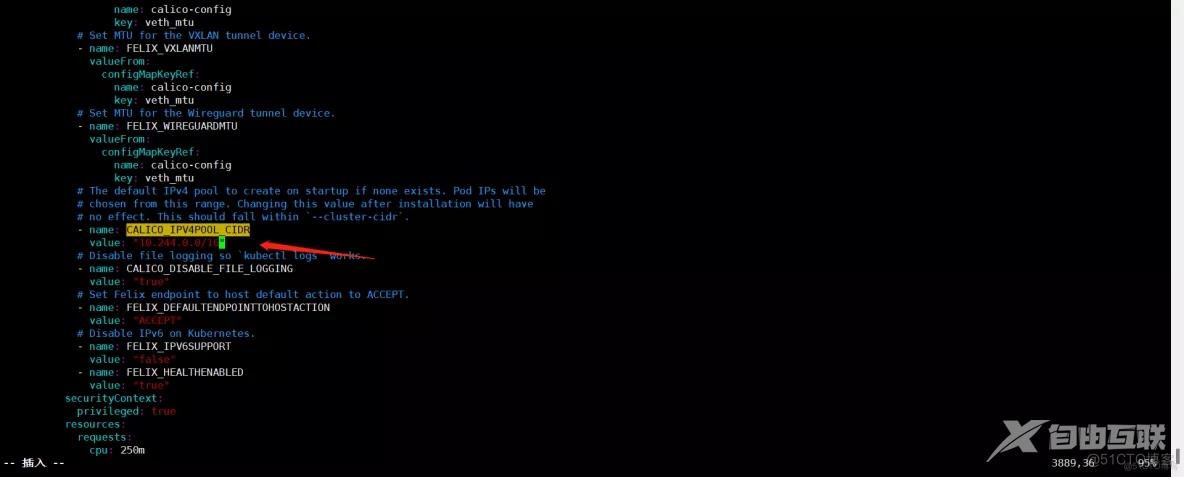

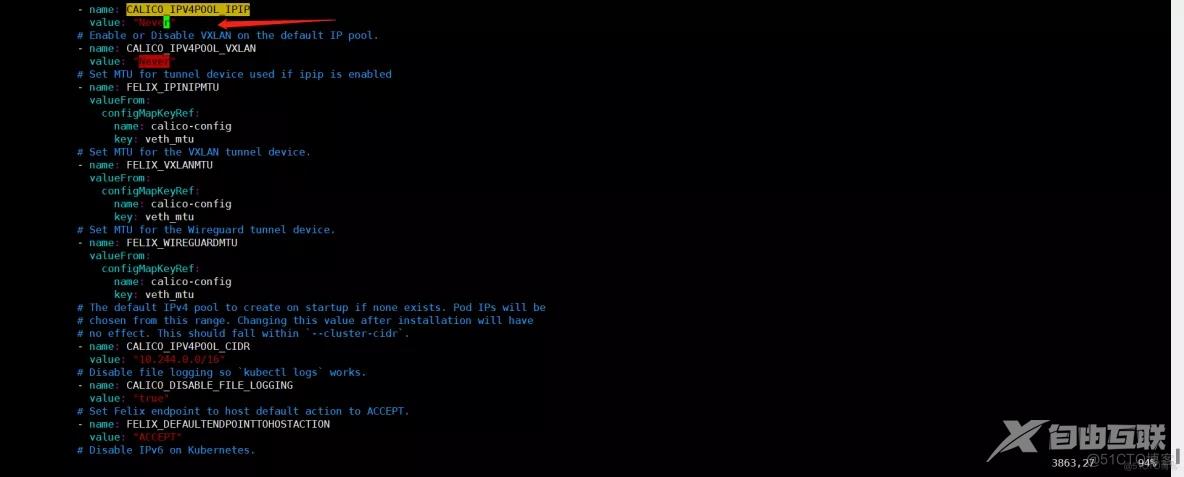

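Edit calico.yaml:

- Set the Pod CIDR (`CALICO_IPV4POOL_CIDR`) to match your network plan (podSubnet 10.244.0.0/16 here).
- Choose the networking mode with `CALICO_IPV4POOL_IPIP`: `Never` (pure BGP, no encapsulation), `Always` (IPIP encapsulation for all traffic), or `CrossSubnet` (BGP routing within a subnet, IPIP only for traffic that crosses subnets).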

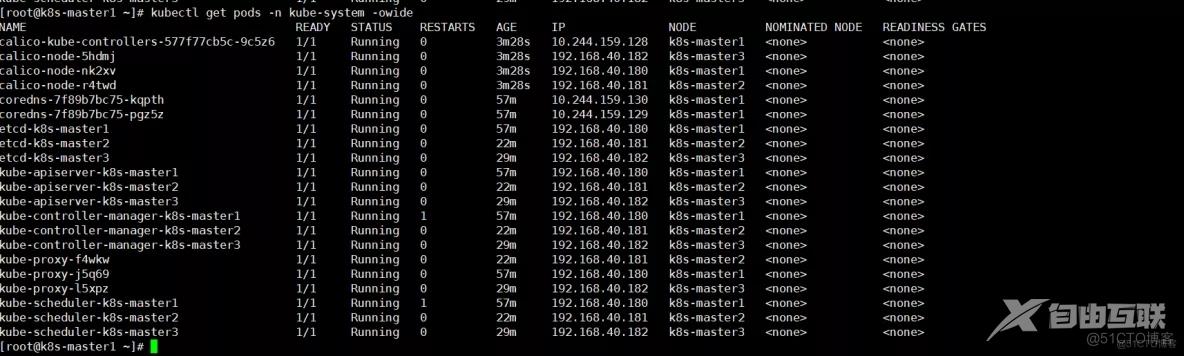
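The CIDR edit can also be scripted. This sketch assumes the downloaded calico.yaml contains the setting commented out as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`, which is what the v3.20 manifest is expected to contain; check your copy before relying on it. `set_calico_pool_cidr` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it to the given CIDR.
# Assumes the commented-out default described above; indentation is preserved because
# only the "# " / "#   " prefixes are rewritten.
set_calico_pool_cidr() {
    local manifest="$1" cidr="$2"
    sed -i \
        -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
        -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
        "$manifest"
}
```

Usage: `set_calico_pool_cidr calico.yaml 10.244.0.0/16`, then `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml` to confirm the result.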

Apply the manifest:

```bash
kubectl apply -f calico.yaml
kubectl get pods -n kube-system
```

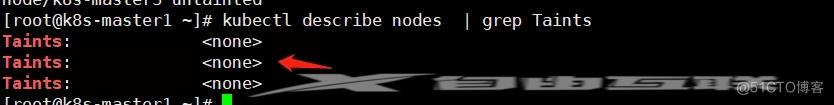

Remove the taints: all three nodes are masters and carry the default master taint, so ordinary Pods would not be scheduled on them.

```bash
[root@k8s-master1 ~]# kubectl describe nodes | grep Taints
Taints:             node-role.kubernetes.io/master:NoSchedule
Taints:             node-role.kubernetes.io/master:NoSchedule
Taints:             node-role.kubernetes.io/master:NoSchedule

kubectl taint node k8s-master1 node-role.kubernetes.io/master:NoSchedule-
kubectl taint node k8s-master2 node-role.kubernetes.io/master:NoSchedule-
kubectl taint node k8s-master3 node-role.kubernetes.io/master:NoSchedule-
```

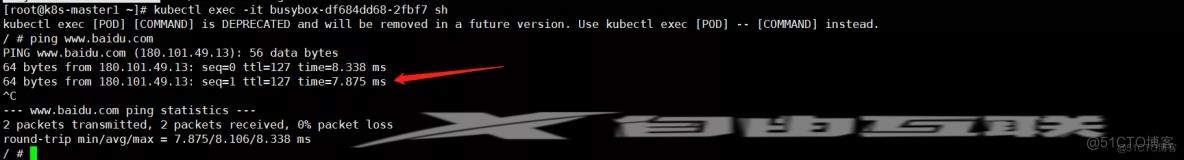

- Test the network:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: busybox
  name: busybox
spec:
  replicas: 1
  selector:
    matchLabels:
      app: busybox
  strategy: {}
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
      - image: busybox:1.28
        name: busybox
        command: ["/bin/sh","-c","sleep 36000"]
```

If a Pod started from this Deployment can reach the network (for example, ping an external host from inside it), the Calico network plugin is installed correctly.

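Create a Deployment in the Kubernetes cluster and verify that it runs:

```bash
kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port=80 --type=NodePort
kubectl get pod,svc
```

Access it at http://NodeIP:Port, where Port is the NodePort shown by `kubectl get svc`.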

- Test whether CoreDNS works:

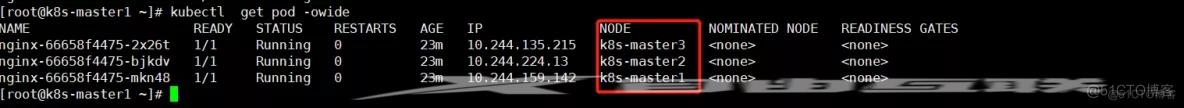

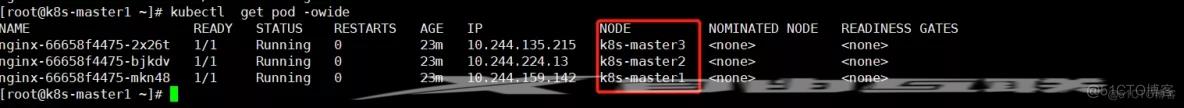

Test whether all three masters can run Pods

- Force the Pods onto different nodes (required anti-affinity):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchLabels:
                app: nginx
      containers:
      - name: nginx
        image: nginx
```

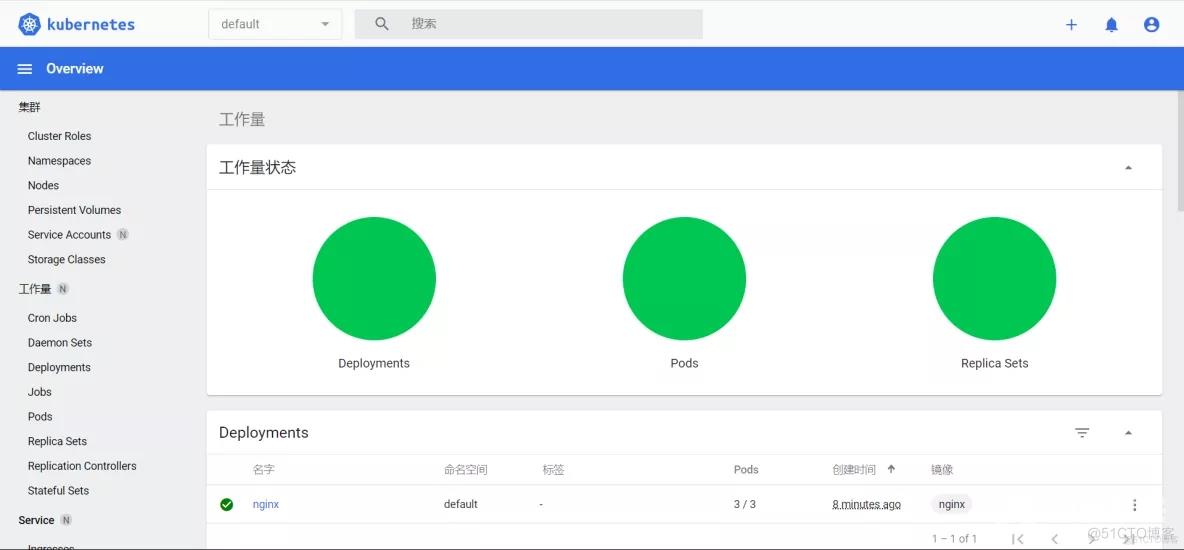
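To confirm the anti-affinity rule placed one replica per master, count the distinct node names. A sketch; `check_spread` is a hypothetical helper fed from `kubectl get pods` with a jsonpath template, and Pods that are still Pending (empty node name) are ignored.

```bash
#!/usr/bin/env bash
# Sketch: check that "POD NODE" lines on stdin cover the expected number of distinct nodes.
# Feed it from, e.g.:
#   kubectl get pods -l app=nginx \
#     -o jsonpath='{range .items[*]}{.metadata.name} {.spec.nodeName}{"\n"}{end}'
# $1 = expected number of distinct nodes (3 for three masters).
check_spread() {
    local expected="$1" distinct
    # Keep only lines that have a node name, then count unique node names.
    distinct=$(awk 'NF >= 2 { print $2 }' | sort -u | wc -l)
    distinct=$(( distinct ))   # normalise wc output (some implementations pad with spaces)
    if (( distinct == expected )); then
        echo "ok: pods on ${distinct} distinct nodes"
    else
        echo "spread mismatch: ${distinct} distinct nodes, expected ${expected}"
        return 1
    fi
}
```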

Deploy the Dashboard

```bash
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml
```

By default the Dashboard is reachable only from inside the cluster. Change its Service to type NodePort to expose it externally:

```yaml
# vi recommended.yaml
...
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort
...
```

```bash
$ kubectl apply -f recommended.yaml
$ kubectl get pods -n kubernetes-dashboard
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-6b4884c9d5-gl8nr   1/1     Running   0          13m
kubernetes-dashboard-7f99b75bf4-89cds        1/1     Running   0          13m
```

- Access it at https://NodeIP:30001 (HTTPS is required).

- Create a service account and bind it to the default cluster-admin ClusterRole:

```bash
# Create the user
kubectl create serviceaccount dashboard-admin -n kube-system
# Grant it cluster-admin
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
# Get the user's token
kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
```

- Log in to the Dashboard with the printed token.

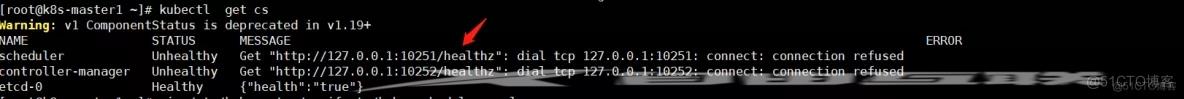

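Problem 1

- `Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused`

This can be fixed as follows. Edit /etc/kubernetes/manifests/kube-scheduler.yaml:

- change `--bind-address=127.0.0.1` to `--bind-address=<this node's IP>`
- change `host` under the `httpGet:` fields from 127.0.0.1 to the same IP
- delete the `--port=0` line

Use each control-plane node's own IP here, e.g. 192.168.40.180 on k8s-master1. Do not use the VIP 192.168.40.188: only one node holds it at a time, so the component could not bind to it on the other masters.

Make the same three changes in /etc/kubernetes/manifests/kube-controller-manager.yaml, then run `systemctl restart kubelet` on every node. Removing `--port=0` is the change that brings back the insecure health ports (10251 for the scheduler, 10252 for the controller manager) that this check queries.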
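The `--port=0` removal can be scripted with sed. A sketch, where `remove_port_zero` is a hypothetical helper; it deletes only that one argument and leaves the bind-address and probe edits to be done by hand. Keep any backup copies outside /etc/kubernetes/manifests, since the kubelet may pick up extra files in that directory as additional static Pods.

```bash
#!/usr/bin/env bash
# Sketch: delete the "- --port=0" argument line from each given static Pod manifest.
remove_port_zero() {
    local manifest
    for manifest in "$@"; do
        sed -i '/^[[:space:]]*- --port=0[[:space:]]*$/d' "$manifest"
    done
}
```

Usage: `remove_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml /etc/kubernetes/manifests/kube-controller-manager.yaml`, then `systemctl restart kubelet`.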