1. Basic information

Kubernetes 1.20: new features overview

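Question: why use Kubernetes 1.20?

Answer:

1. Alibaba Cloud currently supports Kubernetes up to 1.20. Using the same version keeps the operations baseline uniform and the on-premises environment consistent with the cloud.
2. 1.20 adds new features and matures existing ones:
   - Stable: process ID (PID) limiting for stability, volume snapshots
   - Beta: API Priority and Fairness, the kubectl debug tool
   - Alpha: graceful node shutdown, updated IPv4/IPv6 dual-stack

1.1. Capacity planning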

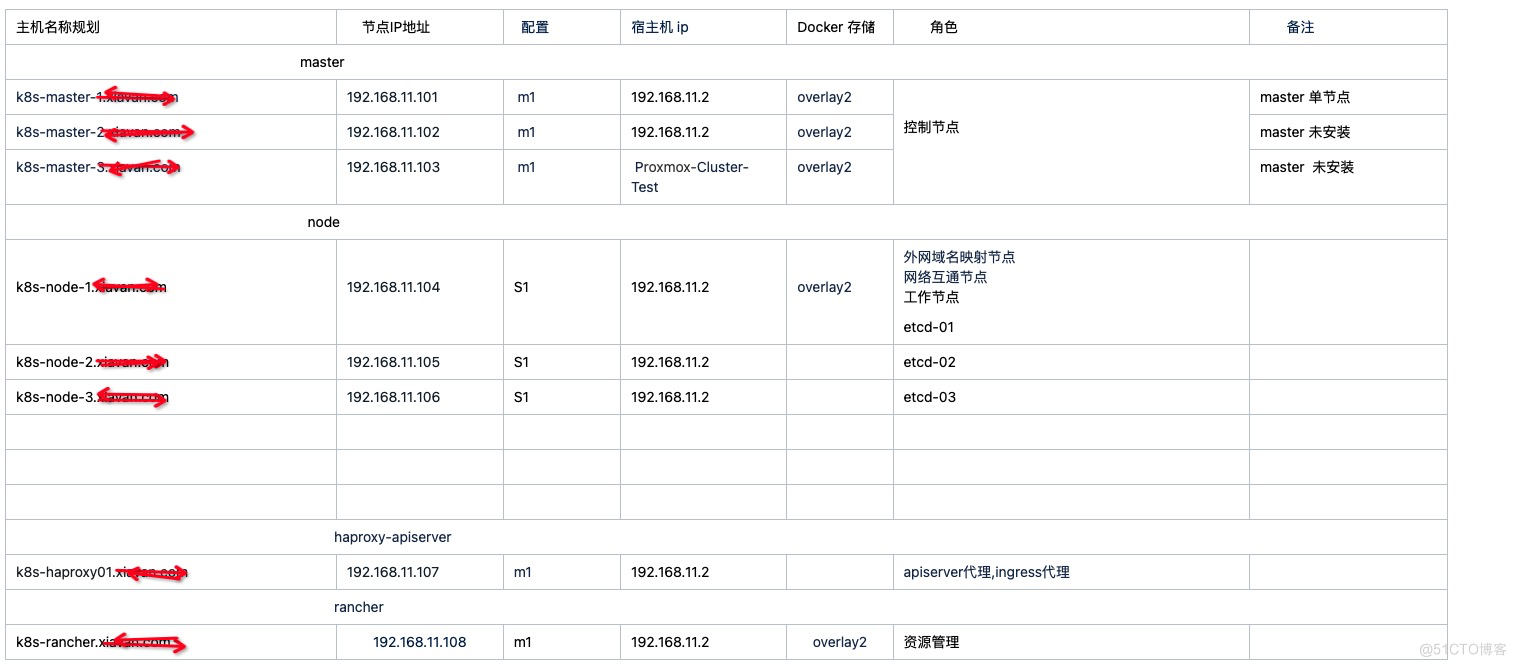

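Physical server specifications:

- Model: DELL R630
- CPU: Intel(R) Xeon(R) CPU E5-2678 v3 @ 2.50GHz, 2 sockets, 48 logical CPUs
- Memory: 128G
- Disk: 1.8T

Virtual machine specifications:

| VM role | Type | CPU | Memory | Disk |
|---|---|---|---|---|
| k8s master | M1 | 4 | 8G | 100G |
| k8s node | S1 | 8 | 16G | 300G |
| k8s node | S2 | 8 | 32G | 300G |

Each physical machine hosts 8 VMs (k8s nodes), and each VM is expected to run 8 pods: 1 physical machine = 8 VMs × 8 pods = 64 pods.

2. Deployment node planning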

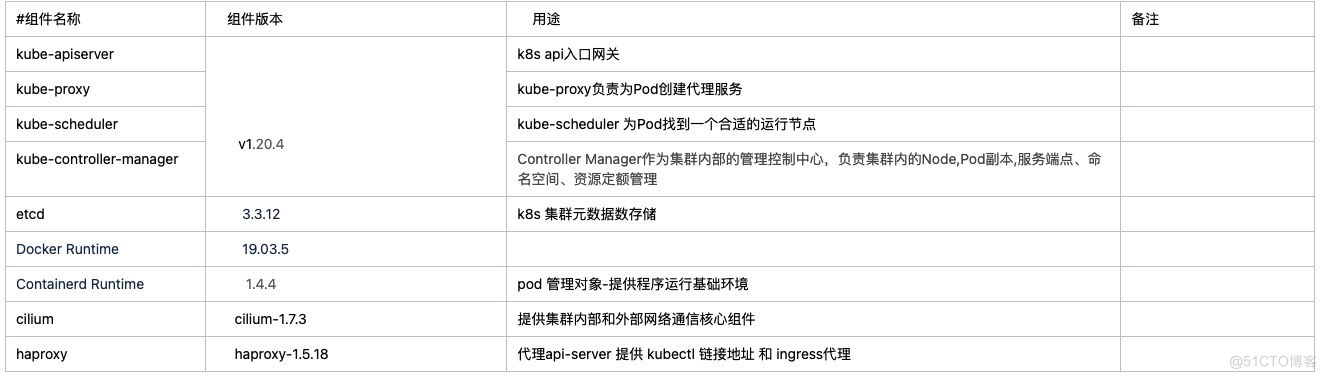
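Using the sizes from 1.1, the per-host numbers can be checked with a little shell arithmetic. This is a sketch with illustrative function names: it multiplies out the pod count and also shows how far the VM allocations exceed the host's 48 logical CPUs and 128G of RAM.

```bash
#!/usr/bin/env bash
# Capacity arithmetic for the plan in 1.1 (function names are illustrative).

# Pods per physical machine = VMs per host * pods per VM
pods_per_host() {
  local vms=$1 pods_per_vm=$2
  echo $(( vms * pods_per_vm ))
}

# Allocated resources (VMs * per-VM size) divided by what the host has,
# printed with two decimals using integer math only.
overcommit_ratio() {
  local vms=$1 per_vm=$2 host_total=$3
  local pct=$(( vms * per_vm * 100 / host_total ))
  printf '%d.%02d\n' $(( pct / 100 )) $(( pct % 100 ))
}

pods_per_host 8 8          # -> 64
overcommit_ratio 8 8 48    # vCPU: 64 vCPUs on 48 logical CPUs -> 1.33
overcommit_ratio 8 16 128  # RAM with S1 nodes -> 1.00
overcommit_ratio 8 32 128  # RAM with S2 nodes -> 2.00
```

A ratio above 1.00 means the VMs are promised more than the host physically has; with S1 nodes the VMs already claim all 128G, leaving nothing for the hypervisor itself.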

3. Component version planning

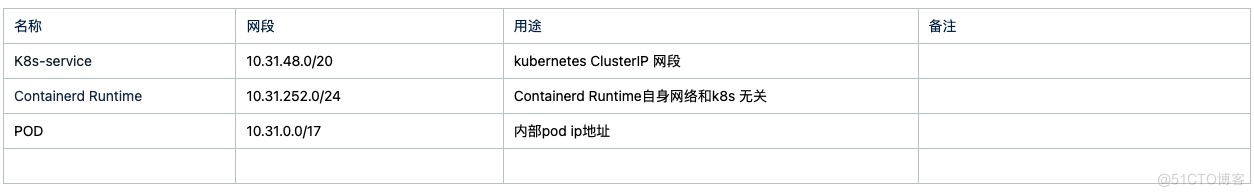

4. Subnet and network planning

5. Deploying kubeadm v1.20.4

5.1. Upgrading the system kernel

1.1. Check the current kernel version

    [root@VM_0_17_centos ~]# uname -r
    3.10.0-862.el7.x86_64

Install the ELRepo repository (see the ELRepo website):

(1) Import the public key:

    [root@VM_0_17_centos ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

(2) Install the ELRepo YUM repository:

    [root@VM_0_17_centos ~]# rpm -Uvh https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    Retrieving https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:elrepo-release-7.0-3.el7.elrepo  ################################# [100%]

1.2. Install the kernel

1. Install through YUM. This installs the mainline kernel (kernel-ml) by default (output abridged):

    [root@VM_0_17_centos ~]# yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml
    Loaded plugins: fastestmirror, langpacks
    Determining fastest mirrors
     * elrepo: mirror-hk.koddos.net
     * elrepo-kernel: mirror-hk.koddos.net
    Resolving Dependencies
    --> Running transaction check
    ---> Package kernel-ml.x86_64 0:5.12.8-1.el7.elrepo will be installed
    ---> Package kernel-ml-devel.x86_64 0:5.12.8-1.el7.elrepo will be installed
    --> Finished Dependency Resolution
    ...
    Install  2 Packages
    Total download size: 61 M
    Installed size: 264 M
    Is this ok [y/d/N]: y
    ...
    Installed:
      kernel-ml.x86_64 0:5.12.8-1.el7.elrepo    kernel-ml-devel.x86_64 0:5.12.8-1.el7.elrepo
    Complete!

1.3. List the installed kernels

    [root@VM_0_17_centos ~]# rpm -qa kernel*
    kernel-headers-3.10.0-957.1.3.el7.x86_64
    kernel-3.10.0-862.el7.x86_64
    kernel-ml-devel-5.12.8-1.el7.elrepo.x86_64
    kernel-tools-libs-3.10.0-862.el7.x86_64
    kernel-tools-3.10.0-862.el7.x86_64
    kernel-devel-3.10.0-862.el7.x86_64
    kernel-ml-5.12.8-1.el7.elrepo.x86_64

rpm -qa | grep -i kernel matches more broadly and also lists packages such as abrt-addon-kerneloops:

    [root@VM_0_17_centos ~]# rpm -qa | grep -i kernel
    kernel-headers-3.10.0-957.1.3.el7.x86_64
    kernel-3.10.0-862.el7.x86_64
    abrt-addon-kerneloops-2.1.11-50.el7.centos.x86_64
    kernel-ml-devel-5.12.8-1.el7.elrepo.x86_64
    kernel-tools-libs-3.10.0-862.el7.x86_64
    kernel-tools-3.10.0-862.el7.x86_64
    kernel-devel-3.10.0-862.el7.x86_64
    kernel-ml-5.12.8-1.el7.elrepo.x86_64

1.4. Switch the kernel

1. Change the default kernel, using either of the two commands:

(1) By index. The new kernel is inserted at the top of the GRUB menu, so it becomes entry 0 (the old kernel moves to entry 1):

    [root@VM_0_17_centos ~]# grub2-set-default 0

(2) By menu entry title, grub2-set-default '<menu entry title>':

    [root@VM_0_17_centos ~]# grub2-set-default 'CentOS Linux (5.12.8-1.el7.elrepo.x86_64) 7 (Core)'

2. Check that the default boot kernel was changed:

    [root@VM_0_17_centos ~]# grub2-editenv list
    saved_entry=CentOS Linux (5.12.8-1.el7.elrepo.x86_64) 7 (Core)

1.5. Remove the old kernel

    rpm -e kernel-tools-3.10.0-862.el7.x86_64
    rpm -e kernel-devel-3.10.0-862.el7.x86_64
    rpm -e kernel-3.10.0-862.el7.x86_64

1.6. Activate the new kernel

1. Reboot the system:

    [root@VM_0_17_centos ~]# reboot

2. Check the kernel version:

    [root@VM_0_17_centos ~]# uname -r
    5.12.8-1.el7.elrepo.x86_64

2. Configure the base environment before installation
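The script below writes the bridge netfilter settings to /etc/sysctl.d/k8s.conf, and kubeadm's preflight checks additionally expect net.ipv4.ip_forward = 1. As a sketch for use once the script has run, the checker here confirms that a sysctl drop-in sets each required key to 1. The function name and the key list are assumptions chosen for this guide, not part of any tool.

```bash
#!/usr/bin/env bash
# Keys this guide assumes must be 1 for kubeadm / kube-proxy (illustrative list).
REQUIRED_SYSCTLS=(
  net.bridge.bridge-nf-call-iptables
  net.bridge.bridge-nf-call-ip6tables
  net.ipv4.ip_forward
)

# check_sysctl_file FILE: print every required key that is missing or not 1,
# and return non-zero if any was found.
check_sysctl_file() {
  local file=$1 key value missing=0
  for key in "${REQUIRED_SYSCTLS[@]}"; do
    # Strip blanks so "a = 1" and "a=1" compare equal; keep the last assignment.
    value=$(awk -F= -v k="$key" '{ gsub(/[ \t]/, "") } $1 == k { v = $2 } END { print v }' "$file")
    if [ "$value" != "1" ]; then
      echo "missing or not 1: $key"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_sysctl_file /etc/sysctl.d/k8s.conf && echo "sysctl settings OK"
```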

    # Disable conflicting services and prepare the base environment
    stop_service_init_service() {
        echo -e "\033[32m Disabling the firewall... \033[0m"
        systemctl stop firewalld
        systemctl disable firewalld

        echo -e "\033[32m Disabling swap... \033[0m"
        swapoff -a
        sed -i 's/.*swap.*/#&/' /etc/fstab

        echo -e "\033[32m Disabling SELinux... \033[0m"
        setenforce 0
        # /etc/sysconfig/selinux is a symlink to /etc/selinux/config; sed -i on the link would replace it with a copy
        sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' /etc/selinux/config

        echo -e "\033[32m Mounting bpffs for the Cilium network plugin... \033[0m"
        # Give the type explicitly: on the first run there is no fstab entry for /sys/fs/bpf yet
        mount bpffs /sys/fs/bpf -t bpf
        sed -i '$a bpffs /sys/fs/bpf bpf defaults 0 0' /etc/fstab

        echo -e "\033[32m init kubernetes base service... \033[0m"
        modprobe br_netfilter
        # kubeadm's preflight check also requires IPv4 forwarding
        cat <<EOF > /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF
        sysctl -p /etc/sysctl.d/k8s.conf
        ls /proc/sys/net/bridge

        echo -e "\033[32m Configuring the Kubernetes yum repository... \033[0m"
        cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

        echo -e "\033[32m Installing base dependencies... \033[0m"
        yum install -y epel-release
        yum install -y yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget vim ntpdate libseccomp libtool-ltdl

        echo -e "\033[32m Configuring time synchronisation... \033[0m"
        systemctl enable ntpdate.service
        echo '*/30 * * * * /usr/sbin/ntpdate time7.aliyun.com >/dev/null 2>&1' > /tmp/kubernetes_crontab.tmp
        crontab /tmp/kubernetes_crontab.tmp
        systemctl start ntpdate.service

        echo -e "\033[32m Raising limits in /etc/security/limits.conf... \033[0m"
        # Plain lines only: echo -e "\033[32m ..." would write colour escape codes into limits.conf
        cat <<EOF >> /etc/security/limits.conf
    * soft nofile 65536
    * hard nofile 65536
    * soft nproc 65536
    * hard nproc 65536
    * soft memlock unlimited
    * hard memlock unlimited
    EOF
    }

    stop_service_init_service

3. Available kubeadm versions
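yum list --showduplicates prints one row per repository and per installed copy, so the same version can appear several times. To see just the distinct builds the mirror carries before pinning one, the listing can be filtered. This is a small sketch; the function name is illustrative and it assumes x86_64 packages.

```bash
#!/usr/bin/env bash
# Print the distinct versions of one package from "yum list ... --showduplicates" output.
# Reads the listing on stdin; $1 is the package name without the ".x86_64" suffix.
available_versions() {
  local pkg=$1
  # Column 1 is "name.arch", column 2 the version; de-duplicate installed/repo rows.
  awk -v p="${pkg}.x86_64" '$1 == p { print $2 }' | sort -uV
}

# Usage:
#   yum list kubeadm --showduplicates | available_versions kubeadm
```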

List the available versions:

    [root@k8s-master-1 ~]# yum list kubelet kubeadm kubectl --showduplicates | sort -r | grep 1.20.4
    kubelet.x86_64    1.20.4-0    kubernetes
    kubelet.x86_64    1.20.4-0    installed
    kubeadm.x86_64    1.20.4-0    kubernetes
    kubeadm.x86_64    1.20.4-0    @kubernetes

Install the pinned versions and enable the kubelet service:

    [root@k8s-master-1 ~]# yum -y install kubectl-1.20.4 kubeadm-1.20.4 kubelet-1.20.4
    [root@k8s-master-1 ~]# systemctl enable kubelet

If yum cannot find the kubelet package, install the RPM directly:

    [root@k8s-master-1 ~]# rpm -ivh https://yumrepo.nautilus.optiputer.net/kubernetes/Packages/1e577d68c58aa6fb846fdaa49737288f12dc78a2163a8470de930acece49974e-kubelet-1.20.4-0.x86_64.rpm

4. Install etcd
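The install_etcd.sh script below writes three etcd unit files that differ only in the member name and IP address, and hand-edited copies are where typos such as "192.168.11.105::2380" slip in. As a sketch, the ExecStart command can be generated from those two values instead. The function names and the SSL_DIR and ETCD_CLUSTER variables are illustrative; the flags and member list are the ones used in the unit files.

```bash
#!/usr/bin/env bash
# Member list and certificate directory as used in the etcd unit files.
ETCD_CLUSTER="k8s-master-1.k8s.com=https://192.168.11.104:2380,k8s-master-2.k8s.com=https://192.168.11.105:2380,k8s-master-3.k8s.com=https://192.168.11.106:2380"
SSL_DIR=/etc/etcd/ssl

# etcd_exec_start NAME IP: print the etcd command line for one member.
etcd_exec_start() {
  local name=$1 ip=$2
  printf '%s' \
    "/usr/bin/etcd --name ${name}" \
    " --peer-client-cert-auth --client-cert-auth" \
    " --cert-file=${SSL_DIR}/etcd.pem --key-file=${SSL_DIR}/etcd-key.pem" \
    " --peer-cert-file=${SSL_DIR}/etcd.pem --peer-key-file=${SSL_DIR}/etcd-key.pem" \
    " --trusted-ca-file=${SSL_DIR}/ca.pem --peer-trusted-ca-file=${SSL_DIR}/ca.pem" \
    " --initial-advertise-peer-urls https://${ip}:2380" \
    " --listen-peer-urls https://${ip}:2380" \
    " --listen-client-urls https://${ip}:2379,http://127.0.0.1:2379" \
    " --advertise-client-urls https://${ip}:2379" \
    " --initial-cluster-token etcd-cluster-0" \
    " --initial-cluster ${ETCD_CLUSTER}" \
    " --initial-cluster-state new --data-dir=/var/lib/etcd"
  echo
}

# Succeeds when the text contains no "digit::digit" (an empty host:port segment).
check_no_double_colon() {
  ! grep -q '[0-9]::[0-9]' <<< "$1"
}

# Usage:
#   etcd_exec_start k8s-master-2.k8s.com 192.168.11.105
```

Inside an unquoted heredoc such as the ones in init_etcd_service, a line like ExecStart=$(etcd_exec_start k8s-master-2.k8s.com 192.168.11.105) expands to the full command, so each unit file carries only its own name and IP.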

[root@k8s-master-1 tmp]# vim install_etcd.sh

    #!/usr/bin/env bash
    # SSH targets of the 2nd and 3rd etcd members: the script's 3rd and 4th arguments
    MASTER2_HOST=$3
    MASTER3_HOST=$4

    # Install cfssl
    install_cfssl() {
        echo -e "\033[32m Installing cfssl... \033[0m"
        wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
        wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
        wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
        chmod +x cfssl_linux-amd64
        mv cfssl_linux-amd64 /usr/local/bin/cfssl
        chmod +x cfssljson_linux-amd64
        mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
        chmod +x cfssl-certinfo_linux-amd64
        mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
    }

    # Initialise the CA and issue the etcd certificate
    init_ca() {
        echo -e "\033[32m Generating certificates... \033[0m"
        mkdir -p /root/ssl
        cd /root/ssl
        cat > ca-config.json <<EOF
    {
      "signing": {
        "default": { "expiry": "87600h" },
        "profiles": {
          "kubernetes-Soulmate": {
            "usages": ["signing", "key encipherment", "server auth", "client auth"],
            "expiry": "87600h"
          }
        }
      }
    }
    EOF
        cat > ca-csr.json <<EOF
    {
      "CN": "kubernetes-Soulmate",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "ST": "shanghai", "L": "shanghai", "O": "k8s", "OU": "System" }
      ]
    }
    EOF
        export PATH=/usr/local/bin:$PATH
        cfssl gencert -initca ca-csr.json | cfssljson -bare ca
        # "hosts" is the certificate's address list; JSON allows no comments, so they live here:
        #   192.168.11.101-103: master01-03, authorised to connect to the etcd cluster
        #   192.168.11.104-106: addresses the etcd members listen on in the unit files below
        cat > etcd-csr.json <<EOF
    {
      "CN": "etcd",
      "hosts": [
        "127.0.0.1",
        "192.168.11.101",
        "192.168.11.102",
        "192.168.11.103",
        "192.168.11.104",
        "192.168.11.105",
        "192.168.11.106"
      ],
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "ST": "shanghai", "L": "shanghai", "O": "k8s", "OU": "System" }
      ]
    }
    EOF
        cfssl gencert -ca=ca.pem \
          -ca-key=ca-key.pem \
          -config=ca-config.json \
          -profile=kubernetes-Soulmate etcd-csr.json | cfssljson -bare etcd
        # The unit files read the certificates from /etc/etcd/ssl on every member
        mkdir -p /etc/etcd/ssl
        cp ca.pem etcd.pem etcd-key.pem /etc/etcd/ssl/
        for host in "$MASTER2_HOST" "$MASTER3_HOST"; do
            ssh -n "$host" "mkdir -p /etc/etcd/ssl"
            scp ca.pem etcd.pem etcd-key.pem "$host":/etc/etcd/ssl/
        done
    }

    # Install the etcd package on all three members
    install_etcd() {
        echo -e "\033[32m Installing etcd on master-1... \033[0m"
        yum install etcd -y
        mkdir -p /var/lib/etcd
        echo -e "\033[32m Installing etcd on the other master nodes... \033[0m"
        for host in "$MASTER2_HOST" "$MASTER3_HOST"; do
            ssh -n "$host" "yum install etcd -y && mkdir -p /var/lib/etcd"
        done
    }

    # Write the etcd.service unit for each member
    init_etcd_service() {
        echo -e "\033[32m Generating the service configuration... \033[0m"
        cat <<EOF >/etc/systemd/system/etcd.service
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    ExecStart=/usr/bin/etcd --name k8s-master-1.k8s.com \
      --peer-client-cert-auth --client-cert-auth \
      --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem \
      --peer-cert-file=/etc/etcd/ssl/etcd.pem --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
      --trusted-ca-file=/etc/etcd/ssl/ca.pem --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
      --initial-advertise-peer-urls https://192.168.11.104:2380 \
      --listen-peer-urls https://192.168.11.104:2380 \
      --listen-client-urls https://192.168.11.104:2379,http://127.0.0.1:2379 \
      --advertise-client-urls https://192.168.11.104:2379 \
      --initial-cluster-token etcd-cluster-0 \
      --initial-cluster k8s-master-1.k8s.com=https://192.168.11.104:2380,k8s-master-2.k8s.com=https://192.168.11.105:2380,k8s-master-3.k8s.com=https://192.168.11.106:2380 \
      --initial-cluster-state new \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF
        echo -e "\033[32m Generating the service configuration for the other members... \033[0m"
        cat <<EOF >/root/etcd-master-2.service
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    ExecStart=/usr/bin/etcd --name k8s-master-2.k8s.com \
      --peer-client-cert-auth --client-cert-auth \
      --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem \
      --peer-cert-file=/etc/etcd/ssl/etcd.pem --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
      --trusted-ca-file=/etc/etcd/ssl/ca.pem --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
      --initial-advertise-peer-urls https://192.168.11.105:2380 \
      --listen-peer-urls https://192.168.11.105:2380 \
      --listen-client-urls https://192.168.11.105:2379,http://127.0.0.1:2379 \
      --advertise-client-urls https://192.168.11.105:2379 \
      --initial-cluster-token etcd-cluster-0 \
      --initial-cluster k8s-master-1.k8s.com=https://192.168.11.104:2380,k8s-master-2.k8s.com=https://192.168.11.105:2380,k8s-master-3.k8s.com=https://192.168.11.106:2380 \
      --initial-cluster-state new \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF
        cat <<EOF >/root/etcd-master-3.service
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    ExecStart=/usr/bin/etcd --name k8s-master-3.k8s.com \
      --peer-client-cert-auth --client-cert-auth \
      --cert-file=/etc/etcd/ssl/etcd.pem --key-file=/etc/etcd/ssl/etcd-key.pem \
      --peer-cert-file=/etc/etcd/ssl/etcd.pem --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
      --trusted-ca-file=/etc/etcd/ssl/ca.pem --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
      --initial-advertise-peer-urls https://192.168.11.106:2380 \
      --listen-peer-urls https://192.168.11.106:2380 \
      --listen-client-urls https://192.168.11.106:2379,http://127.0.0.1:2379 \
      --advertise-client-urls https://192.168.11.106:2379 \
      --initial-cluster-token etcd-cluster-0 \
      --initial-cluster k8s-master-1.k8s.com=https://192.168.11.104:2380,k8s-master-2.k8s.com=https://192.168.11.105:2380,k8s-master-3.k8s.com=https://192.168.11.106:2380 \
      --initial-cluster-state new \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF
        echo -e "\033[32m Distributing etcd.service... \033[0m"
        scp /root/etcd-master-2.service "$MASTER2_HOST":/etc/systemd/system/etcd.service
        scp /root/etcd-master-3.service "$MASTER3_HOST":/etc/systemd/system/etcd.service
    }

    # Enable and start etcd on all members
    start_etcd() {
        echo -e "\033[32m Enabling etcd at boot... \033[0m"
        mv /etc/systemd/system/etcd.service /usr/lib/systemd/system/
        ssh -n "$MASTER2_HOST" "mv /etc/systemd/system/etcd.service /usr/lib/systemd/system/"
        ssh -n "$MASTER3_HOST" "mv /etc/systemd/system/etcd.service /usr/lib/systemd/system/"
        systemctl daemon-reload
        systemctl enable etcd
        ssh -n "$MASTER2_HOST" "systemctl daemon-reload && systemctl enable etcd"
        ssh -n "$MASTER3_HOST" "systemctl daemon-reload && systemctl enable etcd"
        echo -e "\033[32m Starting etcd... \033[0m"
        # With Type=notify a new member reports ready only after the cluster has a quorum,
        # so a blocking start on the first member would wait for the others
        systemctl --no-block start etcd
        ssh -n "$MASTER2_HOST" "systemctl --no-block start etcd"
        ssh -n "$MASTER3_HOST" "systemctl --no-block start etcd"
        systemctl status etcd
        ssh -n "$MASTER2_HOST" "systemctl status etcd"
        ssh -n "$MASTER3_HOST" "systemctl status etcd"
    }

    # Check member health
    check_etcd() {
        echo -e "\033[32m Checking etcd members... \033[0m"
        export ETCDCTL_API=3
        etcdctl --endpoints=https://192.168.11.104:2379,https://192.168.11.105:2379,https://192.168.11.106:2379 \
          --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem \
          endpoint health
    }

    install_cfssl
    init_ca
    install_etcd
    init_etcd_service
    start_etcd
    check_etcd

5. Install and configure containerd
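The sandbox_image change in step 5.2 below is a single-line edit of /etc/containerd/config.toml, so on many nodes it can be scripted instead of made in vim. A minimal sketch, assuming the config was generated with containerd config default; the function name is illustrative.

```bash
#!/usr/bin/env bash
# set_sandbox_image CONFIG IMAGE: rewrite every "sandbox_image = ..." line in CONFIG,
# keeping the line's indentation. IMAGE must not contain "#" (used as the sed delimiter).
set_sandbox_image() {
  local config=$1 image=$2
  sed -i -E "s#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*#\1\"${image}\"#" "$config"
}

# Usage (image from step 5.2):
#   set_sandbox_image /etc/containerd/config.toml registry.aliyuncs.com/google_containers/pause:3.2
#   systemctl restart containerd
```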

5.1 Install the containerd package

The package is named containerd.io and is published in the Docker CE repository (here through the Aliyun mirror):

    [root@k8s-node-1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    [root@k8s-node-1 ~]# yum -y install containerd.io
    [root@k8s-node-1 ~]# mkdir -p /etc/containerd
    [root@k8s-node-1 ~]# containerd config default > /etc/containerd/config.toml

5.2 Change sandbox_image, the image of the pause container, to the Alibaba Cloud mirror in China:

    [root@k8s-node-1 ~]# vim /etc/containerd/config.toml

Change:

    sandbox_image = "k8s.gcr.io/pause:3.2"

to:

    sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.2"

5.3 Restart kubelet

Point the kubelet startup arguments at containerd, then restart containerd and kubelet:

    [root@k8s-node-1 ~]# vim /etc/sysconfig/kubelet
    KUBELET_EXTRA_ARGS="--container-runtime=remote --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock"
    [root@k8s-node-1 ~]# systemctl restart containerd kubelet

5.4 Use crictl instead of docker for basic container management

    [root@k8s-node-1 ~]# vim /etc/crictl.yaml
    runtime-endpoint: unix:///run/containerd/containerd.sock
    image-endpoint: unix:///run/containerd/containerd.sock
    timeout: 10
    debug: false

6. Initialise the Kubernetes cluster with kubeadm

Configuration file (kubeadm-config.yaml):

    ---
    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.20.4
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    controlPlaneEndpoint: "192.168.11.101:6443"
    networking:
      serviceSubnet: "10.31.48.0/22"
      podSubnet: "10.31.32.0/19"
      dnsDomain: "cluster.local"
    dns:
      type: CoreDNS
    etcd:
      external:
        caFile: /etc/etcd/ssl/ca.pem
        certFile: /etc/etcd/ssl/etcd.pem
        endpoints:
        - https://192.168.11.104:2379
        - https://192.168.11.105:2379
        - https://192.168.11.106:2379
        keyFile: /etc/etcd/ssl/etcd-key.pem
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration

Initialise the cluster:

    kubeadm init --config=kubeadm-config.yaml

Joining nodes to the cluster

1. On-premises environment

Join additional master (control-plane) nodes:

    kubeadm join 192.168.11.101:6443 --token eyoyc6.4mr8da91080gihjl \
      --discovery-token-ca-cert-hash sha256:05ad2e54678f93788b33a3591fe6069a65649d8f1d3b467388f6d0f4445c80af \
      --control-plane

The --control-plane join expects the cluster certificates on the new master: copy them from the first master beforehand, or run kubeadm init with --upload-certs and add the --certificate-key value it prints.

kubeadm init then prints: "Then you can join any number of worker nodes by running the following on each as root:"

    kubeadm join 192.168.11.101:6443 --token eyoyc6.4mr8da91080gihjl \
      --discovery-token-ca-cert-hash sha256:05ad2e54678f93788b33a3591fe6069a65649d8f1d3b467388f6d0f4445c80af
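podSubnet and serviceSubnet must not overlap. As a sketch in plain bash (function names are illustrative), the check below converts each CIDR to an integer range and compares the ranges. With the values in the config above, cidrs_overlap 10.31.32.0/19 10.31.48.0/22 succeeds: the service range 10.31.48.0 to 10.31.51.255 lies inside the pod range 10.31.32.0 to 10.31.63.255, so one of the two is worth revisiting before running kubeadm init.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range A.B.C.D/N: print the first and last address of the block as integers.
cidr_range() {
  local ip=${1%/*} bits=${1#*/}
  local base mask start
  base=$(ip_to_int "$ip")
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  echo "$start $(( start + (1 << (32 - bits)) - 1 ))"
}

# cidrs_overlap CIDR1 CIDR2: exit status 0 when the two blocks share any address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Usage:
#   cidrs_overlap 10.31.32.0/19 10.31.48.0/22 && echo "podSubnet and serviceSubnet overlap"
```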

**8. Network interconnection between the office network and the k8s network**

Step 1: configure the firewall source NAT rule:

    iptables -t nat -A POSTROUTING -s 192.168.0.0/16 -d 10.31.0.0/17 -j MASQUERADE

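Note: the key part is MASQUERADE, which rewrites the packet's source IP to the IP of the interface the packet leaves through (the device -o would match). Purpose: whatever dynamic IP eth0 currently has, MASQUERADE reads eth0's current address and performs SNAT with it, so the source translation follows the interface address automatically.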

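Step 2: on the office core router, add a static route that sends the k8s pod network to node-1 (192.168.11.104):

    ip route 10.31.0.0/17 192.168.11.104

Run at boot, so the NAT rule from step 1 survives a reboot:

    iptables -t nat -A POSTROUTING -s 192.168.0.0/16 -d 10.31.0.0/17 -j MASQUERADE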
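Because the NAT rule is re-run at every boot, a plain iptables -A can stack duplicate copies if the script runs more than once. A minimal sketch of an idempotent version uses iptables -C (--check), which exits 0 when an identical rule already exists. The function name and the IPTABLES override variable are illustrative.

```bash
#!/usr/bin/env bash
# ensure_masquerade SRC DST: add the POSTROUTING MASQUERADE rule only if it is missing.
# IPTABLES may be set to another command (e.g. for a dry run); defaults to iptables.
ensure_masquerade() {
  local src=$1 dst=$2 ipt=${IPTABLES:-iptables}
  if ! "$ipt" -t nat -C POSTROUTING -s "$src" -d "$dst" -j MASQUERADE 2>/dev/null; then
    "$ipt" -t nat -A POSTROUTING -s "$src" -d "$dst" -j MASQUERADE
  fi
}

# Usage on the node that receives the office route (values from the steps above):
#   ensure_masquerade 192.168.0.0/16 10.31.0.0/17
```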