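Viewing processes

Ex1. CPU load suddenly rises?

Troubleshooting approach:

1) User traffic has increased, driving up the machine's CPU load;

2) A program is misbehaving, driving up CPU usage;

3) A disk I/O fault is driving up the CPU load (on Linux, tasks blocked in uninterruptible I/O wait count toward the load average even though they use no CPU);

Reference commands for troubleshooting:

>> Find the processes with the highest CPU usage;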

    # ps aux |sort -k3 |tail
    root 1654 0.0 0.1 12308 2636 ? S< 18:28 0:00 /sbin/udevd -d
    root 1655 0.0 0.1 12308 2636 ? S< 18:28 0:00 /sbin/udevd -d
    root 1612 0.0 0.1 81012 3480 ? Ss 18:28 0:00 /usr/libexec/postfix/master
    postfix 1618 0.0 0.1 81092 3436 ? S 18:28 0:00 pickup -l -t fifo -u
    postfix 1619 0.0 0.1 81160 3484 ? S 18:28 0:00 qmgr -l -t fifo -u
    root 1689 0.3 0.2 98008 4436 ? Ss 18:30 0:00 sshd: root@pts/0
    root 1626 0.5 0.0 116884 1392 ? Ss 18:28 0:01 crond
    root 1 0.7 0.0 19232 1500 ? Ss 18:27 0:02 /sbin/init
    root 1708 99.5 0.0 100940 680 pts/0 R 18:31 1:13 sha256sum /dev/zero
    USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
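The capture above sorts column 3 as text, which is why the header row lands at the bottom and why a value such as 100 can sort below 99.5. A minimal sketch of a numeric variant follows; `top_cpu` is a hypothetical helper name, not something from the original notes, and it assumes `ps aux`-style input with %CPU in the third column.

```shell
# top_cpu [N]: read `ps aux`-style text on stdin and print the N rows with the
# highest %CPU (column 3), highest first. The header row is dropped.
top_cpu() {
    awk 'NR > 1' | LC_ALL=C sort -k3,3 -rn | head -n "${1:-10}"
}

# Typical use on a live system (not run here):
#   ps aux | top_cpu 5
```

Sorting numerically on the single key `-k3,3` keeps the comparison limited to the %CPU column instead of running to the end of the line.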

>> Find the user and terminal that launched the process;

    # ps axjf |sort -k3|tail -n 20
    1612 1618 1612 1612 ? -1 S 89 0:00 \_ pickup -l -t fifo -u
    1612 1619 1612 1612 ? -1 S 89 0:00 \_ qmgr -l -t fifo -u
    1 1612 1612 1612 ? -1 Ss 0 0:00 /usr/libexec/postfix/master
    1 1626 1626 1626 ? -1 Ss 0 0:01 crond
    1 1639 1639 1639 tty1 1639 Ss+ 0 0:00 /sbin/mingetty /dev/tty1
    1 1641 1641 1641 tty2 1641 Ss+ 0 0:00 /sbin/mingetty /dev/tty2
    1 1643 1643 1643 tty3 1643 Ss+ 0 0:00 /sbin/mingetty /dev/tty3
    1 1645 1645 1645 tty4 1645 Ss+ 0 0:00 /sbin/mingetty /dev/tty4
    1 1647 1647 1647 tty5 1647 Ss+ 0 0:00 /sbin/mingetty /dev/tty5
    1 1649 1649 1649 tty6 1649 Ss+ 0 0:00 /sbin/mingetty /dev/tty6
    1533 1689 1689 1689 ? -1 Ss 0 0:00 \_ sshd: root@pts/0
    1689 1693 1693 1693 pts/0 1747 Ss 0 0:00 \_ -bash
    1693 1708 1708 1693 pts/0 1747 R 0 10:17 \_ sha256sum /dev/zero
    1693 1747 1747 1693 pts/0 1747 R+ 0 0:00 \_ ps axjf
    1693 1748 1747 1693 pts/0 1747 S+ 0 0:00 \_ sort -k3
    1693 1749 1747 1693 pts/0 1747 S+ 0 0:00 \_ tail -n 20
    614 1654 614 614 ? -1 S< 0 0:00 \_ /sbin/udevd -d
    614 1655 614 614 ? -1 S< 0 0:00 \_ /sbin/udevd -d
    1 614 614 614 ? -1 S<s 0 0:00 /sbin/udevd -d
    PPID PID PGID SID TTY TPGID STAT UID TIME COMMAND

>> Find where that user's terminal session is logged in from;

    # w
     18:46:23 up 18 min, 1 user, load average: 1.00, 0.94, 0.60
    USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT
    root pts/0 192.168.23.1 18:30 0.00s 15:18 0.00s w
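The load average reported by `w` is easier to judge once it is divided by the number of CPUs. The following is a rough sketch, not part of the original notes: `load_per_core` is a hypothetical helper, and the CPU count of 2 in the example is an assumption made for illustration.

```shell
# load_per_core LOAD NCPU: divide a load average by the CPU count.
load_per_core() {
    awk -v load="$1" -v ncpu="$2" 'BEGIN { printf "%.2f\n", load / ncpu }'
}

# The 1-minute load from the capture above, on an assumed 2-CPU machine.
load_per_core 1.00 2

# On a live Linux system (assumes /proc/loadavg and nproc are available):
#   load_per_core "$(cut -d' ' -f1 /proc/loadavg)" "$(nproc)"
```

A sustained per-core value above 1 suggests more runnable or I/O-blocked tasks than the CPUs can serve.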

>> If it is a network service process, check the number of network connections;

    # ss -4tu
    Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port
    tcp ESTAB 0 0 1*.2**.2**.7:59197 10.202.13.3:10050
    tcp ESTAB 0 0 1*.2**.2**.7:41727 10.202.13.10:10050
    tcp LAST-ACK 1 1 1*.2**.2**.7:18876 10.202.13.5:10050
    tcp ESTAB 0 0 1*.2**.2**.7:41771 10.202.13.10:10050
    tcp ESTAB 0 0 1*.2**.2**.7:40051 10.202.13.15:10050
    tcp ESTAB 0 0 1*.2**.2**.7:22087 10.202.167.12:10050
    tcp ESTAB 0 0 1*.2**.2**.7:17288 10.202.13.4:10050

Count the connections of all network services with `ss -4tup | wc -l` (the total includes the header line); count the connections of a single service process with `ss -4tup | grep 'process-name' | wc -l`.
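A per-state breakdown is often more telling than a single total. The sketch below assumes `ss -4tu`-style input with the state in the second column; `conn_states` is a hypothetical helper name.

```shell
# conn_states: read `ss -4tu`-style text on stdin and print "COUNT STATE",
# one line per connection state, most frequent first. The header is skipped.
conn_states() {
    awk 'NR > 1 { count[$2]++ } END { for (s in count) print count[s], s }' |
        LC_ALL=C sort -k1,1nr -k2,2
}

# Typical use on a live system (not run here):
#   ss -4tu | conn_states
```

Fed the capture above, this reports 6 connections in ESTAB and 1 in LAST-ACK.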

>> Check whether processes are blocked on I/O;

    # ps aux |sort -k8
    USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
    root 4169515 30.6 0.7 2975800 2872816 ? D 09:36 26:17 /usr/bin/python3.6 -s /bin/s3cmd ***
    root 82363 28.9 0.0 295004 193892 ? D 10:58 0:54 /usr/bin/python3.6 -s /bin/s3cmd ***
    root 7 0.0 0.0 0 0 ? D Jul21 4:34 [kworker/u96:0+ixgbe]
    root 3436655 0.0 0.0 0 0 ? I 00:00 0:01 [kworker/16:1-mm_percpu_wq]
    root 3445624 0.0 0.0 0 0 ? I 00:07 0:01 [kworker/26:1-events]
    root 3481033 0.0 0.0 0 0 ? I 00:34 0:01 [kworker/11:3-xfs-buf/dm-2

A process in state D (uninterruptible sleep) is blocked on I/O. Once the disk can service the I/O again, the process returns to a normal state on its own, so when you find processes in this state, first check whether the local disks, the back-end NAS, block storage, and so on are healthy.
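Rather than sorting on the STAT column and reading the result by eye, the D-state rows can be filtered directly. A minimal sketch, assuming three-column input as produced by `ps -eo pid,stat,comm`; `blocked_procs` is a hypothetical helper name.

```shell
# blocked_procs: read `ps -eo pid,stat,comm`-style text on stdin and print
# only the rows whose STAT starts with D (uninterruptible sleep).
blocked_procs() {
    awk 'NR > 1 && $2 ~ /^D/'
}

# Typical use on a live system (not run here):
#   ps -eo pid,stat,comm | blocked_procs
```

An empty result means no process was blocked at the moment of the snapshot; repeating the check a few times helps with short-lived stalls.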

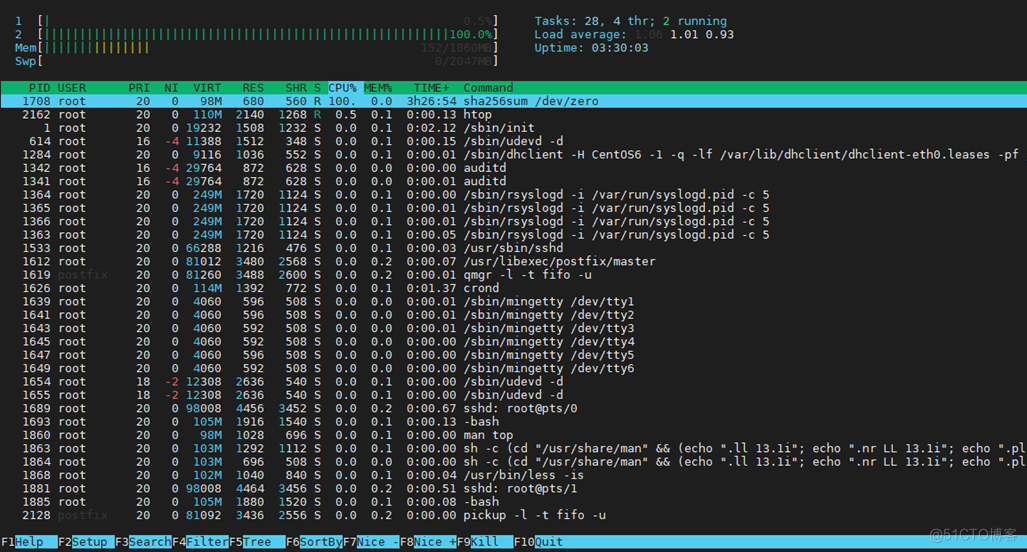

>> For intermittent CPU spikes, use an interactive tool such as top to find the processes with high usage;

    # top -M -d 1
    Tasks: 145 total, 2 running, 143 sleeping, 0 stopped, 0 zombie
    Cpu(s): 49.2%us, 0.8%sy, 0.0%ni, 49.9%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st
    Mem: 1860.512M total, 364.098M used, 1496.414M free, 9284.000k buffers
    Swap: 2047.996M total, 0.000k used, 2047.996M free, 207.379M cached
    PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
    1708 root 20 0 98.6m 680 560 R 100.0 0.0 56:50.53 sha256sum
    1900 root 20 0 15020 1356 1012 R 0.3 0.1 0:00.49 top
    1 root 20 0 19232 1508 1232 S 0.0 0.1 0:02.11 init
    2 root 20 0 0 0 0 S 0.0 0.0 0:00.02 kthreadd

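>> htop can also be used;

htop

F2 Setup: choose what is displayed

F3 Search for a process; the match is highlighted

F4 Filter the displayed processes

F5 Show processes as a tree

F6 Sort by a column of your choice

F7 Decrease the nice value, which raises the process priority (requires root)

F8 Increase the nice value, which lowers the process priority

F9 Send a signal to the process

F10 Quit the interactive interface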

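Ex2. Disk I/O suddenly rises?

Troubleshooting approach:

1) Caused by a data backup or cluster synchronization?

2) A degraded or rebuilding disk RAID array?

3) A program fault: the process doing the I/O has died or hung?

Reference commands for troubleshooting: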

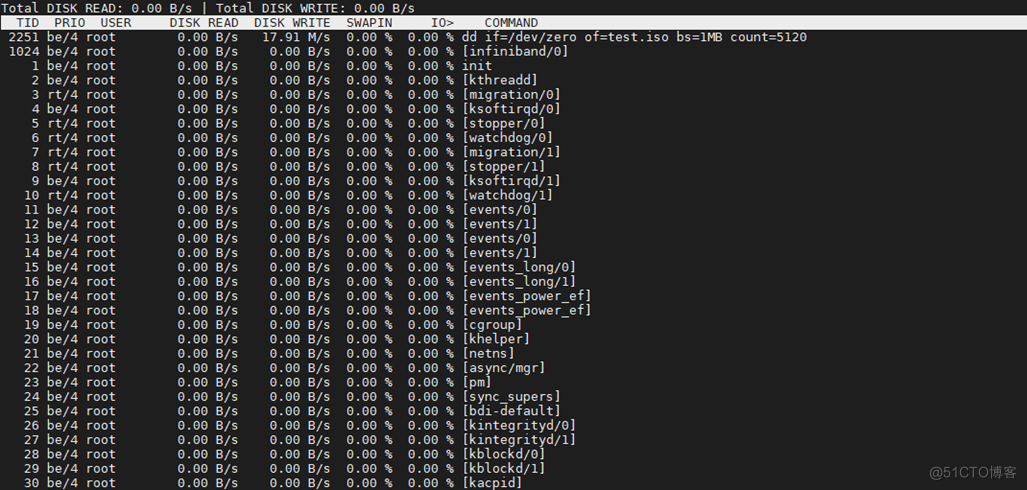

>> Use iotop to see the I/O used by each process and pinpoint the process;

    # iotop -d 1

>> Use sar to see reads and writes per disk device and pinpoint the device;

    # sar -d 1 1
    Linux 3.10.0-1127.19.1.el7.x86_64 (SIT-KVM03) 08/12/2021 _x86_64_ (48 CPU)
    05:31:59 PM DEV tps rd_sec/s wr_sec/s avgrq-sz avgqu-sz await svctm %util
    05:32:00 PM dev8-0 104.00 2304.00 3129.00 52.24 0.06 0.58 0.58 6.00
    05:32:00 PM dev253-0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    05:32:00 PM dev253-1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    05:32:00 PM dev253-2 104.00 2304.00 3129.00 52.24 0.06 0.58 0.58 6.00
    Average: DEV tps rd_sec/s wr_sec/s avgrq-sz avgqu-sz await svctm %util
    Average: dev8-0 104.00 2304.00 3129.00 52.24 0.06 0.58 0.58 6.00
    Average: dev253-0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    Average: dev253-1 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    Average: dev253-2 104.00 2304.00 3129.00 52.24 0.06 0.58 0.58 6.00

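tps: number of I/O requests issued to the physical device per second. Several logical requests can be merged into one device request, so the size of a single transfer is not fixed.

rd_sec/s: number of sectors read per second (a sector is 512 bytes).

wr_sec/s: number of sectors written per second.

avgrq-sz: average size, in sectors, of the requests issued to the device.

avgqu-sz: average length of the device's request queue.

await: average time, in milliseconds, from issuing a request to its completion, including the time spent waiting in the queue (1 second = 1000 milliseconds).

svctm: average time the device takes to service a request, excluding time spent in the queue. The sysstat documentation warns that this field is not reliable.

%util: percentage of elapsed time during which I/O requests were being issued to the device (device utilization, not a share of CPU). The closer to 100%, the closer the device is to saturation.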
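Because sar reports sectors rather than bytes, a quick conversion helps when comparing against other tools. The sketch below assumes 512-byte sectors, as sar does; the helper names `sectors_to_kib` and `avg_request_sectors` are hypothetical, and the input values are taken from the dev8-0 row above.

```shell
# sectors_to_kib SECTORS_PER_SEC: convert a sar sector rate to KiB/s
# (512-byte sectors).
sectors_to_kib() {
    awk -v s="$1" 'BEGIN { printf "%.2f\n", s * 512 / 1024 }'
}

# avg_request_sectors RD_SEC WR_SEC TPS: average request size in sectors,
# computed as total sectors per second divided by requests per second.
avg_request_sectors() {
    awk -v rd="$1" -v wr="$2" -v tps="$3" 'BEGIN { printf "%.2f\n", (rd + wr) / tps }'
}

sectors_to_kib 2304                  # read rate of dev8-0
sectors_to_kib 3129                  # write rate of dev8-0
avg_request_sectors 2304 3129 104    # compare with the avgrq-sz column
```

The three calls print 1152.00, 1564.50, and 52.24; the last value matches the avgrq-sz column, which shows how that field relates to tps and the two sector rates.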

>> Use racadm to check the RAID status and pinpoint the RAID array;

Check the server's RAID status:

    # racadm -r 10.2**.2**.84 -u root -p24**** storage get pdisks:Disk.Bay.3:Enclosure.Internal.0-1:RAID.Integrated.1-1
    /opt/dell/srvadmin/sbin/racadm: line 13: printf: 0xError: invalid hex number
    Security Alert: Certificate is invalid - self signed certificate
    Continuing execution. Use -S option for racadm to stop execution on certificate-related errors.
    Disk.Bay.3:Enclosure.Internal.0-1:RAID.Integrated.1-1
    Status = Ok
    DeviceDescription = Disk 3 in Backplane 1 of Integrated RAID Controller 1
    RollupStatus = Ok
    Name = Physical Disk 0:1:3
    State = Online
    OperationState = Rebuilding
    Progress = 37 %
    PowerStatus = Spun-Up
    Size = 1117.25 GB
    FailurePredicted = NO
    RemainingRatedWriteEndurance = Not Applicable
    SecurityStatus = Not Capable
    BusProtocol = SAS
    MediaType = HDD
    UsedRaidDiskSpace = 1117.25 GB
    AvailableRaidDiskSpace = 1117.25 GB
    Hotspare = NO
    Manufacturer = HGST
    ProductId = HUC101212CSS600
    Revision = U5E0
    SerialNumber = L0GDRE1G
    PartNumber = TH0T6TWN1256753J8JX1A00
    NegotiatedSpeed = 6.0 Gb/s
    ManufacturedDay = 5
    ManufacturedWeek = 12
    ManufacturedYear = 2015
    SasAddress = 0x5000CCA072172279
    FormFactor = 2.5 Inch
    RaidNominalMediumRotationRate = 10000
    T10PICapability = Not Capable
    BlockSizeInBytes = 512
    MaxCapableSpeed = 6 Gb/s
    SelfEncryptingDriveCapability = Not Capable

>> If the storage is a Ceph distributed storage cluster, use ceph -s to check the Ceph cluster status;

    root@debian10-02:~# ps aux |grep qemu
    root 2399333 7.7 1.6 3548736 536636 ? Sl May12 10644:01 /usr/bin/kvm -id 100 -name vm-ex-001 -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/100.qmp,server,nowait -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5 -mon chardev=qmp-event,mode=control -pidfile /var/run/qemu-server/100.pid -daemonize -smbios type=1,uuid=8c12b72e-8f5c-4c88-a9d2-f485bff42115 -smp 1,sockets=1,cores=1,maxcpus=1 -nodefaults -boot menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg -vnc unix:/var/run/qemu-server/100.vnc,password -cpu kvm64,enforce,+kvm_pv_eoi,+kvm_pv_unhalt,+lahf_lm,+sep -m 2048 -device pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e -device pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f -device vmgenid,guid=7134fe1d-17d5-4942-95df-c181a284020b -device piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2 -device usb-tablet,id=tablet,bus=uhci.0,port=1 -device VGA,id=vga,bus=pci.0,addr=0x2 -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3 -drive if=none,id=drive-ide2,media=cdrom,aio=threads -device ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2,bootindex=101 -device virtio-scsi-pci,id=scsihw0,bus=pci.0,addr=0x5 -drive file=rbd:compute/vm-100-disk-0:conf=/etc/pve/ceph.conf:id=admin:keyring=/etc/pve/priv/ceph/compute.keyring,if=none,id=drive-scsi0,format=raw,cache=none,aio=native,detect-zeroes=on -device scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,bootindex=100 -netdev type=tap,id=net0,ifname=tap100i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on -device virtio-net-pci,mac=62:A6:C2:6E:50:DD,netdev=net0,bus=pci.0,addr=0x12,id=net0,bootindex=102 -machine type=pc-i440fx-5.2+pve0 -incoming unix:/run/qemu-server/100.migrate -S

View the Ceph cluster status information:

    # ceph -s
    cluster e0954af1-2185-4a95-ae24-0e61ee658718
    health HEALTH_WARN
        1 pgs backfill
        3 pgs backfill_toofull
        212 pgs degraded
        3 pgs recovering
        163 pgs recovery_wait
        1 pgs stuck degraded
        213 pgs stuck unclean
        1 pgs stuck undersized
        3 pgs undersized
        recovery 5387/18168697 objects degraded (0.030%)
        recovery 16041/18168697 objects misplaced (0.088%)
        2 near full osd(s)
        noout,noscrub,nodeep-scrub flag(s) set
    monmap e4: 3 mons at {node-27=10.2**.6.11:6789/0,node-32=10.2**.6.17:6789/0,node-45=10.2**.6.12:6789/0}
        election epoch 256, quorum 0,1,2 node-27,node-45,node-32
    osdmap e159710: 213 osds: 213 up, 213 in; 5 remapped pgs
        flags noout,noscrub,nodeep-scrub
    pgmap v111877299: 16448 pgs, 6 pools, 39199 GB data, 5912 kobjects
        114 TB used, 96989 GB / 209 TB avail
        5387/18168697 objects degraded (0.030%)
        16041/18168697 objects misplaced (0.088%)
        16235 active+clean
        163 active+recovery_wait+degraded
        42 active+degraded
        3 active+recovering+degraded
        1 active+remapped+backfill_toofull
        1 active+undersized+degraded+remapped+backfill_toofull
        1 active+degraded+remapped+backfill_toofull
        1 active+undersized+degraded+remapped+wait_backfill
        1 active+undersized+degraded+remapped
    recovery io 372 MB/s, 51 objects/s
    client io 875 kB/s rd, 38480 kB/s wr, 6972 op/s

If the server is a KVM host and everything running on it is a virtual machine process, how do you quickly find which virtual machine is responsible for the high disk I/O?

>> The virt-top virtualization tool can pinpoint it quickly;

    # virt-top -3 -d 1
    virt-top 09:55:28 - x86_64 48/48CPU 2900MHz 386890MB
    44 domains, 42 active, 42 running, 0 sleeping, 0 paused, 2 inactive D:0 O:0 X:0
    CPU: 7.9% Mem: 647513 MB (647513 MB by guests)
    ID S RDBY WRBY RDRQ WRRQ DOMAIN DEVICE
    4 R 436K 216K 14 18 Dbmonitor--1 vda
    83 R 40K 172K 7 24 SIT-201-128- vda
    80 R 24K 389K 6 30 SIT-201-128- vda
    85 R 80K 135K 5 13 SIT-201-128- vda
    80 R 8192 64K 2 5 SIT-201-128- vdb
    119 R 0 0 0 0 ubuntu18.04- hda
    4 R 0 0 0 0 Dbmonitor--1 hdc

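Ex3. Network I/O suddenly rises?

Troubleshooting approach:

1) Caused by a data backup or cluster synchronization?

2) Caused by user uploads, downloads, or bulk updates?

3) Caused by increased user traffic or by an attack?

Reference commands for troubleshooting: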

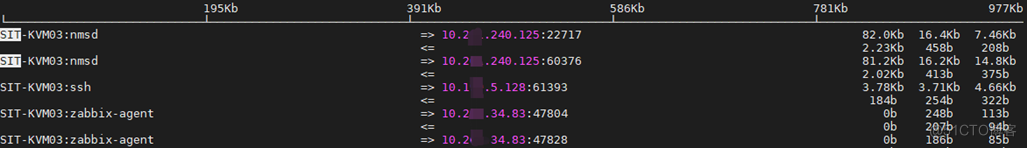

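>> Use iftop to watch the traffic on a given interface; with -P it shows ports, which identifies the connections (and, from the port, the service) using the bandwidth;

    # iftop -i br129 -P

If the traffic is on a virtual machine's interface, how do you quickly find the virtual machine?

>> Use sar to see the traffic on every interface in the system;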

    ~]# sar -n DEV 1 1
    Linux 3.10.0-1127.19.1.el7.x86_64 (SIT-KVM03) 08/12/2021 _x86_64_ (48 CPU)
    05:05:08 PM IFACE rxpck/s txpck/s rxkB/s txkB/s rxcmp/s txcmp/s rxmcst/s
    05:05:09 PM vnet7 11.00 17.00 1.09 1.28 0.00 0.00 0.00
    05:05:09 PM vnet12 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet31 20.00 25.00 6.09 6.58 0.00 0.00 0.00
    05:05:09 PM vnet24 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet43 2.00 6.00 0.19 0.43 0.00 0.00 0.00
    05:05:09 PM vnet17 43.00 52.00 4.07 4.51 0.00 0.00 0.00
    05:05:09 PM vnet36 8.00 11.00 0.63 0.84 0.00 0.00 0.00
    05:05:09 PM vnet4 8.00 12.00 9.68 9.88 0.00 0.00 0.00
    05:05:09 PM vnet29 25.00 39.00 5.72 4.42 0.00 0.00 0.00
    05:05:09 PM vnet20 125.00 130.00 37.64 14.04 0.00 0.00 0.00
    05:05:09 PM vnet9 7.00 11.00 1.44 0.87 0.00 0.00 0.00
    05:05:09 PM vnet13 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet32 32.00 35.00 9.86 9.57 0.00 0.00 0.00
    05:05:09 PM vnet1 0.00 7.00 0.00 0.41 0.00 0.00 0.00
    05:05:09 PM vnet25 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet18 27.00 32.00 16.95 2.81 0.00 0.00 0.00
    05:05:09 PM vnet37 1.00 8.00 0.11 0.52 0.00 0.00 0.00
    05:05:09 PM vnet21 101.00 107.00 12.29 12.62 0.00 0.00 0.00
    05:05:09 PM vnet40 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet14 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM br129 13.00 5.00 0.61 0.30 0.00 0.00 0.00
    05:05:09 PM vnet33 121.00 141.00 192.42 234.52 0.00 0.00 0.00
    05:05:09 PM vnet26 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet3 0.00 7.00 0.00 0.41 0.00 0.00 0.00
    05:05:09 PM vnet19 45.00 41.00 4.14 42.51 0.00 0.00 0.00
    05:05:09 PM vnet38 8.00 15.00 0.48 0.97 0.00 0.00 0.00
    05:05:09 PM lo 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    05:05:09 PM vnet10 0.00 7.00 0.00 0.41 0.00 0.00 0.00
    05:05:09 PM vnet8 2.00 8.00 0.30 0.51 0.00 0.00 0.00
    05:05:09 PM virbr0-nic 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    05:05:09 PM virbr0 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    05:05:09 PM vnet22 105.00 108.00 12.32 12.38 0.00 0.00 0.00
    05:05:09 PM em2.228 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM em2.229 0.00 7.00 0.00 0.41 0.00 0.00 0.00
    05:05:09 PM em1.228 7949.00 6462.00 1588.54 3946.55 0.00 0.00 1.00
    05:05:09 PM em1.229 35.00 31.00 2.00 3.24 0.00 0.00 3.00
    05:05:09 PM vnet41 1.00 5.00 0.10 0.33 0.00 0.00 0.00
    05:05:09 PM vnet0 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet15 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet34 178.00 152.00 271.39 335.86 0.00 0.00 0.00
    05:05:09 PM vnet27 124.00 128.00 13.06 13.95 0.00 0.00 0.00
    05:05:09 PM vnet5 0.00 7.00 0.00 0.41 0.00 0.00 0.00
    05:05:09 PM vnet39 2.00 10.00 0.15 0.63 0.00 0.00 0.00
    05:05:09 PM vnet11 0.00 7.00 0.00 0.41 0.00 0.00 0.00
    05:05:09 PM em3 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    05:05:09 PM em1 9305.00 7756.00 2011.41 4694.15 0.00 0.00 32.00
    05:05:09 PM em4 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    05:05:09 PM em2 65.00 15.00 4.42 0.99 0.00 0.00 30.00
    05:05:09 PM vnet30 22.00 28.00 6.66 7.08 0.00 0.00 0.00
    05:05:09 PM br128 4.00 0.00 0.18 0.00 0.00 0.00 0.00
    05:05:09 PM vnet23 170.00 188.00 180.94 75.09 0.00 0.00 0.00
    05:05:09 PM vnet42 3.00 7.00 0.28 0.53 0.00 0.00 0.00
    05:05:09 PM vnet16 6302.00 7769.00 3914.88 1669.00 0.00 0.00 0.00
    05:05:09 PM vnet2 0.00 4.00 0.00 0.24 0.00 0.00 0.00
    05:05:09 PM vnet35 4.00 8.00 0.37 0.62 0.00 0.00 0.00
    05:05:09 PM vnet28 20.00 35.00 5.89 4.51 0.00 0.00 0.00

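IFACE: name of the network interface

rxpck/s: packets received per second

txpck/s: packets transmitted per second

rxkB/s: kilobytes received per second (sar counts 1 kB as 1024 bytes, that is 8192 bits)

txkB/s: kilobytes transmitted per second

rxcmp/s: compressed packets received per second

txcmp/s: compressed packets transmitted per second

rxmcst/s: multicast packets received per second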
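Link speeds are quoted in bits per second, so the kB/s columns usually need converting before they can be compared with a NIC's capacity. A small sketch, assuming sar's kB means 1024 bytes; `kb_to_mbit` is a hypothetical helper name and the input is the rxkB/s value of vnet16 from the capture above.

```shell
# kb_to_mbit KB_PER_SEC: convert a sar kB/s figure (1 kB = 1024 bytes)
# to megabits per second (1 Mbit = 1,000,000 bits).
kb_to_mbit() {
    awk -v kb="$1" 'BEGIN { printf "%.2f\n", kb * 1024 * 8 / 1000000 }'
}

kb_to_mbit 3914.88    # receive rate of vnet16
```

This prints 32.07, so vnet16 was receiving roughly 32 Mbit/s during the sample.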

    [root@rhel6 ~]# sar -n EDEV 1
    Linux 2.6.32-754.el6.x86_64 (rhel6) 08/23/2021 _x86_64_ (2 CPU)
    09:37:25 PM IFACE rxerr/s txerr/s coll/s rxdrop/s txdrop/s txcarr/s rxfram/s rxfifo/s txfifo/s
    09:37:26 PM lo 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:26 PM eth0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:26 PM pan0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:26 PM IFACE rxerr/s txerr/s coll/s rxdrop/s txdrop/s txcarr/s rxfram/s rxfifo/s txfifo/s
    09:37:27 PM lo 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:27 PM eth0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:27 PM pan0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:27 PM IFACE rxerr/s txerr/s coll/s rxdrop/s txdrop/s txcarr/s rxfram/s rxfifo/s txfifo/s
    09:37:28 PM lo 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:28 PM eth0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
    09:37:28 PM pan0 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

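rxerr/s: bad packets received per second

txerr/s: errors per second while transmitting packets

coll/s: collisions per second

rxdrop/s: received packets dropped per second because the buffers were full

txdrop/s: transmitted packets dropped per second because the buffers were full

txcarr/s: carrier errors per second while transmitting packets

rxfram/s: frame alignment errors per second on received packets

rxfifo/s: FIFO overrun errors per second on received packets

txfifo/s: FIFO overrun errors per second on transmitted packets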

>> Find the KVM virtual machine that a vnet interface belongs to;

    # for i in $(virsh list|grep running|awk '{print $2}'); do echo $i;virsh domiflist $i; done
    DHCP-128-129
    Interface Type Source Model MAC
    -------------------------------------------------------
    vnet0 bridge br128 virtio 00:50:56:91:0c:67
    vnet1 bridge br129 virtio 00:50:56:91:2e:8f
    SIT-201-129-07-12-20-01
    Interface Type Source Model MAC
    -------------------------------------------------------
    vnet2 bridge br128 virtio 00:50:56:91:14:b9
    Dbmonitor--10.201.128.241
    Interface Type Source Model MAC
    -------------------------------------------------------
    vnet4 bridge br128 virtio 00:50:56:91:04:11
    SIT-201-129-14-07-20-03
    Interface Type Source Model MAC
    -------------------------------------------------------
    vnet9 bridge br129 virtio 00:50:56:91:04:cf
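With dozens of domains, scanning that listing by eye is slow. The sketch below filters the same output for one interface; `vm_for_iface` is a hypothetical helper name, and it assumes domain names contain no spaces and that each domain name is printed on its own line before its interface table, as in the loop above.

```shell
# vm_for_iface IFACE: read the output of the loop above on stdin and print
# the name of the domain that owns IFACE.
vm_for_iface() {
    awk -v want="$1" '
        /^-+$/     { next }               # skip the dashed separator line
        NF == 1    { domain = $1; next }  # a lone word is a domain name
        $1 == want { print domain }       # interface row: report its owner
    '
}

# Typical use on a KVM host (not run here):
#   for i in $(virsh list | grep running | awk '{print $2}'); do
#       echo "$i"; virsh domiflist "$i"
#   done | vm_for_iface vnet16
```

Applied to the capture above, looking up vnet4 yields Dbmonitor--10.201.128.241.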

>> The virt-top command can also be used;

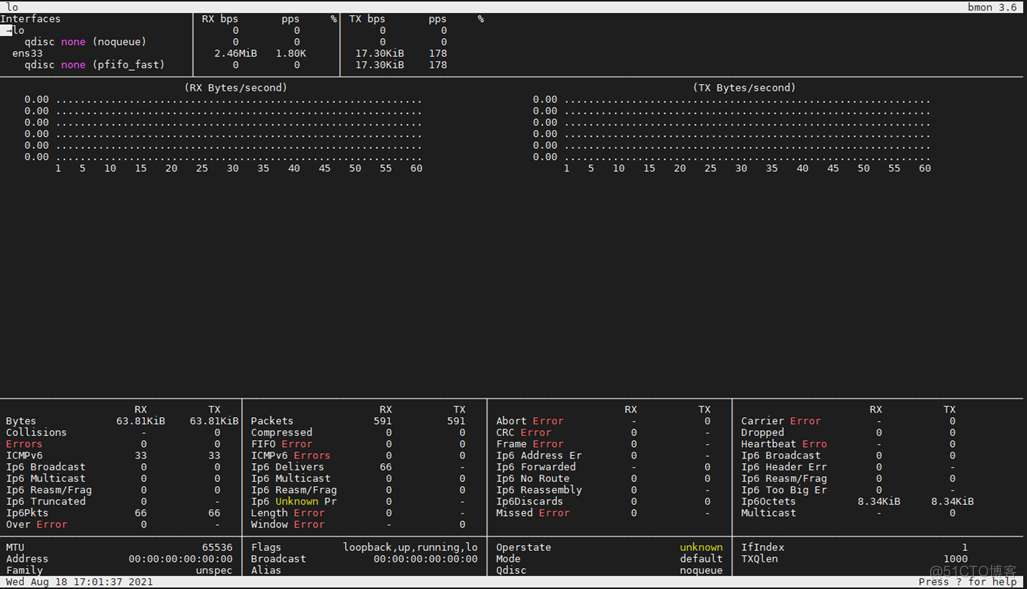

    # virt-top -2 -d 1
    CPU: 7.2% Mem: 647513 MB (647513 MB by guests)
    ID S RXBY TXBY RXPK TXPK DOMAIN INTERFACE
    116 R 83K 9237 59 48 VM_10_201_12 vnet43
    90 R 72K 33K 414 396 SIT-201-128- vnet23
    70 R 63K 61K 676 651 SIT-201-128- vnet20
    71 R 55K 83K 562 537 SIT-201-128- vnet21
    73 R 50K 53K 495 481 SIT-201-128- vnet22
    69 R 45K 20K 169 187 SIT-201-128- vnet19
    89 R 34K 6231 62 48 SIT-201-129- vnet8
    79 R 31K 36K 354 314 SIT-201-128- vnet27
    72 R 31K 24K 287 249 SIT-201-128- vnet17
    92 R 26K 41K 223 211 SIT-201-128- vnet34

>> Check the working status of the network interface;

Pausing and terminating processes

Ex1. A process is in an abnormal state: how do you pause, terminate, or resume it?

>> Use kill/killall -19 (SIGSTOP) to pause a process;

    ~]# service nginx status
    nginx (pid 1884) is running...
    [root@CentOS6 yum.repos.d]# ps aux |grep nginx
    root 1884 0.0 0.1 108936 2300 ? Ss 19:02 0:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
    nginx 1885 0.0 0.1 109360 2924 ? S 19:02 0:00 nginx: worker process
    nginx 1886 0.0 0.1 109360 3220 ? S 19:02 0:00 nginx: worker process
    root 1987 0.0 0.0 103320 888 pts/0 S+ 19:08 0:00 grep nginx
    ~]# netstat -tlunp |grep :80
    tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1884/nginx
    tcp 0 0 :::80 :::* LISTEN 1884/nginx
    ~]# killall -19 nginx
    ~]# service nginx status
    nginx (pid 1884) is running...
    ~]# ps aux |grep nginx
    root 1884 0.0 0.1 108936 2300 ? Ts 19:02 0:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
    nginx 1885 0.0 0.1 109360 2924 ? T 19:02 0:00 nginx: worker process
    nginx 1886 0.0 0.1 109360 3220 ? T 19:02 0:00 nginx: worker process
    root 2004 0.0 0.0 103320 884 pts/0 S+ 19:10 0:00 grep nginx
    ~]# netstat -tlunp |grep :80
    tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1884/nginx
    tcp 0 0 :::80 :::* LISTEN 1884/nginx

>> Use kill/killall -18 (SIGCONT) to resume a paused process;

    ~]# killall -18 nginx
    ~]# ps aux |grep nginx
    root 1884 0.0 0.1 108936 2300 ? Ss 19:02 0:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
    nginx 1885 0.0 0.1 109360 2924 ? S 19:02 0:00 nginx: worker process
    nginx 1886 0.0 0.1 109360 3220 ? S 19:02 0:00 nginx: worker process
    root 2009 0.0 0.0 103320 888 pts/0 S+ 19:14 0:00 grep nginx

>> To terminate a process normally, use kill/killall (the default signal is 15, SIGTERM);

    ~]# ps aux |grep nginx
    root 1884 0.0 0.1 108936 2300 ? Ss 19:02 0:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
    nginx 1885 0.0 0.1 109360 2924 ? S 19:02 0:00 nginx: worker process
    nginx 1886 0.0 0.1 109360 3220 ? S 19:02 0:00 nginx: worker process
    root 2032 0.0 0.0 103320 888 pts/0 S+ 19:18 0:00 grep nginx
    ~]# killall nginx
    ~]# ps aux |grep nginx
    root 2035 0.0 0.0 103320 888 pts/0 S+ 19:19 0:00 grep nginx
    ~]# netstat -tlunp |grep :80

>> To force a process to terminate, use kill/killall -9 (SIGKILL);

    ~]# ps aux |grep nginx
    root 2065 0.0 0.1 108936 2168 ? Ss 19:23 0:00 nginx: master process /usr/sbin/nginx -c /etc/nginx/nginx.conf
    nginx 2066 0.0 0.1 109360 2848 ? S 19:23 0:00 nginx: worker process
    nginx 2067 0.0 0.1 109360 2896 ? S 19:23 0:00 nginx: worker process
    root 2070 0.0 0.0 103320 888 pts/0 S+ 19:24 0:00 grep nginx
    ~]# killall -9 nginx
    ~]# ps aux |grep nginx
    root 2073 0.0 0.0 103320 888 pts/0 S+ 19:24 0:00 grep nginx

>> To make a process reload, use kill -1 (SIGHUP);

    ~]# ps aux |grep sshd
    root 1534 0.0 0.0 66288 1212 ? Ss 17:38 0:00 /usr/sbin/sshd
    root 1659 0.0 0.2 98008 4452 ? Ss 17:39 0:01 sshd: root@pts/0
    root 2636 0.0 0.0 103320 884 pts/0 S+ 21:29 0:00 grep sshd
    ~]# kill -1 1534
    ~]# ps aux |grep sshd
    root 1659 0.0 0.2 98008 4452 ? Ss 17:39 0:01 sshd: root@pts/0
    root 2637 0.0 0.0 66288 1188 ? Ss 21:29 0:00 /usr/sbin/sshd
    root 2639 0.0 0.0 103320 884 pts/0 S+ 21:29 0:00 grep sshd

sshd handles SIGHUP by re-executing itself, so the listening daemon comes back under a new PID (1534 becomes 2637 here) while the existing session (PID 1659) is left untouched.

>> List the signals the system supports;

    [root@CentOS6 yum.repos.d]# kill -l
     1) SIGHUP 2) SIGINT 3) SIGQUIT 4) SIGILL 5) SIGTRAP
     6) SIGABRT 7) SIGBUS 8) SIGFPE 9) SIGKILL 10) SIGUSR1
    11) SIGSEGV 12) SIGUSR2 13) SIGPIPE 14) SIGALRM 15) SIGTERM
    16) SIGSTKFLT 17) SIGCHLD 18) SIGCONT 19) SIGSTOP 20) SIGTSTP
    21) SIGTTIN 22) SIGTTOU 23) SIGURG 24) SIGXCPU 25) SIGXFSZ
    26) SIGVTALRM 27) SIGPROF 28) SIGWINCH 29) SIGIO 30) SIGPWR
    31) SIGSYS 34) SIGRTMIN 35) SIGRTMIN+1 36) SIGRTMIN+2 37) SIGRTMIN+3
    38) SIGRTMIN+4 39) SIGRTMIN+5 40) SIGRTMIN+6 41) SIGRTMIN+7 42) SIGRTMIN+8
    43) SIGRTMIN+9 44) SIGRTMIN+10 45) SIGRTMIN+11 46) SIGRTMIN+12 47) SIGRTMIN+13
    48) SIGRTMIN+14 49) SIGRTMIN+15 50) SIGRTMAX-14 51) SIGRTMAX-13 52) SIGRTMAX-12
    53) SIGRTMAX-11 54) SIGRTMAX-10 55) SIGRTMAX-9 56) SIGRTMAX-8 57) SIGRTMAX-7
    58) SIGRTMAX-6 59) SIGRTMAX-5 60) SIGRTMAX-4 61) SIGRTMAX-3 62) SIGRTMAX-2
    63) SIGRTMAX-1 64) SIGRTMAX
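Signal numbers such as 18 and 19 are what x86 Linux uses, but they differ on some architectures and on other Unix systems, whereas the names are stable. The sketch below looks up the names behind the numbers used in this section; `sig_name` is a hypothetical wrapper around the shell's own `kill -l`.

```shell
# sig_name NUM: print the signal name for a number, as reported by kill -l.
sig_name() {
    kill -l "$1"
}

# The numbers used in the examples above.
for n in 1 9 15 18 19; do
    printf '%s -> %s\n' "$n" "$(sig_name "$n")"
done

# Name-based equivalents of the earlier commands (not run here):
#   killall -STOP nginx    # pause, same as -19 on x86 Linux
#   killall -CONT nginx    # resume, same as -18 on x86 Linux
#   kill -HUP 1534         # reload, same as -1
```

Using the names in scripts avoids sending the wrong signal when the same script runs on a platform with a different numbering.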

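Process resource management

Ex1. How do you limit the system resources that a user or process can use?

Approach:

1) Set limits per user?

2) Set limits per service process?

>> Use limits (pam_limits entries, as found in /etc/security/limits.conf or in a file under /etc/security/limits.d/) to restrict a user's resources;

Resources that can be limited: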

    # - core - limits the core file size (KB)
    # - data - max data size (KB)
    # - fsize - maximum filesize (KB)
    # - memlock - max locked-in-memory address space (KB)
    # - nofile - max number of open file descriptors
    # - rss - max resident set size (KB)
    # - stack - max stack size (KB)
    # - cpu - max CPU time (MIN)
    # - nproc - max number of processes
    # - as - address space limit (KB)
    # - maxlogins - max number of logins for this user
    # - maxsyslogins - max number of logins on the system
    # - priority - the priority to run user process with
    # - locks - max number of file locks the user can hold
    # - sigpending - max number of pending signals
    # - msgqueue - max memory used by POSIX message queues (bytes)
    # - nice - max nice priority allowed to raise to values: [-20, 19]
    # - rtprio - max realtime priority

    ~]# cat 13-trump.conf
    trump hard nofile 50
    trump soft nproc 10
    ~]$ md5sum /dev/zero &
    -bash: fork: retry: Resource temporarily unavailable
    -bash: fork: retry: Resource temporarily unavailable
    -bash: fork: retry: Resource temporarily unavailable
    -bash: fork: retry: Resource temporarily unavailable
    ^C^H^H^H-bash: fork: Resource temporarily unavailable
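The entries above take effect at login through PAM. The same kind of limit can be tried out for a single command with the shell's `ulimit` builtin, which is a convenient way to see how a program behaves before committing a value to a configuration file. This is a sketch only: `with_nofile_limit` is a hypothetical helper, and the value 64 is arbitrary.

```shell
# with_nofile_limit N CMD...: run CMD in a subshell whose soft limit on open
# file descriptors is N. The calling shell's own limit is not changed.
with_nofile_limit() (
    ulimit -S -n "$1" || exit 1
    shift
    "$@"
)

# The child shell reports the limit it inherited.
with_nofile_limit 64 sh -c 'ulimit -S -n'
```

Because the function body runs in a subshell, the lowered limit disappears when the command exits; the example prints 64 as long as the hard limit is at least that high.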

>> Use the init (Upstart) job configuration file to limit the resources of a specific service;

    node1:/etc/init# cat radosgw.conf
    description "Ceph radosgw"
    start on radosgw
    stop on runlevel [!2345] or stopping radosgw-all
    respawn
    respawn limit 5 30
    limit nofile 8096 65536
    #limit nofile 1024 1024
    pre-start script
        set -e
        test -x /usr/bin/radosgw || { stop; exit 0; }
        test -d "/var/lib/ceph/radosgw/${cluster:-ceph}-$id" || { stop; exit 0; }
        install -d -m0755 /var/run/ceph
    end script
    instance ${cluster:-ceph}/$id
    export cluster
    export id
    # this breaks oneiric
    #usage "cluster = name of cluster (defaults to 'ceph'); id = mds instance id"
    exec /usr/bin/radosgw --cluster="${cluster:-ceph}" --id "$id" -f

>> View a process's resource limits;

    node1:/etc/init# ps aux |grep radosgw
    root 11871 0.0 0.0 10480 2040 pts/0 S+ 14:46 0:00 grep --color=auto radosgw
    root 28866 0.1 0.8 15969024 73460 ? Ssl Aug03 25:55 /usr/bin/radosgw --cluster=ceph --id rgw.node1 -f
    node1:/etc/init# cat /proc/28866/limits
    Limit                     Soft Limit   Hard Limit   Units
    Max cpu time              unlimited    unlimited    seconds
    Max file size             unlimited    unlimited    bytes
    Max data size             unlimited    unlimited    bytes
    Max stack size            8388608      unlimited    bytes
    Max core file size        0            unlimited    bytes
    Max resident set          unlimited    unlimited    bytes
    Max processes             31849        31849        processes
    Max open files            8096         65536        files
    Max locked memory         65536        65536        bytes
    Max address space         unlimited    unlimited    bytes
    Max file locks            unlimited    unlimited    locks
    Max pending signals       31849        31849        signals
    Max msgqueue size         819200       819200       bytes
    Max nice priority         0            0
    Max realtime priority     0            0
    Max realtime timeout      unlimited    unlimited    us
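When only one row of that table matters, for example to confirm that the `limit nofile 8096 65536` setting reached the running process, it can be extracted directly. A minimal sketch; `open_files_limit` is a hypothetical helper that takes the path of a limits file in the `/proc/<pid>/limits` format.

```shell
# open_files_limit FILE: print the soft and hard "Max open files" values
# from a file in /proc/<pid>/limits format.
open_files_limit() {
    awk '/^Max open files/ { print $4, $5 }' "$1"
}

# Typical use on a live system (not run here):
#   open_files_limit /proc/28866/limits
```

For the radosgw process shown above this would report 8096 65536, matching the values in its job configuration.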

>> Use cgroups to limit the resources of users or service processes;

    # cat /etc/cgconfig.conf
    mount {
        cpuset = /cgroup/cpuset;
        cpu = /cgroup/cpu;
        cpuacct = /cgroup/cpuacct;
        memory = /cgroup/memory;
        devices = /cgroup/devices;
        freezer = /cgroup/freezer;
        net_cls = /cgroup/net_cls;
        blkio = /cgroup/blkio;
    }
    group bio-181 {
        blkio {
            blkio.throttle.write_iops_device="";
            blkio.throttle.read_iops_device="";
            blkio.throttle.write_bps_device="253:0 31457280";
            blkio.throttle.read_bps_device="253:0 31457280";
            blkio.reset_stats="";
            blkio.weight="500";
            blkio.weight_device="";
        }
    }
    # cat /etc/cgrules.conf
    # /etc/cgrules.conf
    #The format of this file is described in cgrules.conf(5)
    #manual page.
    #
    # Example:
    #<user> <controllers> <destination>
    #@student cpu,memory usergroup/student/
    #peter cpu test1/
    #% memory test2/
    # End of file
    * blkio bio-181/
    ## rule syntax for limiting a specific process
    blsa:dd blkio bio-181/

Test comparison (the throughput of the second run is close to the 31457280 bytes/s write limit configured above):

    tmp]# dd if=/dev/zero of=test bs=512M count=10 conv=fdatasync oflag=direct
    10+0 records in
    10+0 records out
    5368709120 bytes (5.4 GB) copied, 76.8344 s, 69.9 MB/s
    tmp]# dd if=/dev/zero of=test bs=512M count=10 conv=fdatasync oflag=direct
    10+0 records in
    10+0 records out
    5368709120 bytes (5.4 GB) copied, 171.454 s, 31.3 MB/s
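The throttle value in cgconfig.conf is given in bytes per second, while dd reports decimal megabytes per second, so a conversion makes the comparison explicit. A short sketch; `bps_to_units` is a hypothetical helper name.

```shell
# bps_to_units BYTES_PER_SEC: show a byte rate in MiB/s (2^20 bytes) and in
# MB/s (10^6 bytes, the unit dd prints).
bps_to_units() {
    awk -v b="$1" 'BEGIN { printf "%.2f MiB/s = %.2f MB/s\n", b / 1048576, b / 1000000 }'
}

bps_to_units 31457280    # the write_bps_device value from cgconfig.conf
```

The output is `30.00 MiB/s = 31.46 MB/s`, which lines up with the 31.3 MB/s that dd measured in the throttled run.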

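Process priority management

Ex1. A service responds slowly, or its process is killed by the OOM killer?

Troubleshooting approach:

1) Check the state of the service process;

2) Check the process's OOM score;

>> View the running state and priority of processes;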

    28047]# ps axo pid,comm,rtprio,policy,nice,stat |sort -k5
    28085 ps - TS 0 R+
    1013 bluetooth - TS 0 S
    101 kdmremove - TS 0 S
    102 kstriped - TS 0 S
    11 events/0 - TS 0 S
    1215 jbd2/sda1-8 - TS 0 S
    1216 ext4-dio-unwrit - TS 0 S
    12 events/1 - TS 0 S
    1321 kauditd - TS 0 S
    136 ttm_swap - TS 0 S
    15 events_long/0 - TS 0 S
    2244 pickup - TS 0 S
    2245 qmgr - TS 0 S
    22 async/mgr - TS 0 S
    23 pm - TS 0 S
    24 sync_supers - TS 0 S
    25 bdi-default - TS 0 S
    26 kintegrityd/0 - TS 0 S
    27793 cqueue - TS 0 S
    27993 httpd - TS 0 S
    27994 httpd - TS 0 S
    27995 httpd - TS 0 S
    27996 httpd - TS 0 S
    27997 httpd - TS 0 S
    27998 httpd - TS 0 S
    68 deferwq - TS 0 S
    806 vmmemctl - TS 0 S
    9 ksoftirqd/1 - TS 0 S
    28086 sort - TS 0 S+
    1755 rsyslogd - TS 0 Sl
    27518 console-kit-dae - TS 0 Sl
    1789 irqbalance - TS 0 Ss
    1807 rpcbind - TS 0 Ss
    1864 rpc.statd - TS 0 Ss
    1907 cupsd - TS 0 Ss
    1908 wpa_supplicant - TS 0 Ss
    1940 acpid - TS 0 Ss
    1 init - TS 0 Ss
    2122 sshd - TS 0 Ss
    2159 ntpd - TS 0 Ss
    2241 master - TS 0 Ss
    2255 abrtd - TS 0 Ss
    2282 crond - TS 0 Ss
    2297 atd - TS 0 Ss
    2314 rhsmcertd - TS 0 Ss
    2331 certmonger - TS 0 Ss
    2396 sshd - TS 0 Ss
    2402 bash - TS 0 Ss
    2423 login - TS 0 Ss
    27882 cgrulesengd - TS 0 Ss
    27990 httpd - TS 0 Ss
    28047 vsftpd - TS 0 Ss
    2379 mingetty - TS 0 Ss+
    2381 mingetty - TS 0 Ss+
    2383 mingetty - TS 0 Ss+
    2385 mingetty - TS 0 Ss+
    2387 mingetty - TS 0 Ss+
    27585 bash - TS 0 Ss+
    1827 dbus-daemon - TS 0 Ssl
    1841 NetworkManager - TS 0 Ssl
    1952 hald - TS 0 Ssl
    2064 automount - TS 0 Ssl
    2180 krfcommd - TS -10 S<
    51 khugepaged - TS 19 SN
    2394 udevd - TS -2 S<
    2395 udevd - TS -2 S<
    2100 bluetoothd - TS -2 S<s
    643 udevd - TS -4 S<s
    1721 auditd - TS -4 S<sl
    50 ksmd - TS 5 SN
    PID COMMAND RTPRIO POL NI STAT

>> Adjust the priority and check the running state;

    7457]# renice -n 0 7457
    7457: old priority -18, new priority 0
    7457]# ps axo pid,comm,rtprio,policy,nice,stat |grep redis
    7457 redis-server - TS 0 Ssl
    7457]# ps axo pid,comm,rtprio,policy,nice,stat |grep redis
    7457 redis-server - TS 0 Ssl
    7457]# renice -n -18 7457
    7457: old priority 0, new priority -18
    7457]# ps axo pid,comm,rtprio,policy,nice,stat |grep redis
    7457 redis-server - TS -18 S<sl
    7457]# ps axo pid,comm,rtprio,policy,nice,stat |grep redis
    7457 redis-server - TS -18 S<sl
    7457]# renice -n 18 7457
    7457: old priority -18, new priority 18
    7457]# ps axo pid,comm,rtprio,policy,nice,stat |grep redis
    7457 redis-server - TS 18 SNsl
    7457]# ps axo pid,comm,rtprio,policy,nice,stat |grep redis
    7457 redis-server - TS 18 SNsl
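`renice` changes a process that is already running; `nice` sets the value when a command is launched. The sketch below shows the launch-time form without needing root, since an unprivileged user may raise the nice value (lower the priority) but not decrease it. The increment of 5 is arbitrary.

```shell
# `nice` with no arguments prints the niceness the current shell runs at.
base=$(nice)

# Run the inner `nice` with its niceness raised by 5 and capture what it reports.
raised=$(nice -n 5 nice)

printf 'base niceness: %s, under "nice -n 5": %s\n' "$base" "$raised"
```

The second value is the first plus 5, capped at 19. Negative adjustments such as the -18 used above require root.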

Ex2. Raising the priority and adjusting CPU scheduling?

>> View the CPU scheduling policy and priority;

    7457]# tuna -P
    thread ctxt_switches
    pid SCHED_ rtpri affinity voluntary nonvoluntary cmd
    1 OTHER 0 0,1 712 40 init
    2 OTHER 0 0,1 161 0 kthreadd
    3 FIFO 99 0 897 0 migration/0
    4 OTHER 0 0 456 0 ksoftirqd/0
    5 FIFO 99 0 13 0 stopper/0
    6 FIFO 99 0 1488 0 watchdog/0
    7 FIFO 99 1 3353 0 migration/1
    8 FIFO 99 1 13 0 stopper/1
    9 OTHER 0 1 313 0 ksoftirqd/1

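>> Adjust the CPU scheduling policy and priority;

    [root@CentOS6 7457]# tuna -P |grep redis
    7457 OTHER 0 0,1 11568 17 redis-server
    [root@CentOS6 7457]# tuna -P |grep redis
    7457 RR 40 0,1 23988 22 redis-server

The two captures show redis-server before and after the change: first SCHED_OTHER with real-time priority 0, then SCHED_RR with real-time priority 40. The command that made the change is not shown in the capture; one way to make such a change is `chrt -r -p 40 7457`, run as root.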

>> View a process's OOM score;

    root@node1:~# pidof radosgw
    28866
    root@node1:~# cat /proc/28866/oom_score
    4
    root@node1:~# pidof ntpd
    1645
    root@node1:~# cat /proc/1645/oom_score
    0
    root@node1:~# cat /proc/1/oom_score
    0

>> Adjust a process's OOM score;

    root@node1:~# cat /proc/28866/oom_score
    4
    root@node1:~# cat /proc/28866/oom_score_adj
    0
    root@node1:~# echo 100 > /proc/28866/oom_score_adj
    root@node1:~# cat /proc/28866/oom_score_adj
    100
    root@node1:~# cat /proc/28866/oom_score
    104
    root@node1:~# echo -100 > /proc/28866/oom_score_adj
    root@node1:~# cat /proc/28866/oom_score
    0

Testing OOM:

    ~]# cat oom.c
    #include <stdlib.h>
    #include <stdio.h>
    #define BYTES (8 * 1024 * 1024)
    int main(void)
    {
        printf("hello OOM \n");
        /* Allocate 8 MiB per iteration and never free it. */
        while(1) {
            char *p = malloc(BYTES);
            if (p == NULL) {
                return -1;
            }
        }
        return 0;
    }
    ~]# gcc oom.c
    ~]# ls
    anaconda-ks.cfg a.out install.log install.log.syslog oom.c
    ~]# ./a.out
    hello OOM
    Killed

The messages log shows:

    Aug 17 23:43:56 CentOS6 kernel: Out of memory: Kill process 7940 (a.out) score 907 or sacrifice child
    Aug 17 23:43:56 CentOS6 kernel: Killed process 7940, UID 0, (a.out) total-vm:2993357344kB, anon-rss:213432kB, file-rss:4kB

Run the program again in the background and set its oom_score_adj to -1000, the minimum value, which exempts the process from the OOM killer:

    ~]# ./a.out &
    [1] 8049
    ~]# sh -c "echo -1000 > /proc/$(pidof a.out)/oom_score_adj"
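Checking `oom_score` and `oom_score_adj` together, before and after a change, makes the effect of an adjustment easy to see. The sketch below wraps the two reads; `oom_info` is a hypothetical helper, and its optional second argument (an alternative proc root) exists only so the function can be exercised against sample files.

```shell
# oom_info PID [PROC_ROOT]: print the OOM score and adjustment of a process.
# PROC_ROOT defaults to /proc.
oom_info() {
    root="${2:-/proc}"
    printf 'oom_score=%s oom_score_adj=%s\n' \
        "$(cat "$root/$1/oom_score")" \
        "$(cat "$root/$1/oom_score_adj")"
}

# Typical use on a live Linux system (not run here):
#   oom_info "$(pidof radosgw)"
```

oom_score_adj accepts values from -1000 to 1000; raising it makes the process a more likely victim, and lowering it requires root.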

CPU tuning and optimization

Ex1. CPU resource isolation;

>> View how CPU resources are currently distributed across the system;

    [root@rhel7 ~]# tuna --show_threads
    thread ctxt_switches
    pid SCHED_ rtpri affinity voluntary nonvoluntary cmd
    1 OTHER 0 0,1,2,3 1316 2333 systemd
    2 OTHER 0 0,1,2,3 238 0 kthreadd
    3 OTHER 0 0 887 0 ksoftirqd/0
    5 OTHER 0 0 8 0 kworker/0:0H
    7 FIFO 99 0 261 0 migration/0
    8 OTHER 0 0,1,2,3 2 0 rcu_bh
    9 OTHER 0 0,1,2,3 17941 3 rcu_sched
    10 OTHER 0 0,1,2,3 2 0 lru-add-drain
    11 FIFO 99 0 466 0 watchdog/0
    12 FIFO 99 1 466 0 watchdog/1
    13 FIFO 99 1 310 0 migration/1
    14 OTHER 0 1 414 2 ksoftirqd/1
    16 OTHER 0 1 11 0 kworker/1:0H
    17 FIFO 99 2 466 0 watchdog/2
    18 FIFO 99 2 365 0 migration/2
    19 OTHER 0 2 263 0 ksoftirqd/2
    20 OTHER 0 2 24 0 kworker/2:0
    21 OTHER 0 2 11 0 kworker/2:0H
    22 FIFO 99 3 466 0 watchdog/3
    23 FIFO 99 3 324 0 migration/3
    24 OTHER 0 3 346 0 ksoftirqd/3
    25 OTHER 0 3 20 0 kworker/3:0
    26 OTHER 0 3 11 0 kworker/3:0H
    28 OTHER 0 0,1,2,3 165 0 kdevtmpfs
    29 OTHER 0 0,1,2,3 2 0 netns
    30 OTHER 0 0,1,2,3 17 0 khungtaskd
    31 OTHER 0 0,1,2,3 2 0 writeback
    32 OTHER 0 0,1,2,3 2 0 kintegrityd
    33 OTHER 0 0,1,2,3 2 0 bioset
    34 OTHER 0 0,1,2,3 2 0 kblockd
    35 OTHER 0 0,1,2,3 2 0 md
    36 OTHER 0 0,1,2,3 2 0 edac-poller
    37 OTHER 0 0 13623 7 kworker/0:1
    42 OTHER 0 0,1,2,3 3 0 kswapd0
    43 OTHER 0 0,1,2,3 2 0 ksmd
    44 OTHER 0 0,1,2,3 188 1 khugepaged
    45 OTHER 0 0,1,2,3 2 0 crypto
    53 OTHER 0 0,1,2,3 2 0 kthrotld
    55 OTHER 0 0,1,2,3 2 0 kmpath_rdacd
    56 OTHER 0 0,1,2,3 2 0 kaluad
    58 OTHER 0 0,1,2,3 2 0 kpsmoused
    59 OTHER 0 0 19 0 kworker/0:2
    60 OTHER 0 0,1,2,3 2 0 ipv6_addrconf
    61 OTHER 0 3 1200 1 kworker/3:1
    74 OTHER 0 0,1,2,3 3 0 deferwq
    106 OTHER 0 0,1,2,3 220 0 kauditd
    288 OTHER 0 0,1,2,3 2 0 ata_sff
    294 OTHER 0 0,1,2,3 4 0 scsi_eh_0
    295 OTHER 0 0,1,2,3 2 0 scsi_tmf_0
    296 OTHER 0 0,1,2,3 4 0 scsi_eh_1
    297 OTHER 0 0,1,2,3 2 0 scsi_tmf_1
    305 OTHER 0 0,1,2,3 2 0 mpt_poll_0
    306 OTHER 0 0,1,2,3 2 0 mpt/0
    307 OTHER 0 0,1,2,3 2 0 scsi_eh_2
    308 OTHER 0 0,1,2,3 2 0 scsi_tmf_2
    309 OTHER 0 0,1,2,3 2 0 ttm_swap
    310 FIFO 50 0,1,2,3 2 0 irq/16-vmwgfx
    313 OTHER 0 0,1,2,3 22 0 scsi_eh_3
    314 OTHER 0 0,1,2,3 2 0 scsi_tmf_3
    315 OTHER 0 0,1,2,3 25 0 scsi_eh_4
    316 OTHER 0 0,1,2,3 2 0 scsi_tmf_4
    317 OTHER 0 0,1,2,3 22 0 scsi_eh_5
    318 OTHER 0 0,1,2,3 2 0 scsi_tmf_5
    319 OTHER 0 0,1,2,3 22 0 scsi_eh_6
    320 OTHER 0 0,1,2,3 2 0 scsi_tmf_6
    321 OTHER 0 0,1,2,3 22 0 scsi_eh_7
    322 OTHER 0 0,1,2,3 2 0 scsi_tmf_7
    323 OTHER 0 0,1,2,3 22 0 scsi_eh_8
    324 OTHER 0 0,1,2,3 2 0 scsi_tmf_8
    325 OTHER 0 0,1,2,3 22 0 scsi_eh_9
    326 OTHER 0 0,1,2,3 2 0 scsi_tmf_9
    328 OTHER 0 0,1,2,3 22 0 scsi_eh_10
    329 OTHER 0 0,1,2,3 2 0 scsi_tmf_10
    330 OTHER 0 0,1,2,3 21 0 scsi_eh_11
    332 OTHER 0 0,1,2,3 2 0 scsi_tmf_11
    333 OTHER 0 0,1,2,3 21 0 scsi_eh_12
    334 OTHER 0 0,1,2,3 2 0 scsi_tmf_12
    335 OTHER 0 0,1,2,3 22 0 scsi_eh_13
    336 OTHER 0 0,1,2,3 2 0 scsi_tmf_13
    337 OTHER 0 0,1,2,3 22 0 scsi_eh_14
    338 OTHER 0 0,1,2,3 2 0 scsi_tmf_14
    339 OTHER 0 0,1,2,3 22 0 scsi_eh_15
    340 OTHER 0 0,1,2,3 2 0 scsi_tmf_15
    341 OTHER 0 0,1,2,3 22 0 scsi_eh_16
    342 OTHER 0 0,1,2,3 2 0 scsi_tmf_16
    343 OTHER 0 0,1,2,3 22 0 scsi_eh_17
    345 OTHER 0 0,1,2,3 2 0 scsi_tmf_17
    347 OTHER 0 0,1,2,3 21 0 scsi_eh_18
    348 OTHER 0 0,1,2,3 2 0 scsi_tmf_18
    349 OTHER 0 0,1,2,3 22 0 scsi_eh_19
    350 OTHER 0 0,1,2,3 2 0 scsi_tmf_19
    351 OTHER 0 0,1,2,3 22 0 scsi_eh_20
    352 OTHER 0 0,1,2,3 2 0 scsi_tmf_20
    353 OTHER 0 0,1,2,3 22 0 scsi_eh_21
    354 OTHER 0 0,1,2,3 2 0 scsi_tmf_21
    356 OTHER 0 0,1,2,3 24 0 scsi_eh_22
    357 OTHER 0 0,1,2,3 2 0 scsi_tmf_22
    358 OTHER 0 0,1,2,3 22 0 scsi_eh_23
    359 OTHER 0 0,1,2,3 2 0 scsi_tmf_23
    361 OTHER 0 0,1,2,3 22 0 scsi_eh_24
    362 OTHER 0 0,1,2,3 2 0 scsi_tmf_24
    364 OTHER 0 0,1,2,3 22 0 scsi_eh_25
    365 OTHER 0 0,1,2,3 2 0 scsi_tmf_25
    366 OTHER 0 0,1,2,3 22 0 scsi_eh_26
    367 OTHER 0 0,1,2,3 2 0 scsi_tmf_26
    368 OTHER 0 0,1,2,3 22 0 scsi_eh_27
    369 OTHER 0 0,1,2,3 2 0 scsi_tmf_27
    370 OTHER 0 0,1,2,3 22 0 scsi_eh_28
    371 OTHER 0 0,1,2,3 2 0 scsi_tmf_28
    372 OTHER 0 0,1,2,3 22 0 scsi_eh_29
    373 OTHER 0 0,1,2,3 2 0 scsi_tmf_29
    374 OTHER 0 0,1,2,3 22 0 scsi_eh_30
    375 OTHER 0 0,1,2,3 2 0 scsi_tmf_30
    376 OTHER 0 0,1,2,3 22 0 scsi_eh_31
    377 OTHER 0 0,1,2,3 2 0 scsi_tmf_31
    378 OTHER 0 0,1,2,3 22 0 scsi_eh_32
    379 OTHER 0 0,1,2,3 2 0 scsi_tmf_32
    405 OTHER 0 0,1,2,3 2005 6 kworker/u256:29
    472 OTHER 0 0,1,2,3 2 0 kdmflush
    473 OTHER 0 0,1,2,3 2 0 bioset
    483 OTHER 0 0,1,2,3 2 0 kdmflush
    484 OTHER 0 0,1,2,3 2 0 bioset
    498 OTHER 0 0,1,2,3 2 0 bioset
    499 OTHER 0 0,1,2,3 2 0 xfsalloc
    500 OTHER 0 0,1,2,3 2 0 xfs_mru_cache
    501 OTHER 0 0,1,2,3 2 0 xfs-buf/dm-0
    502 OTHER 0 0,1,2,3 2 0 xfs-data/dm-0
    503 OTHER 0 0,1,2,3 2 0 xfs-conv/dm-0
    504 OTHER 0 0,1,2,3 2 0 xfs-cil/dm-0
    505 OTHER 0 0,1,2,3 2 0 xfs-reclaim/dm-
    506 OTHER 0 0,1,2,3 2 0 xfs-log/dm-0
    507 OTHER 0 0,1,2,3 2 0 xfs-eofblocks/d
    508 OTHER 0 0,1,2,3 9766 2 xfsaild/dm-0
    509 OTHER 0 0 260 0 kworker/0:1H
    578 OTHER 0 0,1,2,3 442 49 systemd-journal
    603 OTHER 0 0,1,2,3 4 22 lvmetad
    613 OTHER 0 0,1,2,3 246 438 systemd-udevd
    633 OTHER 0 0,1,2,3 2 0 nfit
    656 OTHER 0 0,1,2,3 15 1 kworker/u257:0
    659 OTHER 0 0,1,2,3 2 0 hci0
    660 OTHER 0 0,1,2,3 2 0 hci0
    662 OTHER 0 0,1,2,3 25 0 kworker/u257:2
    690 OTHER 0 2 969 0 kworker/2:2
    705 OTHER 0 0,1,2,3 2 0 xfs-buf/sda1
    707 OTHER 0 0,1,2,3 2 0 xfs-data/sda1
    708 OTHER 0 0,1,2,3 2 0 xfs-conv/sda1
    710 OTHER 0 0,1,2,3 2 0 xfs-cil/sda1
    712 OTHER 0 0,1,2,3 2 0 xfs-reclaim/sda
    715 OTHER 0 0,1,2,3 2 0 xfs-log/sda1
    716 OTHER 0 0,1,2,3 2 0 xfs-eofblocks/s
    717 OTHER 0 0,1,2,3 2 0 xfsaild/sda1
    759 OTHER 0 0,1,2,3 216 0 auditd
    785 OTHER 0 0,1,2,3 207 36 lsmd
    787 OTHER 0 0,1,2,3 62 37 VGAuthService
    788 OTHER 0 0,1,2,3 18728 57 vmtoolsd
    789 OTHER 0 0,1,2,3 63 19 rpcbind
    792 OTHER 0 0,1,2,3 11 55 smartd
    793 OTHER 0 0,1,2,3 312 30 systemd-logind
    794 OTHER 0 0,1,2,3 52 10 abrtd
    796 OTHER 0 0,1,2,3 69 32 abrt-watch-log
    797 OTHER 0 0,1,2,3 2796 2 rngd
    801 OTHER 0 0,1,2,3 221 49 polkitd
    805 OTHER 0 0,1,2,3 189 29 irqbalance
    807 OTHER 0 0,1,2,3 982 118 dbus-daemon
    828 OTHER 0 0,1,2,3 48 207 crond
    829 OTHER 0 0,1,2,3 5 4 atd
    834 OTHER 0 0,1,2,3 622 42 firewalld
    844 OTHER 0 0,1,2,3 7 1 agetty
    856 OTHER 0 0,1,2,3 258 245 NetworkManager
    990 OTHER 0 0,1,2,3 
38 2 dhclient 1182 OTHER 0 0,1,2,3 114 27 tuned 1183 OTHER 0 0,1,2,3 15 30 sshd 1185 OTHER 0 0,1,2,3 30 8 rsyslogd 1187 OTHER 0 0,1,2,3 3 2 rhsmcertd 1196 OTHER 0 0,1,2,3 1 2 rhnsd 1349 OTHER 0 0,1,2,3 84 0 master 1357 OTHER 0 0,1,2,3 35 2 pickup 1359 OTHER 0 0,1,2,3 13 4 qmgr 1450 OTHER 0 1 207 0 kworker/1:1H 1452 OTHER 0 0,1,2,3 2107 47 sshd 1456 OTHER 0 0,1,2,3 413 2 bash 1504 OTHER 0 2 13 0 kworker/2:1H 1519 OTHER 0 1 1631 1 kworker/1:3 1538 OTHER 0 3 10 0 kworker/3:1H 1603 OTHER 0 0,1,2,3 91 0 kworker/u256:0 1691 OTHER 0 0,1,2,3 2 0 anacron 1694 OTHER 0 1 808 1 kworker/1:0 1727 OTHER 0 1 6 0 kworker/1:1 1734 OTHER 0 0,1,2,3 2 0 kworker/u256:1 1737 OTHER 0 0,1,2,3 1 18 tuna
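
A listing this long is easier to read as a summary. The sketch below counts threads per affinity value from tuna output on stdin. The function name affinity_histogram is made up for illustration, and the list of policy names it matches (OTHER, FIFO, RR, BATCH, IDLE) is an assumption about what tuna prints in the SCHED_ column.

```shell
# Count threads per affinity value from `tuna --show_threads` output on stdin.
# Header lines are skipped because their second field is not a policy name.
# affinity_histogram is a hypothetical helper, not part of tuna.
affinity_histogram() {
    awk '$2 ~ /^(OTHER|FIFO|RR|BATCH|IDLE)$/ { count[$4]++ }
         END { for (a in count) print a, count[a] }' | LC_ALL=C sort
}
```

Typical use would be: tuna --show_threads | affinity_histogram. Each output line is an affinity value followed by the number of threads that have it.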

>> Isolate CPUs at boot time

[root@rhel7 ~]# cat /etc/default/grub
GRUB_TIMEOUT=5
GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"
GRUB_DEFAULT=saved
GRUB_DISABLE_SUBMENU=true
GRUB_TERMINAL_OUTPUT="console"
GRUB_CMDLINE_LINUX="rd.lvm.lv=rh00/root rd.lvm.lv=rh00/swap rhgb quiet isolcpus=2,3 nohz_full=2,3 rcu_nocbs=2,3"
GRUB_DISABLE_RECOVERY="true"
[root@rhel7 ~]# grub2-mkconfig -o /boot/grub2/grub.cfg
Generating grub configuration file ...
Found linux image: /boot/vmlinuz-3.10.0-862.el7.x86_64
Found initrd image: /boot/initramfs-3.10.0-862.el7.x86_64.img
Found linux image: /boot/vmlinuz-0-rescue-f239fa29131f442cb41a9fc31207a7e1
Found initrd image: /boot/initramfs-0-rescue-f239fa29131f442cb41a9fc31207a7e1.img
done

Reboot the server, then check CPU usage:

[root@rhel7 ~]# tuna --show_t
thread ctxt_switches
pid SCHED_ rtpri affinity voluntary nonvoluntary cmd
1 OTHER 0 0,1 1115 2573 systemd
2 OTHER 0 0,1,2,3 235 0 kthreadd
3 OTHER 0 0 679 1 ksoftirqd/0
4 OTHER 0 0 21 0 kworker/0:0
5 OTHER 0 0 8 0 kworker/0:0H
6 OTHER 0 0,1,2,3 55 0 kworker/u256:0
7 FIFO 99 0 232 0 migration/0
8 OTHER 0 0,1,2,3 2 0 rcu_bh
9 OTHER 0 0,1,2,3 7741 4 rcu_sched
10 OTHER 0 0,1,2,3 2 0 lru-add-drain
11 FIFO 99 0 58 0 watchdog/0
12 FIFO 99 1 58 0 watchdog/1
13 FIFO 99 1 229 0 migration/1
14 OTHER 0 1 433 0 ksoftirqd/1
15 OTHER 0 1 71 0 kworker/1:0
16 OTHER 0 1 11 0 kworker/1:0H
17 OTHER 0 0,1,2,3 2 0 watchdog/2
18 FIFO 99 2 60 0 migration/2
19 OTHER 0 2 3 0 ksoftirqd/2
20 OTHER 0 2 48 0 kworker/2:0
21 OTHER 0 2 9 0 kworker/2:0H
22 OTHER 0 0,1,2,3 2 0 rcuob/2
23 OTHER 0 0,1,2,3 2 0 rcuos/2
24 OTHER 0 0,1,2,3 2 0 watchdog/3
25 FIFO 99 3 60 0 migration/3
26 OTHER 0 3 3 0 ksoftirqd/3
27 OTHER 0 3 42 0 kworker/3:0
28 OTHER 0 3 8 0 kworker/3:0H
29 OTHER 0 0,1,2,3 2 0 rcuob/3
30 OTHER 0 0,1,2,3 2 0 rcuos/3
32 OTHER 0 0,1,2,3 165 0 kdevtmpfs
33 OTHER 0 0,1,2,3 2 0 netns
34 OTHER 0 0,1,2,3 3 0 khungtaskd
35 OTHER 0 0,1,2,3 2 0 writeback
36 OTHER 0 0,1,2,3 2 0 kintegrityd
37 OTHER 0 0,1,2,3 2 0 bioset
38 OTHER 0 0,1,2,3 2 0 kblockd
39 OTHER 0 0,1,2,3 2 0 md
40 OTHER 0 0,1,2,3 2 0 edac-poller
41 OTHER 0 0 3911 19 kworker/0:1
46 OTHER 0 0,1,2,3 3 0 kswapd0
47 OTHER 0 0,1,2,3 2 0 ksmd
48 OTHER 0 0,1,2,3 25 0 khugepaged
49 OTHER 0 0,1,2,3 2 0 crypto
57 OTHER 0 0,1,2,3 2 0 kthrotld
58 OTHER 0 0,1,2,3 95 7 kworker/u256:1
59 OTHER 0 0,1,2,3 2 0 kmpath_rdacd
60 OTHER 0 0,1,2,3 2 0 kaluad
61 OTHER 0 1 30 0 kworker/1:1
62 OTHER 0 0,1,2,3 2 0 kpsmoused
63 OTHER 0 0 91 0 kworker/0:2
64 OTHER 0 0,1,2,3 2 0 ipv6_addrconf
77 OTHER 0 0,1,2,3 2 0 deferwq
108 OTHER 0 0,1,2,3 187 3 kauditd
117 OTHER 0 1 3292 5 kworker/1:2
289 OTHER 0 0,1,2,3 2 0 mpt_poll_0
290 OTHER 0 0,1,2,3 2 0 mpt/0
291 OTHER 0 0,1,2,3 2 0 ata_sff
293 OTHER 0 0,1,2,3 2 0 scsi_eh_0
295 OTHER 0 0,1,2,3 2 0 scsi_tmf_0
301 OTHER 0 0,1,2,3 4 0 scsi_eh_1
302 OTHER 0 0,1,2,3 12 0 kworker/u256:2
303 OTHER 0 0,1,2,3 2 0 scsi_tmf_1
304 OTHER 0 0,1,2,3 5 0 scsi_eh_2
305 OTHER 0 0,1,2,3 2 0 scsi_tmf_2
307 OTHER 0 0,1,2,3 13 0 kworker/u256:3
310 OTHER 0 0,1,2,3 7 0 kworker/u256:4
311 OTHER 0 0,1,2,3 2 0 ttm_swap
312 FIFO 50 0,1,2,3 2 0 irq/16-vmwgfx
339 OTHER 0 0,1,2,3 22 0 scsi_eh_3
340 OTHER 0 0,1,2,3 2 0 scsi_tmf_3
341 OTHER 0 0,1,2,3 25 0 scsi_eh_4
342 OTHER 0 0,1,2,3 2 0 scsi_tmf_4
343 OTHER 0 0,1,2,3 22 0 scsi_eh_5
344 OTHER 0 0,1,2,3 2 0 scsi_tmf_5
345 OTHER 0 0,1,2,3 22 0 scsi_eh_6
346 OTHER 0 0,1,2,3 2 0 scsi_tmf_6
347 OTHER 0 0,1,2,3 22 0 scsi_eh_7
348 OTHER 0 0,1,2,3 2 0 scsi_tmf_7
349 OTHER 0 0,1,2,3 22 0 scsi_eh_8
350 OTHER 0 0,1,2,3 2 0 scsi_tmf_8
351 OTHER 0 0,1,2,3 22 0 scsi_eh_9
352 OTHER 0 0,1,2,3 2 0 scsi_tmf_9
353 OTHER 0 0,1,2,3 22 0 scsi_eh_10
354 OTHER 0 0,1,2,3 2 0 scsi_tmf_10
355 OTHER 0 0,1,2,3 22 0 scsi_eh_11
356 OTHER 0 0,1,2,3 2 0 scsi_tmf_11
357 OTHER 0 0,1,2,3 22 0 scsi_eh_12
358 OTHER 0 0,1,2,3 2 0 scsi_tmf_12
359 OTHER 0 0,1,2,3 22 0 scsi_eh_13
360 OTHER 0 0,1,2,3 2 0 scsi_tmf_13
361 OTHER 0 0,1,2,3 22 0 scsi_eh_14
362 OTHER 0 0,1,2,3 2 0 scsi_tmf_14
363 OTHER 0 0,1,2,3 22 0 scsi_eh_15
364 OTHER 0 0,1,2,3 2 0 scsi_tmf_15
365 OTHER 0 0,1,2,3 22 0 scsi_eh_16
366 OTHER 0 0,1,2,3 2 0 scsi_tmf_16
367 OTHER 0 0,1,2,3 23 0 scsi_eh_17
368 OTHER 0 0,1,2,3 2 0 scsi_tmf_17
369 OTHER 0 0,1,2,3 22 0 scsi_eh_18
370 OTHER 0 0,1,2,3 2 0 scsi_tmf_18
371 OTHER 0 0,1,2,3 22 0 scsi_eh_19
372 OTHER 0 0,1,2,3 2 0 scsi_tmf_19
373 OTHER 0 0,1,2,3 22 0 scsi_eh_20
374 OTHER 0 0,1,2,3 2 0 scsi_tmf_20
375 OTHER 0 0,1,2,3 22 0 scsi_eh_21
376 OTHER 0 0,1,2,3 2 0 scsi_tmf_21
377 OTHER 0 0,1,2,3 22 0 scsi_eh_22
378 OTHER 0 0,1,2,3 2 0 scsi_tmf_22
379 OTHER 0 0,1,2,3 22 0 scsi_eh_23
380 OTHER 0 0,1,2,3 2 0 scsi_tmf_23
381 OTHER 0 0,1,2,3 22 0 scsi_eh_24
382 OTHER 0 0,1,2,3 2 0 scsi_tmf_24
383 OTHER 0 0,1,2,3 22 0 scsi_eh_25
384 OTHER 0 0,1,2,3 2 0 scsi_tmf_25
385 OTHER 0 0,1,2,3 22 0 scsi_eh_26
386 OTHER 0 0,1,2,3 2 0 scsi_tmf_26
387 OTHER 0 0,1,2,3 22 0 scsi_eh_27
388 OTHER 0 0,1,2,3 2 0 scsi_tmf_27
389 OTHER 0 0,1,2,3 22 0 scsi_eh_28
390 OTHER 0 0,1,2,3 2 0 scsi_tmf_28
391 OTHER 0 0,1,2,3 25 0 scsi_eh_29
392 OTHER 0 0,1,2,3 2 0 scsi_tmf_29
393 OTHER 0 0,1,2,3 22 0 scsi_eh_30
394 OTHER 0 0,1,2,3 2 0 scsi_tmf_30
395 OTHER 0 0,1,2,3 22 0 scsi_eh_31
396 OTHER 0 0,1,2,3 2 0 scsi_tmf_31
397 OTHER 0 0,1,2,3 21 0 scsi_eh_32
398 OTHER 0 0,1,2,3 2 0 scsi_tmf_32
399 OTHER 0 0,1,2,3 8 0 kworker/u256:5
400 OTHER 0 0,1,2,3 8 0 kworker/u256:6
401 OTHER 0 0,1,2,3 6 0 kworker/u256:7
402 OTHER 0 0,1,2,3 11 0 kworker/u256:8
403 OTHER 0 0,1,2,3 11 0 kworker/u256:9
404 OTHER 0 0,1,2,3 7 0 kworker/u256:10
405 OTHER 0 0,1,2,3 6 1 kworker/u256:11
406 OTHER 0 0,1,2,3 13 1 kworker/u256:12
407 OTHER 0 0,1,2,3 4 2 kworker/u256:13
409 OTHER 0 0,1,2,3 4 1 kworker/u256:14
410 OTHER 0 0,1,2,3 6 1 kworker/u256:15
411 OTHER 0 0,1,2,3 6 1 kworker/u256:16
412 OTHER 0 0,1,2,3 7 1 kworker/u256:17
413 OTHER 0 0,1,2,3 9 1 kworker/u256:18
414 OTHER 0 0,1,2,3 4 1 kworker/u256:19
415 OTHER 0 0,1,2,3 9 1 kworker/u256:20
416 OTHER 0 0,1,2,3 6 1 kworker/u256:21
417 OTHER 0 0,1,2,3 10 1 kworker/u256:22
418 OTHER 0 0,1,2,3 9 1 kworker/u256:23
419 OTHER 0 0,1,2,3 9 1 kworker/u256:24
420 OTHER 0 0,1,2,3 12 1 kworker/u256:25
421 OTHER 0 0,1,2,3 9 1 kworker/u256:26
422 OTHER 0 0,1,2,3 11 1 kworker/u256:27
423 OTHER 0 0,1,2,3 261 3 kworker/u256:28
424 OTHER 0 0,1,2,3 194 1 kworker/u256:29
425 OTHER 0 0,1,2,3 3 1 kworker/u256:30
426 OTHER 0 0,1,2,3 2 0 kworker/u256:31
470 OTHER 0 0,1,2,3 2 0 kdmflush
471 OTHER 0 0,1,2,3 2 0 bioset
482 OTHER 0 0,1,2,3 2 0 kdmflush
483 OTHER 0 0,1,2,3 2 0 bioset
496 OTHER 0 0,1,2,3 2 0 bioset
497 OTHER 0 0,1,2,3 2 0 xfsalloc
498 OTHER 0 0,1,2,3 2 0 xfs_mru_cache
499 OTHER 0 0,1,2,3 2 0 xfs-buf/dm-0
500 OTHER 0 0,1,2,3 2 0 xfs-data/dm-0
501 OTHER 0 0,1,2,3 2 0 xfs-conv/dm-0
502 OTHER 0 0,1,2,3 2 0 xfs-cil/dm-0
503 OTHER 0 0,1,2,3 2 0 xfs-reclaim/dm-
504 OTHER 0 0,1,2,3 2 0 xfs-log/dm-0
505 OTHER 0 0,1,2,3 2 0 xfs-eofblocks/d
506 OTHER 0 0,1,2,3 1808 1 xfsaild/dm-0
507 OTHER 0 0 14 0 kworker/0:1H
576 OTHER 0 0,1 436 33 systemd-journal
598 OTHER 0 0,1 3 13 lvmetad
609 OTHER 0 0,1 254 822 systemd-udevd
628 OTHER 0 0,1,2,3 2 0 nfit
644 OTHER 0 0,1,2,3 13 1 kworker/u257:0
645 OTHER 0 0,1,2,3 2 1 hci0
646 OTHER 0 0,1,2,3 2 0 hci0
647 OTHER 0 0,1,2,3 22 1 kworker/u257:1
648 OTHER 0 0,1,2,3 25 0 kworker/u257:2
657 OTHER 0 0,1,2,3 2 0 xfs-buf/sda1
660 OTHER 0 0,1,2,3 2 0 xfs-data/sda1
661 OTHER 0 0,1,2,3 2 0 xfs-conv/sda1
667 OTHER 0 0,1,2,3 2 0 xfs-cil/sda1
668 OTHER 0 0,1,2,3 2 0 xfs-reclaim/sda
669 OTHER 0 0,1,2,3 2 0 xfs-log/sda1
670 OTHER 0 0,1,2,3 2 0 xfs-eofblocks/s
671 OTHER 0 0,1,2,3 2 0 xfsaild/sda1
724 OTHER 0 1 47 0 kworker/1:1H
751 OTHER 0 0,1 146 2 auditd
773 OTHER 0 0,1 687 66 dbus-daemon
776 OTHER 0 0,1 639 10 rngd
777 OTHER 0 0,1 60 21 VGAuthService
778 OTHER 0 0,1 24 20 irqbalance
780 OTHER 0 0,1 39 37 abrtd
781 OTHER 0 0,1 19 21 abrt-watch-log
782 OTHER 0 0,1 176 22 systemd-logind
784 OTHER 0 0,1 121 49 polkitd
785 OTHER 0 0,1 2290 40 vmtoolsd
789 OTHER 0 0,1 6 33 smartd
790 OTHER 0 0,1 100 48 lsmd
803 OTHER 0 0,1 8 31 rpcbind
812 OTHER 0 0,1 2 13 atd
814 OTHER 0 0,1 19 416 crond
824 OTHER 0 0,1 533 195 firewalld
825 OTHER 0 0,1 7 1 agetty
834 OTHER 0 0,1 162 382 NetworkManager
969 OTHER 0 0,1 30 7 dhclient
1159 OTHER 0 0,1 14 21 sshd
1162 OTHER 0 0,1 136 95 tuned
1164 OTHER 0 0,1 27 5 rsyslogd
1166 OTHER 0 0,1 1 2 rhsmcertd
1178 OTHER 0 0,1 1 4 rhnsd
1318 OTHER 0 0,1 17 3 master
1324 OTHER 0 0,1 6 11 pickup
1325 OTHER 0 0,1 3 7 qmgr
1435 OTHER 0 0,1 210 5 sshd
1439 OTHER 0 0,1 106 3 bash
1457 OTHER 0 0,1 16 2 abrt-dbus
1479 OTHER 0 0,1 0 22 tuna
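
In the listing above, userspace processes such as systemd and sshd now show affinity 0,1, while most kernel threads keep 0,1,2,3 or their per-CPU binding. It can also help to confirm that the running kernel actually received the parameters set in GRUB_CMDLINE_LINUX. The sketch below extracts one parameter from a kernel command line. The helper name cmdline_param is hypothetical; with no second argument it reads /proc/cmdline.

```shell
# Print the value of a name=value kernel parameter.
# $1: parameter name, $2: command line string (defaults to /proc/cmdline).
# cmdline_param is a hypothetical helper written for this note.
cmdline_param() {
    name=$1
    cmdline=${2:-$(cat /proc/cmdline)}
    for word in $cmdline; do
        case $word in
            "$name"=*) echo "${word#*=}"; return 0 ;;
        esac
    done
    return 1
}
```

After the reboot, cmdline_param isolcpus would be expected to print 2,3 on the host configured above. On kernels that expose it, /sys/devices/system/cpu/isolated reports the same set in range form.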

>> Confirm the CPU isolation

[root@rhel7 ~]# yum install nginx
Loaded plugins: langpacks, product-id, search-disabled-repos, subscription-manager
This system is not registered with an entitlement server. You can use subscription-manager to register.
Resolving Dependencies
--> Running transaction check
---> Package nginx.x86_64 1:1.20.1-2.el7 will be installed
--> Processing Dependency: nginx-filesystem = 1:1.20.1-2.el7 for package: 1:nginx-1.20.1-2.el7.x86_64
--> Processing Dependency: libcrypto.so.1.1(OPENSSL_1_1_0)(64bit) for package: 1:nginx-1.20.1-2.el7.x86_64
--> Processing Dependency: libssl.so.1.1(OPENSSL_1_1_0)(64bit) for package: 1:nginx-1.20.1-2.el7.x86_64
--> Processing Dependency: libssl.so.1.1(OPENSSL_1_1_1)(64bit) for package: 1:nginx-1.20.1-2.el7.x86_64
--> Processing Dependency: nginx-filesystem for package: 1:nginx-1.20.1-2.el7.x86_64
--> Processing Dependency: libcrypto.so.1.1()(64bit) for package: 1:nginx-1.20.1-2.el7.x86_64
--> Processing Dependency: libprofiler.so.0()(64bit) for package: 1:nginx-1.20.1-2.el7.x86_64
--> Processing Dependency: libssl.so.1.1()(64bit) for package: 1:nginx-1.20.1-2.el7.x86_64
--> Running transaction check
---> Package gperftools-libs.x86_64 0:2.6.1-1.el7 will be installed
---> Package nginx-filesystem.noarch 1:1.20.1-2.el7 will be installed
---> Package openssl11-libs.x86_64 1:1.1.1g-3.el7 will be installed
--> Finished Dependency Resolution
………………
[root@rhel7 ~]# systemctl enable nginx --now
Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.
[root@rhel7 ~]# tuna --show_thread |grep nginx
3594 OTHER 0 0,1 1 0 nginx
3595 OTHER 0 0,1 1 0 nginx
3596 OTHER 0 0,1 1 0 nginx
3597 OTHER 0 0,1 1 0 nginx
3598 OTHER 0 0,1 1 0 nginx
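
The newly started nginx processes land on CPUs 0,1 only, which is what isolcpus=2,3 should produce. The same check can be scripted without tuna by reading the Cpus_allowed_list field of /proc/PID/status and comparing it with the isolated set. This is a minimal sketch; the helper names allowed_cpus, expand_cpulist and cpulists_overlap are made up for illustration, and seq is assumed to be available.

```shell
# Print the Cpus_allowed_list of a process.
# $1: PID, $2: optional path to a status file (defaults to /proc/$1/status).
allowed_cpus() {
    status_file=${2:-/proc/$1/status}
    awk '/^Cpus_allowed_list:/ { print $2 }' "$status_file"
}

# Expand a kernel CPU list such as "0-1,4" into one CPU number per line.
expand_cpulist() {
    old_ifs=$IFS
    IFS=,
    set -- $1
    IFS=$old_ifs
    for part in "$@"; do
        case $part in
            *-*) seq "${part%-*}" "${part#*-}" ;;
            *)   echo "$part" ;;
        esac
    done
}

# Succeed (exit status 0) if the two CPU lists share at least one CPU.
cpulists_overlap() {
    for a in $(expand_cpulist "$1"); do
        for b in $(expand_cpulist "$2"); do
            [ "$a" = "$b" ] && return 0
        done
    done
    return 1
}
```

For example, cpulists_overlap "$(allowed_cpus 3594)" 2,3 should fail for the nginx process above while it is still confined to CPUs 0,1.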

>> Move workloads onto specific CPUs

[root@rhel7 ~]# tuna --show_t |grep redis
1740 OTHER 0 0,1 7919 2 redis-server
You have mail in /var/spool/mail/root
[root@rhel7 ~]# tuna --cpus 2,3 --threads=redis-server --move
[root@rhel7 ~]# tuna --show_t |grep redis
1740 OTHER 0 2,3 8345 2 redis-server
[root@rhel7 ~]# tuna --threads=nginx --cpus=2 --move --spread
[root@rhel7 ~]# tuna --show_thread |grep nginx
3594 OTHER 0 2 1 0 nginx
3595 OTHER 0 2 1 0 nginx
3596 OTHER 0 2 1 0 nginx
3597 OTHER 0 2 1 0 nginx
3598 OTHER 0 2 1 0 nginx
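
Where tuna is not installed, taskset from util-linux can set the affinity of a running process. The sketch below is an alternative to the tuna --move commands above, not what the original session ran. The function name pin_pids and the DRY_RUN variable are hypothetical; with DRY_RUN=1 the commands are only printed.

```shell
# Pin each given PID to a CPU list using taskset.
# $1: CPU list such as "2,3"; remaining arguments: PIDs.
# With DRY_RUN=1 the taskset commands are printed instead of executed.
pin_pids() {
    cpus=$1
    shift
    for pid in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "taskset -cp $cpus $pid"
        else
            taskset -cp "$cpus" "$pid"
        fi
    done
}
```

A possible invocation is pin_pids 2,3 $(pgrep -x redis-server). Note that taskset -p changes a single task; adding -a applies the change to all threads of the PID. Like the tuna commands, this does not survive a restart of the service.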

Ex2. Adjust the CPU operating frequency

[root@ceph1 cpu]# ls
cpu0 cpu10 cpu12 cpu14 cpu2 cpu4 cpu6 cpu8 cpuidle isolated microcode nohz_full online power uevent
cpu1 cpu11 cpu13 cpu15 cpu3 cpu5 cpu7 cpu9 intel_pstate kernel_max modalias offline possible present
[root@ceph1 cpu]# for i in $(seq 0 15)
> do
> cat /sys/devices/system/cpu/cpu$i/cpufreq/scaling_governor
> done
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
powersave
[root@ceph1 cpu]# cat /proc/cpuinfo |grep -Ew '(processor|model|MHz)'
processor : 0
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 1
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 1235.800
processor : 2
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 3
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3376.816
processor : 4
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 5
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 1699.960
processor : 6
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3600.078
processor : 7
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 1599.882
processor : 8
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 9
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 1294.042
processor : 10
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 11
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3656.816
processor : 12
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 13
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 1738.105
processor : 14
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 15
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 1612.871
[root@ceph1 cpu]# cpupower frequency-set -g performance
Setting cpu: 0
Setting cpu: 1
Setting cpu: 2
Setting cpu: 3
Setting cpu: 4
Setting cpu: 5
Setting cpu: 6
Setting cpu: 7
Setting cpu: 8
Setting cpu: 9
Setting cpu: 10
Setting cpu: 11
Setting cpu: 12
Setting cpu: 13
Setting cpu: 14
Setting cpu: 15
[root@ceph1 cpu]# for i in $(seq 0 15); do cat /sys/devices/system/cpu/cpu$i/cpufreq/scaling_governor; done
performance
performance
performance
performance
performance
performance
performance
performance
performance
performance
performance
performance
performance
performance
performance
performance
[root@ceph1 cpu]# cat /proc/cpuinfo |grep -Ew '(processor|model|MHz)'
processor : 0
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 1
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.667
processor : 2
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3600.625
processor : 3
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3600.214
processor : 4
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3667.617
processor : 5
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 6
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3670.351
processor : 7
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 8
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 9
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 10
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 11
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3600.078
processor : 12
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 13
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
processor : 14
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3664.199
processor : 15
model : 63
model name : Intel(R) Xeon(R) CPU E5-2637 v3 @ 3.50GHz
cpu MHz : 3599.941
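
After switching to the performance governor, every core reports roughly 3600 MHz, whereas under powersave several cores had dropped to between 1200 and 1700 MHz. Rather than printing one governor per line for each core, the check can be condensed into a per-governor count. This is a minimal sketch; governor_summary is a hypothetical helper, and the base directory is a parameter only so the function can be tried against a copy of the sysfs tree.

```shell
# Print "<governor> <number of CPUs>" for every governor currently in use.
# $1: base directory (defaults to /sys/devices/system/cpu).
# governor_summary is a hypothetical helper written for this note.
governor_summary() {
    base=${1:-/sys/devices/system/cpu}
    cat "$base"/cpu[0-9]*/cpufreq/scaling_governor 2>/dev/null |
        LC_ALL=C sort | uniq -c | awk '{ print $2, $1 }'
}
```

On the host above this would be expected to print "performance 16" after the cpupower command. A governor set with cpupower frequency-set is a runtime setting and is not kept across reboots, so it has to be reapplied at boot if it should persist.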