I. Environment

OS version: Ubuntu 20.04.2 LTS

tshark: tshark_3.2.3-1

Logstash: logstash-7.12.0-amd64.deb

Elasticsearch: elasticsearch-7.12.0-amd64.deb

Kibana: kibana-7.12.0-amd64.deb

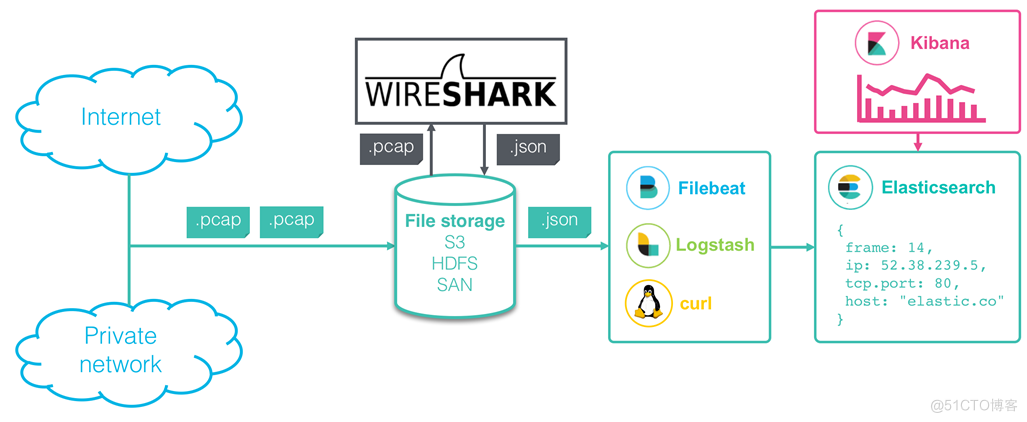

系统结构图参考:

II. Software preparation

1. Download Logstash:

# wget https://artifacts.elastic.co/downloads/logstash/logstash-7.12.0-amd64.deb

2. Download Elasticsearch:

# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.12.0-amd64.deb

3. Download Kibana:

# wget https://artifacts.elastic.co/downloads/kibana/kibana-7.12.0-amd64.deb

4. Clone the tsharkVM Git repository (mainly for one processing script it contains):

# git clone https://github.com/H21lab/tsharkVM.git

5. Switch Ubuntu's apt sources to a mirror in China:

# cd /etc/apt/

# mv sources.list sources.list-

# vim sources.list

# cat sources.list

deb https://mirrors.ustc.edu.cn/ubuntu/ focal main restricted universe multiverse

deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu/ focal-security main restricted universe multiverse

deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-security main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-updates main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

## Not recommended

# deb https://mirrors.ustc.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse

# deb-src https://mirrors.ustc.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse

6. Update the system:

# apt update && apt upgrade && reboot
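The three package downloads in steps 1 to 3 could also be scripted. The sketch below builds the same URLs as the wget commands above and, as an assumption not taken from the original steps, also fetches a `.sha512` companion file for each package and verifies it. The function names are made up for illustration.

```shell
# Version and URL layout are taken from the wget commands above.
ELK_VERSION="7.12.0"
BASE_URL="https://artifacts.elastic.co/downloads"

# Print the download URL for one product (logstash, elasticsearch, kibana).
artifact_url() {
    printf '%s/%s/%s-%s-amd64.deb\n' "$BASE_URL" "$1" "$1" "$ELK_VERSION"
}

# Download each package and its checksum file, then verify the package.
# Assumption: a "<package>.sha512" file is published next to each package.
download_elk() {
    for product in logstash elasticsearch kibana; do
        url=$(artifact_url "$product")
        wget -nc "$url" "$url.sha512" || return 1
        sha512sum -c "${url##*/}.sha512" || return 1
    done
}
```

Running `download_elk` in an empty directory should leave the three `.deb` files there, or stop at the first failed download or checksum mismatch.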

III. Software installation

1. Install tshark and Java

# apt install tshark

The following packages were automatically installed and are no longer required:

eatmydata libeatmydata1 python3-importlib-metadata python3-jinja2 python3-json-pointer python3-jsonpatch python3-jsonschema python3-markupsafe python3-more-itertools python3-pyrsistent python3-zipp

Use 'apt autoremove' to remove them.

The following additional packages will be installed:

libc-ares2 libjbig0 libjpeg-turbo8 libjpeg8 liblua5.2-0 libsbc1 libsmi2ldbl libsnappy1v5 libspandsp2 libspeexdsp1 libssh-gcrypt-4 libtiff5 libwebp6 libwireshark-data libwireshark13 libwiretap10 libwsutil11 wireshark-common

Suggested packages:

snmp-mibs-downloader geoipupdate geoip-database geoip-database-extra libjs-leaflet libjs-leaflet.markercluster wireshark-doc

The following NEW packages will be installed:

libc-ares2 libjbig0 libjpeg-turbo8 libjpeg8 liblua5.2-0 libsbc1 libsmi2ldbl libsnappy1v5 libspandsp2 libspeexdsp1 libssh-gcrypt-4 libtiff5 libwebp6 libwireshark-data libwireshark13 libwiretap10 libwsutil11 tshark wireshark-common

0 upgraded, 19 newly installed, 0 to remove and 0 not upgraded.

Need to get 18.8 MB of archives.

After this operation, 109 MB of additional disk space will be used.

Do you want to continue? [Y/n] y

# apt install openjdk-11-jre-headless

The following packages were automatically installed and are no longer required:

eatmydata libeatmydata1 python3-importlib-metadata python3-jinja2 python3-json-pointer python3-jsonpatch python3-jsonschema python3-markupsafe python3-more-itertools python3-pyrsistent python3-zipp

Use 'apt autoremove' to remove them.

The following additional packages will be installed:

ca-certificates-java fontconfig-config fonts-dejavu-core java-common libavahi-client3 libavahi-common-data libavahi-common3 libcups2 libfontconfig1 libgraphite2-3 libharfbuzz0b liblcms2-2 libpcsclite1

Suggested packages:

default-jre cups-common liblcms2-utils pcscd libnss-mdns fonts-dejavu-extra fonts-ipafont-gothic fonts-ipafont-mincho fonts-wqy-microhei | fonts-wqy-zenhei fonts-indic

The following NEW packages will be installed:

ca-certificates-java fontconfig-config fonts-dejavu-core java-common libavahi-client3 libavahi-common-data libavahi-common3 libcups2 libfontconfig1 libgraphite2-3 libharfbuzz0b liblcms2-2 libpcsclite1 openjdk-11-jre-headless

0 upgraded, 14 newly installed, 0 to remove and 0 not upgraded.

Need to get 39.4 MB of archives.

After this operation, 177 MB of additional disk space will be used.

Do you want to continue? [Y/n] y
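After both installs it can be worth confirming that the commands are actually on the PATH before moving on. A minimal sketch follows; the helper name `missing_cmds` is hypothetical and not part of any package.

```shell
# Print the name of every given command that cannot be found on the PATH.
missing_cmds() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
    done
}
```

For this setup the call would be `missing_cmds tshark java`, which prints nothing when both are installed.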

2. Install ELK

# dpkg -i elasticsearch-7.12.0-amd64.deb kibana-7.12.0-amd64.deb logstash-7.12.0-amd64.deb

Preparing to unpack elasticsearch-7.12.0-amd64.deb ...

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Unpacking elasticsearch (7.12.0) ...

Selecting previously unselected package kibana.

Preparing to unpack kibana-7.12.0-amd64.deb ...

Unpacking kibana (7.12.0) ...

Selecting previously unselected package logstash.

Preparing to unpack logstash-7.12.0-amd64.deb ...

Unpacking logstash (1:7.12.0-1) ...

Setting up elasticsearch (7.12.0) ...

Created elasticsearch keystore in /etc/elasticsearch/elasticsearch.keystore

Setting up kibana (7.12.0) ...

Creating kibana group... OK

Creating kibana user... OK

Created Kibana keystore in /etc/kibana/kibana.keystore

Setting up logstash (1:7.12.0-1) ...

Using bundled JDK: /usr/share/logstash/jdk

Using provided startup.options file: /etc/logstash/startup.options

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/pleaserun-0.0.31/lib/pleaserun/platform/base.rb:112: warning: constant ::Fixnum is deprecated

Successfully created system startup script for Logstash

IV. ELK configuration

1. Elasticsearch configuration

# cd /etc/elasticsearch/

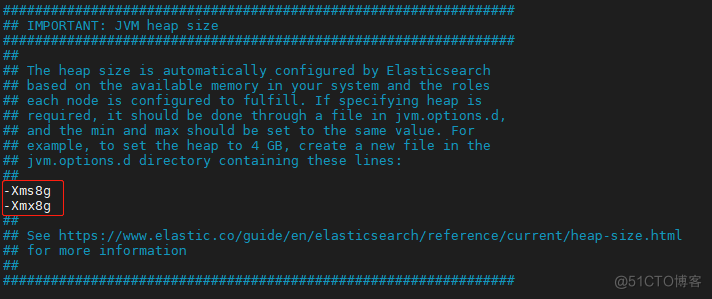

# vim jvm.options

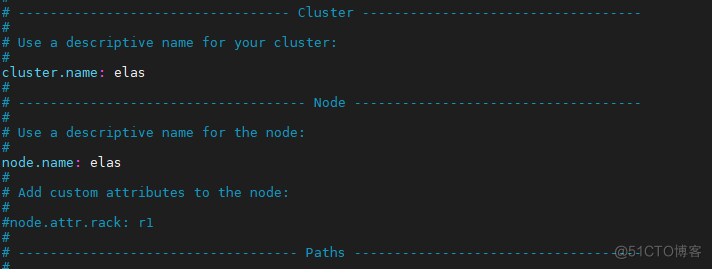

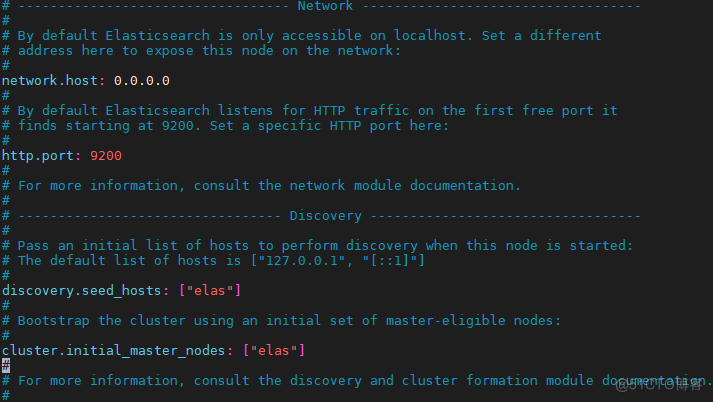

# vim elasticsearch.yml
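The exact edits to elasticsearch.yml are only visible in the screenshots. As a rough sketch, a single-node setup that listens on all interfaces (which is what the port listing further down suggests) might use settings like the ones below. The values are assumptions, not the author's actual file, and the function name is hypothetical.

```shell
# Append single-node settings to the Elasticsearch config file given as $1.
# Assumed values: inferred from the listening ports, not copied from the
# original elasticsearch.yml.
write_es_settings() {
    cat >> "$1" <<'EOF'
network.host: 0.0.0.0
http.port: 9200
discovery.type: single-node
EOF
}
```

A call such as `write_es_settings /etc/elasticsearch/elasticsearch.yml` appends the three lines; keeping a backup copy of the file first is a sensible precaution.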

# systemctl daemon-reload

Restart the service:

# systemctl restart elasticsearch.service

Check the service status:

# systemctl status elasticsearch.service

● elasticsearch.service - Elasticsearch

Loaded: loaded (/lib/systemd/system/elasticsearch.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2021-08-13 02:55:08 UTC; 4h 7min ago

Docs: https://www.elastic.co

Main PID: 271265 (java)

Tasks: 99 (limit: 19109)

Memory: 8.6G

CGroup: /system.slice/elasticsearch.service

├─271265 /usr/share/elasticsearch/jdk/bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -XX:+AlwaysPreT>

└─271470 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

Aug 13 02:54:48 elas systemd[1]: Starting Elasticsearch...

Aug 13 02:55:08 elas systemd[1]: Started Elasticsearch.

Check the listening ports:

# netstat -tlunp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 661/systemd-resolve

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 780/sshd: /usr/sbin

tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 265188/sshd: root@p

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 270722/node

tcp6 0 0 :::9300 :::* LISTEN 271265/java

tcp6 0 0 :::22 :::* LISTEN 780/sshd: /usr/sbin

tcp6 0 0 ::1:6010 :::* LISTEN 265188/sshd: root@p

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 270442/java

tcp6 0 0 :::9200 :::* LISTEN 271265/java

udp 0 0 127.0.0.53:53 0.0.0.0:* 661/systemd-resolve
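Besides systemctl and netstat, Elasticsearch can be asked directly whether it is healthy. The sketch below assumes the node answers on localhost:9200 without authentication; the helper names are made up for illustration.

```shell
# Extract the "status" value (green, yellow or red) from a cluster health
# JSON document read on stdin.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Query the local node. Assumes Elasticsearch listens on localhost:9200
# with security disabled.
check_es() {
    curl -s "http://localhost:9200/_cluster/health" | health_status
}
```

On a single node, `check_es` typically prints `green` or `yellow`; an empty result suggests the service is not reachable yet.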

2. Logstash configuration

# cd /etc/logstash/

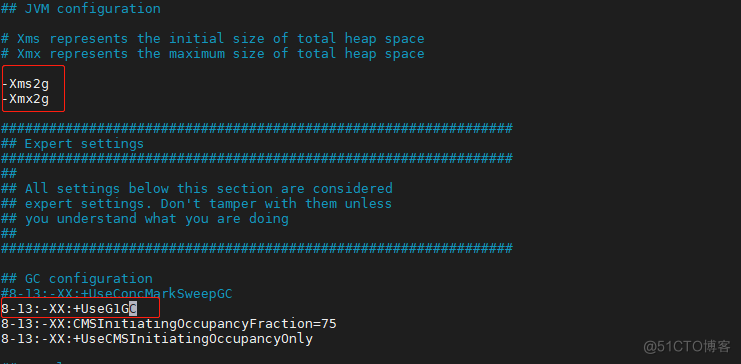

# vim jvm.options
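The Logstash status output later in this section shows the JVM running with `-Xms2g -Xmx2g`, so the jvm.options edit presumably sets the heap size. A small sketch of doing that without an editor follows; the function name `set_heap` is hypothetical.

```shell
# Rewrite the -Xms/-Xmx lines of a jvm.options file ($1) to the heap size
# given as $2. The untouched original is kept as "<file>.bak".
set_heap() {
    file=$1 size=$2
    sed -i.bak -e "s/^-Xms.*/-Xms$size/" -e "s/^-Xmx.*/-Xmx$size/" "$file"
}
```

For the values seen in the status output, the call would be `set_heap /etc/logstash/jvm.options 2g`.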

# cd conf.d/

Add a pipeline configuration file that feeds the tshark data into Elasticsearch:

# vim tshark-elas.conf

# cat tshark-elas.conf

input {
    file {
        path => "/data/packets.json"
        start_position => "beginning"
    }
}

filter {
    # Drop Elasticsearch Bulk API control lines
    if ([message] =~ "{\"index") {
        drop {}
    }

    json {
        source => "message"
        remove_field => "message"
    }

    # Extract innermost network protocol
    grok {
        match => {
            "[layers][frame][frame_frame_protocols]" => "%{WORD:protocol}$"
        }
    }

    date {
        match => [ "timestamp", "UNIX_MS" ]
    }
}

output {
    elasticsearch {
        hosts => "localhost:9200"
        index => "packets-%{+YYYY-MM-dd}"
        # document_type => "pcap_file"
        manage_template => false
    }
}

# systemctl daemon-reload
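The first conditional in tshark-elas.conf exists because `tshark -T ek` writes Bulk API control lines between the packet documents, and those must not be indexed as events. The same idea can be previewed on the command line; this is a sketch with a hypothetical helper name, and the sample lines in the usage note are illustrative rather than real capture output.

```shell
# Keep only the packet documents from `tshark -T ek` output on stdin,
# mirroring the Logstash conditional that drops any line containing {"index.
drop_bulk_control_lines() {
    grep -vF '{"index'
}
```

For example, `drop_bulk_control_lines < /data/packets.json | head -n 1` shows the first document that Logstash would pass on to the json filter.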

Restart the service:

# systemctl restart logstash.service

Check the service status:

# systemctl status logstash.service

● logstash.service - logstash

Loaded: loaded (/etc/systemd/system/logstash.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2021-08-13 02:10:24 UTC; 5h 17min ago

Main PID: 270442 (java)

Tasks: 72 (limit: 19109)

Memory: 2.6G

CGroup: /system.slice/logstash.service

└─270442 /usr/share/logstash/jdk/bin/java -Xms2g -Xmx2g -XX:+UseG1GC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.a>

Aug 13 02:10:46 elas logstash[270442]: [2021-08-13T02:10:46,739][WARN ][logstash.outputs.elasticsearch][main] Restored connection to ES instance {:url=>"http://loc>

Aug 13 02:10:46 elas logstash[270442]: [2021-08-13T02:10:46,798][INFO ][logstash.outputs.elasticsearch][main] ES Output version determined {:es_version=>7}

Aug 13 02:10:46 elas logstash[270442]: [2021-08-13T02:10:46,802][WARN ][logstash.outputs.elasticsearch][main] Detected a 6.x and above cluster: the `type` event fi>

Aug 13 02:10:46 elas logstash[270442]: [2021-08-13T02:10:46,857][INFO ][logstash.outputs.elasticsearch][main] New Elasticsearch output {:class=>"LogStash::Outputs:>

Aug 13 02:10:47 elas logstash[270442]: [2021-08-13T02:10:47,110][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers>

Aug 13 02:10:48 elas logstash[270442]: [2021-08-13T02:10:48,070][INFO ][logstash.javapipeline ][main] Pipeline Java execution initialization time {"seconds"=>0.>

Aug 13 02:10:48 elas logstash[270442]: [2021-08-13T02:10:48,325][INFO ][logstash.inputs.file ][main] No sincedb_path set, generating one based on the "path" se>

Aug 13 02:10:48 elas logstash[270442]: [2021-08-13T02:10:48,352][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

Aug 13 02:10:48 elas logstash[270442]: [2021-08-13T02:10:48,409][INFO ][filewatch.observingtail ][main][6efc126c2ab1b0e66a90b494d9a18ae7dc55003ac5f9addc874a5130d9>

Aug 13 02:10:48 elas logstash[270442]: [2021-08-13T02:10:48,417][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_>

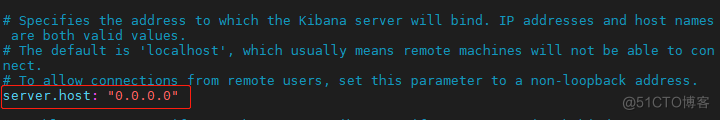
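The grok filter in tshark-elas.conf takes the last word of the `frame_frame_protocols` field as the `protocol` value. A quick way to see what that yields for a given protocol chain is sketched below; the function name is hypothetical, and the character class stands in for grok's `WORD` pattern.

```shell
# Print the trailing word of a colon-separated protocol chain, which is
# what the "%{WORD:protocol}$" grok pattern captures.
innermost_protocol() {
    printf '%s\n' "$1" | grep -oE '[[:alnum:]_]+$'
}
```

A chain such as `eth:ethertype:ip:udp:dns` therefore ends up with `dns` in the `protocol` field.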

3. Kibana configuration

# cd /etc/kibana/

# vim kibana.yml
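The kibana.yml changes are not reproduced in the text. Since Kibana ends up listening on 0.0.0.0:5601 in the port listing below, the edit likely resembles the following sketch. The values are assumptions rather than the author's actual file, and the function name is hypothetical.

```shell
# Append basic server settings to the Kibana config file given as $1.
# Assumed values: inferred from the listening ports, not copied from the
# original kibana.yml.
write_kibana_settings() {
    cat >> "$1" <<'EOF'
server.port: 5601
server.host: "0.0.0.0"
elasticsearch.hosts: ["http://localhost:9200"]
EOF
}
```

It would be applied with `write_kibana_settings /etc/kibana/kibana.yml`, followed by the service restart shown next.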

# systemctl daemon-reload

Restart the service:

# systemctl restart kibana.service

Check the service status:

# systemctl status kibana.service

● kibana.service - Kibana

Loaded: loaded (/etc/systemd/system/kibana.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2021-08-13 02:19:08 UTC; 5h 16min ago

Docs: https://www.elastic.co

Main PID: 270722 (node)

Tasks: 11 (limit: 19109)

Memory: 267.2M

CGroup: /system.slice/kibana.service

└─270722 /usr/share/kibana/bin/../node/bin/node /usr/share/kibana/bin/../src/cli/dist --logging.dest=/var/log/kibana/kibana.log --pid.file=/run/kibana>

Aug 13 02:19:08 elas systemd[1]: Started Kibana.

Check the listening ports:

# netstat -tlunp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 661/systemd-resolve

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 780/sshd: /usr/sbin

tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 265188/sshd: root@p

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 270722/node

tcp6 0 0 :::9300 :::* LISTEN 271265/java

tcp6 0 0 :::22 :::* LISTEN 780/sshd: /usr/sbin

tcp6 0 0 ::1:6010 :::* LISTEN 265188/sshd: root@p

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 270442/java

tcp6 0 0 :::9200 :::* LISTEN 271265/java

udp 0 0 127.0.0.53:53 0.0.0.0:* 661/systemd-resolve
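Reading the netstat table by eye works, but the check can also be automated. The sketch below parses `netstat -tln` style output and reports which expected ports are missing; both helper names are made up for illustration.

```shell
# Read netstat-style output on stdin and print each listening TCP port
# once, in numeric order.
listening_ports() {
    awk '$1 ~ /^tcp/ && $6 == "LISTEN" { n = split($4, a, ":"); print a[n] }' |
        sort -nu
}

# Print every expected port (given as arguments) that is not listening,
# based on netstat-style output on stdin.
missing_ports() {
    ports=$(listening_ports)
    for p in "$@"; do
        printf '%s\n' "$ports" | grep -qx "$p" || printf '%s\n' "$p"
    done
}
```

With all three services up, `netstat -tln | missing_ports 9200 9300 5601 9600` should print nothing.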

V. Configure the tshark packet template

1. Export the elastic-mapping:

# cd tsharkVM/Kibana

# tshark -G elastic-mapping --elastic-mapping-filter frame,eth,ip,udp,tcp,dns > custom_tshark_mapping.json

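2. Deduplicate fields and fix field types. (After the script runs, some field types will still not match. Logstash reports them while importing data; adjust the mapping according to those messages and import again.)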

# ruby ./Public/process_tshark_mapping_json.rb

3. Import the template file:

# curl -X PUT "localhost:9200/_index_template/packets_template" -H 'Content-Type: application/json' -d@custom_tshark_mapping_deduplicated.json

{"acknowledged":true}
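When the template import is scripted, the `{"acknowledged":true}` response is the thing to test for, since a rejected mapping returns an error document instead. A minimal sketch, with hypothetical function names and the same URL as the curl command above:

```shell
# Succeed only if the JSON response on stdin reports "acknowledged": true.
is_acknowledged() {
    grep -q '"acknowledged"[[:space:]]*:[[:space:]]*true'
}

# Upload the mapping file given as $1 as the packets_template index
# template and fail unless Elasticsearch acknowledges it.
put_template() {
    curl -s -X PUT "localhost:9200/_index_template/packets_template" \
        -H 'Content-Type: application/json' -d @"$1" | is_acknowledged
}
```

A call like `put_template custom_tshark_mapping_deduplicated.json || echo "template rejected"` makes a failed import visible right away.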


VI. Import data

1. Use tshark to convert the capture file into Elasticsearch bulk (ek) format; Logstash then ships it to Elasticsearch:

# tshark -T ek -x -r 1030.pcap > /data/packets.json

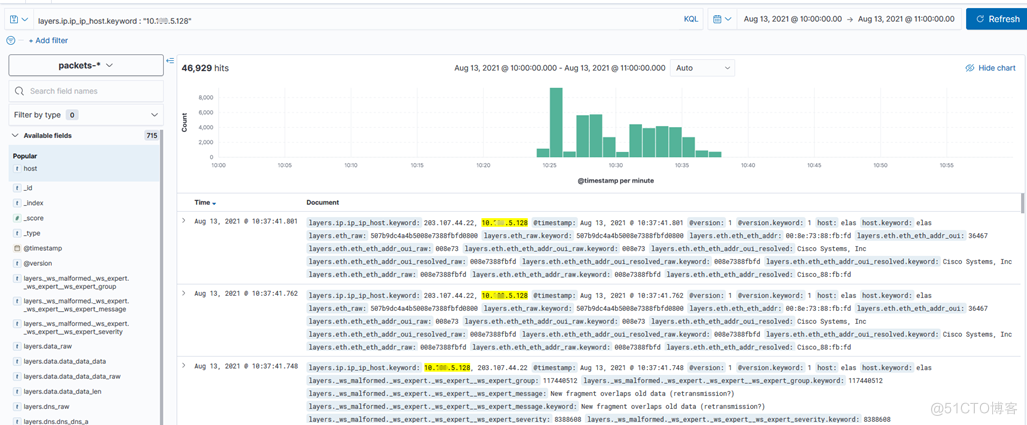

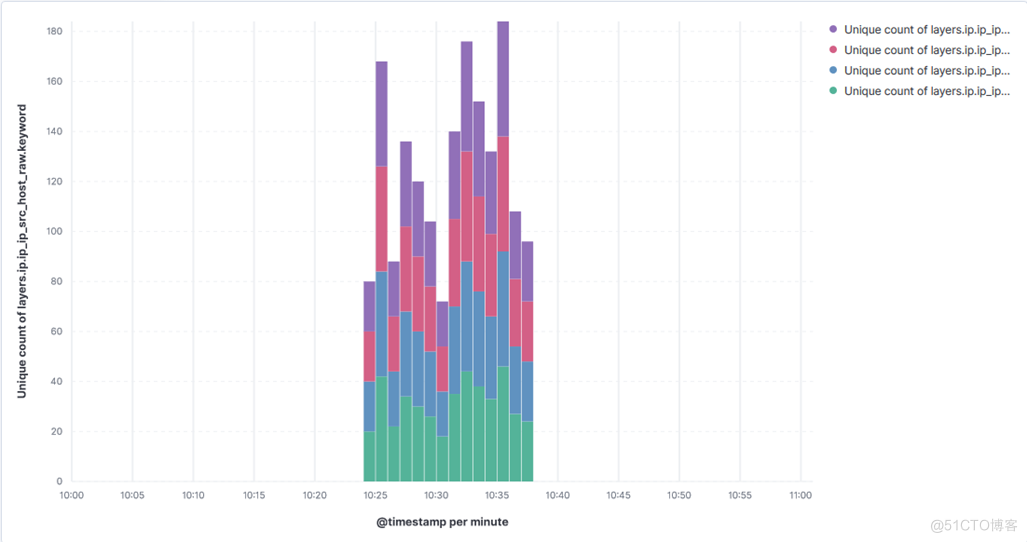

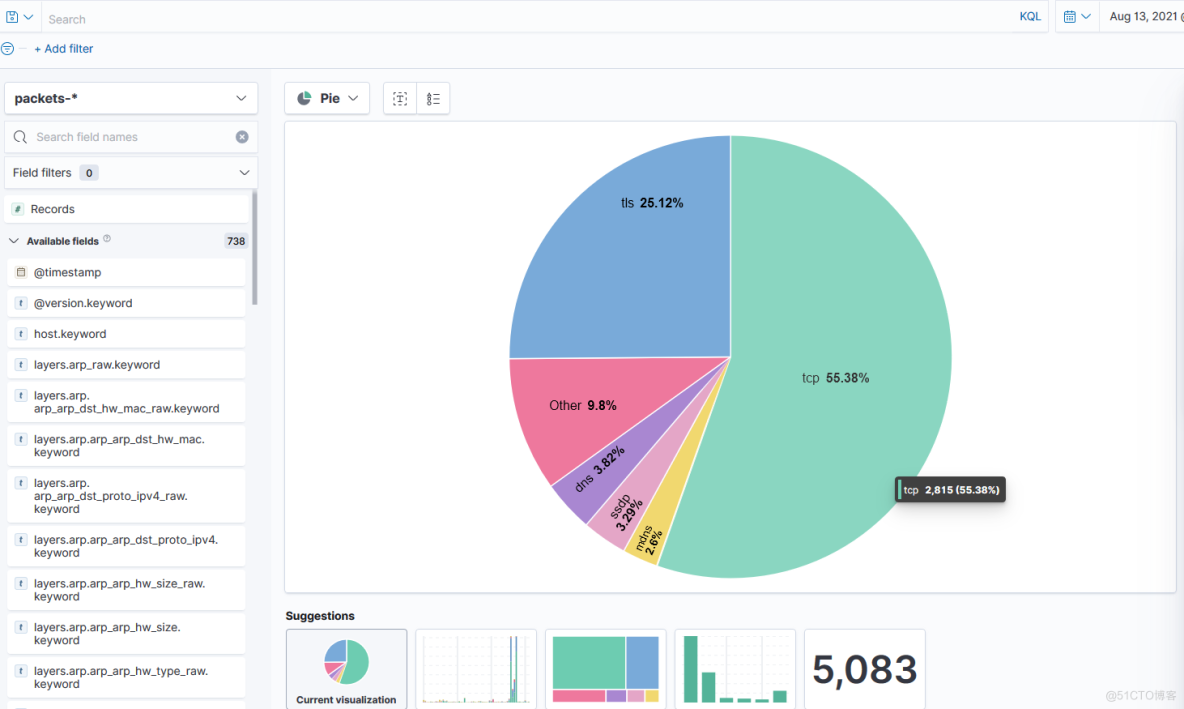

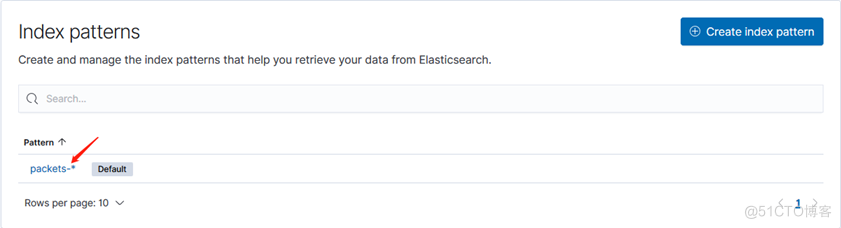

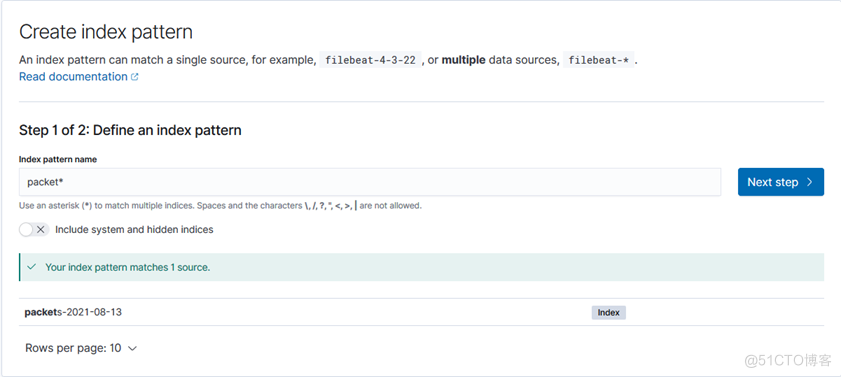

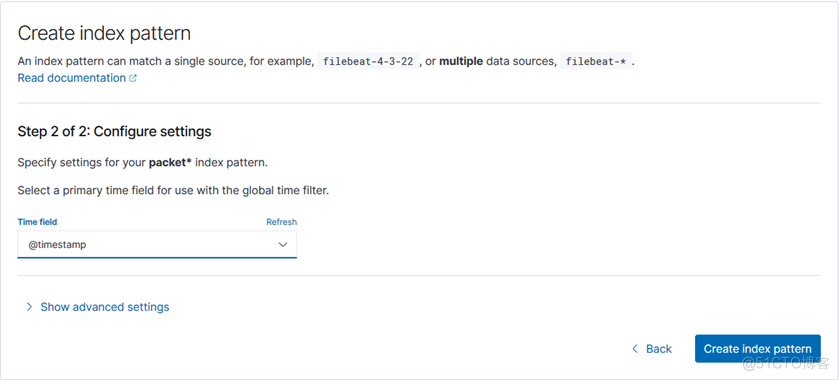

2. Create an index pattern in Kibana:

Customize the views and visualizations as needed.
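To confirm that the import in step 1 is complete, the number of packet documents in /data/packets.json can be compared with what Elasticsearch reports for the `packets-*` indices. This is a sketch under the assumption that Elasticsearch is reachable on localhost:9200 without authentication; the function names are hypothetical.

```shell
# Count packet documents in a `tshark -T ek` file ($1), ignoring the
# Bulk API control lines that Logstash drops.
count_ek_docs() {
    grep -vcF '{"index' "$1"
}

# Ask Elasticsearch how many documents the packets-* indices hold.
count_indexed_docs() {
    curl -s "localhost:9200/packets-*/_count" |
        sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9]*\).*/\1/p'
}
```

Once Logstash has caught up, `count_ek_docs /data/packets.json` and `count_indexed_docs` should print the same number, provided no events were rejected for mapping conflicts.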