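In this series of articles, I give a brief summary of what I recently learned about using the Elastic Stack to build a monitoring environment for Kubernetes, both for later reference and to deepen my own understanding.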

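A brief introduction to ELK

ELK is an acronym formed from the initials of three open-source projects: Elasticsearch, Logstash, and Kibana. Filebeat, one of the Beats, appeared later as a lightweight replacement for Logstash's data collection role. Filebeat is a lightweight shipper for forwarding and centralizing log data: it monitors the log files or locations you specify, collects log events, and forwards them to Elasticsearch or Logstash for indexing. Logstash is a free and open server-side data processing pipeline that ingests data from multiple sources and transforms it. Elasticsearch is the distributed search and analytics engine at the core of the Elastic Stack: a near-real-time search platform built on Lucene that is accessed through a RESTful API and provides near-real-time search and analytics for all types of data.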

Create the namespace

My environment is a Kubernetes v1.20.4 cluster. For ease of management, I deploy all the resources involved into the elastic namespace.

    # kubectl create ns elastic
    namespace/elastic created

Configuration file (elasticsearch-configmap.yml)

First, create a ConfigMap resource object that provides the es configuration file.

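Note: node.name is best set to a fully qualified domain name that actually resolves. At a minimum it must match the entries used for discovery.seed_hosts (the same list is also used for cluster.initial_master_nodes, whose entries must equal the node names exactly); otherwise the es nodes will fail to form a cluster.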
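As a rough sketch of how the node names and the seed list line up, the helper below builds the comma-separated list of per-pod DNS names that a StatefulSet behind a headless Service would get. `build_seed_hosts` is a hypothetical function of my own, and the `cluster.local` suffix is assumed to be the cluster domain, as in the configuration used in this article.

```shell
# Build the comma-separated list of per-pod DNS names for a StatefulSet
# behind a headless Service:
#   <statefulset>-<ordinal>.<service>.<namespace>.svc.cluster.local
# build_seed_hosts is a hypothetical helper, not part of kubectl or Elasticsearch.
build_seed_hosts() {
  set_name=$1
  service=$2
  namespace=$3
  replicas=$4
  hosts=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    host="${set_name}-${i}.${service}.${namespace}.svc.cluster.local"
    if [ -z "$hosts" ]; then
      hosts=$host
    else
      hosts="${hosts},${host}"
    fi
    i=$((i + 1))
  done
  printf '%s\n' "$hosts"
}
```

Calling `build_seed_hosts elasticsearch es-svc elastic 3` should produce the same value that is passed to the pods as NODE_LIST, and each entry has the same form as the node.name template in the ConfigMap.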

    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: elastic
      name: es-config
      labels:
        app: elasticsearch
        role: es
    data:
      elasticsearch.yml: |-
        cluster.name: ${CLUSTER_NAME}
        node.name: ${NODE_NAME}.es-svc.elastic.svc.cluster.local
        path.data: ${PATH_DATA}
        path.logs: ${PATH_LOGS}
        discovery.seed_hosts: ${NODE_LIST}
        cluster.initial_master_nodes: ${NODE_LIST}
        network.host: 0.0.0.0
        node:
          master: true
          data: true
        xpack.security.enabled: true
        xpack.monitoring.collection.enabled: true
        xpack.monitoring.collection.interval: 30s

Deployment file (elasticsearch-statefulset.yml)

The es cluster has several data nodes, each node holds different data, and each node's data must be stored separately. I therefore deploy it with the `statefulset` controller and use `volumeClaimTemplates` to create a separate volume for each pod, with `nfs` as the backend storage.

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      namespace: elastic
      name: elasticsearch
      labels:
        app: elasticsearch
        role: es
    spec:
      serviceName: "es-svc"
      replicas: 3
      selector:
        matchLabels:
          app: elasticsearch
          role: es
      template:
        metadata:
          labels:
            app: elasticsearch
            role: es
        spec:
          # Pod anti-affinity: spread pods labeled role=es across different nodes
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: "role"
                    operator: In
                    values:
                    - "es"
                topologyKey: "kubernetes.io/hostname"
          containers:
          - image: elasticsearch:7.8.0
            imagePullPolicy: IfNotPresent
            name: elasticsearch
            env:
            - name: CLUSTER_NAME
              value: elasticsearch-cluster
            - name: PATH_DATA
              value: /opt/es/data
            - name: PATH_LOGS
              value: /usr/share/elasticsearch/logs
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: NODE_LIST
              value: "elasticsearch-0.es-svc.elastic.svc.cluster.local,elasticsearch-1.es-svc.elastic.svc.cluster.local,elasticsearch-2.es-svc.elastic.svc.cluster.local"
            - name: "ES_JAVA_OPTS"
              value: "-Xms1024m -Xmx1024m"
            ports:
            - containerPort: 9200
              name: http
            - containerPort: 9300
              name: transport
            volumeMounts:
            - mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              readOnly: true
              subPath: elasticsearch.yml
              name: config
            - mountPath: /opt/es/data
              name: es-data
            - mountPath: /usr/share/elasticsearch/logs
              name: es-logs
          volumes:
          - name: config
            configMap:
              name: es-config
      volumeClaimTemplates:
      - metadata:
          name: es-data
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: managed-nfs-storage
          resources:
            requests:
              storage: 10Gi
      - metadata:
          name: es-logs
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: managed-nfs-storage
          resources:
            requests:
              storage: 5Gi
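To make the effect of `volumeClaimTemplates` more concrete: a StatefulSet creates one PersistentVolumeClaim per template per pod, named `<template name>-<pod name>`. The sketch below only prints the claim names one would expect for this deployment; `expected_pvc_names` is a hypothetical helper of my own, not a kubectl feature.

```shell
# Print the PVC names a StatefulSet is expected to create:
#   <claim template name>-<statefulset name>-<ordinal>
# expected_pvc_names is a hypothetical helper for illustration only.
expected_pvc_names() {
  set_name=$1
  replicas=$2
  shift 2
  # The remaining arguments are the volumeClaimTemplates names.
  for template in "$@"; do
    i=0
    while [ "$i" -lt "$replicas" ]; do
      printf '%s-%s-%s\n' "$template" "$set_name" "$i"
      i=$((i + 1))
    done
  done
}
```

The output of `expected_pvc_names elasticsearch 3 es-data es-logs` can be compared with `kubectl -n elastic get pvc` once the StatefulSet has been created.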

Headless service (elasticsearch-service.yml)

Used for access from inside the cluster.

    ---
    kind: Service
    apiVersion: v1
    metadata:
      namespace: elastic
      name: es-svc
      labels:
        app: elasticsearch
        role: es
    spec:
      selector:
        app: elasticsearch
        role: es
      clusterIP: None
      ports:
      - name: http
        port: 9200
      - name: transport
        port: 9300

Expose externally (es-ingress.yml)

Configure an ingress to provide a domain name for external access (elastic.liheng.com).

    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      namespace: elastic
      name: es-ingress
    spec:
      rules:
      - host: elastic.liheng.com
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: es-svc
                port:
                  number: 9200

Deploy

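Create the four resource objects defined above:

    # kubectl apply -f elasticsearch-configmap.yml
    # kubectl apply -f elasticsearch-statefulset.yml
    # kubectl apply -f elasticsearch-service.yml
    # kubectl apply -f es-ingress.yml

If a container fails to start, check its startup log with kubectl -n elastic logs -f elasticsearch-0. A common cause is the error "[1] max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]". It can be resolved by adding init containers that adjust the system parameters before es starts. Keep in mind that a `ulimit` set in an init container applies only to that container's own shell and does not carry over to the es container, so it is the vm.max_map_count change that addresses this error: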

    ...
    initContainers:
    - name: init-vm-max-map
      image: busybox
      command: ["sysctl","-w","vm.max_map_count=262144"]
      securityContext:
        privileged: true
    - name: init-fd-ulimit
      image: busybox
      command: ["sh","-c","ulimit -n 65535"]
      securityContext:
        privileged: true
    ...

Check pod status
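For scripted checks, the pod listing can be reduced to a single number. This is a minimal sketch that assumes the default `kubectl get pod` table layout (NAME, READY, STATUS, ...); `count_not_ready` is a hypothetical helper of my own.

```shell
# Read a `kubectl get pod` table on stdin and print how many pods are
# either not fully ready (READY is not n/n) or not in the Running state.
# count_not_ready is a hypothetical helper; it assumes the default columns.
count_not_ready() {
  awk 'NR > 1 {
         split($2, ready, "/")
         if (ready[1] != ready[2] || $3 != "Running") n++
       }
       END { print n + 0 }'
}
```

A possible use is `kubectl -n elastic get pod | count_not_ready`, which should print 0 once all three es pods are up.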

    # kubectl -n elastic get pod
    NAME              READY   STATUS    RESTARTS   AGE
    elasticsearch-0   1/1     Running   0          4m50s
    elasticsearch-1   1/1     Running   0          4m6s
    elasticsearch-2   1/1     Running   0          117s

Generate passwords

Because we enabled the xpack security module, we need to generate the initial passwords with the following command:

    # kubectl -n elastic exec -it elasticsearch-0 -- bin/elasticsearch-setup-passwords auto -b
    Changed password for user apm_system
    PASSWORD apm_system = 7IujAS2g2rHLgmNddwqH
    Changed password for user kibana_system
    PASSWORD kibana_system = qUjxDUNuNGkbqBVyZfs8
    Changed password for user kibana
    PASSWORD kibana = qUjxDUNuNGkbqBVyZfs8
    Changed password for user logstash_system
    PASSWORD logstash_system = wToZIb0nafESAlxQJysa
    Changed password for user beats_system
    PASSWORD beats_system = YUqz4YUojyNxodJFcYb6
    Changed password for user remote_monitoring_user
    PASSWORD remote_monitoring_user = WQKNWUx2RD0so5WowLL8
    Changed password for user elastic
    PASSWORD elastic = F31k1cwR8Td1ulNUqnXW

Check the es cluster status
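Any scripted request to the cluster needs the `elastic` user's password from the generated output. As a small sketch, assuming that output was saved to a file and keeps the `PASSWORD <user> = <value>` line format shown in this article, a single password can be pulled out like this (`extract_password` is a hypothetical helper):

```shell
# Read saved elasticsearch-setup-passwords output on stdin and print the
# password of the user given as $1.
# extract_password is a hypothetical helper; it relies on the
# "PASSWORD <user> = <value>" line format.
extract_password() {
  awk -v user="$1" \
    '$1 == "PASSWORD" && $2 == user && $3 == "=" { print $4 }'
}
```

For example, `extract_password elastic < passwords.txt`, where `passwords.txt` is an assumed file name for the saved output.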

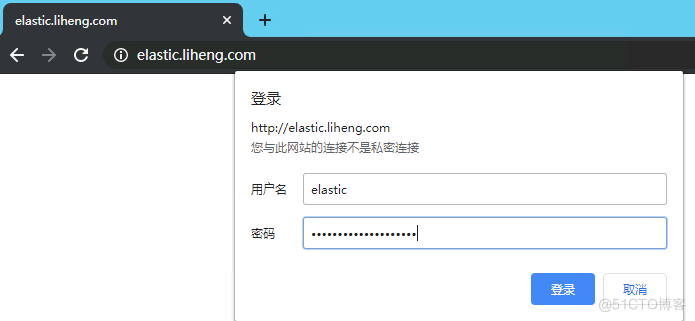

Open `http://elastic.liheng.com` in a browser.
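If the domain does not resolve from the machine you are working on, the ingress rule can still be exercised by sending the Host header directly to the ingress controller. This is only a sketch: `probe_ingress` is a hypothetical helper, and the controller address passed as `$1` is an assumption, since it is not given in this article.

```shell
# Request "/" through the ingress with the expected Host header and print
# only the HTTP status code.
# probe_ingress is a hypothetical helper; $1 is the address of the ingress
# controller, which is an assumption and not part of this article.
probe_ingress() {
  curl -s -o /dev/null -w '%{http_code}\n' \
    -H 'Host: elastic.liheng.com' "http://$1/"
}
```

With xpack.security.enabled set to true, I would expect a request without credentials to be rejected with 401, which at least shows that the request reached es through the ingress.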

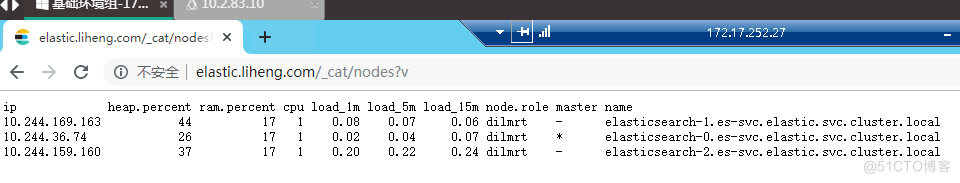

Open `http://elastic.liheng.com/_cat/nodes?v` in a browser.

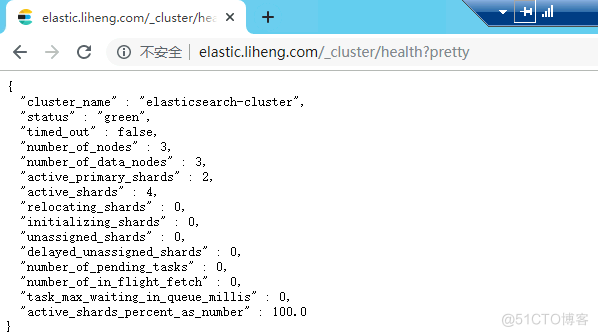

Open `http://elastic.liheng.com/_cluster/health?pretty` in a browser.

These responses confirm that the es cluster is running normally.
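The same health check can be scripted instead of read in a browser. The sketch below extracts the `status` field from a `_cluster/health` response without needing a JSON tool; `health_status` is a hypothetical helper and only handles the simple lowercase values (green, yellow, red) that this endpoint reports.

```shell
# Read a _cluster/health JSON response on stdin and print the value of its
# "status" field. Works for both compact and ?pretty output.
# health_status is a hypothetical helper, not an Elasticsearch tool.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}
```

A possible invocation, assuming the `elastic` password is held in an `ES_PASSWORD` variable of your own, is `curl -s -u "elastic:$ES_PASSWORD" 'http://elastic.liheng.com/_cluster/health' | health_status`.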