
This guide was written with reference to Li Zhenliang's Kubernetes tutorial; full credit to him.

A brief introduction to the core Kubernetes (k8s) components:

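- etcd: stores the state of the whole cluster; it is effectively the cluster's database.
- apiserver: the single entry point for all resource operations; provides authentication, authorization and access control, plus API registration and discovery.
- controller manager: maintains the cluster state, e.g. failure detection, auto-scaling and rolling updates.
- scheduler: schedules Pods onto suitable machines according to the configured scheduling policy.
- kubelet: manages the container lifecycle on its node, as well as Volumes and networking.
- Docker Engine: manages images and actually runs the Pods and containers.
- kube-proxy: provides in-cluster service discovery and load balancing for Services.
- flanneld: connects the networks of containers running on different nodes.
- Dashboard: a web GUI.

For the other components, see the official documentation.

A production Kubernetes cluster can be deployed in two ways: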

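1. kubeadm: simple and fast to deploy. Early kubeadm releases were unstable and not suitable for production (kubeadm reached general availability in Kubernetes 1.13); current releases can be used in production.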

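2. Installing from binary packages: more complex and tedious. Recommendation: for production, use binary packages wherever possible. kubeadm installs through scripts, and many components are scripted and containerized, so it is not always clear what is running; when something breaks, it is hard to diagnose and repair. In short, a kubeadm cluster is easy to install but harder to maintain, while with a binary install you understand every step yourself, which makes maintenance and troubleshooting easier: harder to deploy, easier to operate.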

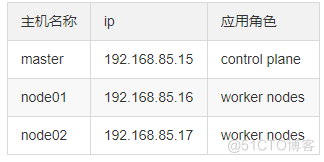

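I. Deployment environment: CentOS 7.6, 64-bit. Three hosts are used throughout: master (192.168.85.15), node01 (192.168.85.16) and node02 (192.168.85.17).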

II. Services to install

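On the master node:
1. etcd (one member of the etcd cluster)
2. kube-apiserver (core Kubernetes component)
3. kube-controller-manager (core Kubernetes component)
4. kube-scheduler (core Kubernetes component)
5. flanneld (network component)
6. docker (optional)

On the node nodes (node01, node02):
1. etcd (one member of the etcd cluster)
2. kubelet
3. kube-proxy
4. flanneld (network component)
5. docker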

III. Disable swap on all nodes

Temporarily: swapoff -a
Permanently: edit /etc/fstab and comment out the swap entry.

IV. Download the binary packages

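Packages used: etcd-v3.3.10-linux-amd64.tar.gz, flannel-v0.10.0-linux-amd64.tar.gz, kubernetes-server-linux-amd64.tar.gz.

Stay on etcd v3.3.x: the etcdctl commands in this guide and flannel v0.10.0 both use the etcd v2 API, which etcd disables by default from v3.4 onward.

All of the download sites below are hosted overseas and can be extremely slow from mainland China; use a proxy if needed.
wget https://github.com/etcd-io/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz
wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz
Kubernetes: pick a version from https://github.com/kubernetes/kubernetes/releases (1.18 to 1.20 recommended). Be aware that many flags below come from older 1.1x releases: kube-scheduler and kube-controller-manager talk to the apiserver's insecure port 8080, which can no longer be enabled from 1.20, and kubectl run --replicas stopped working in 1.18. Check each flag against the release you install.

Download the certificate tools: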

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

V. Directory layout (create the following directories on master, node01 and node02; the install paths are up to you)

etcd directories:
mkdir -p /opt/etcd/{bin,ssl,cfg}
binaries: /opt/etcd/bin
certificates: /opt/etcd/ssl/
config files: /opt/etcd/cfg

Kubernetes directories:
mkdir -p /opt/kubernetes/{bin,ssl,cfg}
binaries: /opt/kubernetes/bin
certificates: /opt/kubernetes/ssl/
config files: /opt/kubernetes/cfg

VI. Generate the certificates (etcd and Kubernetes)

etcd certificates

vim ca-config.json
{ "signing": { "default": { "expiry": "87600h" }, "profiles": { "www": { "expiry": "87600h", "usages": [ "signing", "key encipherment", "server auth", "client auth" ] } } } }

vim ca-csr.json
{ "CN": "etcd CA", "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "GZ", "ST": "GZ" } ] }

Generate the CA certificate:
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

vim server-csr.json (the hosts list must contain the IP of every etcd member, with no trailing comma after the last entry, otherwise cfssl rejects the JSON)
{ "CN": "etcd", "hosts": [ "192.168.85.15", "192.168.85.16", "192.168.85.17" ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "GZ", "ST": "GZ" } ] }

Generate the server certificate:
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Copy the generated files into /opt/etcd/ssl/ on master, node01 and node02:
cp ca.csr ca-key.pem ca.pem server.csr server-key.pem server.pem /opt/etcd/ssl/
scp ca-key.pem ca.pem server-key.pem server.pem root@192.168.85.16:/opt/etcd/ssl/
scp ca-key.pem ca.pem server-key.pem server.pem root@192.168.85.17:/opt/etcd/ssl/

Kubernetes certificates

Work in a different directory from the etcd certificates, because the file names (ca.pem, server.pem and so on) are the same.

vim ca-config.json
{ "signing": { "default": { "expiry": "87600h" }, "profiles": { "kubernetes": { "expiry": "87600h", "usages": [ "signing", "key encipherment", "server auth", "client auth" ] } } } }

vim ca-csr.json
{ "CN": "kubernetes", "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "GZ", "ST": "GZ", "O": "k8s", "OU": "System" } ] }

Generate the CA certificate:
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

vim server-csr.json
{ "CN": "kubernetes", "hosts": [ "10.0.0.1", "127.0.0.1", "192.168.85.15", "192.168.85.16", "192.168.85.17", "kubernetes", "kubernetes.default", "kubernetes.default.svc", "kubernetes.default.svc.cluster", "kubernetes.default.svc.cluster.local" ], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "GZ", "ST": "GZ", "O": "k8s", "OU": "System" } ] }

Generate the server certificate:
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

Generate the admin certificate:
vim admin-csr.json
{ "CN": "admin", "hosts": [], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "GZ", "ST": "GZ", "O": "system:masters", "OU": "System" } ] }
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

Generate the kube-proxy certificate:
vim kube-proxy-csr.json
{ "CN": "system:kube-proxy", "hosts": [], "key": { "algo": "rsa", "size": 2048 }, "names": [ { "C": "CN", "L": "GZ", "ST": "GZ", "O": "k8s", "OU": "System" } ] }
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

Copy the generated certificates to /opt/kubernetes/ssl/ (master node):
cp admin.csr admin-key.pem admin.pem ca.csr ca-key.pem ca.pem \
  kube-proxy.csr kube-proxy-key.pem kube-proxy.pem server.csr server-key.pem server.pem /opt/kubernetes/ssl/

VII. etcd cluster configuration

Install etcd

Extract the binary package (same on master, node01 and node02):
tar -zxvf etcd-v3.3.10-linux-amd64.tar.gz
cp etcd-v3.3.10-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

etcd configuration on the master node

The data directory is /var/lib/etcd/default.etcd; change it to suit your environment.

vim /opt/etcd/cfg/etcd
#[Member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.85.15:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.85.15:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.85.15:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.85.15:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.85.15:2380,etcd02=https://192.168.85.16:2380,etcd03=https://192.168.85.17:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

Write the systemd unit for etcd on the master:
vim /usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd
ExecStart=/opt/etcd/bin/etcd \
  --name=${ETCD_NAME} \
  --data-dir=${ETCD_DATA_DIR} \
  --listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
  --listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
  --advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
  --initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
  --initial-cluster=${ETCD_INITIAL_CLUSTER} \
  --initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
  --initial-cluster-state=new \
  --cert-file=/opt/etcd/ssl/server.pem \
  --key-file=/opt/etcd/ssl/server-key.pem \
  --peer-cert-file=/opt/etcd/ssl/server.pem \
  --peer-key-file=/opt/etcd/ssl/server-key.pem \
  --trusted-ca-file=/opt/etcd/ssl/ca.pem \
  --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Start it:
systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd
When the first member starts, systemctl may block until a second member is up, because etcd only reports ready once the cluster has a quorum; start the other members promptly.

etcd configuration on node01

vim /opt/etcd/cfg/etcd
#[Member]
ETCD_NAME="etcd02"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.85.16:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.85.16:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.85.16:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.85.16:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.85.15:2380,etcd02=https://192.168.85.16:2380,etcd03=https://192.168.85.17:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

Create /usr/lib/systemd/system/etcd.service on node01 with exactly the same content as on the master, then start etcd:
systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd
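As the master and node01 files show, each member's etcd file differs only in ETCD_NAME and the IP address (node02 below follows the same pattern). A minimal sketch of rendering the file per node instead of editing it by hand; the function name gen_etcd_cfg is hypothetical, and the member list is assumed to be this guide's three hosts:

```bash
# Hypothetical helper: render the etcd EnvironmentFile read by etcd.service.
# Assumes the three-member layout used in this guide.
ETCD_CLUSTER="etcd01=https://192.168.85.15:2380,etcd02=https://192.168.85.16:2380,etcd03=https://192.168.85.17:2380"

# gen_etcd_cfg NAME IP OUTFILE
gen_etcd_cfg() {
  local name=$1 ip=$2 out=$3
  # Peer traffic on 2380, client traffic on 2379, as in the files above
  cat > "$out" <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

For example, on node01 you would run gen_etcd_cfg etcd02 192.168.85.16 /opt/etcd/cfg/etcd. The systemd unit is identical on every member, so this file is the only per-node difference.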

etcd configuration on node02

vim /opt/etcd/cfg/etcd
#[Member]
ETCD_NAME="etcd03"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.85.17:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.85.17:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.85.17:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.85.17:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.85.15:2380,etcd02=https://192.168.85.16:2380,etcd03=https://192.168.85.17:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

Create /usr/lib/systemd/system/etcd.service on node02 with exactly the same content as on the master, then start etcd:
systemctl daemon-reload
systemctl enable etcd
systemctl restart etcd

Query etcd to verify the cluster status (from any member):

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem \
  --endpoints="https://192.168.85.15:2379,https://192.168.85.16:2379,https://192.168.85.17:2379" \
  cluster-health

VIII. Install Docker on the nodes

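yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install docker-ce -y
curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://bc437cce.m.daocloud.io
systemctl start docker
systemctl enable docker
(The set_mirror.sh line configures a DaoCloud registry mirror to speed up image pulls from China; it is optional.)

IX. Deploy the Flannel network (Flannel joins the networks of the different nodes into one flat network; Docker's bridge uses the subnet Flannel assigns to its node, so containers on different nodes can reach each other. The steps are the same on every node.)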

1. Write the network range to be allocated into etcd (once, from any etcd member)

/opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem \
  --endpoints="https://192.168.85.15:2379,https://192.168.85.16:2379,https://192.168.85.17:2379" \
  set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

2. Install the flannel binaries (same on master, node01 and node02)

tar -zxvf flannel-v0.10.0-linux-amd64.tar.gz
mv flannel-v0.10.0-linux-amd64/{flanneld,mk-docker-opts.sh} /opt/kubernetes/bin

3. Write the Flannel config file (same on master, node01 and node02)

vim /opt/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.85.15:2379,https://192.168.85.16:2379,https://192.168.85.17:2379 --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem"

4. Write the systemd unit for flanneld:
vim /usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
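Flannel leases each host its own subnet out of the "Network" written to etcd in step 1. The config above does not set SubnetLen, so this sketch assumes flannel's default of /24 per host. The helpers (hypothetical names) just do the prefix arithmetic: a /16 holds 256 blocks of /24, each with 256 addresses, so this layout leaves room for roughly 250 nodes with roughly 250 container IPs each.

```bash
# Sketch: how a flannel "Network" splits into per-node subnets.
# Assumes SubnetLen=24 (flannel's default; not set explicitly in this guide).

# cidr_blocks NET_LEN SUB_LEN -> number of /SUB_LEN subnets inside a /NET_LEN network
cidr_blocks() {
  local net_len=$1 sub_len=$2
  if (( sub_len < net_len || sub_len > 32 )); then
    echo "invalid prefix lengths: /$net_len into /$sub_len" >&2
    return 1
  fi
  echo $(( 1 << (sub_len - net_len) ))
}

# block_size SUB_LEN -> number of addresses in one /SUB_LEN subnet
block_size() {
  echo $(( 1 << (32 - $1) ))
}
```

For the 172.17.0.0/16 network above, cidr_blocks 16 24 and block_size 24 both print 256.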

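5. Configure Docker to start with the flannel subnet. (Warning: do not do this on a node that already runs Docker containers for other workloads. Kubernetes nodes should be fresh machines with no existing Docker applications.)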

vim /usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
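This unit only picks up a subnet if /run/flannel/subnet.env defines DOCKER_NETWORK_OPTIONS, which the ExecStartPost line in flanneld.service (mk-docker-opts.sh) derives from the FLANNEL_SUBNET, FLANNEL_MTU and FLANNEL_IPMASQ values flanneld writes. The function below is a simplified, hypothetical re-implementation meant only to show that mapping; the real script handles more options, so keep using it in the unit.

```bash
# Hypothetical, simplified version of what mk-docker-opts.sh produces.
# docker_network_opts ENV_FILE  (pass an absolute path)
docker_network_opts() {
  (
    # Load FLANNEL_SUBNET / FLANNEL_MTU / FLANNEL_IPMASQ in a subshell
    . "$1"
    masq=true
    # flanneld --ip-masq already masquerades, so Docker must not do it too
    if [ "${FLANNEL_IPMASQ:-false}" = "true" ]; then
      masq=false
    fi
    printf 'DOCKER_NETWORK_OPTIONS=" --bip=%s --ip-masq=%s --mtu=%s"\n' \
      "$FLANNEL_SUBNET" "$masq" "$FLANNEL_MTU"
  )
}
```

On a running node, cat /run/flannel/subnet.env shows what the real script wrote.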

6. Restart flannel and Docker:

systemctl daemon-reload
systemctl start flanneld
systemctl enable flanneld
systemctl restart docker

7. Check the IP addresses to verify that it took effect:

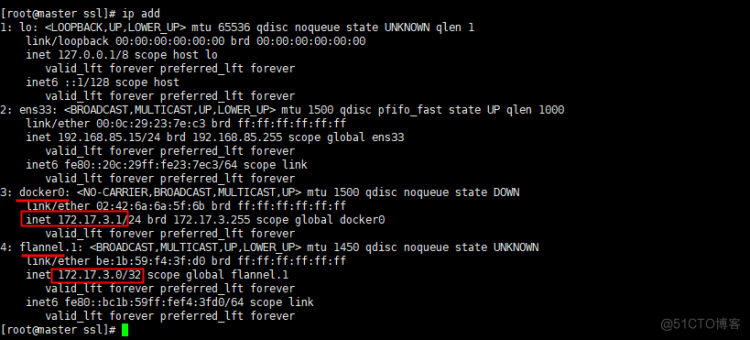

docker0 now has an address in the subnet flannel assigned to this node.
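To check this from a script instead of by eye, here is a pure-bash sketch (hypothetical helper names) that tests whether an IPv4 address lies inside a CIDR range. The addresses in the example are illustrative, not taken from this cluster.

```bash
# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# ip_in_cidr IP NET/LEN -> exit status 0 if IP lies inside NET/LEN
ip_in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/}
  # Build the netmask; /0 matches everything
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

Every node's docker0 address should fall inside the flannel Network, e.g. ip_in_cidr 172.17.25.1 172.17.0.0/16 succeeds. On a node, docker0's address can be read with ip -4 -o addr show docker0.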

X. Deploy the master components (kube-apiserver, kube-scheduler and kube-controller-manager; kube-apiserver must be installed first)

Extract the binaries:
tar zxvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
cp kube-apiserver kube-scheduler kube-controller-manager kubectl /opt/kubernetes/bin
Optionally, create symlinks to them in /usr/bin.

Deploy kube-apiserver

Write the kube-apiserver config file:
vim /opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.85.15:2379,https://192.168.85.16:2379,https://192.168.85.17:2379 \
--bind-address=192.168.85.15 \
--insecure-bind-address=0.0.0.0 \
--secure-port=6443 \
--advertise-address=192.168.85.15 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--kubelet-https=true \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

Security note: --insecure-bind-address=0.0.0.0 serves the insecure HTTP port 8080, which skips authentication and authorization, on every interface, so anyone who can reach it has full control of the cluster. The scheduler and controller-manager below connect to 192.168.85.15:8080; outside a lab, bind the insecure port to 127.0.0.1 and point their --master at 127.0.0.1:8080 instead.

Write the systemd unit for kube-apiserver:
vim /usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

kube-apiserver reads --token-auth-file at startup and will not start without it, so create /opt/kubernetes/cfg/token.csv (step 1 of section XI) before starting it:
systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
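The first column of token.csv only has to be a hard-to-guess string. One way to produce it, sketched here with a hypothetical helper gen_token_csv, is to hex-encode 16 random bytes from /dev/urandom; the user name, UID and group are the ones this guide uses.

```bash
# gen_token_csv OUTFILE -> writes token.csv and prints the token it used
gen_token_csv() {
  local out=$1 token
  # 16 random bytes -> 32 lowercase hex characters
  token=$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$out"
  echo "$token"
}
```

Run gen_token_csv /opt/kubernetes/cfg/token.csv on the master before starting kube-apiserver, and reuse the printed value in the kubectl config set-credentials kubelet-bootstrap --token=... step of section XI.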

Check the kube-apiserver process:

[root@master source]# ps -ef |grep apiserver

root 104677 56865 0 16:09 pts/0 00:00:00 grep --color=auto apiserver

root 119376 1 1 Jul11 ? 00:55:39 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://192.168.85.15:2379,https://192.168.85.16:2379,https://192.168.85.17:2379 --bind-address=192.168.85.15 --insecure-bind-address=0.0.0.0 --secure-port=6443 --advertise-address=192.168.85.15 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem
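The --service-cluster-ip-range=10.0.0.0/24 shown above also explains why 10.0.0.1 is in the hosts list of the Kubernetes server-csr.json: kube-apiserver assigns the first address of that range to the built-in kubernetes Service, so in-cluster clients reach the API server at that IP and the server certificate must cover it. A small sketch (hypothetical function name) that computes that address for any range, assuming the range is given by its network address as in this guide:

```bash
# first_service_ip NET/LEN -> network address + 1 (e.g. 10.0.0.0/24 -> 10.0.0.1)
first_service_ip() {
  local IFS=. net=${1%/*}
  local -a o=($net)
  # Pack the four octets into one integer and add 1
  local n=$(( ((o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3]) + 1 ))
  # Unpack back into dotted-quad form
  printf '%d.%d.%d.%d\n' $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) $(( (n >> 8) & 255 )) $(( n & 255 ))
}
```

If you choose a different service range, regenerate the certificate with that range's first address. The 10.0.0.2 used later as clusterDNS in kubelet.config is only a convention for the DNS Service, not something the apiserver assigns automatically.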

Deploy kube-scheduler

Write the kube-scheduler config file:
vim /opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \
--v=4 \
--master=192.168.85.15:8080 \
--leader-elect"

Write the systemd unit for kube-scheduler:
vim /usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start it:
systemctl daemon-reload
systemctl enable kube-scheduler
systemctl restart kube-scheduler

Check the kube-scheduler process:
[root@master source]# ps -ef |grep scheduler

root 69747 1 0 11:23 ? 00:01:33 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=192.168.85.15:8080 --leader-elect

Deploy kube-controller-manager

Write the kube-controller-manager config file:
vim /opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=192.168.85.15:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
--experimental-cluster-signing-duration=87600h0m0s"

Write the systemd unit for kube-controller-manager (the variable in ExecStart must not be escaped, otherwise it is not expanded):
vim /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start it:
systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager

Check the kube-controller-manager process:

[root@master source]# ps -ef |grep kube-controller-manager

root 69770 1 1 11:23 ? 00:03:02 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=192.168.85.15:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --experimental-cluster-signing-duration=87600h0m0s
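Both the CA profiles (expiry 87600h) and --experimental-cluster-signing-duration=87600h0m0s mean 3650 days, about ten years, so the kubelet certificates signed by the controller-manager outlive the cluster's planned lifetime. A tiny sketch of that conversion (hypothetical helper; it only reads the whole-hour part of a Go-style duration string):

```bash
# duration_days DURATION -> whole days in the hour part of e.g. 87600h or 87600h0m0s
# (minutes and seconds are ignored in this sketch)
duration_days() {
  local hours=${1%%h*}
  if ! [[ $hours =~ ^[0-9]+$ ]]; then
    echo "no hour component in '$1'" >&2
    return 1
  fi
  echo $(( hours / 24 ))
}
```

For example, duration_days 87600h0m0s prints 3650.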

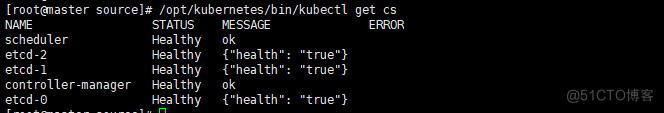

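All components have started successfully. Check the cluster component status with kubectl get cs (the result is shown in the screenshot above):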

XI. Deploy the node components (kube-proxy and kubelet)

On the master node:

1. Create the token file:
vim /opt/kubernetes/cfg/token.csv
674c457d4dcf2eefe4920d7dbb6bsoul,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
The columns of token.csv are: a random string (generate any value you like), the user name, the UID and the user group. kube-apiserver reads this file at startup and will not start without it; if you are only creating it now, restart kube-apiserver afterwards.

2. Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role:
kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

3. Create the kubelet bootstrapping kubeconfig:
export KUBE_APISERVER="https://192.168.85.15:6443"

4. Set the cluster parameters:
kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.85.15:6443 --kubeconfig=bootstrap.kubeconfig

5. Set the client authentication parameters (the token is the first column of token.csv above):
kubectl config set-credentials kubelet-bootstrap --token=674c457d4dcf2eefe4920d7dbb6bsoul --kubeconfig=bootstrap.kubeconfig

6. Set the context parameters:
kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

7. Make it the default context:
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

8. Create the kube-proxy kubeconfig file:
kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.85.15:6443 --kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

These steps create bootstrap.kubeconfig and kube-proxy.kubeconfig in the current directory. Copy both files to /opt/kubernetes/cfg on each node, and copy the kubelet and kube-proxy binaries from the extracted kubernetes/server/bin directory to /opt/kubernetes/bin on each node:
scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.85.16:/opt/kubernetes/cfg
scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.85.17:/opt/kubernetes/cfg
scp kubernetes/server/bin/{kubelet,kube-proxy} root@192.168.85.16:/opt/kubernetes/bin
scp kubernetes/server/bin/{kubelet,kube-proxy} root@192.168.85.17:/opt/kubernetes/bin

Install kubelet and kube-proxy on the nodes (same steps on node01 and node02; change the IPs to each node's own)

Deploy kubelet (change the IPs in the config to the node's own). Write the kubelet options file:

vim /opt/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--address=192.168.85.16 \
--hostname-override=192.168.85.16 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet.config \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
kubelet.kubeconfig does not exist yet; kubelet writes it after its bootstrap request is approved (section XII). Newer releases name the bootstrap flag --bootstrap-kubeconfig.

Write kubelet.config:
vim /opt/kubernetes/cfg/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.85.16
port: 10250
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local.
failSwapOn: false

Write the systemd unit for kubelet:
vim /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

Start it:
systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
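kubelet refuses to start when its cgroupDriver differs from Docker's, so the cgroupfs value above must match what Docker reports. A sketch of a pre-flight check (hypothetical function names) that reads the value from kubelet.config and compares it with a driver name passed in:

```bash
# kubelet_cgroup_driver FILE -> value of the top-level cgroupDriver key
kubelet_cgroup_driver() {
  awk -F': *' '$1 == "cgroupDriver" { print $2 }' "$1"
}

# check_cgroup_driver KUBELET_CONFIG DOCKER_DRIVER -> exit 0 if they match
check_cgroup_driver() {
  local k
  k=$(kubelet_cgroup_driver "$1")
  if [ "$k" != "$2" ]; then
    echo "cgroup driver mismatch: kubelet=$k docker=$2" >&2
    return 1
  fi
}
```

On a node: check_cgroup_driver /opt/kubernetes/cfg/kubelet.config "$(docker info --format '{{.CgroupDriver}}')".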

Check the kubelet process (ps -ef | grep kubelet).

Deploy kube-proxy (change the IPs in the config to the node's own)

Write the kube-proxy config file:
vim /opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.85.16 \
--cluster-cidr=172.17.0.0/16 \
--proxy-mode=ipvs \
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
--cluster-cidr is the Pod network (the flannel Network 172.17.0.0/16), not the Service range 10.0.0.0/24. ipvs mode needs the ip_vs kernel modules and ipset on the node; without them kube-proxy falls back to iptables mode.

Write the systemd unit for kube-proxy:
vim /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start it:
systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy

Check the kube-proxy process (ps -ef | grep kube-proxy).

XII. Approve the nodes on the master

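Starting kubelet on a node sends a request to join the cluster; the node only joins after the master approves it. On the master, list the pending certificate signing requests, approve them and check the nodes:
kubectl get csr
kubectl certificate approve xxxxxxxx   (xxxxxxxx is a CSR NAME shown by kubectl get csr)
kubectl get node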
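With several nodes it is convenient to approve all pending requests at once. The filter below (hypothetical name) reads the table printed by kubectl get csr and keeps the names whose last column (CONDITION) is Pending; it assumes CONDITION is the last column, which matches the kubectl versions this guide targets.

```bash
# pending_csrs: read `kubectl get csr` output on stdin, print names still Pending
pending_csrs() {
  # Skip the header row; $NF is the CONDITION column
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage on the master: kubectl get csr | pending_csrs | xargs -r kubectl certificate approve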

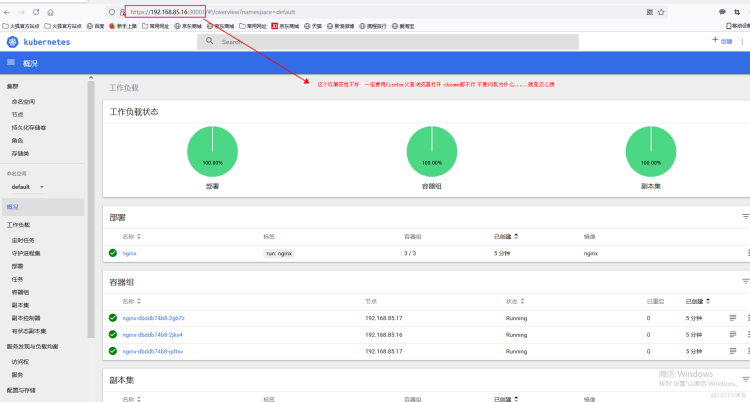

XIII. Deploy the Dashboard UI (optional; it is only a web UI)

1. Write the dashboard YAML file

vim kubernetes-dashboard.yaml

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: lizhenliang/kubernetes-dashboard-amd64:v1.10.1
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          - --tls-key-file=dashboard-key.pem
          - --tls-cert-file=dashboard.pem
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard

Create the dashboard:
kubectl apply -f kubernetes-dashboard.yaml
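The nodePort 30001 above has to fall inside kube-apiserver's --service-node-port-range (30000-50000 in this guide; the Kubernetes default is 30000-32767), otherwise the Service is rejected. A trivial sketch (hypothetical helper) for checking a port against such a range:

```bash
# port_in_range PORT LOW-HIGH -> exit 0 if LOW <= PORT <= HIGH
port_in_range() {
  local port=$1 lo=${2%-*} hi=${2#*-}
  (( port >= lo && port <= hi ))
}
```

For example, port_in_range 30001 30000-50000 succeeds, while port_in_range 50001 30000-50000 fails.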

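2. Create a dashboard user and bind it to a role. (Note: a token from the account Kubernetes creates by default lacks permissions to manage Pods, Namespaces and so on, so create an account bound to a role that has them.)
vim k8s-admin.yaml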

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dashboard-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

Create the admin account and binding: kubectl create -f k8s-admin.yaml

3. Get the login token

First find the full name of the dashboard-admin token secret:
[root@master source]# kubectl get secret --all-namespaces
NAMESPACE     NAME                              TYPE                                  DATA   AGE
kube-system   dashboard-admin-token-x4zk6       kubernetes.io/service-account-token   3      11m
kube-system   default-token-jngjn               kubernetes.io/service-account-token   3      41h
kube-system   kubernetes-dashboard-certs        Opaque                                0      50m
kube-system   kubernetes-dashboard-key-holder   Opaque                                2      49m

Then print the token:
kubectl describe secret dashboard-admin-token-x4zk6 -n kube-system

Open https://192.168.85.16:30001 in Firefox and log in with that token. The author uses the IP of the node running the dashboard Pod; a NodePort is normally reachable through any node that runs kube-proxy.
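The secret name ends in a random suffix (x4zk6 here), so it differs on every cluster. A sketch (hypothetical helper) that picks it out of the output of kubectl -n kube-system get secret by prefix; it assumes the single-namespace layout, where NAME is the first column:

```bash
# find_secret PREFIX: read `kubectl -n NS get secret` output on stdin and
# print the first secret name that starts with PREFIX
find_secret() {
  awk -v p="$1" 'NR > 1 && index($1, p) == 1 { print $1; exit }'
}
```

Usage: name=$(kubectl -n kube-system get secret | find_secret dashboard-admin-token-), then kubectl -n kube-system get secret "$name" -o jsonpath='{.data.token}' | base64 -d prints the decoded token. Note that from Kubernetes 1.24 such token secrets are no longer created automatically for ServiceAccounts.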

The Kubernetes cluster deployment is now complete.

Run a test example

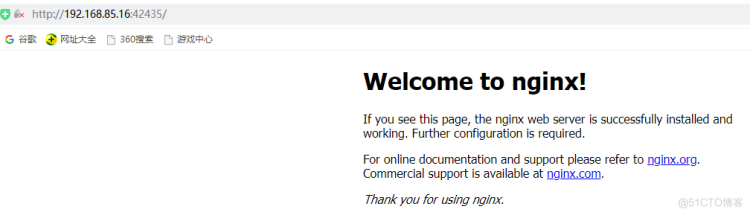

Create an Nginx web deployment to test that the cluster works:

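kubectl create deployment nginx --image=nginx
kubectl scale deployment nginx --replicas=3
(Before kubectl 1.18, kubectl run nginx --image=nginx --replicas=3 did the same in one step; from 1.18, kubectl run only creates a single Pod.)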

kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

Kubernetes commands you may find useful:

kubectl get namespaces: list namespaces
kubectl get deployments.apps: list Deployments (default namespace only)
kubectl get deployments.apps -A: list Deployments in all namespaces (-A is shorthand for --all-namespaces, available from kubectl 1.14)
kubectl get pods: list Pods (default namespace only)
kubectl get pods -A: list Pods in all namespaces (same as kubectl get pods --all-namespaces)
kubectl --namespace=test-soul get pods: list Pods in the test-soul namespace
kubectl get deploy -A: list Deployments in all namespaces
kubectl delete pod redis: delete a Pod
kubectl --namespace=test-soul delete pod redis-hus09h-1: delete Pod redis-hus09h-1 in namespace test-soul
kubectl delete deployments redis3: delete a Deployment
kubectl --namespace=test-soul delete deployments redis3, or kubectl delete -f redis-deployment.yaml: delete a Deployment in test-soul. Deleting a Deployment also deletes its ReplicaSets and Pods; deleting only a Pod just makes the Deployment recreate it.
kubectl create namespace soul: create namespace soul
kubectl delete namespaces soul: delete namespace soul
kubectl logs redis: show logs of Pod redis in the default namespace
kubectl logs --namespace=test-soul redis: show logs of Pod redis in test-soul
kubectl describe pod redis: show details and events of Pod redis
kubectl describe --namespace=test-soul pod redis: the same for Pod redis in test-soul
kubectl --namespace=test-soul run redis2 --image=sallsoul/redis-test --replicas=2: create an app (kubectl before 1.18 only; on newer versions use kubectl create deployment and kubectl scale)
kubectl --namespace=test-soul get replicaset: show ReplicaSets and their replica counts
kubectl set image deployment/nginx-deployment nginx=nginx:1.15.2 --record: update the image version
kubectl rollout history deployment/nginx-deployment: show the rollout history
kubectl rollout undo deployment/nginx-deployment: roll back to the previous revision
kubectl --namespace=test-soul get deployment -o wide: list Deployments with more detail
kubectl run --image=nginx:alpine nginx-app --port=80: start a container (Pod). This form supports only a limited set of options; normally you write a YAML file and start it with kubectl create -f file.yaml.
kubectl get: like docker ps, lists resources
kubectl describe: like docker inspect, shows detailed information about a resource
kubectl logs: like docker logs, shows container logs
kubectl exec: like docker exec, runs a command inside a container
kubectl expose deployment nginx-app --port=80 --target-port=80 --type=NodePort: expose a Deployment as a NodePort Service
kubectl describe service nginx-app: show the Service
kubectl scale --replicas=3 deployment/nginx-app: change the replica count, which controls how many Pods run
kubectl rolling-update frontend-v1 frontend-v2 --image=image:v2: rolling update that replaces containers one by one for a zero-downtime upgrade (works on ReplicationControllers only and was removed in kubectl 1.18; use Deployments with kubectl set image and kubectl rollout instead)
kubectl rolling-update frontend-v1 frontend-v2 --rollback: roll back at any time if a rolling update fails or is misconfigured
kubectl rollout status deployment/nginx-app: follow the progress of a rolling update
Deployments also support rollback: kubectl rollout history deployment/nginx-app and kubectl rollout undo deployment/nginx-app
kubectl set resources deployment nginx-app -c=nginx --limits=cpu=500m,memory=128Mi: limit the nginx container to half a CPU core (500m) and 128 MiB of memory
kubectl edit deployment/nginx-app: edit the manifest of a deployed Deployment, e.g. to add a health check
kubectl attach: attach to a running container, like docker attach
kubectl port-forward: forward a local port to a Pod
kubectl cp: copy files from or into a container
Many more commands to be added.
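As the list notes, workloads are normally described in YAML and created with kubectl create -f. A minimal Deployment for the nginx-app used above might look like the manifest below, written out by a shell helper (hypothetical name) to stay in the document's shell style. The labels, replica count and limits are illustrative assumptions; the container is named nginx so that the kubectl set resources -c=nginx example applies to it.

```bash
# write_nginx_deployment OUTFILE: write a minimal apps/v1 Deployment manifest
write_nginx_deployment() {
  cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
  labels:
    app: nginx-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-app
  template:
    metadata:
      labels:
        app: nginx-app
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
            memory: 128Mi
EOF
}
```

Usage: write_nginx_deployment nginx-app.yaml && kubectl create -f nginx-app.yaml. The selector's matchLabels must match the Pod template labels, otherwise apps/v1 rejects the Deployment.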