
Author: 独笔孤行@TaoCloud

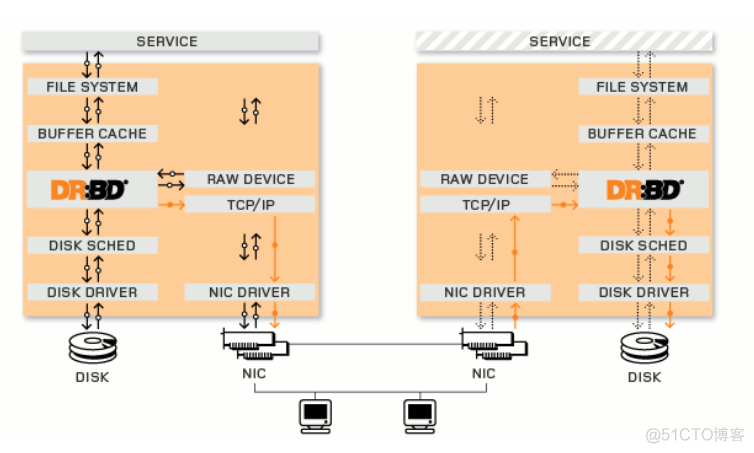

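DRBD (Distributed Replicated Block Device) is a software-based, shared-nothing storage replication solution that mirrors the contents of block devices between servers. Put simply, it can be thought of as network RAID (RAID 1 over the network).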

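DRBD's core functionality is implemented in the Linux kernel, as close to the system's I/O stack as possible. DRBD sits below the file system, closer to the kernel and the I/O stack than the file system itself, so a file system is created on a DRBD device just as it would be on a local disk.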

I. Prepare the Environment

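| Node | Hostname | IP address | Disks | OS |
|------|----------|------------|-------|----|
| Node 1 | node1 | 172.16.201.53 | sda, sdb | CentOS 7.6 |
| Node 2 | node2 | 172.16.201.54 | sda, sdb | CentOS 7.6 |

Disable the firewall and SELinux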

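    # Run on both nodes
    systemctl stop firewalld
    systemctl disable firewalld
    # switch SELinux to permissive now; the config change below applies after a reboot
    setenforce 0
    sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

Configure the EPEL repository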

    # Run on both nodes
    yum install epel-release

II. Install DRBD

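If the configured yum repositories provide the complete set of DRBD packages, install DRBD directly with yum; if yum cannot find some of the packages, build them from source. Use either one of the two methods below.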

1. Install DRBD with yum

    yum install drbd drbd-bash-completion drbd-udev drbd-utils kmod-drbd

yum may not be able to find the kmod-drbd package; in that case, build DRBD from source instead.

2. Build and install DRBD from source

2.1 Prepare the build environment

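    yum update
    yum -y install gcc gcc-c++ make automake autoconf help2man libxslt libxslt-devel flex rpm-build kernel-devel pygobject2 pygobject2-devel
    # reboot so the running kernel matches the kernel-devel version just installed
    reboot

2.2 Download the source packages from the official website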

Get the download links for the source tarballs from https://www.linbit.com/en/drbd-community/drbd-download/ and download them:

    # Run in /root; the following steps use /root/rpmbuild and /root/DRBD9
    wget https://www.linbit.com/downloads/drbd/9.0/drbd-9.0.21-1.tar.gz
    wget https://www.linbit.com/downloads/drbd/utils/drbd-utils-9.13.0.tar.gz
    wget https://www.linbit.com/downloads/drbdmanage/drbdmanage-0.99.18.tar.gz
    mkdir -p rpmbuild/{BUILD,BUILDROOT,RPMS,SOURCES,SPECS,SRPMS}
    mkdir DRBD9

2.3 Build the RPM packages
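
The `make kmp-rpm` command in this step builds the DRBD kernel module, presumably against the headers of the running kernel (the kmod-drbd package installed in step 2.4 carries the kernel version 3.10.0_1160.6.1 in its name). If `yum update` installed a newer kernel and the node was not rebooted, the headers and the running kernel will not match. Below is a minimal sketch of a pre-build check; `kernel_headers_present` is a hypothetical helper, and it assumes kernel-devel's usual CentOS 7 layout of `/usr/src/kernels/<release>`.

```shell
# Hypothetical helper (not part of DRBD): succeed only if kernel-devel headers
# for the given kernel release exist. Assumes the CentOS 7 layout
# /usr/src/kernels/<release>; the optional second argument overrides the base
# directory (useful for testing).
kernel_headers_present() {
    local release="${1:-$(uname -r)}"
    local base="${2:-/usr/src/kernels}"
    [ -d "$base/$release" ]
}

# Example, run before "make kmp-rpm":
#   kernel_headers_present || echo "no headers for $(uname -r): reboot into the updated kernel or install kernel-devel-$(uname -r)"
```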

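    tar xvf drbd-9.0.21-1.tar.gz
    cd drbd-9.0.21-1
    make kmp-rpm
    cp /root/rpmbuild/RPMS/x86_64/*.rpm /root/DRBD9/
    # return to the directory that holds the downloaded tarballs
    cd ..
    tar xvf drbdmanage-0.99.18.tar.gz
    cd drbdmanage-0.99.18
    make rpm
    cp dist/drbdmanage-0.99.18*.rpm /root/DRBD9/

The drbd-utils tarball downloaded above is not built in these commands. The `drbdadm` command used later is part of drbd-utils, so make sure drbd-utils is installed on both nodes as well.

2.4 Install DRBD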
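
yum installs binary packages only; a source package (`*.src.rpm`), such as the one `make rpm` leaves in drbdmanage's `dist/` directory, cannot be installed as a regular package. When installing everything collected in `/root/DRBD9`, the binary RPMs can be listed with a small helper. This is a sketch: `binary_rpms` is a hypothetical name, and it simply skips any file ending in `.src.rpm`.

```shell
# Hypothetical helper (not part of DRBD): print every binary RPM in a
# directory, one per line, skipping source packages (*.src.rpm).
binary_rpms() {
    local dir="${1:-/root/DRBD9}"
    local f
    for f in "$dir"/*.rpm; do
        [ -e "$f" ] || continue      # no *.rpm files: the glob stays unexpanded
        case "$f" in
            *.src.rpm) ;;            # source package: skip
            *) printf '%s\n' "$f" ;;
        esac
    done
}

# Example, on each node:
#   yum install $(binary_rpms /root/DRBD9)
```

The unquoted `$(...)` relies on the RPM paths containing no spaces, which holds for the file names used in this article.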

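    # Run on both nodes (copy /root/DRBD9 to node2 first if the packages were built only on node1)
    # drbdmanage-0.99.18-1.src.rpm is a source package and is not passed to yum
    cd /root/DRBD9
    yum install drbd-kernel-debuginfo-9.0.21-1.x86_64.rpm drbdmanage-0.99.18-1.noarch.rpm kmod-drbd-9.0.21_3.10.0_1160.6.1-1.x86_64.rpm

III. Configure DRBD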

1. Create the volume group on the primary node

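    # On node1
    # drbdmanage allocates volumes from an LVM volume group named drbdpool by default
    # /dev/sdb1 is a partition on sdb (node2 below uses the whole disk /dev/sdb)
    pvcreate /dev/sdb1
    vgcreate drbdpool /dev/sdb1

2. Initialize the DRBD cluster and add a node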

    # On node1
    [root@node1 ~]# drbdmanage init 172.16.201.53
    You are going to initialize a new drbdmanage cluster.
    CAUTION! Note that:
      * Any previous drbdmanage cluster information may be removed
      * Any remaining resources managed by a previous drbdmanage installation that still exist on this system will no longer be managed by drbdmanage
    Confirm: yes/no: yes
    Empty drbdmanage control volume initialized on '/dev/drbd0'.
    Empty drbdmanage control volume initialized on '/dev/drbd1'.
    Waiting for server: .
    Operation completed successfully
    # Add node2
    [root@node1 ~]# drbdmanage add-node node2 172.16.201.54
    Operation completed successfully
    Operation completed successfully
    Host key verification failed.
    Give leader time to contact the new node
    Operation completed successfully
    Operation completed successfully
    Join command for node node2:
    drbdmanage join -p 6999 172.16.201.54 1 node1 172.16.201.53 0 G3F1h/pAcGwV1LnlxhFE

Record the last line of the output, `drbdmanage join -p 6999 172.16.201.54 1 node1 172.16.201.53 0 G3F1h/pAcGwV1LnlxhFE`, and run it on node2 to join the cluster. The `Host key verification failed.` line indicates that drbdmanage could not log in to node2 over SSH to run the join automatically, which is why the command has to be run by hand.
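
Because the join command has to be carried from node1 to node2, it can help to extract it from saved `drbdmanage add-node` output instead of copying it by hand. A minimal sketch, assuming the output was saved to a file (`add-node.log` is an illustrative name) and that the command appears on a line starting with `drbdmanage join`, as in the output above; `extract_join_cmd` is a hypothetical helper:

```shell
# Hypothetical helper (not part of drbdmanage): read "drbdmanage add-node"
# output on stdin and print the last line that starts with "drbdmanage join".
extract_join_cmd() {
    grep -E '^[[:space:]]*drbdmanage join ' | tail -n 1 | sed -E 's/^[[:space:]]+//'
}

# Example on node1 (add-node.log is an illustrative file name):
#   drbdmanage add-node node2 172.16.201.54 2>&1 | tee add-node.log
#   extract_join_cmd < add-node.log    # then run the printed command on node2
```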

3. Create the volume group on the secondary node

    # On node2
    pvcreate /dev/sdb
    vgcreate drbdpool /dev/sdb

4. Join the secondary node to the cluster

    # On node2
    [root@node2 ~]# drbdmanage join -p 6999 172.16.201.54 1 node1 172.16.201.53 0 G3F1h/pAcGwV1LnlxhFE
    You are going to join an existing drbdmanage cluster.
    CAUTION! Note that:
      * Any previous drbdmanage cluster information may be removed
      * Any remaining resources managed by a previous drbdmanage installation that still exist on this system will no longer be managed by drbdmanage
    Confirm: yes/no: yes
    Waiting for server to start up (can take up to 1 min)
    Operation completed successfully

5. Check the cluster status
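
Reading the status by eye works for two nodes; in a script, the same check can be made by inspecting every `disk:` and `peer-disk:` field that `drbdadm status` prints. The sketch below (`all_uptodate` is a hypothetical helper) succeeds only if at least one such field exists and all of them read `UpToDate`. It splits on whitespace, so it handles both the usual multi-line layout and a flattened single line.

```shell
# Hypothetical helper (not part of DRBD): read "drbdadm status" output on stdin
# and exit 0 only if every disk:/peer-disk: field is UpToDate.
all_uptodate() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^(peer-)?disk:/) {
                    seen++
                    split($i, kv, ":")
                    if (kv[2] != "UpToDate") bad++
                }
            }
        }
        END { if (seen > 0 && bad == 0) exit 0; exit 1 }
    '
}

# Example on node1:
#   drbdadm status | all_uptodate && echo "all disks UpToDate"
```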

    # On node1; the following output indicates a healthy cluster
    [root@node1 ~]# drbdadm status
    .drbdctrl role:Primary
      volume:0 disk:UpToDate
      volume:1 disk:UpToDate
      node2 role:Secondary
        volume:0 peer-disk:UpToDate
        volume:1 peer-disk:UpToDate

6. Create a resource

    # On node1: create the resource test01
    [root@node1 ~]# drbdmanage add-resource test01
    Operation completed successfully
    [root@node1 ~]# drbdmanage list-resources
    +----------------+
    | Name   | State |
    |----------------|
    | test01 |    ok |
    +----------------+

7. Create a volume
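
`drbdmanage list-volumes` reports the volume created with `5GB` as `4.66 GiB`. The two figures are consistent with `5GB` being read as decimal gigabytes (5 × 10^9 bytes) and the size being shown in binary gibibytes (2^30 = 1073741824 bytes): 5 × 10^9 / 1073741824 ≈ 4.66. A quick way to reproduce the conversion (`gb_to_gib` is just an illustrative name, and the decimal interpretation is an assumption inferred from the numbers):

```shell
# Converts decimal gigabytes (10^9 bytes) to binary gibibytes (2^30 bytes),
# rounded to two decimals. Assumption: drbdmanage read "5GB" as 5 * 10^9 bytes.
gb_to_gib() {
    awk -v gb="$1" 'BEGIN { printf "%.2f\n", gb * 1e9 / (1024 * 1024 * 1024) }'
}

gb_to_gib 5    # prints 4.66, matching the Size column of list-volumes
```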

    # On node1: add a 5 GB volume to the resource test01
    [root@node1 ~]# drbdmanage add-volume test01 5GB
    Operation completed successfully
    [root@node1 ~]# drbdmanage list-volumes
    +-----------------------------------------------------------------------------+
    | Name   | Vol ID |     Size | Minor |  | State |
    |-----------------------------------------------------------------------------|
    | test01 |      0 | 4.66 GiB |   100 |  |    ok |
    +-----------------------------------------------------------------------------+

8. Deploy the resource

The trailing number `2` is the number of nodes the resource is deployed to.
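
While the initial synchronization runs, `drbdadm status` shows a `done:` field with the sync progress in percent (for example `done:5.70`), and the field is gone once the sync has completed. A minimal polling sketch, assuming that output format; `sync_progress` is a hypothetical helper:

```shell
# Hypothetical helper (not part of DRBD): read "drbdadm status" output on stdin
# and print the value of the first done: field (sync progress in percent).
# Prints nothing when no resync is in progress.
sync_progress() {
    awk '{
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^done:/) { sub(/^done:/, "", $i); print $i; exit }
        }
    }'
}

# Example on node1 after "drbdmanage deploy-resource test01 2":
#   while p=$(drbdadm status test01 | sync_progress) && [ -n "$p" ]; do
#       echo "test01 sync: ${p}%"
#       sleep 5
#   done
```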

    # On node1
    [root@node1 ~]# drbdmanage deploy-resource test01 2
    Operation completed successfully
    # Right after deployment the peer disk is Inconsistent while the initial sync runs
    [root@node1 ~]# drbdadm status
    .drbdctrl role:Primary
      volume:0 disk:UpToDate
      volume:1 disk:UpToDate
      node2 role:Secondary
        volume:0 peer-disk:UpToDate
        volume:1 peer-disk:UpToDate

    test01 role:Secondary
      disk:UpToDate
      node2 role:Secondary
        replication:SyncSource peer-disk:Inconsistent done:5.70
    # Once the sync has finished, the status looks like this
    [root@node1 ~]# drbdadm status
    .drbdctrl role:Primary
      volume:0 disk:UpToDate
      volume:1 disk:UpToDate
      node2 role:Secondary
        volume:0 peer-disk:UpToDate
        volume:1 peer-disk:UpToDate

    test01 role:Secondary
      disk:UpToDate
      node2 role:Secondary
        peer-disk:UpToDate

9. After the DRBD device is configured, create a file system on it and mount it
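
The device name used in this step, `/dev/drbd100`, is built from the Minor column of `drbdmanage list-volumes`. When scripting the step, the path can be derived from that table instead of being typed in. A sketch, assuming the table layout shown in step 7 (`|`-separated columns Name, Vol ID, Size, Minor); `drbd_device_for` is a hypothetical helper:

```shell
# Hypothetical helper (not part of drbdmanage): read "drbdmanage list-volumes"
# output on stdin and print /dev/drbd<Minor> for the given resource and
# volume ID (default 0). Columns are split on "|": Name, Vol ID, Size, Minor.
drbd_device_for() {
    local res="$1" vol="${2:-0}"
    awk -F'|' -v res="$res" -v vol="$vol" '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        NF >= 5 && trim($2) == res && trim($3) == vol { print "/dev/drbd" trim($5); exit }
    '
}

# Example on node1:
#   dev=$(drbdmanage list-volumes | drbd_device_for test01)
#   mkfs.xfs "$dev"
```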

    # On node1
    # The number in /dev/drbdN is the Minor value reported by "drbdmanage list-volumes"
    [root@node1 ~]# mkfs.xfs /dev/drbd100
    meta-data=/dev/drbd100           isize=512    agcount=4, agsize=305176 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=1220703, imaxpct=25
             =                       sunit=0      swidth=0 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=2560, version=2
             =                       sectsz=512   sunit=0 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0
    [root@node1 ~]# mount /dev/drbd100 /mnt/
    [root@node1 ~]# echo "Hello World" > /mnt/test.txt
    [root@node1 ~]# ll /mnt/
    total 4
    -rw-r--r-- 1 root root 12 Nov 26 15:43 test.txt
    [root@node1 ~]# cat /mnt/test.txt
    Hello World

Although test01 was reported as Secondary, mkfs and mount succeed on node1 because DRBD 9 promotes a resource to Primary automatically when its device is opened for writing (auto-promote).

10. To mount the DRBD device on node2, proceed as follows:

    # On node1: unmount /mnt and demote the resource to Secondary
    [root@node1 ~]# umount /mnt/
    [root@node1 ~]# drbdadm secondary test01
    # On node2: promote the resource to Primary
    [root@node2 ~]# drbdadm primary test01
    [root@node2 ~]# mount /dev/drbd100 /mnt/
    [root@node2 ~]# df -hT
    Filesystem              Type      Size  Used Avail Use% Mounted on
    devtmpfs                devtmpfs  3.9G     0  3.9G   0% /dev
    tmpfs                   tmpfs     3.9G     0  3.9G   0% /dev/shm
    tmpfs                   tmpfs     3.9G  8.9M  3.9G   1% /run
    tmpfs                   tmpfs     3.9G     0  3.9G   0% /sys/fs/cgroup
    /dev/mapper/centos-root xfs        35G  1.5G   34G   5% /
    /dev/sda1               xfs      1014M  190M  825M  19% /boot
    tmpfs                   tmpfs     783M     0  783M   0% /run/user/0
    /dev/drbd100            xfs       4.7G   33M  4.7G   1% /mnt
    [root@node2 ~]# ls -l /mnt/
    total 4
    -rw-r--r-- 1 root root 12 Nov 26 15:43 test.txt
    [root@node2 ~]# cat /mnt/test.txt
    Hello World

The file written on node1 is readable on node2, which confirms that the data was replicated.