
Kubernetes High-Availability Configuration

Tags: Kubernetes series

1: Kubernetes high-availability configuration

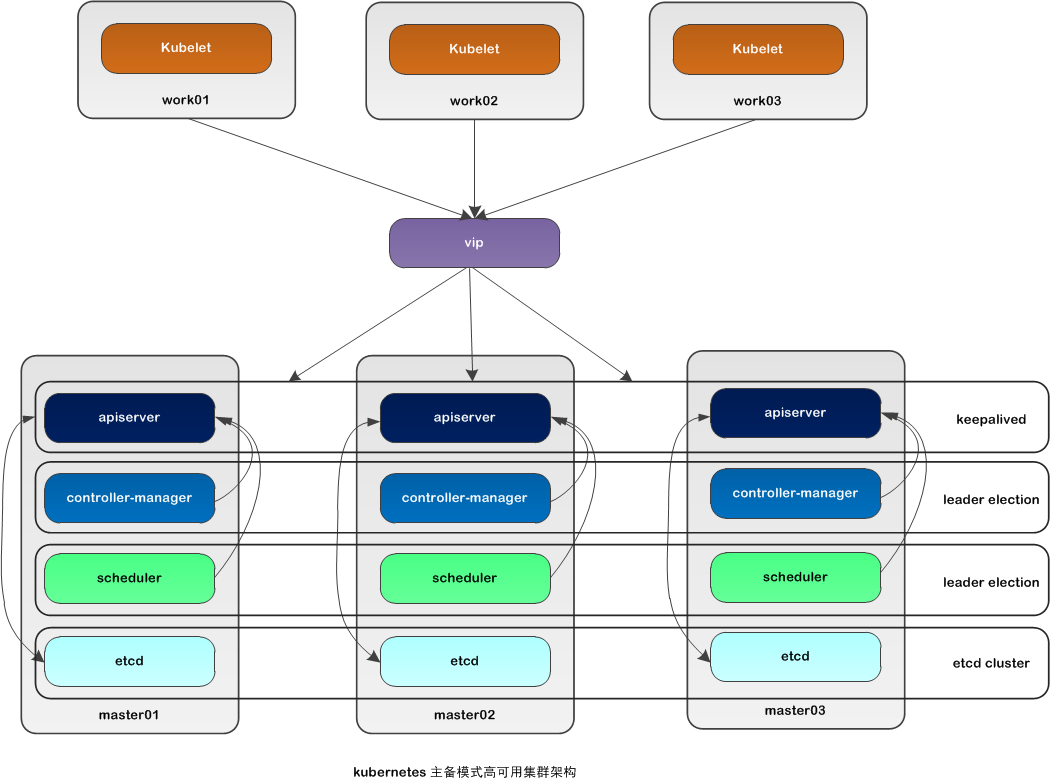

1.1 High-availability configuration of Kubernetes with kubeadm

2: System initialization

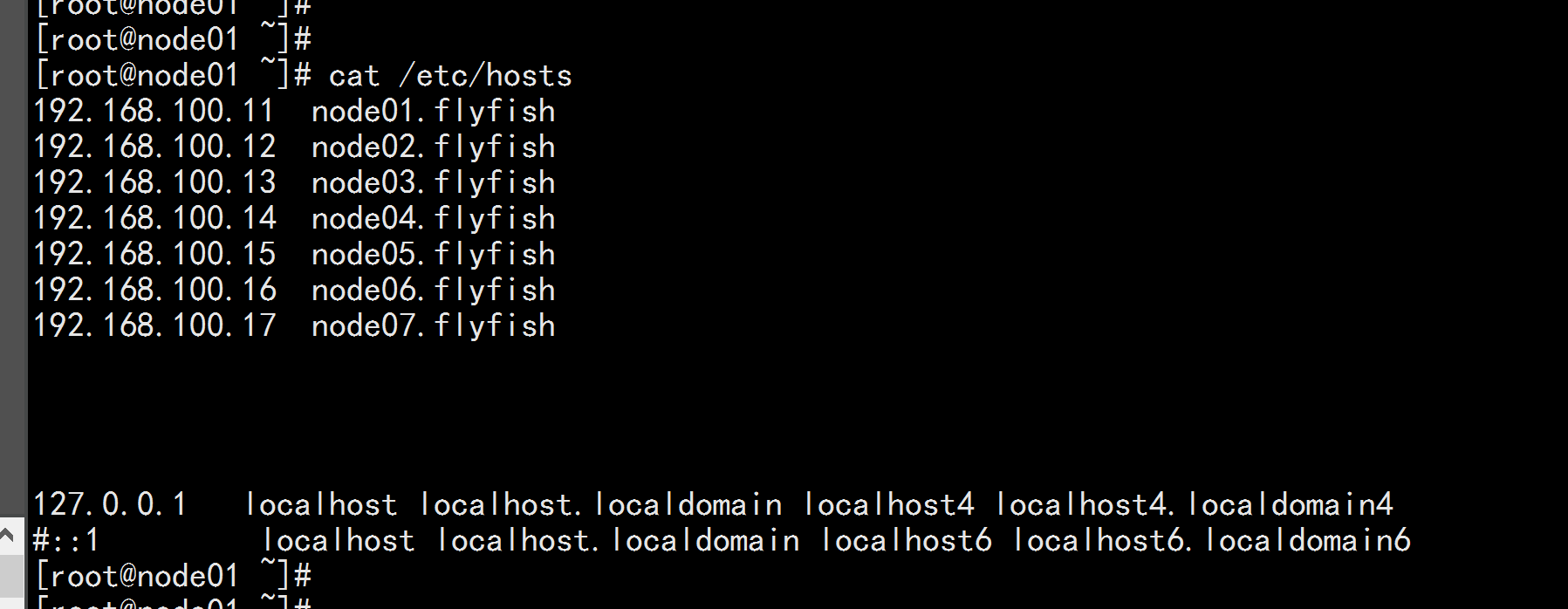

2.1 System hostnames

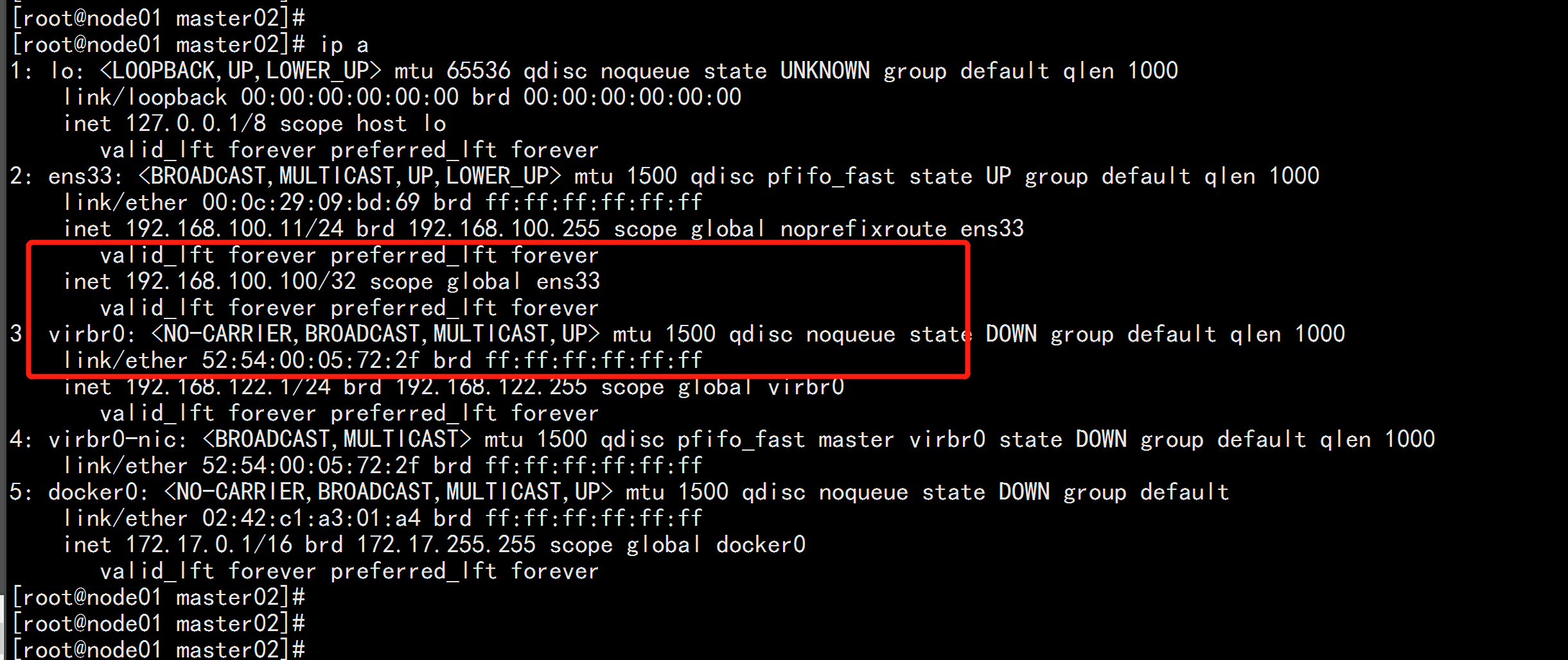

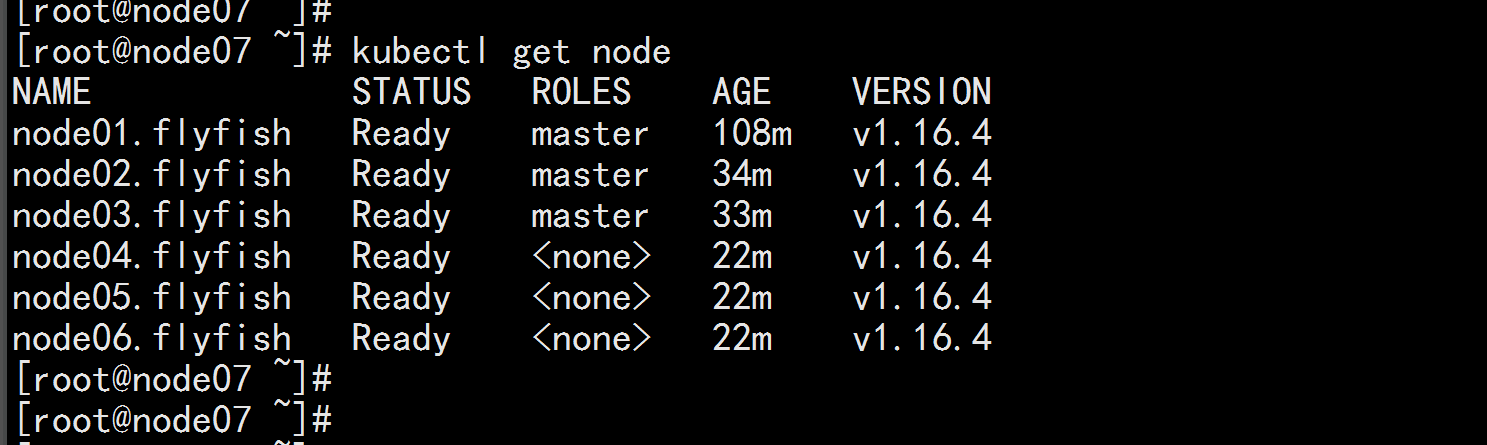

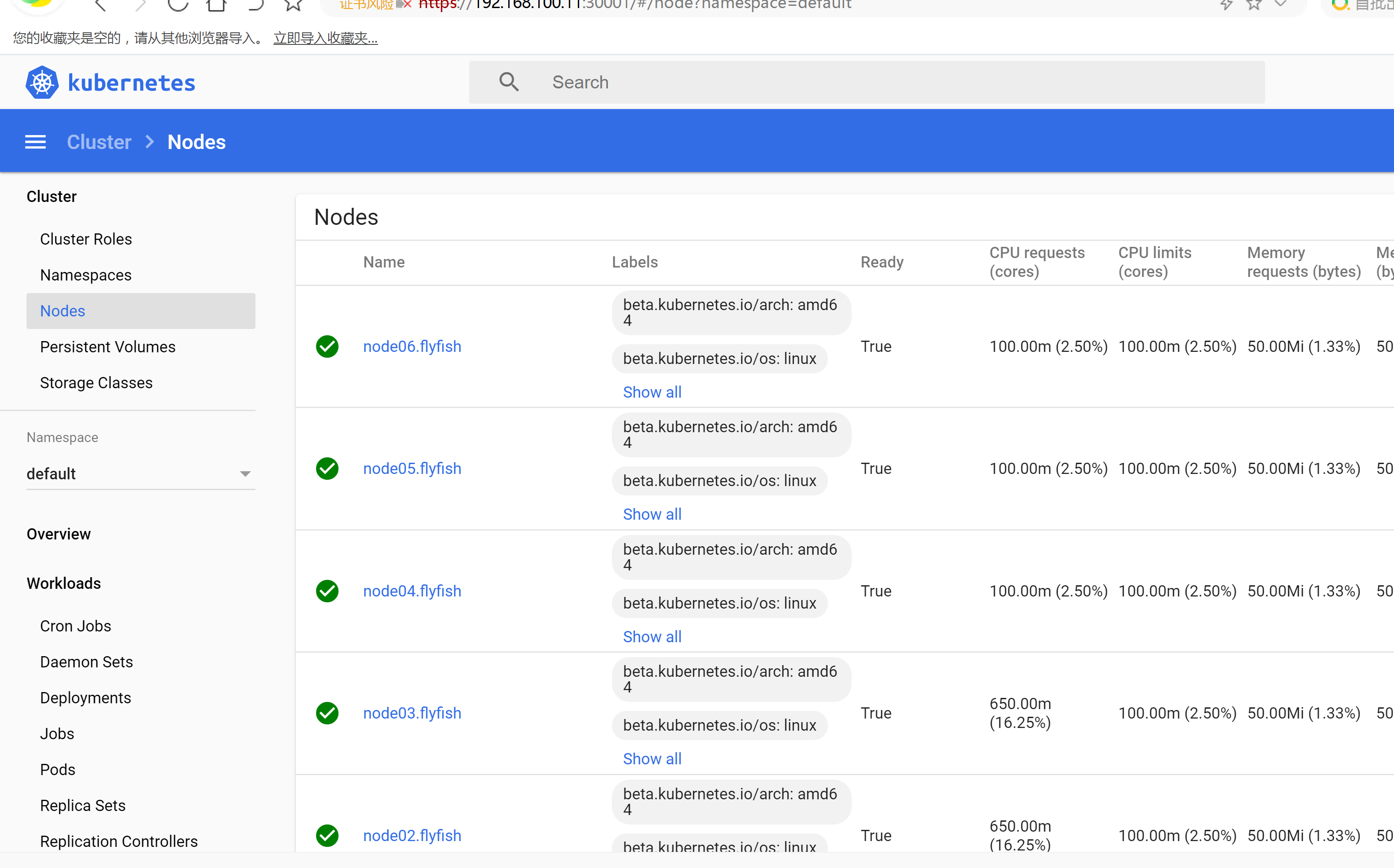

Hostname-to-IP mapping:

```
192.168.100.11 node01.flyfish
192.168.100.12 node02.flyfish
192.168.100.13 node03.flyfish
192.168.100.14 node04.flyfish
192.168.100.15 node05.flyfish
192.168.100.16 node06.flyfish
192.168.100.17 node07.flyfish
```

node01.flyfish, node02.flyfish and node03.flyfish are the master (control-plane) nodes; node04.flyfish, node05.flyfish and node06.flyfish are worker nodes; node07.flyfish is the test node. The keepalived cluster VIP is 192.168.100.100.
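The mapping above is typically written into /etc/hosts on every node so that the hostnames resolve. A minimal sketch that generates those entries; `gen_hosts` is a hypothetical helper, and the usage lines (appending to /etc/hosts, `hostnamectl set-hostname`) assume you are root on each node.

```shell
#!/bin/bash
# Hypothetical helper: print /etc/hosts entries for the seven nodes in this article.
# 192.168.100.11-17 map to node01.flyfish-node07.flyfish.
gen_hosts() {
    for i in 1 2 3 4 5 6 7; do
        printf '192.168.100.1%d node0%d.flyfish\n' "$i" "$i"
    done
}

# Usage (as root, on every node):
#   gen_hosts >> /etc/hosts
#   hostnamectl set-hostname node01.flyfish   # use each node's own name
```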

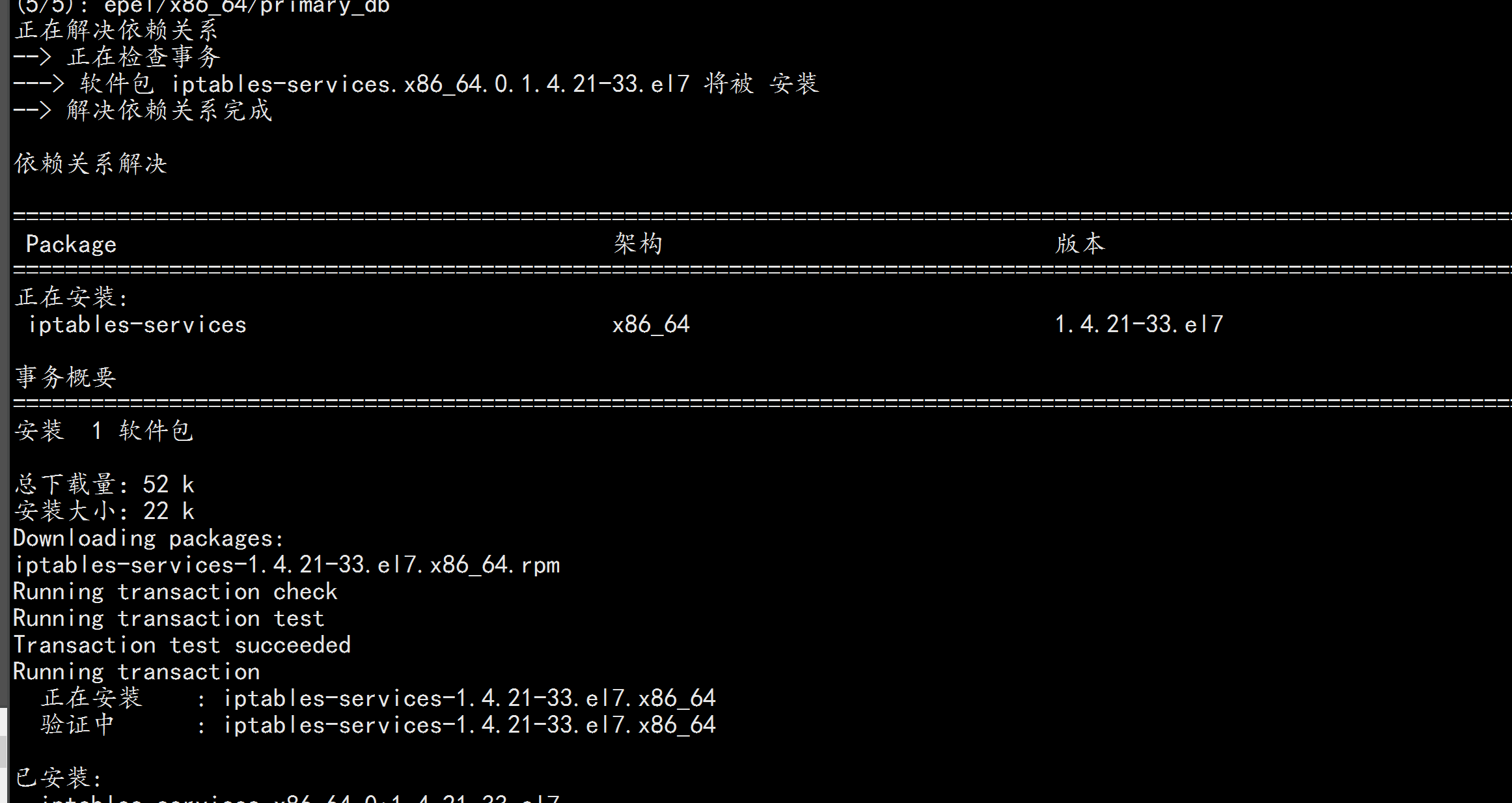

2.2 Disable firewalld, flush iptables, and disable SELinux

Run on all nodes:

```bash
systemctl stop firewalld && systemctl disable firewalld
yum -y install iptables-services && systemctl start iptables && systemctl enable iptables
iptables -F && service iptables save
```
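The heading also mentions SELinux, but the commands above only deal with firewalld and iptables. A common way to turn SELinux off on CentOS 7 is `setenforce 0` for the running system plus `SELINUX=disabled` in /etc/selinux/config for later boots. The sketch below wraps the file edit in a hypothetical function that takes the config path as an argument, so it can be tried on a copy first.

```shell
#!/bin/bash
# Hypothetical helper: set SELINUX=disabled in the given SELinux config file
# (normally /etc/selinux/config). The change takes effect after the next reboot.
disable_selinux_config() {
    conf="${1:-/etc/selinux/config}"
    # Only the SELINUX= line is rewritten; SELINUXTYPE= is left untouched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root, on all nodes):
#   setenforce 0               # switch the running system to permissive mode
#   disable_selinux_config     # persist the setting for subsequent boots
```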

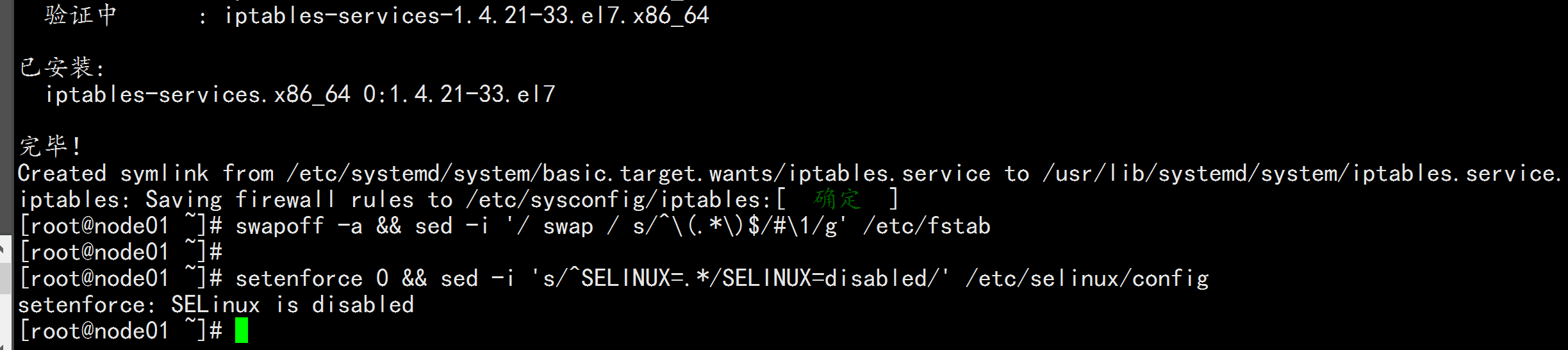

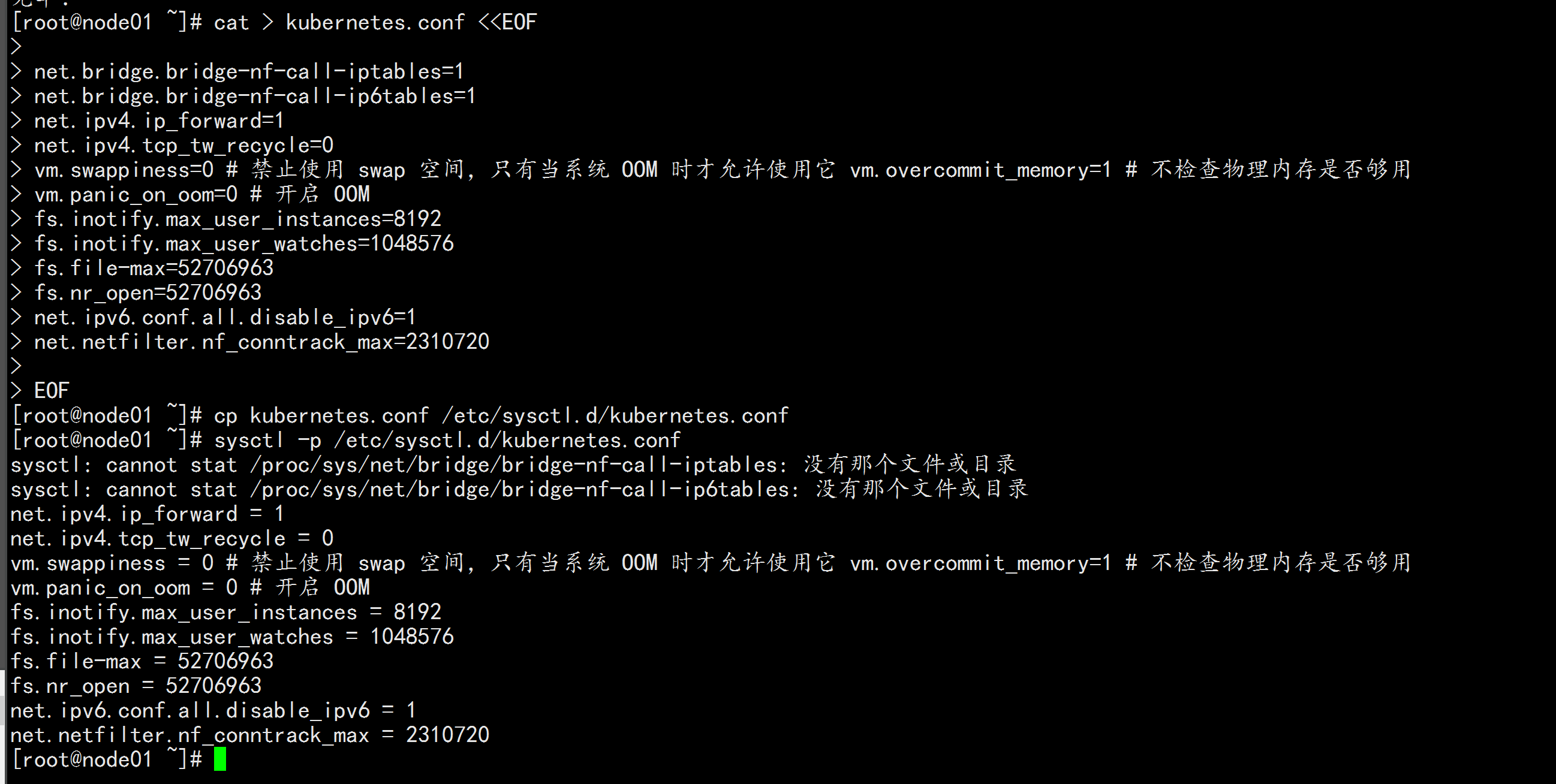

2.3 Install dependency packages

Install on all nodes:

```bash
yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git
```

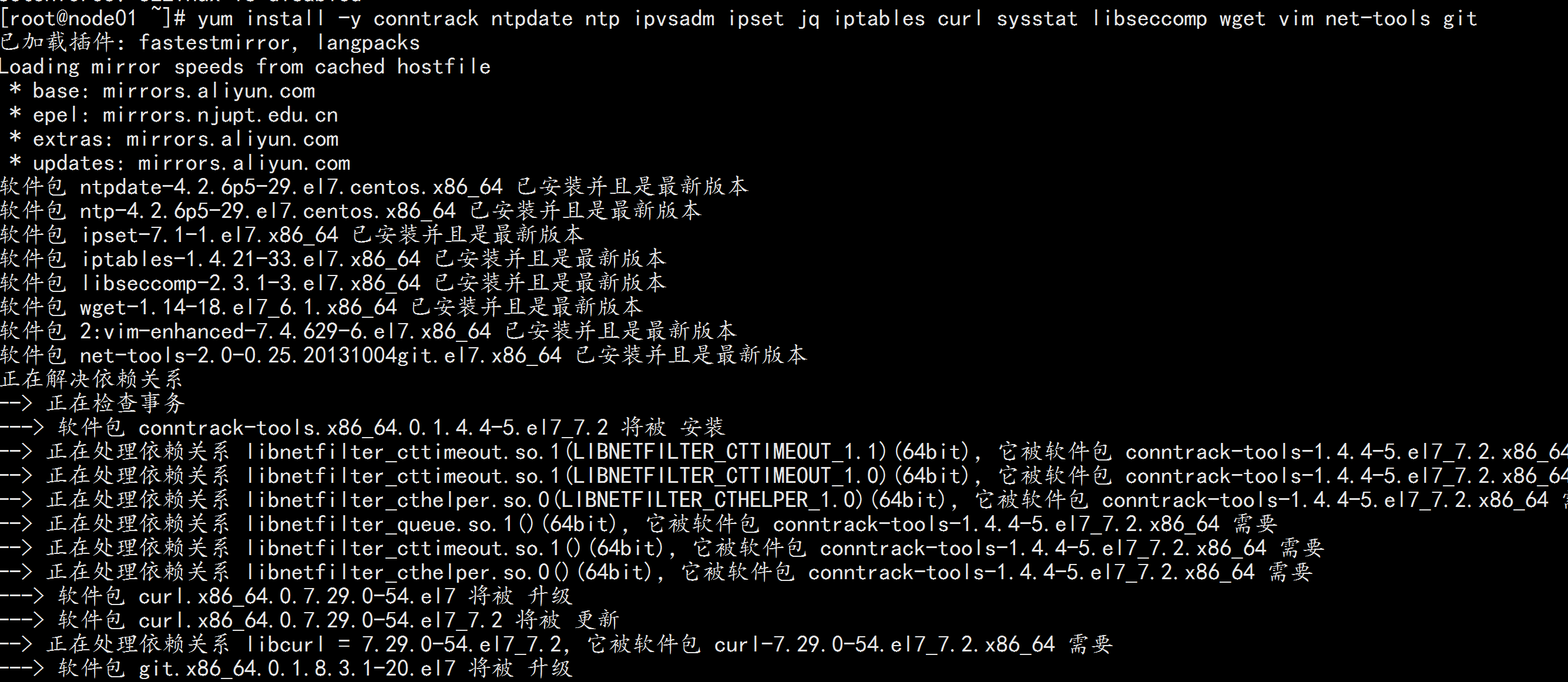

2.4 Tune kernel parameters for Kubernetes

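Run on all nodes. The net.bridge.* keys exist only after the br_netfilter module is loaded (`modprobe br_netfilter`, also run in 2.8), so load it first if `sysctl -p` reports them as unknown.

```bash
cat > kubernetes.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
# Avoid swap; only fall back to it when the system is close to OOM
vm.swappiness=0
# Do not check whether enough physical memory is available
vm.overcommit_memory=1
# Do not panic on OOM; let the OOM killer handle it
vm.panic_on_oom=0
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
fs.file-max=52706963
fs.nr_open=52706963
net.ipv6.conf.all.disable_ipv6=1
net.netfilter.nf_conntrack_max=2310720
EOF
cp kubernetes.conf /etc/sysctl.d/kubernetes.conf
sysctl -p /etc/sysctl.d/kubernetes.conf
```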
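To confirm the values were actually applied, you can compare each key in the file with the live value reported by `sysctl -n`. A minimal sketch; both function names are hypothetical, and `parse_sysctl_conf` skips blank lines and full-line comments (starting with `#` or `;`).

```shell
#!/bin/bash
# Hypothetical helper: print "key value" for every setting in a sysctl.d file.
parse_sysctl_conf() {
    awk '
        /^[ \t]*$/    { next }                  # blank line
        /^[ \t]*[#;]/ { next }                  # full-line comment
        index($0, "=") > 0 {
            key = substr($0, 1, index($0, "=") - 1)
            value = substr($0, index($0, "=") + 1)
            sub(/^[ \t]+/, "", key);   sub(/[ \t]+$/, "", key)
            sub(/^[ \t]+/, "", value); sub(/[ \t]+$/, "", value)
            print key, value
        }' "$1"
}

# Hypothetical helper: print one line per key whose live value differs from the file.
# No output means every setting is in effect.
check_sysctl_conf() {
    parse_sysctl_conf "$1" | while read -r key want; do
        have="$(sysctl -n "$key" 2>/dev/null)"
        [ "$have" = "$want" ] || echo "MISMATCH $key: want '$want', have '${have:-<missing>}'"
    done
}

# Usage: check_sysctl_conf /etc/sysctl.d/kubernetes.conf
```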

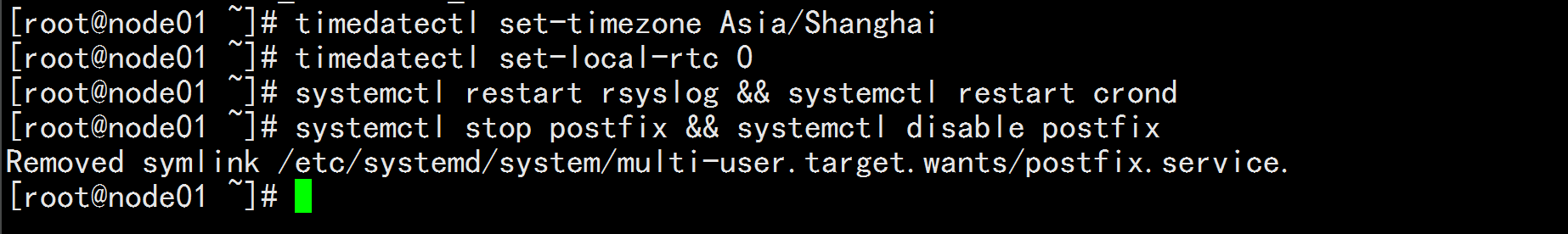

2.5 Set the system time zone

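```bash
# Set the system time zone to Asia/Shanghai
timedatectl set-timezone Asia/Shanghai
# Keep the hardware clock (RTC) in UTC
timedatectl set-local-rtc 0
# Restart services that depend on the system time
systemctl restart rsyslog && systemctl restart crond
```

Stop services the system does not need:

```bash
systemctl stop postfix && systemctl disable postfix
```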

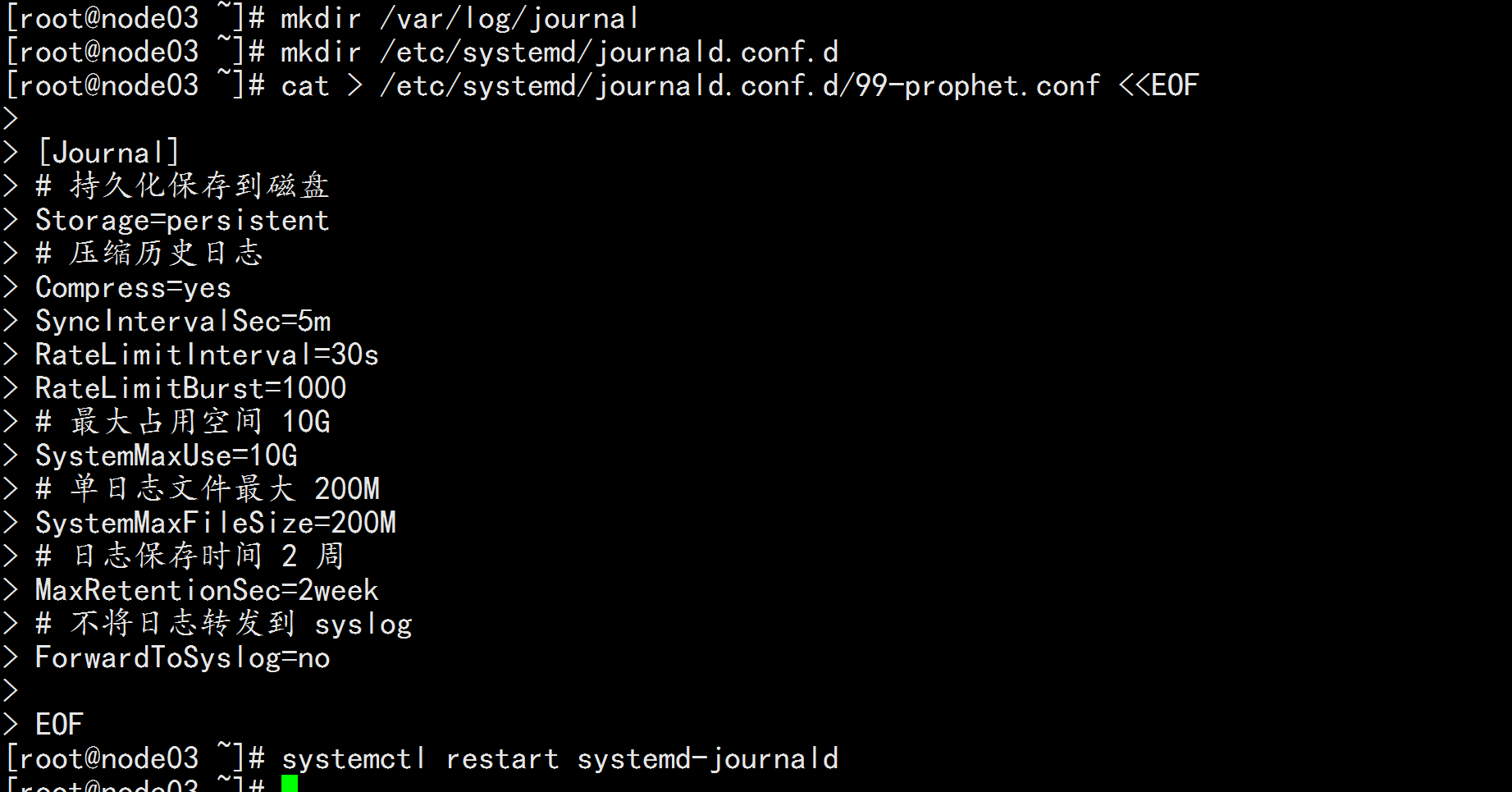

2.6 Configure rsyslogd and systemd-journald

Run on all nodes:

```bash
# Directory for persistent log storage
mkdir -p /var/log/journal
mkdir -p /etc/systemd/journald.conf.d
cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
[Journal]
# Persist logs to disk
Storage=persistent
# Compress archived logs
Compress=yes
SyncIntervalSec=5m
RateLimitInterval=30s
RateLimitBurst=1000
# Use at most 10G of disk space
SystemMaxUse=10G
# Maximum size of a single journal file: 200M
SystemMaxFileSize=200M
# Keep logs for 2 weeks
MaxRetentionSec=2week
# Do not forward logs to syslog
ForwardToSyslog=no
EOF
systemctl restart systemd-journald
```

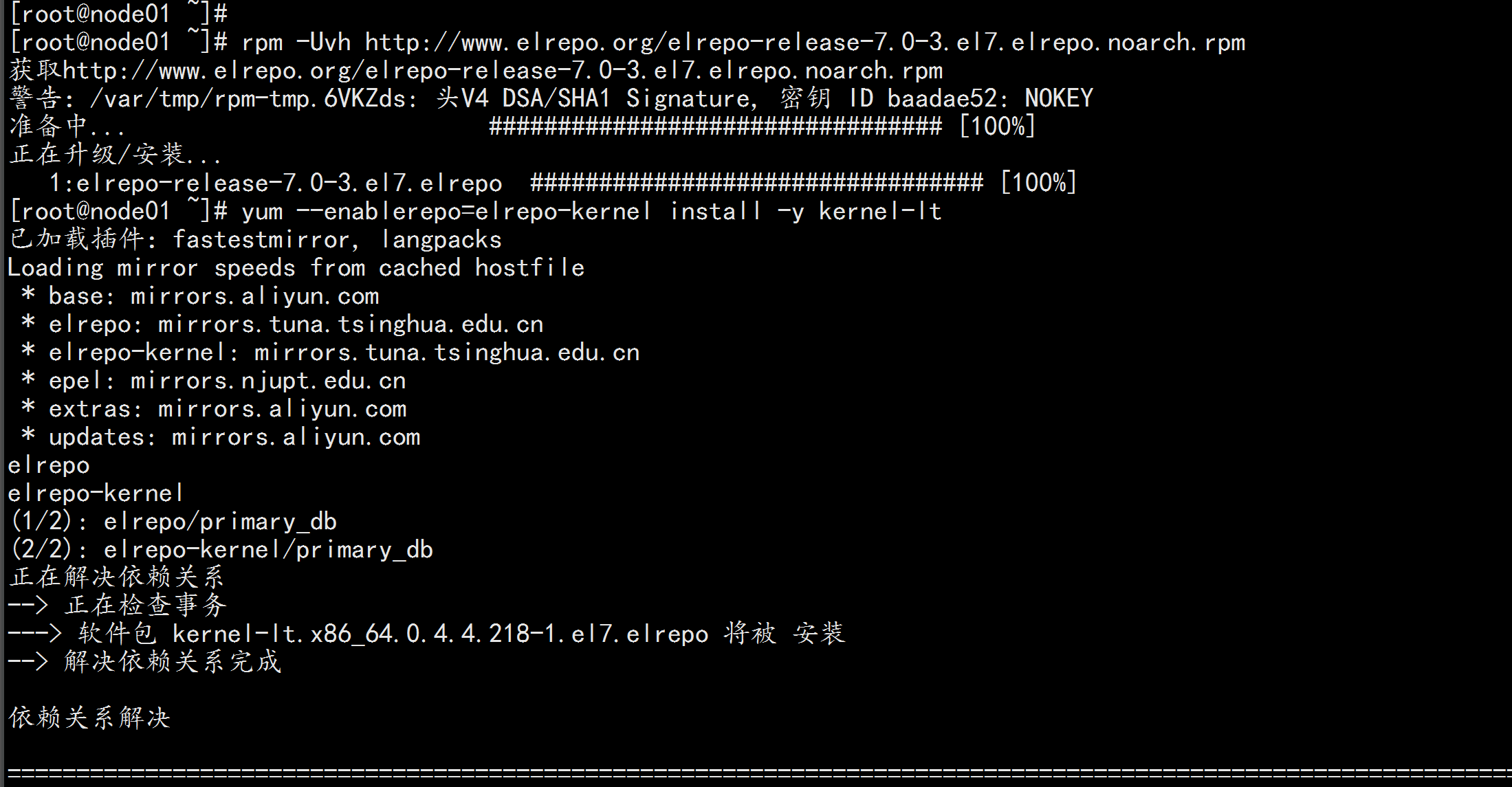

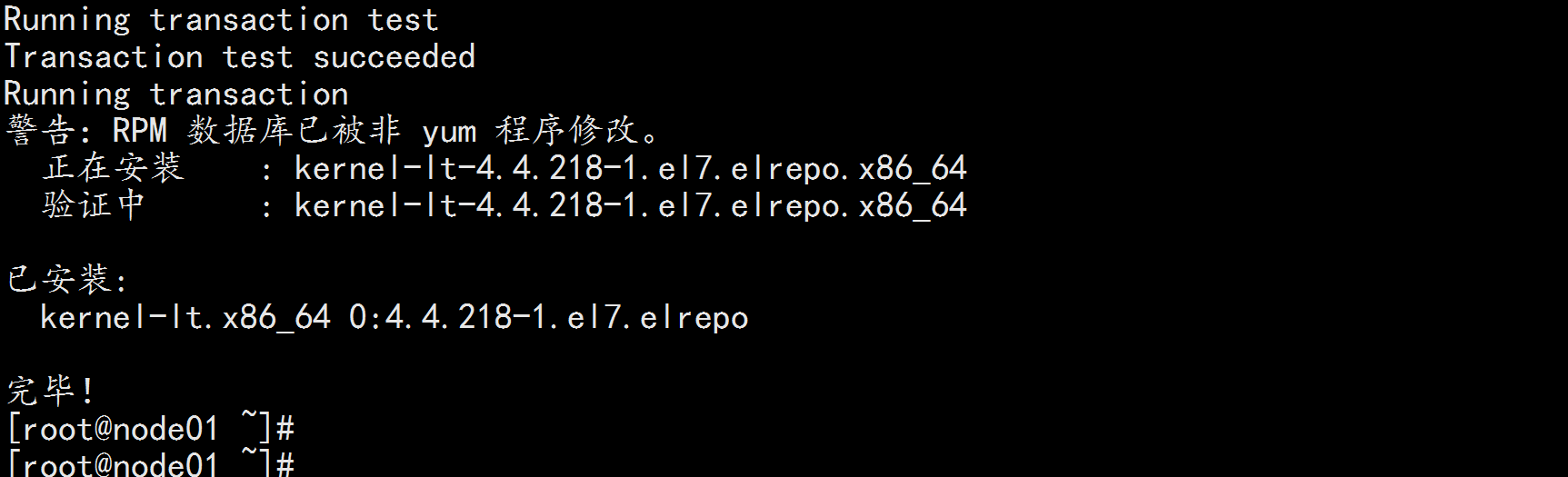

2.7 Upgrade the kernel to 4.4

The 3.10.x kernel that ships with CentOS 7.x has bugs that make Docker and Kubernetes unstable, so upgrade to the long-term 4.4 kernel from ELRepo:

```bash
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
yum --enablerepo=elrepo-kernel install -y kernel-lt
# After installation, check that the new kernel's menuentry in /boot/grub2/grub.cfg
# contains an initrd16 line; if it does not, install it again!
# Boot from the new kernel by default
grub2-set-default "CentOS Linux (4.4.182-1.el7.elrepo.x86_64) 7 (Core)"
reboot
# After the reboot, install the matching kernel development files and headers
yum --enablerepo=elrepo-kernel install kernel-lt-devel-$(uname -r) kernel-lt-headers-$(uname -r)
```
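The title passed to `grub2-set-default` must match the installed kernel exactly, and `kernel-lt` from ELRepo may install a different 4.4.x build than 4.4.182. A sketch that lists the menu entries with their indices so you can pick the right one; the function name is hypothetical, and /etc/grub2.cfg is the CentOS 7 symlink to /boot/grub2/grub.cfg on BIOS systems.

```shell
#!/bin/bash
# Hypothetical helper: print "index : title" for every top-level menuentry in a
# GRUB2 config. grub2-set-default accepts either the index or the full title.
list_grub_entries() {
    awk -F"'" 'BEGIN { n = 0 }
               $1 == "menuentry " { print n " : " $2; n++ }' "${1:-/etc/grub2.cfg}"
}

# Usage:
#   list_grub_entries
#   grub2-set-default 0     # or the exact title printed above
#   grub2-editenv list      # confirm saved_entry
```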

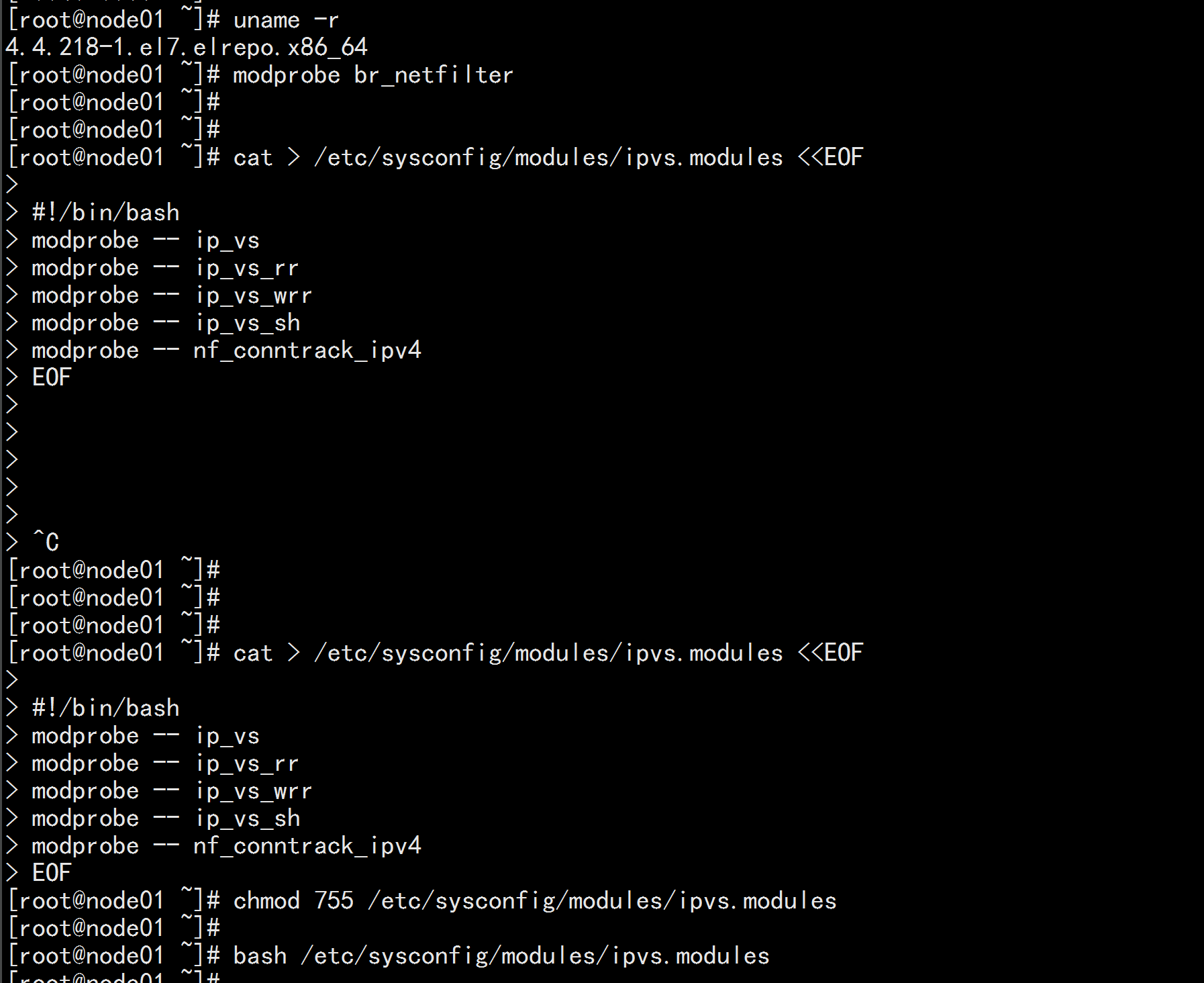

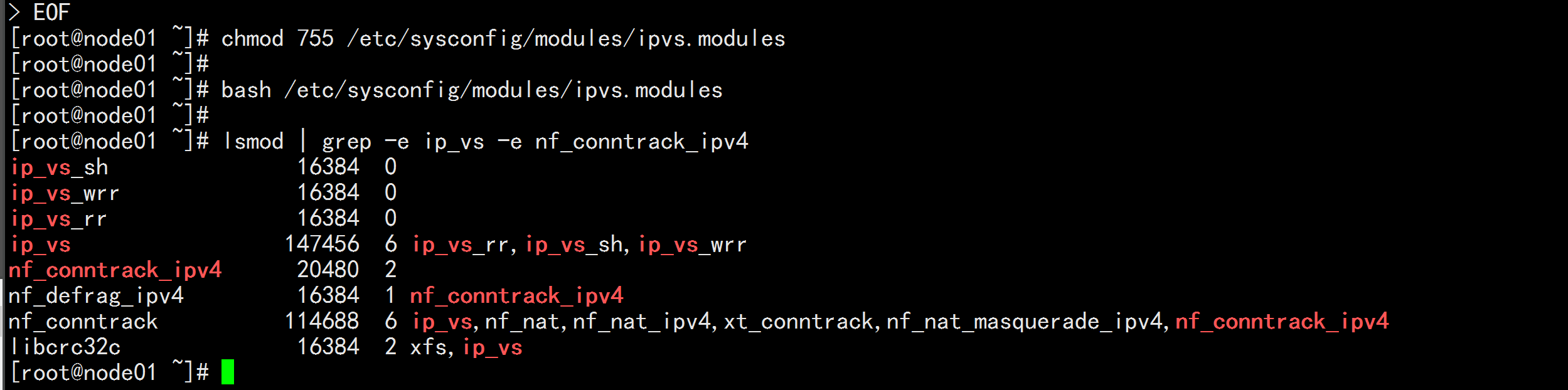

2.8 Prerequisites for enabling IPVS in kube-proxy

```bash
modprobe br_netfilter
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
```
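Instead of eyeballing the `lsmod | grep` output, you can name any module that is missing. A sketch; `missing_ipvs_modules` is hypothetical and reads `lsmod` output on stdin so it can be tested without root. On kernels 4.19 and later `nf_conntrack_ipv4` was merged into `nf_conntrack`, so the list would need adjusting there; on the 4.4 kernel used here the original name is correct.

```shell
#!/bin/bash
# Hypothetical helper: read `lsmod` output on stdin and print every required
# module that is not loaded. Returns non-zero if anything is missing.
missing_ipvs_modules() {
    loaded="$(awk 'NR > 1 { print $1 }')"    # module names, skipping the header
    rc=0
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 br_netfilter; do
        if ! printf '%s\n' "$loaded" | grep -qx "$mod"; then
            echo "$mod"
            rc=1
        fi
    done
    return $rc
}

# Usage: lsmod | missing_ipvs_modules && echo "all IPVS prerequisites loaded"
```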

3: Install Docker

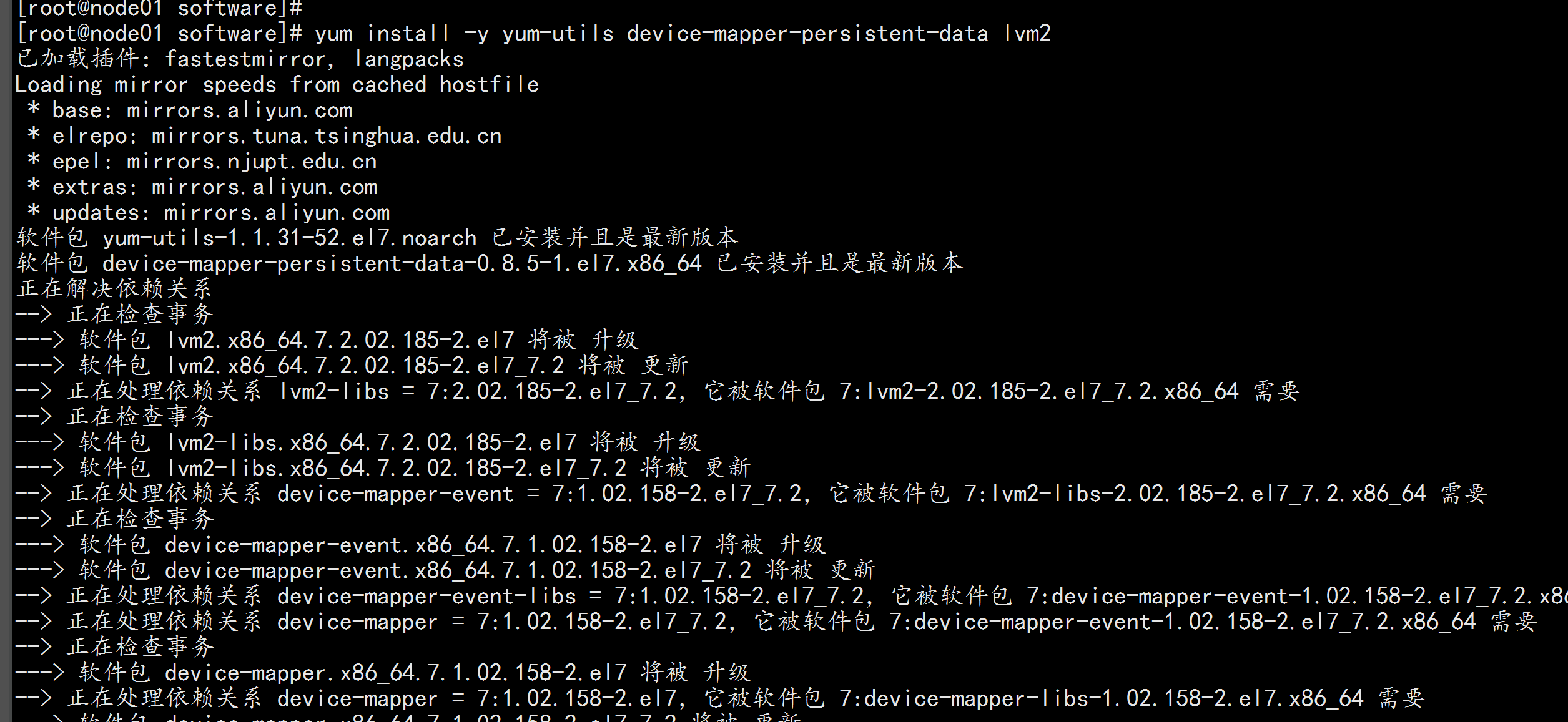

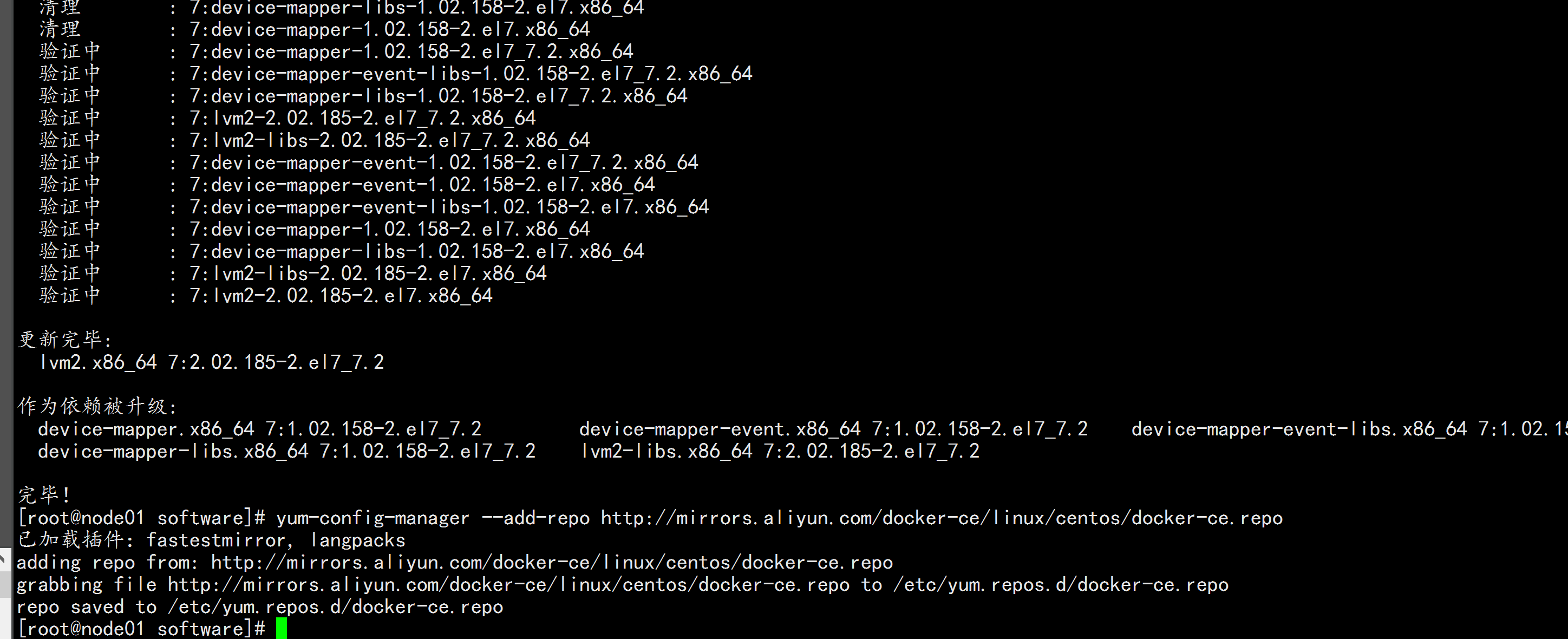

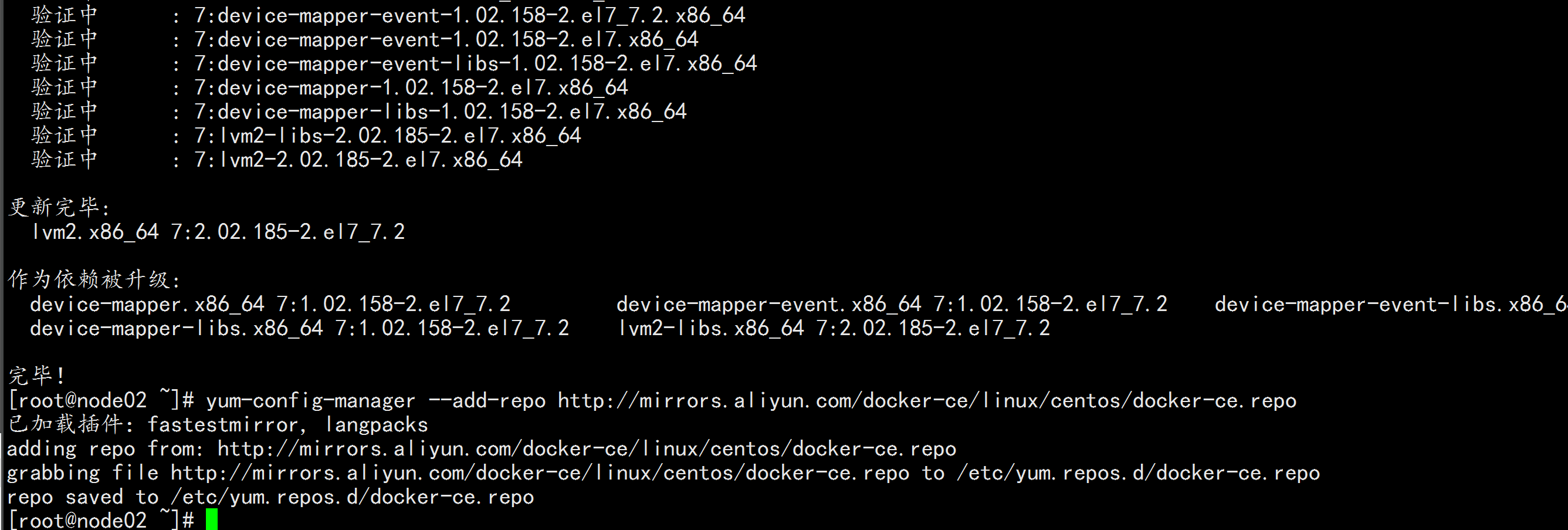

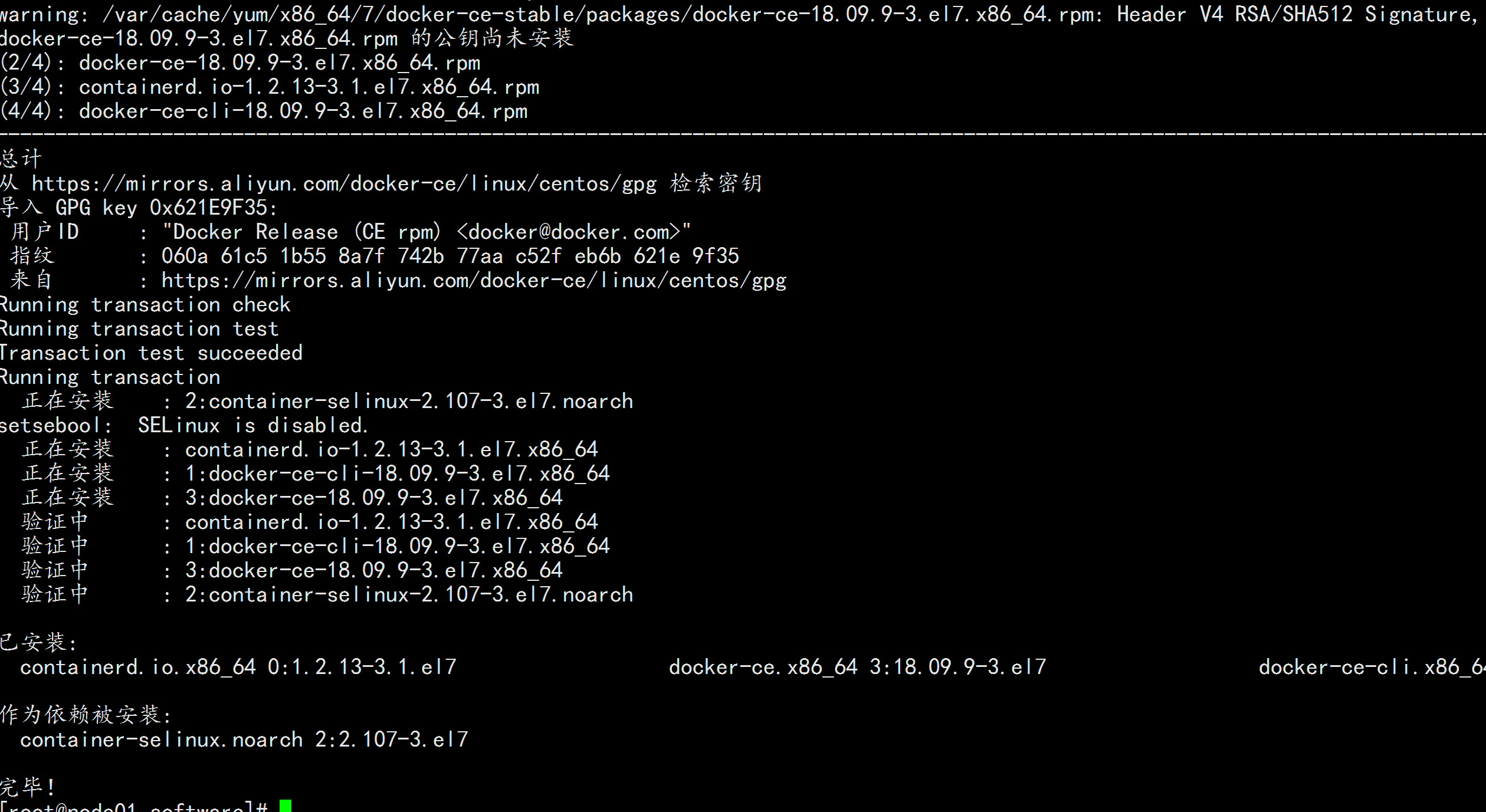

3.1 Install Docker

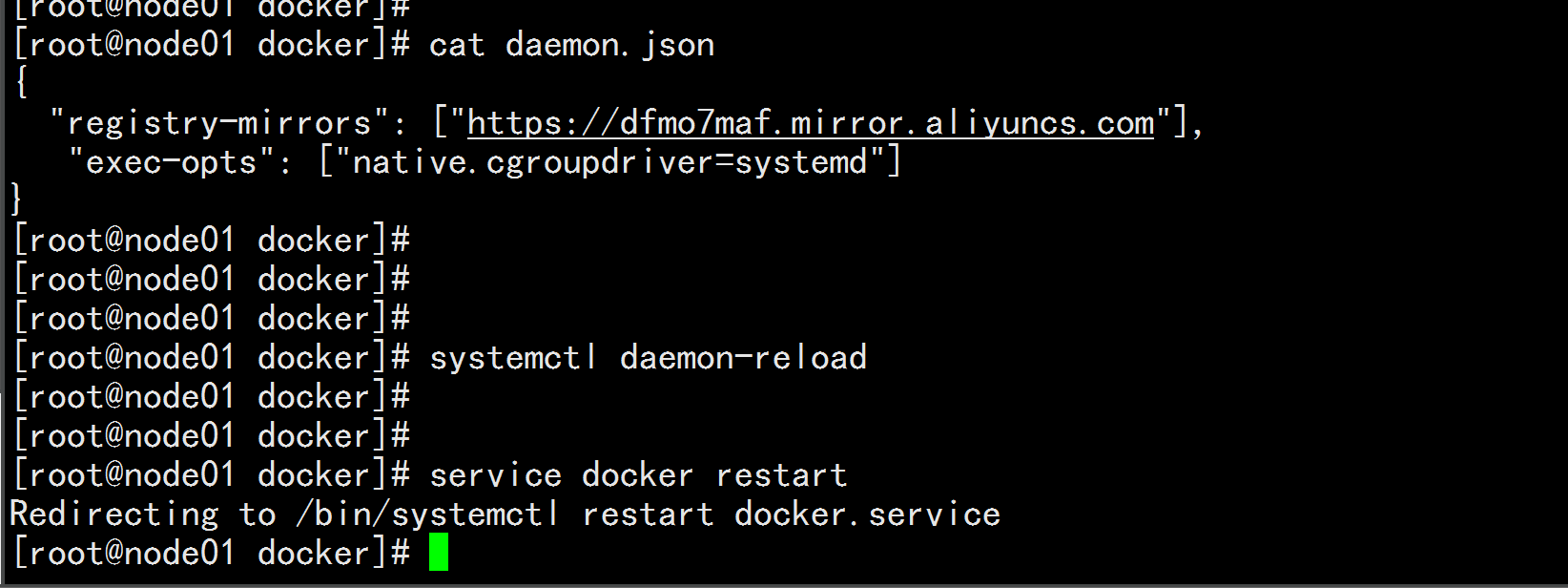

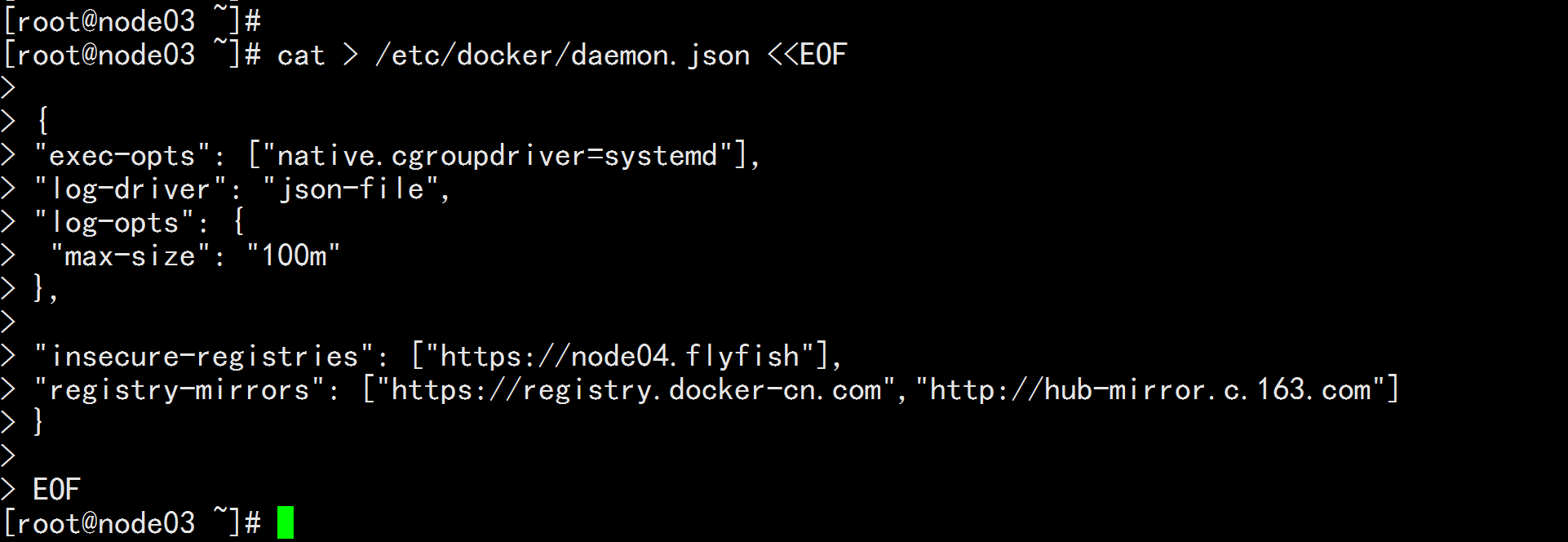

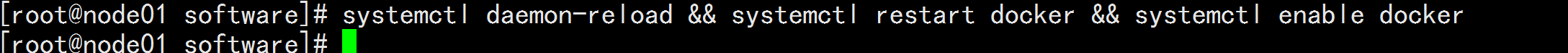

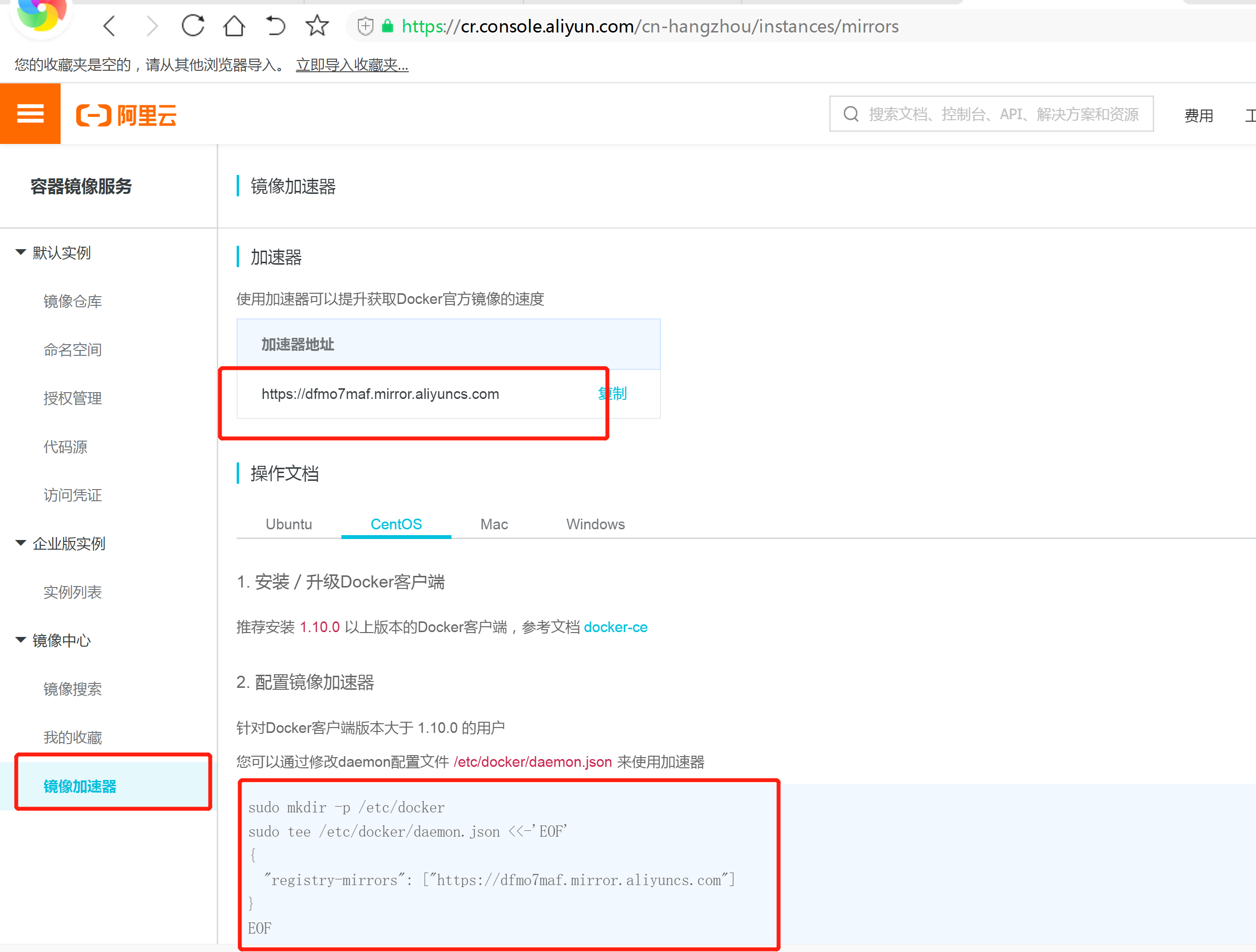

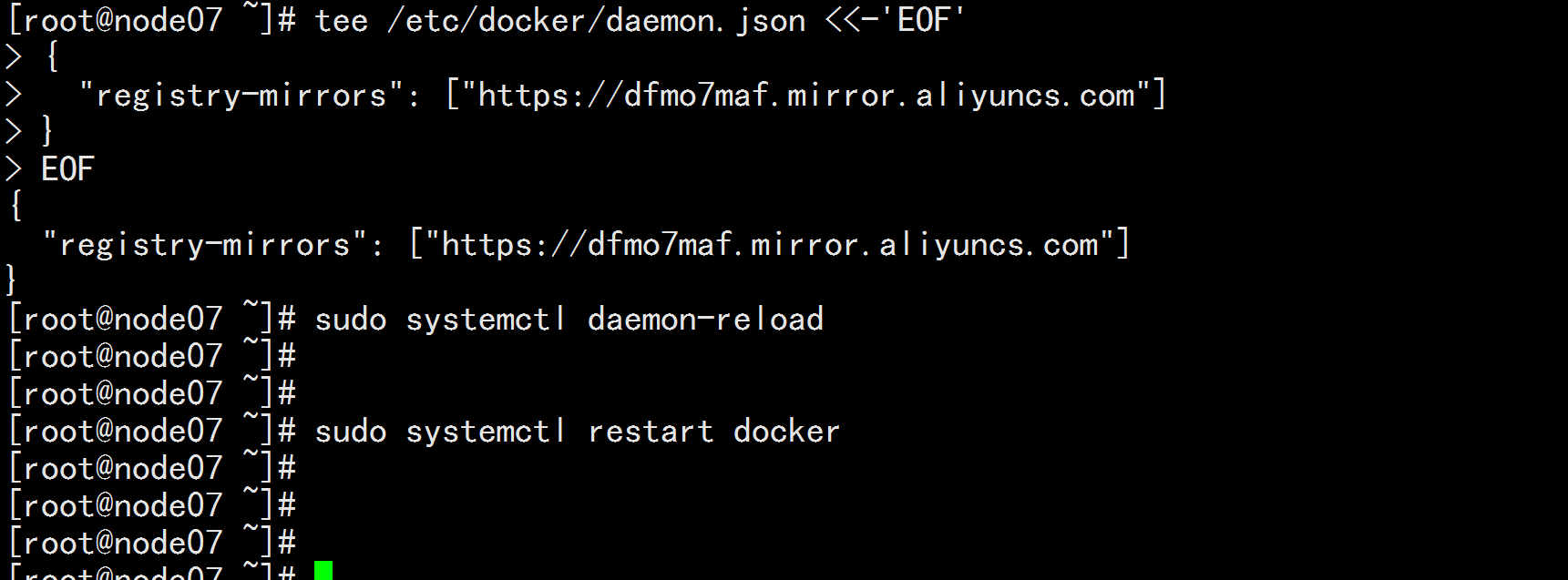

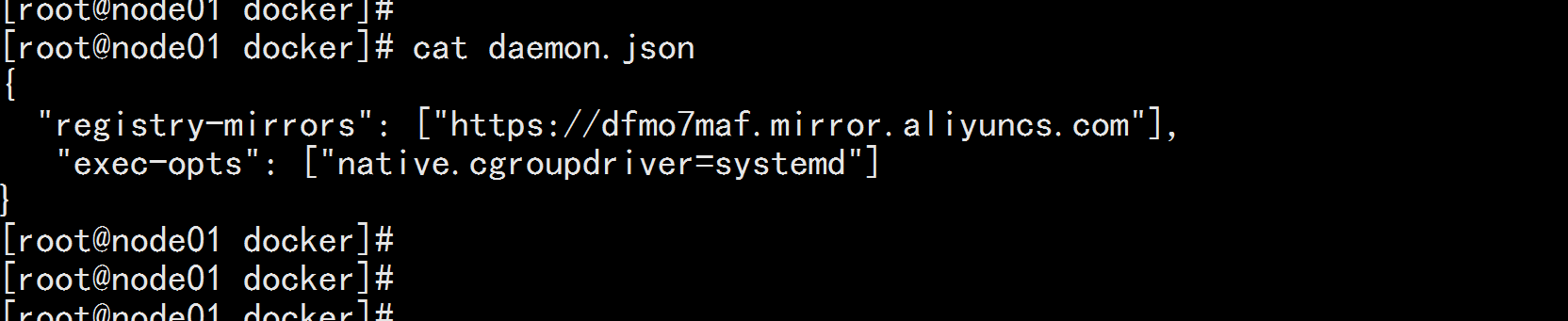

Run on all nodes:

```bash
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum update -y && yum install docker-ce-18.09.9 docker-ce-cli-18.09.9 containerd.io -y
```

Reboot the machine (`reboot`) and check the kernel version with `uname -r`. `yum update` may install a newer 3.10 kernel and make it the default; if the version is no longer 4.4, set the default again and reboot:

```bash
grub2-set-default "CentOS Linux (4.4.182-1.el7.elrepo.x86_64) 7 (Core)" && reboot
```

If that still does not work, edit /etc/grub2.cfg (`vim /etc/grub2.cfg`) and comment out the 3.10 kernel entries so that the kernel stays at 4.4.

Then start Docker and configure the daemon:

```bash
service docker start
chkconfig docker on

# Create the /etc/docker directory
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "insecure-registries": ["https://node04.flyfish"],
  "registry-mirrors": ["https://registry.docker-cn.com","http://hub-mirror.c.163.com"]
}
EOF
mkdir -p /etc/systemd/system/docker.service.d
# Restart the Docker service
systemctl daemon-reload && systemctl restart docker && systemctl enable docker
```
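daemon.json switches Docker to the systemd cgroup driver because kubeadm's preflight checks warn when Docker uses `cgroupfs` instead. After the restart, `docker info --format '{{.CgroupDriver}}'` should print `systemd`. The sketch below is a hypothetical file-level check that also works on a machine where Docker is not yet running.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the given daemon.json selects the systemd cgroup driver.
uses_systemd_cgroup() {
    grep -q '"native.cgroupdriver=systemd"' "${1:-/etc/docker/daemon.json}"
}

# Usage:
#   uses_systemd_cgroup && docker info --format '{{.CgroupDriver}}'   # expect: systemd
```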

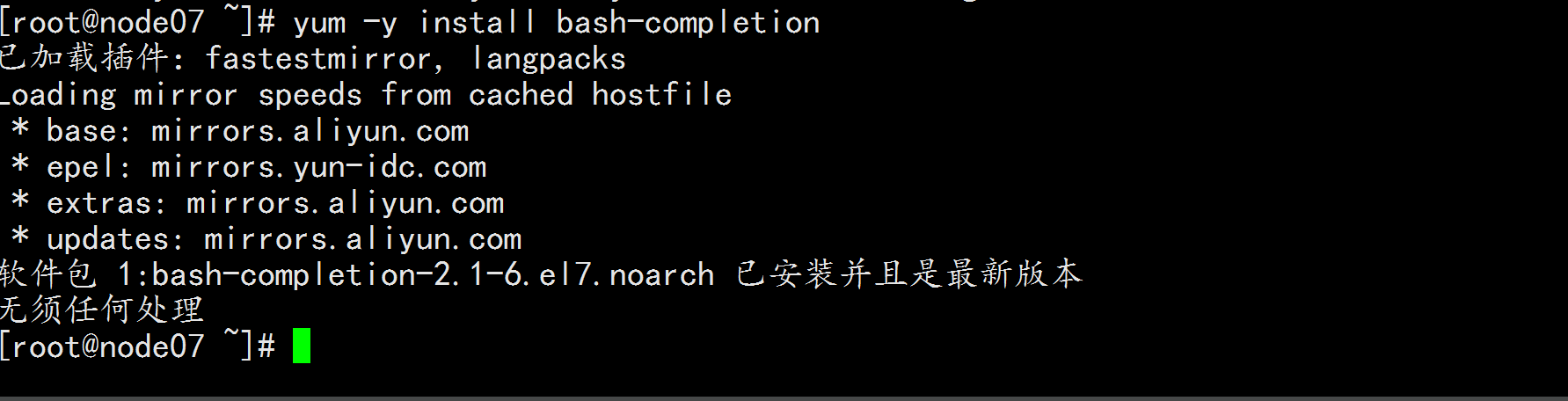

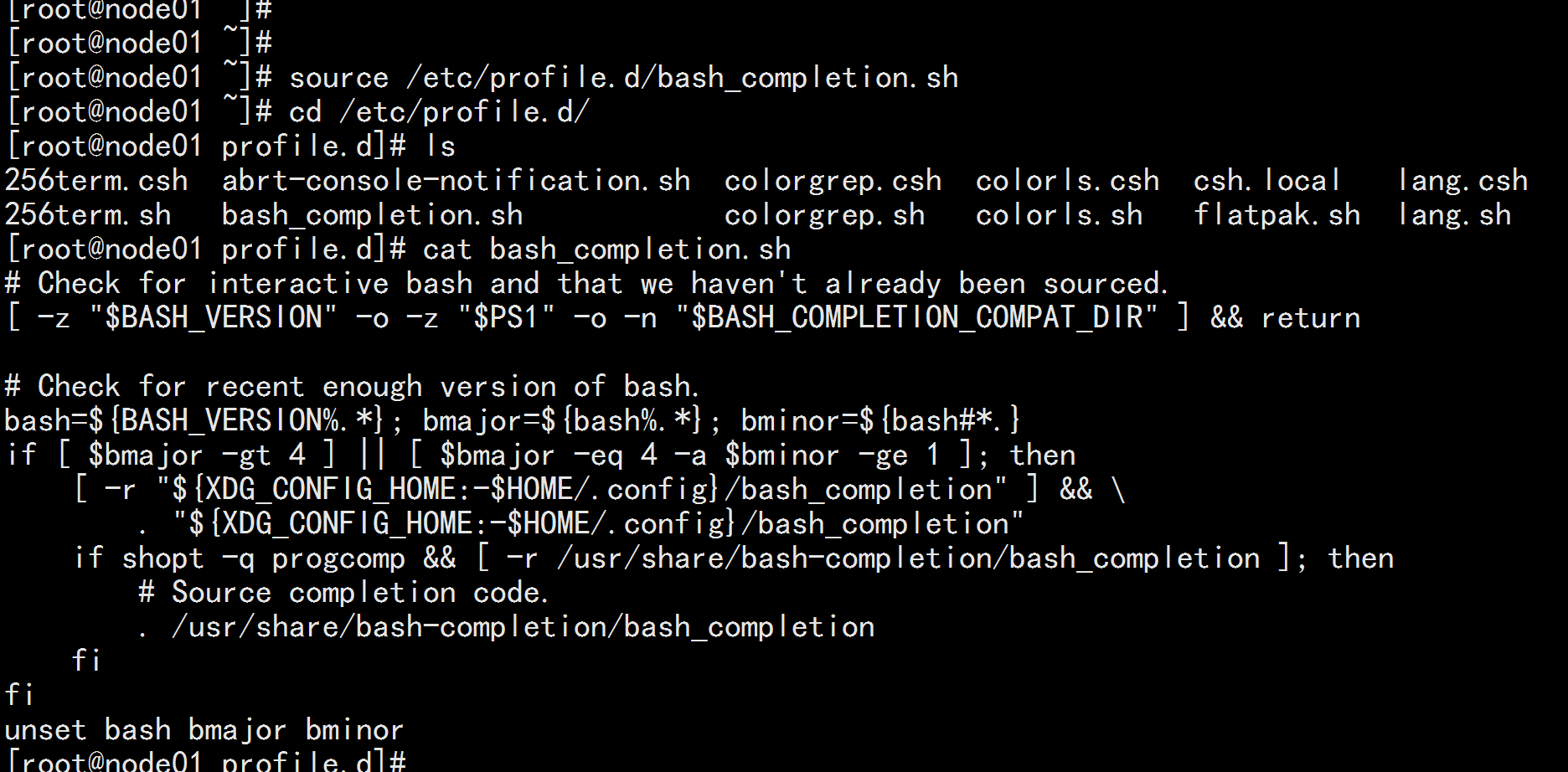

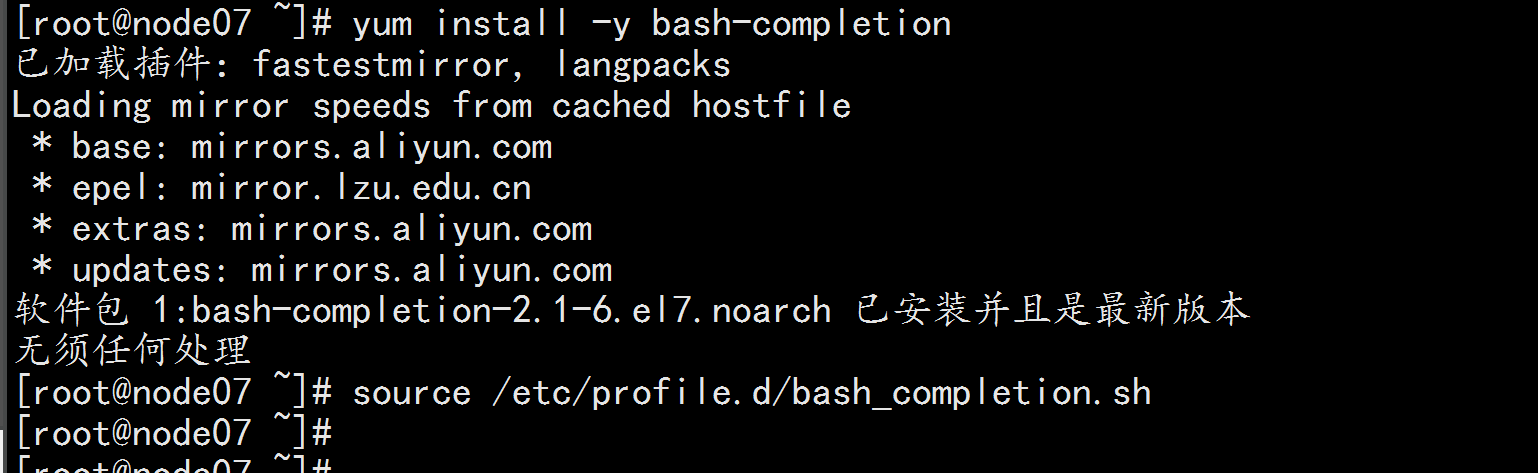

Install the command-line completion tool:

```bash
yum -y install bash-completion
source /etc/profile.d/bash_completion.sh
```

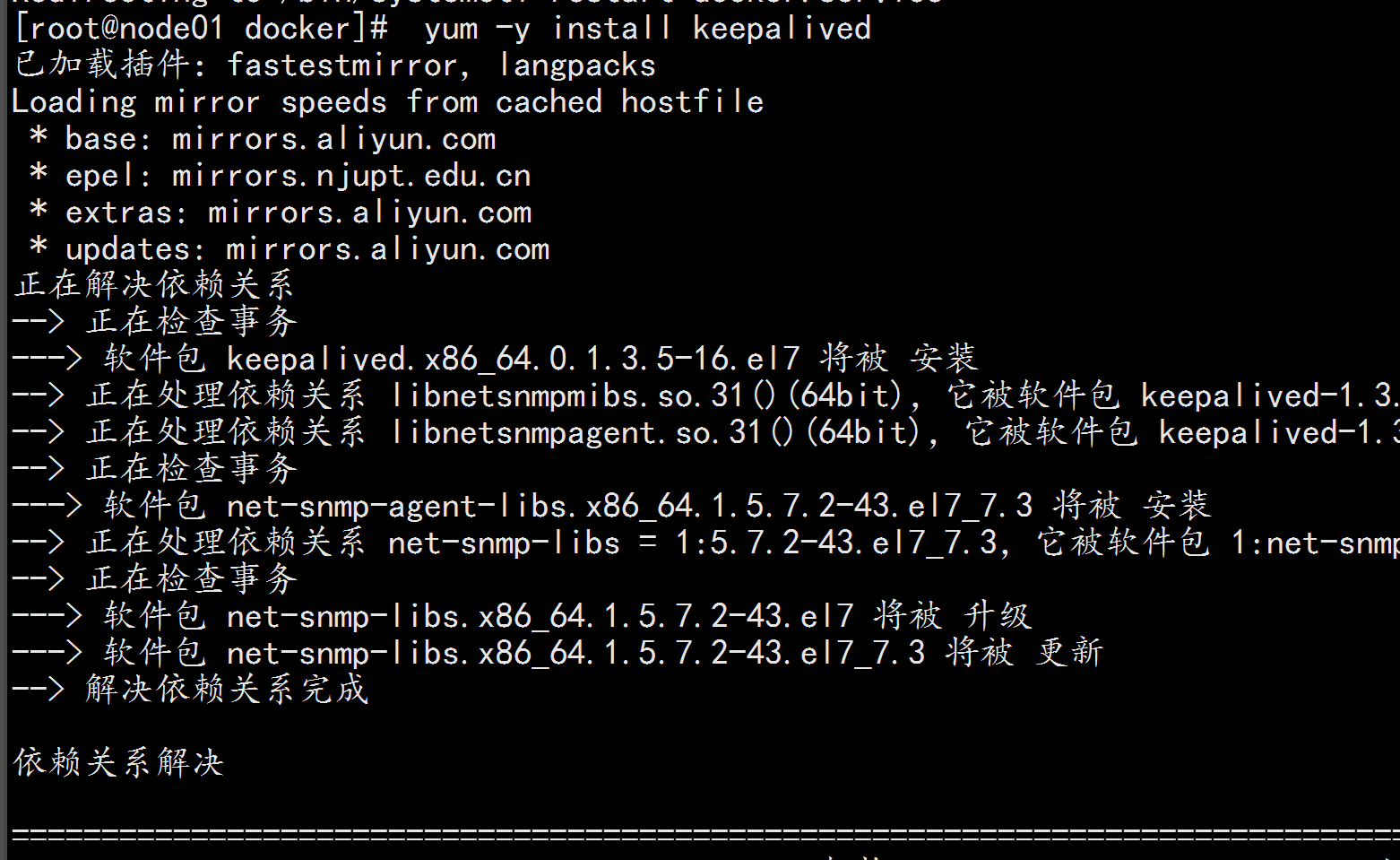

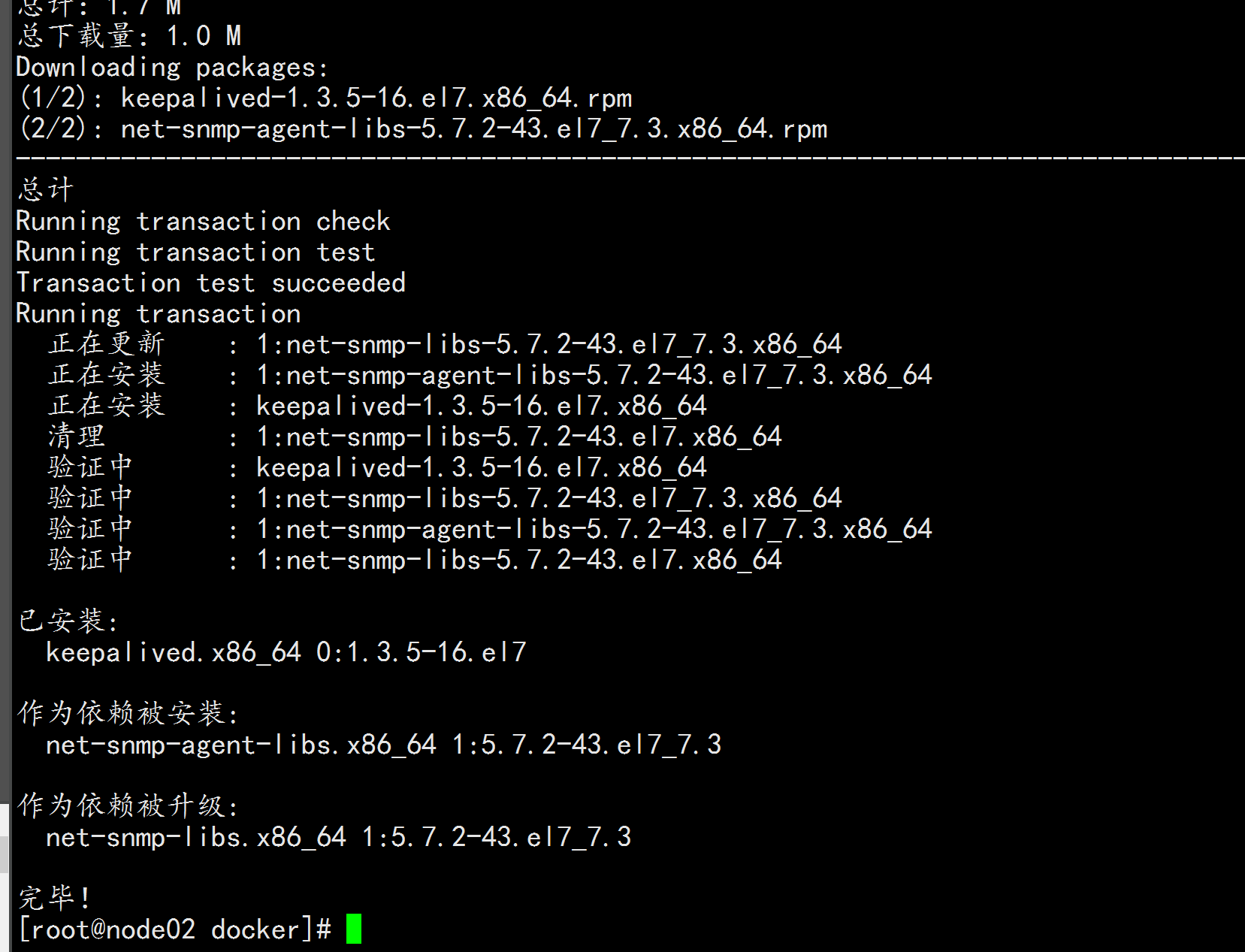

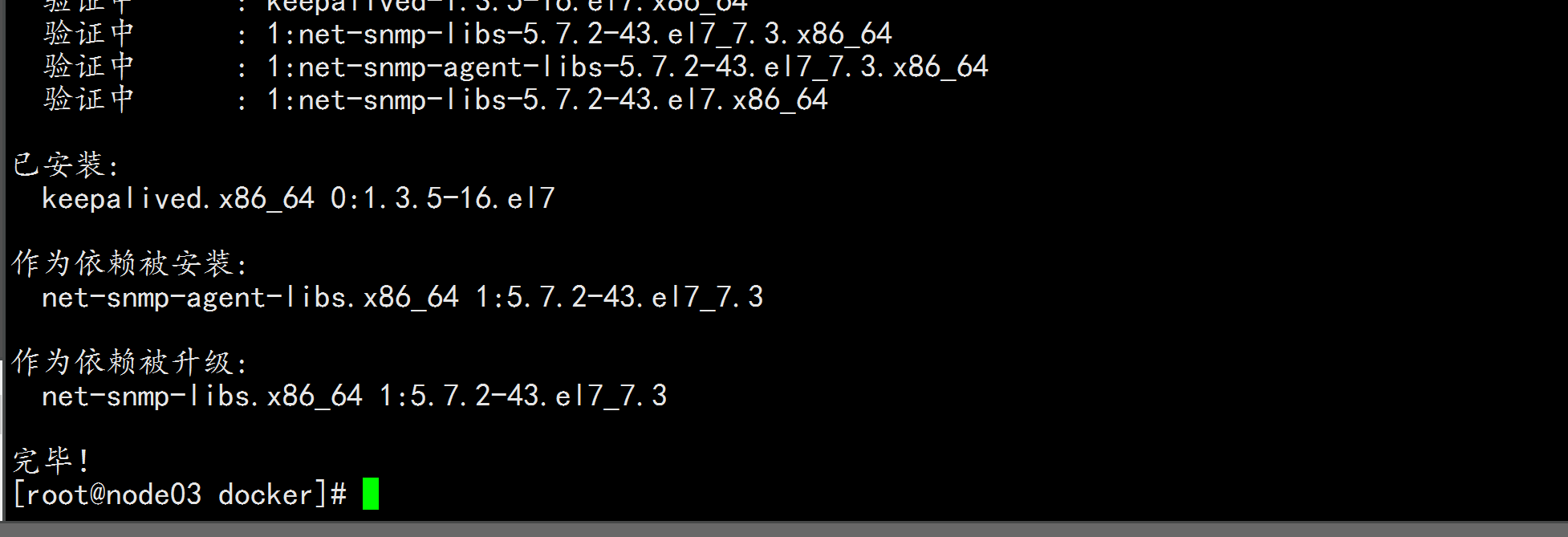

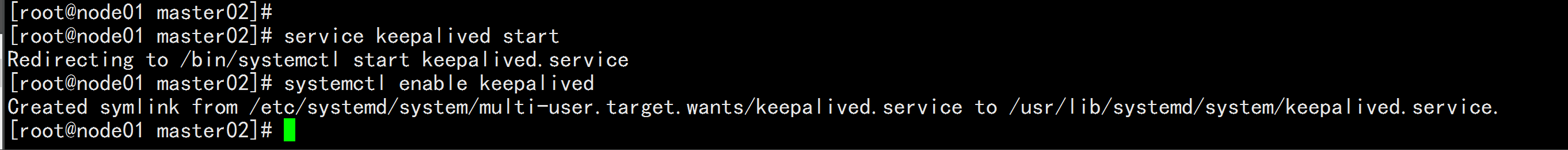

4: Install keepalived

Run this part on all control-plane nodes. Install keepalived:

```bash
yum install -y keepalived
```
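The article installs keepalived but does not show its configuration. A minimal VRRP sketch for the VIP 192.168.100.100, assuming the NIC is named `ens33`, node01.flyfish is MASTER with the highest priority, and node02/node03 are BACKUP with lower priorities. The generator function, interface name, `virtual_router_id`, priorities, and password are all placeholders to adapt; a production setup would usually also add a `vrrp_script` health check on kube-apiserver.

```shell
#!/bin/bash
# Hypothetical generator for /etc/keepalived/keepalived.conf.
# Arguments: state (MASTER|BACKUP), priority, interface (default ens33).
gen_keepalived_conf() {
    state="$1"; priority="$2"; iface="${3:-ens33}"
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id $(uname -n)
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass k8s_ha
    }
    virtual_ipaddress {
        192.168.100.100
    }
}
EOF
}

# Usage (as root):
#   node01: gen_keepalived_conf MASTER 100 > /etc/keepalived/keepalived.conf
#   node02: gen_keepalived_conf BACKUP 90  > /etc/keepalived/keepalived.conf
#   node03: gen_keepalived_conf BACKUP 80  > /etc/keepalived/keepalived.conf
#   systemctl enable keepalived && systemctl start keepalived
```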

5: Install Kubernetes

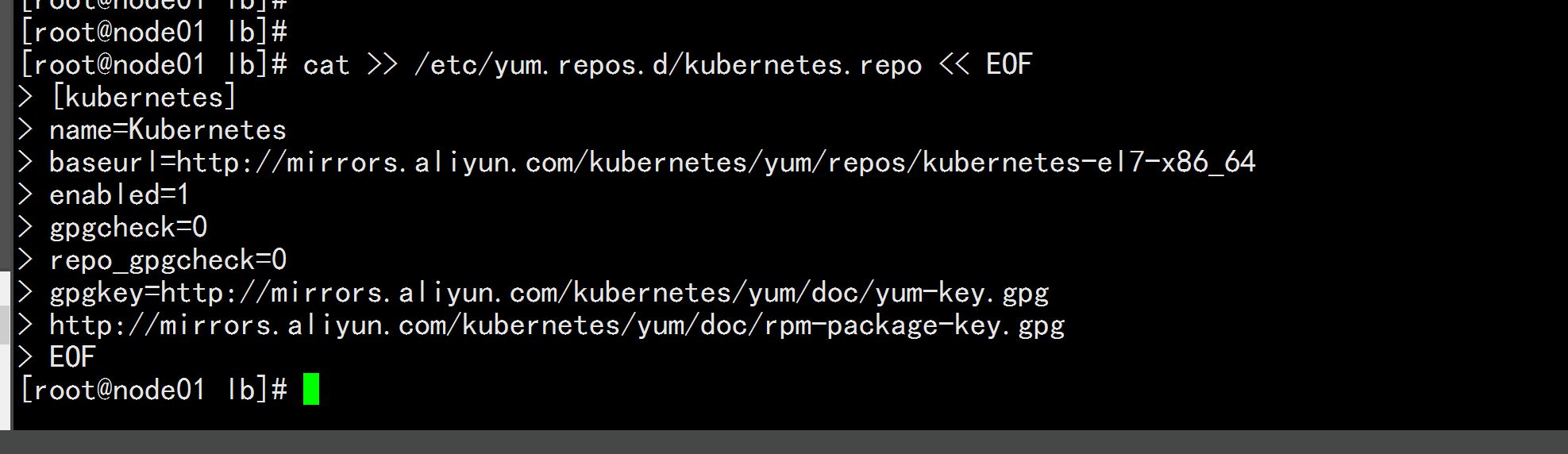

5.1 Install kubeadm (master and worker nodes)

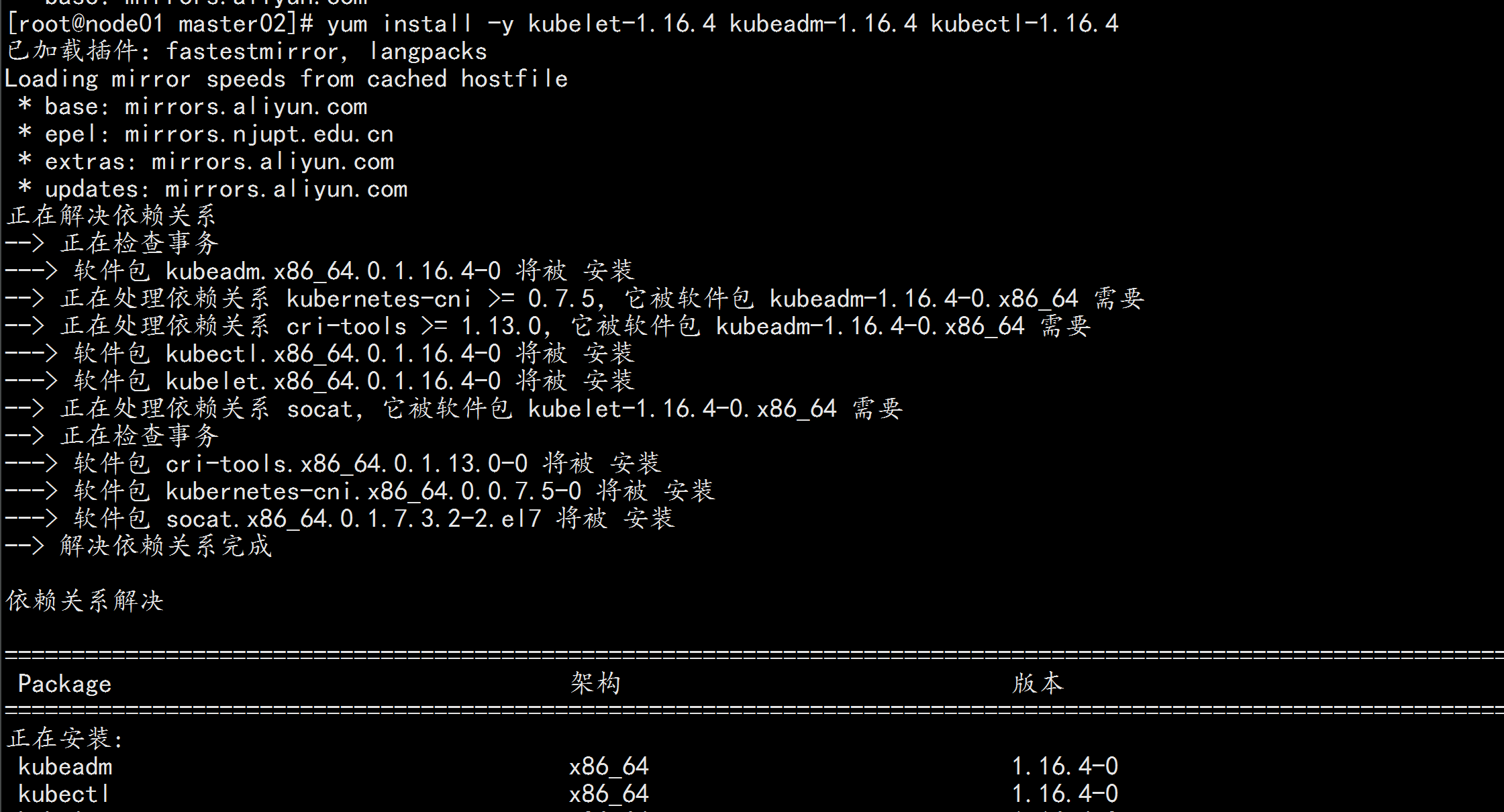

Run this part on both control-plane and worker nodes. Add the Kubernetes yum repository:

```bash
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

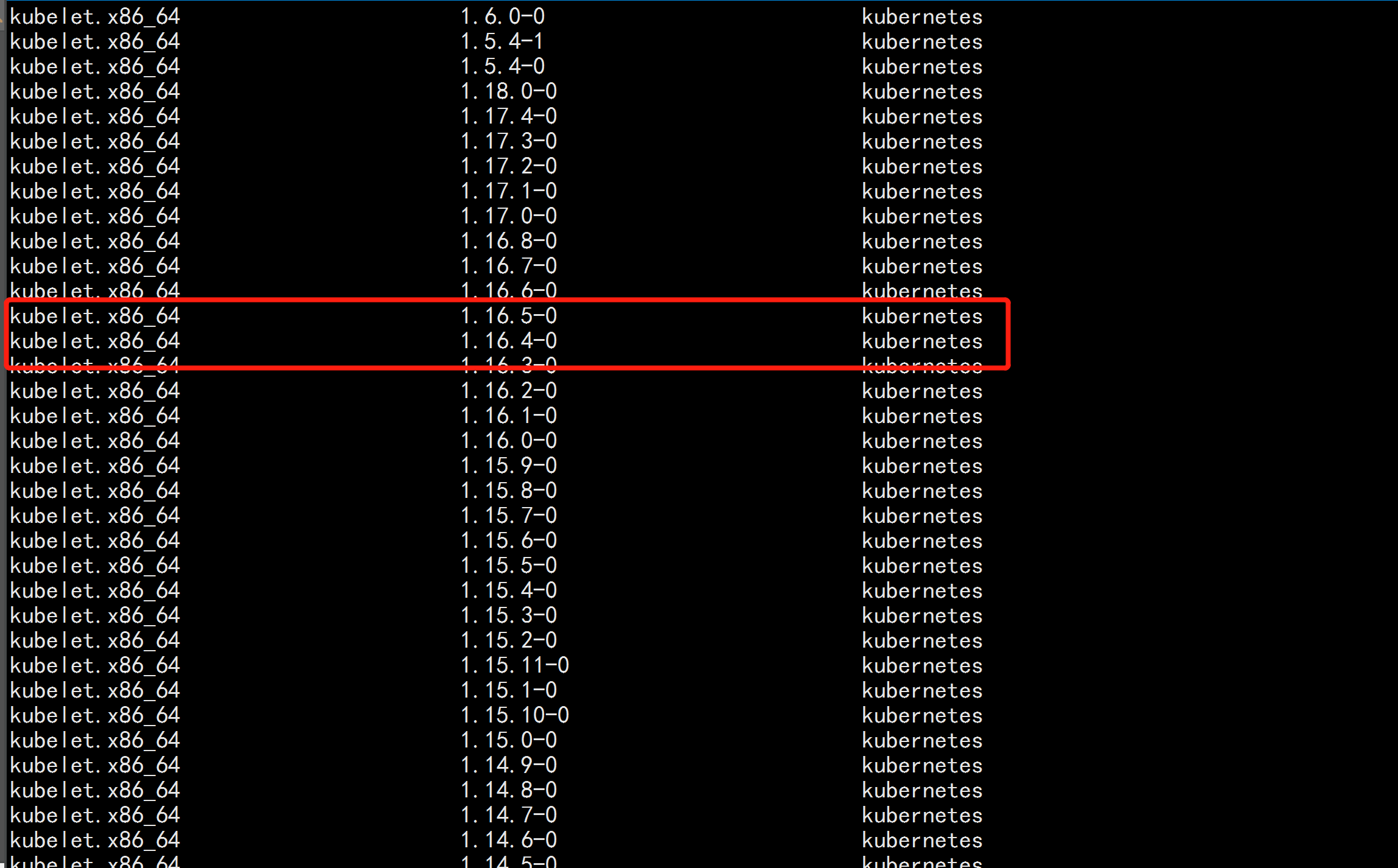

List the available versions:

```bash
yum list kubelet --showduplicates | sort -r
```

This article installs kubelet 1.16.4, which supports Docker versions 1.13.1, 17.03, 17.06, 17.09, 18.06 and 18.09.
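The install command itself is not spelled out in the text. One way to install the pinned 1.16.4 packages, sketched with a hypothetical helper that builds the `name-version` list; `yum install` with versioned package names and `systemctl enable kubelet` are standard, but treat the exact invocation as an assumption.

```shell
#!/bin/bash
# Hypothetical helper: print version-pinned package names for yum.
k8s_packages() {
    version="$1"
    for pkg in kubelet kubeadm kubectl; do
        printf '%s-%s\n' "$pkg" "$version"
    done
}

# Usage (as root, on every control-plane and worker node):
#   yum install -y $(k8s_packages 1.16.4)
#   systemctl enable kubelet
```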

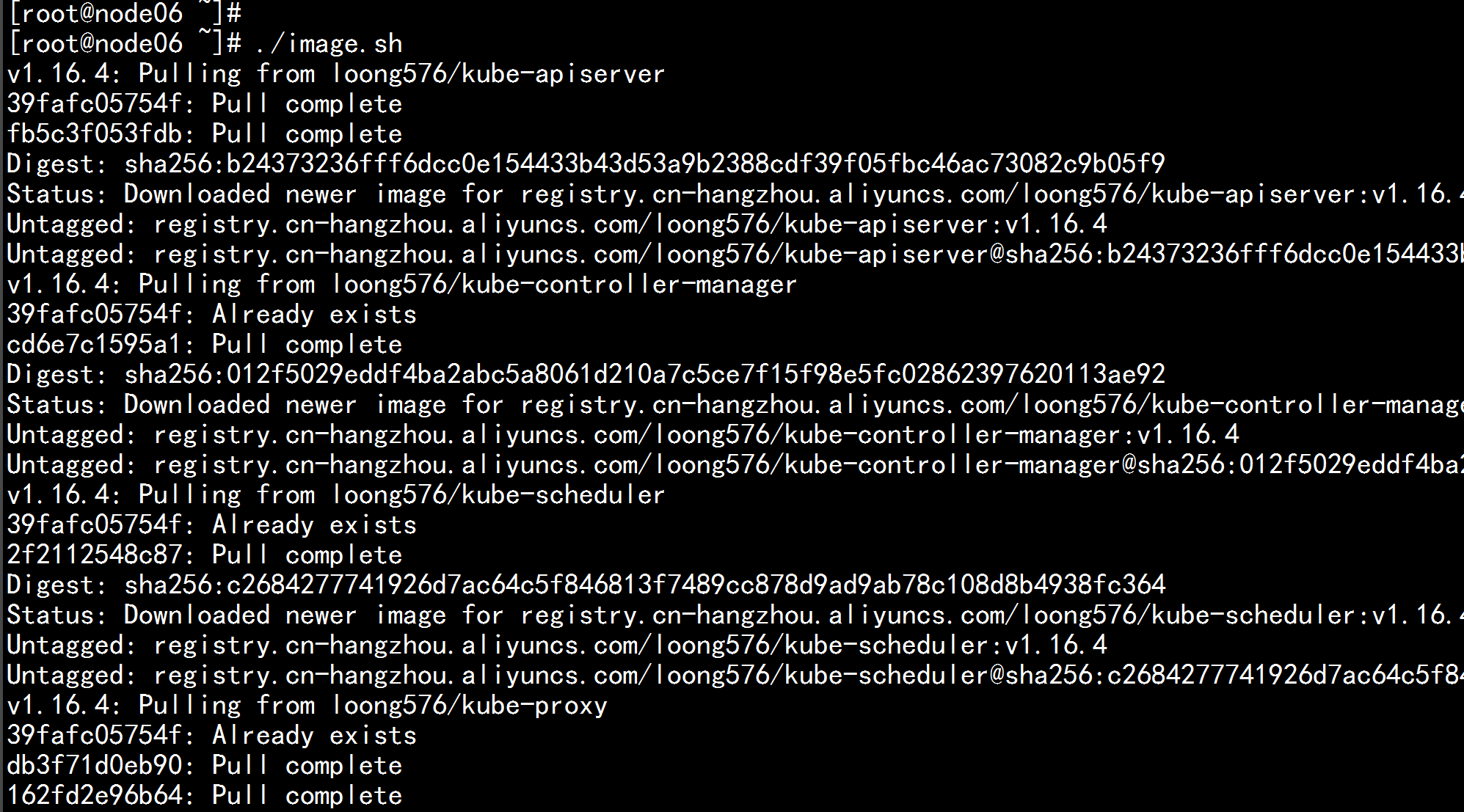

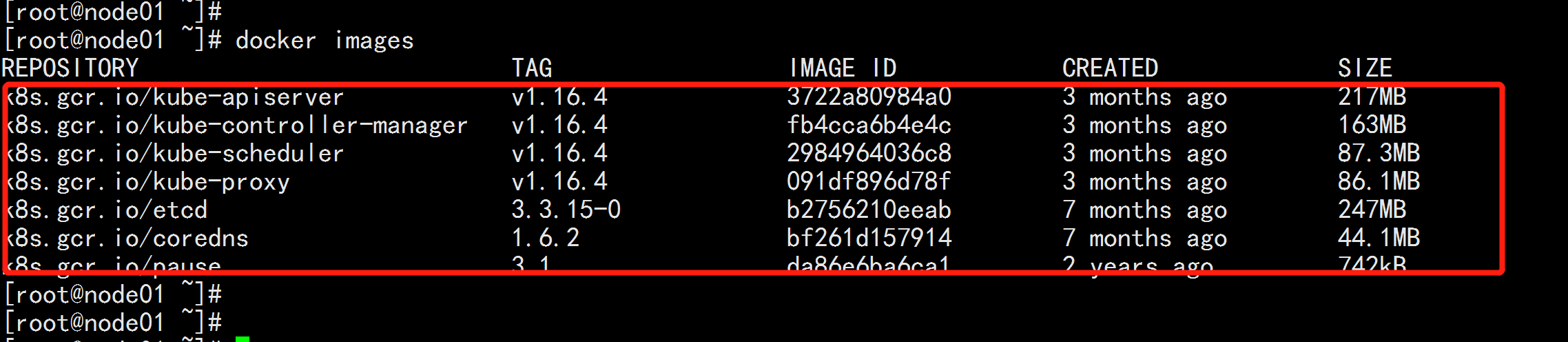

5.2 Pull the images

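Image download script: almost all Kubernetes components and their Docker images are hosted on Google's own registry (k8s.gcr.io), which may not be reachable directly. The workaround here is to pull the images from an Aliyun mirror repository and, once they are local, retag them with the default names. This article pulls the images with the image.sh script. Create it (`vim image.sh`) with the following content:

```bash
#!/bin/bash
url=registry.cn-hangzhou.aliyuncs.com/loong576
version=v1.16.4
# Image names without the k8s.gcr.io/ prefix, e.g. kube-apiserver:v1.16.4
images=($(kubeadm config images list --kubernetes-version=$version | awk -F '/' '{print $2}'))
for imagename in "${images[@]}"; do
    docker pull $url/$imagename
    docker tag $url/$imagename k8s.gcr.io/$imagename
    docker rmi -f $url/$imagename
done
```

Then run it and check the images:

```bash
chmod +x image.sh
./image.sh
docker images
```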
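Before pulling, it can help to see exactly which names image.sh will pull and retag. A dry-run sketch of the same mapping: it reads the output of `kubeadm config images list` on stdin and prints the mirror source and target tag for each image. The function name is hypothetical; the default mirror path is the one used in image.sh.

```shell
#!/bin/bash
# Hypothetical dry run of image.sh: map k8s.gcr.io image names to the mirror.
# Input: lines such as "k8s.gcr.io/kube-apiserver:v1.16.4" on stdin.
plan_image_pulls() {
    mirror="${1:-registry.cn-hangzhou.aliyuncs.com/loong576}"
    while read -r ref; do
        [ -n "$ref" ] || continue
        name="${ref##*/}"                   # strip the registry prefix
        echo "pull ${mirror}/${name} -> tag k8s.gcr.io/${name}"
    done
}

# Usage: kubeadm config images list --kubernetes-version=v1.16.4 | plan_image_pulls
```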

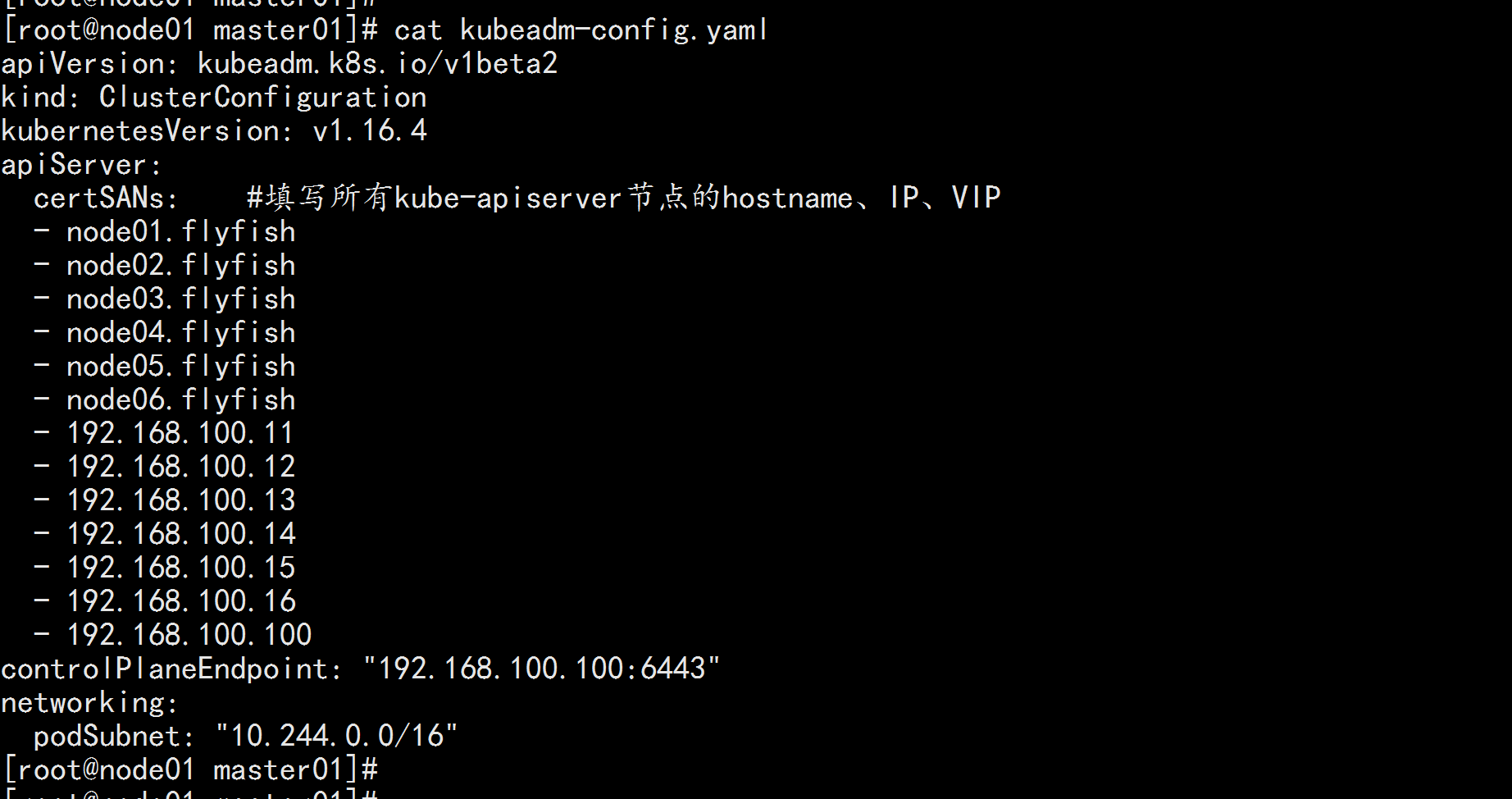

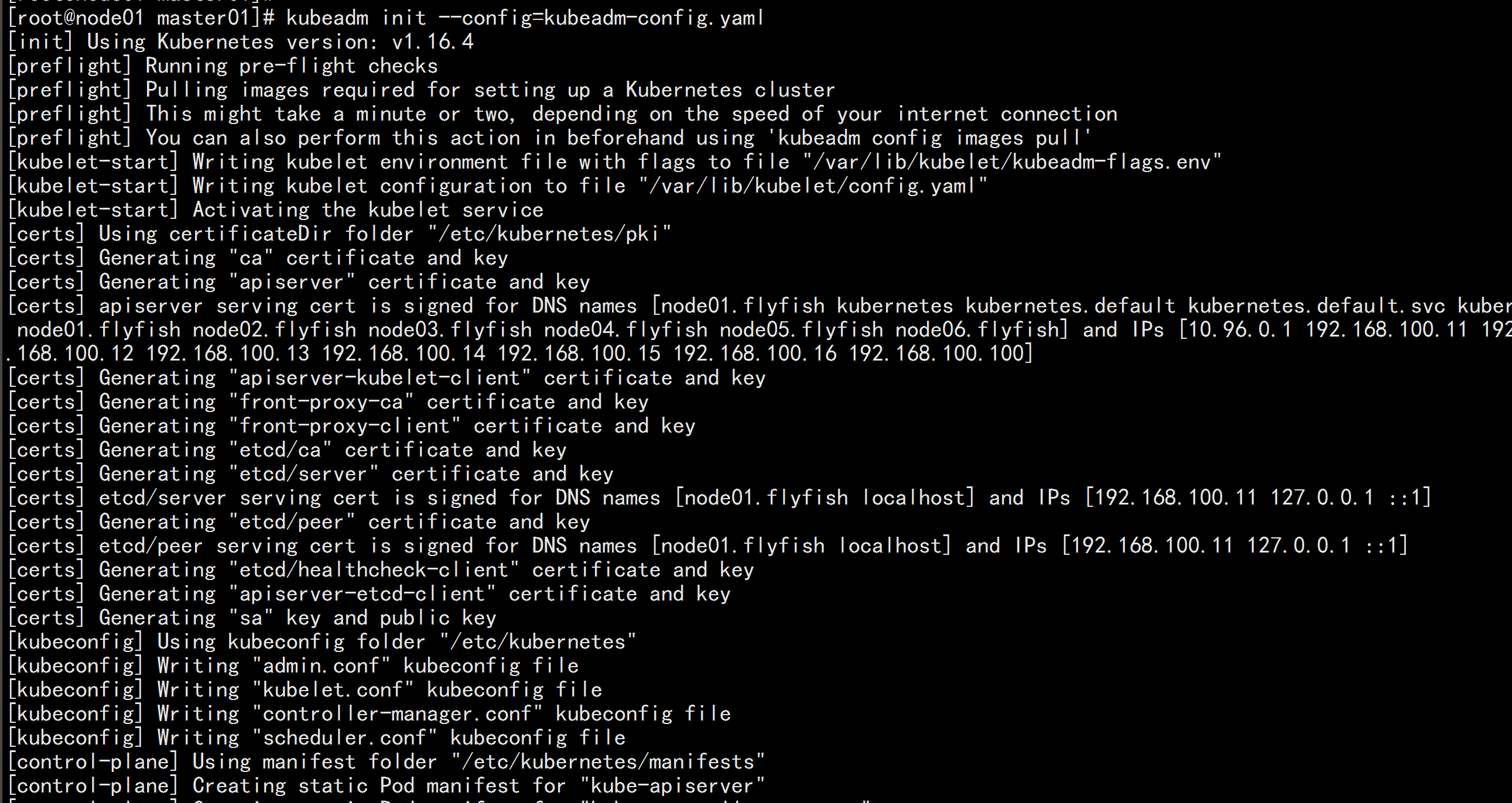

Initialize the first control-plane node (node01.flyfish):

```bash
kubeadm init --config=kubeadm-config.yaml
```

Output on success:

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join 192.168.100.100:6443 --token 3j4th7.4va6qsj7at7ky2qs \
    --discovery-token-ca-cert-hash sha256:13d17c476688e4e78837b9cac94efa7edf689bf530a2120e2b81bf13b588fff9 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.100.100:6443 --token 3j4th7.4va6qsj7at7ky2qs \
    --discovery-token-ca-cert-hash sha256:13d17c476688e4e78837b9cac94efa7edf689bf530a2120e2b81bf13b588fff9
```
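The kubeadm-config.yaml passed to `kubeadm init` is not shown in the original; it has to exist on node01.flyfish before init runs. A minimal sketch, generated with a hypothetical heredoc function, assuming: the keepalived VIP 192.168.100.100:6443 as `controlPlaneEndpoint` (consistent with the join commands in the init output), pod subnet 10.244.0.0/16 (flannel's default network), and kube-proxy in IPVS mode to match section 2.8. The default image repository (k8s.gcr.io) matches the tags created by image.sh.

```shell
#!/bin/bash
# Hypothetical generator for kubeadm-config.yaml (kubeadm.k8s.io/v1beta2, Kubernetes 1.16).
# Assumed values: VIP endpoint, flannel's default pod network, IPVS kube-proxy.
gen_kubeadm_config() {
    cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.4
controlPlaneEndpoint: "192.168.100.100:6443"
networking:
  podSubnet: "10.244.0.0/16"
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage (on node01.flyfish, before kubeadm init):
#   gen_kubeadm_config > kubeadm-config.yaml
```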

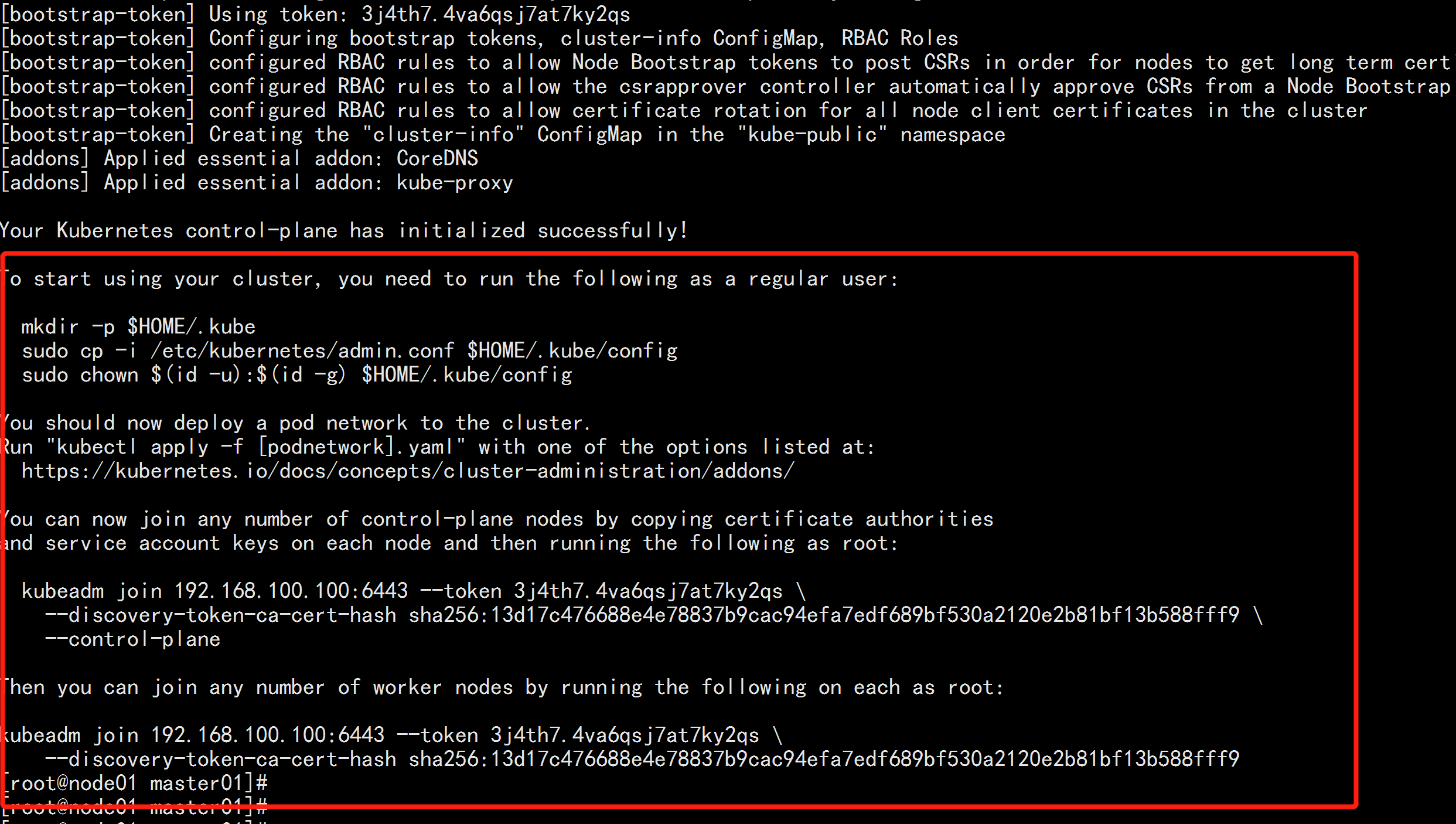

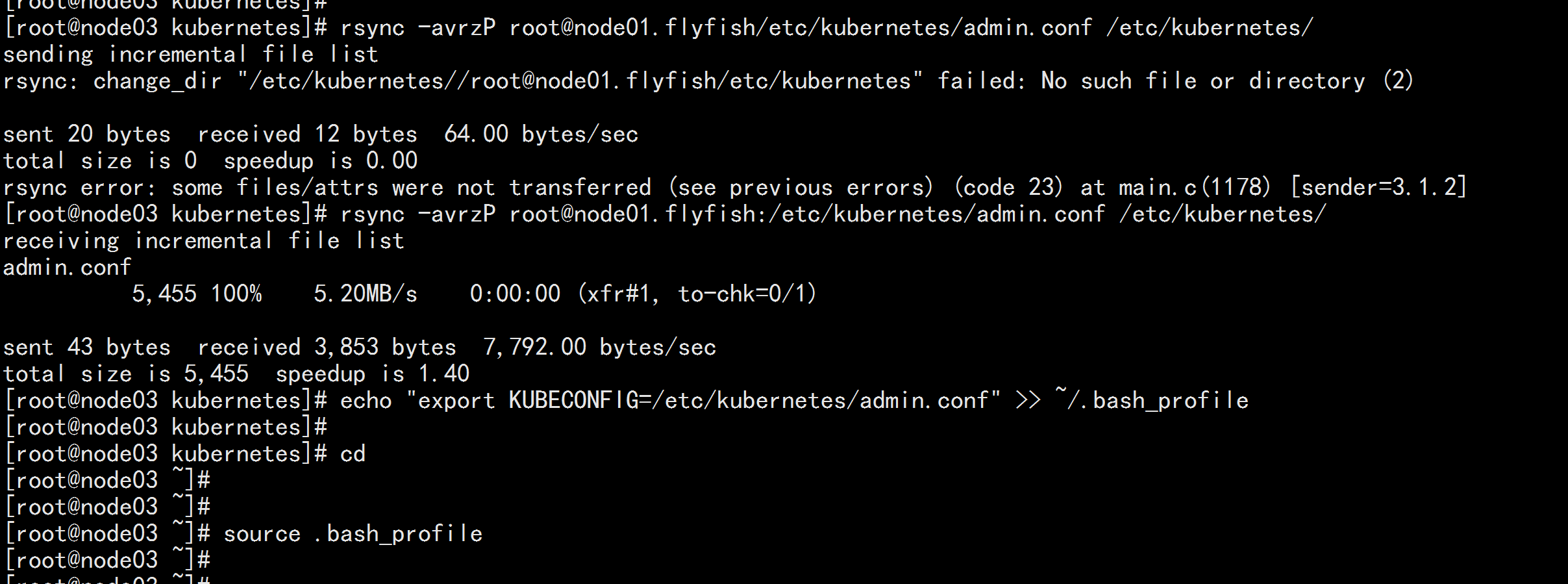

Load the environment variable:

```bash
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile
```

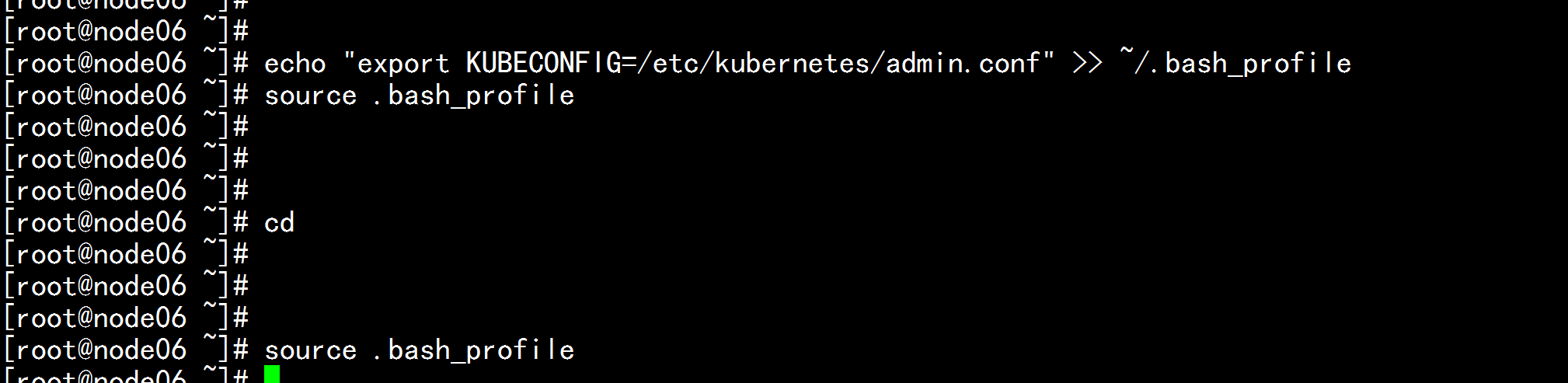

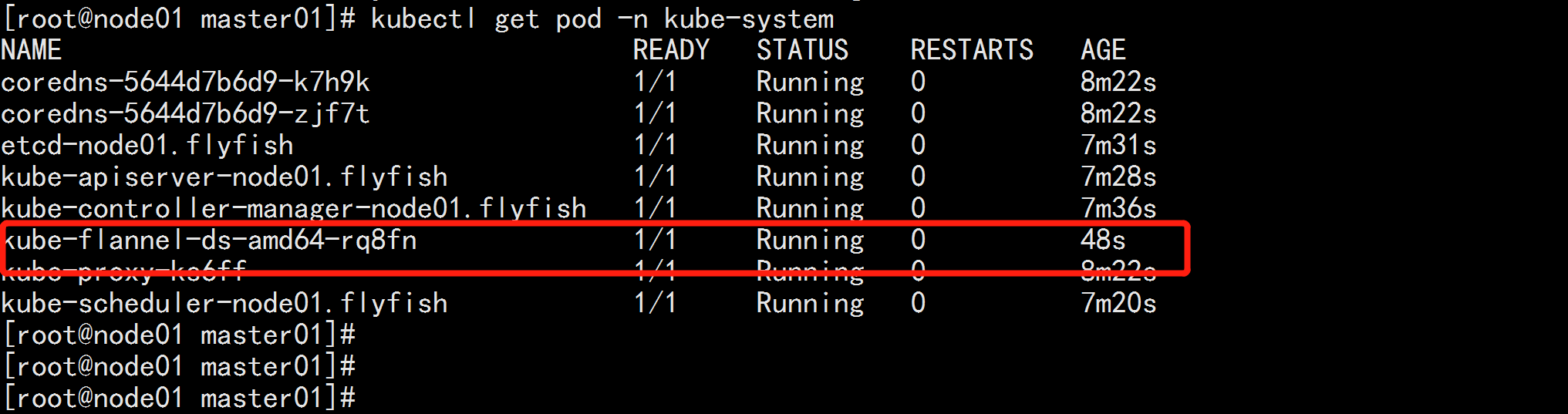

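Install the flannel network:

```bash
# Apply the manifest directly from the URL...
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml
# ...or download kube-flannel.yml first and apply the local copy instead:
# kubectl apply -f kube-flannel.yml
kubectl get pod -n kube-system
```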

5.3 Join the remaining control-plane nodes to the cluster

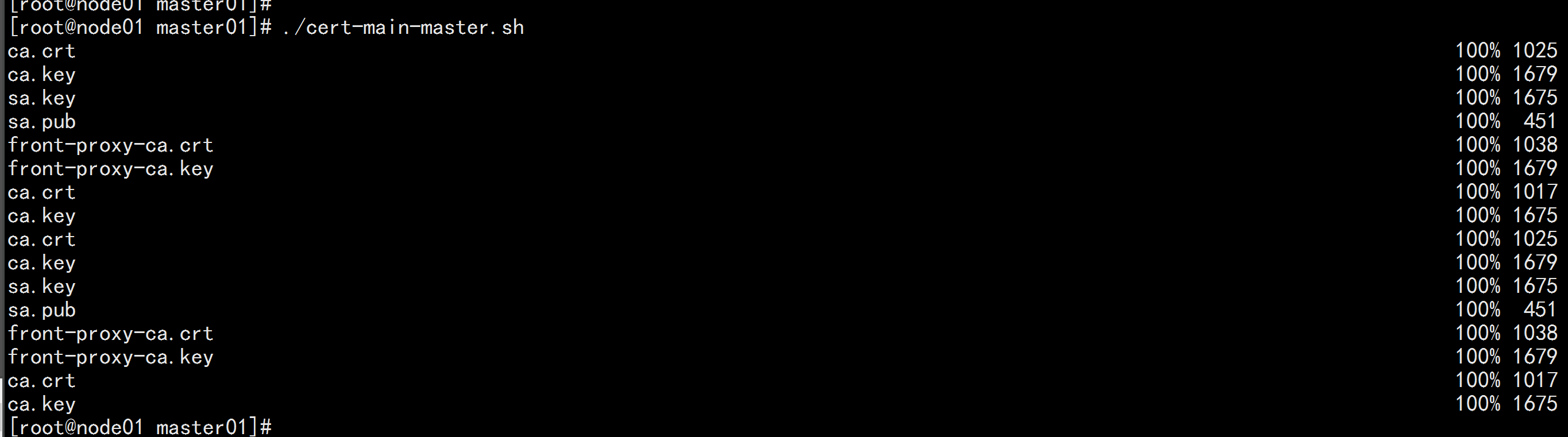

Distribute the certificates: on node01.flyfish, create the script cert-main-master.sh (`vim cert-main-master.sh`) and run it. It copies the CA and service-account keys to the other two control-plane nodes with scp, so node01.flyfish needs SSH access as root to those nodes.

```bash
#!/bin/bash
USER=root # customizable
CONTROL_PLANE_IPS="192.168.100.12 192.168.100.13"
for host in ${CONTROL_PLANE_IPS}; do
    scp /etc/kubernetes/pki/ca.crt "${USER}"@$host:
    scp /etc/kubernetes/pki/ca.key "${USER}"@$host:
    scp /etc/kubernetes/pki/sa.key "${USER}"@$host:
    scp /etc/kubernetes/pki/sa.pub "${USER}"@$host:
    scp /etc/kubernetes/pki/front-proxy-ca.crt "${USER}"@$host:
    scp /etc/kubernetes/pki/front-proxy-ca.key "${USER}"@$host:
    # Comment out the next two lines if you are using external etcd
    scp /etc/kubernetes/pki/etcd/ca.crt "${USER}"@$host:etcd-ca.crt
    scp /etc/kubernetes/pki/etcd/ca.key "${USER}"@$host:etcd-ca.key
done
```

```bash
chmod +x cert-main-master.sh
./cert-main-master.sh
```

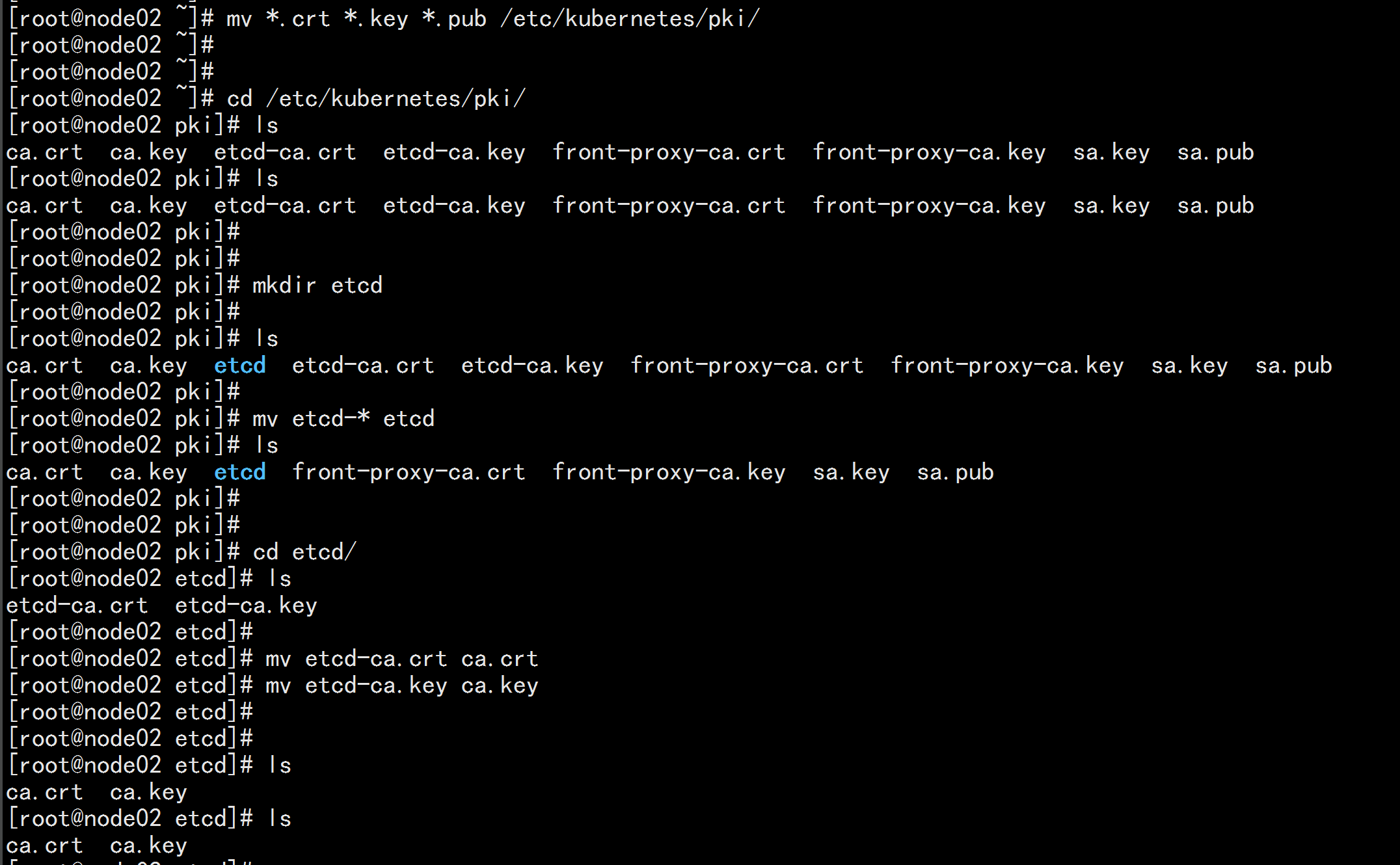

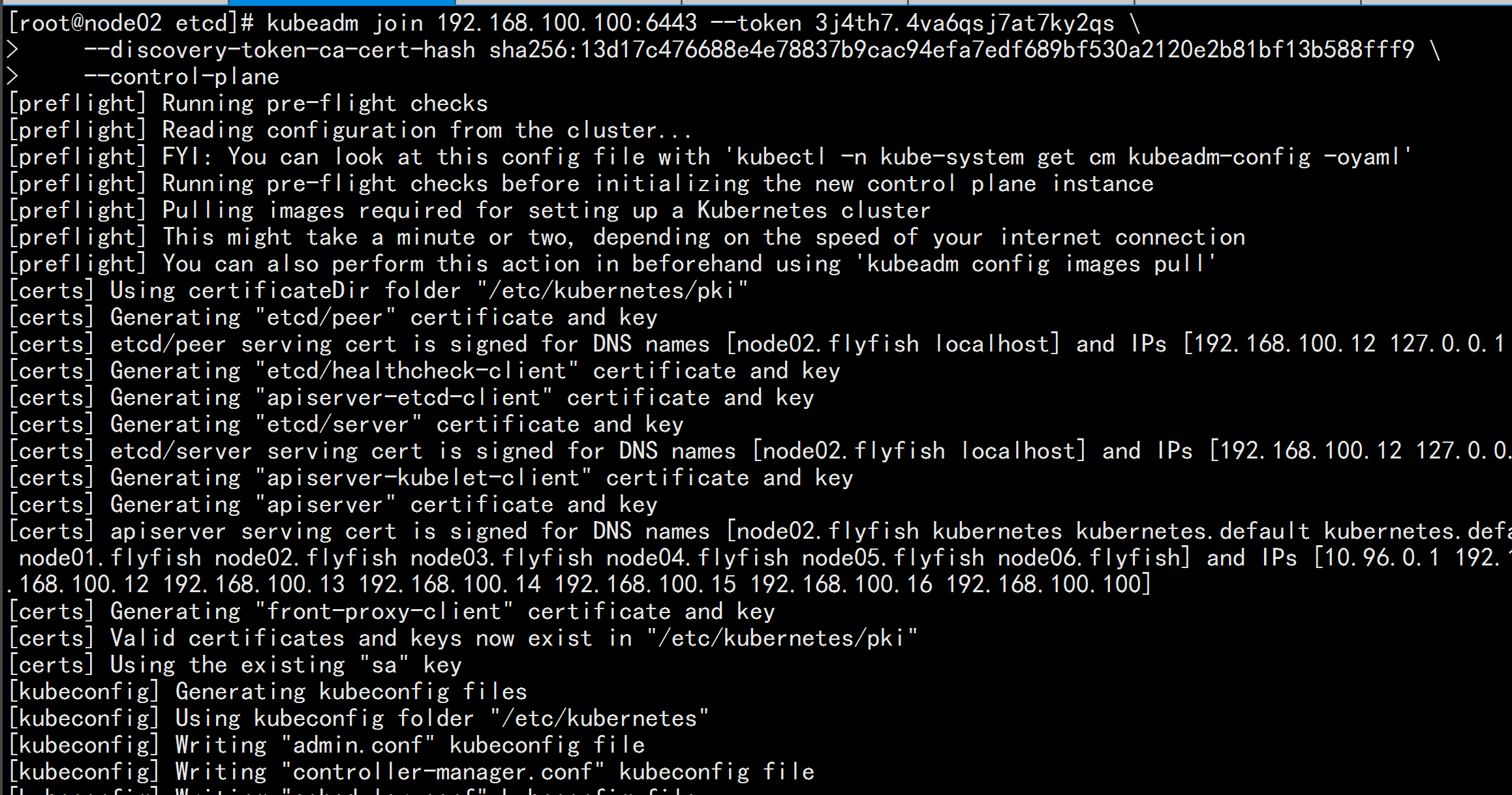

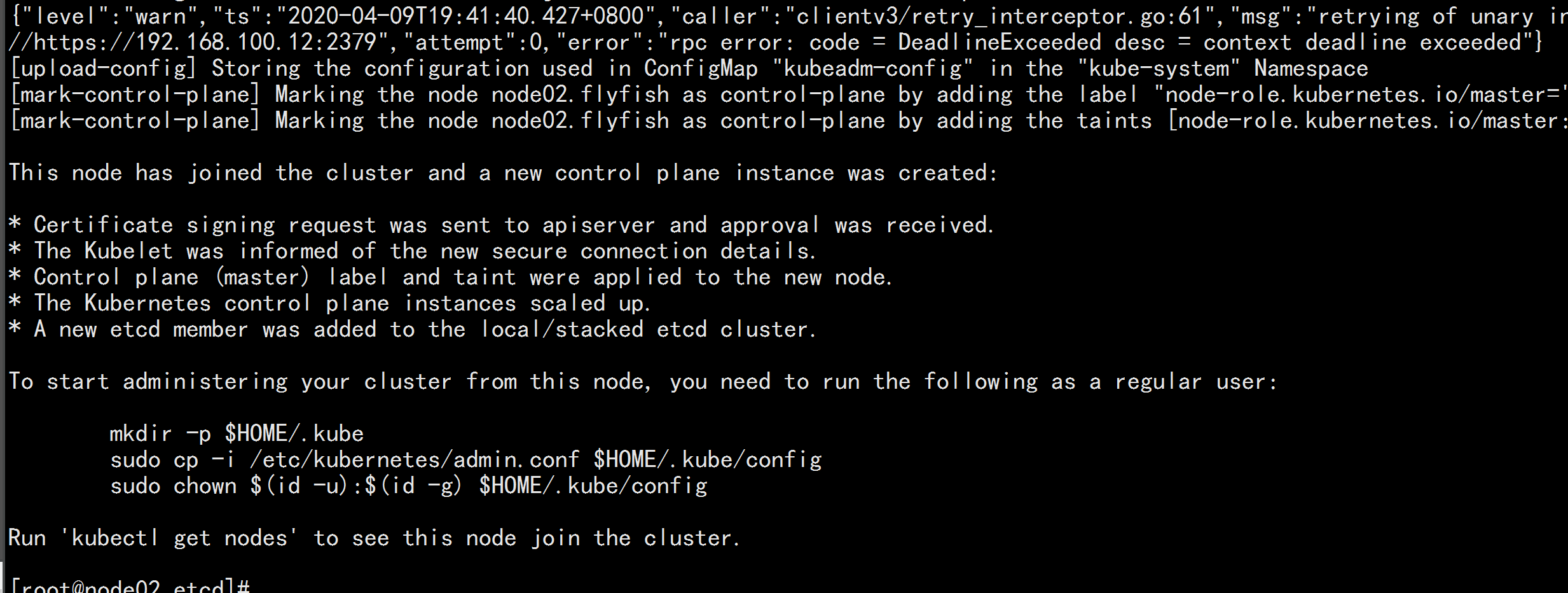

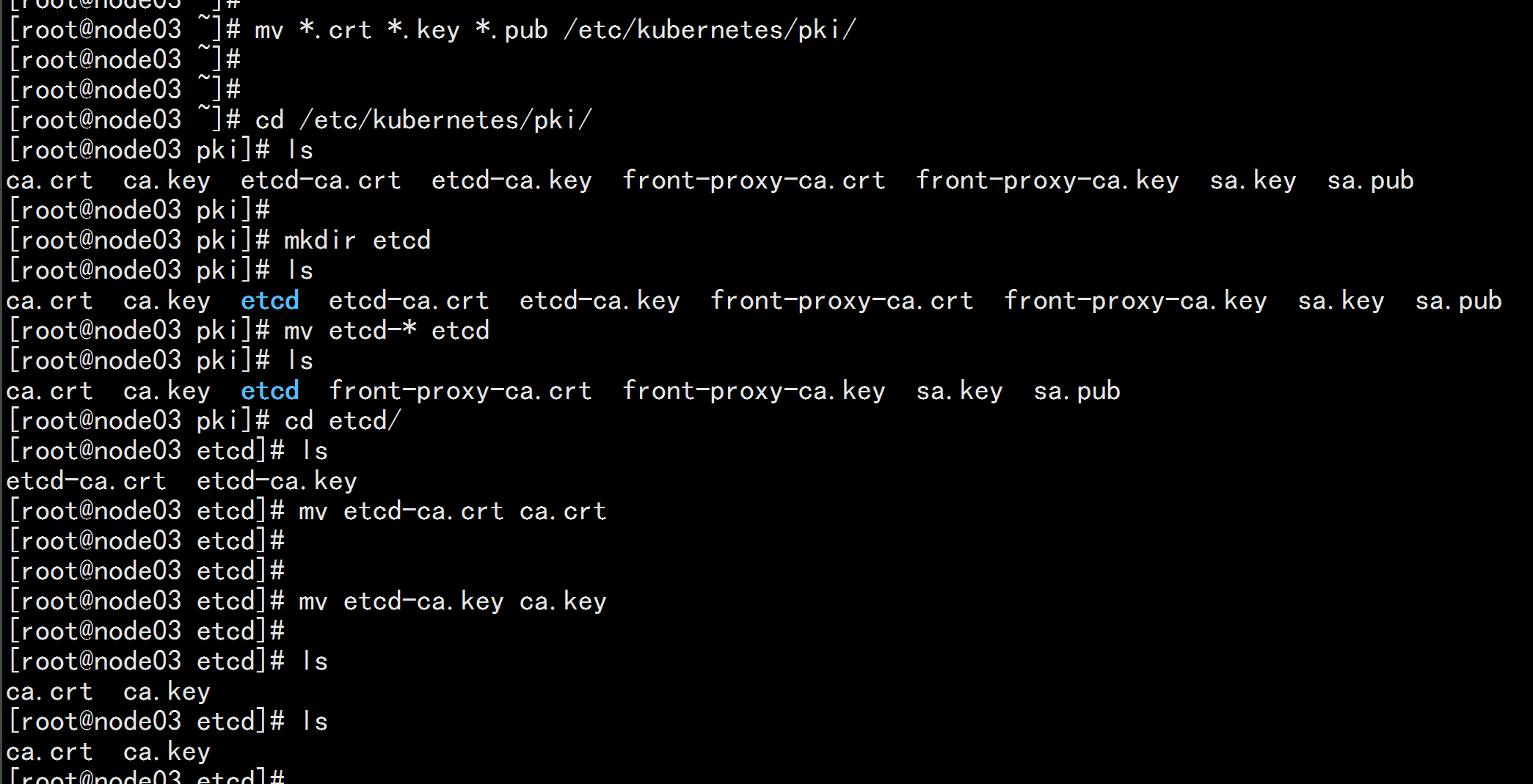

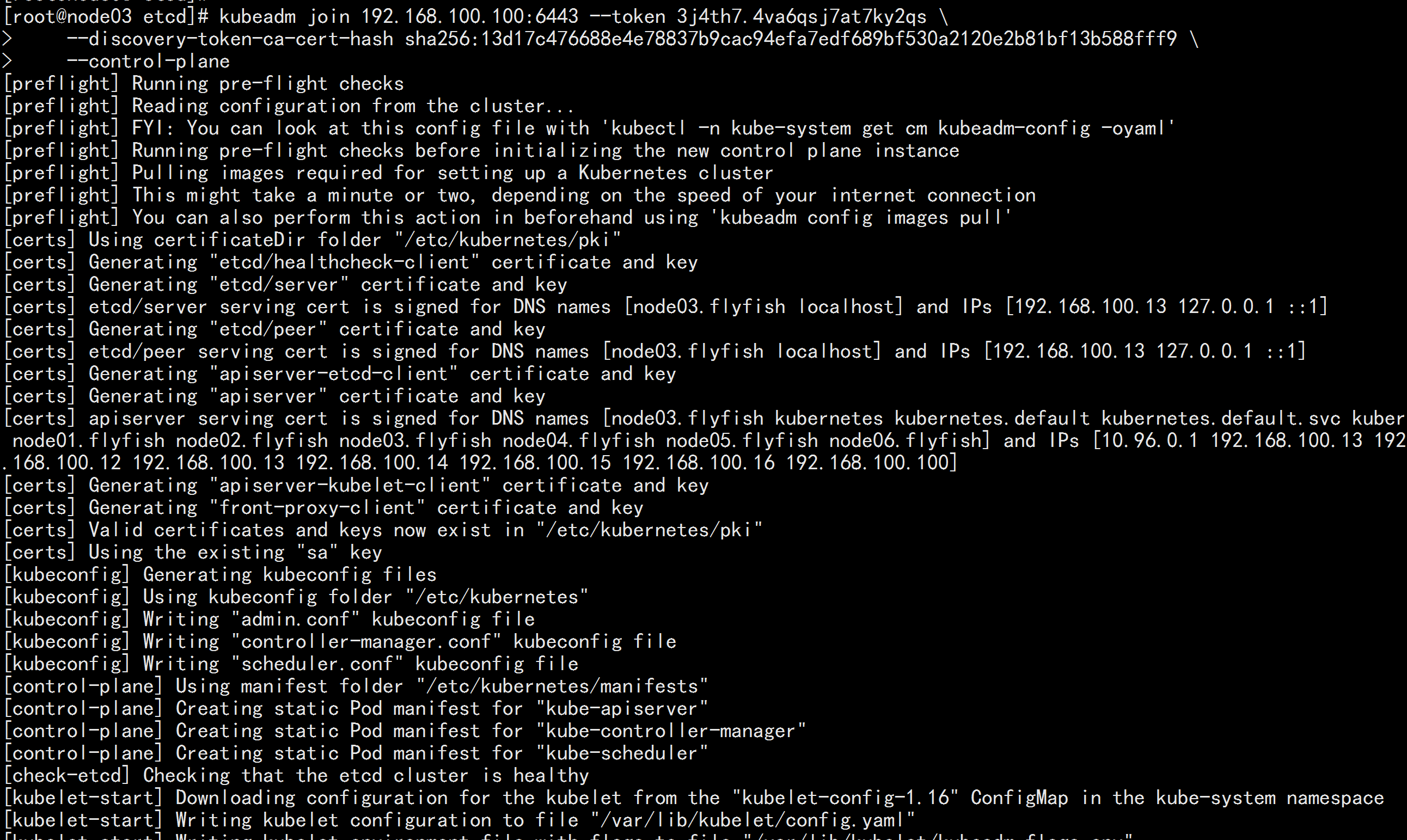

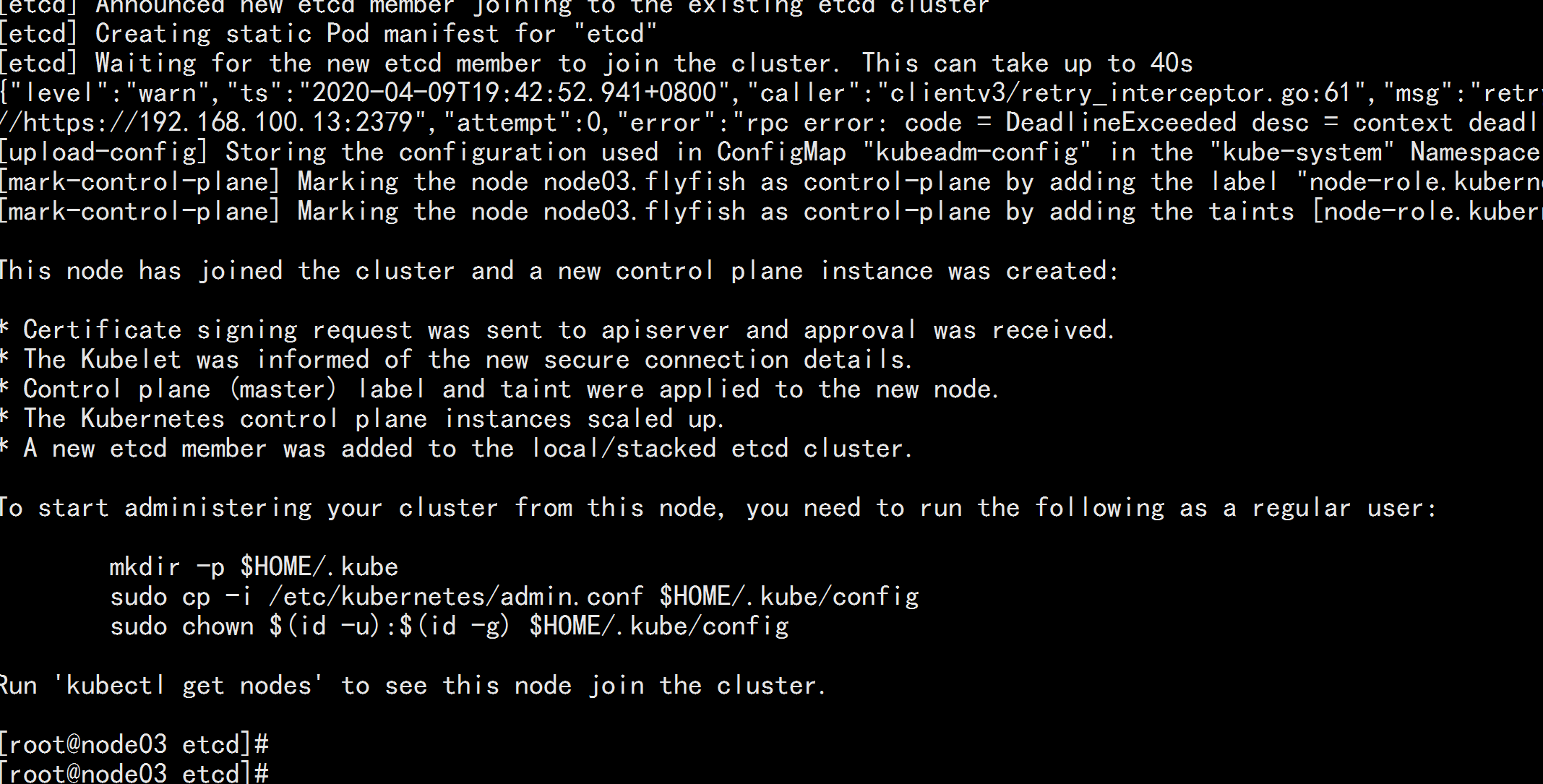

Log in to node03.flyfish and move the copied certificates into place (node02.flyfish, the other address in CONTROL_PLANE_IPS, needs the same steps):

```bash
cd /root
mkdir -p /etc/kubernetes/pki
mv *.crt *.key *.pub /etc/kubernetes/pki/
cd /etc/kubernetes/pki
mkdir etcd
mv etcd-* etcd
cd etcd
mv etcd-ca.key ca.key
mv etcd-ca.crt ca.crt
```

Join node03.flyfish to the cluster as a control-plane node:

```bash
kubeadm join 192.168.100.100:6443 --token 3j4th7.4va6qsj7at7ky2qs \
    --discovery-token-ca-cert-hash sha256:13d17c476688e4e78837b9cac94efa7edf689bf530a2120e2b81bf13b588fff9 \
    --control-plane
```
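The same moves can be wrapped in a function that takes the source and destination directories as arguments, which makes them easy to repeat on node02.flyfish or to rehearse in a scratch directory first. A sketch; `place_control_plane_certs` is a hypothetical name, the defaults are the paths used above, and it moves the eight files by name rather than with `*.crt *.key *.pub`, so unrelated files in /root are left alone.

```shell
#!/bin/bash
# Hypothetical helper: move certificates copied by cert-main-master.sh into place.
# $1: directory holding the copied files (default /root)
# $2: Kubernetes PKI directory (default /etc/kubernetes/pki)
place_control_plane_certs() {
    src="${1:-/root}"; pki="${2:-/etc/kubernetes/pki}"
    mkdir -p "$pki/etcd"
    # The etcd CA was copied as etcd-ca.*; restore its original file names.
    mv "$src/etcd-ca.crt" "$pki/etcd/ca.crt"
    mv "$src/etcd-ca.key" "$pki/etcd/ca.key"
    # Cluster CA, service-account key pair, and front-proxy CA.
    mv "$src/ca.crt" "$src/ca.key" "$src/sa.key" "$src/sa.pub" \
       "$src/front-proxy-ca.crt" "$src/front-proxy-ca.key" "$pki/"
}

# Usage (as root, on node02.flyfish and node03.flyfish):
#   place_control_plane_certs /root /etc/kubernetes/pki
```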

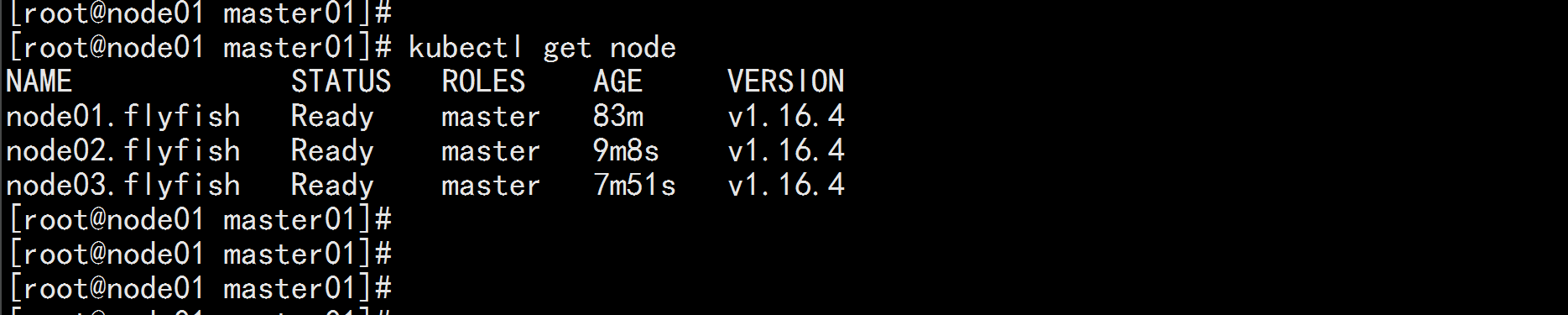

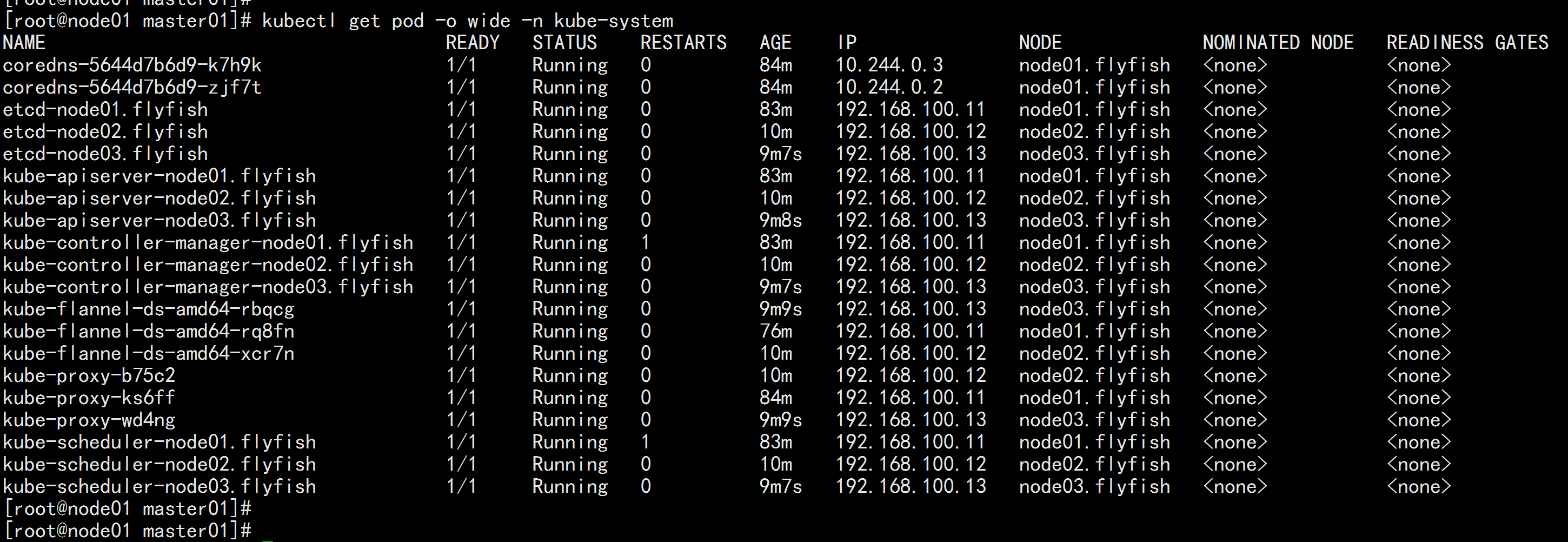

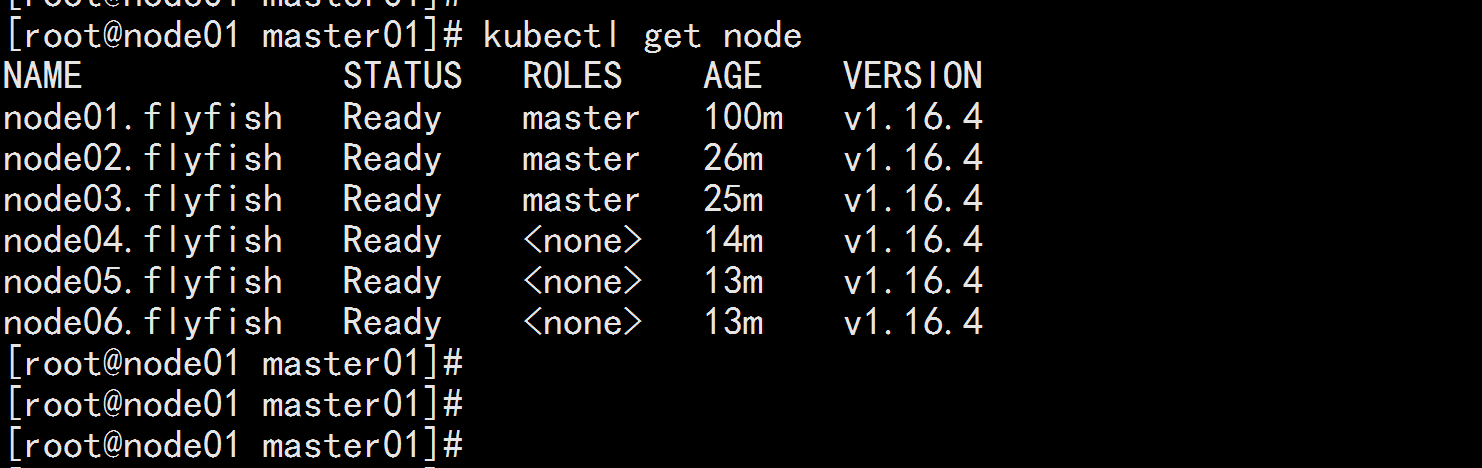

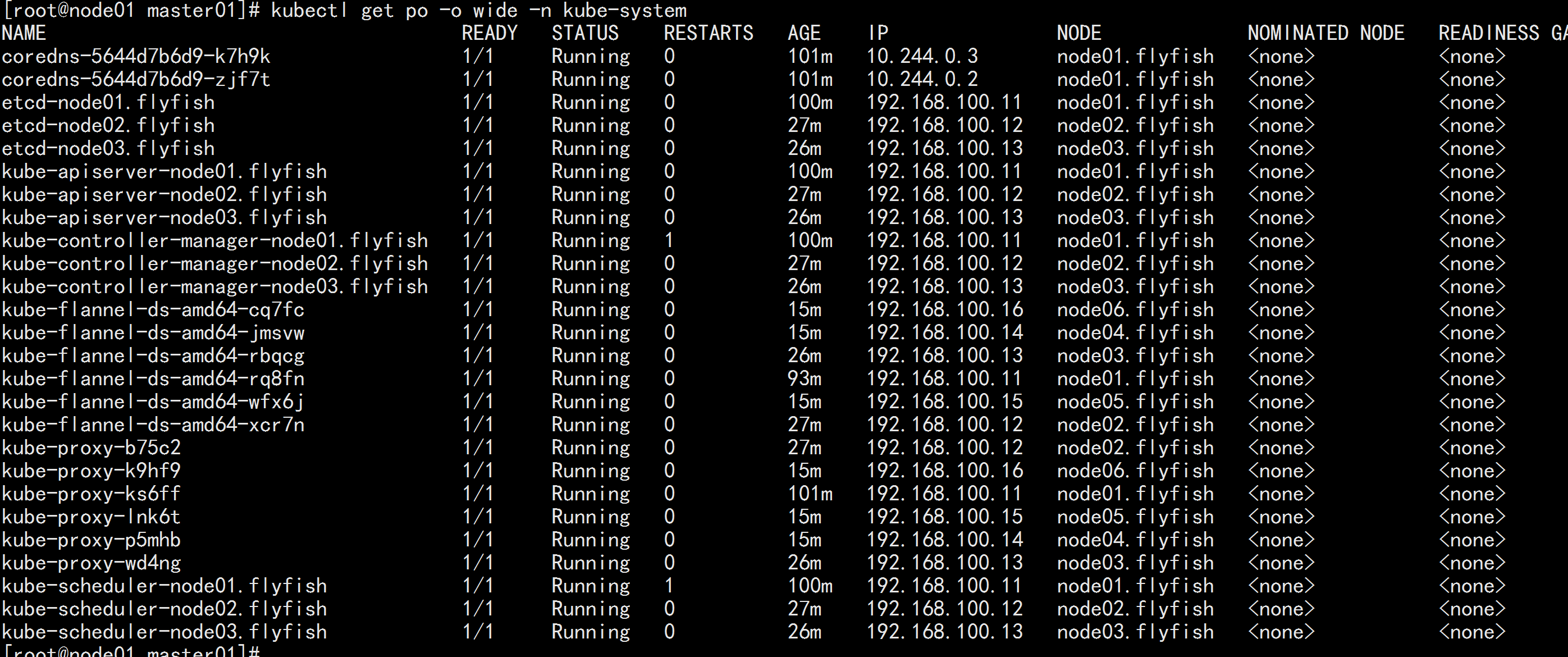

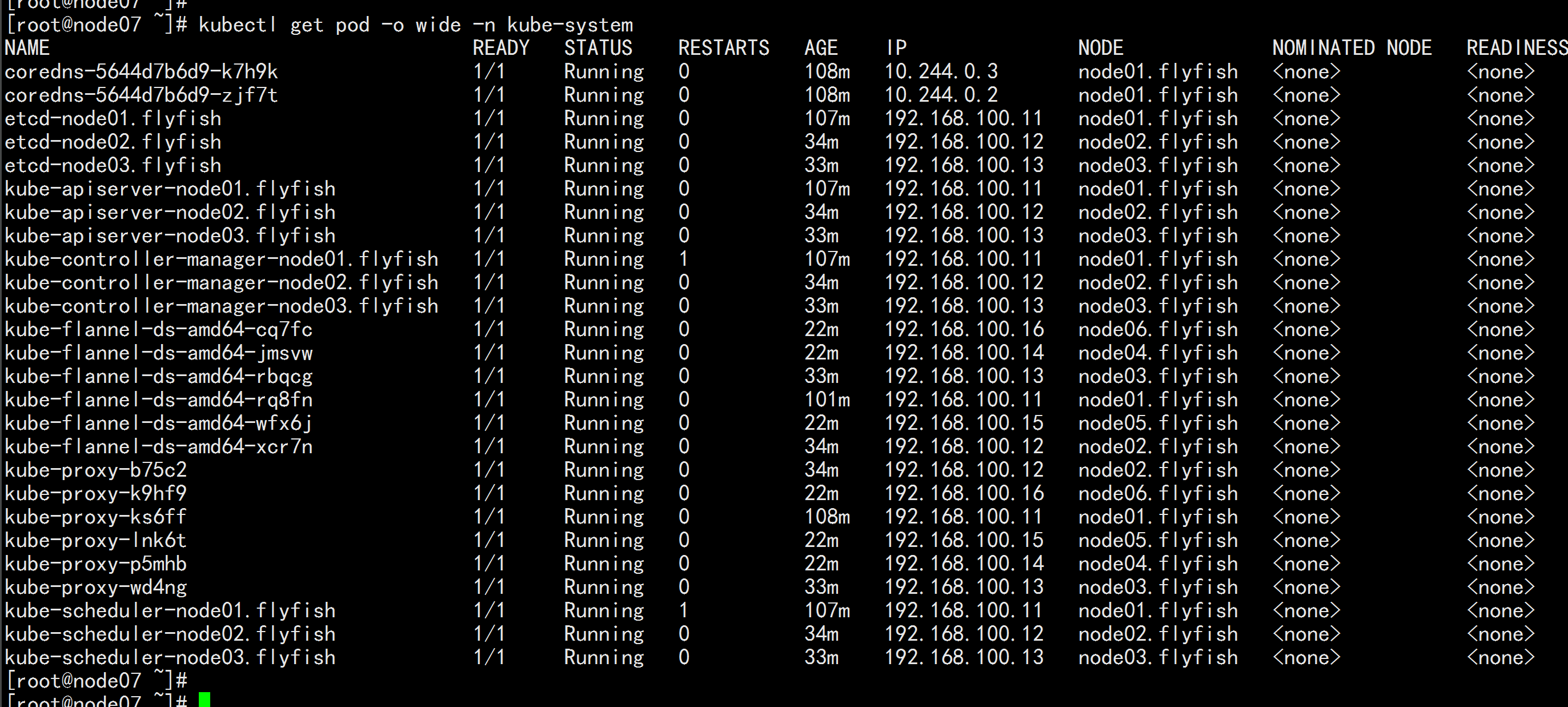

Check the nodes and system pods:

```bash
kubectl get node
kubectl get pod -o wide -n kube-system
```

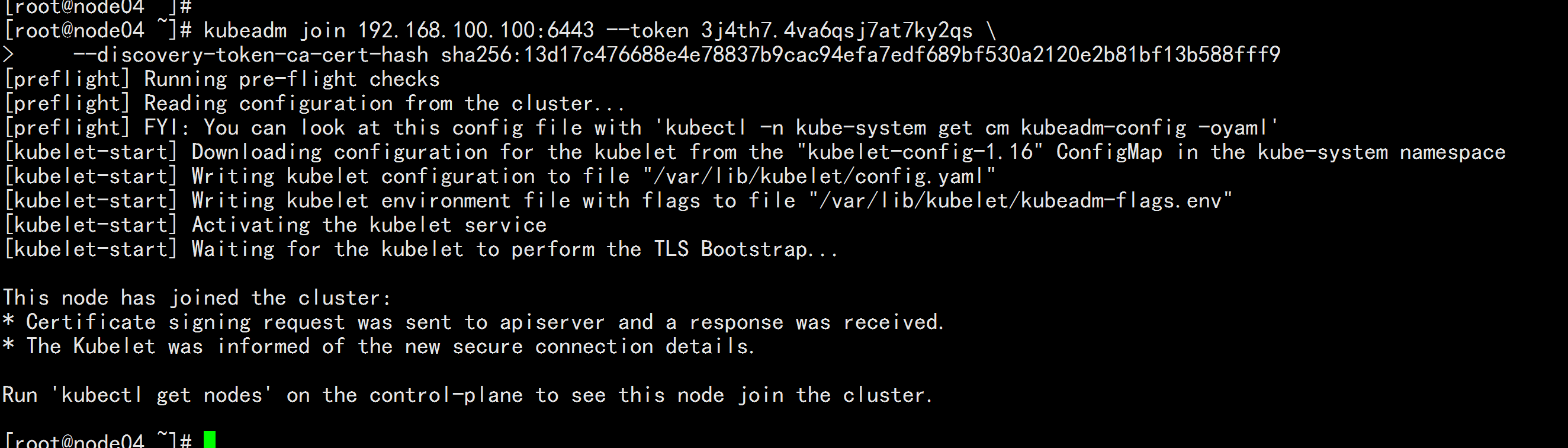

5.4 Join the worker nodes to the cluster

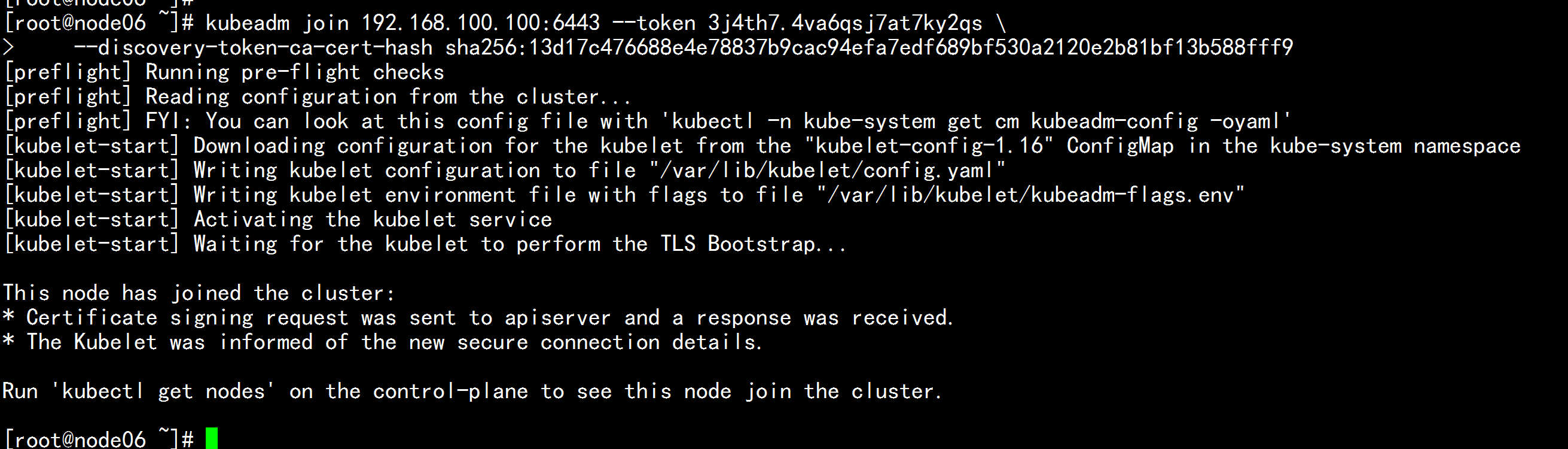

Join node04.flyfish to the cluster:

```bash
kubeadm join 192.168.100.100:6443 --token 3j4th7.4va6qsj7at7ky2qs \
    --discovery-token-ca-cert-hash sha256:13d17c476688e4e78837b9cac94efa7edf689bf530a2120e2b81bf13b588fff9
```

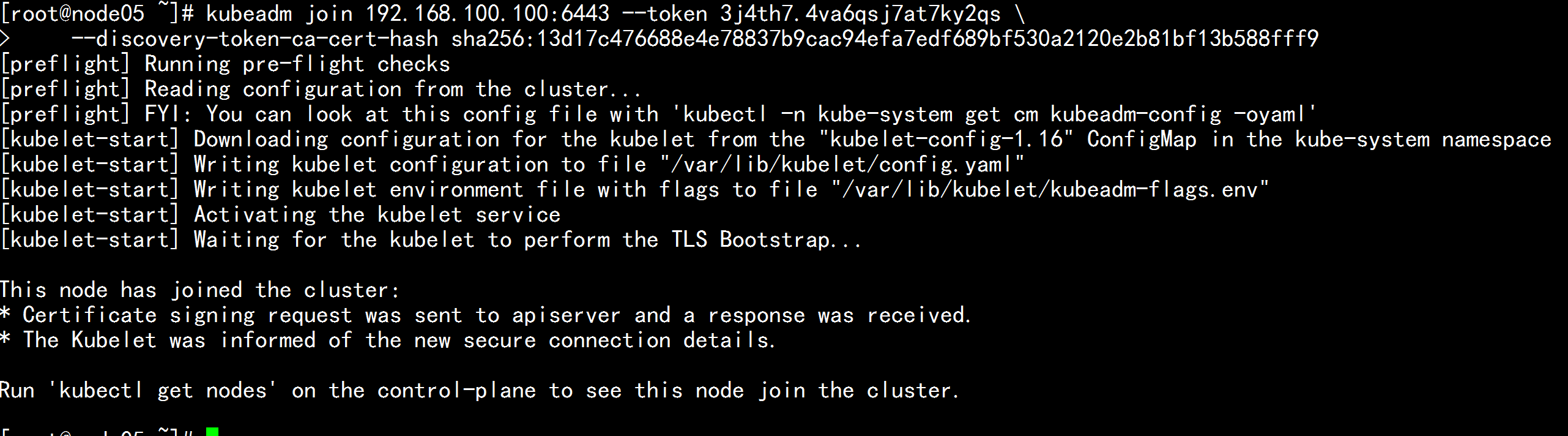

Join node05.flyfish to the cluster:

```bash
kubeadm join 192.168.100.100:6443 --token 3j4th7.4va6qsj7at7ky2qs \
    --discovery-token-ca-cert-hash sha256:13d17c476688e4e78837b9cac94efa7edf689bf530a2120e2b81bf13b588fff9
```
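Bootstrap tokens created by `kubeadm init` expire after 24 hours by default, so joining a node later with the token above will fail; on a control-plane node, `kubeadm token create --print-join-command` prints a fresh worker join command. A small hypothetical check that a pasted token has kubeadm's `[a-z0-9]{6}.[a-z0-9]{16}` shape (written with POSIX character classes so it does not depend on the locale):

```shell
#!/bin/bash
# Hypothetical helper: succeed if $1 looks like a kubeadm bootstrap token
# (6 lowercase alphanumerics, a dot, then 16 lowercase alphanumerics).
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[[:lower:][:digit:]]{6}\.[[:lower:][:digit:]]{16}$'
}

# Usage (on node01.flyfish, when the original token has expired):
#   kubeadm token create --print-join-command
```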

```bash
kubectl get node
kubectl get pods -o wide -n kube-system
```

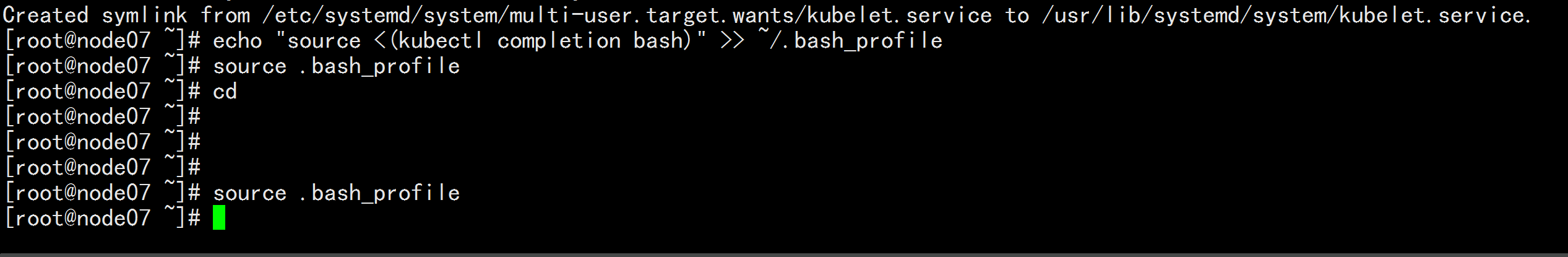

5.5 Test from node07.flyfish

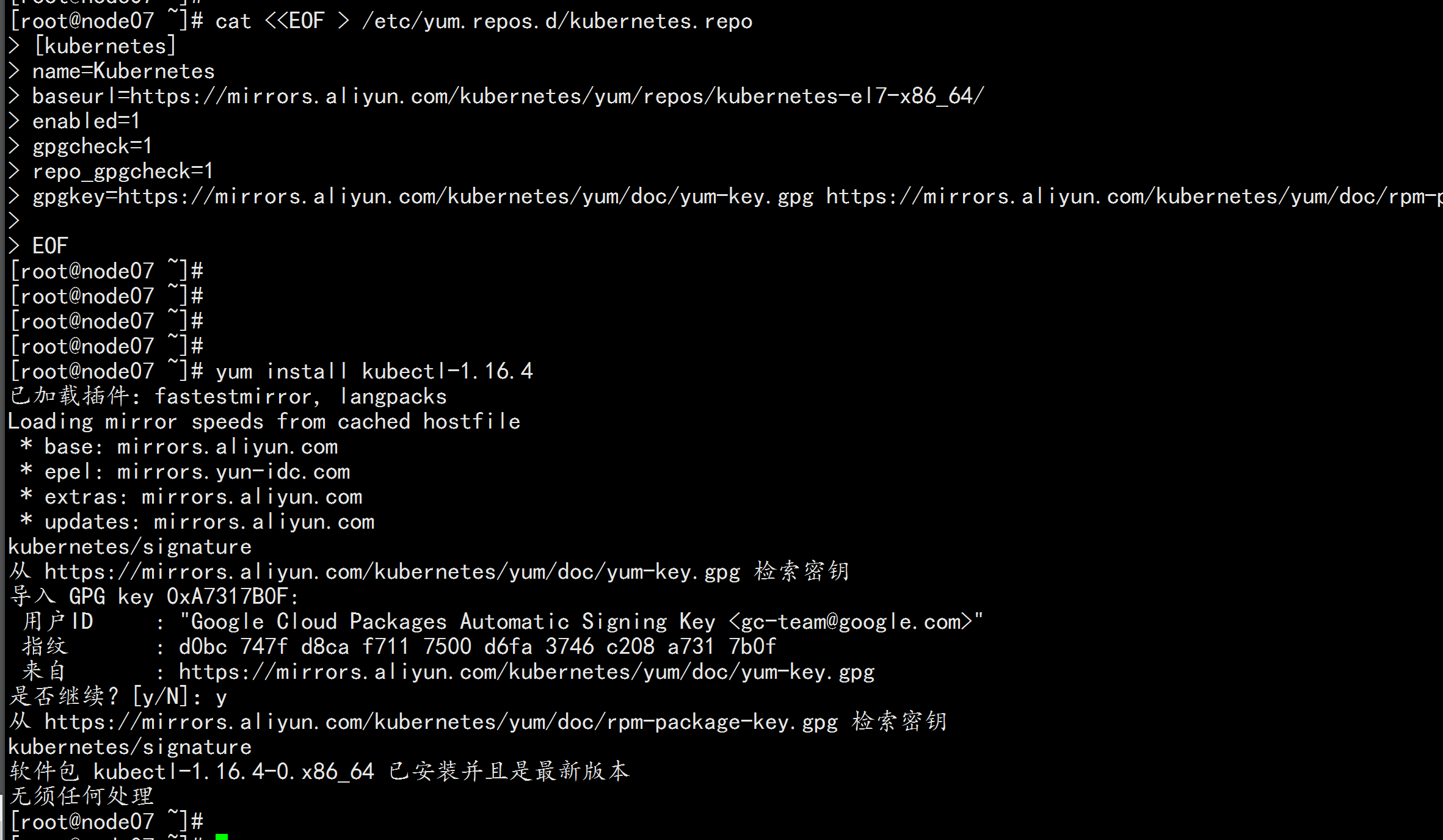

Log in to node07.flyfish, set up the Kubernetes yum repository, and install kubectl:

```bash
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubectl-1.16.4
```

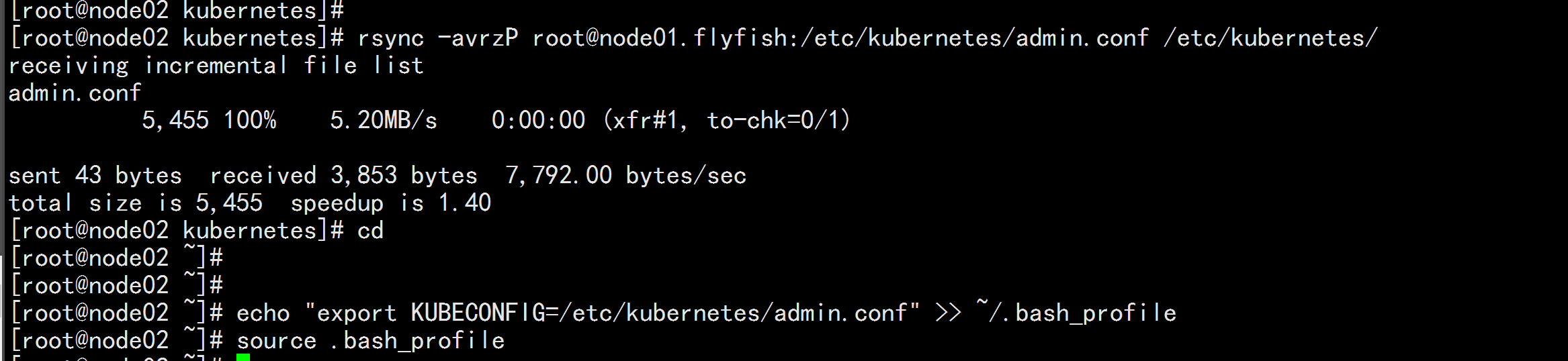

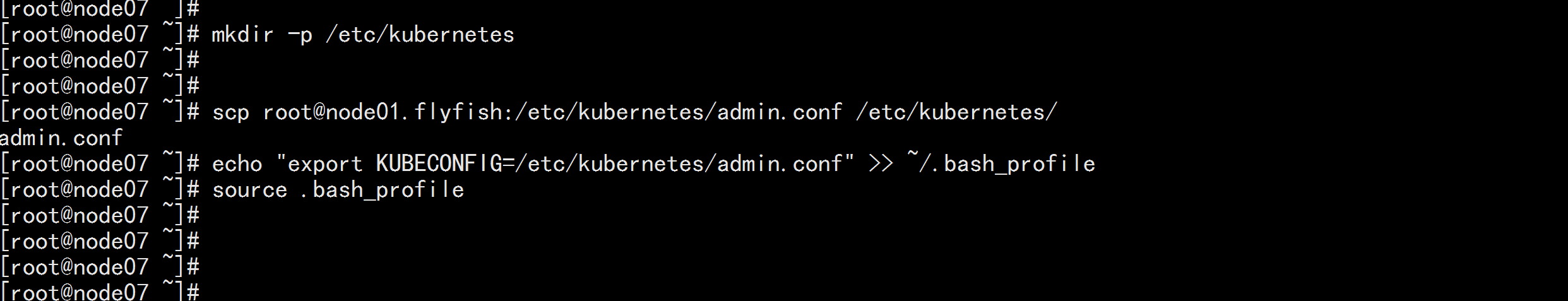

Copy admin.conf:

```bash
mkdir -p /etc/kubernetes
scp root@node01.flyfish:/etc/kubernetes/admin.conf /etc/kubernetes/
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
source ~/.bash_profile
```

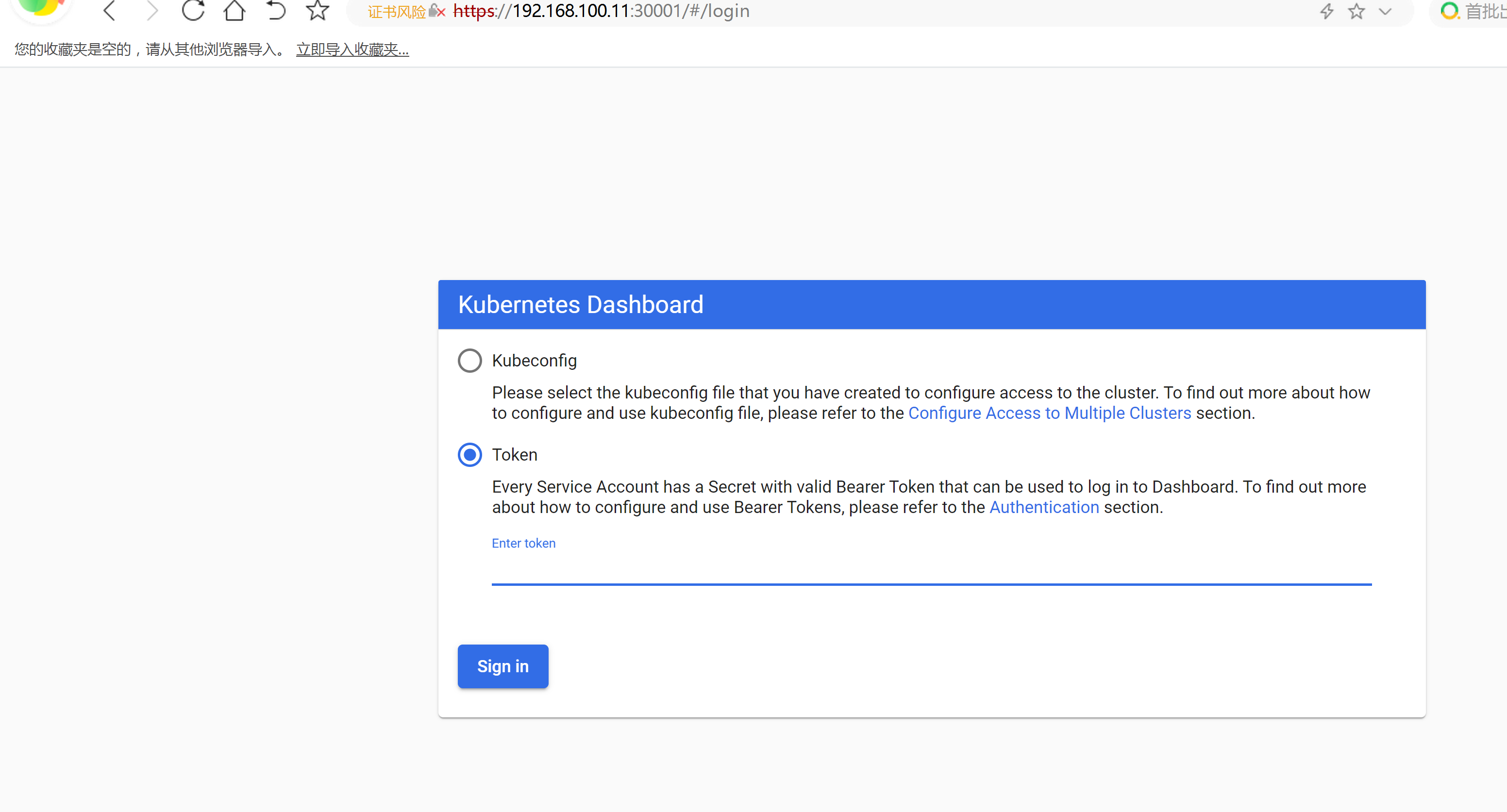

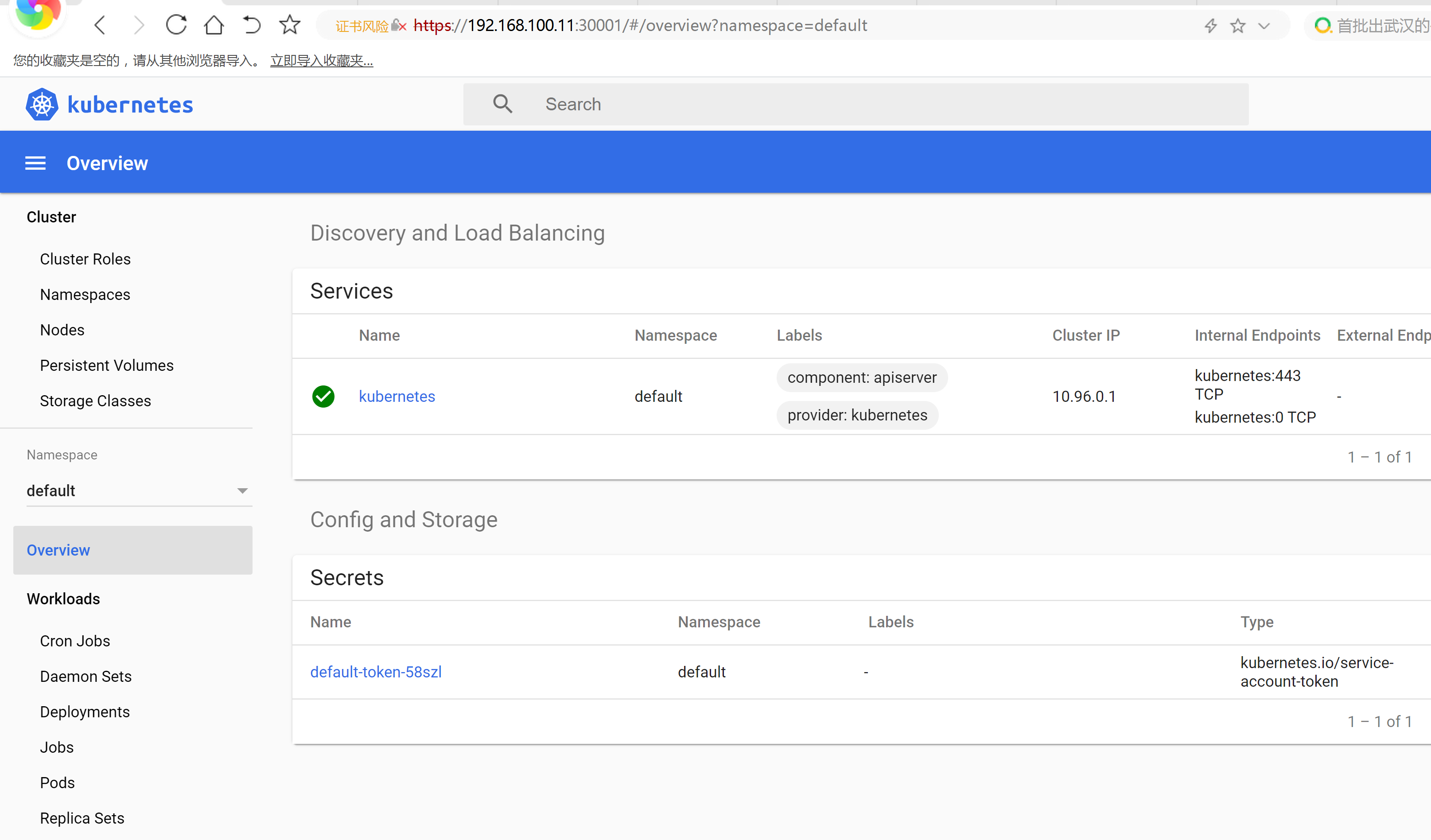

5.6 Deploy the Dashboard UI

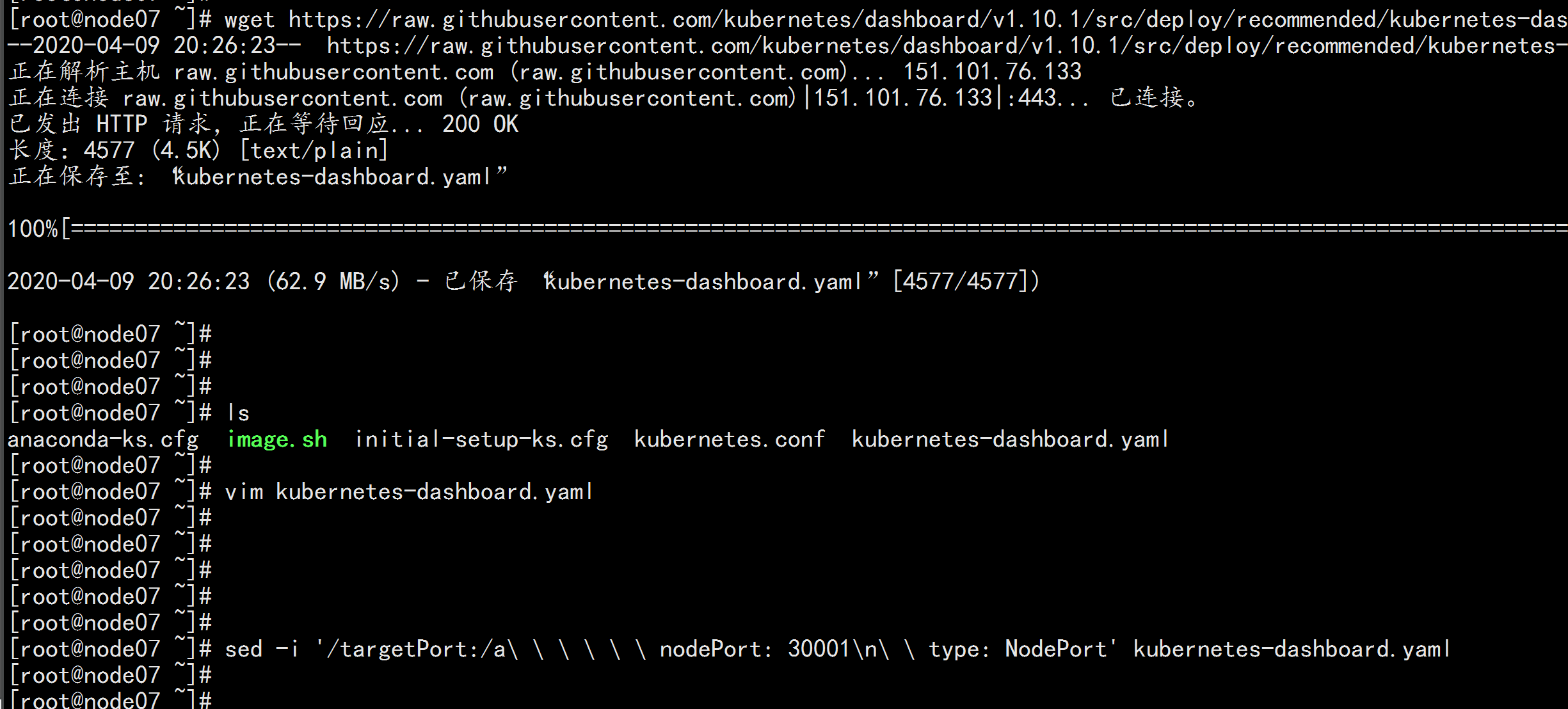

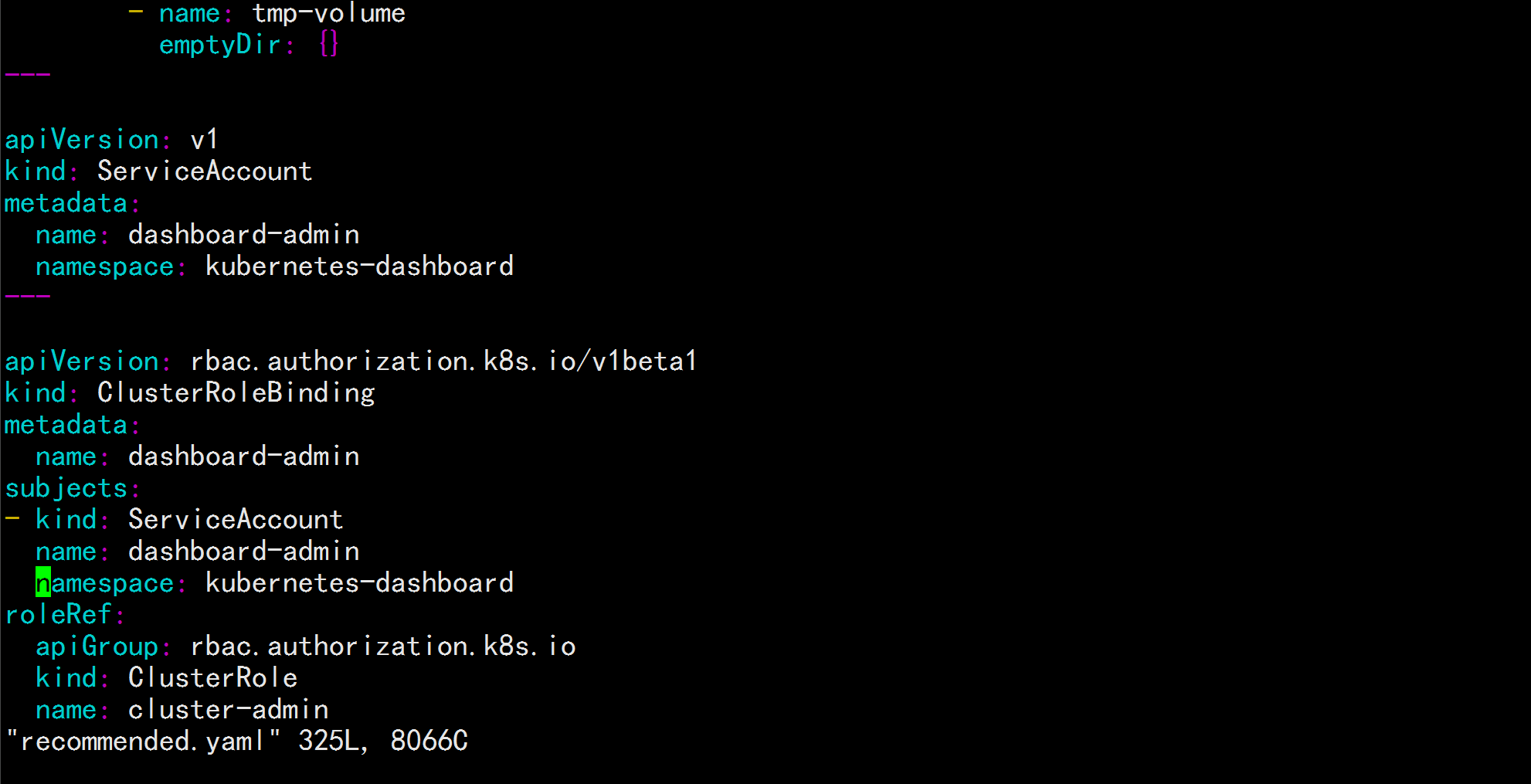

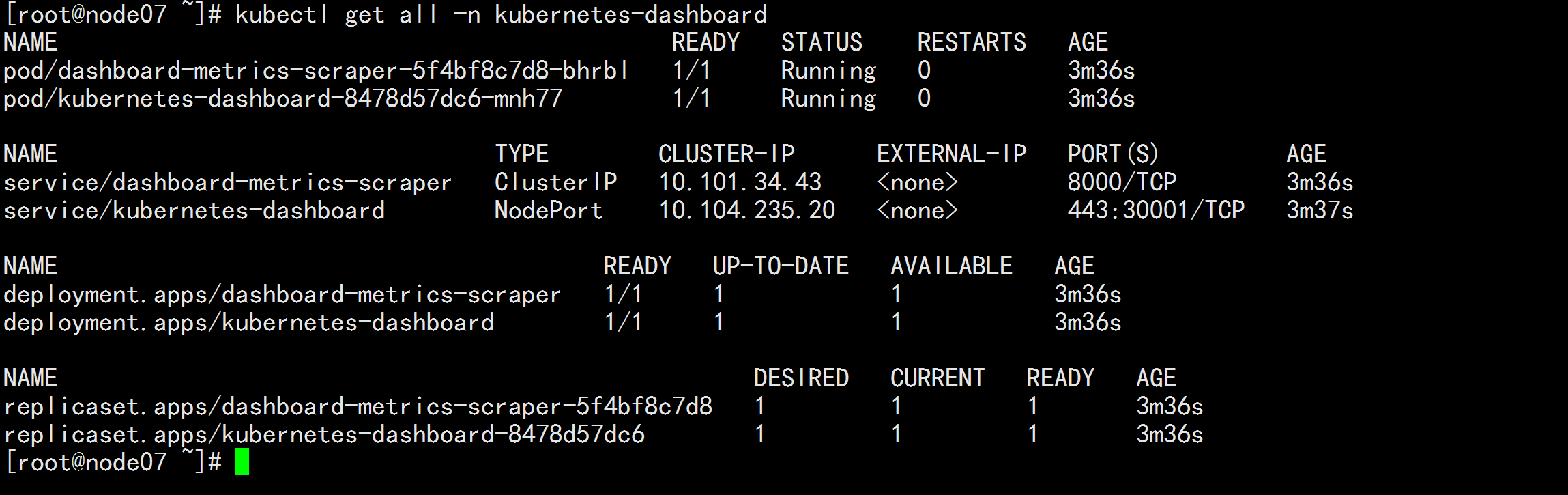

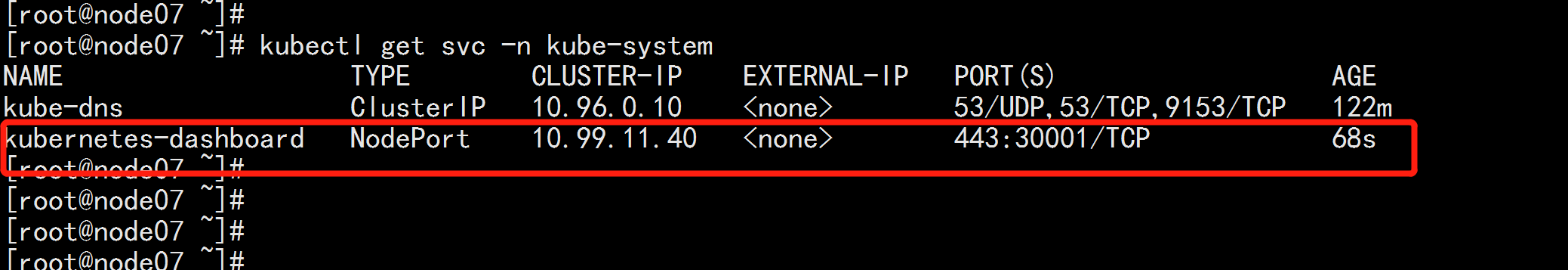

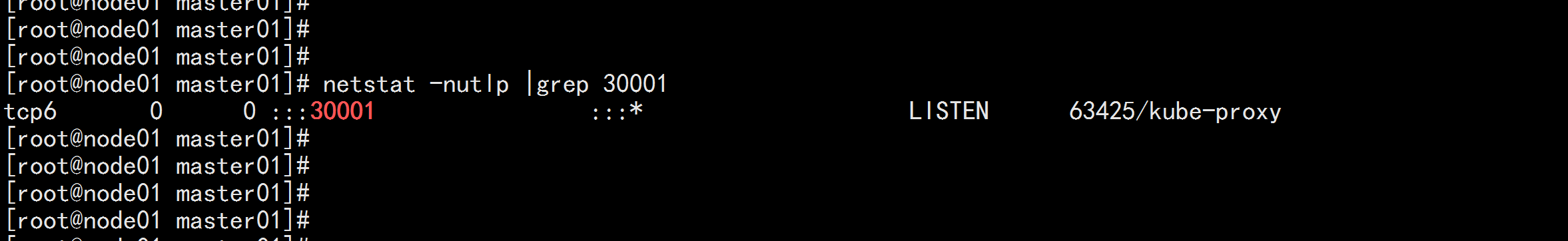

Note: perform the following steps on node07.flyfish.

1. Create the Dashboard YAML file: download the manifest, point its images at the Aliyun mirror, and expose the service as a NodePort on port 30001:

```bash
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml
sed -i 's/kubernetesui/registry.cn-hangzhou.aliyuncs.com\/loong576/g' recommended.yaml
sed -i '/targetPort: 8443/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' recommended.yaml
```

2. Add an admin account: open recommended.yaml (`vim recommended.yaml`) and append the following at the end:

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
```
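The steps after editing the file (applying it and logging in) are not written out. With the NodePort edit above, the UI should be reachable at https://<node IP>:30001, and the login token for dashboard-admin lives in an auto-generated secret whose name starts with `dashboard-admin-token-` (Kubernetes 1.16 still creates service-account token secrets automatically). A sketch; `find_admin_secret` is hypothetical and reads `kubectl get secret` output on stdin.

```shell
#!/bin/bash
# Hypothetical helper: print the name of the dashboard-admin token secret
# from `kubectl -n kubernetes-dashboard get secret` output on stdin.
find_admin_secret() {
    awk '$1 ~ /^dashboard-admin-token-/ { print $1; exit }'
}

# Usage (on node07.flyfish):
#   kubectl apply -f recommended.yaml
#   secret=$(kubectl -n kubernetes-dashboard get secret | find_admin_secret)
#   kubectl -n kubernetes-dashboard describe secret "$secret"   # copy the token field
```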

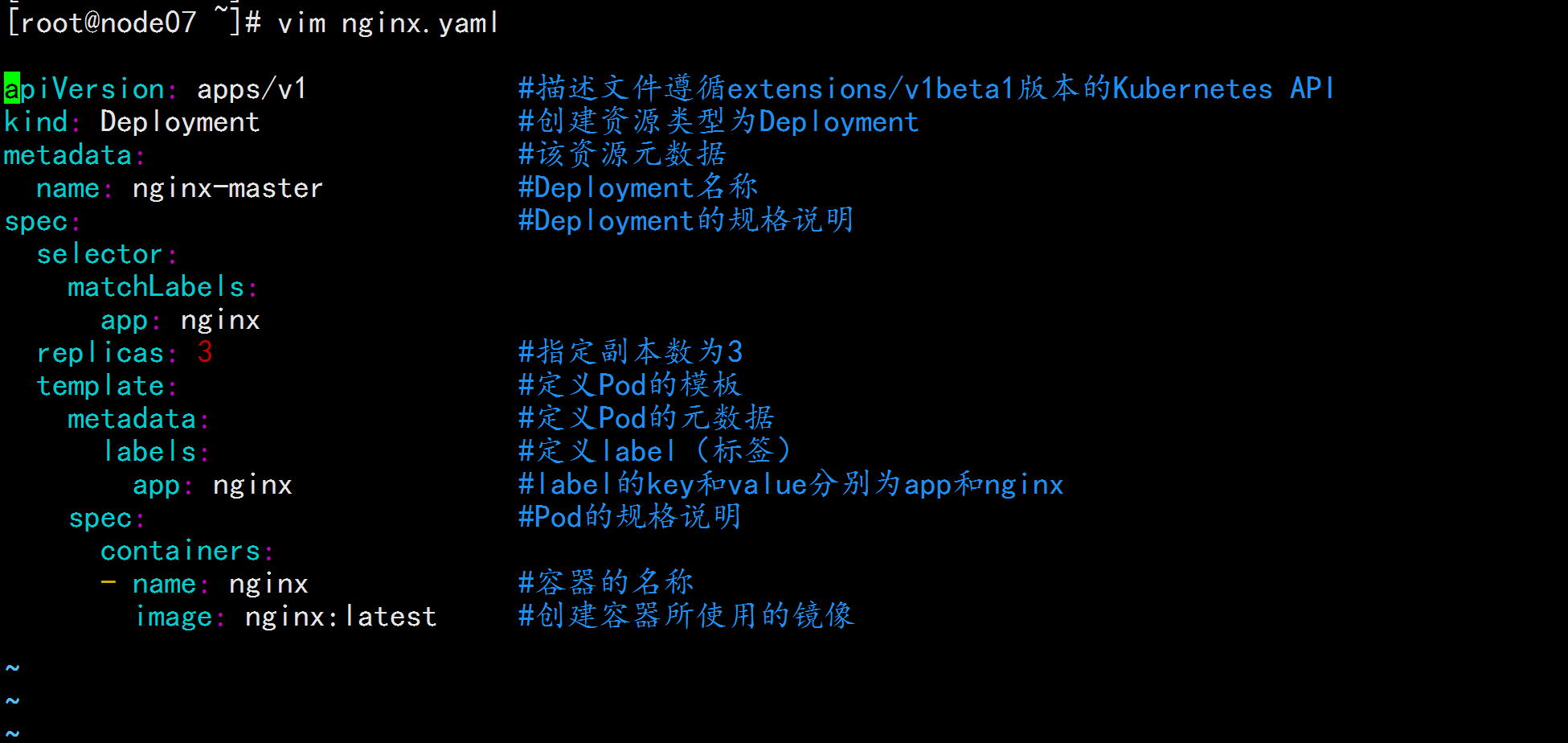

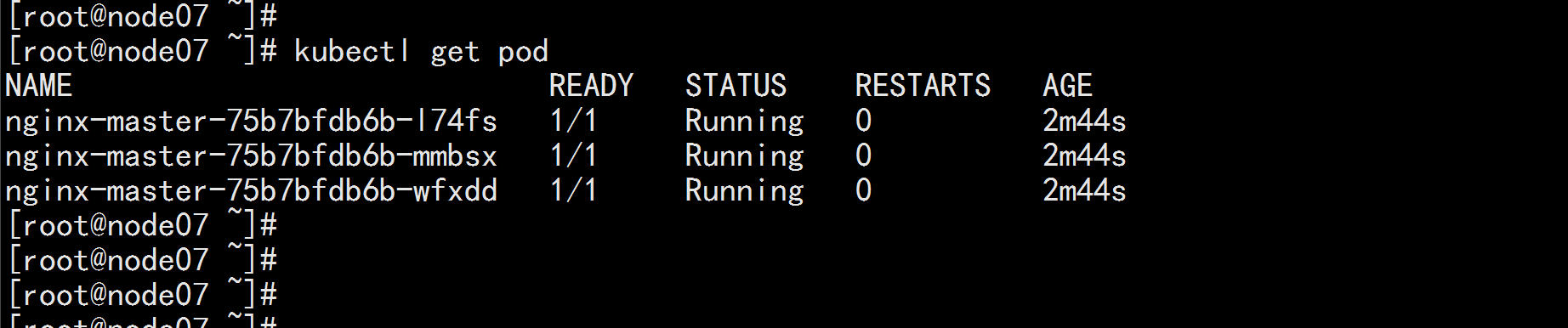

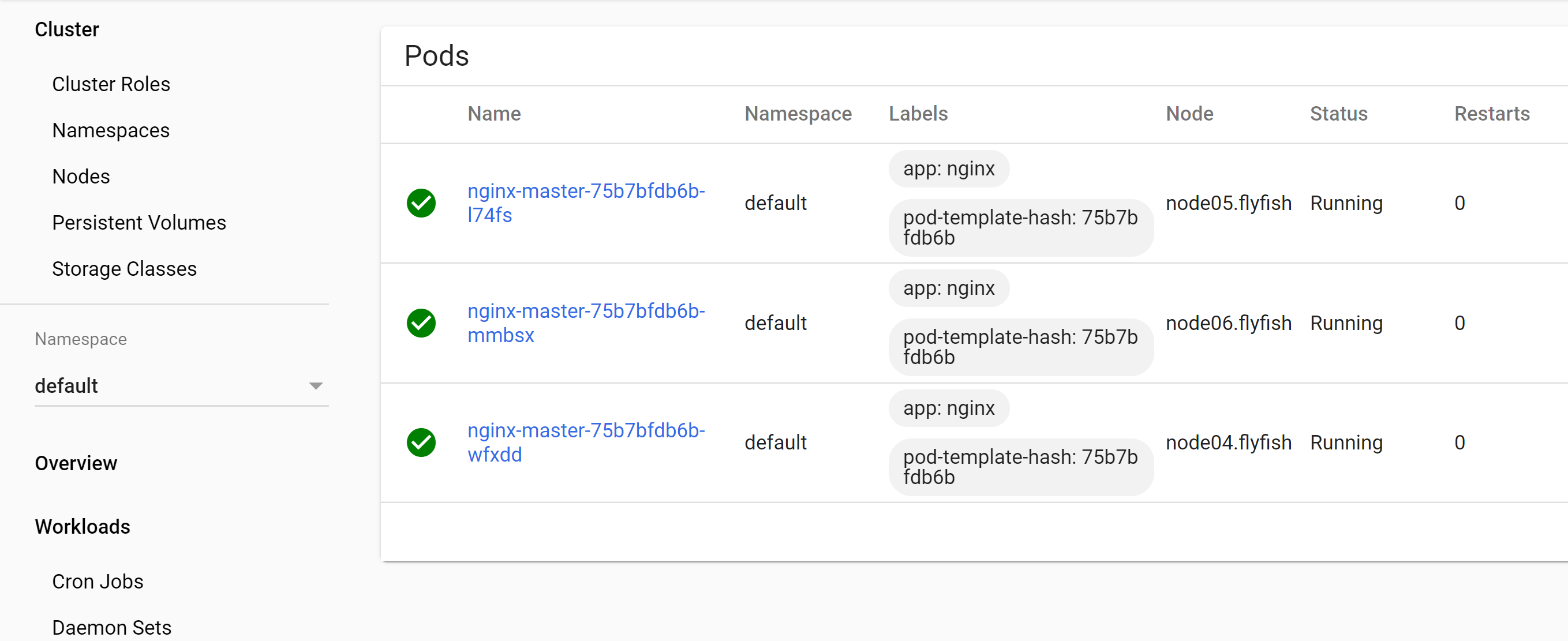

Create a test workload (an nginx Deployment with three replicas). Write nginx.yaml (`vim nginx.yaml`):

```yaml
apiVersion: apps/v1          # this manifest uses the apps/v1 Kubernetes API
kind: Deployment             # resource type to create: Deployment
metadata:                    # metadata of this resource
  name: nginx-master         # name of the Deployment
spec:                        # Deployment spec
  selector:
    matchLabels:
      app: nginx
  replicas: 3                # run 3 replicas
  template:                  # Pod template
    metadata:                # Pod metadata
      labels:                # Pod labels
        app: nginx           # label key "app", value "nginx"
    spec:                    # Pod spec
      containers:
      - name: nginx          # container name
        image: nginx:latest  # image used to create the container
```

Apply it and check the Pods:

```bash
kubectl apply -f nginx.yaml
kubectl get pod
```