
Lianjia Second-Hand Housing Listings Scraper

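This example scrapes second-hand (resale) housing listings in Beijing. For each listing the following fields are collected: title, residential community, address, layout, floor area, orientation, decoration, floor, year built, building type, number of followers, listing date, total price, unit price, listing tags, and detail-page link.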

Crawler logic: [build the paginated list URLs] → [extract the list data from each page]

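The scraper is structured as two functions:

Function 1: get_urls(city_url, n) → builds the paginated list-page URLs

city_url: start URL for a given city

n: number of pages

Function 2: get_data(ui, d_h, d_c, table) → scrapes the data and stores it in MongoDB

ui: URL of the list page to scrape

d_h: request headers (containing the User-Agent)

d_c: cookies dict

table: MongoDB collection object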

Preparation and the first function

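```python
import time

import pymongo
import requests
from bs4 import BeautifulSoup

# Inspecting pages 2-4 of the listing is usually enough to see the URL pattern:
# u3 = https://bj.lianjia.com/ershoufang/pg3/
# u4 = https://bj.lianjia.com/ershoufang/pg4/
# ......


def get_urls(city_url, n):
    '''[Paginated URL] function
    city_url: start URL for a given city (must end with '/')
    n: number of pages
    '''
    lst = []
    for i in range(1, n + 1):
        lst.append(city_url + f'pg{i}/')
    return lst


if __name__ == '__main__':
    print(get_urls('https://bj.lianjia.com/ershoufang/', 5))
```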

Output:

```
['https://bj.lianjia.com/ershoufang/pg1/',
 'https://bj.lianjia.com/ershoufang/pg2/',
 'https://bj.lianjia.com/ershoufang/pg3/',
 'https://bj.lianjia.com/ershoufang/pg4/',
 'https://bj.lianjia.com/ershoufang/pg5/']
```
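`get_urls` simply concatenates strings, so it relies on `city_url` ending with a slash. A slightly more defensive sketch (the name `get_page_urls` is hypothetical, not from the original) normalizes the trailing slash first, so `https://bj.lianjia.com/ershoufang` and `https://bj.lianjia.com/ershoufang/` give the same page list:

```python
def get_page_urls(city_url: str, n: int) -> list[str]:
    """Build the first n paginated list URLs (pg1/ ... pgN/) for a city.

    Hypothetical variant of get_urls that tolerates a missing trailing slash.
    For n <= 0 the range is empty, so an empty list is returned.
    """
    base = city_url if city_url.endswith('/') else city_url + '/'
    return [f'{base}pg{i}/' for i in range(1, n + 1)]


print(get_page_urls('https://bj.lianjia.com/ershoufang', 3))
```

A list comprehension is used here only for brevity; it produces the same URLs as the loop in `get_urls`.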

Sending a request to the site

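Once the URLs are available, check that data can actually be fetched, using the first page's URL as an example (set up the headers and cookies in advance). The code is as follows: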

```python
# Request headers: a desktop browser User-Agent
dic_headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'}
# Cookie string from a browser session (two adjacent literals are concatenated)
cookies = ("TY_SESSION_ID=a63a5c48-ee8a-411b-b774-82b887a09de9; lianjia_uuid=1e4ed8ae-d689-4d12-a788-2e93397646fd; _smt_uid=5dbcff46.49fdcd46; UM_distinctid=16e2a452be0688-0c84653ae31a8-e343166-1fa400-16e2a452be1b5b; _jzqy=1.1572667207.1572667207.1.jzqsr=baidu|jzqct=%E9%93%BE%E5%AE%B6.-; _ga=GA1.2.1275958388.1572667209; _jzqx=1.1572671272.1572671272.1.jzqsr=sh%2Elianjia%2Ecom|jzqct=/ershoufang/pg2l1/.-; select_city=310000; lianjia_ssid=a2a11c0a-c451-43aa-879e-0d202a663a5d; Hm_lvt_9152f8221cb6243a53c83b956842be8a=1582085114; CNZZDATA1253492439=1147125909-1572665418-https%253A%252F%252Fsp0.baidu.com%252F%7C1582080390; CNZZDATA1254525948=626340744-1572665293-https%253A%252F%252Fsp0.baidu.com%252F%7C1582083769; CNZZDATA1255633284=176672440-1572665274-https%253A%252F%252Fsp0.baidu.com%252F%7C1582083985; CNZZDATA1255604082=1717363940-1572665282-https%253A%252F%252Fsp0.baidu.com%252F%7C1582083899; sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%2216e2a452d07c03-0d376ce6817042-e343166-2073600-16e2a452d08ab2%22%2C%22%24device_id%22%3A%2216e2a452d07c03-0d376ce6817042-e343166-2073600-16e2a452d08ab2%22%2C%22props%22%3A%7B%22%24latest_traffic_source_type%22%3A%22%E7%9B%B4%E6%8E%A5%E6%B5%81%E9%87%8F%22%2C%22%24latest_referrer%22%3A%22%22%2C%22%24latest_referrer_host%22%3A%22%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC_%E7%9B%B4%E6%8E%A5%E6%89%93%E5%BC%80%22%2C%22%24latest_utm_source%22%3A%22baidu%22%2C%22%24latest_utm_medium%22%3A%22pinzhuan%22%2C%22%24latest_utm_campaign%22%3A%22sousuo%22%2C%22%24latest_utm_content%22%3A%22biaotimiaoshu%22%2C%22%24latest_utm_term%22%3A%22biaoti%22%7D%7D; _qzjc=1; _jzqa=1.941285633448461200.1572667207.1572671272.1582085116.3; _jzqc=1; _jzqckmp=1; _gid=GA1.2.1854019821.1582085121; Hm_lpvt_9152f8221cb6243a53c83b956842be8a=1582085295; "
           "_qzja=1.476033730.1572667206855.1572671272043.1582085116087.1582085134003.1582085295034.0.0.0.14.3; _qzjb=1.1582085116087.4.0.0.0; _qzjto=4.1.0; _jzqb=1.4.10.1582085116.1")

# Convert "name=value; name=value" into a dict. Split on the first '=' only,
# because some cookie values (e.g. _jzqy, _jzqx) contain '=' themselves.
dic_cookies = {}
for i in cookies.split('; '):
    key, value = i.split('=', 1)
    dic_cookies[key] = value

r = requests.get('https://bj.lianjia.com/ershoufang/pg1/', headers=dic_headers, cookies=dic_cookies)
print(r)
```

Output (a status code of 200 means the page can be fetched normally):

```
<Response [200]>
```

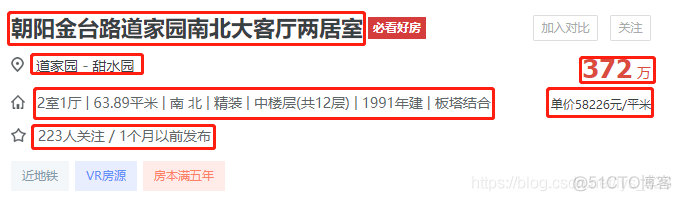
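A cookie header can contain values that themselves include `=` (this one does, for example the `_jzqy` entry), so each pair should be split on its first `=` only. A minimal stdlib sketch of that parsing step; `parse_cookie_string` is a hypothetical helper name, and the sample reuses two pairs from the cookie string above:

```python
def parse_cookie_string(raw: str) -> dict[str, str]:
    """Turn a 'name=value; name2=value2' cookie header into a dict.

    Each pair is split on its FIRST '=' only, so values containing '=' survive.
    Empty chunks (e.g. from a trailing '; ') are skipped.
    """
    cookies = {}
    for pair in raw.split(';'):
        pair = pair.strip()
        if not pair:
            continue
        name, _, value = pair.partition('=')
        cookies[name] = value
    return cookies


sample = "select_city=310000; _jzqy=1.1572667207.1572667207.1.jzqsr=baidu|jzqct=%E9%93%BE%E5%AE%B6.-; "
print(parse_cookie_string(sample))
```

The resulting dict has the same shape as `dic_cookies`, so it can be passed to `requests.get(..., cookies=...)` in the same way.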

Locate the tag for each field and extract its data

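```python
# Parse the first list page ('lxml' requires the lxml package).
# soup.find() returns only the first match, which is enough to test one listing.
soup = BeautifulSoup(r.text, 'lxml')
dic = {}
```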

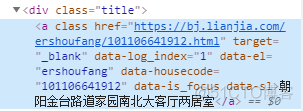

```python
dic['标题'] = soup.find('div', class_="title").a.text  # title
```

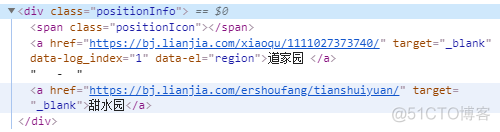

```python
# positionInfo text has the form "community - address"
info1 = soup.find('div', class_="positionInfo").text.split(" - ")
dic['小区'] = info1[0]  # residential community
dic['地址'] = info1[1]  # address / area
```

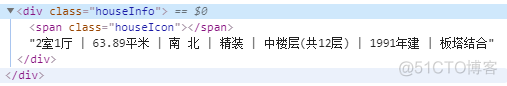

```python
# houseInfo text: seven parts separated by " | " (split once, then index)
info2 = soup.find('div', class_="houseInfo").text.split(" | ")
dic['户型'] = info2[0]          # layout (rooms)
dic['面积'] = info2[1]          # floor area
dic['朝向'] = info2[2]          # orientation
dic['装修类型'] = info2[3]      # decoration
dic['楼层'] = info2[4]          # floor
dic['建筑完工时间'] = info2[5]  # year built
dic['是否为板房'] = info2[6]    # building type
```
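The houseInfo fields are read by position from seven `" | "`-separated parts, so a listing whose text has fewer parts would raise `IndexError`. Whether such listings actually occur on the site is an assumption here, not something verified. A sketch that pairs parts with field names and fills missing trailing ones with `None`; `split_fields` is hypothetical, and the sample string is made up to match the `" | "` layout:

```python
HOUSE_FIELDS = ['户型', '面积', '朝向', '装修类型', '楼层', '建筑完工时间', '是否为板房']


def split_fields(text: str, sep: str, names: list[str]) -> dict:
    """Split text on sep and pair the pieces with names, in order.

    Whitespace around each piece is stripped. If there are fewer pieces than
    names, the missing trailing fields are set to None instead of raising.
    """
    parts = [p.strip() for p in text.split(sep)]
    return {name: (parts[i] if i < len(parts) else None)
            for i, name in enumerate(names)}


# Made-up sample in the same " | "-separated layout
sample = '2室1厅 | 59.5平米 | 南 北 | 简装 | 中楼层(共6层) | 1990年建 | 板楼'
print(split_fields(sample, ' | ', HOUSE_FIELDS))
```

This only prevents crashes: if a part in the middle is missing, the remaining parts shift position and get the wrong labels, so content-based checks would be needed to label them reliably. The same helper also fits positionInfo (`' - '`) and followInfo (`' / '`).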

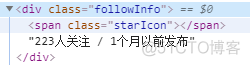

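```python
# followInfo text: "followers / listing date", separated by " / "
info3 = soup.find('div', class_="followInfo").text.split(" / ")
dic['关注量'] = info3[0]    # number of followers
dic['发布时间'] = info3[1]  # listing date
```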

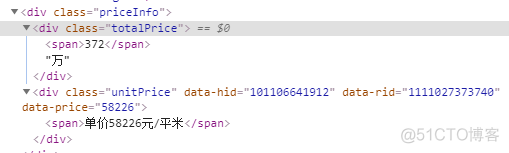

```python
dic['总价'] = soup.find('div', class_="totalPrice").text                     # total price
dic['单价'] = soup.find('div', class_="unitPrice").text.replace('单价', '')  # unit price, "单价" prefix removed
dic['链接'] = soup.find('div', class_="title").a['href']                     # detail-page link
```
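The price and follower fields are stored as display strings. For sorting or aggregating in MongoDB it can help to keep numeric copies as well. A small regex sketch; `first_number` is hypothetical, and the sample strings are made-up examples of the "万" (10,000 yuan) and "元/平米" display formats rather than captured output:

```python
import re

# Digits, optional thousands separators, optional decimal part
_NUMBER = re.compile(r'\d[\d,]*(?:\.\d+)?')


def first_number(text: str):
    """Return the first number in text as a float, or None if there is none.

    Commas used as thousands separators are removed before conversion.
    """
    match = _NUMBER.search(text)
    if match is None:
        return None
    return float(match.group().replace(',', ''))


print(first_number('560万'))             # 560.0  (total price, in units of 10,000 yuan)
print(first_number('单价65,000元/平米'))  # 65000.0 (unit price, yuan per square metre)
```

The unit is dropped, so it is worth storing the numeric value under a separate key and keeping the original string alongside it.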

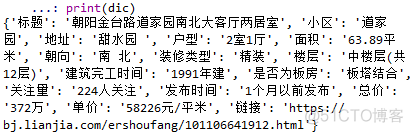

```python
print(dic)
```

Output:

Wrapping the second function

Once this trial run works without errors, the logic can be wrapped into a function.

```python
# Connect to MongoDB (assumes a local server on the default port 27017)
myclient = pymongo.MongoClient('mongodb://localhost:27017/')
db = myclient['链家二手房_1']
datatable = db['data_1']
# datatable.delete_many({})  # uncomment to clear the collection if it already holds data


def get_data(ui, d_h, d_c, table):
    '''[Scrape data and store in MongoDB] function
    ui: URL of the list page to scrape
    d_h: request headers (User-Agent)
    d_c: cookies dict
    table: MongoDB collection object
    Returns the number of listings inserted.
    '''
    ri = requests.get(ui, headers=d_h, cookies=d_c)
    soupi = BeautifulSoup(ri.text, 'lxml')
    # Each <li> under ul.sellListContent holds one listing
    lis = soupi.find('ul', class_="sellListContent").find_all("li")
    n = 0
    for li in lis:
        dic = {}
        dic['标题'] = li.find('div', class_="title").text
        info1 = li.find('div', class_="positionInfo").text.split(" - ")
        dic['小区'] = info1[0]
        dic['地址'] = info1[1]
        info2 = li.find('div', class_="houseInfo").text.split(" | ")
        dic['户型'] = info2[0]
        dic['面积'] = info2[1]
        dic['朝向'] = info2[2]
        dic['装修类型'] = info2[3]
        dic['楼层'] = info2[4]
        dic['建筑完工时间'] = info2[5]
        dic['是否为板房'] = info2[6]
        info3 = li.find('div', class_="followInfo").text.split(" / ")
        dic['关注量'] = info3[0]
        dic['发布时间'] = info3[1]
        dic['价钱'] = li.find('div', class_="totalPrice").text
        dic['每平米价钱'] = li.find('div', class_="unitPrice").text
        dic['房间优势'] = li.find('div', class_="tag").text
        dic['链接'] = li.find('a')['href']
        table.insert_one(dic)
        n += 1
    return n  # number of listings stored; the caller adds it to a running total
```
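Because `get_data` only ever calls `table.insert_one(...)` on the collection, the MongoDB collection can be swapped for any object with that method while testing the parsing logic, so no database needs to be running. A minimal sketch; `InMemoryTable` is a hypothetical class, not part of pymongo:

```python
class InMemoryTable:
    """Stand-in for a pymongo collection that only supports insert_one.

    Documents are kept in a plain list so they can be inspected afterwards.
    Unlike pymongo, nothing is returned and no '_id' is added.
    """

    def __init__(self):
        self.docs = []

    def insert_one(self, document):
        # Store a shallow copy so later changes to the caller's dict do not leak in
        self.docs.append(dict(document))


table = InMemoryTable()
table.insert_one({'标题': 'example', '价钱': '560万'})
print(len(table.docs))  # 1
```

Passing an `InMemoryTable()` as the `table` argument of `get_data` leaves the request and parsing unchanged and simply collects the documents in `table.docs`.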

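```python
urllst = get_urls('https://bj.lianjia.com/ershoufang/', 5)  # example: first 5 pages, as in the demo above
errorlst = []  # URLs whose scraping failed
count = 0
for u in urllst:
    print("Pausing before the next request......")
    time.sleep(5)  # wait 5 seconds between pages
    try:
        count += get_data(u, dic_headers, dic_cookies, datatable)  # scrape the current page u
        print(f'Collected {count} records in total')
    except Exception:
        errorlst.append(u)
        print('Failed to scrape data, URL:', u)
```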
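The loop above records failed pages in `errorlst` but does not retry them. One option is to send failed URLs through a bounded number of extra rounds, with a slightly randomized pause between requests. A sketch under the assumption that `fetch` is any callable that returns the number of records stored for a URL and raises on failure; `crawl_with_retries` and its parameters are hypothetical, and the demo uses a fake fetch so it runs offline:

```python
import random
import time


def crawl_with_retries(urls, fetch, max_rounds=2, base_delay=5.0, jitter=2.0):
    """Call fetch(url) for each URL, retrying failures for up to max_rounds extra rounds.

    Before each request, sleeps base_delay seconds plus a random 0..jitter seconds.
    Returns (total_records, urls_that_still_failed).
    """
    total = 0
    pending = list(urls)
    for _ in range(max_rounds + 1):  # first pass + max_rounds retry passes
        failed = []
        for url in pending:
            time.sleep(base_delay + random.uniform(0, jitter))
            try:
                total += fetch(url)
            except Exception as exc:
                print(f'failed: {url} ({exc})')
                failed.append(url)
        pending = failed
        if not pending:
            break
    return total, pending


# Offline demo: a fake fetch that fails the first time it sees a pg2 URL
_seen = set()


def fake_fetch(url):
    if url.endswith('pg2/') and url not in _seen:
        _seen.add(url)
        raise RuntimeError('simulated timeout')
    return 3  # arbitrary record count for the demo


result = crawl_with_retries(
    ['https://bj.lianjia.com/ershoufang/pg1/', 'https://bj.lianjia.com/ershoufang/pg2/'],
    fake_fetch, base_delay=0, jitter=0)
print(result)  # (6, [])
```

With the scraper above, `fetch` could be `lambda u: get_data(u, dic_headers, dic_cookies, datatable)`, provided `get_data` returns the number of records it stored.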

The output is as follows: