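Ceph is a unified, distributed file system designed for excellent performance, reliability, and scalability. Ceph is unified in that it provides file system, block, and object storage, and distributed in that it can scale out dynamically. In some companies in China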

```shell
# Install ceph on node1, node2, and node3, then form them into a cluster
yum -y install ceph
```

```text
[root@node1 ~]# cat /etc/ceph/ceph.conf
[global]
fsid = a7f64266-0894-4f1e-a635-d0aeaca0e993
mon initial members = node1
mon host = 192.168.31.101
public_network = 192.168.31.0/24
cluster_network = 172.16.100.0/24
```

```shell
# Create the monitor keyring and generate the mon. key
ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'
# Create the client.admin keyring and user
sudo ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring \
  --gen-key -n client.admin \
  --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *' --cap mgr 'allow *'
# Generate the bootstrap-osd keyring: create the client.bootstrap-osd user and add it to the keyring
sudo ceph-authtool --create-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring \
  --gen-key -n client.bootstrap-osd \
  --cap mon 'profile bootstrap-osd' --cap mgr 'allow r'
# Import the admin and bootstrap-osd keys into the monitor keyring
sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring
sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring
sudo chown ceph:ceph /tmp/ceph.mon.keyring
# Build the initial monitor map (the fsid must match the one in ceph.conf)
monmaptool --create --add node1 192.168.31.101 --fsid a7f64266-0894-4f1e-a635-d0aeaca0e993 /tmp/monmap
sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node1
# Populate the monitor daemon with the monitor map and keyring
sudo -u ceph ceph-mon --mkfs -i node1 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring
# Start the monitor
sudo systemctl start ceph-mon@node1
sudo systemctl enable ceph-mon@node1
ceph -s
# Enable the msgr2 protocol and disallow insecure global_id reclaim
ceph mon enable-msgr2
ceph config set mon auth_allow_insecure_global_id_reclaim false
```

```shell
# Prepare the manager (mgr) daemon on node1
pip3 install pecan werkzeug
mkdir /var/lib/ceph/mgr/ceph-node1
ceph auth get-or-create mgr.node1 mon 'allow profile mgr' osd 'allow *' mds 'allow *' > \
  /var/lib/ceph/mgr/ceph-node1/keyring
```
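The fsid appears twice in the bootstrap steps above: once in `ceph.conf` and once in the `monmaptool --fsid` call. The two values must match. The following is a minimal sketch that writes the initial `[global]` section from variables. It assumes you generate a fresh fsid with `uuidgen` rather than reusing the one printed in this article. `write_min_ceph_conf` is a hypothetical helper name, and the two networks are the ones used here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the minimal [global] section for the first monitor.
# Arguments: output file, fsid, monitor name, monitor IP.
# The networks below are this article's; adjust them for your environment.
write_min_ceph_conf() {
  local out=$1 fsid=$2 mon_name=$3 mon_ip=$4
  cat > "$out" <<EOF
[global]
fsid = ${fsid}
mon initial members = ${mon_name}
mon host = ${mon_ip}
public_network = 192.168.31.0/24
cluster_network = 172.16.100.0/24
EOF
}

# Usage on node1 (generate the fsid once and pass the same value to monmaptool):
# FSID=$(uuidgen)
# write_min_ceph_conf /etc/ceph/ceph.conf "$FSID" node1 192.168.31.101
# monmaptool --create --add node1 192.168.31.101 --fsid "$FSID" /tmp/monmap
```

Holding the fsid in one shell variable means it is typed only once, so the config file and the monitor map cannot silently disagree.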

```shell
# Start the manager
systemctl start ceph-mgr@node1
systemctl enable ceph-mgr@node1
```

```shell
# On node1, add three 100 GB disks to Ceph
ceph-volume lvm create --data /dev/sdb
ceph-volume lvm create --data /dev/sdc
ceph-volume lvm create --data /dev/sdd
```

```shell
# Sync the configuration to the other nodes
scp /etc/ceph/* node2:/etc/ceph/
scp /etc/ceph/* node3:/etc/ceph/
scp /var/lib/ceph/bootstrap-osd/* node2:/var/lib/ceph/bootstrap-osd/
scp /var/lib/ceph/bootstrap-osd/* node3:/var/lib/ceph/bootstrap-osd/
```
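The three `ceph-volume lvm create` calls differ only in the device name, and the same three calls are repeated on node2 and node3. The following is a minimal sketch that drives them from node1 over SSH. It assumes passwordless root SSH between the nodes (the article already uses `scp`/`ssh` between them) and the same disk names on every node. `create_osds` and the `RUN` dry-run switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create one OSD per listed disk on a node via SSH.
# Arguments: node name, then disk names without /dev/ (e.g. sdb sdc sdd).
# Set RUN=echo to print the commands instead of executing them.
RUN=${RUN:-}

create_osds() {
  local node=$1 disk
  shift
  for disk in "$@"; do
    # Stop at the first failing disk instead of pressing on
    $RUN ssh "$node" ceph-volume lvm create --data "/dev/$disk" || return 1
  done
}

# Usage from node1, after the bootstrap-osd keyring has been copied:
# for n in node1 node2 node3; do create_osds "$n" sdb sdc sdd; done
```

Running it once with `RUN=echo` first lets you review the exact commands before any disk is touched.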

```shell
# Add OSDs on node2 and node3
ceph-volume lvm create --data /dev/sdb
ceph-volume lvm create --data /dev/sdc
ceph-volume lvm create --data /dev/sdd
```

```text
[root@node1 ~]# ceph -s
  cluster:
    id:     a7f64266-0894-4f1e-a635-d0aeaca0e993
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum node1 (age 8m)
    mgr: node1(active, since 7m)
    osd: 9 osds: 9 up (since 5s), 9 in (since 7s)

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   9.0 GiB used, 891 GiB / 900 GiB avail
    pgs:
```

Next, `/etc/ceph/ceph.conf` on node1 is extended to list all three monitors (`[root@node1 ~]# cat /etc/ceph/ceph.conf`):

```ini
[global]
fsid = a7f64266-0894-4f1e-a635-d0aeaca0e993
mon initial members = node1,node2,node3
mon host = 192.168.31.101,192.168.31.102,192.168.31.103
public_network = 192.168.31.0/24
cluster_network = 172.16.100.0/24
auth cluster required = cephx
auth service required = cephx
auth client required = cephx
osd journal size = 1024
osd pool default size = 3
osd pool default min size = 2
osd pool default pg num = 333
osd pool default pgp num = 333
osd crush chooseleaf type = 1
```

Copy the file to the other nodes:

```shell
scp /etc/ceph/ceph.conf node2:/etc/ceph/
scp /etc/ceph/ceph.conf node3:/etc/ceph/
```

The following steps add the monitor on node2. node3 is handled the same way; just change the name accordingly.

```shell
# Fetch the cluster's existing mon. keyring
ceph auth get mon. -o mon.keyring
# Fetch the cluster's current monitor map
ceph mon getmap -o mon.map
# Create the monitor data directory (/var/lib/ceph/mon/ceph-node2 is created automatically)
ceph-mon -i node2 --mkfs --monmap mon.map --keyring mon.keyring
chown -R ceph:ceph /var/lib/ceph/mon/ceph-node2
# Start the monitor
systemctl start ceph-mon@node2
systemctl enable ceph-mon@node2
```
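node3 repeats the node2 steps with only the name changed, so they can be wrapped in a function. The following is a minimal sketch to run on the node that will host the new monitor. It assumes that node already has `ceph.conf` and the admin keyring from the earlier `scp` step. `add_mon` and `RUN` are hypothetical. The exported `mon.` keyring is written to a temporary directory and deleted afterwards, because it contains the monitor secret.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a monitor with the given name on the local node.
# Set RUN=echo to print the commands instead of executing them.
RUN=${RUN:-}

add_mon() {
  if [ -z "${1:-}" ]; then
    echo "usage: add_mon <mon-name>" >&2
    return 1
  fi
  local name=$1 tmp
  tmp=$(mktemp -d)                     # scratch space for keyring and monmap
  # Each step runs only if the previous one succeeded
  $RUN ceph auth get mon. -o "$tmp/mon.keyring" &&
    $RUN ceph mon getmap -o "$tmp/mon.map" &&
    $RUN ceph-mon -i "$name" --mkfs --monmap "$tmp/mon.map" --keyring "$tmp/mon.keyring" &&
    $RUN chown -R ceph:ceph "/var/lib/ceph/mon/ceph-$name" &&
    $RUN systemctl enable --now "ceph-mon@$name"
  local rc=$?
  rm -rf "$tmp"                        # the mon. keyring holds the monitor secret
  return "$rc"
}

# Usage on node3:
# add_mon node3
```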

Check the cluster status:

```text
[root@node1 ~]# ceph -s
  cluster:
    id:     a7f64266-0894-4f1e-a635-d0aeaca0e993
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum node1,node2,node3 (age 2m)
    mgr: node1(active, since 20m)
    osd: 9 osds: 9 up (since 29s), 9 in (since 13m)

  task status:

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   9.1 GiB used, 891 GiB / 900 GiB avail
    pgs:
```

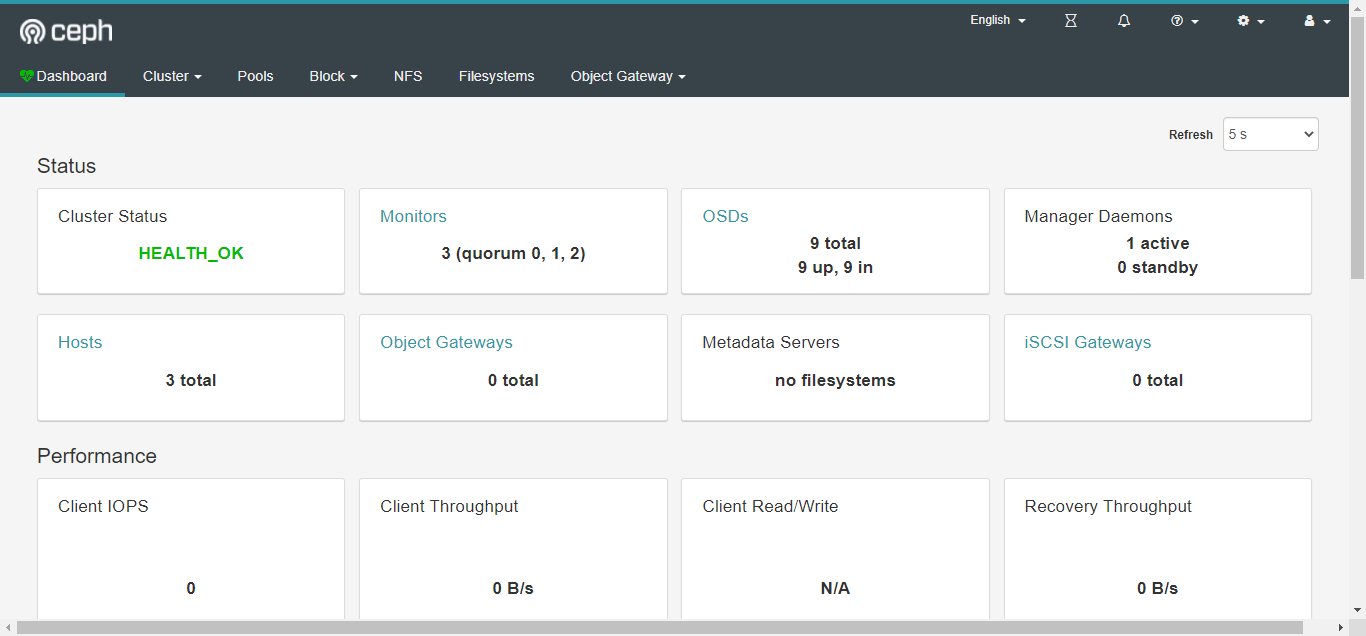
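Between steps, it helps to wait until the cluster reports `HEALTH_OK` before continuing, instead of rerunning `ceph -s` by hand. The following is a minimal sketch. It assumes `ceph health` prints a line whose first word is the status (`HEALTH_OK`, `HEALTH_WARN ...`). `wait_for_health_ok` and `SLEEP` are hypothetical names, and the command being polled can be overridden.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a health command until its first word is HEALTH_OK.
# Arguments: number of attempts (default 60), then the command to run
# (default: ceph health). SLEEP is the pause between attempts, in seconds.
SLEEP=${SLEEP:-5}

wait_for_health_ok() {
  local tries=${1:-60}
  shift || true
  local cmd=("$@")
  if [ "${#cmd[@]}" -eq 0 ]; then
    cmd=(ceph health)
  fi
  local i status=""
  for ((i = 1; i <= tries; i++)); do
    status=$("${cmd[@]}" 2>/dev/null) || true   # tolerate an unreachable cluster
    if [ "${status%% *}" = "HEALTH_OK" ]; then
      return 0
    fi
    sleep "$SLEEP"
  done
  echo "cluster not healthy after $tries attempts: $status" >&2
  return 1
}

# Usage on node1, e.g. after adding OSDs or monitors (60 x 5 s, about 5 minutes):
# wait_for_health_ok 60 && ceph -s
```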

```shell
# Install the Dashboard on node1
yum install ceph-mgr-dashboard -y
# Enable the dashboard mgr module
ceph mgr module enable dashboard
# Generate and install a self-signed certificate
ceph dashboard create-self-signed-cert
# Create a dashboard login user and password (ceph / 123456)
echo 123456 > ceph-dashboard-password.txt
ceph dashboard ac-user-create ceph -i ceph-dashboard-password.txt administrator
```

Check how the service is accessed:

```text
[root@node1 ~]# ceph mgr services
{
    "dashboard": "https://node1:8443/"
}
```
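The password `123456`, kept in a plain text file, is acceptable for a lab but weak anywhere else. Depending on the Ceph release, the dashboard's password policy may also reject such a trivial password. The following is a minimal sketch that generates a random alphanumeric password into a file only its owner can read. `gen_password` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a random alphanumeric password (default 16 chars).
gen_password() {
  local len=${1:-16}
  # 48 random bytes -> 64 base64 chars; drop the non-alphanumeric ones, keep $len
  head -c 48 /dev/urandom | base64 | tr -d '/+=\n' | cut -c1-"$len"
}

# Usage on node1:
# pwfile=$(mktemp)                  # mktemp creates the file with mode 0600
# gen_password > "$pwfile"
# ceph dashboard ac-user-create ceph -i "$pwfile" administrator
# cat "$pwfile"; rm -f "$pwfile"    # record the password, then remove the file
```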

```shell
# Preparation before integrating OpenStack with Ceph
# node1, node2, and node3 form the Ceph cluster and already have the packages,
# so install ceph-common on node4 and node5
yum -y install ceph-common
# Copy the configuration files from node1 to all nova nodes
for i in $(seq 2 5); do scp /etc/ceph/* node$i:/etc/ceph; done
# Create the storage pools with 64 PGs each
ceph osd pool create images 64
ceph osd pool create vms 64
ceph osd pool create volumes 64
ceph osd pool application enable images rbd
ceph osd pool application enable vms rbd
ceph osd pool application enable volumes rbd
```
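The pools above use 64 PGs each, and the `ceph.conf` shown earlier sets `osd pool default pg num = 333`. A commonly cited rule of thumb from the Ceph placement-group documentation aims for roughly 100 PG replicas per OSD. That gives total PGs ≈ OSDs × 100 / replica count, rounded up to a power of two. The following is a minimal sketch of that arithmetic; `pg_suggest` is a hypothetical helper. On Nautilus and later, the `pg_autoscaler` mgr module can manage `pg_num` instead, so treat the result only as a starting estimate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggest a total PG count for the cluster.
# total ~= OSDs * per_osd / replicas, rounded up to the next power of two.
pg_suggest() {
  local osds=$1 size=$2 per_osd=${3:-100}
  local target=$(( osds * per_osd / size ))
  local pgs=1
  while (( pgs < target )); do
    pgs=$(( pgs * 2 ))
  done
  echo "$pgs"
}

pg_suggest 9 3   # 9 OSDs, osd pool default size = 3: 9*100/3 = 300, prints 512
```

With several pools sharing the cluster, that total would typically be split between them according to how much data each pool is expected to hold.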

```shell
# Configure authentication
ceph auth get-or-create client.cinder mon 'allow r' \
  osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images' \
  -o /etc/ceph/ceph.client.cinder.keyring
# Alternative: the two commands below split the command above into two steps
# (note that this variant grants all permissions)
# ceph auth caps client.cinder mon 'allow *' osd 'allow *'
# ceph auth get client.cinder -o /etc/ceph/ceph.client.cinder.keyring   # export the keyring for the services to use
ceph auth get-or-create client.glance mon 'allow r' \
  osd 'allow class-read object_prefix rbd_children, allow rwx pool=images' \
  -o /etc/ceph/ceph.client.glance.keyring
# Copy the generated keyrings to all nova nodes
for i in $(seq 2 5); do scp /etc/ceph/*.keyring node$i:/etc/ceph; done
# Change the owner of the keyring files copied to the nova nodes (used by glance and cinder)
for i in $(seq 2 5); do ssh node$i chown glance:glance /etc/ceph/ceph.client.glance.keyring; done
for i in $(seq 2 5); do ssh node$i chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring; done
```
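The `chown` loops run on node2 to node5. A service user such as `glance` usually exists only on nodes where that service's packages are installed, so `chown` can fail on the others. Also, the loop does not cover node1, where Glance runs in this setup. The following is a minimal sketch that changes ownership only on nodes where the service user exists. It assumes passwordless root SSH. `chown_keyring` and `RUN` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: chown /etc/ceph/ceph.client.<user>.keyring to <user> on
# each given node, skipping nodes where that user does not exist.
# Set RUN=echo to print the ssh commands instead of running them.
RUN=${RUN:-}

chown_keyring() {
  local user=$1 node
  shift
  local keyring="/etc/ceph/ceph.client.${user}.keyring"
  for node in "$@"; do
    # The remote side checks for the user before changing ownership
    $RUN ssh "$node" "id -u $user >/dev/null 2>&1 && chown $user:$user $keyring"
  done
}

# Usage from node1:
# chown_keyring glance node1
# chown_keyring cinder node2 node3 node4 node5
```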

```shell
# Add the key to libvirt; run on all nova nodes (node2, node3, node4, node5)
ceph auth get-key client.cinder | tee client.cinder.key
uuidgen   # generates a UUID; the UUID from the documentation is used here instead
cat > secret.xml <<EOF
<secret ephemeral='no' private='no'>
  <uuid>ae3d9d0a-df88-4168-b292-c07cdc2d8f02</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
virsh secret-define --file secret.xml
virsh secret-set-value --secret ae3d9d0a-df88-4168-b292-c07cdc2d8f02 --base64 "$(cat client.cinder.key)" && rm client.cinder.key secret.xml
```
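The UUID in `secret.xml` must be identical on every nova node and must match `rbd_secret_uuid` in `cinder.conf` and `nova.conf`. The following is a minimal sketch that renders the same XML from a variable, so the value is typed only once. `render_secret_xml` and `SECRET_UUID` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a libvirt secret definition for client.cinder.
# Arguments: secret UUID, output file.
render_secret_xml() {
  local uuid=$1 out=$2
  cat > "$out" <<EOF
<secret ephemeral='no' private='no'>
  <uuid>${uuid}</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
}

# Usage: choose the UUID once, then run the rest on every nova node
# SECRET_UUID=$(uuidgen)
# render_secret_xml "$SECRET_UUID" secret.xml
# virsh secret-define --file secret.xml
# virsh secret-set-value --secret "$SECRET_UUID" --base64 "$(ceph auth get-key client.cinder)"
# rm -f secret.xml
```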

```shell
# Integrate OpenStack with Ceph: configure the Glance node (node1).
# Images must be re-uploaded after the integration.
crudini --set /etc/glance/glance-api.conf DEFAULT "show_image_direct_url" "True"
crudini --set /etc/glance/glance-api.conf glance_store "default_store" "rbd"
crudini --set /etc/glance/glance-api.conf glance_store "rbd_store_user" "glance"
crudini --set /etc/glance/glance-api.conf glance_store "rbd_store_pool" "images"
crudini --set /etc/glance/glance-api.conf glance_store "stores" "glance.store.filesystem.Store, glance.store.http.Store, glance.store.rbd.Store"
crudini --set /etc/glance/glance-api.conf paste_deploy "flavor" "keystone"
```
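Because images must be re-uploaded once Glance uses RBD, it is worth choosing their format at that point. The Ceph guide for OpenStack integration states that the Glance image format must be RAW if virtual machines are to boot from Ceph, whether as ephemeral disks or boot-from-volume. The following is a minimal sketch that assumes `qemu-img` and the `openstack` CLI are available on node1. `upload_raw_image`, `RUN`, and the example file name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a qcow2 image to raw and upload it to Glance.
# Set RUN=echo to print the commands instead of running them.
RUN=${RUN:-}

upload_raw_image() {
  local src=$1 name=$2
  local raw="${src%.*}.raw"        # foo.qcow2 -> foo.raw next to the source
  $RUN qemu-img convert -f qcow2 -O raw "$src" "$raw" &&
    $RUN openstack image create --disk-format raw --container-format bare \
      --file "$raw" --public "$name"
}

# Usage on node1 (hypothetical file name):
# upload_raw_image /root/cirros.qcow2 cirros
```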

```shell
# Integrate OpenStack with Ceph: configure the Cinder nodes (node4, node5)
crudini --set /etc/cinder/cinder.conf DEFAULT "enabled_backends" "lvm,nfs,ceph"   # enable the lvm, nfs, and ceph backends together
crudini --set /etc/cinder/cinder.conf ceph "volume_driver" "cinder.volume.drivers.rbd.RBDDriver"
crudini --set /etc/cinder/cinder.conf ceph "volume_backend_name" "ceph"
crudini --set /etc/cinder/cinder.conf ceph "rbd_pool" "volumes"
crudini --set /etc/cinder/cinder.conf ceph "rbd_ceph_conf" "/etc/ceph/ceph.conf"
crudini --set /etc/cinder/cinder.conf ceph "rbd_flatten_volume_from_snapshot" "false"
crudini --set /etc/cinder/cinder.conf ceph "rbd_max_clone_depth" "5"
crudini --set /etc/cinder/cinder.conf ceph "rados_connect_timeout" "-1"
crudini --set /etc/cinder/cinder.conf ceph "glance_api_version" "2"
crudini --set /etc/cinder/cinder.conf ceph "rbd_user" "cinder"
crudini --set /etc/cinder/cinder.conf ceph "rbd_secret_uuid" "ae3d9d0a-df88-4168-b292-c07cdc2d8f02"
```
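The `crudini --set` lines share the same file and section and differ only in key and value. `rbd_secret_uuid` also has to repeat the libvirt UUID. The following is a minimal sketch that applies `key=value` lines from standard input to one section, so shared values can come from shell variables. `apply_ini`, `RUN`, and `SECRET_UUID` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read key=value lines from stdin and set each one in
# [section] of an INI file with crudini. Set RUN=echo for a dry run.
RUN=${RUN:-}

apply_ini() {
  local file=$1 section=$2 line
  while IFS= read -r line; do
    [ -z "$line" ] && continue                 # skip blank lines
    # Key is the text before the first "=", value is everything after it
    $RUN crudini --set "$file" "$section" "${line%%=*}" "${line#*=}"
  done
}

# Usage on node4/node5 (the UUID must match the libvirt secret):
# SECRET_UUID=ae3d9d0a-df88-4168-b292-c07cdc2d8f02
# apply_ini /etc/cinder/cinder.conf ceph <<EOF
# volume_driver=cinder.volume.drivers.rbd.RBDDriver
# rbd_pool=volumes
# rbd_user=cinder
# rbd_secret_uuid=$SECRET_UUID
# EOF
```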

```shell
# Integrate OpenStack with Ceph: configure the Nova nodes (node2, node3, node4, node5)
crudini --set /etc/nova/nova.conf libvirt "images_type" "rbd"
crudini --set /etc/nova/nova.conf libvirt "images_rbd_pool" "vms"
crudini --set /etc/nova/nova.conf libvirt "images_rbd_ceph_conf" "/etc/ceph/ceph.conf"
crudini --set /etc/nova/nova.conf libvirt "rbd_user" "cinder"
crudini --set /etc/nova/nova.conf libvirt "rbd_secret_uuid" "ae3d9d0a-df88-4168-b292-c07cdc2d8f02"
crudini --set /etc/nova/nova.conf libvirt "inject_password" "false"
crudini --set /etc/nova/nova.conf libvirt "inject_key" "false"
crudini --set /etc/nova/nova.conf libvirt "inject_partition" "-2"
crudini --set /etc/nova/nova.conf libvirt "live_migration_flag" "VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_PERSIST_DEST"
```
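If `rbd_secret_uuid` differs between `nova.conf`, `cinder.conf`, and the libvirt secret, libvirt cannot find the secret it needs to authenticate to Ceph. The following is a minimal sketch that reads a key from an INI section with awk and compares the two files. `ini_get` and `check_secret_uuid` are hypothetical helpers, and the sketch assumes the `key = value` layout that crudini writes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of <key> in [<section>] of an INI file.
# Arguments: file, section, key.
ini_get() {
  awk -v sec="[$2]" -v key="$3" '
    /^[ \t]*\[/ { in_sec = ($0 == sec); next }   # entering a new section
    in_sec {
      k = $0
      sub(/^[ \t]+/, "", k)                      # trim leading blanks
      sub(/[ \t]*=.*/, "", k)                    # keep only the key part
      if (k == key) {
        v = $0
        sub(/^[^=]*=[ \t]*/, "", v)              # keep only the value part
        print v
        exit
      }
    }' "$1"
}

# Compare rbd_secret_uuid in nova.conf ([libvirt]) and cinder.conf ([ceph]).
check_secret_uuid() {
  local nova_conf=${1:-/etc/nova/nova.conf} cinder_conf=${2:-/etc/cinder/cinder.conf}
  local n c
  n=$(ini_get "$nova_conf" libvirt rbd_secret_uuid)
  c=$(ini_get "$cinder_conf" ceph rbd_secret_uuid)
  if [ -n "$n" ] && [ "$n" = "$c" ]; then
    echo "OK: $n"
  else
    echo "MISMATCH: nova='$n' cinder='$c'" >&2
    return 1
  fi
}

# Usage on node4/node5, which carry both files:
# check_secret_uuid && virsh secret-list
```

On compute-only nodes (node2, node3), compare `ini_get /etc/nova/nova.conf libvirt rbd_secret_uuid` with the UUID shown by `virsh secret-list`.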

```shell
# Restart the OpenStack services
# Controller node
systemctl restart openstack-glance-api openstack-nova-api openstack-cinder-api openstack-cinder-scheduler
# Compute nodes
for i in $(seq 2 5); do ssh node$i systemctl restart openstack-nova-compute; done
# Storage nodes
for i in 4 5; do ssh node$i systemctl restart openstack-cinder-volume; done
```

Verify OpenStack (run on node1):

```text
[root@node1 ~]# cinder type-create ceph
+--------------------------------------+------+-------------+-----------+
| ID                                   | Name | Description | Is_Public |
+--------------------------------------+------+-------------+-----------+
| 228269c3-6008-4e62-9408-a2fb04d74c1a | ceph | -           | True      |
+--------------------------------------+------+-------------+-----------+
[root@node1 ~]# cinder type-key ceph set volume_backend_name=ceph
```

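After operating through Glance, Cinder, and Nova, confirm the results in Ceph with `rbd ls images`, `rbd ls vms`, and `rbd ls volumes`.

Create a volume and verify it: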

```text
[root@node1 ~]# openstack volume create --size 1 --type ceph volume1
+---------------------+--------------------------------------+
| Field               | Value                                |
+---------------------+--------------------------------------+
| attachments         | []                                   |
| availability_zone   | nova                                 |
| bootable            | false                                |
| consistencygroup_id | None                                 |
| created_at          | 2022-03-01T10:22:13.000000           |
| description         | None                                 |
| encrypted           | False                                |
| id                  | 3a0ee405-ad4b-4453-8b66-029aa67f7af0 |
| migration_status    | None                                 |
| multiattach         | False                                |
| name                | volume1                              |
| properties          |                                      |
| replication_status  | None                                 |
| size                | 1                                    |
| snapshot_id         | None                                 |
| source_volid        | None                                 |
| status              | creating                             |
| type                | ceph                                 |
| updated_at          | None                                 |
| user_id             | 5a44718261844cbd8a65621b9e3cea8d     |
+---------------------+--------------------------------------+
[root@node1 ~]# rbd -p volumes ls -l    # list the block devices created in Ceph
NAME                                         SIZE   PARENT  FMT  PROT  LOCK
volume-3a0ee405-ad4b-4453-8b66-029aa67f7af0  1 GiB            2
```
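The listing shows that the Cinder volume `3a0ee405-ad4b-4453-8b66-029aa67f7af0` is stored as the RBD image `volume-3a0ee405-ad4b-4453-8b66-029aa67f7af0` in the `volumes` pool. The following is a minimal sketch that turns this manual check into a yes/no test. `volume_in_ceph` is a hypothetical helper, and the listing command can be overridden (it defaults to `rbd -p volumes ls`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the Cinder volume <id> has an RBD image
# named volume-<id>. Extra arguments replace the default listing command.
volume_in_ceph() {
  local id=$1
  shift
  local cmd=("$@")
  if [ "${#cmd[@]}" -eq 0 ]; then
    cmd=(rbd -p volumes ls)
  fi
  # -F: fixed string, -x: whole-line match (so a prefix of the ID does not match)
  "${cmd[@]}" | grep -Fx "volume-$id" >/dev/null
}

# Usage on node1:
# volume_in_ceph 3a0ee405-ad4b-4453-8b66-029aa67f7af0 && echo "volume is stored in Ceph"
```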