1 简介 在孤立词语音识别中,动态时间规整DTW算法是一种应用较为广泛的算法之一,有着较强的科学性,在立足于当前DTW语音识别算法应用的实际情况下,简略阐述了该课题的研究背景,并从

1 简介

在孤立词语音识别中,动态时间规整DTW算法是一种应用较为广泛的算法之一,有着较强的科学性,在立足于当前DTW语音识别算法应用的实际情况下,简略阐述了该课题的研究背景,并从预处理和特征参数提取以及DTW算法两方面着手对基于DTW算法的语音识别系统实现进行了探究,以此为基础展开了相应的仿真和分析,旨在为相关研究人员提供参考.

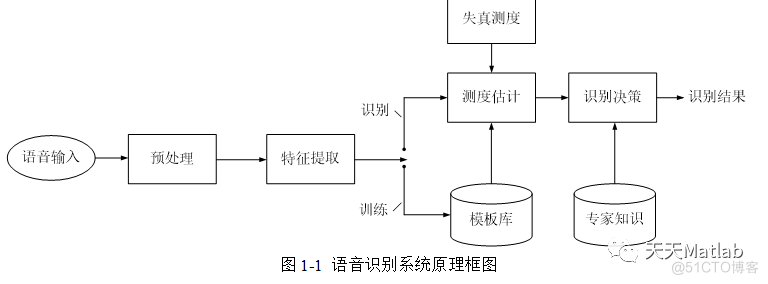

语音识别系统的典型原理框图如图1-1所示。从图中可以看出语音识别系统的本质就是一种模式识别系统,它也包括特征提取、模式匹配、参考模式库等基本单元。由于语音信号是一种典型的非平稳信号,加之呼吸气流、外部噪音、电流干扰等使得语音信号不能直接用于提取特征,而要进行前期的预处理。预处理过程包括预滤波、采样和量化、分帧、加窗、预加重、端点检测等。经过预处理的语音数据就可以进行特征参数提取。在训练阶段,将特征参数进行一定的处理之后,为每个词条得到一个模型,保存为模板库。在识别阶段,语音信号经过相同的通道得到语音参数,生成测试模板,与参考模板进行匹配,将匹配分数最高的参考模板作为识别结果。后续的处理过程还可能包括更高层次的词法、句法和文法处理等,从而最终将输入的语音信号转变成文本或命令。

本文所描述的语音识别系统(下称本系统)将对数字0~9共10段参考语音进行训练并建立模板库,之后将对多段测试语音进行识别测试。系统实现了上图中的语音输入、预处理、特征提取、训练建立模板库和识别等模块,最终建立了一个比较完整的语音识别系统。

2 部分代码

function [x,mn,mx]=melbankm(p,n,fs,fl,fh,w)%MELBANKM determine matrix for a mel-spaced filterbank [X,MN,MX]=(P,N,FS,FL,FH,W)

%

% Inputs: p number of filters in filterbank

% n length of fft

% fs sample rate in Hz

% fl low end of the lowest filter as a fraction of fs (default = 0)

% fh high end of highest filter as a fraction of fs (default = 0.5)

% w any sensible combination of the following:

% 't' triangular shaped filters in mel domain (default)

% 'n' hanning shaped filters in mel domain

% 'm' hamming shaped filters in mel domain

%

% 'z' highest and lowest filters taper down to zero (default)

% 'y' lowest filter remains at 1 down to 0 frequency and

% highest filter remains at 1 up to nyquist freqency

%

% If 'ty' or 'ny' is specified, the total power in the fft is preserved.

%

% Outputs: x a sparse matrix containing the filterbank amplitudes

% If x is the only output argument then size(x)=[p,1+floor(n/2)]

% otherwise size(x)=[p,mx-mn+1]

% mn the lowest fft bin with a non-zero coefficient

% mx the highest fft bin with a non-zero coefficient

%

% Usage: f=fft(s); f=fft(s);

% x=melbankm(p,n,fs); [x,na,nb]=melbankm(p,n,fs);

% n2=1+floor(n/2); z=log(x*(f(na:nb)).*conj(f(na:nb)));

% z=log(x*abs(f(1:n2)).^2);

% c=dct(z); c(1)=[];

%

% To plot filterbanks e.g. plot(melbankm(20,256,8000)')

%

%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% This program is free software; you can redistribute it and/or modify

% it under the terms of the GNU General Public License as published by

% the Free Software Foundation; either version 2 of the License, or

% (at your option) any later version.

%

% This program is distributed in the hope that it will be useful,

% but WITHOUT ANY WARRANTY; without even the implied warranty of

% MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

% GNU General Public License for more details.

%

% You can obtain a copy of the GNU General Public License from

% ftp://prep.ai.mit.edu/pub/gnu/COPYING-2.0 or by writing to

% Free Software Foundation, Inc.,675 Mass Ave, Cambridge, MA 02139, USA.

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

if nargin < 6

w='tz';

if nargin < 5

fh=0.5;

if nargin < 4

fl=0;

end

end

end

f0=700/fs;

fn2=floor(n/2);

lr=log((f0+fh)/(f0+fl))/(p+1);

% convert to fft bin numbers with 0 for DC term

bl=n*((f0+fl)*exp([0 1 p p+1]*lr)-f0);

b2=ceil(bl(2));

b3=floor(bl(3));

if any(w=='y')

pf=log((f0+(b2:b3)/n)/(f0+fl))/lr;

fp=floor(pf);

r=[ones(1,b2) fp fp+1 p*ones(1,fn2-b3)];

c=[1:b3+1 b2+1:fn2+1];

v=2*[0.5 ones(1,b2-1) 1-pf+fp pf-fp ones(1,fn2-b3-1) 0.5];

mn=1;

mx=fn2+1;

else

b1=floor(bl(1))+1;

b4=min(fn2,ceil(bl(4)))-1;

pf=log((f0+(b1:b4)/n)/(f0+fl))/lr;

fp=floor(pf);

pm=pf-fp;

k2=b2-b1+1;

k3=b3-b1+1;

k4=b4-b1+1;

r=[fp(k2:k4) 1+fp(1:k3)];

c=[k2:k4 1:k3];

v=2*[1-pm(k2:k4) pm(1:k3)];

mn=b1+1;

mx=b4+1;

end

if any(w=='n')

v=1-cos(v*pi/2);

elseif any(w=='m')

v=1-0.92/1.08*cos(v*pi/2);

end

if nargout > 1

x=sparse(r,c,v);

else

x=sparse(r,c+mn-1,v,p,1+fn2);

end

3 仿真结果

4 参考文献

[1]吴晓平, 崔光照, 路康. 基于DTW算法的语音识别系统实现[J]. 信息化研究, 2004(07):17-19.

博主简介:擅长智能优化算法、神经网络预测、信号处理、元胞自动机、图像处理、路径规划、无人机等多种领域的Matlab仿真,相关matlab代码问题可私信交流。

部分理论引用网络文献,若有侵权联系博主删除。