@[toc]

I. Deploying the master02 node

This article continues from the previous one.

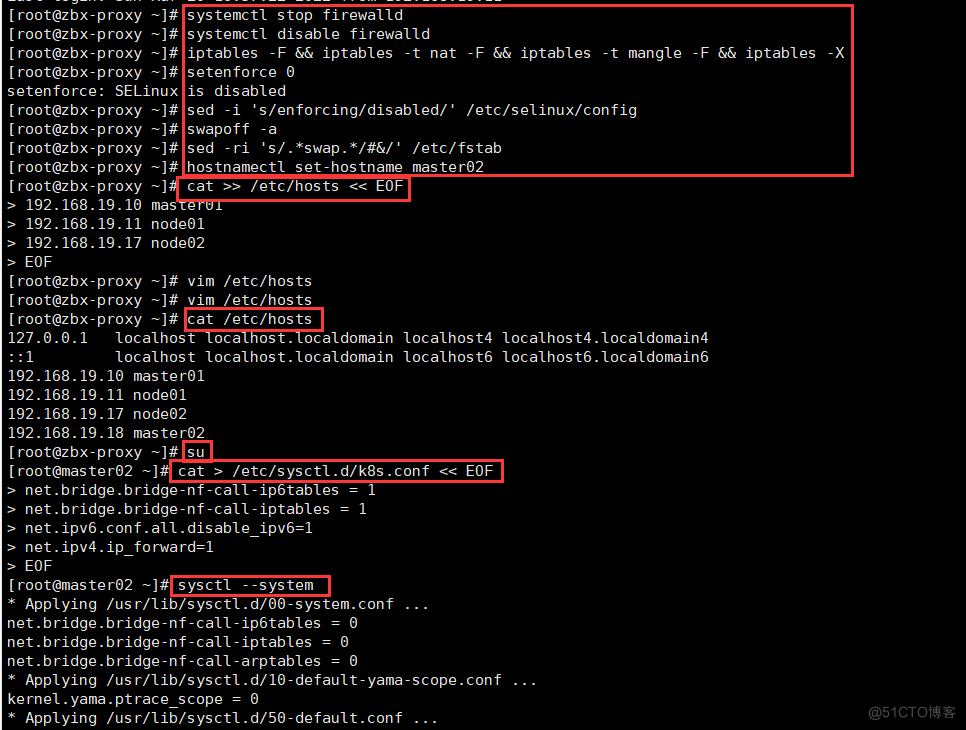

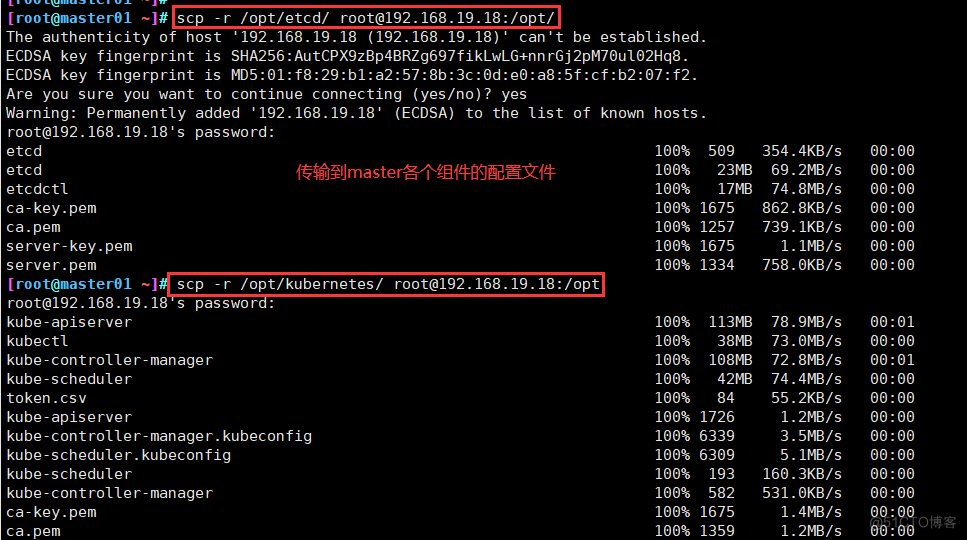

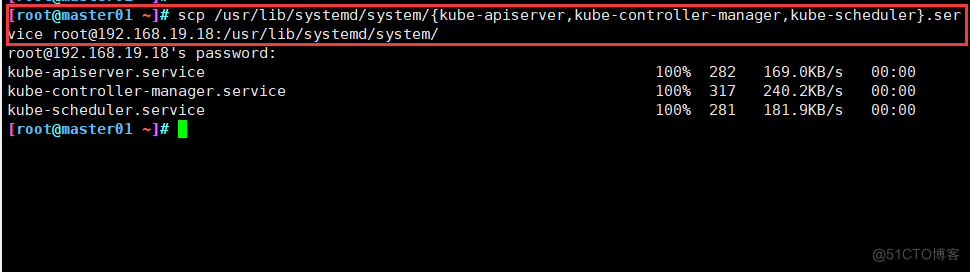

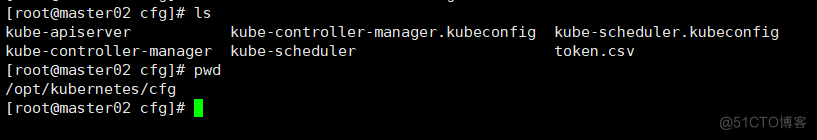

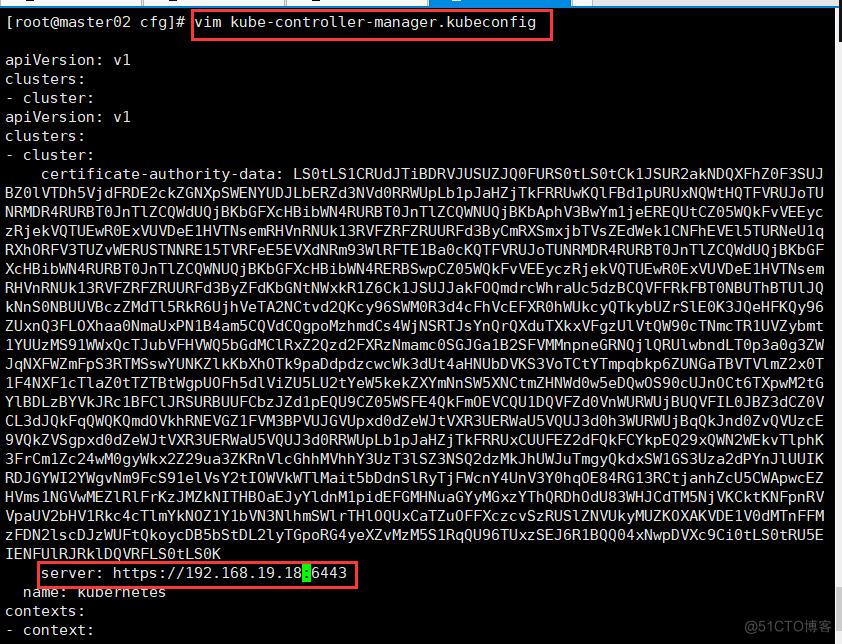

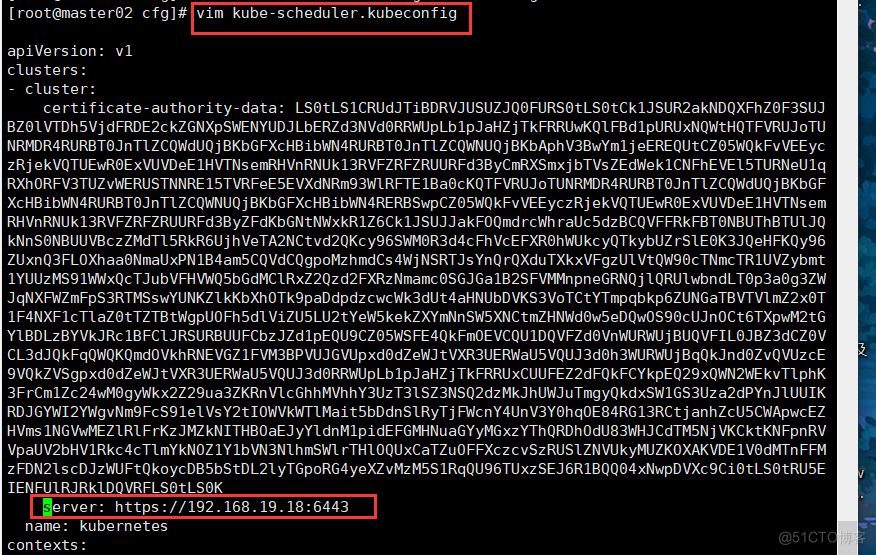

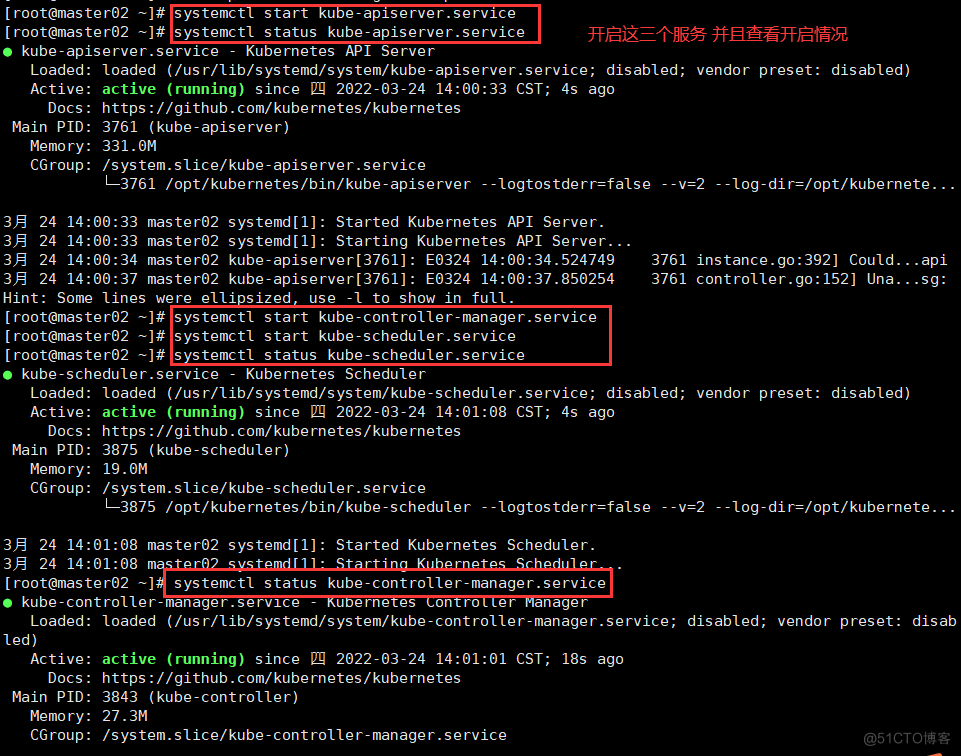

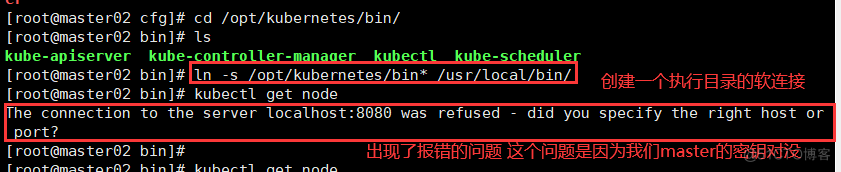

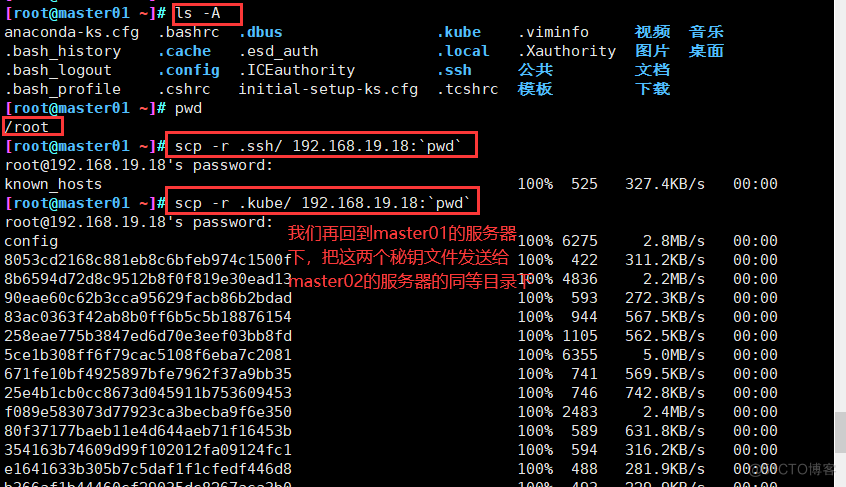

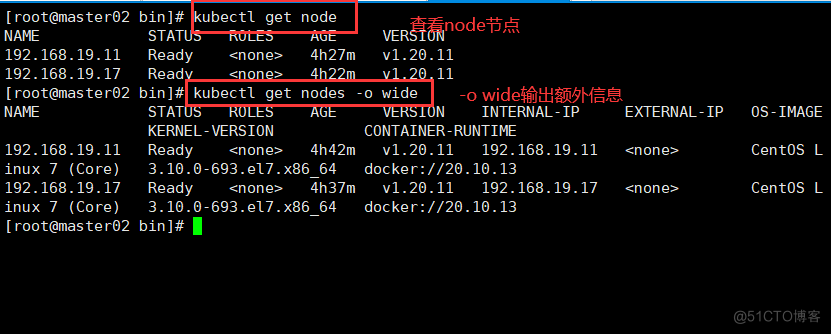

Start by setting up the master02 services following the master01 configuration.

Copy the certificates, the configuration files of each master component, and the service unit files from master01 to master02:

    scp -r /opt/etcd/ root@192.168.19.18:/opt/
    scp -r /opt/kubernetes/ root@192.168.19.18:/opt
    scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.19.18:/usr/lib/systemd/system/

On master02, change the IP addresses in the kube-apiserver configuration file. The two lines to modify are `--bind-address` and `--advertise-address`, which must both carry master02's own address:

    vim /opt/kubernetes/cfg/kube-apiserver

    KUBE_APISERVER_OPTS="--logtostderr=true \
    --v=4 \
    --etcd-servers=https://192.168.19.18:2379,https://192.168.19.11:2379,https://192.168.19.17:2379 \
    --bind-address=192.168.19.18 \
    --secure-port=6443 \
    --advertise-address=192.168.19.18 \
    ......

On master02, start each service and enable it at boot:

    systemctl start kube-apiserver.service
    systemctl enable kube-apiserver.service
    systemctl start kube-controller-manager.service
    systemctl enable kube-controller-manager.service
    systemctl start kube-scheduler.service
    systemctl enable kube-scheduler.service

Check the node status:

    ln -s /opt/kubernetes/bin/* /usr/local/bin/
    kubectl get nodes
    kubectl get nodes -o wide

`-o wide` prints additional information; for Pods it also shows the name of the Node each Pod runs on.

At this point the node status seen from master02 is only what was read from etcd. The nodes have not actually established a connection with master02, so a VIP is needed to tie the nodes to both master nodes.
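The address change in the kube-apiserver file can also be scripted instead of edited by hand in vim. The following is a minimal sketch, assuming GNU sed and a file laid out like the one shown above; `set_apiserver_ip` is a hypothetical helper name, not something shipped with Kubernetes.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original article): rewrite the two
# per-master addresses in a kube-apiserver options file.
# Usage: set_apiserver_ip <config-file> <new-ip>
set_apiserver_ip() {
    local cfg="$1" ip="$2"
    # Only the values of --bind-address and --advertise-address are replaced;
    # every other flag, including --etcd-servers, is left untouched.
    sed -i -E \
        -e "s#(--bind-address=)[0-9.]+#\1${ip}#" \
        -e "s#(--advertise-address=)[0-9.]+#\1${ip}#" \
        "$cfg"
}
```

On master02 this would be called as `set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver 192.168.19.18`, before starting the services.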

==Next, the master configuration files==

II. Deploying the load balancers

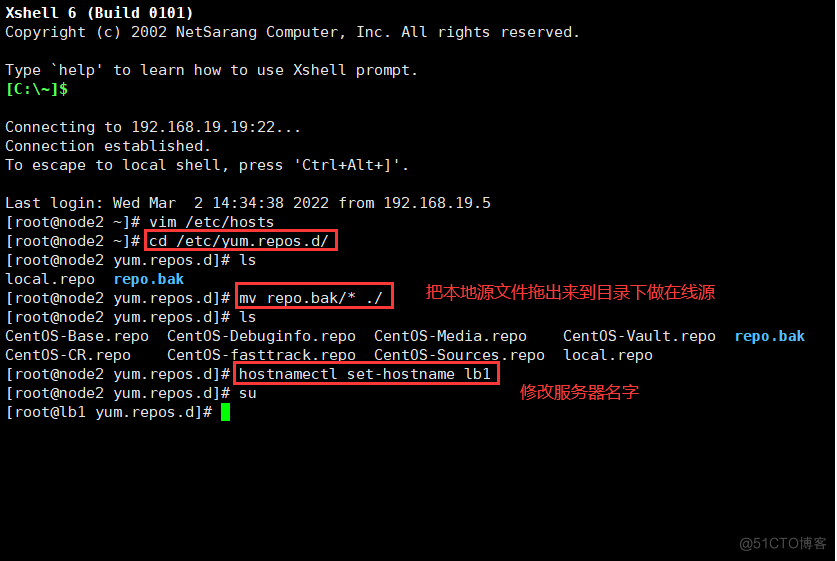

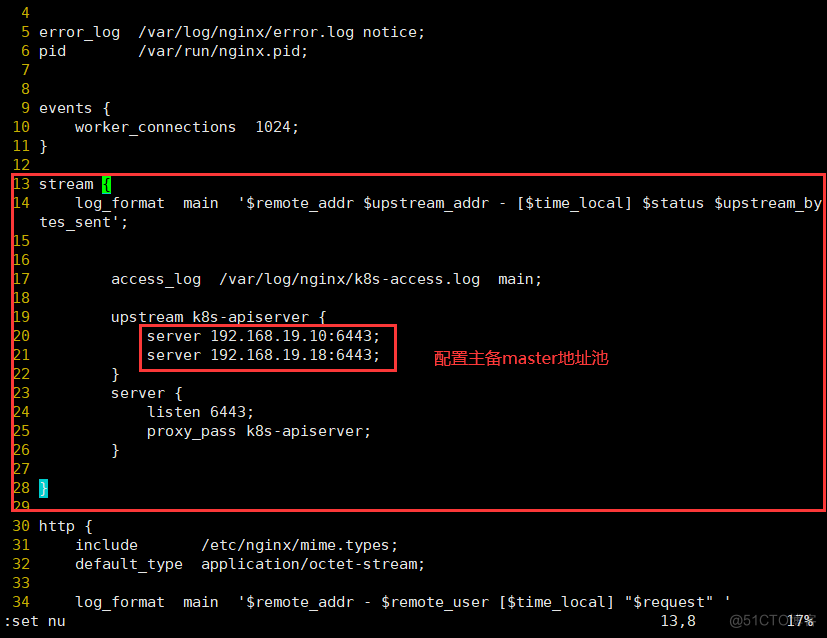

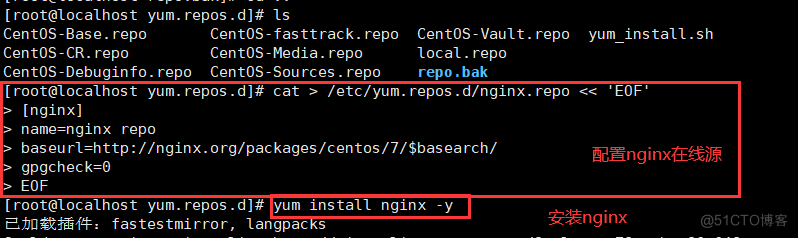

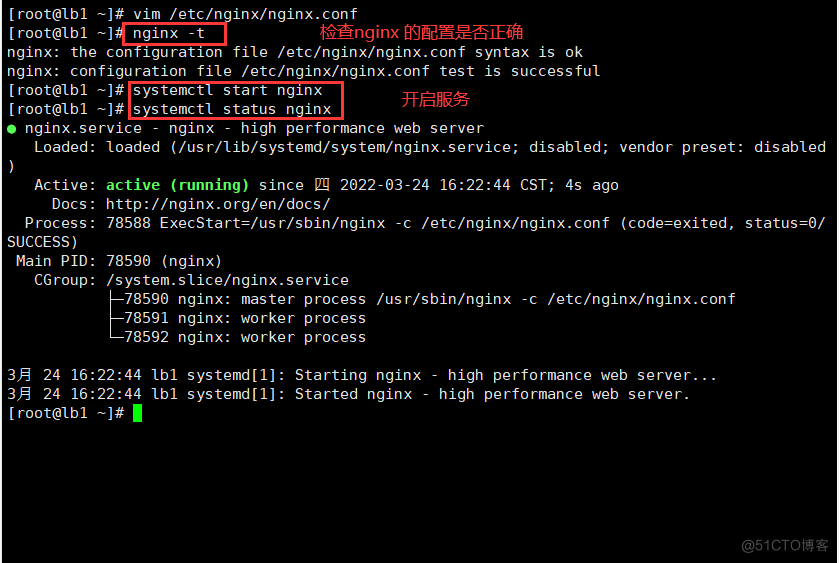

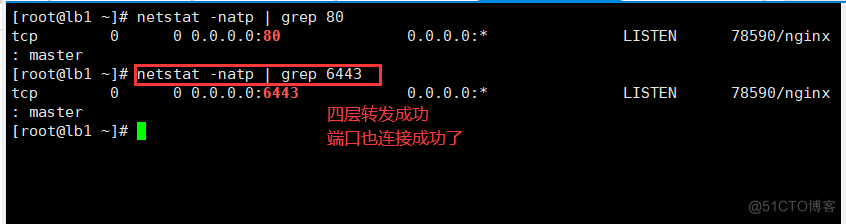

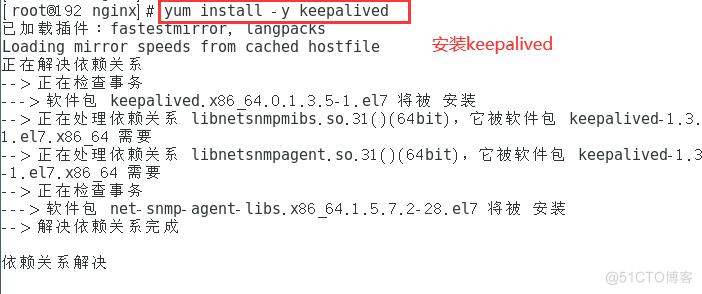

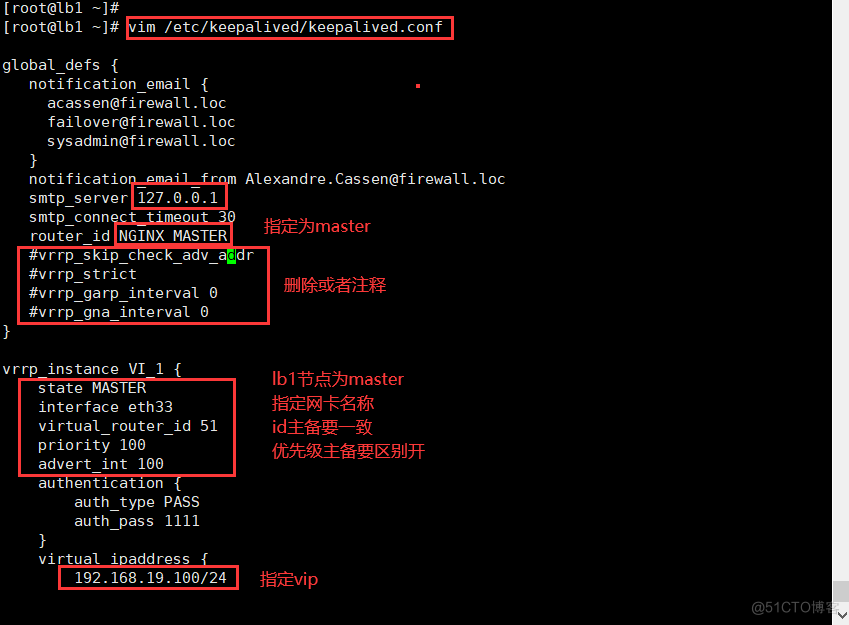

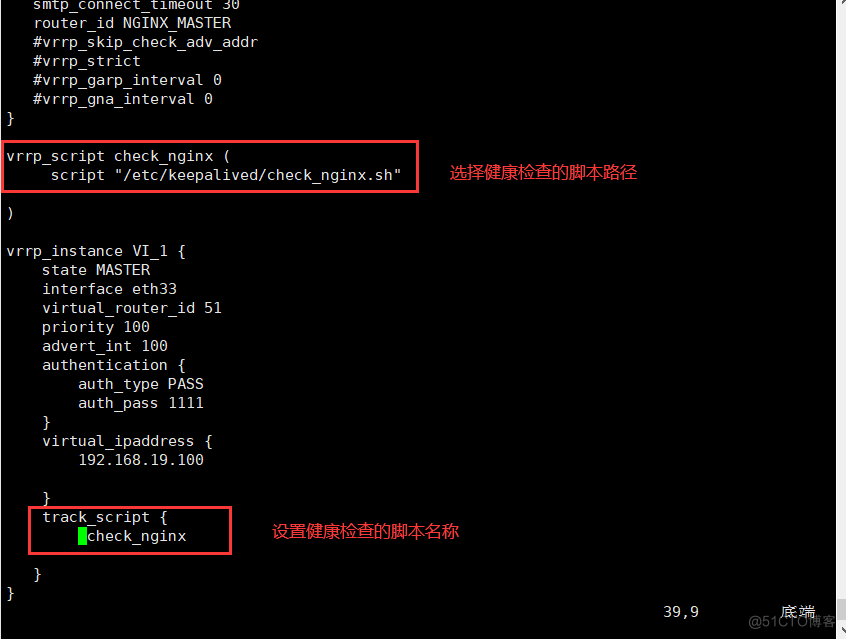

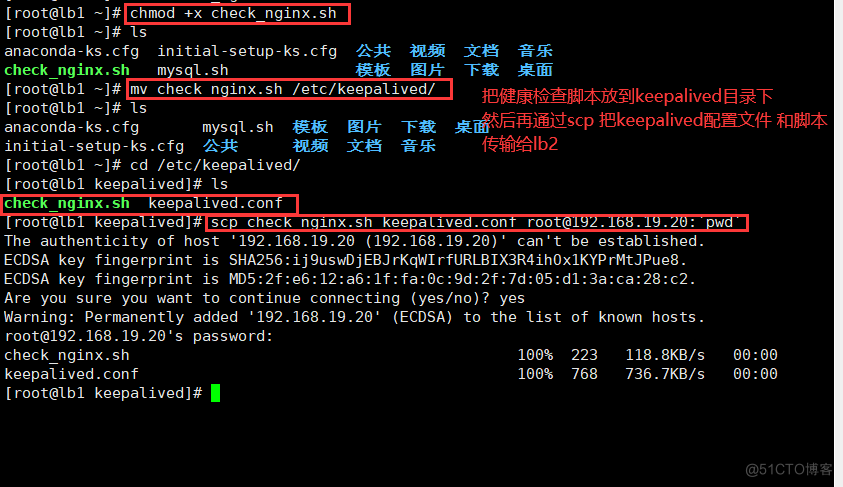

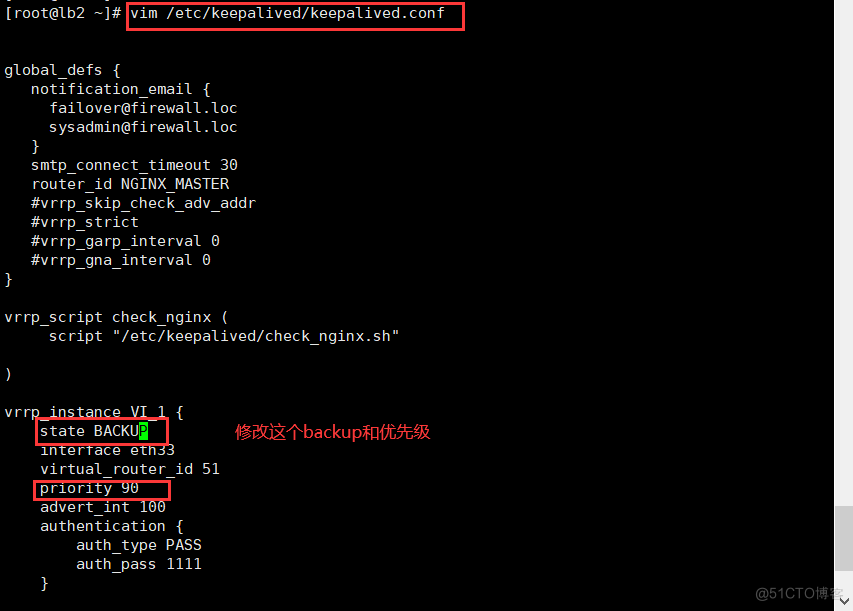

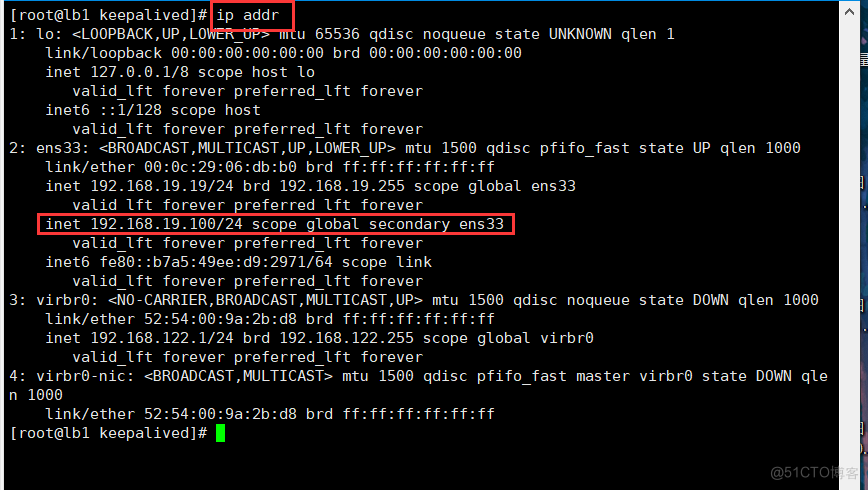

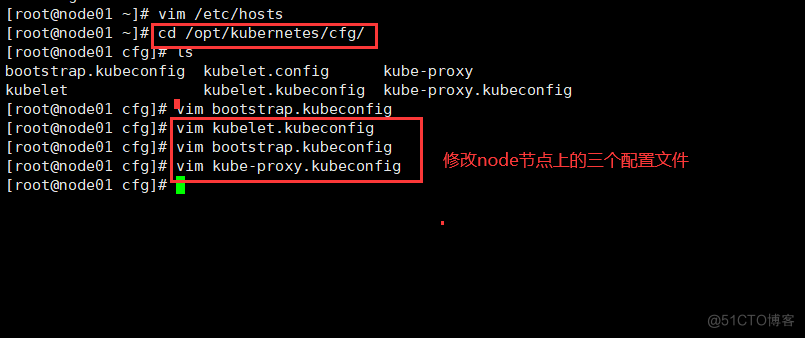

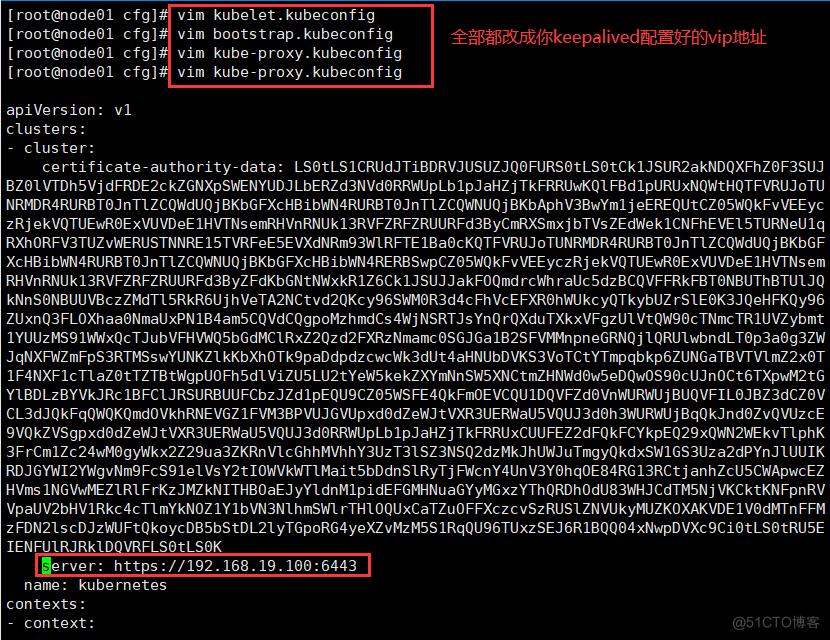

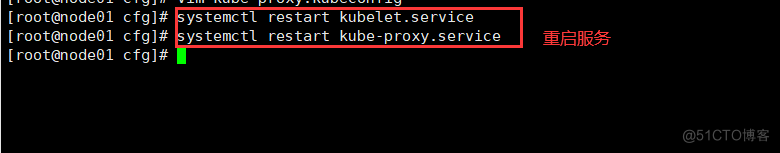

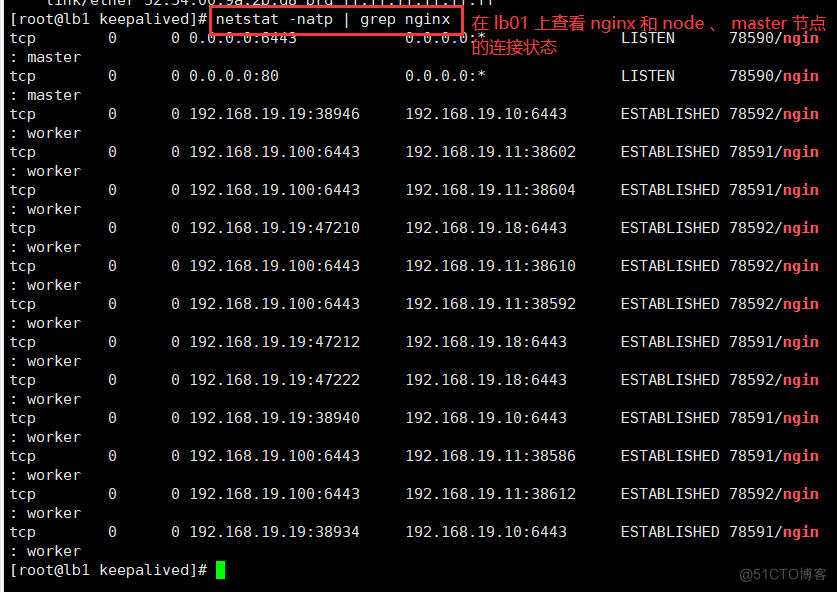

Configure the load balancer cluster as an active/standby pair: nginx provides the load balancing and keepalived provides the hot standby. Perform the following steps on both lb01 and lb02.

Configure the official nginx online yum repository as a local repo file, then install nginx:

    cat > /etc/yum.repos.d/nginx.repo << 'EOF'
    [nginx]
    name=nginx repo
    baseurl=http://nginx.org/packages/centos/7/$basearch/
    gpgcheck=0
    EOF

    yum install nginx -y

Edit the nginx configuration file to set up layer-4 reverse-proxy load balancing, listing the IP and port 6443 of the two master nodes in the k8s cluster:

    vim /etc/nginx/nginx.conf

    events {
        worker_connections  1024;
    }

    # Add this block
    stream {
        log_format  main  '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

        access_log  /var/log/nginx/k8s-access.log  main;

        upstream k8s-apiserver {
            server 192.168.19.10:6443;
            server 192.168.19.18:6443;
        }
        server {
            listen 6443;
            proxy_pass k8s-apiserver;
        }
    }

    http {
    ......

Check the configuration file syntax:

    nginx -t

Start nginx and confirm that it is listening on port 6443:

    systemctl start nginx
    systemctl enable nginx
    netstat -natp | grep nginx

Install keepalived:

    yum install keepalived -y

Edit the keepalived configuration file:

    vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
       # Addresses that receive notification email
       notification_email {
         acassen@firewall.loc
         failover@firewall.loc
         sysadmin@firewall.loc
       }
       # Sender address for notification email
       notification_email_from Alexandre.Cassen@firewall.loc
       smtp_server 127.0.0.1
       smtp_connect_timeout 30
       router_id NGINX_MASTER    # NGINX_MASTER on lb01, NGINX_BACKUP on lb02
    }

    # Script that keepalived runs periodically
    vrrp_script check_nginx {
        script "/etc/nginx/check_nginx.sh"    # path of the script that checks whether nginx is alive
    }

    vrrp_instance VI_1 {
        state MASTER            # MASTER on lb01, BACKUP on lb02
        interface ens33         # network interface name
        virtual_router_id 51    # VRID; must be identical on both nodes
        priority 100            # 100 on lb01, 90 on lb02
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.19.100/24   # the VIP
        }
        track_script {
            check_nginx         # the vrrp_script defined above
        }
    }

Create the nginx health-check script. If no nginx process is found, it stops keepalived so that the VIP moves to the other load balancer:

    vim /etc/nginx/check_nginx.sh

    #!/bin/bash
    # egrep -cv "grep|$$" counts the lines that contain neither "grep" nor $$ (the PID of the current shell)
    count=$(ps -ef | grep nginx | egrep -cv "grep|$$")

    if [ "$count" -eq 0 ];then
        systemctl stop keepalived
    fi

    chmod +x /etc/nginx/check_nginx.sh

Start keepalived. nginx must already be running before keepalived is started:

    systemctl start keepalived
    systemctl enable keepalived
    ip a    # check that the VIP has been assigned

On the node machines, change the `server` entry in bootstrap.kubeconfig, kubelet.kubeconfig, and kube-proxy.kubeconfig to the VIP configured above (192.168.19.100):

    cd /opt/kubernetes/cfg/
    vim bootstrap.kubeconfig
    server: https://192.168.19.100:6443

    vim kubelet.kubeconfig
    server: https://192.168.19.100:6443

    vim kube-proxy.kubeconfig
    server: https://192.168.19.100:6443

Restart the kubelet and kube-proxy services:

    systemctl restart kubelet.service
    systemctl restart kube-proxy.service

On lb01, check the nginx access log for k8s traffic:

    tail /var/log/nginx/k8s-access.log
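Reading the full `ip a` output by eye works, but when testing failover it is handy to have a yes/no answer for "does this machine currently hold the VIP?". The sketch below is one way to do that, assuming the iproute2 one-line output format (`ip -o -4 addr show`); `has_vip` is a hypothetical helper name introduced here for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given VIP appears as an IPv4 address in
# `ip -o -4 addr show` output supplied on stdin.
# Usage: ip -o -4 addr show | has_vip 192.168.19.100
has_vip() {
    local vip="$1"
    # -F matches the address literally, so the dots are not regex wildcards.
    # Anchoring on "inet " and the trailing "/" keeps 192.168.19.10 from
    # matching 192.168.19.100 and vice versa.
    grep -qF "inet ${vip}/"
}
```

A simple failover test under these assumptions: run `ip -o -4 addr show | has_vip 192.168.19.100 && echo "VIP is here"` on lb01, stop nginx on lb01 so that the check script stops keepalived, then run the same command on lb02, where the VIP should now appear.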

III. Operations on the master01 node

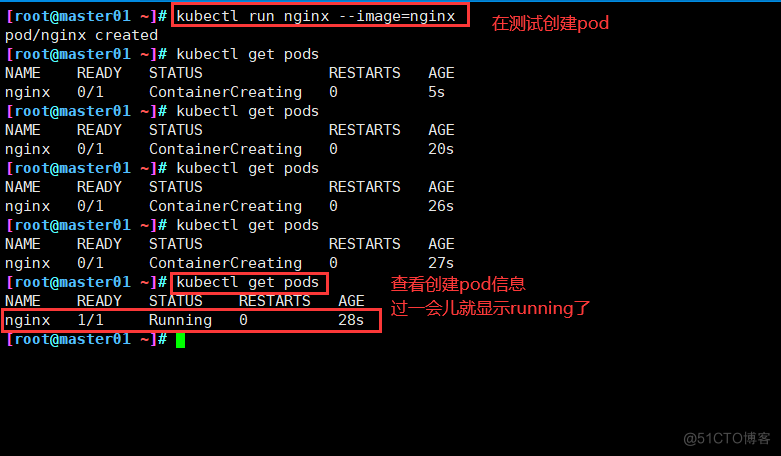

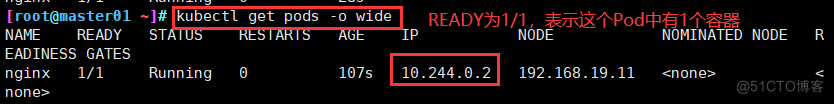

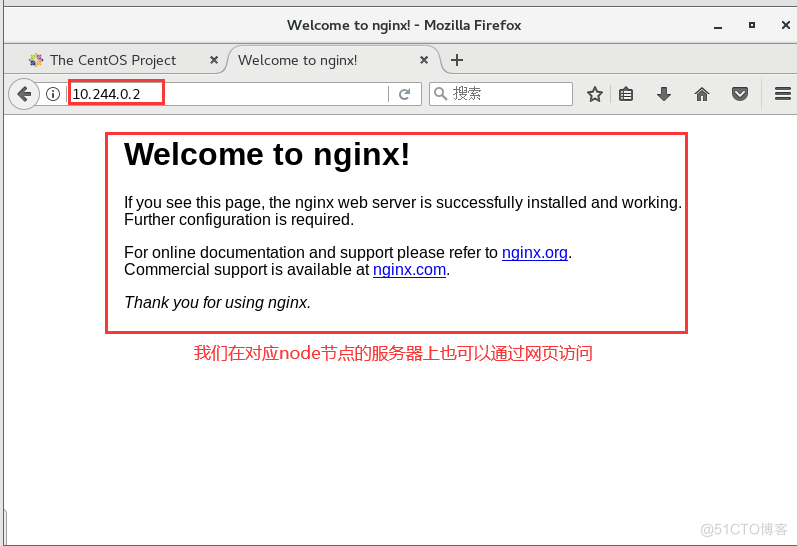

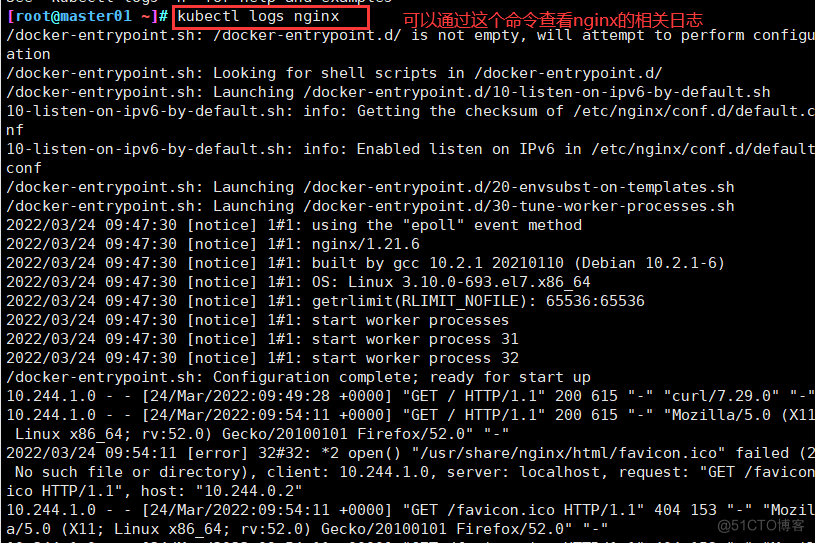

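Create a test Pod:

    kubectl run nginx --image=nginx

Check the Pod status:

    kubectl get pods
    NAME                    READY   STATUS              RESTARTS   AGE
    nginx-dbddb74b8-nf9sk   0/1     ContainerCreating   0          33s    # still being created

    kubectl get pods
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-dbddb74b8-nf9sk   1/1     Running   0          80s    # created and running

    kubectl get pods -o wide
    NAME                    READY   STATUS    RESTARTS   AGE   IP            NODE            NOMINATED NODE
    nginx-dbddb74b8-26r9l   1/1     Running   0          10m   172.17.36.2   192.168.80.15   <none>

READY 1/1 means the Pod has one container and that container is ready.

From a node on the corresponding network segment, the Pod can be reached directly with a browser or with curl:

    curl 172.17.36.2

Then view the nginx log from master01. If the Pod has a generated name like the ones in the output above, pass that full name instead of `nginx`:

    kubectl logs nginx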
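Rather than re-running `kubectl get pods` by hand until the status changes from ContainerCreating to Running, the STATUS column can be pulled out of the output and polled. This is a rough sketch that assumes the default column layout shown above (NAME, READY, STATUS, ...); `pod_status` is a hypothetical helper, not a kubectl feature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods` output on stdin and print the
# STATUS column of the first Pod whose name starts with the given prefix.
# Prints nothing if no Pod matches.
# Usage: kubectl get pods | pod_status nginx
pod_status() {
    local prefix="$1"
    # NR > 1 skips the header row; index(...) == 1 means "name starts with prefix".
    awk -v p="$prefix" 'NR > 1 && index($1, p) == 1 { print $3; exit }'
}
```

A polling loop built on it could look like `until [ "$(kubectl get pods | pod_status nginx)" = "Running" ]; do sleep 2; done`.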

------------------------------ Deploying the Dashboard UI ------------------------------

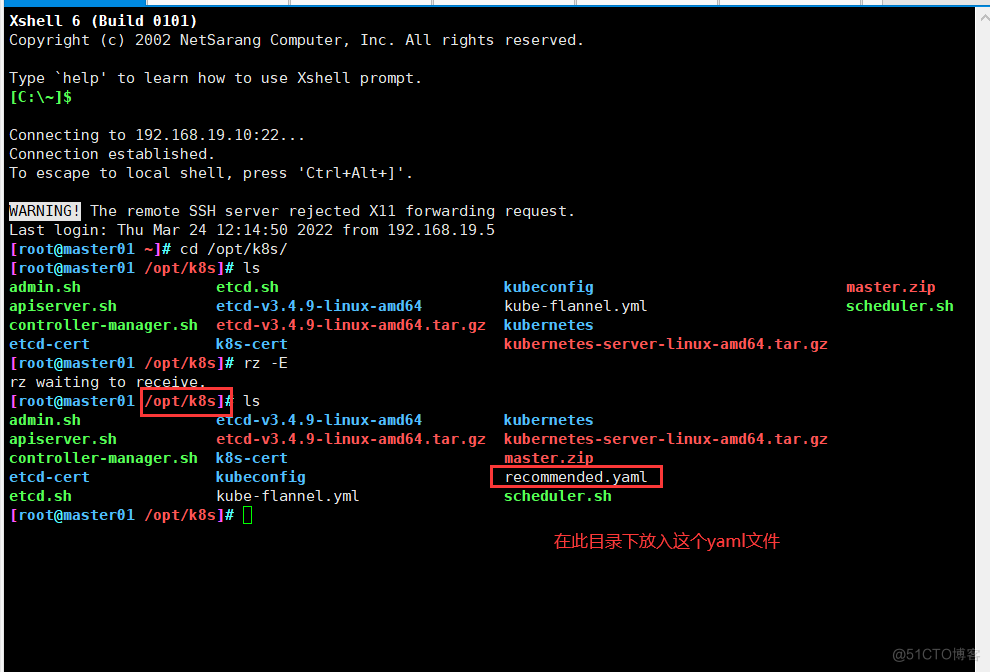

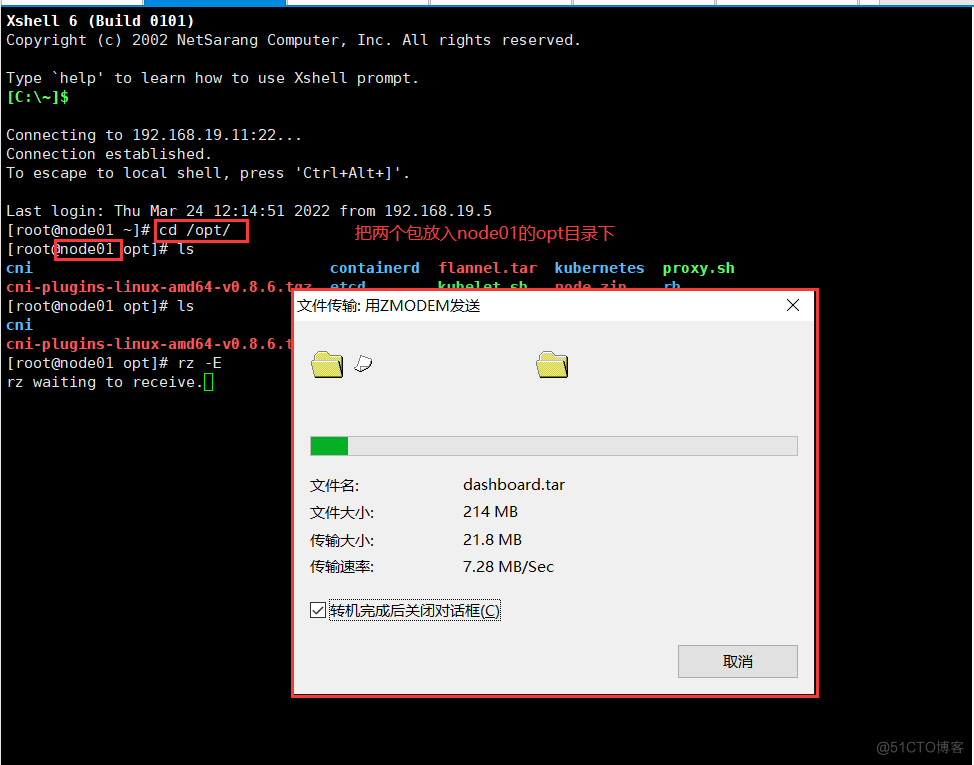

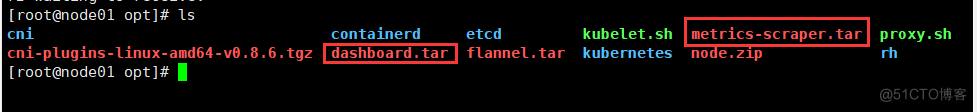

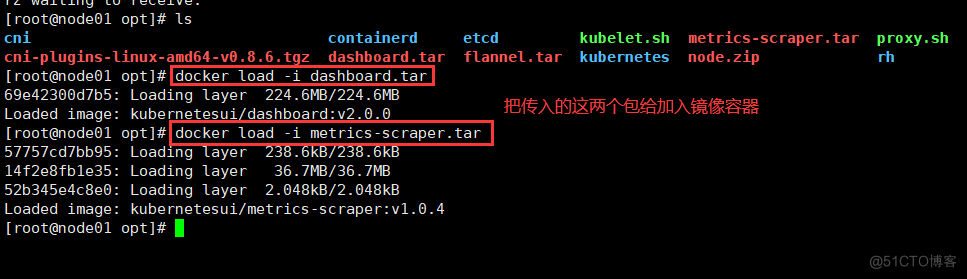

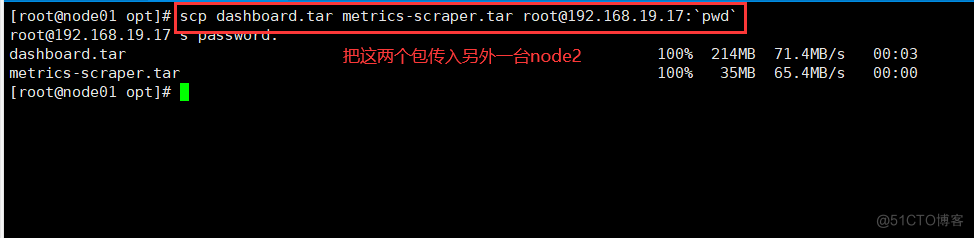

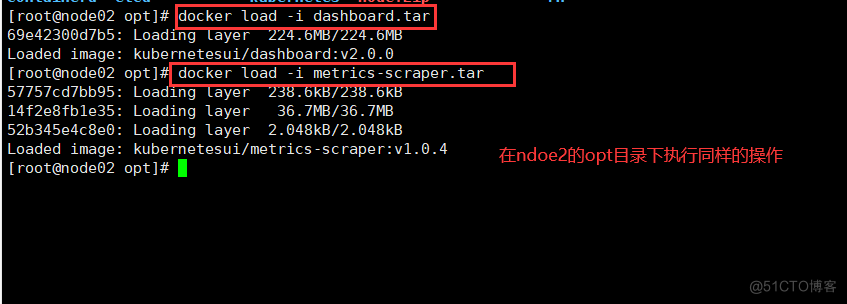

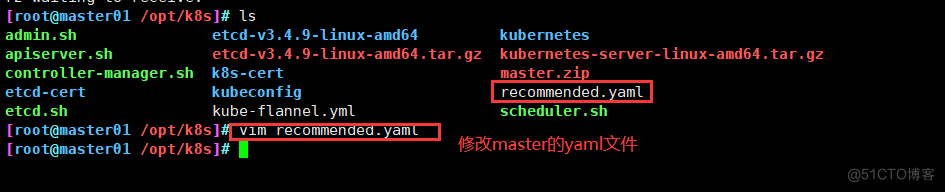

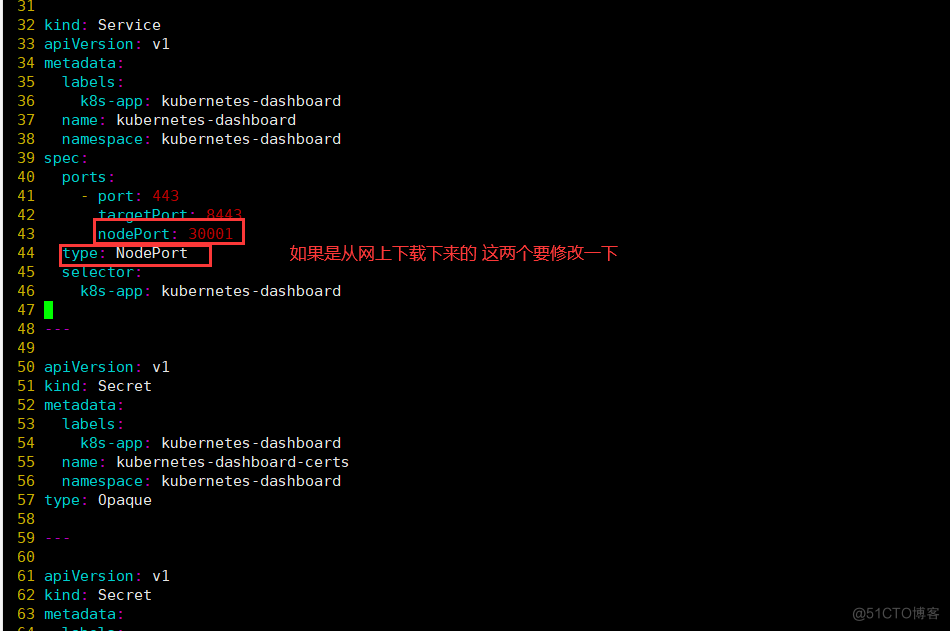

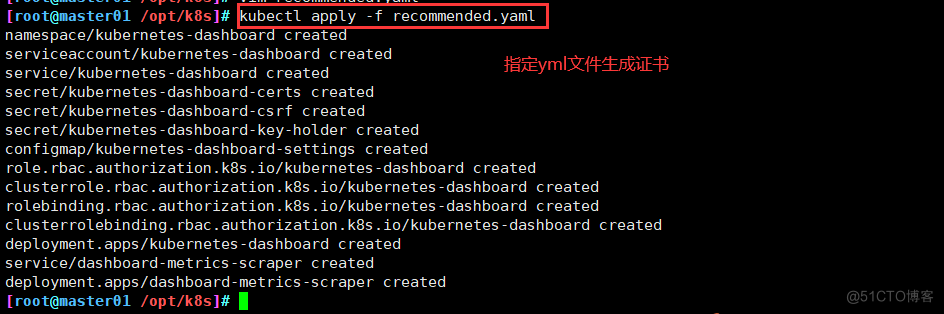

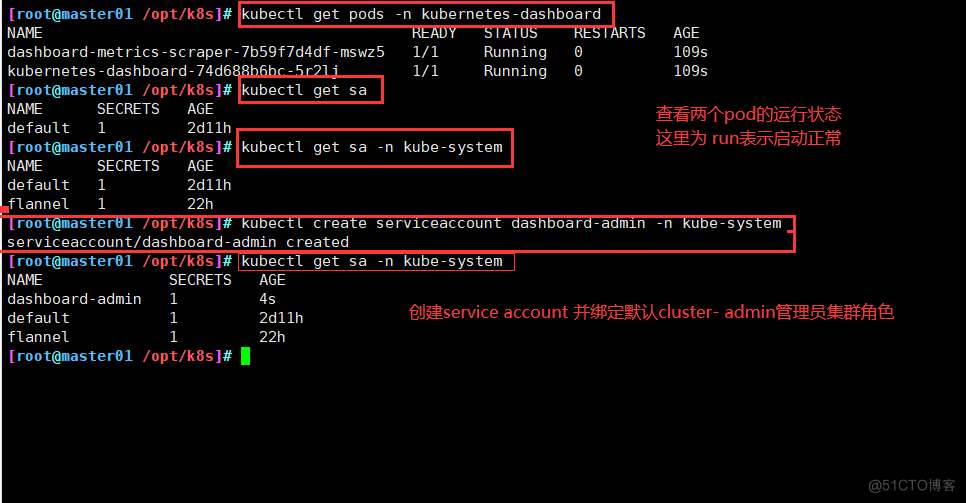

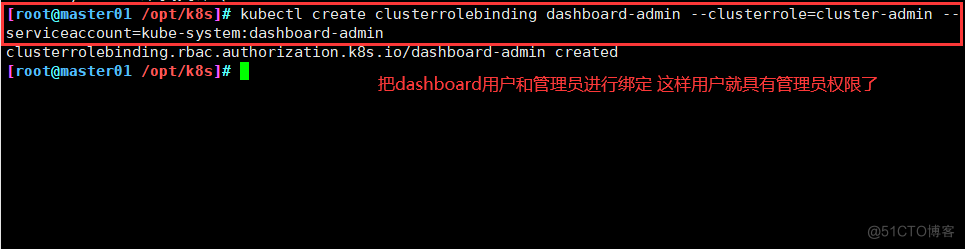

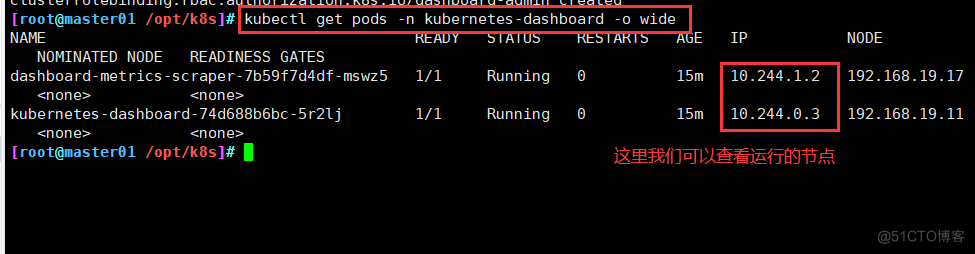

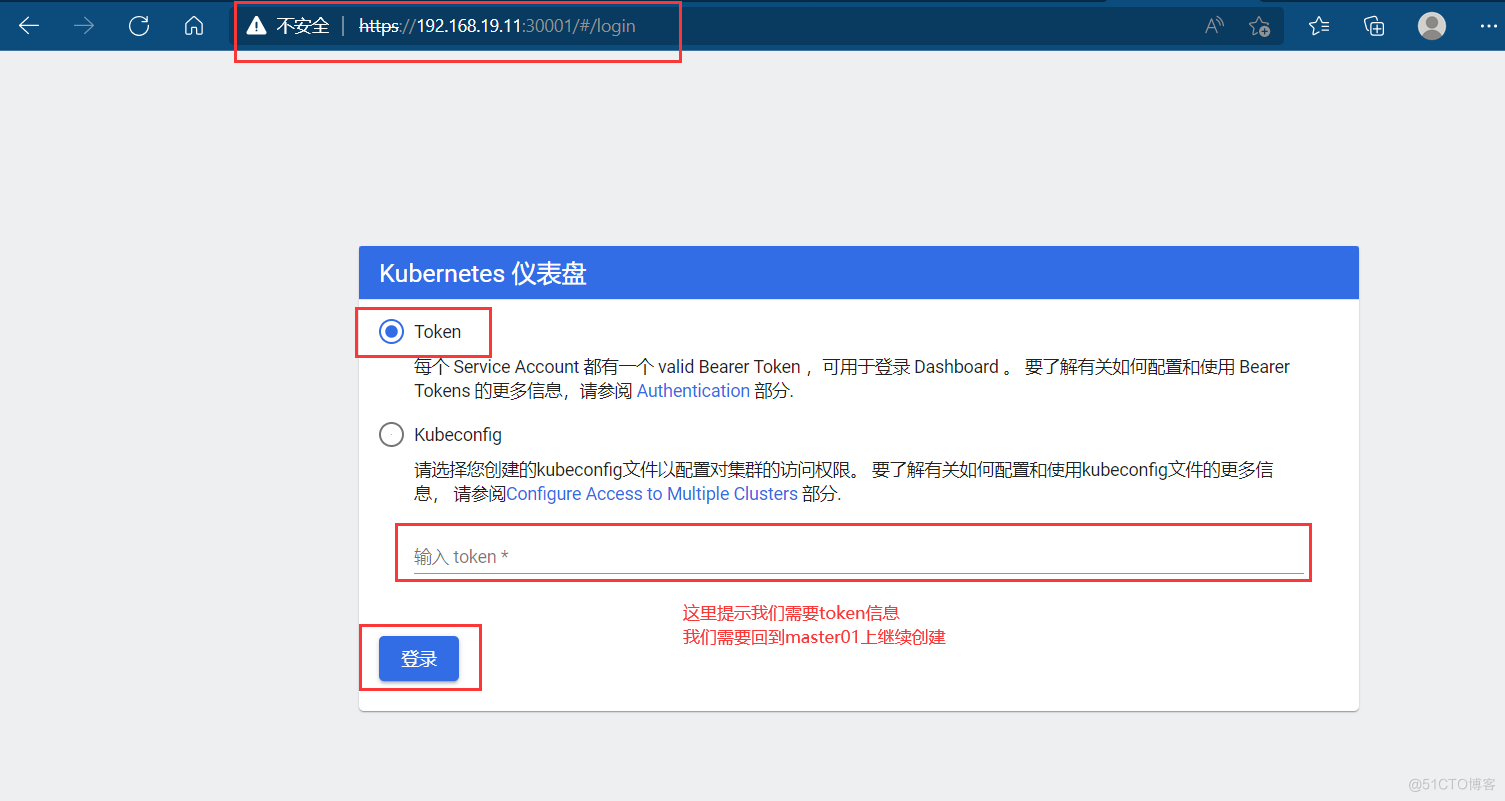

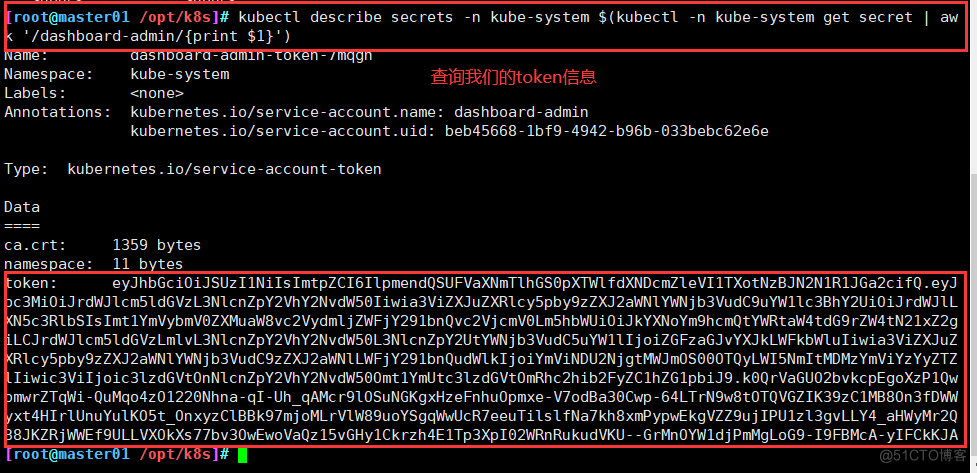

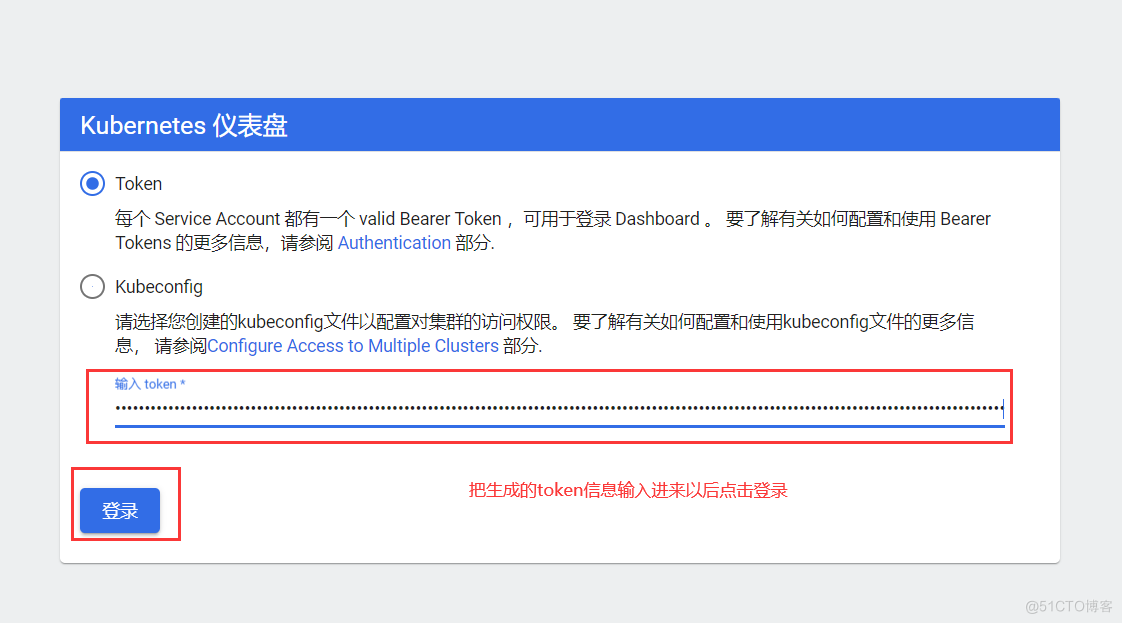

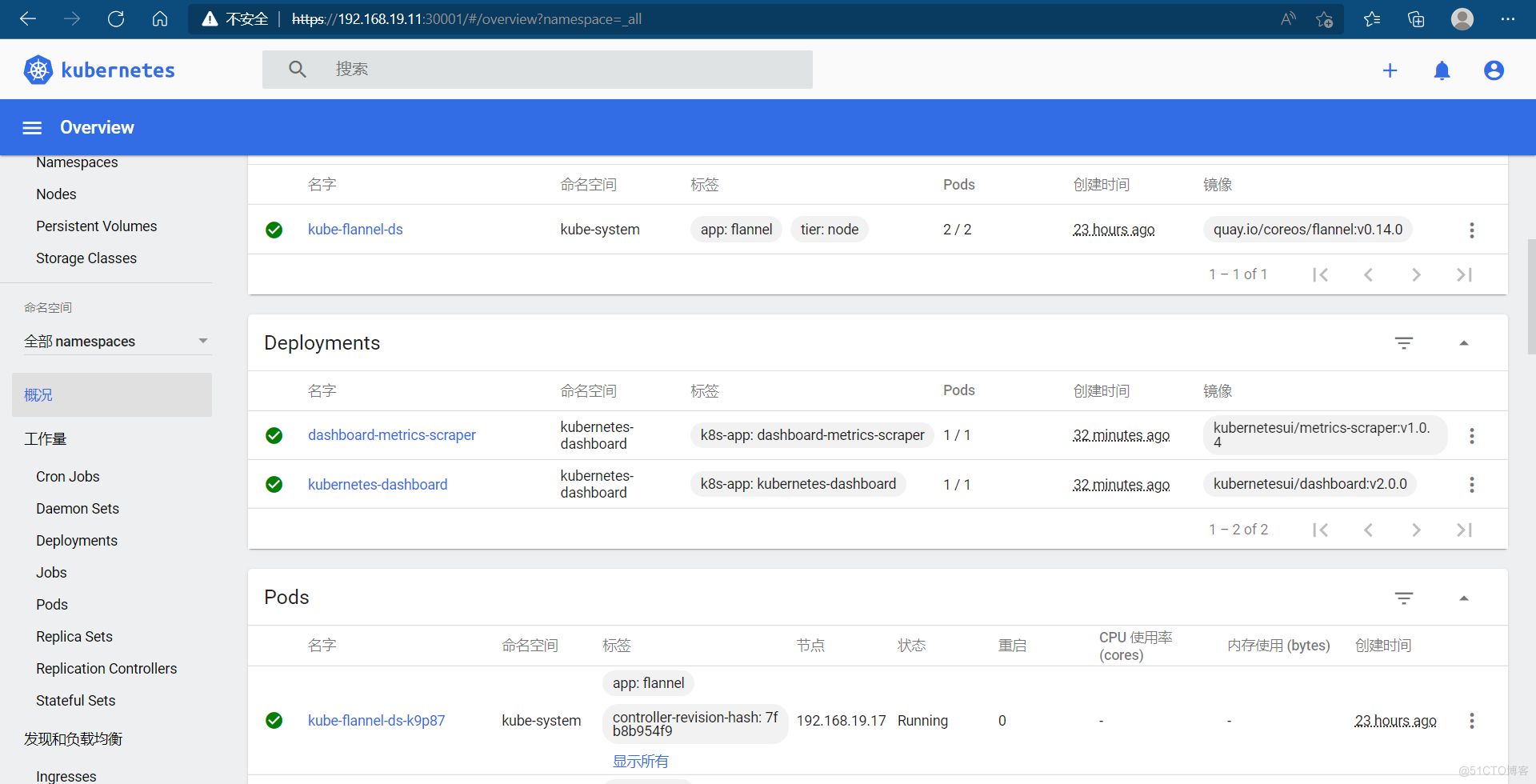

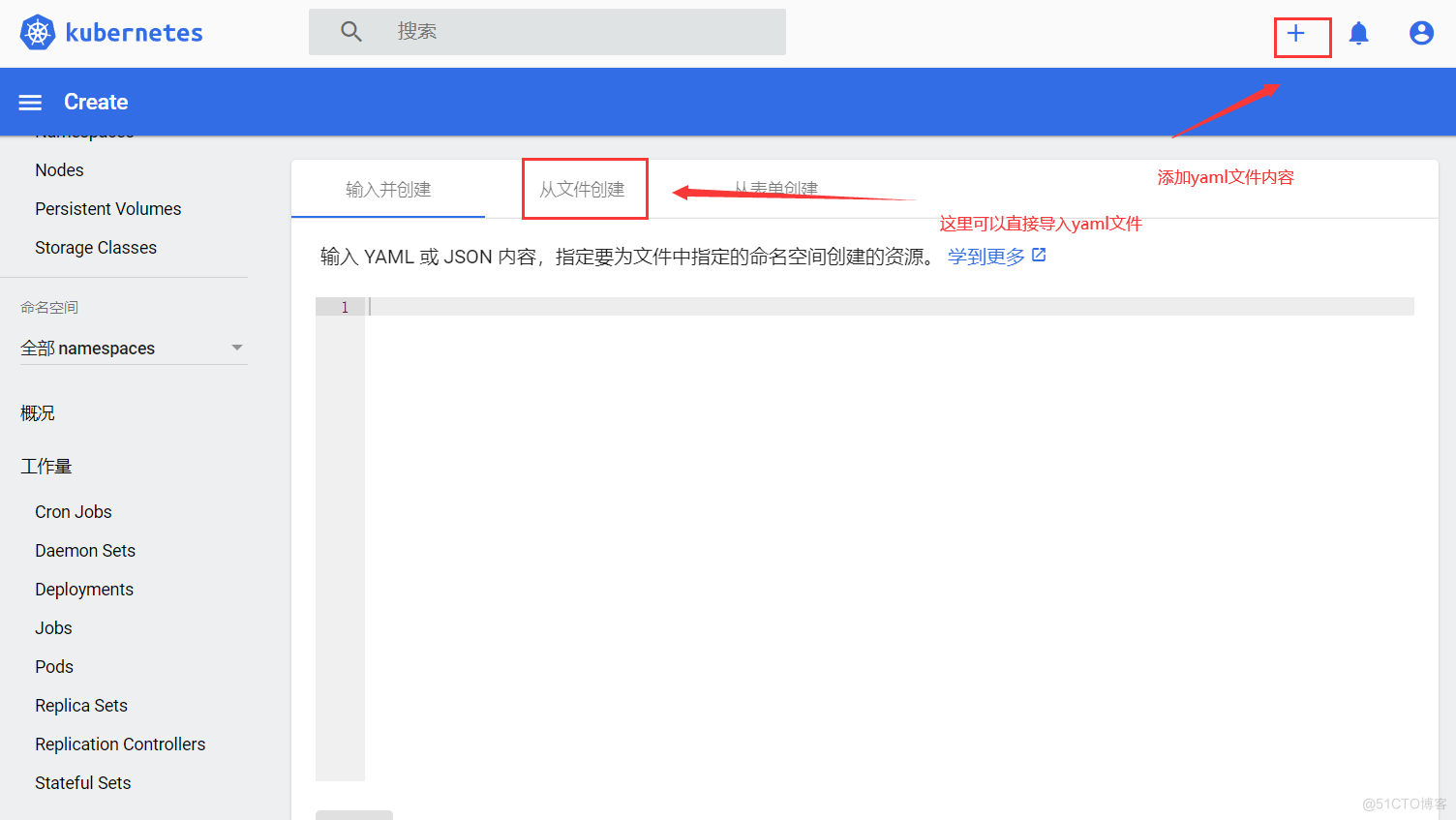

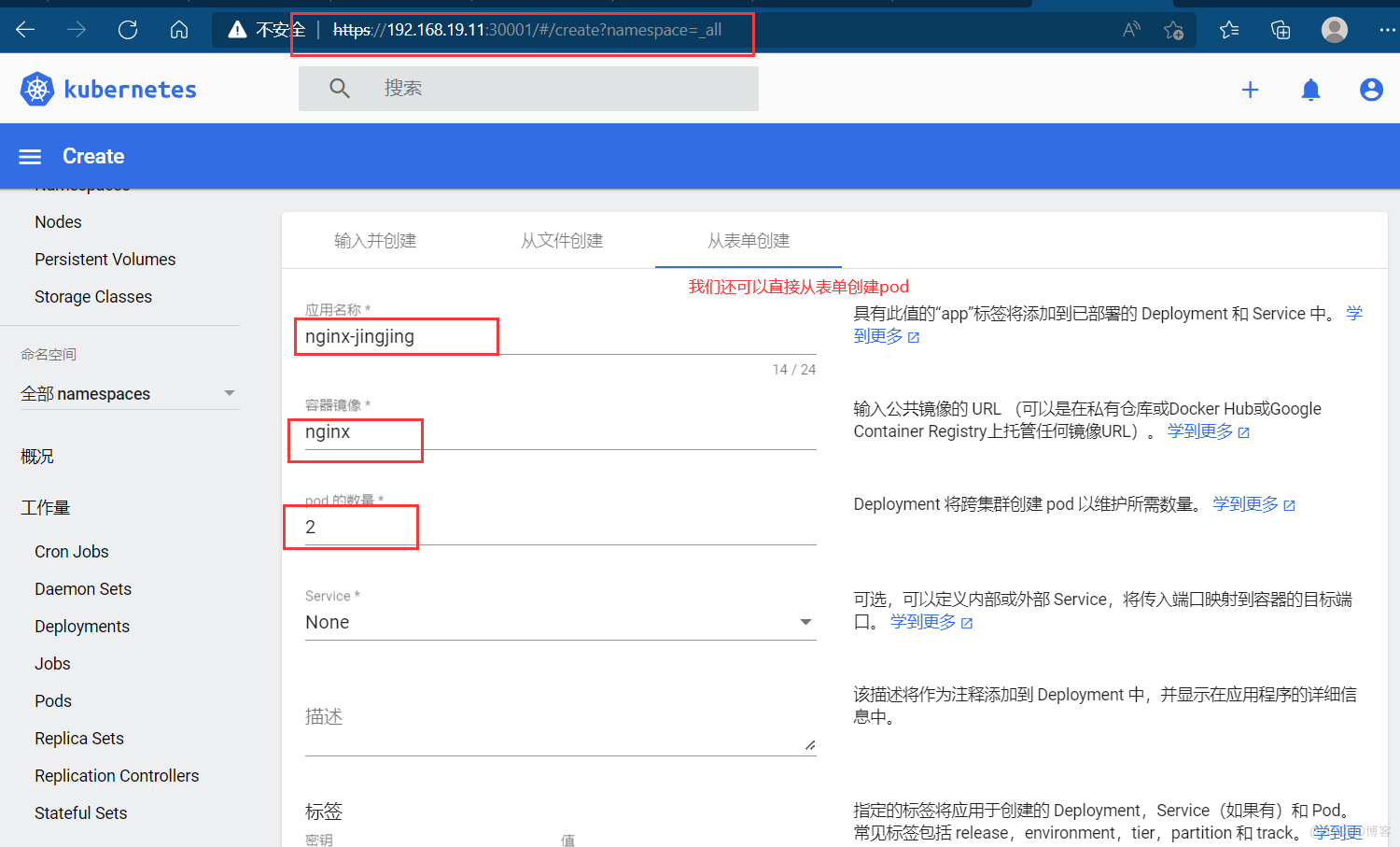

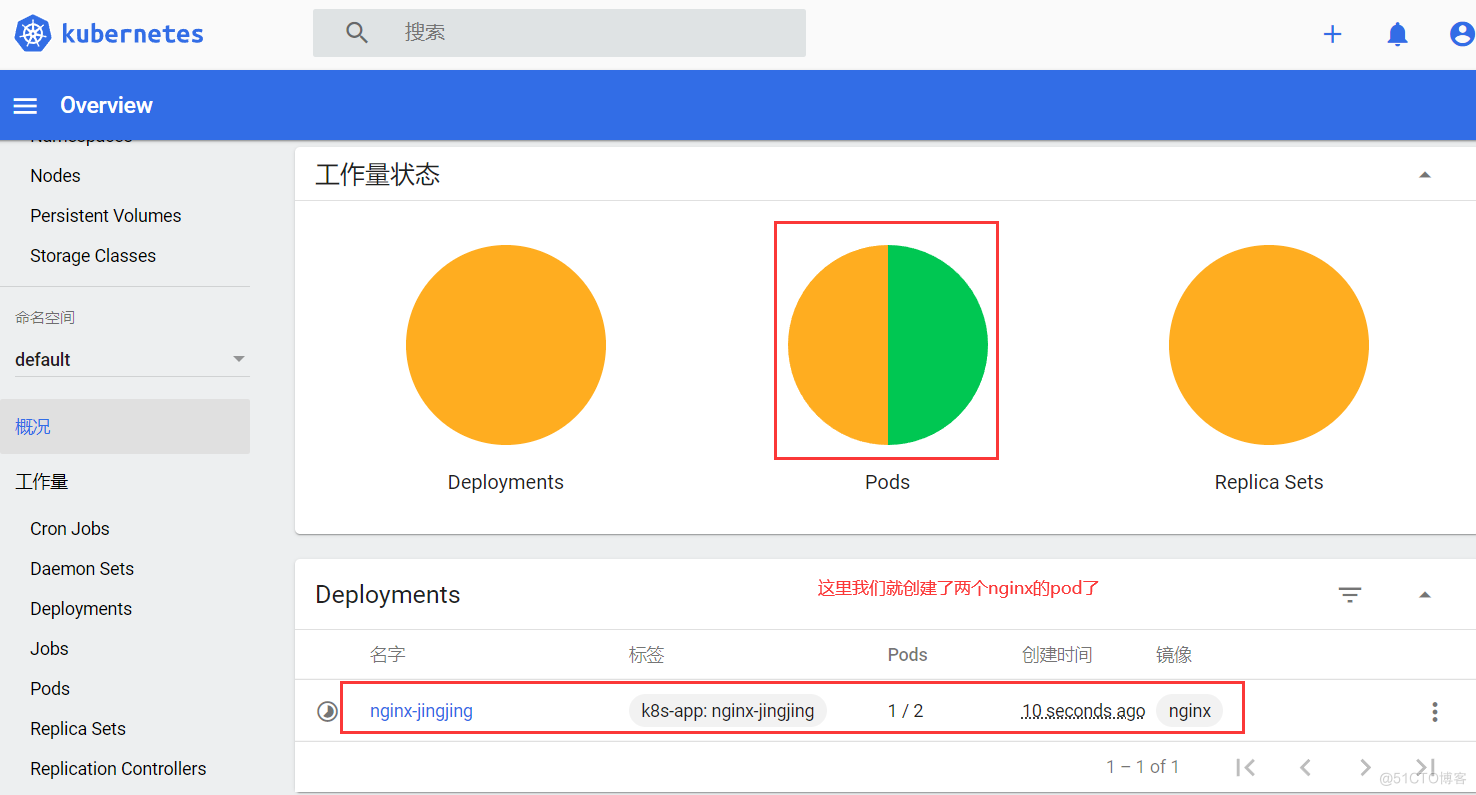

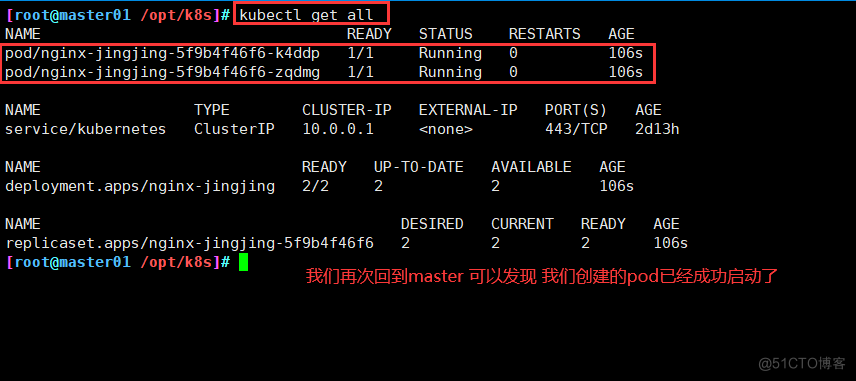

Perform the following on the master01 node.

Place the yaml file in the k8s working directory by uploading recommended.yaml into it:

    cd /opt/k8s

The core files are available from the official repository at https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

    dashboard-configmap.yaml   dashboard-rbac.yaml    dashboard-service.yaml
    dashboard-controller.yaml  dashboard-secret.yaml  k8s-admin.yaml  dashboard-cert.sh

What each file does:

1. dashboard-rbac.yaml: access control. It defines the permissions of each role and the role bindings (binding roles to service accounts), including the rules configured for each role.
2. dashboard-secret.yaml: provides the token used to access the API server (in my understanding, a security authentication mechanism).
3. dashboard-configmap.yaml: the configuration template for the Dashboard. A ConfigMap injects configuration data into containers, keeping the application's configuration decoupled from the image contents.
4. dashboard-controller.yaml: creates the controller and the service account that manage the Pod replicas.
5. dashboard-service.yaml: exposes the service running in the containers for external access.

On node01, upload the two image archives metrics-scraper.tar and dashboard.tar into /opt/, load them, and copy them to node02:

    cd /opt/
    docker load -i dashboard.tar
    docker load -i metrics-scraper.tar
    scp dashboard.tar metrics-scraper.tar root@192.168.19.17:`pwd`

On node02:

    cd /opt/
    docker load -i dashboard.tar
    docker load -i metrics-scraper.tar

On the master, edit the Service section of recommended.yaml, adding `nodePort: 30001` to the port entry and `type: NodePort` to the spec:

    cd /opt/k8s/
    vim recommended.yaml

    nodePort: 30001
    type: NodePort

Apply the file and check that both Pods have started:

    kubectl apply -f recommended.yaml
    kubectl get pods -n kubernetes-dashboard

Create a service account and bind it to the default cluster-admin cluster role:

    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
    kubectl get pods -n kubernetes-dashboard -o wide
    kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Use the token from the output to log in to the Dashboard at https://NodeIP:30001
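The `kubectl describe secrets` output contains several fields, and only the `token:` value is needed for the login page. The following sketch filters it out, assuming the usual `token:      <value>` line format of the describe output; `extract_token` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl describe secrets ...` output on stdin and
# print only the value of the first "token:" field.
# Usage: kubectl describe secrets -n kube-system <secret-name> | extract_token
extract_token() {
    # The token itself contains no whitespace, so it is the second awk field.
    awk '$1 == "token:" { print $2; exit }'
}
```

Piping the describe command from the step above into `extract_token` prints just the string to paste into the Dashboard login form.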