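The built-in Kubernetes StatefulSet assigns consecutive ordinals to Pods. With 3 replicas, for example, the Pods are pod-0, pod-1, and pod-2. Scaling can only add or remove Pods at the tail: scaling out to 4 replicas adds pod-3, and scaling in to 2 replicas removes pod-2.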
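As a small illustration of this tail-only behavior, the following shell sketch prints the Pod names a built-in StatefulSet would use for a given replica count. The function name `sts_pod_names` and the base name `pod` are made up for this example.

```shell
# Print the Pod names of a built-in StatefulSet: <name>-0 .. <name>-(replicas-1).
# sts_pod_names is a hypothetical helper used only for illustration.
sts_pod_names() {
  name=$1
  replicas=$2
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${name}-${i}"
    i=$((i + 1))
  done
}

sts_pod_names pod 3   # pod-0, pod-1, pod-2
sts_pod_names pod 4   # scaling out appends pod-3 at the tail
sts_pod_names pod 2   # scaling in drops pod-2 from the tail
```

Whatever the replica count, the set of ordinals is always the contiguous range starting at 0, which is why a Pod in the middle cannot be removed on its own.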

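With local storage, Pods are bound to the storage resources of their Nodes and cannot be rescheduled freely. The built-in StatefulSet cannot handle two cases: removing a Pod in the middle of the sequence so that its Node can be maintained when no other Node is available to migrate to, and deleting a failed Pod and starting a replacement with a different ordinal.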

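The enhanced StatefulSet controller (Advanced StatefulSet) is built on the built-in StatefulSet and adds the ability to control Pod ordinals freely. This article describes how to use it with TiDB Operator.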

Enabling

Load the Advanced StatefulSet CRD manifest:

- Kubernetes versions earlier than 1.16:

kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/v1.3.9/manifests/advanced-statefulset-crd.v1beta1.yaml

- Kubernetes 1.16 and later:

kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/v1.3.9/manifests/advanced-statefulset-crd.v1.yaml
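The choice between the two manifests can be sketched as a small shell helper. `crd_manifest_url` is a hypothetical function, not part of TiDB Operator; it assumes a 1.x cluster and takes the server's minor version as its argument (for example, 21 for v1.21).

```shell
# Pick the Advanced StatefulSet CRD manifest for a Kubernetes 1.<minor> cluster.
# crd_manifest_url is a hypothetical helper; the URLs are the two listed above.
crd_manifest_url() {
  minor=$1
  base="https://raw.githubusercontent.com/pingcap/tidb-operator/v1.3.9/manifests"
  if [ "$minor" -lt 16 ]; then
    echo "${base}/advanced-statefulset-crd.v1beta1.yaml"
  else
    echo "${base}/advanced-statefulset-crd.v1.yaml"
  fi
}

crd_manifest_url 15   # prints the v1beta1 manifest URL
crd_manifest_url 21   # prints the v1 manifest URL
```

The result can then be passed to kubectl, for example `kubectl apply -f "$(crd_manifest_url 21)"`.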

For example (this run applies the v1beta1 manifest; the deprecation warning in the output indicates that the cluster is v1.16 or later, where the v1 manifest is the appropriate choice):

[root@k8s-master tidb]# kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/v1.3.9/manifests/advanced-statefulset-crd.v1beta1.yaml
Warning: apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
customresourcedefinition.apiextensions.k8s.io/statefulsets.apps.pingcap.com configured

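Enable the AdvancedStatefulSet feature in the values.yaml of the TiDB Operator chart.

Change 1: turn on the feature gate and have the chart create the controller:

features:
  - AdvancedStatefulSet=true
advancedStatefulset:
  create: true

Change 2: the complete advancedStatefulset block, which extends the one in Change 1 (the key should appear only once in the file):

advancedStatefulset:
  create: true
  image: pingcap/advanced-statefulset:v0.4.0
  imagePullPolicy: IfNotPresent
  serviceAccount: advanced-statefulset-controller
  logLevel: 4
  replicas: 1
  resources:
    limits:
      cpu: 500m
      memory: 300Mi
    requests:
      cpu: 200m
      memory: 50Mi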

A complete worked example follows.

Generate the values file, edit it, and review the result:

[root@k8s-master tidb]# helm inspect values pingcap/tidb-operator --version=v1.3.9 > /home/tidb/tidb-operator/values-tidb-operator.yaml
[root@k8s-master tidb]# vim tidb-operator/values-tidb-operator.yaml
[root@k8s-master tidb-operator]# cat values-tidb-operator.yaml

# Default values for tidb-operator

# clusterScoped is whether tidb-operator should manage kubernetes cluster wide tidb clusters
# Also see rbac.create, controllerManager.serviceAccount, scheduler.create and controllerManager.clusterPermissions.
clusterScoped: true

# Also see clusterScoped and controllerManager.serviceAccount
rbac:
  create: true

# timezone is the default system timzone
timezone: UTC

# operatorImage is TiDB Operator image
operatorImage: pingcap/tidb-operator:v1.3.9
imagePullPolicy: IfNotPresent
# imagePullSecrets: []

# tidbBackupManagerImage is tidb backup manager image
tidbBackupManagerImage: pingcap/tidb-backup-manager:v1.3.9

#
# Enable or disable tidb-operator features:
#
#   StableScheduling (default: true)
#     Enable stable scheduling of tidb servers.
#
#   AdvancedStatefulSet (default: false)
#     If enabled, tidb-operator will use AdvancedStatefulSet to manage pods
#     instead of Kubernetes StatefulSet.
#     It's ok to turn it on if this feature is not enabled. However it's not ok
#     to turn it off when the tidb-operator already uses AdvancedStatefulSet to
#     manage pods. This is in alpha phase.
#
features:
  - AdvancedStatefulSet=true
# - AdvancedStatefulSet=false
# - StableScheduling=true
# - AutoScaling=false

appendReleaseSuffix: false

controllerManager:
  create: true
  # With rbac.create=false, the user is responsible for creating this account
  # With rbac.create=true, this service account will be created
  # Also see rbac.create and clusterScoped
  serviceAccount: tidb-controller-manager

  # clusterPermissions are some cluster scoped permissions that will be used even if `clusterScoped: false`.
  # the default value of these fields is `true`. if you want them to be `false`, you MUST set them to `false` explicitly.
  clusterPermissions:
    nodes: true
    persistentvolumes: true
    storageclasses: true

  logLevel: 2
  replicas: 1
  resources:
    requests:
      cpu: 80m
      memory: 50Mi
  # # REF: https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/
  # priorityClassName: system-cluster-critical
  #

  # REF: https://pkg.go.dev/k8s.io/client-go/tools/leaderelection#LeaderElectionConfig
  ## leaderLeaseDuration is the duration that non-leader candidates will wait to force acquire leadership
  # leaderLeaseDuration: 15s
  ## leaderRenewDeadline is the duration that the acting master will retry refreshing leadership before giving up
  # leaderRenewDeadline: 10s
  ## leaderRetryPeriod is the duration the LeaderElector clients should wait between tries of actions
  # leaderRetryPeriod: 2s

  ## number of workers that are allowed to sync concurrently. default 5
  # workers: 5

  # autoFailover is whether tidb-operator should auto failover when failure occurs
  autoFailover: true
  # pd failover period default(5m)
  pdFailoverPeriod: 5m
  # tikv failover period default(5m)
  tikvFailoverPeriod: 5m
  # tidb failover period default(5m)
  tidbFailoverPeriod: 5m
  # tiflash failover period default(5m)
  tiflashFailoverPeriod: 5m
  # dm-master failover period default(5m)
  dmMasterFailoverPeriod: 5m
  # dm-worker failover period default(5m)
  dmWorkerFailoverPeriod: 5m
  ## affinity defines pod scheduling rules,affinity default settings is empty.
  ## please read the affinity document before set your scheduling rule:
  ## ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity
  affinity: {}
  ## nodeSelector ensure pods only assigning to nodes which have each of the indicated key-value pairs as labels
  ## ref:https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodeselector
  nodeSelector: {}
  ## Tolerations are applied to pods, and allow pods to schedule onto nodes with matching taints.
  ## refer to https://kubernetes.io/docs/concepts/configuration/taint-and-toleration
  tolerations: []
  # - key: node-role
  #   operator: Equal
  #   value: tidb-operator
  #   effect: "NoSchedule"
  ## Selector (label query) to filter on, make sure that this controller manager only manages the custom resources that match the labels
  ## refer to https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#equality-based-requirement
  selector: []
  # - canary-release=v1
  # - k1==v1
  # - k2!=v2
  # SecurityContext is security config of this component, it will set template.spec.securityContext
  # Refer to https://kubernetes.io/docs/tasks/configure-pod-container/security-context
  securityContext: {}
  # runAsUser: 1000
  # runAsGroup: 2000
  # fsGroup: 2000
  # PodAnnotations will set template.metadata.annotations
  # Refer to https://kubernetes.io/docs/concepts/overview/working-with-objects/annotations/
  podAnnotations: {}

scheduler:
  create: true
  # With rbac.create=false, the user is responsible for creating this account
  # With rbac.create=true, this service account will be created
  # Also see rbac.create and clusterScoped
  serviceAccount: tidb-scheduler
  logLevel: 2
  replicas: 1
  schedulerName: tidb-scheduler
  resources:
    limits:
      cpu: 250m
      memory: 150Mi
    requests:
      cpu: 80m
      memory: 50Mi
  kubeSchedulerImageName: k8s.gcr.io/kube-scheduler
  # This will default to matching your kubernetes version
  # kubeSchedulerImageTag:
  ## affinity defines pod scheduling rules,affinity default settings is empty.
  ## please read the affinity document before set your scheduling rule:
  ## ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity
  affinity: {}
  ## nodeSelector ensure pods only assigning to nodes which have each of the indicated key-value pairs as labels
  ## ref:https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodeselector
  nodeSelector: {}
  ## Tolerations are applied to pods, and allow pods to schedule onto nodes with matching taints.
  ## refer to https://kubernetes.io/docs/concepts/configuration/taint-and-toleration
  tolerations: []
  # - key: node-role
  #   operator: Equal
  #   value: tidb-operator
  #   effect: "NoSchedule"
  #
  # SecurityContext is security config of this component, it will set template.spec.securityContext
  # Refer to https://kubernetes.io/docs/tasks/configure-pod-container/security-context
  securityContext: {}
  # runAsUser: 1000
  # runAsGroup: 2000
  # fsGroup: 2000
  # PodAnnotations will set template.metadata.annotations
  # Refer to https://kubernetes.io/docs/concepts/overview/working-with-objects/annotations/
  podAnnotations: {}
  # additional annotations for the configmap, mainly to prevent spinnaker versioning the cm
  configmapAnnotations: {}

# When AdvancedStatefulSet feature is enabled, you must install
# AdvancedStatefulSet controller.
# Note that AdvancedStatefulSet CRD must be installed manually via the following
# command:
#   kubectl apply -f manifests/advanced-statefulset-crd.v1beta1.yaml # k8s version < 1.16.0
#   kubectl apply -f manifests/advanced-statefulset-crd.v1.yaml # k8s version >= 1.16.0
advancedStatefulset:
  create: true
  image: pingcap/advanced-statefulset:v0.4.0
  imagePullPolicy: IfNotPresent
  serviceAccount: advanced-statefulset-controller
  logLevel: 4
  replicas: 1
  resources:
    limits:
      cpu: 500m
      memory: 300Mi
    requests:
      cpu: 200m
      memory: 50Mi
  ## affinity defines pod scheduling rules,affinity default settings is empty.
  ## please read the affinity document before set your scheduling rule:
  ## ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity
  affinity: {}
  ## nodeSelector ensure pods only assigning to nodes which have each of the indicated key-value pairs as labels
  ## ref:https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodeselector
  nodeSelector: {}
  ## Tolerations are applied to pods, and allow pods to schedule onto nodes with matching taints.
  ## refer to https://kubernetes.io/docs/concepts/configuration/taint-and-toleration
  tolerations: []
  # - key: node-role
  #   operator: Equal
  #   value: tidb-operator
  #   effect: "NoSchedule"
  #
  # SecurityContext is security config of this component, it will set template.spec.securityContext
  # Refer to https://kubernetes.io/docs/tasks/configure-pod-container/security-context
  securityContext: {}
  # runAsUser: 1000
  # runAsGroup: 2000
  # fsGroup: 2000

admissionWebhook:
  create: false
  replicas: 1
  serviceAccount: tidb-admission-webhook
  logLevel: 2
  rbac:
    create: true
  ## validation webhook would check the given request for the specific resource and operation
  validation:
    ## statefulsets hook would check requests for updating tidbcluster's statefulsets
    ## If enabled it, the statefulsets of tidbcluseter would update in partition by tidbcluster's annotation
    statefulSets: false
    ## validating hook validates the correctness of the resources under pingcap.com group
    pingcapResources: false
  ## mutation webhook would mutate the given request for the specific resource and operation
  mutation:
    ## defaulting hook set default values for the the resources under pingcap.com group
    pingcapResources: true
  ## failurePolicy are applied to ValidatingWebhookConfiguration which affect tidb-admission-webhook
  ## refer to https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#failure-policy
  failurePolicy:
    ## the validation webhook would check the request of the given resources.
    ## If the kubernetes api-server version >= 1.15.0, we recommend the failurePolicy as Fail, otherwise, as Ignore.
    validation: Ignore
    ## the mutation webhook would mutate the request of the given resources.
    ## If the kubernetes api-server version >= 1.15.0, we recommend the failurePolicy as Fail, otherwise, as Ignore.
    mutation: Ignore
  ## tidb-admission-webhook deployed as kubernetes apiservice server
  ## refer to https://github.com/openshift/generic-admission-server
  apiservice:
    ## apiservice config
    ## refer to https://kubernetes.io/docs/tasks/access-kubernetes-api/configure-aggregation-layer/#contacting-the-extension-apiserver
    insecureSkipTLSVerify: true
    ## The Secret includes the TLS ca, cert and key for the `tidb-admission-webook.<Release Namespace>.svc` Service.
    ## If insecureSkipTLSVerify is true, this would be ignored.
    ## You can create the tls secret by:
    ## kubectl create secret generic <secret-name> --namespace=<release-namespace> --from-file=tls.crt=<path-to-cert> --from-file=tls.key=<path-to-key> --from-file=ca.crt=<path-to-ca>
    tlsSecret: ""
    ## The caBundle for the webhook apiservice, you could get it by the secret you created previously:
    ## kubectl get secret <secret-name> --namespace=<release-namespace> -o=jsonpath='{.data.ca\.crt}'
    caBundle: ""
  ## certProvider indicate the key and cert for the webhook configuration to communicate with `kubernetes.default` service.
  ## If your kube-apiserver's version >= 1.13.0, you can leave cabundle empty and the kube-apiserver
  ## would trust the roots on the apiserver.
  ## refer to https://github.com/kubernetes/api/blob/master/admissionregistration/v1/types.go#L529
  ## or you can get the cabundle by:
  ## kubectl get configmap -n kube-system extension-apiserver-authentication -o=jsonpath='{.data.client-ca-file}' | base64 | tr -d '\n'
  cabundle: ""
  # SecurityContext is security config of this component, it will set template.spec.securityContext
  # Refer to https://kubernetes.io/docs/tasks/configure-pod-container/security-context
  securityContext: {}
  # runAsUser: 1000
  # runAsGroup: 2000
  # fsGroup: 2000
  ## nodeSelector ensures that pods are only scheduled to nodes that have each of the indicated key-value pairs as labels
  ## ref:https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodeselector
  nodeSelector: {}
  ## Tolerations are applied to pods, and allow pods to schedule onto nodes with matching taints.
  ## refer to https://kubernetes.io/docs/concepts/configuration/taint-and-toleration
  tolerations: []
  # - key: node-role
  #   operator: Equal
  #   value: tidb-operator
  #   effect: "NoSchedule"
  #

Apply the updated TiDB Operator configuration:

[root@k8s-master tidb]# helm upgrade tidb-operator pingcap/tidb-operator --namespace=tidb-admin --version=v1.3.9 -f ./tidb-operator/values-tidb-operator.yaml && kubectl get po -n tidb-admin -l app.kubernetes.io/name=tidb-operator
Release "tidb-operator" has been upgraded. Happy Helming!
NAME: tidb-operator
LAST DEPLOYED: Fri Dec 9 16:31:42 2022
NAMESPACE: tidb-admin
STATUS: deployed
REVISION: 7
TEST SUITE: None
NOTES:
Make sure tidb-operator components are running:

    kubectl get pods --namespace tidb-admin -l app.kubernetes.io/instance=tidb-operator

NAME                                               READY   STATUS    RESTARTS   AGE
advanced-statefulset-controller-67885c5dd9-lfmbl   1/1     Running   0          27m
tidb-controller-manager-d5fc64f85-nlv4k            1/1     Running   1          34m
tidb-scheduler-566f48d4bd-82rrd                    2/2     Running   0          34m

[root@k8s-master tidb]# kubectl get pods --namespace tidb-admin -l app.kubernetes.io/instance=tidb-operator
NAME                                               READY   STATUS    RESTARTS   AGE
advanced-statefulset-controller-67885c5dd9-lfmbl   1/1     Running   0          28m
tidb-controller-manager-d5fc64f85-nlv4k            1/1     Running   1          34m
tidb-scheduler-566f48d4bd-82rrd                    2/2     Running   0          34m

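When the AdvancedStatefulSet feature is enabled, TiDB Operator converts the existing StatefulSet objects into AdvancedStatefulSet objects. The reverse is not supported: after the feature is turned off, TiDB Operator does not automatically convert AdvancedStatefulSet objects back into built-in Kubernetes StatefulSet objects.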

Usage

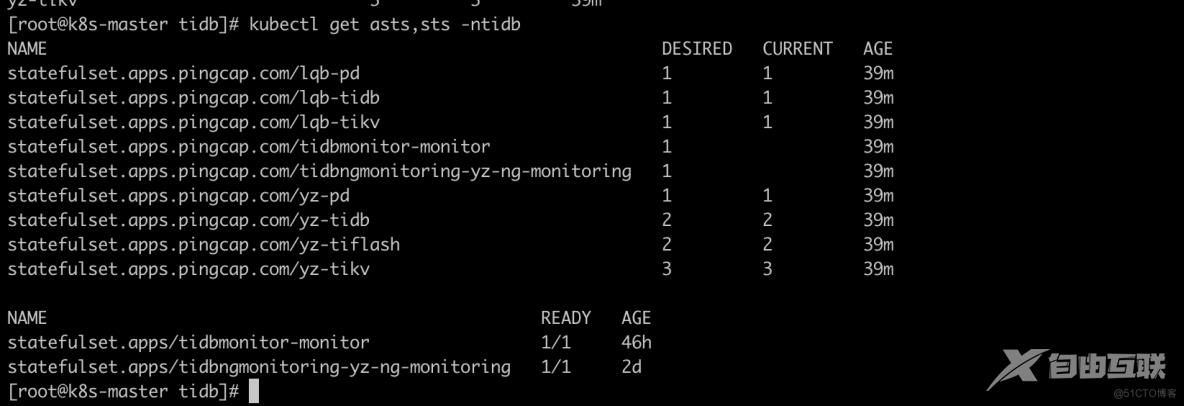

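Viewing AdvancedStatefulSet objects

An AdvancedStatefulSet has exactly the same data format as a StatefulSet, but it is implemented as a CRD with the short name asts. List the objects in a namespace as follows.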

[root@k8s-master tidb]# kubectl get asts -ntidb
NAME                                DESIRED   CURRENT   AGE
lqb-pd                              1         1         38m
lqb-tidb                            1         1         38m
lqb-tikv                            1         1         38m
tidbmonitor-monitor                 1                   38m
tidbngmonitoring-yz-ng-monitoring   1                   38m
yz-pd                               1         1         38m
yz-tidb                             2         2         38m
yz-tiflash                          2         2         38m
yz-tikv                             3         3         38m

[root@k8s-master tidb]# kubectl get sts -ntidb
NAME                                READY   AGE
tidbmonitor-monitor                 1/1     46h
tidbngmonitoring-yz-ng-monitoring   1/1     2d

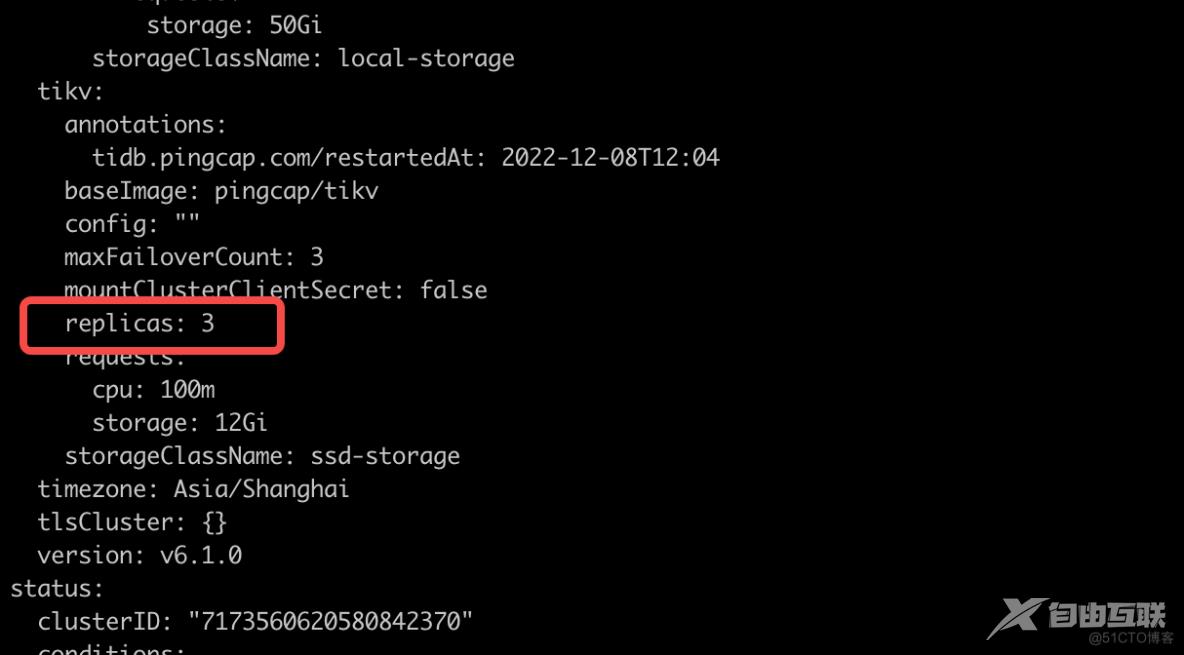

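Scaling in a specific Pod

When scaling in a TidbCluster with Advanced StatefulSet, you can do more than reduce the replica count: an annotation on the TidbCluster selects which Pod ordinal of the PD, TiKV, or TiDB component is removed.

metadata:
  annotations:
    tikv.tidb.pingcap.com/delete-slots: '[1]'

To scale in a specific Pod, change both fields together: the component's replicas and the delete-slots annotation.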
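One way to change both fields in a single step is a JSON merge patch. The sketch below only builds the patch text. `scale_in_patch` is a hypothetical helper, and it assumes the usual TidbCluster layout in which the replica count lives at `spec.<component>.replicas`.

```shell
# Build a JSON merge patch that sets the replica count and the delete-slots
# annotation of one component together.
# scale_in_patch is a hypothetical helper; arguments:
#   $1 component (pd, tikv or tidb), $2 new replica count, $3 slots as JSON, e.g. '[1]'
scale_in_patch() {
  component=$1
  replicas=$2
  slots=$3
  printf '{"metadata":{"annotations":{"%s.tidb.pingcap.com/delete-slots":"%s"}},"spec":{"%s":{"replicas":%s}}}\n' \
    "$component" "$slots" "$component" "$replicas"
}

# Example: go from 3 TiKV Pods to 2 by removing ordinal 1.
scale_in_patch tikv 2 '[1]'
```

The output could be applied with something like `kubectl patch tc <cluster-name> -n <namespace> --type merge -p "$(scale_in_patch tikv 2 '[1]')"`. Editing the object with `kubectl edit` achieves the same result.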

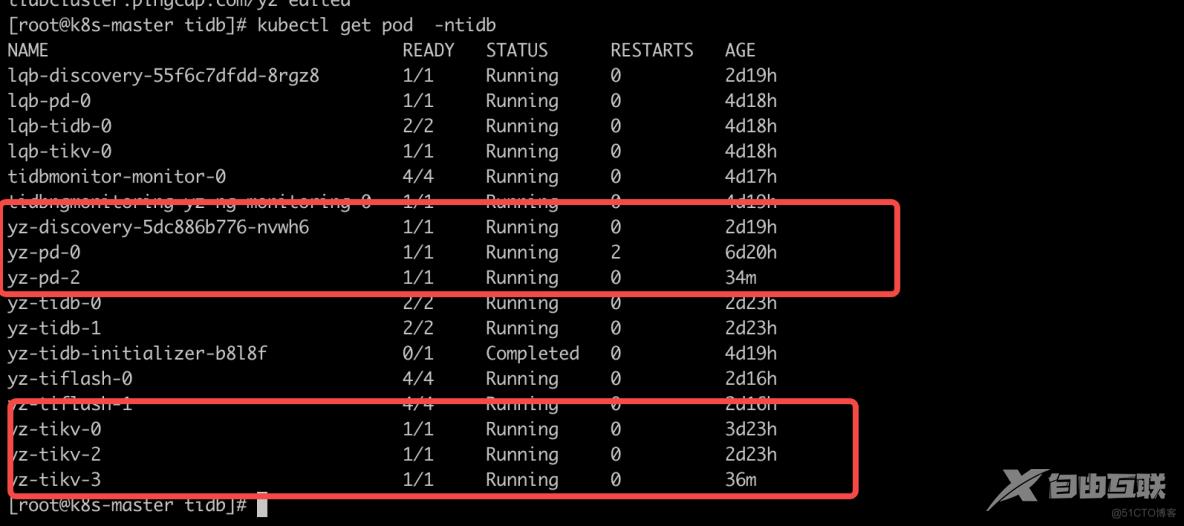

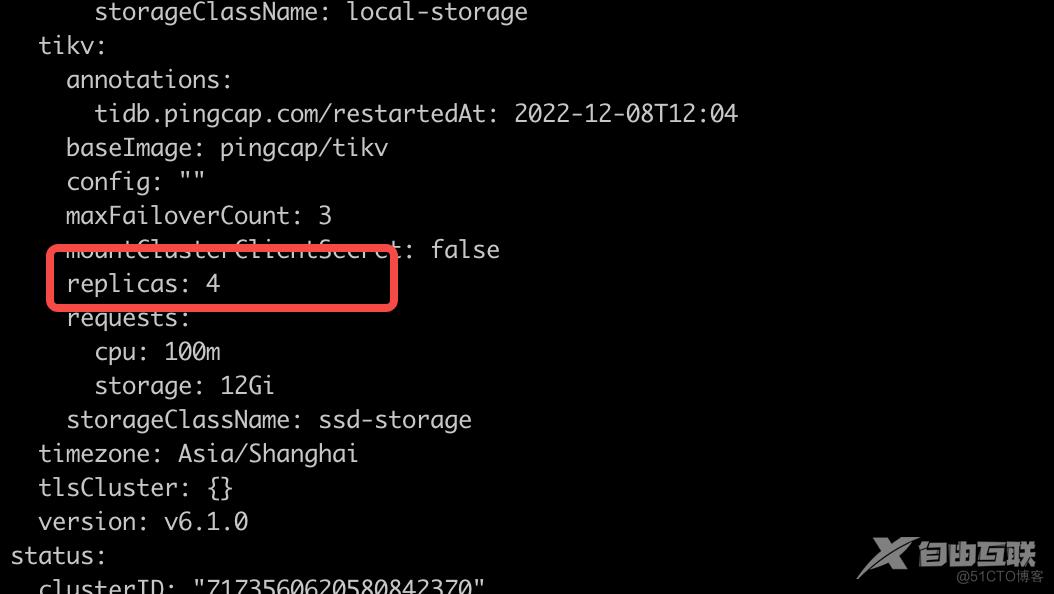

The following example removes TiKV Pod 1 and PD Pod 1.

Edit the cluster to scale in:

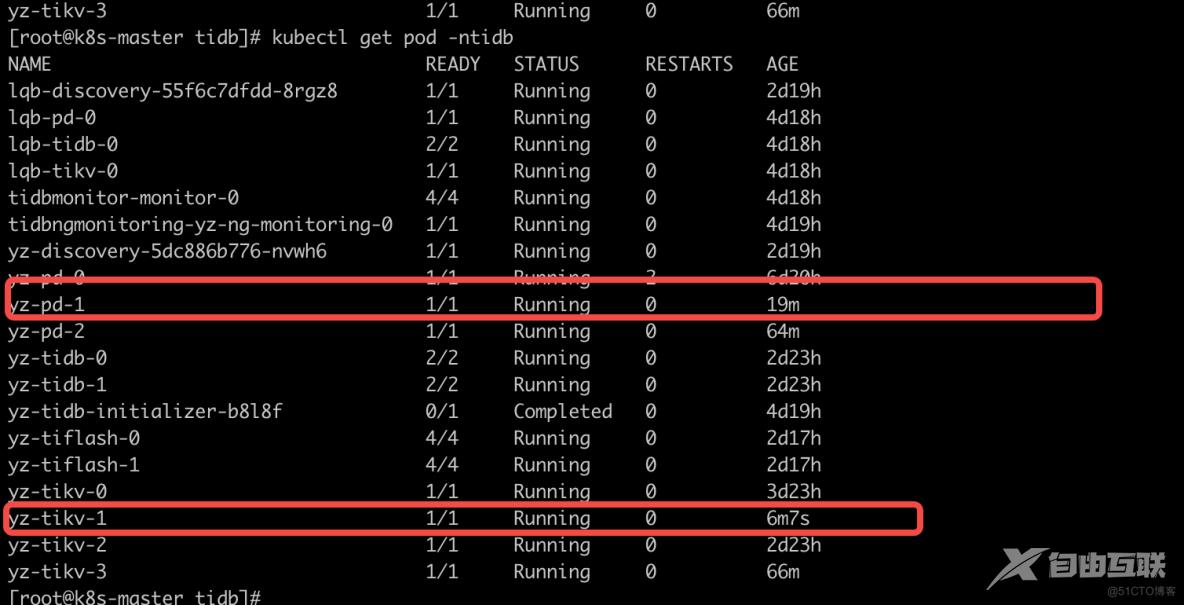

Check the result:

Summary

The supported annotations are:

- pd.tidb.pingcap.com/delete-slots: the ordinals of the PD Pods to delete.
- tidb.tidb.pingcap.com/delete-slots: the ordinals of the TiDB Pods to delete.
- tikv.tidb.pingcap.com/delete-slots: the ordinals of the TiKV Pods to delete.

The annotation value is a JSON array of integers, such as [0], [0,1], or [1,3].
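To see which Pods remain for a given replica count and delete-slots value, the selection rule can be sketched in shell. This assumes that the controller keeps the lowest ordinals not listed in delete-slots, which is how the feature is usually described; `pod_ordinals` is a hypothetical helper, and it takes the slots as a space-separated list rather than JSON to keep the parsing simple.

```shell
# Print the Pod ordinals that remain for a replica count and a set of deleted slots.
# Assumption: the lowest ordinals not in delete-slots are used.
# pod_ordinals is a hypothetical helper; $1 = replicas, $2 = slots such as "1 3".
pod_ordinals() {
  replicas=$1
  slots=$2
  out=""
  i=0
  count=0
  while [ "$count" -lt "$replicas" ]; do
    case " $slots " in
      *" $i "*) ;;                          # ordinal is listed in delete-slots: skip it
      *) out="${out:+$out }$i"
         count=$((count + 1)) ;;
    esac
    i=$((i + 1))
  done
  echo "$out"
}

pod_ordinals 3 ""     # 0 1 2
pod_ordinals 2 "1"    # 0 2
pod_ordinals 3 "1"    # 0 2 3
```

Under this assumption, keeping `[1]` in the annotation while raising replicas again would add a Pod at the next free ordinal instead of reusing ordinal 1.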

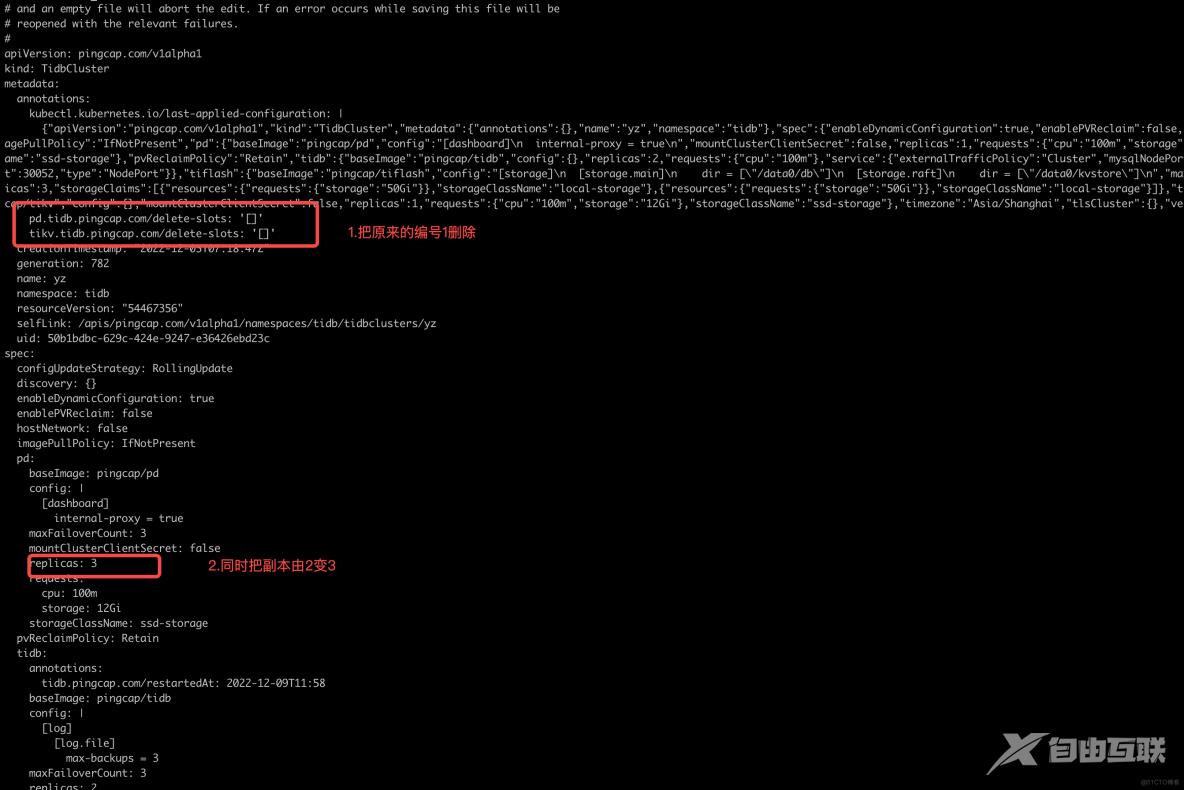

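Scaling out at a specific position

To scale out, reverse the steps above: increase replicas and remove the ordinals from the annotation array. The delete-slots annotation can be left empty or deleted entirely.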
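The reverse operation can also be written as a JSON merge patch, in which a null value removes a key. `scale_out_patch` below is a hypothetical helper that only prints the patch; it assumes the usual TidbCluster layout, with the replica count at `spec.<component>.replicas`.

```shell
# Build a JSON merge patch that raises the replica count and deletes the
# delete-slots annotation of one component (null removes the key).
# scale_out_patch is a hypothetical helper; $1 = component, $2 = new replica count.
scale_out_patch() {
  component=$1
  replicas=$2
  printf '{"metadata":{"annotations":{"%s.tidb.pingcap.com/delete-slots":null}},"spec":{"%s":{"replicas":%s}}}\n' \
    "$component" "$component" "$replicas"
}

# Example: return TiKV to 3 replicas and free the previously deleted slot.
scale_out_patch tikv 3
```

The output could be applied with something like `kubectl patch tc <cluster-name> -n <namespace> --type merge -p "$(scale_out_patch tikv 3)"`.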

Edit the cluster to scale out:

Check the result:

Source: "TiDB Enhanced StatefulSet Controller: Advanced StatefulSet", lqbyz's column, https://tidb.net/blog/bac3e058?shareId=1deec6ea
