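This article, part of an ELK (Elasticsearch, Logstash, Kibana) series, covers deploying Elasticsearch in cluster mode on three VMware virtual machines. All commands have been tested and verified in a production environment.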

1.1 Preparing the deployment environment

The software versions used in this article are:

Operating system

Ubuntu 22.04.1 LTS

Application software

Elasticsearch 8.5.1

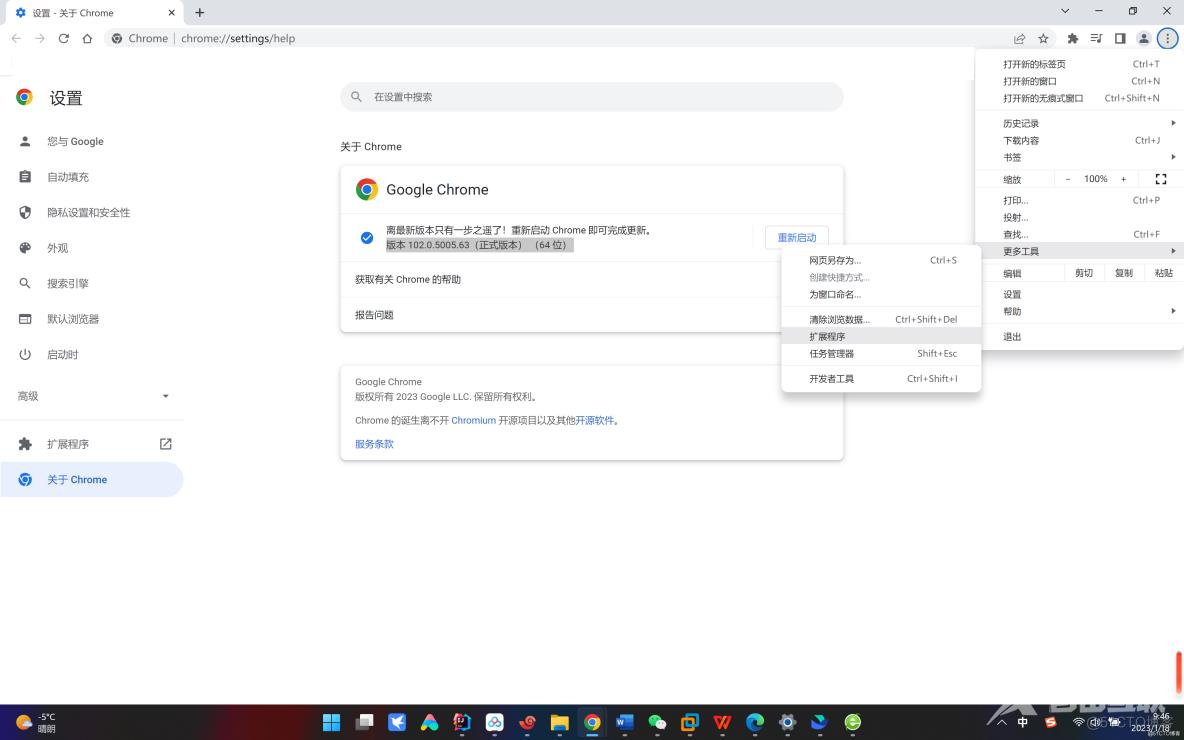

Browser version

Google Chrome 102.0.5005.63 (Official Build) (64-bit)

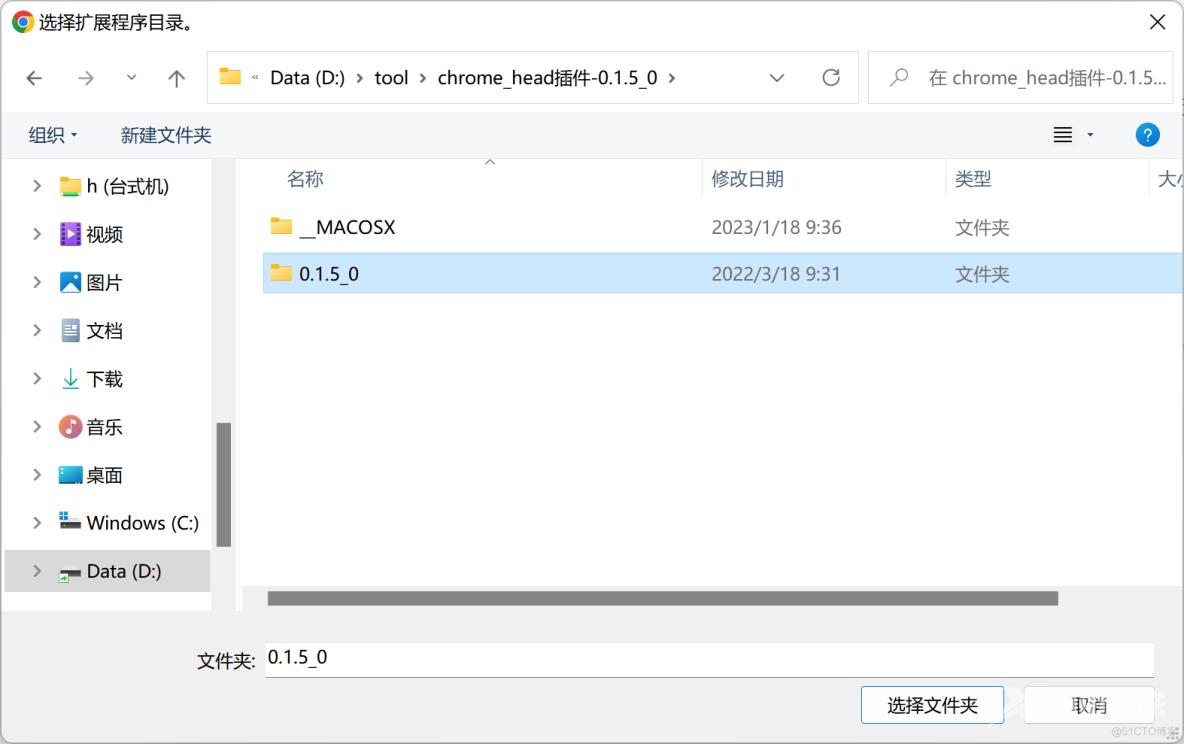

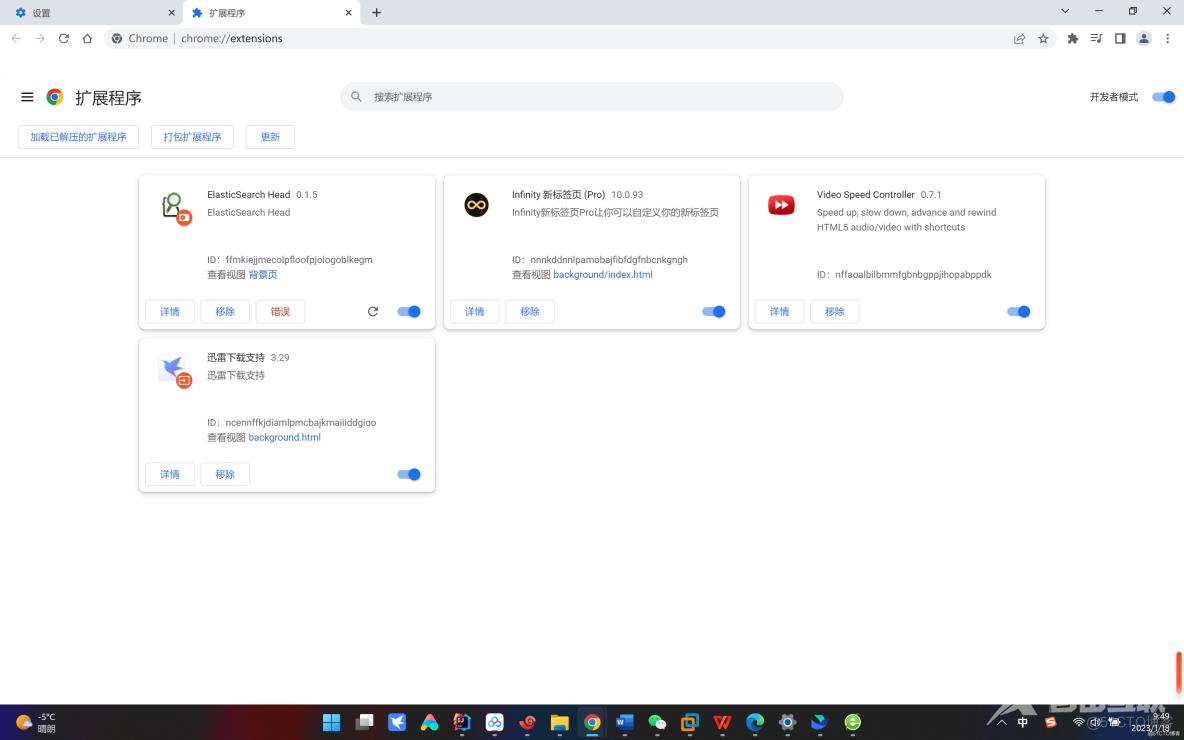

Browser extension

chrome_head extension 0.1.5_0

Remote connection tool

Xshell 7.0 (bundled with Xftp)

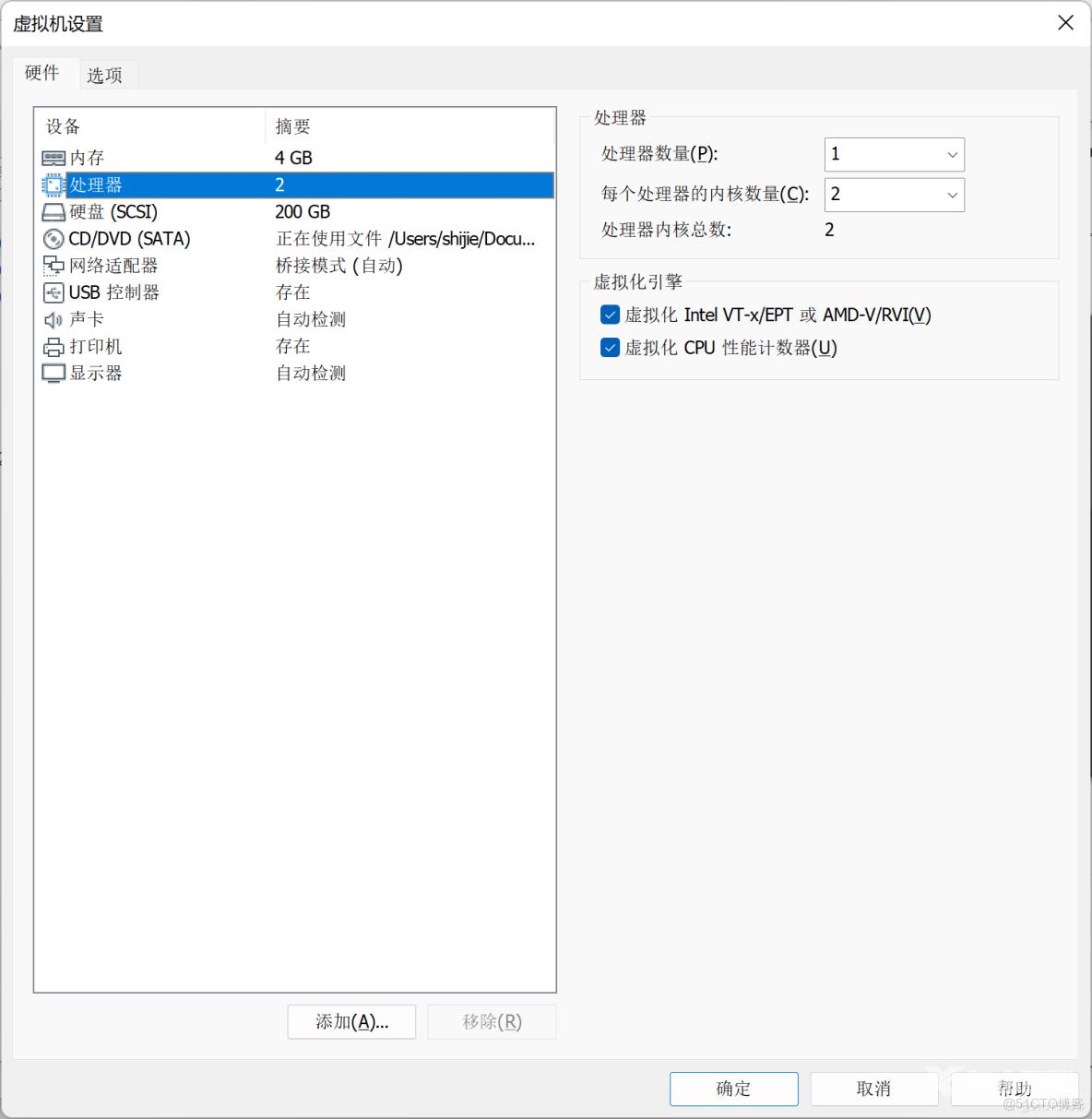

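The CPU, memory, and disk resources allocated to the three VMware virtual machines are as follows:

Elasticsearch is installed in /apps on all three nodes. In this lab the root and elasticsearch users both use the password 123456; in production, passwords usually combine upper- and lower-case letters, special characters, and digits. Hostnames map to IP addresses as follows: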

| hostname | IP |
|---|---|
| es1 | 192.168.31.101 |
| es2 | 192.168.31.102 |
| es3 | 192.168.31.103 |

Set the hostname on each of the three nodes:

root@es1:~# vim /etc/hostname

es1.example.com

root@es2:~# vim /etc/hostname

es2.example.com

root@es3:~# vim /etc/hostname

es3.example.com

Create the /apps directory on all three nodes for the Elasticsearch installation:

root@es1:~# mkdir /apps

root@es2:~# mkdir /apps

root@es3:~# mkdir /apps

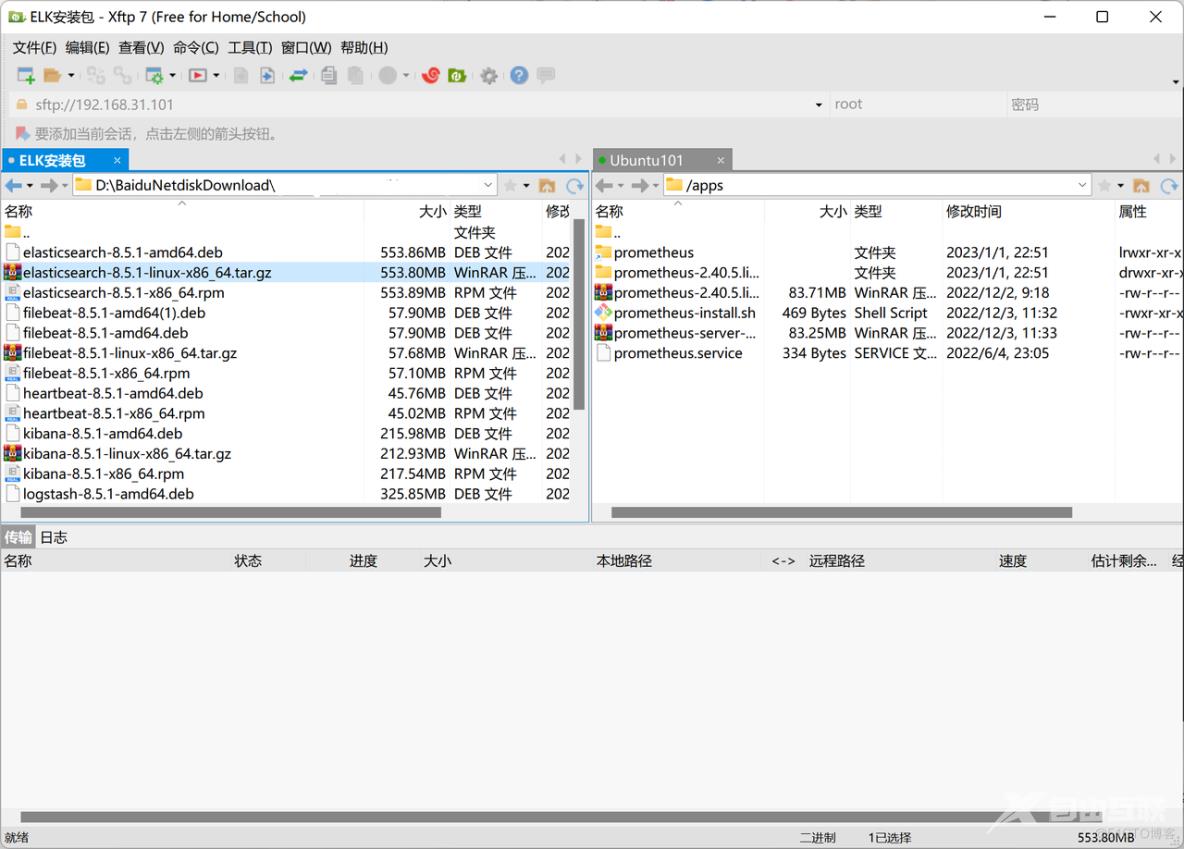

Upload elasticsearch-8.5.1-linux-x86_64.tar.gz to /apps on es1, then copy it to /apps on es2 and es3 with scp:

root@es1:~# cd /apps

root@es1:/apps#

root@es1:/apps# ll elasticsearch-8.5.1-linux-x86_64.tar.gz

-rw-r--r-- 1 root root 580699401 Jan 14 00:40 elasticsearch-8.5.1-linux-x86_64.tar.gz

root@es1:/apps# scp elasticsearch-8.5.1-linux-x86_64.tar.gz 192.168.31.102:/apps

The authenticity of host '192.168.31.102 (192.168.31.102)' can't be established.

ED25519 key fingerprint is SHA256:8lFGzgz9f0CfEA5GvMYhTJi5FlEgV9XUJFc562SpxBg.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.31.102' (ED25519) to the list of known hosts.

root@192.168.31.102's password:

elasticsearch-8.5.1-linux-x86_64.tar.gz 100% 554MB 175.9MB/s 00:03

root@es1:/apps# scp elasticsearch-8.5.1-linux-x86_64.tar.gz 192.168.31.103:/apps # copy the Elasticsearch package

The authenticity of host '192.168.31.103 (192.168.31.103)' can't be established.

ED25519 key fingerprint is SHA256:8lFGzgz9f0CfEA5GvMYhTJi5FlEgV9XUJFc562SpxBg.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:1: [hashed name]

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.31.103' (ED25519) to the list of known hosts.

root@192.168.31.103's password:

elasticsearch-8.5.1-linux-x86_64.tar.gz 100% 554MB 166.1MB/s 00:03
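The same package, and later the certificates, keystore, and unit file, has to be copied to several nodes. A small loop saves typing one scp per node. The sketch below is a hypothetical helper (`distribute` and the `DRY_RUN` switch are names introduced here, not part of Elasticsearch); with `DRY_RUN=1` it only prints the scp commands so the target list can be checked first.

```shell
#!/usr/bin/env bash
# Copy one local file into the same directory on several nodes.
# Hypothetical helper; set DRY_RUN=1 to print the scp commands instead of running them.
distribute() {
  local src=$1 dest_dir=$2 host
  shift 2
  for host in "$@"; do
    if [[ ${DRY_RUN:-0} == 1 ]]; then
      echo "scp $src $host:$dest_dir"
    else
      # stop at the first failed copy so an unreachable node is noticed
      scp "$src" "$host:$dest_dir" || return 1
    fi
  done
}
```

For example, `distribute elasticsearch-8.5.1-linux-x86_64.tar.gz /apps 192.168.31.102 192.168.31.103` run from /apps on es1 copies the package to both other nodes; scp still prompts for each password unless SSH keys are set up.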

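1.2 Kernel parameter and resource limit tuning

Elasticsearch nodes use a lot of resources in production, and Elasticsearch in particular maps many files into memory: because the nodes listen on a non-loopback address, its bootstrap checks are enforced and require vm.max_map_count to be at least 262144. Tune the kernel parameter and the resource limits on es1 as follows, and make the same changes on es2 and es3: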

root@es1:/apps# vim /etc/sysctl.conf

vm.max_map_count=262144
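Editing sysctl.conf by hand can leave duplicate keys when the step is repeated. As a sketch, assuming a hypothetical helper named `set_kv`, the following replaces an existing `key=value` entry or appends it once; the setting can then be loaded with `sysctl -p` instead of waiting for a reboot.

```shell
#!/usr/bin/env bash
# Set key=value in a sysctl-style file: replace an existing entry for the key,
# or append one if the key is not present yet. Commented-out lines are left alone.
set_kv() {
  local file=$1 key=$2 value=$3 tmp
  touch "$file" || return 1
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      split($0, parts, "=")
      name = parts[1]
      gsub(/[ \t]/, "", name)          # "vm.max_map_count = 1" -> "vm.max_map_count"
      if (name == k) {
        if (!done) { print k "=" v; done = 1 }   # keep a single entry for the key
        next
      }
      print
    }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the original file permissions
  rm -f "$tmp"
}
```

As root on each node: `set_kv /etc/sysctl.conf vm.max_map_count 262144 && sysctl -p`; afterwards `sysctl vm.max_map_count` should print `vm.max_map_count = 262144`.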

root@es1:~# vim /etc/security/limits.conf

# End of file

root soft core unlimited

root hard core unlimited

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

root soft memlock 32000

root hard memlock 32000

root soft msgqueue 8192000

root hard msgqueue 8192000

* soft core unlimited

* hard core unlimited

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

* soft memlock 32000

* hard memlock 32000

* soft msgqueue 8192000

* hard msgqueue 8192000
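The entries in limits.conf are applied by pam_limits to login sessions. A service started by systemd does not read that file; it gets its limits from the unit (LimitNOFILE, LimitNPROC, and so on, set in elasticsearch.service later in this article). To see what a running process actually received, read /proc/<pid>/limits. The helper below (`soft_limit`, a hypothetical name) prints the soft limit of one row of that file.

```shell
#!/usr/bin/env bash
# Print the soft limit of one resource from a /proc/<pid>/limits-style file.
# $1: limits file, $2: row name exactly as printed, e.g. "Max open files".
soft_limit() {
  local limits_file=$1 name=$2
  awk -v n="$name" '
    index($0, n) == 1 {
      split(substr($0, length(n) + 1), cols, " ")   # cols[1] = soft, cols[2] = hard
      print cols[1]
      exit
    }
  ' "$limits_file"
}
```

For example, `soft_limit /proc/$(systemctl show -p MainPID --value elasticsearch)/limits 'Max open files'` should print the LimitNOFILE value from the unit (65536) once the service is running.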

1.3 Hostname resolution

Add the following local hostname entries to /etc/hosts on every node:

root@es1:~# vim /etc/hosts

root@es2:~# vim /etc/hosts

root@es3:~# vim /etc/hosts

192.168.31.101 es1 es1.example.com

192.168.31.102 es2 es2.example.com

192.168.31.103 es3 es3.example.com
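When the same entries are added on several nodes, or the step is re-run, it helps to append a line only if the IP is not listed yet. A minimal sketch with a hypothetical `add_host_entry` helper:

```shell
#!/usr/bin/env bash
# Append "IP name..." to a hosts-style file unless a line for that IP already exists.
add_host_entry() {
  local file=$1 ip=$2
  shift 2
  touch "$file" || return 1
  # awk exits 0 when some line's first field equals the IP
  if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$*" >> "$file"
  fi
}
```

Run it as root on each node, for example `add_host_entry /etc/hosts 192.168.31.102 es2 es2.example.com`, and confirm resolution with `getent hosts es2.example.com`.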

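1.4 Creating an unprivileged user to run Elasticsearch

Elasticsearch refuses to start as root, so it must run as an unprivileged user. On es1, create the elasticsearch user and group, create the data directory /data/esdata (a dedicated mounted disk is recommended in production) and the log directory /data/eslogs, and give the elasticsearch user ownership of these directories: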

root@es1:~# groupadd -g 2888 elasticsearch

root@es1:~# useradd -u 2888 -g 2888 -r -m -s /bin/bash elasticsearch

root@es1:~# passwd elasticsearch

New password:

Retype new password:

passwd: password updated successfully

root@es1:~# mkdir /data/esdata /data/eslogs /apps -pv

mkdir: created directory '/data'

mkdir: created directory '/data/esdata'

mkdir: created directory '/data/eslogs'

root@es1:~# chown -R elasticsearch:elasticsearch /data /apps/

Repeat the steps above on es2 and es3.

1.5 Deploying the Elasticsearch cluster

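On es1, extract the package, create a symbolic link, give the elasticsearch user ownership of the extracted files (tar run as root keeps the owner recorded in the archive), reboot so that the hostname, kernel, and limit settings take effect, then confirm the link:

root@es1:/apps# tar xvf elasticsearch-8.5.1-linux-x86_64.tar.gz # extract the package

root@es1:/apps# ln -sv /apps/elasticsearch-8.5.1 /apps/elasticsearch # create the symbolic link

'/apps/elasticsearch' -> '/apps/elasticsearch-8.5.1'

root@es1:/apps# chown -R elasticsearch:elasticsearch /apps/elasticsearch-8.5.1 # hand the files to the elasticsearch user

root@es1:/apps# reboot # reboot so the settings take effect

root@es1:~# readlink -f /apps/elasticsearch # check the symbolic link

/apps/elasticsearch-8.5.1

Repeat these commands on es2 and es3.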
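Later steps run as the elasticsearch user and write into /apps/elasticsearch/config (certificates, keystore), so everything under the installation directory and the data/log directories must belong to that user. This hypothetical check (`not_owned_by` is a name introduced here) lists anything with a different owner; empty output means the ownership is correct.

```shell
#!/usr/bin/env bash
# List files under the given paths whose owner is not the expected user
# (user name or numeric UID). Empty output means ownership is correct.
not_owned_by() {
  local owner=$1
  shift
  find "$@" ! -user "$owner" -print
}
```

Run `not_owned_by elasticsearch /apps/elasticsearch-8.5.1 /data | head` as root; if it prints anything, fix it with `chown -R elasticsearch:elasticsearch /apps/elasticsearch-8.5.1 /data`. The real directory is passed rather than the /apps/elasticsearch symlink because find does not follow a symlinked starting point by default.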

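1.5.1 Issuing the X-Pack security certificates

Switch from root to the elasticsearch user and generate the certificates that secure node-to-node (transport) traffic. First list the cluster nodes in instances.yml, then create a certificate authority (CA):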

root@es1:~# su - elasticsearch

elasticsearch@es1:~$ cd /apps/elasticsearch


elasticsearch@es1:/apps/elasticsearch$ vim instances.yml # list the cluster nodes

instances:
  - name: "es1.example.com"
    ip:
      - "192.168.31.101"
  - name: "es2.example.com"
    ip:
      - "192.168.31.102"
  - name: "es3.example.com"
    ip:
      - "192.168.31.103"

# Generate the CA certificate and private key; the default file name is elastic-stack-ca.p12

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-certutil ca

This tool assists you in the generation of X.509 certificates and certificate

signing requests for use with SSL/TLS in the Elastic stack.

The 'ca' mode generates a new 'certificate authority'

This will create a new X.509 certificate and private key that can be used

to sign certificate when running in 'cert' mode.

Use the 'ca-dn' option if you wish to configure the 'distinguished name'

of the certificate authority

By default the 'ca' mode produces a single PKCS#12 output file which holds:

* The CA certificate

* The CA's private key

If you elect to generate PEM format certificates (the -pem option), then the output will

be a zip file containing individual files for the CA certificate and private key

Please enter the desired output file [elastic-stack-ca.p12]: (press Enter)

Enter password for elastic-stack-ca.p12 : (press Enter)

elasticsearch@es1:/apps/elasticsearch$ ll elastic-stack-ca.p12 # check that the CA file was created

-rw------- 1 elasticsearch elasticsearch 2672 Jan 14 17:27 elastic-stack-ca.p12

Use the CA to sign a certificate and private key ("cert" mode):

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-certutil cert --ca elastic-stack-ca.p12 # sign a certificate with the CA

This tool assists you in the generation of X.509 certificates and certificate

signing requests for use with SSL/TLS in the Elastic stack.

The 'cert' mode generates X.509 certificate and private keys.

* By default, this generates a single certificate and key for use

on a single instance.

* The '-multiple' option will prompt you to enter details for multiple

instances and will generate a certificate and key for each one

* The '-in' option allows for the certificate generation to be automated by describing

the details of each instance in a YAML file

* An instance is any piece of the Elastic Stack that requires an SSL certificate.

Depending on your configuration, Elasticsearch, Logstash, Kibana, and Beats

may all require a certificate and private key.

* The minimum required value for each instance is a name. This can simply be the

hostname, which will be used as the Common Name of the certificate. A full

distinguished name may also be used.

* A filename value may be required for each instance. This is necessary when the

name would result in an invalid file or directory name. The name provided here

is used as the directory name (within the zip) and the prefix for the key and

certificate files. The filename is required if you are prompted and the name

is not displayed in the prompt.

* IP addresses and DNS names are optional. Multiple values can be specified as a

comma separated string. If no IP addresses or DNS names are provided, you may

disable hostname verification in your SSL configuration.

* All certificates generated by this tool will be signed by a certificate authority (CA)

unless the --self-signed command line option is specified.

The tool can automatically generate a new CA for you, or you can provide your own with

the --ca or --ca-cert command line options.

By default the 'cert' mode produces a single PKCS#12 output file which holds:

* The instance certificate

* The private key for the instance certificate

* The CA certificate

If you specify any of the following options:

* -pem (PEM formatted output)

* -multiple (generate multiple certificates)

* -in (generate certificates from an input file)

then the output will be be a zip file containing individual certificate/key files

Enter password for CA (elastic-stack-ca.p12) : (press Enter)

Please enter the desired output file [elastic-certificates.p12]: (press Enter)

Enter password for elastic-certificates.p12 : (press Enter)

Certificates written to /apps/elasticsearch-8.5.1/elastic-certificates.p12

This file should be properly secured as it contains the private key for

your instance.

This file is a self contained file and can be copied and used 'as is'

For each Elastic product that you wish to configure, you should copy

this '.p12' file to the relevant configuration directory

and then follow the SSL configuration instructions in the product guide.

For client applications, you may only need to copy the CA certificate and

configure the client to trust this certificate.

elasticsearch@es1:/apps/elasticsearch$ ll elastic-certificates.p12 # check that the certificate file was created

-rw------- 1 elasticsearch elasticsearch 3596 Jan 14 17:28 elastic-certificates.p12

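Next, run certutil in silent mode with instances.yml as input: it signs one certificate per cluster host with the CA generated earlier, writes them to a zip archive, and protects each certificate with the password 123456: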

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-certutil cert --silent --in instances.yml --out certs.zip --pass 123456 --ca elastic-stack-ca.p12

Enter password for CA (elastic-stack-ca.p12) : (press Enter)

elasticsearch@es1:/apps/elasticsearch$ ll certs.zip

-rw------- 1 elasticsearch elasticsearch 11574 Jan 14 23:01 certs.zip

Unpack the three generated certificates:

elasticsearch@es1:/apps/elasticsearch$ unzip certs.zip

Archive: certs.zip

creating: es1.example.com/

inflating: es1.example.com/es1.example.com.p12

creating: es2.example.com/

inflating: es2.example.com/es2.example.com.p12

creating: es3.example.com/

inflating: es3.example.com/es3.example.com.p12

On es1, create the certificate directory and install es1's certificate:

elasticsearch@es1:/apps/elasticsearch$ mkdir config/certs

elasticsearch@es1:/apps/elasticsearch$ cp -rp es1.example.com/es1.example.com.p12 config/certs

On es2 and es3, create the certificate directory, then copy each node's certificate from es1:

elasticsearch@es2:/apps/elasticsearch$ mkdir config/certs

elasticsearch@es1:/apps/elasticsearch$ scp es2.example.com/es2.example.com.p12 192.168.31.102:/apps/elasticsearch/config/certs

elasticsearch@192.168.31.102's password:

es2.example.com.p12 100% 3642 1.7MB/s 00:00

elasticsearch@es3:/apps/elasticsearch$ mkdir config/certs

elasticsearch@es1:/apps/elasticsearch$ scp es3.example.com/es3.example.com.p12 192.168.31.103:/apps/elasticsearch/config/certs

elasticsearch@192.168.31.103's password:

es3.example.com.p12 100% 3626 1.7MB/s 00:00

Check that the certificate files are in place on es1, es2, and es3:

elasticsearch@es1:/apps/elasticsearch/config/certs$ ll es1.example.com.p12

-rw-rw-r-- 1 elasticsearch elasticsearch 3626 Jan 15 10:27 es1.example.com.p12

elasticsearch@es2:/apps/elasticsearch/config/certs$ ll es2.example.com.p12

-rw-rw-r-- 1 elasticsearch elasticsearch 3626 Jan 15 10:27 es2.example.com.p12

elasticsearch@es3:/apps/elasticsearch/config/certs$ ll es3.example.com.p12

-rw-rw-r-- 1 elasticsearch elasticsearch 3626 Jan 15 10:27 es3.example.com.p12

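Create the Elasticsearch keystore and store the certificate password (123456) in it as the secure passwords of the transport keystore and truststore:

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-keystore create # create the keystore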

Created elasticsearch keystore in /apps/elasticsearch/config/elasticsearch.keystore

elasticsearch@es1:/apps/elasticsearch$ file /apps/elasticsearch/config/elasticsearch.keystore # check the keystore file type

/apps/elasticsearch/config/elasticsearch.keystore: data

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-keystore add xpack.security.transport.ssl.keystore.secure_password

Enter value for xpack.security.transport.ssl.keystore.secure_password: # enter 123456

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-keystore add xpack.security.transport.ssl.truststore.secure_password

Enter value for xpack.security.transport.ssl.truststore.secure_password: # enter 123456

Copy the keystore to es2 and es3:

elasticsearch@es1:/apps/elasticsearch$ scp /apps/elasticsearch/config/elasticsearch.keystore 192.168.31.102:/apps/elasticsearch/config/elasticsearch.keystore

elasticsearch@192.168.31.102's password:

elasticsearch.ke 100% 331 177.9KB/s 00:00

elasticsearch@es1:/apps/elasticsearch$ scp /apps/elasticsearch/config/elasticsearch.keystore 192.168.31.103:/apps/elasticsearch/config/elasticsearch.keystore

elasticsearch@192.168.31.103's password:

elasticsearch.ke 100% 331 185.1KB/s 00:00
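Before starting the nodes, it is worth confirming on each node that the copied keystore holds both transport passwords. `elasticsearch-keystore list` prints one setting name per line; the hypothetical helper below (`missing_keystore_settings`) reads that output and prints any required setting that is missing.

```shell
#!/usr/bin/env bash
# Read `elasticsearch-keystore list` output on stdin and print every required
# secure setting that is not in it. No output means nothing is missing.
missing_keystore_settings() {
  local present required
  present=$(cat)
  for required in \
      xpack.security.transport.ssl.keystore.secure_password \
      xpack.security.transport.ssl.truststore.secure_password; do
    grep -qxF "$required" <<< "$present" || echo "$required"
  done
}
```

Run `./bin/elasticsearch-keystore list | missing_keystore_settings` in /apps/elasticsearch on es1, es2, and es3 as the elasticsearch user.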

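1.5.2 Editing the configuration file

A few points to note: give the Elasticsearch JVM heap about half of the host's memory, and never more than 30 GB. More CPU cores are better; 32 cores are recommended. Because Elasticsearch stores large volumes of data, SSDs are recommended, as is a 10 GbE network. The three nodes form one Elasticsearch cluster, so cluster.name must be identical on all of them (my-es-application), while each node gets its own node.name. The bootstrap.memory_lock: true setting (commented out in the files below) locks the JVM heap in RAM so that it cannot be swapped out; if you enable it, the service must be allowed to lock unlimited memory, or the node fails the memory-lock bootstrap check at startup.

1.5.2.1 Node es1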
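The heap rule above (half of the host memory, capped at 30 GB) can be written into a JVM options file. If no heap size is configured, Elasticsearch 8 sizes the heap automatically; the -Xms1944m -Xmx1944m visible in the process list later in this article appears to be such an automatic value. The sketch below pins the size explicitly instead. `es_heap_mb` and `heap_options` are hypothetical helper names, and the file name heap.options is an assumption (Elasticsearch reads any *.options file in config/jvm.options.d).

```shell
#!/usr/bin/env bash
# Heap size in MB: half of the physical memory, capped at 30 GB (30720 MB).
es_heap_mb() {
  local total_mb=$1 half
  [[ $total_mb =~ ^[0-9]+$ ]] || return 1
  half=$(( total_mb / 2 ))
  if (( half > 30720 )); then
    half=30720
  fi
  echo "$half"
}

# Print a jvm.options.d fragment with equal minimum and maximum heap,
# as Elastic recommends.
heap_options() {
  local heap
  heap=$(es_heap_mb "$1") || return 1
  printf -- '-Xms%sm\n-Xmx%sm\n' "$heap" "$heap"
}
```

For example, as the elasticsearch user: `heap_options "$(free -m | awk '/^Mem:/ {print $2}')" > /apps/elasticsearch/config/jvm.options.d/heap.options`, then restart the service.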

root@es1:/apps/elasticsearch/config# vim elasticsearch.yml

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

cluster.name: my-es-application

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: node-1

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

path.data: /data/esdata

#

# Path to log files:

#

path.logs: /data/eslogs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# By default Elasticsearch is only accessible on localhost. Set a different

# address here to expose this node on the network:

#

network.host: 0.0.0.0

#

# By default Elasticsearch listens for HTTP traffic on the first free port it

# finds starting at 9200. Set a specific HTTP port here:

#

http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

discovery.seed_hosts: ["192.168.31.101", "192.168.31.102", "192.168.31.103"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

#

cluster.initial_master_nodes: ["192.168.31.101", "192.168.31.102", "192.168.31.103"]

#

# For more information, consult the discovery and cluster formation module documentation.

#

# --------------------------------- Readiness ----------------------------------

#

# Enable an unauthenticated TCP readiness endpoint on localhost

#

#readiness.port: 9399

#

# ---------------------------------- Various -----------------------------------

#

# Allow wildcard deletion of indices:

#

action.destructive_requires_name: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.keystore.path: /apps/elasticsearch/config/certs/es1.example.com.p12

xpack.security.transport.ssl.truststore.path: /apps/elasticsearch/config/certs/es1.example.com.p12

1.5.2.2 Node es2

root@es2:/apps/elasticsearch/config# vim elasticsearch.yml

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

cluster.name: my-es-application

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: node-2

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

#

path.data: /data/esdata

#

# Path to log files:

#

path.logs: /data/eslogs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# By default Elasticsearch is only accessible on localhost. Set a different

# address here to expose this node on the network:

#

network.host: 0.0.0.0

#

# By default Elasticsearch listens for HTTP traffic on the first free port it

# finds starting at 9200. Set a specific HTTP port here:

#

http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

discovery.seed_hosts: ["192.168.31.101", "192.168.31.102", "192.168.31.103"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

#

cluster.initial_master_nodes: ["192.168.31.101", "192.168.31.102", "192.168.31.103"]

#

# For more information, consult the discovery and cluster formation module documentation.

#

# --------------------------------- Readiness ----------------------------------

#

# Enable an unauthenticated TCP readiness endpoint on localhost

#

#readiness.port: 9399

#

# ---------------------------------- Various -----------------------------------

#

# Allow wildcard deletion of indices:

#

action.destructive_requires_name: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.keystore.path: /apps/elasticsearch/config/certs/es2.example.com.p12

xpack.security.transport.ssl.truststore.path: /apps/elasticsearch/config/certs/es2.example.com.p12

1.5.2.3 Node es3

root@es3:/apps/elasticsearch/config# cat elasticsearch.yml

# ======================== Elasticsearch Configuration =========================

#

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you set out to tweak and tune the configuration, make sure you

# understand what are you trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists

# the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

#

# Use a descriptive name for your cluster:

#

cluster.name: my-es-application

#

# ------------------------------------ Node ------------------------------------

#

# Use a descriptive name for the node:

#

node.name: node-3

#

# Add custom attributes to the node:

#

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

#

# Path to directory where to store the data (separate multiple locations by comma):

# Elasticsearch data directory

path.data: /data/esdata

#

# Path to log files:

# Elasticsearch log directory

path.logs: /data/eslogs

#

# ----------------------------------- Memory -----------------------------------

#

# Lock the memory on startup:

#

#bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available

# on the system and that the owner of the process is allowed to use this

# limit.

#

# Elasticsearch performs poorly when the system is swapping the memory.

#

# ---------------------------------- Network -----------------------------------

#

# By default Elasticsearch is only accessible on localhost. Set a different

# address here to expose this node on the network:

#

network.host: 0.0.0.0

#

# By default Elasticsearch listens for HTTP traffic on the first free port it

# finds starting at 9200. Set a specific HTTP port here:

#

http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

discovery.seed_hosts: ["192.168.31.101", "192.168.31.102", "192.168.31.103"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

#

cluster.initial_master_nodes: ["192.168.31.101", "192.168.31.102", "192.168.31.103"]

#

# For more information, consult the discovery and cluster formation module documentation.

#

# --------------------------------- Readiness ----------------------------------

#

# Enable an unauthenticated TCP readiness endpoint on localhost

#

#readiness.port: 9399

#

# ---------------------------------- Various -----------------------------------

#

# Allow wildcard deletion of indices:

#

action.destructive_requires_name: true

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.keystore.path: /apps/elasticsearch/config/certs/es3.example.com.p12

xpack.security.transport.ssl.truststore.path: /apps/elasticsearch/config/certs/es3.example.com.p12

As root, create the service unit file elasticsearch.service:

root@es1:~# vim /lib/systemd/system/elasticsearch.service

root@es1:~# cat /lib/systemd/system/elasticsearch.service

[Unit]

Description=Elasticsearch

Documentation=http://www.elastic.co

Wants=network-online.target

After=network-online.target

[Service]

RuntimeDirectory=elasticsearch

# Application directory (systemd only treats # at the start of a line as a comment)

Environment=ES_HOME=/apps/elasticsearch

# Configuration directory

Environment=ES_PATH_CONF=/apps/elasticsearch/config

Environment=PID_DIR=/apps/elasticsearch

WorkingDirectory=/apps/elasticsearch

# User and group that run the service

User=elasticsearch

Group=elasticsearch

ExecStart=/apps/elasticsearch/bin/elasticsearch --quiet

# StandardOutput is configured to redirect to journalctl since

# some error messages may be logged in standard output before

# elasticsearch logging system is initialized. Elasticsearch

# stores its logs in path.logs (/data/eslogs in this setup) and does not use

# journalctl by default. If you also want to enable journalctl

# logging, you can simply remove the "quiet" option from ExecStart.

StandardOutput=journal

StandardError=inherit

# Specifies the maximum file descriptor number that can be opened by this process

LimitNOFILE=65536

# Specifies the maximum number of processes

LimitNPROC=4096

# Specifies the maximum size of virtual memory

LimitAS=infinity

# Specifies the maximum file size

LimitFSIZE=infinity

# Disable timeout logic and wait until process is stopped

TimeoutStopSec=0

# SIGTERM signal is used to stop the Java process

KillSignal=SIGTERM

# Send the signal only to the JVM rather than its control group

KillMode=process

# Java process is never killed

SendSIGKILL=no

# When a JVM receives a SIGTERM signal it exits with code 143

SuccessExitStatus=143

[Install]

WantedBy=multi-user.target
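If bootstrap.memory_lock: true is enabled in elasticsearch.yml, the service must also be allowed to lock unlimited memory, since the memlock entries in limits.conf do not apply to systemd services. A sketch, assuming a hypothetical helper `write_memlock_dropin` that writes a standard systemd drop-in (override.conf in elasticsearch.service.d) rather than editing the unit itself:

```shell
#!/usr/bin/env bash
# Write a systemd drop-in that allows the service to lock unlimited memory.
# Needed only when bootstrap.memory_lock: true is set in elasticsearch.yml.
write_memlock_dropin() {
  local dir=${1:-/etc/systemd/system/elasticsearch.service.d}
  mkdir -p "$dir" || return 1
  printf '[Service]\nLimitMEMLOCK=infinity\n' > "$dir/override.conf"
}
```

As root: `write_memlock_dropin && systemctl daemon-reload && systemctl restart elasticsearch`. Whether locking succeeded can then be checked with `curl -u manager:123456 'http://192.168.31.101:9200/_nodes?filter_path=**.mlockall'`, which should report `"mlockall": true` for each node.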

Copy the unit file to the same directory on es2 and es3:

root@es1:~# scp /lib/systemd/system/elasticsearch.service 192.168.31.102:/lib/systemd/system/elasticsearch.service

root@192.168.31.102's password:

elasticsearch.service 100% 1541 780.4KB/s 00:00

root@es1:~# scp /lib/systemd/system/elasticsearch.service 192.168.31.103:/lib/systemd/system/elasticsearch.service

root@192.168.31.103's password:

elasticsearch.service 100% 1541 568.0KB/s 00:00

Run the following commands on the three nodes in quick succession to start the services. With the current configuration no dedicated master or data nodes are defined, so all three nodes are master-eligible and can also hold data:

root@es1:~# systemctl daemon-reload && systemctl start elasticsearch.service && systemctl enable elasticsearch.service

Created symlink /etc/systemd/system/multi-user.target.wants/elasticsearch.service → /lib/systemd/system/elasticsearch.service.

root@es2:~# systemctl daemon-reload && systemctl start elasticsearch.service && systemctl enable elasticsearch.service

Created symlink /etc/systemd/system/multi-user.target.wants/elasticsearch.service → /lib/systemd/system/elasticsearch.service.

root@es3:~# systemctl daemon-reload && systemctl start elasticsearch.service && systemctl enable elasticsearch.service

Created symlink /etc/systemd/system/multi-user.target.wants/elasticsearch.service → /lib/systemd/system/elasticsearch.service.

Taking es3 as an example, follow the log with tail to check whether Elasticsearch started successfully:

root@es3:~# tail -f /data/eslogs/my-es-application.log

[2023-01-16T20:59:11,992][INFO ][o.e.l.LicenseService ] [node-3] license [11570d8d-30aa-4cc8-a014-8b0bb619d38e] mode [basic] - valid

[2023-01-16T20:59:11,993][INFO ][o.e.x.s.a.Realms ] [node-3] license mode is [basic], currently licensed security realms are [reserved/reserved,file/default_file,native/default_native]

[2023-01-16T20:59:17,627][INFO ][o.e.c.m.MetadataCreateIndexService] [node-3] [.geoip_databases] creating index, cause [auto(bulk api)], templates [], shards [1]/[0]

[2023-01-16T20:59:17,634][INFO ][o.e.c.r.a.AllocationService] [node-3] updating number_of_replicas to [1] for indices [.geoip_databases]

[2023-01-16T20:59:18,525][INFO ][o.e.c.r.a.AllocationService] [node-3] current.health="GREEN" message="Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[.geoip_databases][0]]])." previous.health="YELLOW" reason="shards started [[.geoip_databases][0]]"

[2023-01-16T20:59:19,297][INFO ][o.e.c.s.MasterService ] [node-3] node-join[{node-1}{N4pAkC83RIyNAA2EZlQgOA}{w0aODHHATue_YLxsnEurxQ}{node-1}{192.168.31.101}{192.168.31.101:9300}{cdfhilmrstw} joining], term: 1, version: 29, delta: added {{node-1}{N4pAkC83RIyNAA2EZlQgOA}{w0aODHHATue_YLxsnEurxQ}{node-1}{192.168.31.101}{192.168.31.101:9300}{cdfhilmrstw}}

[2023-01-16T20:59:19,784][INFO ][o.e.c.s.ClusterApplierService] [node-3] added {{node-1}{N4pAkC83RIyNAA2EZlQgOA}{w0aODHHATue_YLxsnEurxQ}{node-1}{192.168.31.101}{192.168.31.101:9300}{cdfhilmrstw}}, term: 1, version: 29, reason: Publication{term=1, version=29}

[2023-01-16T20:59:21,361][INFO ][o.e.i.g.DatabaseNodeService] [node-3] successfully loaded geoip database file [GeoLite2-ASN.mmdb]

[2023-01-16T20:59:56,341][INFO ][o.e.i.g.DatabaseNodeService] [node-3] successfully loaded geoip database file [GeoLite2-City.mmdb]

[2023-01-16T20:59:58,233][INFO ][o.e.i.g.DatabaseNodeService] [node-3] successfully loaded geoip database file [GeoLite2-Country.mmdb] # messages like these indicate that the node has started successfully
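Instead of reading the log by eye, the latest health transition can be pulled out of it. The sketch below (hypothetical helper name `last_logged_health`) greps for the current.health field that appears in the AllocationService line above and prints the last value found.

```shell
#!/usr/bin/env bash
# Print the most recent current.health value (GREEN/YELLOW/RED) found in an
# Elasticsearch server log; prints nothing if no health change was logged.
last_logged_health() {
  grep -o 'current\.health="[A-Z]*"' "$1" | tail -n 1 | cut -d'"' -f2
}
```

On es3, `last_logged_health /data/eslogs/my-es-application.log` would print GREEN for the log shown above.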

Use ps to check that the Elasticsearch processes are running:

root@es3:~# ps aux|grep elasticsearch

elastic+ 884 0.2 2.3 2602252 93796 ? Ssl 21:59 0:09 /apps/elasticsearch/jdk/bin/java -Xms4m -Xmx64m -XX:+UseSerialGC -Dcli.name=server -Dcli.script=/apps/elasticsearch/bin/elasticsearch -Dcli.libs=lib/tools/server-cli -Des.path.home=/apps/elasticsearch -Des.path.conf=/apps/elasticsearch/config -Des.distribution.type=tar -cp /apps/elasticsearch/lib/*:/apps/elasticsearch/lib/cli-launcher/* org.elasticsearch.launcher.CliToolLauncher --quiet

elastic+ 1361 2.1 59.0 4742556 2351492 ? Sl 21:59 1:21 /apps/elasticsearch-8.5.1/jdk/bin/java -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -Djava.security.manager=allow -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j2.formatMsgNoLookups=true -Djava.locale.providers=SPI,COMPAT --add-opens=java.base/java.io=ALL-UNNAMED -XX:+UseG1GC -Djava.io.tmpdir=/tmp/elasticsearch-12669585393377606587 -XX:+HeapDumpOnOutOfMemoryError -XX:+ExitOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m -Xms1944m -Xmx1944m -XX:MaxDirectMemorySize=1019215872 -XX:G1HeapRegionSize=4m -XX:InitiatingHeapOccupancyPercent=30 -XX:G1ReservePercent=15 -Des.distribution.type=tar --module-path /apps/elasticsearch-8.5.1/lib --add-modules=jdk.net -m org.elasticsearch.server/org.elasticsearch.bootstrap.Elasticsearch

elastic+ 1392 0.0 0.1 107900 7636 ? Sl 21:59 0:00 /apps/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

root 1683 0.0 0.0 7004 2240 pts/0 S+ 23:02 0:00 grep --color=auto elasticsearch
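Once the cluster has formed for the first time, Elastic's documentation recommends removing cluster.initial_master_nodes from every node's configuration so that it is not applied again on a later restart. A minimal sketch, assuming a hypothetical helper `comment_out_setting` that comments the setting out and keeps a .bak copy:

```shell
#!/usr/bin/env bash
# Comment out a top-level setting (a line starting with "<key>:") in a YAML file.
# A copy of the original is kept as <file>.bak.
comment_out_setting() {
  local file=$1 key=$2 tmp
  cp -p "$file" "$file.bak" || return 1
  tmp=$(mktemp) || return 1
  awk -v k="$key:" 'index($0, k) == 1 { print "#" $0; next } { print }' "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

On each node: `comment_out_setting /apps/elasticsearch/config/elasticsearch.yml cluster.initial_master_nodes`, then restart that node.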

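1.6 Testing and verification

1.6.1 Setting the passwords of the built-in users

On es1, switch from root to the elasticsearch user and set the passwords of all built-in users to 123456:

root@es1:~# su - elasticsearch

elasticsearch@es1:~$ cd /apps/elasticsearch

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-setup-passwords interactive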

******************************************************************************

Note: The 'elasticsearch-setup-passwords' tool has been deprecated. This command will be removed in a future release.

******************************************************************************

Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]: # 123456 is used for all built-in users in this lab

Reenter password for [elastic]:

Enter password for [apm_system]:

Reenter password for [apm_system]:

Enter password for [kibana_system]:

Reenter password for [kibana_system]:

Enter password for [logstash_system]:

Reenter password for [logstash_system]:

Enter password for [beats_system]:

Reenter password for [beats_system]:

Enter password for [remote_monitoring_user]:

Reenter password for [remote_monitoring_user]:

Changed password for user [apm_system]

Changed password for user [kibana_system]

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]
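As the notice at the top of the output says, elasticsearch-setup-passwords is deprecated. Its Elasticsearch 8 replacement, elasticsearch-reset-password, resets one user per call (-u for the user, -i to type the new password) and talks to the running local node. The loop below is a sketch over the same built-in users; the function name and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# Reset the built-in users' passwords one by one with elasticsearch-reset-password.
# Run from /apps/elasticsearch as the elasticsearch user; -i prompts for each new password.
# DRY_RUN=1 only prints the commands.
reset_builtin_passwords() {
  local user
  for user in elastic apm_system kibana_system logstash_system beats_system remote_monitoring_user; do
    if [[ ${DRY_RUN:-0} == 1 ]]; then
      echo "./bin/elasticsearch-reset-password -u $user -i"
    else
      ./bin/elasticsearch-reset-password -u "$user" -i || return 1
    fi
  done
}
```

Running `DRY_RUN=1 reset_builtin_passwords` first shows the six commands that would be executed.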

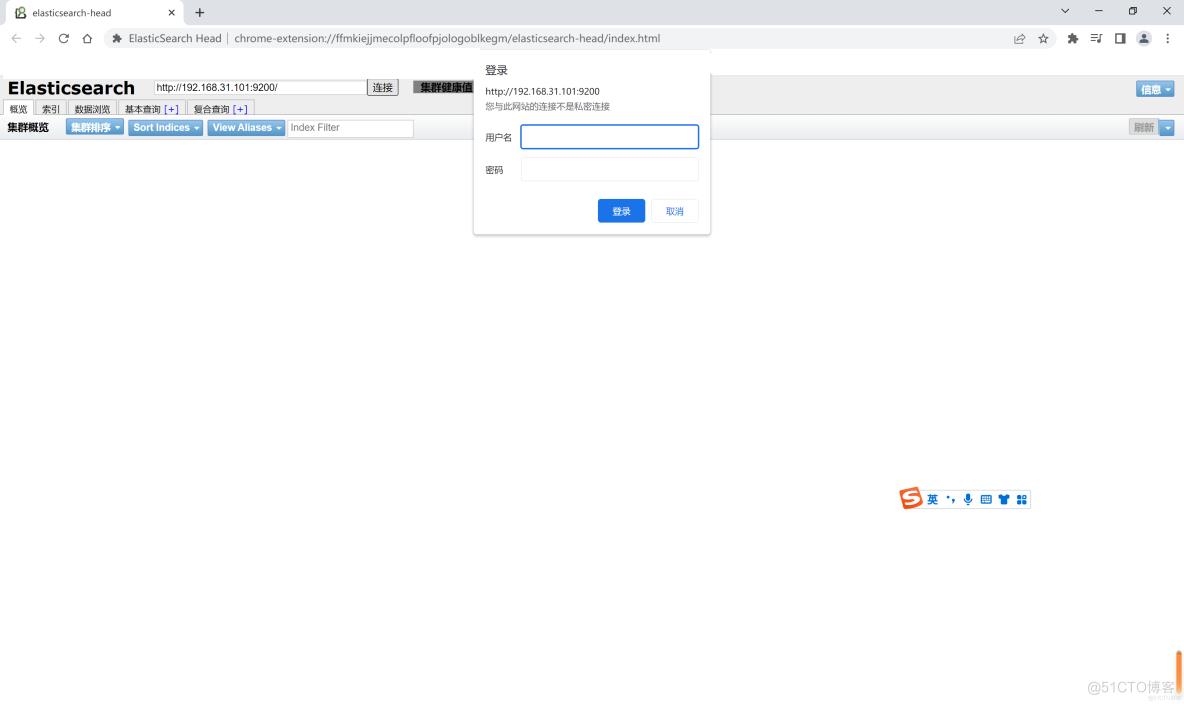

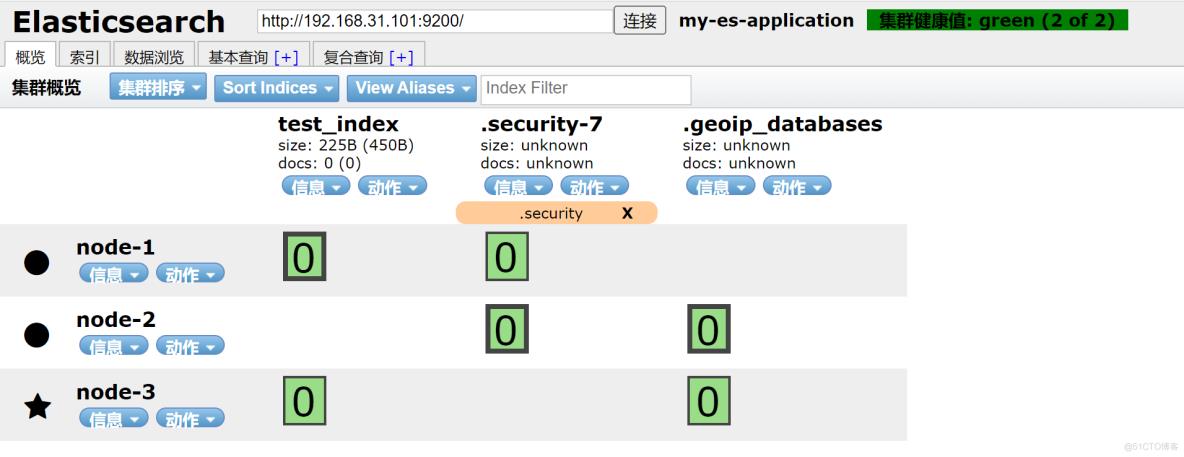

在浏览器安装插件,方便登录,使用上述默认用户进行登录验证:

使用elasticsearch-head应用插件通过图形化方式管理es集群,使用manager用户,密码123456,登录es1节点:

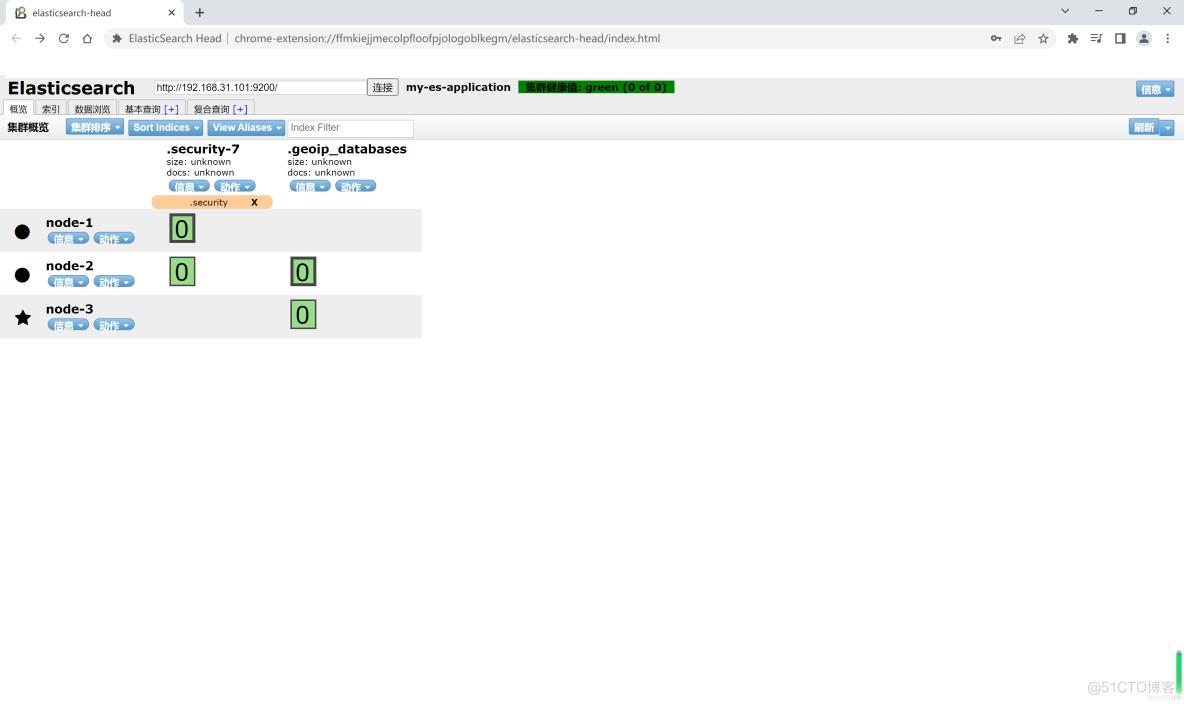

登录成功,可以通过elasticsearch-head插件工具查看节点的状态

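1.6.2 Creating a superuser account

As the elasticsearch user, create the local superuser manager with password 123456 on all three nodes. elasticsearch-users stores accounts in the file realm, which is kept separately on each node, so the command has to be run on every node: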

elasticsearch@es1:/apps/elasticsearch$ ./bin/elasticsearch-users useradd manager -p 123456 -r superuser

elasticsearch@es2:/apps/elasticsearch$ ./bin/elasticsearch-users useradd manager -p 123456 -r superuser

elasticsearch@es3:/apps/elasticsearch$ ./bin/elasticsearch-users useradd manager -p 123456 -r superuser

Access the es1 API as manager to verify the account:

elasticsearch@es1:/apps/elasticsearch$ curl -u manager:123456 http://192.168.31.101:9200

{

"name" : "node-1",

"cluster_name" : "my-es-application",

"cluster_uuid" : "R3kgPZnVTuqBoGKG0dc9jQ",

"version" : {

"number" : "8.5.1",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "c1310c45fc534583afe2c1c03046491efba2bba2",

"build_date" : "2022-11-09T21:02:20.169855900Z",

"build_snapshot" : false,

"lucene_version" : "9.4.1",

"minimum_wire_compatibility_version" : "7.17.0",

"minimum_index_compatibility_version" : "7.0.0"

},

"tagline" : "You Know, for Search"

}
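`curl -u manager:123456` sends the credentials as an HTTP Basic `Authorization` header. If you want to script this same check, the following is a minimal Python sketch (standard library only) that builds that header and extracts the fields worth checking from the `GET /` response. The function names are illustrative, and the sample body is trimmed from the output above.

```python
import base64
import json


def basic_auth_header(user, password):
    """Return the Authorization value that `curl -u user:password` sends (HTTP Basic)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


def summarize_root_info(body):
    """Extract node name, cluster name and versions from a GET / response body."""
    info = json.loads(body)
    return {
        "node": info["name"],
        "cluster": info["cluster_name"],
        "version": info["version"]["number"],
        "lucene": info["version"]["lucene_version"],
    }


# Trimmed copy of the response returned by es1 above
SAMPLE_ROOT = """{
  "name" : "node-1",
  "cluster_name" : "my-es-application",
  "version" : {"number" : "8.5.1", "lucene_version" : "9.4.1"},
  "tagline" : "You Know, for Search"
}"""

if __name__ == "__main__":
    print(basic_auth_header("manager", "123456"))
    print(summarize_root_info(SAMPLE_ROOT))
```

Comparing the reported `version` against the expected 8.5.1 on each node is a quick way to catch a node left on an old package.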

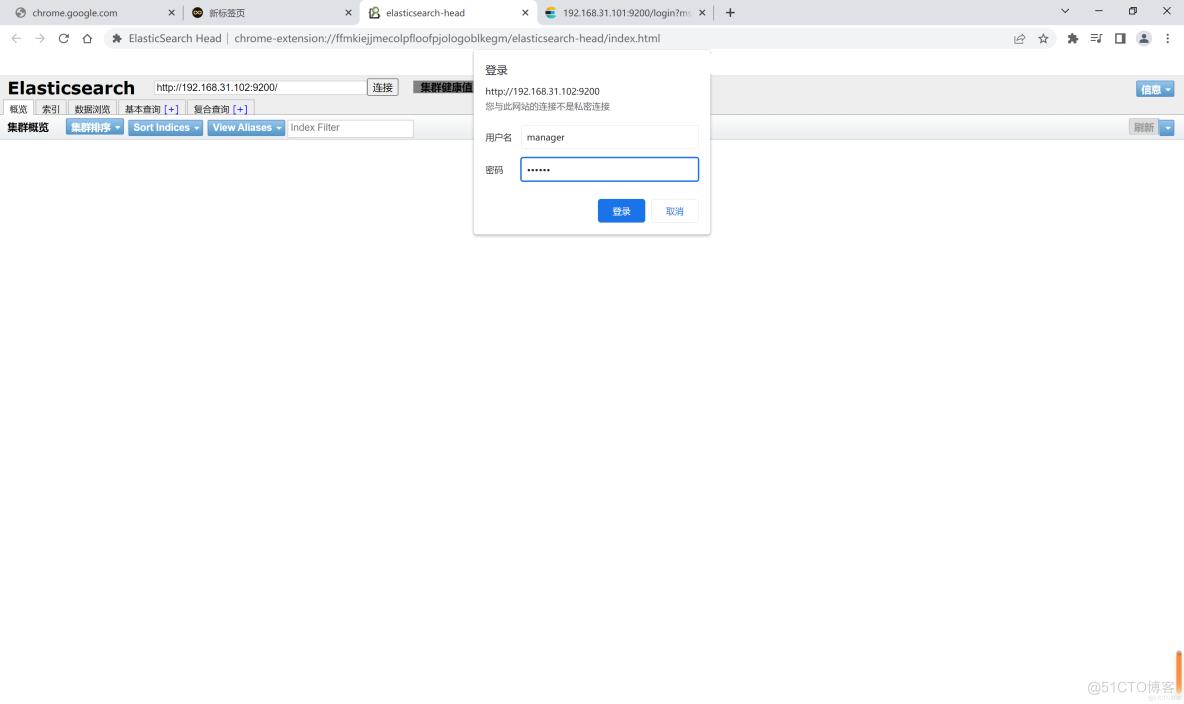

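Log in to an ES node through the elasticsearch-head extension as manager with password 123456.

As the elasticsearch user, configure es1 as both a master node and a data node, then switch to root and restart the elasticsearch process: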

elasticsearch@es1:/apps/elasticsearch/config$ vim elasticsearch.yml

......other settings omitted......

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.keystore.path: /apps/elasticsearch/config/certs/es1.example.com.p12

xpack.security.transport.ssl.truststore.path: /apps/elasticsearch/config/certs/es1.example.com.p12

node.roles: [ master,data ]

elasticsearch@es1:/apps/elasticsearch/config$ su - root #switch to the root user

Password:

root@es1:~# systemctl restart elasticsearch.service #restart the elasticsearch process

root@es1:~# ps aux|grep java #check that the elasticsearch process started normally

elastic+ 4429 6.1 2.3 2602252 92864 ? Ssl 22:47 0:02 /apps/elasticsearch/jdk/bin/java -Xms4m -Xmx64m -XX:+UseSerialGC -Dcli.name=server -Dcli.script=/apps/elasticsearch/bin/elasticsearch -Dcli.libs=lib/tools/server-cli -Des.path.home=/apps/elasticsearch -Des.path.conf=/apps/elasticsearch/config -Des.distribution.type=tar -cp /apps/elasticsearch/lib/*:/apps/elasticsearch/lib/cli-launcher/* org.elasticsearch.launcher.CliToolLauncher --quiet

elastic+ 4488 76.2 58.7 4744928 2341744 ? Sl 22:47 0:25 /apps/elasticsearch-8.5.1/jdk/bin/java -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -Djava.security.manager=allow -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j2.formatMsgNoLookups=true -Djava.locale.providers=SPI,COMPAT --add-opens=java.base/java.io=ALL-UNNAMED -XX:+UseG1GC -Djava.io.tmpdir=/tmp/elasticsearch-6269804536900529910 -XX:+HeapDumpOnOutOfMemoryError -XX:+ExitOnOutOfMemoryError -XX:HeapDumpPath=data -XX:ErrorFile=logs/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m -Xms1944m -Xmx1944m -XX:MaxDirectMemorySize=1019215872 -XX:G1HeapRegionSize=4m -XX:InitiatingHeapOccupancyPercent=30 -XX:G1ReservePercent=15 -Des.distribution.type=tar --module-path /apps/elasticsearch-8.5.1/lib --add-modules=jdk.net -m org.elasticsearch.server/org.elasticsearch.bootstrap.Elasticsearch

root 4550 0.0 0.0 7004 2116 pts/0 R+ 22:48 0:00 grep --color=auto java

root@es1:~# cd /data/eslogs

root@es1:/data/eslogs# tail -f /data/eslogs/my-es-application.log #follow the es1 node log

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144) ~[?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642) ~[?:?]

at java.lang.Thread.run(Thread.java:1589) ~[?:?]

[2023-01-18T22:48:01,434][INFO ][o.e.c.s.ClusterApplierService] [node-1] master node changed {previous [], current [{node-3}{OWwaojELR32cGqvhnjOHUg}{Zg4Jx5chSmaHQCfNmMXelg}{node-3}{192.168.31.103}{192.168.31.103:9300}{cdfhilmrstw}]}, added {{node-2}{KuFIWKRaQu2fnUwxBWVH1Q}{cfx61Z6BRramXZ-WpkYZbg}{node-2}{192.168.31.102}{192.168.31.102:9300}{cdfhilmrstw}, {node-3}{OWwaojELR32cGqvhnjOHUg}{Zg4Jx5chSmaHQCfNmMXelg}{node-3}{192.168.31.103}{192.168.31.103:9300}{cdfhilmrstw}}, term: 12, version: 130, reason: ApplyCommitRequest{term=12, version=130, sourceNode={node-3}{OWwaojELR32cGqvhnjOHUg}{Zg4Jx5chSmaHQCfNmMXelg}{node-3}{192.168.31.103}{192.168.31.103:9300}{cdfhilmrstw}{ml.allocated_processors_double=4.0, ml.machine_memory=2035961856, xpack.installed=true, ml.max_jvm_size=1019215872, ml.allocated_processors=4}}

[2023-01-18T22:48:01,627][INFO ][o.e.x.s.a.TokenService ] [node-1] refresh keys

[2023-01-18T22:48:01,742][INFO ][o.e.x.s.a.TokenService ] [node-1] refreshed keys

[2023-01-18T22:48:01,770][INFO ][o.e.l.LicenseService ] [node-1] license [11570d8d-30aa-4cc8-a014-8b0bb619d38e] mode [basic] - valid

[2023-01-18T22:48:01,772][INFO ][o.e.x.s.a.Realms ] [node-1] license mode is [basic], currently licensed security realms are [reserved/reserved,file/default_file,native/default_native]

[2023-01-18T22:48:01,779][INFO ][o.e.h.AbstractHttpServerTransport] [node-1] publish_address {192.168.31.101:9200}, bound_addresses {[::]:9200}

[2023-01-18T22:48:01,779][INFO ][o.e.n.Node ] [node-1] started {node-1}{N4pAkC83RIyNAA2EZlQgOA}{fUqnjKOXR_SLr3xH7Ze26w}{node-1}{192.168.31.101}{192.168.31.101:9300}{dm}{xpack.installed=true}

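Cold-data nodes can generally use spinning (HDD) disks, while hot-data nodes generally use SSDs. By default a primary shard and its replica are never allocated to the same node. Various commands can be used to check the cluster state, for example listing the cluster's nodes and the elected master: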

root@es1:/apps/elasticsearch/config# curl -u manager:123456 -X GET http://192.168.31.101:9200/_cat/nodes?v

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.31.102 25 97 0 0.08 0.07 0.08 cdfhilmrstw - node-2

192.168.31.101 29 96 0 0.01 0.07 0.13 dm - node-1

192.168.31.103 22 96 0 0.38 0.22 0.14 cdfhilmrstw * node-3

root@es1:/apps/elasticsearch/config# curl -u manager:123456 -X GET http://192.168.31.101:9200/_cat/master?v

id host ip node

OWwaojELR32cGqvhnjOHUg 192.168.31.103 192.168.31.103 node-3
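The `node.role` column packs each node's roles into single letters, and the `*` in the `master` column marks the elected master. As an illustrative sketch, this Python snippet parses `_cat/nodes?v` text and expands the letters using the abbreviations listed in the Elasticsearch `_cat/nodes` documentation. It assumes no column value contains spaces, which holds for the output above. The function names are hypothetical.

```python
# Role abbreviations for the node.role column, as listed in the _cat/nodes docs
ROLE_LETTERS = {
    "c": "data_cold", "d": "data", "f": "data_frozen", "h": "data_hot",
    "i": "ingest", "l": "ml", "m": "master", "r": "remote_cluster_client",
    "s": "data_content", "t": "transform", "v": "voting_only", "w": "data_warm",
}

# Copy of the _cat/nodes?v output shown above
SAMPLE_NODES = """ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name
192.168.31.102 25 97 0 0.08 0.07 0.08 cdfhilmrstw - node-2
192.168.31.101 29 96 0 0.01 0.07 0.13 dm - node-1
192.168.31.103 22 96 0 0.38 0.22 0.14 cdfhilmrstw * node-3"""


def parse_cat_nodes(text):
    """Parse _cat/nodes?v text into one dict per node, with roles expanded."""
    lines = [ln for ln in text.strip().splitlines() if ln.strip()]
    header = lines[0].split()
    nodes = []
    for line in lines[1:]:
        row = dict(zip(header, line.split()))
        letters = row["node.role"]
        # "-" means a coordinating-only node with no roles
        row["roles"] = [] if letters == "-" else [ROLE_LETTERS.get(c, c) for c in letters]
        nodes.append(row)
    return nodes


def elected_master(nodes):
    """Name of the node flagged with '*' in the master column, or None."""
    for node in nodes:
        if node["master"] == "*":
            return node["name"]
    return None
```

With this sample, node-1 expands to exactly `data` and `master`, matching `node.roles: [ master,data ]` in its elasticsearch.yml, while node-2 and node-3 still carry the full default role set.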

Check cluster health:

root@es1:/apps/elasticsearch/config# curl -u manager:123456 -X GET http://192.168.31.101:9200/_cat/health?v

epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent

1674054536 15:08:56 my-es-application green 3 3 4 2 0 0 0 0 - 100.0%
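For automated checks it helps to turn this single-row table into named fields. A minimal Python sketch, assuming the `?v` header row is present and that "healthy" means green, all expected nodes joined, and no unassigned or initializing shards (that definition is an assumption, not an Elasticsearch rule):

```python
# Copy of the _cat/health?v output shown above
SAMPLE_HEALTH = """epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent
1674054536 15:08:56 my-es-application green 3 3 4 2 0 0 0 0 - 100.0%"""


def parse_cat_health(text):
    """Turn the header + single data row of _cat/health?v into a dict."""
    lines = [ln for ln in text.strip().splitlines() if ln.strip()]
    header, values = lines[0].split(), lines[1].split()
    return dict(zip(header, values))


def looks_healthy(health, expected_nodes):
    """Green status, every expected node present, nothing unassigned or initializing."""
    return (
        health["status"] == "green"
        and int(health["node.total"]) == expected_nodes
        and int(health["unassign"]) == 0
        and int(health["init"]) == 0
    )
```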

Try creating an empty index from the es1 node:

root@es1:/apps/elasticsearch/config# curl -u manager:123456 -X PUT http://192.168.31.101:9200/test_index?pretty

{

"acknowledged" : true,

"shards_acknowledged" : true,

"index" : "test_index"

}
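The `PUT` above sends no body, so the index gets the default settings (the index details shown further down report 1 primary shard and 1 replica). To pin those values explicitly, the Create Index API also accepts a `settings` body. Below is a minimal Python sketch that only builds such a request with `urllib.request`. The helper name is hypothetical, and the address and credentials are this article's lab values.

```python
import base64
import json
import urllib.request

ES_URL = "http://192.168.31.101:9200"  # es1 in this lab


def create_index_request(index, shards=1, replicas=1, user="manager", password="123456"):
    """Build (but do not send) a PUT /<index> request with explicit shard/replica settings."""
    body = {"settings": {"number_of_shards": shards, "number_of_replicas": replicas}}
    req = urllib.request.Request(
        f"{ES_URL}/{index}?pretty",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
    )
    req.add_header("Content-Type", "application/json")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", "Basic " + token)
    return req
```

To actually send it, pass the request to `urllib.request.urlopen(...)`. Note that `number_of_shards` cannot be changed after creation, whereas `number_of_replicas` can.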

Check in the elasticsearch-head GUI that the index was created:

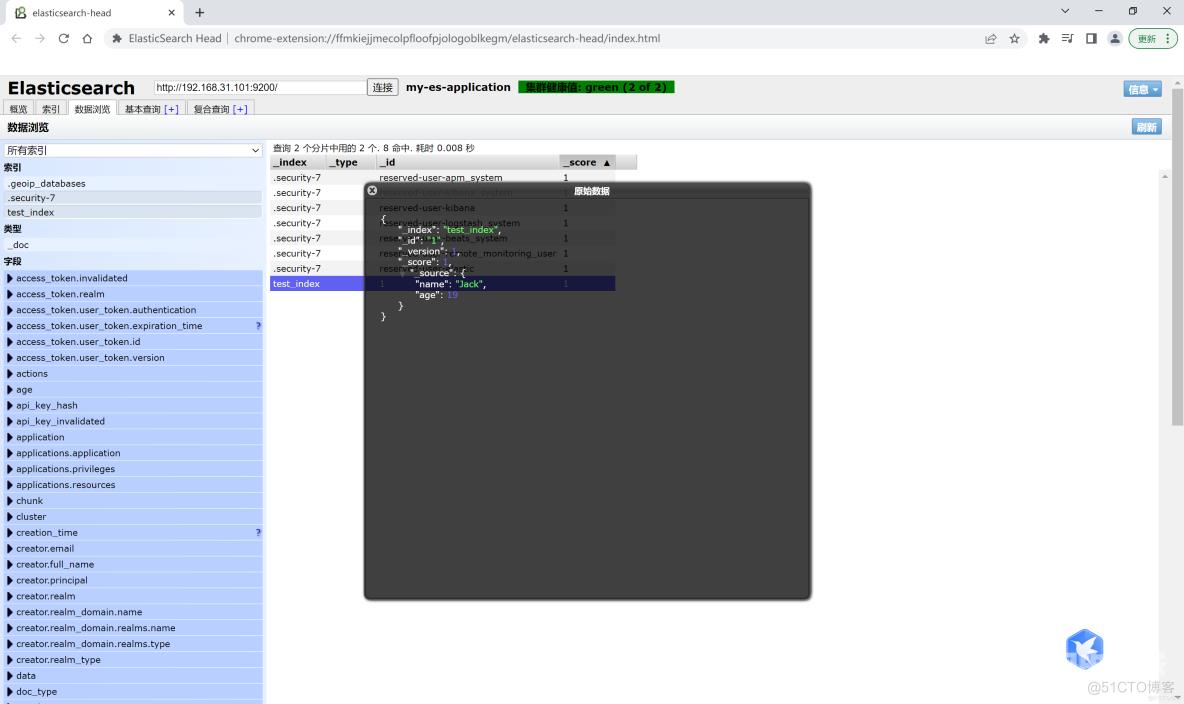

Index a document into the new index:

root@es1:~# curl -u manager:123456 -X POST "http://192.168.31.101:9200/test_index/_doc/1?pretty" -H 'Content-Type: application/json' -d' {"name": "Jack","age": 19}'

{

"_index" : "test_index",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"_seq_no" : 0,

"_primary_term" : 1

}

Check the details of the new index:

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200/test_index?pretty

{

"test_index" : {

"aliases" : { },

"mappings" : {

"properties" : {

"age" : {

"type" : "long"

},

"name" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

},

"settings" : {

"index" : {

"routing" : {

"allocation" : {

"include" : {

"_tier_preference" : "data_content"

}

}

},

"number_of_shards" : "1",

"provided_name" : "test_index",

"creation_date" : "1674141627767",

"number_of_replicas" : "1",

"uuid" : "hNwnsFDTRvuWxANsSeWCoQ",

"version" : {

"created" : "8050199"

}

}

}

}

}
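The numbers line up. `number_of_shards` is 1 and `number_of_replicas` is 1, so every write goes to one primary and one replica, which is why the indexing response above reported `"total" : 2`. Below, that arithmetic and the rule that copies of the same shard never share a node are expressed as a small Python sketch. The function names are illustrative.

```python
def shard_copies(number_of_shards, number_of_replicas):
    """Primary + replica copies an index occupies across the cluster."""
    return number_of_shards * (1 + number_of_replicas)


def assignable_replicas(number_of_replicas, data_nodes):
    """Copies of one shard never share a node, so at most data_nodes - 1 replicas
    per shard can be assigned; any extra stay unassigned (cluster turns yellow)."""
    return min(number_of_replicas, max(data_nodes - 1, 0))
```

On this 3-node cluster, raising `number_of_replicas` to 2 still lets every copy be placed, while 3 replicas would leave one copy of each shard unassigned.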

The index, now containing the uploaded document, can be inspected in elasticsearch-head:

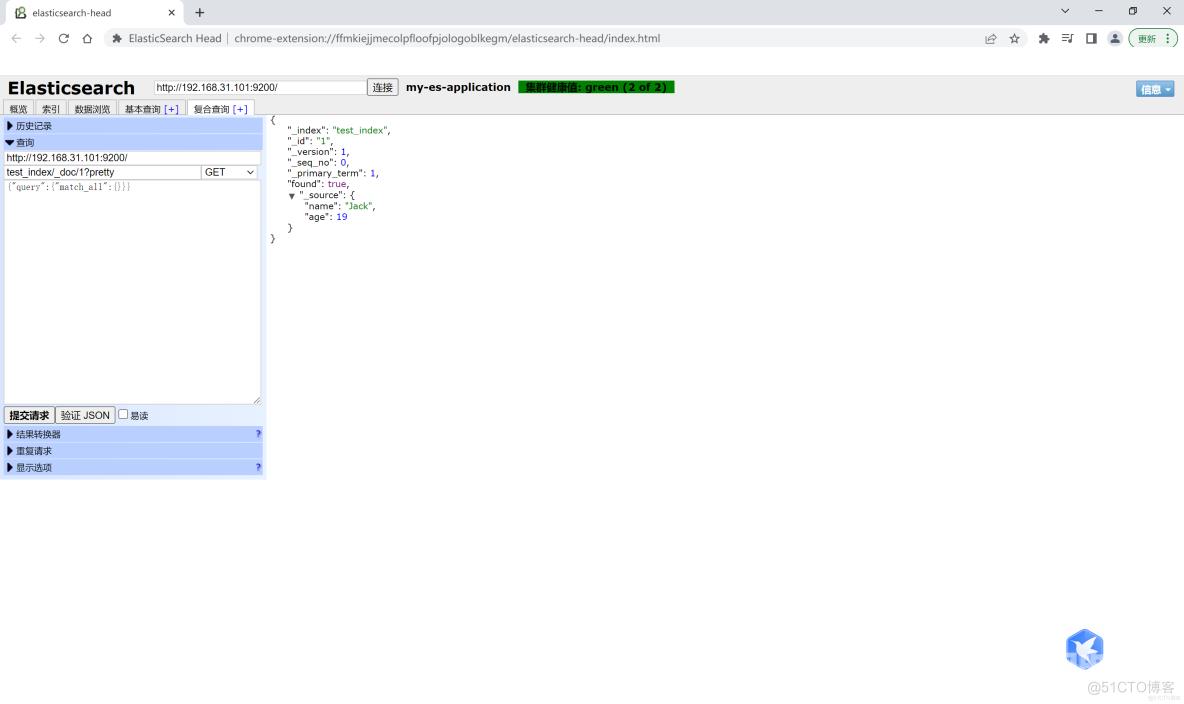

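In the elasticsearch-head GUI you can also select the GET method and click the “提交请求” (Submit Request) button to call an API path and check that the index data is correct (use this with care in production):

A note on production settings: raise cluster.max_shards_per_node (default 1000) to 1000000. Otherwise, production clusters easily hit a shard-limit error once the total number of open primary and replica shards would exceed max_shards_per_node multiplied by the number of data nodes. This is a persistent cluster-wide setting, so sending it to any one node is sufficient. Here it is sent to each of the 3 nodes in turn: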

root@es1:~# curl -u manager:123456 -X PUT http://192.168.31.101:9200/_cluster/settings -H 'Content-Type: application/json' -d'

{

"persistent" : {

"cluster.max_shards_per_node" : "1000000"

}

}'

{"acknowledged":true,"persistent":{"cluster":{"max_shards_per_node":"1000000"}},"transient":{}}root@es1:~#

root@es2:~# curl -u manager:123456 -X PUT http://192.168.31.102:9200/_cluster/settings -H 'Content-Type: application/json' -d'

{

"persistent" : {

"cluster.max_shards_per_node" : "1000000"

}

}'

{"acknowledged":true,"persistent":{"cluster":{"max_shards_per_node":"1000000"}},"transient":{}}root@es2:~#

root@es3:~# curl -u manager:123456 -X PUT http://192.168.31.103:9200/_cluster/settings -H 'Content-Type: application/json' -d'

{

"persistent" : {

"cluster.max_shards_per_node" : "1000000"

}

}'

{"acknowledged":true,"persistent":{"cluster":{"max_shards_per_node":"1000000"}},"transient":{}}root@es3:~#
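The limit is checked against the whole cluster, not per node. The following simplified Python sketch shows that check. It assumes the cluster-wide cap is `max_shards_per_node` times the number of data nodes and ignores frozen nodes, which have their own `cluster.max_shards_per_node.frozen` setting. The function names are hypothetical.

```python
DEFAULT_MAX_SHARDS_PER_NODE = 1000  # Elasticsearch default for cluster.max_shards_per_node


def cluster_shard_limit(data_nodes, max_shards_per_node=DEFAULT_MAX_SHARDS_PER_NODE):
    """Cluster-wide cap on open primary + replica shards (non-frozen data nodes)."""
    return max_shards_per_node * data_nodes


def index_fits(open_shards, new_primaries, new_replicas, data_nodes,
               max_shards_per_node=DEFAULT_MAX_SHARDS_PER_NODE):
    """True if creating an index with this layout stays within the limit."""
    needed = new_primaries * (1 + new_replicas)
    return open_shards + needed <= cluster_shard_limit(data_nodes, max_shards_per_node)
```

With the default of 1000 and 3 data nodes, the cap is 3000 open shards. The raised value of 1000000 effectively removes the guard, so shard counts then need to be watched by other means.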

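On the 3 nodes, set both the low and high disk watermarks to 95%. By default, at 85% disk usage (low watermark) Elasticsearch stops allocating new replica shards to the node, at 90% (high watermark) it starts relocating shards to other nodes, and at 95% (flood stage) every index with a shard on that node becomes read-only: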

root@es1:~# curl -u manager:123456 -X PUT http://192.168.31.101:9200/_cluster/settings -H 'Content-Type: application/json' -d'

{

"persistent": {

"cluster.routing.allocation.disk.watermark.low": "95%"

,

"cluster.routing.allocation.disk.watermark.high": "95%"

}

}'

{"acknowledged":true,"persistent":{"cluster":{"routing":{"allocation":{"disk":{"watermark":{"low":"95%","high":"95%"}}}}}},"transient":{}}root@es1:~#

root@es2:~# curl -u manager:123456 -X PUT http://192.168.31.102:9200/_cluster/settings -H 'Content-Type: application/json' -d'

{

"persistent": {

"cluster.routing.allocation.disk.watermark.low": "95%"

,

"cluster.routing.allocation.disk.watermark.high": "95%"

}

}'

{"acknowledged":true,"persistent":{"cluster":{"routing":{"allocation":{"disk":{"watermark":{"low":"95%","high":"95%"}}}}}},"transient":{}}root@es2:~#

root@es3:~# curl -u manager:123456 -X PUT http://192.168.31.103:9200/_cluster/settings -H 'Content-Type: application/json' -d'

{

"persistent": {

"cluster.routing.allocation.disk.watermark.low": "95%"

,

"cluster.routing.allocation.disk.watermark.high": "95%"

}

}'

{"acknowledged":true,"persistent":{"cluster":{"routing":{"allocation":{"disk":{"watermark":{"low":"95%","high":"95%"}}}}}},"transient":{}}root@es3:~#
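The three watermarks act as escalating stages. The following Python sketch classifies a node's disk usage against them. It assumes the percentage form of the settings (Elasticsearch also accepts absolute byte values, which this ignores) and treats a watermark as exceeded only when usage is strictly above it. The function name is hypothetical.

```python
def disk_stage(used_percent, low=85.0, high=90.0, flood_stage=95.0):
    """Return the highest watermark that a node's disk usage is above."""
    if used_percent > flood_stage:
        return "flood_stage"  # indices with a shard on this node get a read-only block
    if used_percent > high:
        return "high"  # shards are relocated away from this node
    if used_percent > low:
        return "low"  # no new replica shards are allocated to this node
    return "ok"
```

In this model, the settings above put low and high at the same value as the default flood stage (95%). As a result, a node moves straight from normal allocation to read-only indices without the earlier warning stages.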

1.7 Common Elasticsearch API command examples:

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200 #get basic cluster and version information

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200/_cat #list the operations supported by the _cat API

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200/_cat/master?v #get master information

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200/_cat/nodes?v #get node information

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200/_cat/health?v #get cluster health

root@es1:~# curl -u manager:123456 -X PUT http://192.168.31.101:9200/test_index?pretty #create index test_index; ?pretty pretty-prints the JSON response

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200/test_index?pretty #view the index

root@es1:~# curl -u manager:123456 -X POST "http://192.168.31.101:9200/test_index/_doc/1?pretty" -H 'Content-Type: application/json' -d' {"name": "Jack","age": 19}' #index a document

root@es1:~# curl -u manager:123456 -X GET "http://192.168.31.101:9200/test_index/_doc/1?pretty" #view the document

root@es1:~# curl -u manager:123456 -X PUT http://192.168.31.101:9200/test_index/_settings -H 'Content-Type: application/json' -d '{"number_of_replicas": 2}' #change the replica count; replicas can be adjusted dynamically

root@es1:~# curl -u manager:123456 -X GET http://192.168.31.101:9200/test_index/_settings?pretty #view the index settings

root@es1:~# curl -u manager:123456 -X POST "http://192.168.31.101:9200/test_index/_close" #close the index

root@es1:~# curl -u manager:123456 -X POST "http://192.168.31.101:9200/test_index/_open?pretty" #open the index

root@es1:~# curl -u manager:123456 -X DELETE "http://192.168.31.101:9200/test_index?pretty" #delete the index (run last; a deleted index can no longer be closed or opened)
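The reference commands above can also be driven from a script. The following minimal Python sketch builds the same requests with `urllib.request` in an order that works end to end. Because a deleted index can no longer be closed or opened, `_close` and `_open` run before `DELETE`. The address and credentials are this article's lab values, and the helper names are hypothetical.

```python
import base64
import json
import urllib.request

BASE_URL = "http://192.168.31.101:9200"  # es1 in this lab
AUTH = "Basic " + base64.b64encode(b"manager:123456").decode("ascii")

# (method, path, JSON body) in an order that works end to end
LIFECYCLE = [
    ("PUT", "/test_index", None),
    ("POST", "/test_index/_doc/1", {"name": "Jack", "age": 19}),
    ("GET", "/test_index/_doc/1", None),
    ("PUT", "/test_index/_settings", {"number_of_replicas": 2}),
    ("GET", "/test_index/_settings", None),
    ("POST", "/test_index/_close", None),
    ("POST", "/test_index/_open", None),
    ("DELETE", "/test_index", None),
]


def es_request(method, path, body=None):
    """Build (but do not send) an authenticated request to the cluster."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    req = urllib.request.Request(BASE_URL + path, data=data, method=method)
    req.add_header("Authorization", AUTH)
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


def build_plan():
    """One request per step of LIFECYCLE, in order."""
    return [es_request(m, p, b) for m, p, b in LIFECYCLE]
```

To execute the plan, loop over `build_plan()` and pass each request to `urllib.request.urlopen`. Keep in mind that `urlopen` raises `urllib.error.HTTPError` on 4xx/5xx responses, for example when `test_index` already exists.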