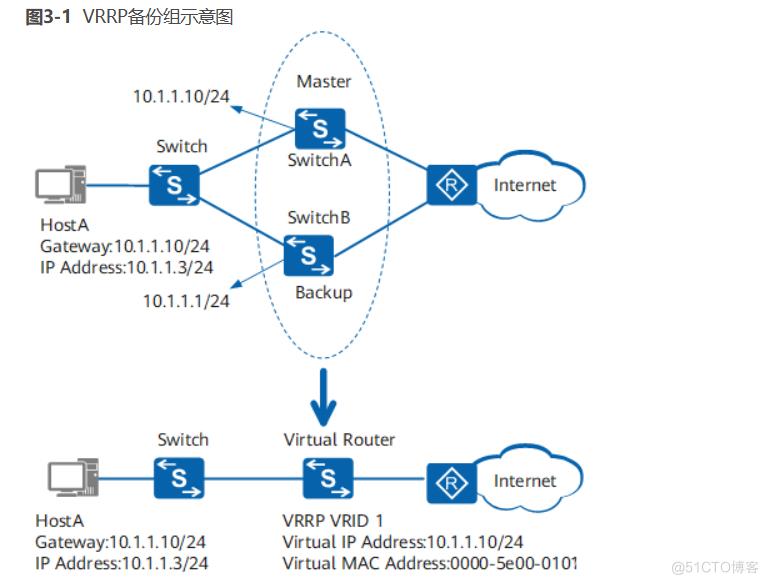

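VRRP: Virtual Router Redundancy Protocol

VRRP removes the single point of failure of a statically configured default gateway.

- Hardware implementations: routers and layer-3 switches

- Software implementation: keepalived

VRRP hardware implementation at the network layer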

https://support.huawei.com/enterprise/zh/doc/EDOC1000141382/19258d72/basicconcepts-of-vrrp

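VRRP terminology

- Virtual router

- Virtual router identifier: VRID (1-255), uniquely identifies a virtual router

- VIP: virtual IP

- VMAC: virtual MAC (00-00-5e-00-01-VRID)

- Physical router:

  - master: the active device

  - backup: the standby device

  - priority: decides which device becomes master

VRRP mechanisms

- Advertisements: periodic messages carrying heartbeat, priority, and so on

- Preemption: preemptive or non-preemptive

- Authentication:

  - none

  - simple text: pre-shared key

  - MD5

- Working mode:

  - master/backup: a single virtual router

  - master/master: master/backup for virtual router 1, backup/master for virtual router 2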
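The VMAC rule in the terminology list (fixed prefix plus the VRID as the last byte) can be sketched as a small shell helper. The function name `vrrp_vmac` is an illustrative assumption, not part of keepalived, and the output uses the colon notation that Linux tools print rather than the hyphenated form above.

```shell
#!/bin/bash
# Print the IPv4 VRRP virtual MAC for a VRID: 00:00:5e:00:01:<VRID as two hex digits>.
# vrrp_vmac is a hypothetical helper name used only for this illustration.
vrrp_vmac() {
    local vrid=$1
    # Accept only decimal integers in the valid VRID range 1..255
    if ! [[ $vrid =~ ^[0-9]+$ ]] || (( 10#$vrid < 1 || 10#$vrid > 255 )); then
        echo "VRID must be an integer between 1 and 255" >&2
        return 1
    fi
    printf '00:00:5e:00:01:%02x\n' "$((10#$vrid))"
}

# VRID 88 is the virtual_router_id used in the examples of this article
vrrp_vmac 88
```

For VRID 88 this prints `00:00:5e:00:01:58`, since 88 is 0x58.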

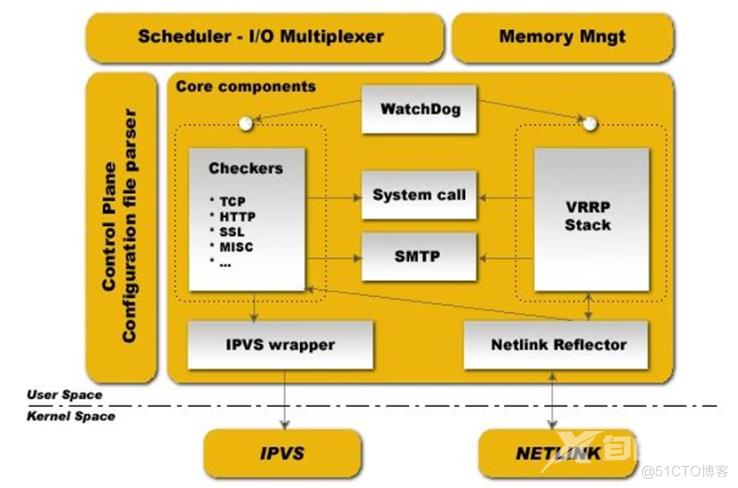

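Keepalived architecture

- A software implementation of the VRRP protocol, originally designed to make the IPVS service highly available

- keepalived is a general-purpose solution for stateless applications in high-availability clusters

- Features:

  - Moves the VIP between nodes using VRRP

  - Generates IPVS rules (predefined in the configuration file) on the node that holds the VIP

  - Health-checks each RS (real server) of the IPVS cluster

  - Calls user scripts through its script interface so that they can influence cluster behaviour, which is how services such as nginx and haproxy are supported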

Keepalived environment preparation

Package installation

[root@ubuntu2204 ~]#apt list keepalived

Listing... Done

keepalived/jammy 1:2.2.4-0.2build1 amd64

[root@ubuntu2204 ~]#apt update;apt -y install keepalived

#No configuration file is shipped by default, so the service does not start: /etc/keepalived/keepalived.conf is missing

[root@ubuntu2204 ~]#systemctl status keepalived.service

○ keepalived.service - Keepalive Daemon (LVS and VRRP)

Loaded: loaded (/lib/systemd/system/keepalived.service; enabled; vendor preset: enabled)

Active: inactive (dead)

Condition: start condition failed at Thu 2023-01-12 15:28:42 CST; 4s ago

└─ ConditionFileNotEmpty=/etc/keepalived/keepalived.conf was not met

Jan 12 15:26:47 ubuntu2204.wang.org systemd[1]: Condition check resulted in Keepalive Daemon (LVS and VRRP) being skipped.

Jan 12 15:28:42 ubuntu2204.wang.org systemd[1]: Condition check resulted in Keepalive Daemon (LVS and VRRP) being skipped.

#Create the configuration file from the sample template

[root@ubuntu2204 ~]#cp /usr/share/doc/keepalived/samples/keepalived.conf.sample /etc/keepalived/keepalived.conf

[root@ubuntu2204 ~]#systemctl start keepalived.service

[root@ubuntu2204 ~]#systemctl status keepalived.service

● keepalived.service - Keepalive Daemon (LVS and VRRP)

Loaded: loaded (/lib/systemd/system/keepalived.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2023-01-12 15:29:54 CST; 1s ago

Main PID: 2174 (keepalived)

Tasks: 3 (limit: 2196)

Memory: 4.5M

CPU: 40ms

CGroup: /system.slice/keepalived.service

├─2174 /usr/sbin/keepalived --dont-fork

├─2175 /usr/sbin/keepalived --dont-fork

└─2176 /usr/sbin/keepalived --dont-fork

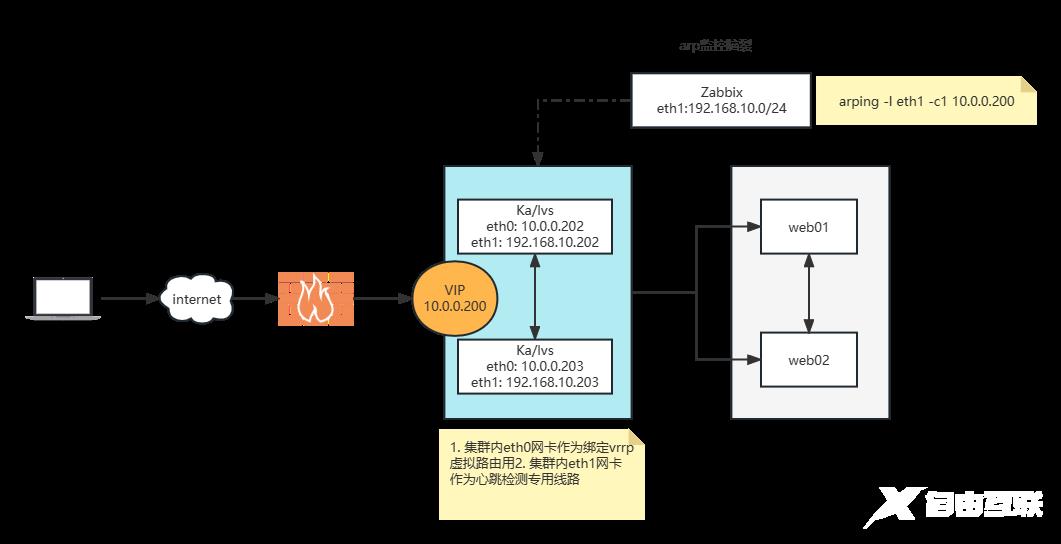

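Example: a master/slave, single-master Keepalived setup

Configure a master and a backup VRRP device, with a dedicated NIC for heartbeat traffic

#202: master

[root@ka1 ~]#cat /etc/keepalived/keepalived.conf

global_defs {

router_id ka1.mooreyxia.org #unique identifier of this keepalived host; the hostname is a good choice

vrrp_mcast_group4 224.1.1.1 #multicast group address

}

vrrp_instance VI_1 { #VRRP instance name, usually named after the service

state MASTER #initial state of this node in the virtual router, MASTER or BACKUP; per the original note, when priorities are equal the node that starts first takes the VIP

interface eth1 #physical interface this virtual router is bound to, used here for heartbeat traffic inside the cluster. The original note warns that sharing one interface with the VIP can contribute to split brain

virtual_router_id 88 #unique ID of the virtual router; all nodes of the same virtual router share it

priority 100 #priority of this physical node within the virtual router

advert_int 1 #VRRP advertisement interval, 1s by default

authentication { #authentication

auth_type PASS #AH is IPsec authentication (not recommended); PASS is a simple password (recommended)

auth_pass 123456 #pre-shared key, only the first 8 characters are used; must be identical on all keepalived nodes of the same virtual router

}

virtual_ipaddress { #virtual IPs; production setups may list dozens or hundreds of VIPs

10.0.0.200/24 dev eth0 lable eth0:1 #dev: NIC that carries the VIP, preferably not the one named by interface. The label keyword is misspelled here as captured (correct form: label eth0:1), so keepalived ignores it and the VIP is added without a label

}

}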

[root@ka1 ~]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f6:07:67 brd ff:ff:ff:ff:ff:ff

altname enp2s1

altname ens33

inet 10.0.0.202/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.200/24 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fef6:767/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f6:07:71 brd ff:ff:ff:ff:ff:ff

altname enp2s5

altname ens37

inet 192.168.10.202/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fef6:771/64 scope link

valid_lft forever preferred_lft forever

[root@ka1 ~]#systemctl restart keepalived.service

[root@ka1 ~]#hostname -I

10.0.0.202 10.0.0.200 192.168.10.202

#203: backup

[root@ka2 ~]#cat /etc/keepalived/keepalived.conf

global_defs {

router_id ka2.mooreyxia.org

vrrp_mcast_group4 224.1.1.1

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 88

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

}

[root@ka2 ~]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:bb:ef:c8 brd ff:ff:ff:ff:ff:ff

altname enp2s1

altname ens33

inet 10.0.0.203/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:febb:efc8/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:bb:ef:d2 brd ff:ff:ff:ff:ff:ff

altname enp2s5

altname ens37

inet 192.168.10.203/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:febb:efd2/64 scope link

valid_lft forever preferred_lft forever

[root@ka2 ~]#systemctl restart keepalived.service

[root@ka2 ~]#hostname -I

10.0.0.203 192.168.10.203

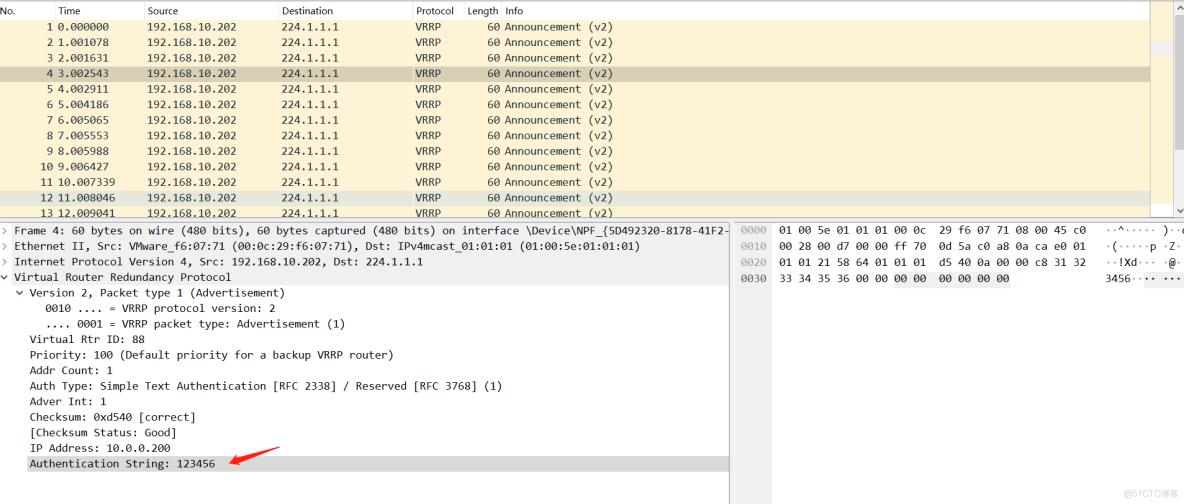

#Test: capture packets to watch the advertisements

[root@ka2 ~]#tcpdump -i eth1 -nn host 224.1.1.1

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), snapshot length 262144 bytes

20:47:55.724918 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

20:47:56.726320 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

20:47:57.726704 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

#Stop the service on the master (202)

[root@ka1 ~]#systemctl stop keepalived.service

#The advertisements change and the backup takes over; note the prio sequence 100 --> 0 --> 80

[root@ka2 ~]#tcpdump -i eth1 -nn host 224.1.1.1

20:48:55.768656 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

20:48:56.768491 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

20:48:57.567124 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 0, authtype simple, intvl 1s, length 20

20:48:58.255613 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:48:59.257236 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:49:00.257956 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:49:01.258241 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

#The VRRP address has now left the master and appears on the backup

[root@ka1 ~]#hostname -I

10.0.0.202 192.168.10.202

[root@ka2 ~]#hostname -I

10.0.0.203 10.0.0.200 192.168.10.203

#Bring the service on the master back

[root@ka1 ~]#systemctl restart keepalived.service

#The advertisements change again and the master takes over; note the prio change 80 --> 100

[root@ka2 ~]#tcpdump -i eth1 -nn host 224.1.1.1

20:53:06.452921 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:53:07.453781 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:53:08.454422 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:53:09.454788 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:53:10.455557 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20

20:53:10.522302 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

20:53:11.524605 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

20:53:12.525119 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

20:53:13.525798 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20

#The VRRP address is back on the master and gone from the backup

[root@ka1 ~]#hostname -I

10.0.0.202 10.0.0.200 192.168.10.202

[root@ka2 ~]#hostname -I

10.0.0.203 192.168.10.203
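The priority changes visible in the captures above can be pulled out of tcpdump output automatically. The following is a minimal sketch, assuming the one-line VRRPv2 format shown in this article; the function name `vrrp_transitions` is hypothetical.

```shell
#!/bin/bash
# Read tcpdump VRRP advertisement lines on stdin and print one line each time
# the advertising source address or its priority changes.
# vrrp_transitions is a hypothetical helper name used only for this illustration.
vrrp_transitions() {
    awk '/VRRPv2, Advertisement/ {
        src = $3                      # third field is the sender address
        prio = ""
        for (i = 1; i <= NF; i++)
            if ($i == "prio") { prio = $(i + 1); sub(/,$/, "", prio) }
        key = src " " prio
        if (key != last) { print $1, src, "prio", prio; last = key }
    }'
}

# Sample lines copied from the failover capture in this article
vrrp_transitions <<'EOF'
20:48:56.768491 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 100, authtype simple, intvl 1s, length 20
20:48:57.567124 IP 192.168.10.202 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 0, authtype simple, intvl 1s, length 20
20:48:58.255613 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20
20:48:59.257236 IP 192.168.10.203 > 224.1.1.1: VRRPv2, Advertisement, vrid 88, prio 80, authtype simple, intvl 1s, length 20
EOF
```

With the sample input it prints three lines, one per change (prio 100, prio 0, then 192.168.10.203 with prio 80). On a live system the same function could be fed from `tcpdump -l -i eth1 -nn host 224.1.1.1`.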

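Inspecting the protocol on the heartbeat link

*The cluster password is sent unencrypted and is visible in a packet capture, but it is still better than no authentication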

Enabling Keepalived logging

Example:

[root@ka2 ~]#cat /lib/systemd/system/keepalived.service |grep EnvironmentFile

EnvironmentFile=-/apps/keepalived/etc/sysconfig/keepalived

[root@ka2 ~]#vim /apps/keepalived/etc/sysconfig/keepalived

[root@ka2 ~]#cat /apps/keepalived/etc/sysconfig/keepalived

# Options for keepalived. See `keepalived --help' output and keepalived(8) and

# keepalived.conf(5) man pages for a list of all options. Here are the most

# common ones :

#

# --vrrp -P Only run with VRRP subsystem.

# --check -C Only run with Health-checker subsystem.

# --dont-release-vrrp -V Dont remove VRRP VIPs & VROUTEs on daemon stop.

# --dont-release-ipvs -I Dont remove IPVS topology on daemon stop.

# --dump-conf -d Dump the configuration data.

# --log-detail -D Detailed log messages.

# --log-facility -S 0-7 Set local syslog facility (default=LOG_DAEMON)

#

KEEPALIVED_OPTIONS="-D -S 6"

[root@ka2 ~]#vim /etc/rsyslog.conf

[root@ka2 ~]#cat /etc/rsyslog.conf |grep local6

local6.* /var/log/keepalived.log

[root@ka2 ~]#systemctl restart keepalived rsyslog

#Watch the log

[root@ka2 ~]#tail -f /var/log/keepalived.log

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: Registering Kernel netlink command channel

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: (/etc/keepalived/keepalived.conf: Line 17) Unknown configuration entry 'lable' for ip address - ignoring

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: (/etc/keepalived/keepalived.conf: Line 17) Unknown configuration entry 'eth0:1' for ip address - ignoring

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: Assigned address 192.168.10.203 for interface eth1

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: Assigned address fe80::20c:29ff:febb:efd2 for interface eth1

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: Registering gratuitous ARP shared channel

Jan 20 11:46:33 ka2 Keepalived[1467]: Startup complete

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: (VI_1) removing VIPs.

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: (VI_1) Entering BACKUP STATE (init)

Jan 20 11:46:33 ka2 Keepalived_vrrp[1468]: VRRP sockpool: [ifindex( 3), family(IPv4), proto(112), fd(12,13) multicast, address(224.1.1.1)]

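Splitting Keepalived configuration into sub-files

- In a complex production environment, keeping every cluster's settings in /etc/keepalived/keepalived.conf makes the file long and hard to manage. Per-cluster settings, such as each cluster's VIPs, can be moved into separate sub-configuration files

Example: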

[root@ka1 ~]#vim /etc/keepalived/keepalived.conf

[root@ka1 ~]#cat /etc/keepalived/keepalived.conf

global_defs {

router_id ka1.mooreyxia.org

vrrp_mcast_group4 224.1.1.1

}

include /etc/keepalived/conf.d/*.conf

[root@ka1 ~]#mkdir /etc/keepalived/conf.d/

[root@ka1 ~]#vim /etc/keepalived/conf.d/mooreyxia.org.conf

[root@ka1 ~]#cat /etc/keepalived/conf.d/mooreyxia.org.conf

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 88

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

}

[root@ka1 ~]#systemctl restart keepalived

[root@ka1 ~]#hostname -I

10.0.0.202 10.0.0.200 192.168.10.202

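Split brain

Split brain is the situation in which the master and the backup both hold the same VIP at the same time

Causes of split brain

- Heartbeat link failure

- Misconfigured firewall

- Keepalived misconfiguration

  - wrong interface, mismatched virtual_router_id, mismatched password, identical priorities

Example: detecting split brain

#Simulate it on the backup node with: iptables -A INPUT -s <heartbeat IP of the master> -j DROP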

[root@ka2 ~]#iptables -A INPUT -s 192.168.10.202 -j DROP

#Both the master and the backup now hold the VRRP address

[root@ka1 ~]#hostname -I

10.0.0.202 10.0.0.200 192.168.10.202

[root@ka2 ~]#hostname -I

10.0.0.203 10.0.0.200 192.168.10.203

#arping shows two MAC addresses answering for the VRRP address

[root@rocky8 ~]#arping 10.0.0.200

ARPING 10.0.0.200 from 10.0.0.8 eth0

Unicast reply from 10.0.0.200 [00:0C:29:BB:EF:C8] 1.081ms

Unicast reply from 10.0.0.200 [00:0C:29:F6:07:67] 1.104ms
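The arping check above can be turned into a simple split-brain probe by counting how many distinct MAC addresses answer for the VIP. This is a minimal sketch that only parses text in the reply format shown above; the function name `count_vip_macs` is an assumption made for illustration.

```shell
#!/bin/bash
# Count the distinct MAC addresses found in arping reply lines read from stdin.
# More than one MAC answering for the same VIP suggests split brain.
# count_vip_macs is a hypothetical helper name used only for this illustration.
count_vip_macs() {
    grep -oE '\[[0-9A-Fa-f:]{17}\]' | tr 'a-f' 'A-F' | sort -u | wc -l | tr -d ' '
}

# Reply lines copied from the arping output in this article
macs=$(count_vip_macs <<'EOF'
Unicast reply from 10.0.0.200 [00:0C:29:BB:EF:C8] 1.081ms
Unicast reply from 10.0.0.200 [00:0C:29:F6:07:67] 1.104ms
EOF
)

if [ "$macs" -gt 1 ]; then
    echo "possible split brain: $macs MACs answer for the VIP"
else
    echo "ok: $macs MAC answers for the VIP"
fi
```

In a monitoring job the heredoc would be replaced by real output, for example `arping -c 3 10.0.0.200 | count_vip_macs`, run from a third host on the VIP's network.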

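Non-preemptive mode: nopreempt

- Preemptive mode (preempt) is the default: when the higher-priority host comes back online it takes the master role back from the lower-priority host, which causes a brief network disruption. Non-preemptive mode (nopreempt) is recommended: the recovered higher-priority host does not take the master role back

- Note: in non-preemptive mode, if the original host goes down and the VIP moves to the new host, and the new host later goes down too (its keepalived service stops), the VIP moves back to the repaired original host

- However, if only the application on the new host fails while its keepalived service keeps running, the original host does not take over and the VIP stays on the new host. Non-preemptive mode therefore gives a one-time failover from the master to the backup; for that kind of failure the repaired original master cannot provide high availability again

Note: to disable VIP preemption, state must be set to BACKUP on every Keepalived server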

#ka1

[root@ka1 ~]#vim /etc/keepalived/conf.d/mooreyxia.org.conf

[root@ka1 ~]#cat /etc/keepalived/conf.d/mooreyxia.org.conf

vrrp_instance VI_1 {

state BACKUP #BACKUP on every node

interface eth1

virtual_router_id 88

priority 100

advert_int 1

nopreempt #add this line to enable non-preemptive mode

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

}

[root@ka1 ~]#systemctl restart keepalived

#ka2: the backup server does not need nopreempt

[root@ka2 ~]#cat /etc/keepalived/conf.d/mooreyxia.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 88

priority 80 #lower priority

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

}

#Test

#Normal operation

[root@ka1 ~]#hostname -I

10.0.0.202 10.0.0.200 192.168.10.202

[root@ka2 ~]#hostname -I

10.0.0.203 192.168.10.203

#Stop the service on ka1: the VIP moves from the master to the backup

[root@ka1 ~]#systemctl stop keepalived

[root@ka1 ~]#hostname -I

10.0.0.202 192.168.10.202

[root@ka2 ~]#hostname -I

10.0.0.203 10.0.0.200 192.168.10.203

#Bring the service on ka1 back: the VIP does not move

[root@ka1 ~]#systemctl start keepalived

[root@ka1 ~]#hostname -I

10.0.0.202 192.168.10.202

[root@ka2 ~]#hostname -I

10.0.0.203 10.0.0.200 192.168.10.203

#If the service on the backup node now goes down, the VIP still moves back to the master node

[root@ka2 ~]#systemctl stop keepalived

[root@ka2 ~]#hostname -I

10.0.0.203 192.168.10.203

[root@ka1 ~]#hostname -I

10.0.0.202 10.0.0.200 192.168.10.202

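Delayed preemption: preempt_delay

- With delayed preemption, the higher-priority host does not take the VIP back immediately after it recovers; it waits for preempt_delay seconds first (0 by default, meaning no delay; the allowed range is 0 to 1000)

#Change the preemption mode on the master node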

[root@ka1 ~]#vim /etc/keepalived/conf.d/mooreyxia.org.conf

[root@ka1 ~]#cat /etc/keepalived/conf.d/mooreyxia.org.conf

vrrp_instance VI_1 {

state BACKUP #BACKUP on every node

interface eth1

virtual_router_id 88

priority 100

advert_int 1

preempt_delay 60 #add this line: wait 60 seconds before preempting

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

}

[root@ka1 ~]#systemctl restart keepalived

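VIP unicast configuration

- By default keepalived hosts advertise to each other over multicast, which adds traffic for every host on the segment; switching to unicast reduces it

- In addition, some public clouds do not support multicast, and unicast works there

Note: unicast cannot be used when vrrp_strict is enabled

Example:

#Switch the heartbeat network to unicast

#Master node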

[root@ka1 ~]#vim /etc/keepalived/conf.d/mooreyxia.org.conf

[root@ka1 ~]#cat /etc/keepalived/conf.d/mooreyxia.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 88

priority 100

advert_int 1

nopreempt

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

unicast_src_ip 192.168.10.202 #this host's IP; unicast takes precedence over multicast

unicast_peer {

192.168.10.203 #add the IPs of the other nodes if there are more keepalived hosts

}

}

[root@ka1 ~]#systemctl restart keepalived

#Backup node

[root@ka2 ~]#vim /etc/keepalived/conf.d/mooreyxia.org.conf

[root@ka2 ~]#cat /etc/keepalived/conf.d/mooreyxia.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 88

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

unicast_src_ip 192.168.10.203

unicast_peer{

192.168.10.202

}

}

[root@ka2 ~]#systemctl restart keepalived

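Keepalived notification scripts

- Notification script types

#Script run when this node becomes master

notify_master <STRING>|<QUOTED-STRING>

#Script run when this node becomes backup

notify_backup <STRING>|<QUOTED-STRING>

#Script run when this node enters the fault state

notify_fault <STRING>|<QUOTED-STRING>

#Generic notification hook: a single script handles all three transitions above

notify <STRING>|<QUOTED-STRING>

#Script run when VRRP is stopped

notify_stop <STRING>|<QUOTED-STRING>

#How to call the scripts: add the following lines at the end of the vrrp_instance VI_1 block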

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"
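For the generic `notify` hook, keepalived appends four arguments to the script: the object type (GROUP or INSTANCE), its name, the target state, and the priority. The sketch below only formats those arguments into a log line; the function name `format_transition` and the message wording are assumptions made for illustration.

```shell
#!/bin/bash
# Format the four arguments that keepalived passes to a generic notify script:
#   $1 = GROUP or INSTANCE, $2 = name, $3 = target state, $4 = priority
# format_transition is a hypothetical helper name used only for this illustration.
format_transition() {
    local type=$1 name=$2 state=$3 priority=$4
    printf '%s %s entered %s state (priority %s)\n' "$type" "$name" "$state" "$priority"
}

# Example call with the values keepalived would pass for VI_1 becoming master.
# In a real notify script this would be: format_transition "$@"
format_transition INSTANCE VI_1 MASTER 100
```

A script built this way would be referenced once with `notify "/path/to/script"` instead of the three per-state directives, with the path chosen by the administrator.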

- A notification script for Keepalived state changes

Example:

#Configure the following on every keepalived node

[root@ka1 ~]#cat /etc/keepalived/notify.sh

#!/bin/bash

contact='mooreyxia@gmail.com'

email_send='xxxxx@qq.com'

email_passwd='xxxxx'

email_smtp_server='smtp.qq.com'

. /etc/os-release

msg_error() {

echo -e "\033[1;31m$1\033[0m"

}

msg_info() {

echo -e "\033[1;32m$1\033[0m"

}

msg_warn() {

echo -e "\033[1;33m$1\033[0m"

}

color () {

RES_COL=60

MOVE_TO_COL="echo -en \\033[${RES_COL}G"

SETCOLOR_SUCCESS="echo -en \\033[1;32m"

SETCOLOR_FAILURE="echo -en \\033[1;31m"

SETCOLOR_WARNING="echo -en \\033[1;33m"

SETCOLOR_NORMAL="echo -en \E[0m"

echo -n "$1" && $MOVE_TO_COL

echo -n "["

if [ $2 = "success" -o $2 = "0" ] ;then

${SETCOLOR_SUCCESS}

echo -n $" OK "

elif [ $2 = "failure" -o $2 = "1" ] ;then

${SETCOLOR_FAILURE}

echo -n $"FAILED"

else

${SETCOLOR_WARNING}

echo -n $"WARNING"

fi

${SETCOLOR_NORMAL}

echo -n "]"

echo

}

install_sendemail () {

if [[ $ID =~ rhel|centos|rocky ]];then

rpm -q sendemail &> /dev/null || yum install -y sendemail

elif [ "$ID" = 'ubuntu' ];then

dpkg -l |grep -q sendemail || { apt update; apt install -y libio-socket-ssl-perl libnet-ssleay-perl sendemail ; }

else

color "Unsupported operating system, exiting!" 1

exit 1

fi

}

send_email () {

local email_receive="$1"

local email_subject="$2"

local email_message="$3"

#quote every argument so that subjects and bodies containing spaces are passed intact

sendemail -f "$email_send" -t "$email_receive" -u "$email_subject" -m "$email_message" -s "$email_smtp_server" -o message-charset=utf-8 -o tls=yes -xu "$email_send" -xp "$email_passwd"

[ $? -eq 0 ] && color "Mail sent successfully!" 0 || color "Failed to send mail!" 1

}

notify() {

if [[ $1 =~ ^(master|backup|fault)$ ]];then

mailsubject="$(hostname) to be $1, vip floating"

mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"

send_email "$contact" "$mailsubject" "$mailbody"

else

echo "Usage: $(basename $0) {master|backup|fault}"

exit 1

fi

}

install_sendemail

notify "$1"

[root@ka1 ~]#chmod a+x /etc/keepalived/notify.sh

[root@ka1 ~]#vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

......

virtual_ipaddress {

10.0.0.200/24 dev eth0 label eth0:1

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

#Simulate a master failure

[root@ka1 ~]#killall keepalived

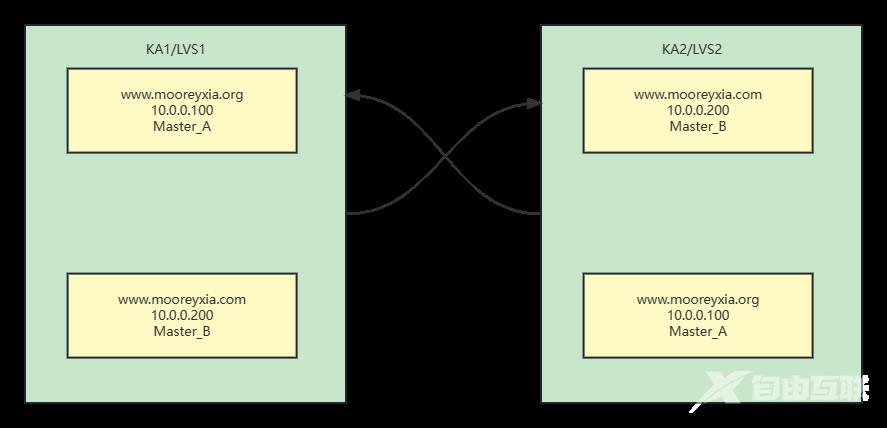

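Master/Master dual-master Keepalived setup

- Two or more VIPs run on different keepalived servers, so the servers serve web traffic in parallel and server resources are used better

- Note: in a multi-master setup a VIP failover adds load to the surviving server, so watch its capacity limits

#On top of the existing VIP, add a second VIP whose master/backup roles are the reverse of the first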

#ka1

[root@ka1 ~]#vim /etc/keepalived/conf.d/mooreyxia.com.conf

[root@ka1 ~]#cat /etc/keepalived/conf.d/mooreyxia.com.conf

vrrp_instance VI_2 {

state BACKUP

interface eth1

virtual_router_id 89

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 654321

}

virtual_ipaddress {

10.0.0.100/24 dev eth0 lable eth0:2

}

unicast_src_ip 192.168.10.202

unicast_peer{

192.168.10.203

}

}

[root@ka1 ~]#systemctl restart keepalived

#ka2

[root@ka2 ~]#vim /etc/keepalived/conf.d/mooreyxia.com.conf

[root@ka2 ~]#cat /etc/keepalived/conf.d/mooreyxia.com.conf

vrrp_instance VI_2 {

state MASTER

interface eth1

virtual_router_id 89

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 654321

}

virtual_ipaddress {

10.0.0.100/24 dev eth0 lable eth0:2

}

unicast_src_ip 192.168.10.203

unicast_peer{

192.168.10.202

}

}

[root@ka2 ~]#systemctl restart keepalived

#Test

[root@ka1 conf.d]#hostname -I

10.0.0.202 10.0.0.200 192.168.10.202

[root@ka2 conf.d]#hostname -I

10.0.0.203 10.0.0.100 192.168.10.203

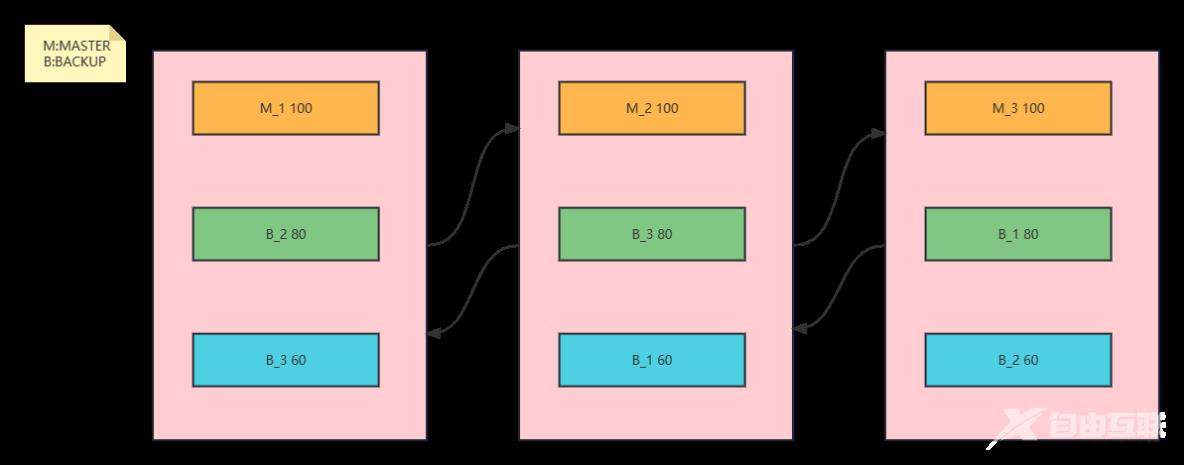

Multi-master setup

- Three nodes with three masters and six backups

- In practice, even with more than three nodes, one master role and two backup roles per server are enough

- Note: in a multi-master setup a VIP failover adds load to another server, so watch its capacity limits

Example:

#Node 1 (ka1):

virtual_router_id 1 , Vrrp instance 1 , MASTER, priority 100

virtual_router_id 2 , Vrrp instance 2 , BACKUP, priority 80

virtual_router_id 3 , Vrrp instance 3 , BACKUP, priority 60

#Node 2 (ka2):

virtual_router_id 1 , Vrrp instance 1 , BACKUP, priority 60

virtual_router_id 2 , Vrrp instance 2 , MASTER, priority 100

virtual_router_id 3 , Vrrp instance 3 , BACKUP, priority 80

#Node 3 (ka3):

virtual_router_id 1 , Vrrp instance 1 , BACKUP, priority 80

virtual_router_id 2 , Vrrp instance 2 , BACKUP, priority 60

virtual_router_id 3 , Vrrp instance 3 , MASTER, priority 100
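The priority table above follows a rotation: each node is master for the instance with its own number and loses 20 points for every step the instance number is ahead of the node number, wrapping around. A minimal sketch of that rule follows; the function name `instance_priority` and the fixed step of 20 are assumptions read off the table, so the formula only suits a small number of nodes.

```shell
#!/bin/bash
# Priority of instance <inst> on node <node> in an <n>-node rotation:
# 100 on the node's own instance, minus 20 per step of (inst - node) modulo n.
# instance_priority is a hypothetical helper name used only for this illustration.
instance_priority() {
    local node=$1 inst=$2 n=$3
    local dist=$(( ((inst - node) % n + n) % n ))   # keep the remainder non-negative
    echo $(( 100 - 20 * dist ))
}

# Reproduce the three-node table from this article
for node in 1 2 3; do
    for inst in 1 2 3; do
        prio=$(instance_priority "$node" "$inst" 3)
        role=BACKUP
        [ "$prio" -eq 100 ] && role=MASTER
        echo "ka$node virtual_router_id $inst $role priority $prio"
    done
done
```

The loop prints nine lines that match the ka1, ka2, and ka3 listings above.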

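Sync groups

- In the LVS NAT model the VIP and the DIP must fail over together, which requires a sync group

Example:

vrrp_sync_group VG_1 {

group {

VI_1 # name of vrrp_instance (below)

VI_2 # One for each moveable IP

}

}

vrrp_instance VI_1 {

interface eth0 #outline only, other settings omitted

virtual_ipaddress {

<VIP>

}

}

vrrp_instance VI_2 {

interface eth1 #outline only, other settings omitted

virtual_ipaddress {

<DIP>

}

}

Single-master LVS-DR mode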

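- The multi-master LVS-DR model can be built the same way, by making the keepalived nodes master and backup for each other

Virtual server configuration

virtual_server IP port { #VIP and port

delay_loop <INT> #interval between health checks of the back-end servers

lb_algo rr|wrr|lc|wlc|lblc|sh|dh #scheduling algorithm

lb_kind NAT|DR|TUN #cluster type, must be uppercase

persistence_timeout <INT> #persistent connection timeout

protocol TCP|UDP|SCTP #service protocol, usually TCP

sorry_server <IPADDR> <PORT> #fallback server used when all RSs are down

real_server <IPADDR> <PORT> { #IP and port of the RS

weight <INT> #RS weight

notify_up <STRING>|<QUOTED-STRING> #script run when the RS comes up

notify_down <STRING>|<QUOTED-STRING> #script run when the RS goes down

HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK { ... } #health check method for this RS

}

}

#Note: closing braces must be written on separate lines; two on one line, such as }}, cause an error

Example: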

#Prepare two back-end RS hosts

[root@rocky8 ~]#curl 10.0.0.204

web01 www.mooreyxia.org 10.0.0.204

[root@rocky8 ~]#curl 10.0.0.28

web02 www.mooreyxia.org 10.0.0.28

#Use a script to bind the VIP to the lo interface of the web servers

[root@web01 ~]#cat lvs_dr_rs.sh

#!/bin/bash

#Author:mooreyxia

#Date:2023-01-20

vip=10.0.0.200

mask='255.255.255.255'

dev=lo:1

#rpm -q httpd &> /dev/null || yum -y install httpd &>/dev/null

#service httpd start &> /dev/null && echo "The httpd Server is Ready!"

#echo "`hostname -I`" > /var/www/html/index.html

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig $dev $vip netmask $mask #broadcast $vip up

#route add -host $vip dev $dev

echo "The RS Server is Ready!"

;;

stop)

ifconfig $dev down

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "The RS Server is Canceled!"

;;

*)

echo "Usage: $(basename $0) start|stop"

exit 1

;;

esac

#web01

[root@web01 ~]#bash lvs_dr_rs.sh start

The RS Server is Ready!

[root@web01 ~]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 10.0.0.200/32 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:e3:99:33 brd ff:ff:ff:ff:ff:ff

altname enp2s1

altname ens33

inet 10.0.0.204/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fee3:9933/64 scope link

valid_lft forever preferred_lft forever

#web02

[root@web02 ~]#bash lvs_dr_rs.sh start

The RS Server is Ready!

[root@web02 ~]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 10.0.0.200/32 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:d3:0d:20 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.28/24 brd 10.0.0.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fed3:d20/64 scope link

valid_lft forever preferred_lft forever

Configure keepalived

#Configuration of the ka1 node

[root@ka1 conf.d]#vim /etc/keepalived/conf.d/mooreyxia.org.conf

[root@ka1 conf.d]#cat /etc/keepalived/conf.d/mooreyxia.org.conf

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 88

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

unicast_src_ip 192.168.10.202

unicast_peer{

192.168.10.203

}

}

virtual_server 10.0.0.200 80 {

delay_loop 3

lb_algo wrr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 10.0.0.204 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 10.0.0.28 80 {

weight 2

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

[root@ka1 conf.d]#systemctl restart keepalived

[root@ka1 conf.d]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f6:07:67 brd ff:ff:ff:ff:ff:ff

altname enp2s1

altname ens33

inet 10.0.0.202/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.0.0.200/24 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fef6:767/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:f6:07:71 brd ff:ff:ff:ff:ff:ff

altname enp2s5

altname ens37

inet 192.168.10.202/24 brd 192.168.10.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fef6:771/64 scope link

valid_lft forever preferred_lft forever

#Configuration of the ka2 node

[root@ka2 conf.d]#vim mooreyxia.org.conf

[root@ka2 conf.d]#cat mooreyxia.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 88

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 lable eth0:1

}

unicast_src_ip 192.168.10.203

unicast_peer{

192.168.10.202

}

}

virtual_server 10.0.0.200 80 {

delay_loop 3

lb_algo wrr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 10.0.0.204 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 10.0.0.28 80 {

weight 2

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

[root@ka2 conf.d]#systemctl restart keepalived

#Inspect the LVS scheduling table

[root@ka1 conf.d]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.200:80 wrr

-> 10.0.0.28:80 Route 2 0 0

-> 10.0.0.204:80 Route 1 0 0

#Test: weighted scheduling works

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web01 www.mooreyxia.org 10.0.0.204

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web01 www.mooreyxia.org 10.0.0.204

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28
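To check the weighting without reading the responses by eye, the replies can be tallied per back end. This is a minimal sketch that assumes each response line starts with the back-end name, as in the output above; the function name `tally_backends` is hypothetical.

```shell
#!/bin/bash
# Count how many response lines came from each back end (first field of each line).
# tally_backends is a hypothetical helper name used only for this illustration.
tally_backends() {
    awk '{ count[$1]++ } END { for (b in count) print b, count[b] }' | sort
}

# The seven responses captured in this article
tally_backends <<'EOF'
web02 www.mooreyxia.org 10.0.0.28
web01 www.mooreyxia.org 10.0.0.204
web02 www.mooreyxia.org 10.0.0.28
web02 www.mooreyxia.org 10.0.0.28
web01 www.mooreyxia.org 10.0.0.204
web02 www.mooreyxia.org 10.0.0.28
web02 www.mooreyxia.org 10.0.0.28
EOF
```

For the captured sample this reports web01 with 2 responses and web02 with 5, consistent with the 1:2 weights over a run that is not a multiple of three requests. Against a live cluster the input could come from a loop such as `for i in $(seq 30); do curl -s www.mooreyxia.org; done | tally_backends`.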

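#Test the health checks

#After a back-end service is stopped, requests are scheduled to the other server automatically

[root@web01 ~]#systemctl stop nginx

#204 is removed from the scheduling table automatically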

[root@ka1 conf.d]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.200:80 wrr

-> 10.0.0.28:80 Route 2 0 0

#Requests are scheduled to 28

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

#Bring the service on 204 back

[root@web01 ~]#systemctl start nginx

#204 is added back to the scheduling table automatically

[root@ka1 conf.d]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.200:80 wrr

-> 10.0.0.28:80 Route 2 0 4

-> 10.0.0.204:80 Route 1 0 0

#Requests follow the weighted schedule again

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web01 www.mooreyxia.org 10.0.0.204

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl www.mooreyxia.org

web01 www.mooreyxia.org 10.0.0.204

#When every back end has failed, keepalived adds the sorry_server (127.0.0.1 on the keepalived host here) to the rules

[root@web01 ~]#systemctl stop nginx

[root@web02 ~]#systemctl stop nginx

[root@ka1 conf.d]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.0.200:80 wrr

-> 127.0.0.1:80 Route 1 0 1

#The local service can serve an outage page or forward requests, for example a local nginx serving a static maintenance page

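Single-master LVS-DR mode with FWM, binding several services into one cluster service

- Bind HTTP (80) and HTTPS (443) into one cluster service

#On top of the single-master LVS-DR setup, configure HTTPS certificates on the back ends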

[root@web01 conf.d]#vim certificate.sh

[root@web01 conf.d]#cat certificate.sh

#!/bin/bash

SITE_NAME=www.mooreyxia.org

CA_SUBJECT="/O=mooreyxia/CN=ca.mooreyxia.org"

SUBJECT="/C=CN/ST=huanggang/L=hubei/O=mooreyxia/CN=$SITE_NAME"

SERIAL=34

EXPIRE=202002

FILE=$SITE_NAME

openssl req -x509 -newkey rsa:2048 -subj "$CA_SUBJECT" -keyout ca.key -nodes -days $EXPIRE -out ca.crt

openssl req -newkey rsa:2048 -nodes -keyout ${FILE}.key -subj "$SUBJECT" -out ${FILE}.csr

openssl x509 -req -in ${FILE}.csr -CA ca.crt -CAkey ca.key -set_serial $SERIAL -days $EXPIRE -out ${FILE}.crt

chmod 600 ${FILE}.key ca.key

[root@web01 conf.d]#bash certificate.sh

.+....+......+..+.+..+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*........+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*.....+.+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

.........+......+.........+..+....+.....+...+.......+...+......+..+...+......+....+.........+.....+......+....+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*.+...+.......+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*........+...+..+.........+....+..+.............+..+...+.+.........+...+...+.....+.+...+...........+.+.....+...+..........+...+.........+.......................+...+............+..........+...+...+.....+....+..+...+......+......+......+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

-----

..+...+......+.......+.....+.........+......+...+....+..+..........+...+..+...+...+.......+.....+.+...........+.+..+.+......+.....+.+..+.......+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*.........+..+...+...+....+...+.....+.+...............+..+...+......+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*..+.....+.........+......+......+...+..........+.....+....+...+..+...+............+..........+...+...+......+.....+........................+............+....+...+...........+......+.+..............+.........+.......+........+......+...+.+......+.....+.+.....+...+.+......+.........+..............+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

...+.+.....+...+.+......+...+...+..+......+......+.+............+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*..+...+..........+..+.+..................+..+.......+.................+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++*..+...........+......+.........+....+..+.+.........+...+........+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

-----

Certificate request self-signature ok

subject=C = CN, ST = huanggang, L = hubei, O = mooreyxia, CN = www.mooreyxia.org

[root@web01 conf.d]#ls

ca.crt ca.key certificate.sh www.mooreyxia.org.crt www.mooreyxia.org.csr www.mooreyxia.org.key

# Merge www.mooreyxia.org.crt and ca.crt into a single PEM file

[root@web01 conf.d]#cat www.mooreyxia.org.crt ca.crt > www.mooreyxia.org.pem

[root@web01 conf.d]#ls

ca.crt ca.key certificate.sh www.mooreyxia.org.crt www.mooreyxia.org.csr www.mooreyxia.org.key www.mooreyxia.org.pem
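Before pointing nginx at the merged file, it can help to confirm that the server certificate really chains to the CA and that the PEM holds both certificates (server certificate first, then the CA). The following is a minimal sketch, not the author's procedure: it builds a throwaway CA and certificate in a temporary directory, and the names `www.example.test` and `ca.example.test` are placeholders invented for the demo.

```shell
#!/usr/bin/env bash
# Sketch: create a disposable CA + server certificate, bundle them, then verify.
# All names below are hypothetical; nothing touches /etc/nginx.
set -euo pipefail

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
site=www.example.test

# Self-signed CA
openssl req -x509 -newkey rsa:2048 -nodes -subj "/O=demo/CN=ca.example.test" \
  -keyout "$workdir/ca.key" -days 30 -out "$workdir/ca.crt" 2>/dev/null

# Server key + CSR, then sign the CSR with the CA
openssl req -newkey rsa:2048 -nodes -subj "/O=demo/CN=$site" \
  -keyout "$workdir/$site.key" -out "$workdir/$site.csr" 2>/dev/null
openssl x509 -req -in "$workdir/$site.csr" -CA "$workdir/ca.crt" -CAkey "$workdir/ca.key" \
  -set_serial 1 -days 30 -out "$workdir/$site.crt" 2>/dev/null

# Bundle: server certificate first, CA certificate second
cat "$workdir/$site.crt" "$workdir/ca.crt" > "$workdir/$site.pem"

# Check 1: the server certificate verifies against the CA
verify_result=$(openssl verify -CAfile "$workdir/ca.crt" "$workdir/$site.crt")
# Check 2: the bundle contains two certificates
cert_count=$(grep -c 'BEGIN CERTIFICATE' "$workdir/$site.pem")

echo "$verify_result"
echo "certificates in bundle: $cert_count"
```

On the real host the same two checks would be `openssl verify -CAfile ca.crt www.mooreyxia.org.crt` and `grep -c 'BEGIN CERTIFICATE' www.mooreyxia.org.pem`.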

# Enable HTTPS in nginx

[root@web01 conf.d]#vim /etc/nginx/sites-enabled/default

[root@web01 conf.d]#cat /etc/nginx/sites-enabled/default

...

server {

listen 80 default_server;

listen [::]:80 default_server;

# SSL configuration

#

listen 443 ssl default_server; --> open port 443

# listen [::]:443 ssl default_server;

#

# Note: You should disable gzip for SSL traffic.

# See: https://bugs.debian.org/773332

#

# Read up on ssl_ciphers to ensure a secure configuration.

# See: https://bugs.debian.org/765782

#

# Self signed certs generated by the ssl-cert package

# Don't use them in a production server!

#

# include snippets/snakeoil.conf;

root /var/www/html;

# Add index.php to the list if you are using PHP

index index.html index.htm index.nginx-debian.html;

ssl_certificate /etc/nginx/conf.d/www.mooreyxia.org.pem; --> add the certificate

ssl_certificate_key /etc/nginx/conf.d/www.mooreyxia.org.key;

[root@web01 conf.d]#nginx -s reload

[root@web01 conf.d]#ss -nlt

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 511 0.0.0.0:80 0.0.0.0:*

LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 127.0.0.1:6010 0.0.0.0:*

LISTEN 0 511 0.0.0.0:443 0.0.0.0:*

LISTEN 0 128 127.0.0.1:6011 0.0.0.0:*

LISTEN 0 511 [::]:80 [::]:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 128 [::1]:6010 [::]:*

LISTEN 0 128 [::1]:6011 [::]:*

# Enable port 443 in nginx on web02 as well

[root@web02 conf.d]#cat /etc/nginx/nginx.conf

...

server {

listen 443 ssl http2 default_server;

# listen [::]:443 ssl http2 default_server;

server_name _;

root /usr/share/nginx/html;

#

ssl_certificate "/etc/nginx/conf.d/www.mooreyxia.org.pem";

ssl_certificate_key "/etc/nginx/conf.d/www.mooreyxia.org.key";

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 10m;

# ssl_ciphers PROFILE=SYSTEM;

# ssl_prefer_server_ciphers on;

#

# # Load configuration files for the default server block.

# include /etc/nginx/default.d/*.conf;

#

location / {

}

#

error_page 404 /404.html;

location = /40x.html {

}

#

# error_page 500 502 503 504 /50x.html;

# location = /50x.html {

# }

}

}

[root@web02 conf.d]#nginx -s reload

[root@web02 conf.d]#ss -nlt

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 100 127.0.0.1:25 0.0.0.0:*

LISTEN 0 128 0.0.0.0:443 0.0.0.0:*

LISTEN 0 128 [::]:80 [::]:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*
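Reading the `ss -nlt` listings by eye works for two hosts, but the same check can be scripted. The helper below is a small sketch (the function name `is_listening` is made up for this example): it reads `ss -nlt` output on stdin and succeeds if some socket is listening on the given port.

```shell
#!/usr/bin/env bash
# is_listening PORT
# Reads `ss -nlt` output from stdin; exit status 0 if any LISTEN line has
# PORT as the port part of its local address (column 4), 1 otherwise.
is_listening() {
  awk -v port="$1" '
    $1 == "LISTEN" {
      n = split($4, parts, ":")      # handles 0.0.0.0:443 and [::]:443 alike
      if (parts[n] == port) found = 1
    }
    END { exit !found }
  '
}

# Usage on a web server:
#   ss -nlt | is_listening 443 && echo "443 is open" || echo "443 is NOT open"
```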

# Test

[root@rocky8 ~]#curl -k https://10.0.0.204

web01 www.mooreyxia.org 10.0.0.204

[root@rocky8 ~]#curl -k https://10.0.0.28

web02 www.mooreyxia.org 10.0.0.28

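Merging the HTTP and HTTPS clusters

# Run the following on both nodes: use iptables to tag traffic to ports 80 and 443 with the same firewall mark, so that both ports are handled as one group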

[root@ka1 conf.d]#iptables -t mangle -A PREROUTING -d 10.0.0.200 -p tcp -m multiport --dports 80,443 -j MARK --set-mark 6

[root@ka2 conf.d]#iptables -t mangle -A PREROUTING -d 10.0.0.200 -p tcp -m multiport --dports 80,443 -j MARK --set-mark 6
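Note that `-A` appends a new rule every time it runs, so repeating the command leaves duplicate MARK rules in the mangle table. One way to avoid that is to test for the rule with `-C` first. The wrapper below is only a sketch: the function name `ensure_mark_rule` and the overridable `IPTABLES` variable are inventions for this example, the latter so the logic can be exercised without root.

```shell
#!/usr/bin/env bash
# Idempotent variant of the marking rule: append only if -C reports it missing.
IPTABLES=${IPTABLES:-iptables}   # hypothetical override hook for dry runs

# ensure_mark_rule VIP MARK
ensure_mark_rule() {
  local vip=$1 mark=$2
  local rule=(PREROUTING -d "$vip" -p tcp -m multiport --dports 80,443
              -j MARK --set-mark "$mark")
  if "$IPTABLES" -t mangle -C "${rule[@]}" 2>/dev/null; then
    echo "rule already present"
  else
    "$IPTABLES" -t mangle -A "${rule[@]}" && echo "rule added"
  fi
}

# Usage (as root, on both ka1 and ka2):
#   ensure_mark_rule 10.0.0.200 6
#   iptables -t mangle -nvL PREROUTING    # inspect the result
```

Rules added this way still live only in the running kernel; how they are persisted across reboots depends on the distribution and is outside this sketch.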

# Add a virtual server based on the firewall mark to keepalived

#ka1

[root@ka1 conf.d]#vim mooreyxia.org.conf

[root@ka1 conf.d]#cat mooreyxia.org.conf

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 88

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 label eth0:1

}

unicast_src_ip 192.168.10.202

unicast_peer {

192.168.10.203

}

}

virtual_server fwmark 6 { # match firewall mark (FWM) 6

delay_loop 3

lb_algo wrr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80 # note: the port must be specified

real_server 10.0.0.204 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 10.0.0.28 80 {

weight 2

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

[root@ka1 conf.d]#systemctl restart keepalived

[root@ka1 conf.d]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

FWM 6 wrr

-> 10.0.0.28:0 Route 2 0 0

-> 10.0.0.204:0 Route 1 0 0

#ka2

[root@ka2 conf.d]#vim mooreyxia.org.conf

[root@ka2 conf.d]#cat mooreyxia.org.conf

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 88

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.200/24 dev eth0 label eth0:1

}

unicast_src_ip 192.168.10.203

unicast_peer {

192.168.10.202

}

}

virtual_server fwmark 6 {

delay_loop 3

lb_algo wrr

lb_kind DR

protocol TCP

sorry_server 127.0.0.1 80

real_server 10.0.0.204 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 10.0.0.28 80 {

weight 2

TCP_CHECK {

connect_timeout 5

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

[root@ka2 conf.d]#systemctl restart keepalived

[root@ka2 conf.d]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

FWM 6 wrr

-> 10.0.0.28:0 Route 2 0 0

-> 10.0.0.204:0 Route 1 0 0

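Test: HTTP and HTTPS requests are now scheduled as a single cluster service. Because ports 80 and 443 share firewall mark 6, both protocols go through one wrr rotation, so the HTTP and HTTPS requests of a pair usually land on different real servers; web02 has weight 2, so it sometimes serves both.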

[root@rocky8 ~]#curl http://www.mooreyxia.org -k https://www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

web01 www.mooreyxia.org 10.0.0.204

[root@rocky8 ~]#curl http://www.mooreyxia.org -k https://www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28 --> caused by the weight setting (web02 has weight 2)

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl http://www.mooreyxia.org -k https://www.mooreyxia.org

web01 www.mooreyxia.org 10.0.0.204

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl http://www.mooreyxia.org -k https://www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28

web01 www.mooreyxia.org 10.0.0.204

[root@rocky8 ~]#curl http://www.mooreyxia.org -k https://www.mooreyxia.org

web02 www.mooreyxia.org 10.0.0.28 --> caused by the weight setting (web02 has weight 2)

web02 www.mooreyxia.org 10.0.0.28

[root@rocky8 ~]#curl http://www.mooreyxia.org -k https://www.mooreyxia.org

web01 www.mooreyxia.org 10.0.0.204

web02 www.mooreyxia.org 10.0.0.28
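The pattern in these results (web02 twice, web01 once, repeating) is what weighted round-robin with weights 2 and 1 produces. The simulation below is a sketch of the classic weighted round-robin procedure as described in the LVS documentation, written in bash for illustration; it is not the kernel's code, and the server order simply mirrors the `ipvsadm -Ln` listing.

```shell
#!/usr/bin/env bash
# Classic weighted round-robin (illustrative sketch).
servers=(web02 web01)   # order as listed by ipvsadm -Ln
weights=(2 1)

gcd() {
  local a=$1 b=$2 t
  while (( b )); do t=$(( a % b )); a=$b; b=$t; done
  echo "$a"
}

# wrr_sequence N: print the first N scheduling decisions, one per line
wrr_sequence() {
  local n=$1 i=-1 cw=0 max=0 g=0 w picked=0
  for w in "${weights[@]}"; do
    (( w > max )) && max=$w
    g=$(gcd "$w" "$g")
  done
  while (( picked < n )); do
    i=$(( (i + 1) % ${#servers[@]} ))
    if (( i == 0 )); then
      # Start of a pass: lower the current weight threshold, wrapping to max
      cw=$(( cw - g ))
      (( cw <= 0 )) && cw=$max
    fi
    # A server is eligible in this pass only if its weight reaches the threshold
    if (( weights[i] >= cw )); then
      echo "${servers[i]}"
      picked=$(( picked + 1 ))
    fi
  done
}

wrr_sequence 6 | tr '\n' ' '; echo
# prints: web02 web02 web01 web02 web02 web01
```

Since each `curl` invocation above sends two requests, a period-3 sequence cut into pairs gives exactly the mix seen in the test: two pairs split across both servers, then one pair served entirely by web02.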

VRRP Script

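- keepalived can use VRRP Script to call external helper scripts that monitor a resource and adjust the VRRP priority dynamically based on the result, which makes it possible to provide high availability for other applications

Example: high availability for an Nginx reverse proxy in single-master mode

# Configure the nginx reverse proxy on both nodes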

[root@ka1-centos8 ~]#vim /etc/nginx/nginx.conf

http {

upstream websrvs {

server 10.0.0.7:80 weight=1;

server 10.0.0.17:80 weight=1;

}

server {

listen 80;

location /{

proxy_pass http://websrvs/;

}

}

}

# On both nodes, configure keepalived to make the nginx reverse proxy highly available

[root@ka1-centos8 ~]#cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from kaadmin@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id ka1.mooreyxia.org # ka2.mooreyxia.org on the other node

vrrp_mcast_group4 224.0.100.100

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

#script "/usr/bin/killall -0 nginx" this form is supported

#script "/usr/bin/killall -0 nginx &>/dev/null" the &> redirection is not supported in this form

interval 1

weight -30

fall 3

rise 5

timeout 2

}

vrrp_instance VI_1 {

state MASTER # BACKUP on the other node

interface eth0

virtual_router_id 66

priority 100 # 80 on the other node

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

10.0.0.10/24 dev eth0 label eth0:1

}

track_interface {

eth0

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

track_script {

check_nginx

}

}
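The failover in this configuration comes down to simple arithmetic on the priority. With a negative `weight`, keepalived lowers the instance priority by that amount while the tracked script is failing; with a positive `weight`, it raises the priority while the script succeeds. The function below is a sketch of that rule using the numbers from this example (the function name is invented here); it does not model `fall` and `rise`, which only decide when the script state flips (here after 3 consecutive failures or 5 consecutive successes at a 1-second interval).

```shell
#!/usr/bin/env bash
# effective_priority BASE WEIGHT SCRIPT_OK
#   SCRIPT_OK is 1 when the tracked script is passing, 0 when it is failing.
effective_priority() {
  local base=$1 weight=$2 ok=$3
  if (( weight < 0 && ok == 0 )); then
    echo $(( base + weight ))      # negative weight: penalty while failing
  elif (( weight > 0 && ok == 1 )); then
    echo $(( base + weight ))      # positive weight: bonus while passing
  else
    echo "$base"
  fi
}

echo "ka1, nginx healthy: $(effective_priority 100 -30 1)"   # 100
echo "ka1, nginx failed:  $(effective_priority 100 -30 0)"   # 70
echo "ka2, nginx healthy: $(effective_priority 80 -30 1)"    # 80
```

Once the check fails on ka1, its priority of 70 falls below ka2's 80, so ka2 takes over the VIP. The penalty has to be larger than the gap between the two base priorities (here 20) for this to happen.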

[root@ka1-centos8 ~]# yum install psmisc -y

[root@ka1-centos8 ~]# cat /etc/keepalived/check_nginx.sh

#!/bin/bash

/usr/bin/killall -0 nginx || systemctl restart nginx --> killall -0 sends signal 0, which only checks whether an nginx process exists (zombie processes included)

[root@ka1-centos8 ~]# chmod a+x /etc/keepalived/check_nginx.sh
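The check script relies on signal 0, which delivers nothing to the process and only reports through the exit status whether the target exists. The self-contained demo below shows that behaviour with a background `sleep` standing in for nginx and `kill -0` on a PID instead of `killall -0` on a name; it is an illustration, not part of the keepalived setup.

```shell
#!/usr/bin/env bash
# Signal 0 as an existence test: exit status 0 if the process exists, non-zero otherwise.
sleep 30 &
pid=$!

kill -0 "$pid" 2>/dev/null
alive_before=$?          # 0: the process exists

kill "$pid"              # terminate it
wait "$pid" 2>/dev/null  # reap it so it no longer exists at all

kill -0 "$pid" 2>/dev/null
alive_after=$?           # non-zero: no such process

echo "before=$alive_before after=$alive_after"
```

To try the real check by hand, run `bash /etc/keepalived/check_nginx.sh; echo $?` with nginx running and again after stopping it; because the script restarts nginx on failure, it returns non-zero only when that restart also fails.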

I'm moore. Let's all keep at it together!!!